Installing Kubernetes from Binaries (v1.20.16)

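Contents

1. Cluster planning

2. Software versions

3. Download URLs

4. Initialize the virtual machines

4.1 Install the virtual machines

4.2 Upgrade the kernel

4.3 Load kernel modules

4.4 System settings

4.5 Configure hosts

4.6 Configure IPv4 forwarding

4.7 Time synchronization

4.8 Install dependency packages

5. Passwordless SSH login

6. Create the working directories

7. Download the software

8. Prepare the cfssl tools

9. Generate the etcd certificates

9.1 Create the self-signed CA request files

9.2 Generate the self-signed CA certificate

9.3 Create the etcd certificate request file

9.4 Issue the etcd HTTPS certificate

10. Deploy the etcd cluster

10.1 Generate the configuration files

10.2 Generate the etcd service unit file

10.3 Distribute the files

10.4 Verify the files

10.5 Start the etcd cluster

10.6 Check the etcd cluster status

11. Install docker-ce

11.1 Create the Docker service unit file

11.2 Distribute the files

11.3 Verify the files

11.4 Start Docker

12. Deploy the Master

12.1 Self-signed CA certificate

12.1.1 Generate the CA certificate configuration

12.1.2 Generate the CA certificate

12.2 Deploy the Apiserver

12.2.1 Create the certificate request file

12.2.2 Issue the apiserver certificate

12.2.3 Create the configuration file

12.2.4 Enable the TLS Bootstrapping mechanism

12.2.5 Create the service unit file

12.2.7 Distribute the files

12.2.8 Verify the files

12.2.9 Start kube-apiserver

12.3 Deploy the ControllerManager

12.3.1 Create the configuration file

12.3.2 Generate the certificate configuration file

12.3.3 Generate the certificate files

12.3.4 Generate the kubeconfig file

12.3.5 Generate the service unit file

12.3.6 Distribute the files

12.3.8 Start the ControllerManager

12.4 Deploy the Scheduler

12.4.1 Generate the configuration file

12.4.2 Generate the certificate configuration file

12.4.3 Generate the certificate files

12.4.4 Generate the kubeconfig file

12.4.5 Generate the service unit file

12.4.6 Distribute the files

12.4.7 Verify the files

12.4.8 Start the scheduler

13. Check the cluster component status

13.1 Generate the certificate configuration for connecting to the cluster

13.2 Generate the connection certificate

13.3 Generate the kubeconfig file

13.4 Distribute the files

13.5 View the cluster component status

14. Authorize the user to request certificates

15. Deploy the WorkNode nodes

15.1 Create the working directories

15.2 Distribute the files

15.3 Verify the files

15.4 Deploy kubelet

15.4.1 Create the configuration file

15.4.2 Configure the parameter file

15.4.3 Create the service unit file

15.4.4 Create the kubeconfig file

15.4.5 Distribute the files

15.4.7 Start kubelet

15.4.8 Approve the kubelet certificate request

15.5 Deploy kube-proxy

15.5.1 Create the configuration file

15.5.2 Create the parameter file

15.5.3 Generate the certificate configuration file

15.5.4 Generate the certificate files

15.5.5 Generate the kubeconfig file

15.5.6 Generate the service unit file

15.5.7 Distribute the files

15.5.8 Verify the files

15.5.9 Start kube-proxy

16. Add the other WorkNodes

16.2 Add vm02

16.2.1 Distribute the files

16.2.2 Verify the files

16.2.3 Start kubelet

16.2.3 Approve the new Node certificate request

16.2.4 Start kube-proxy

16.3 Add vm03

16.3.1 Distribute the files

16.3.2 Verify the files

16.3.3 Start kubelet

16.3.4 Approve the new Node certificate request

16.3.5 Start kube-proxy

17. Deploy the calico network component

17.1 calico network architecture

17.2 Deploy calico

17.3 Check the network component status

18. Deploy the coredns component

18.1 Create the yaml file

18.2 Deploy the coredns component

19. Deploy the dashboard

19.1 Create the yaml file

19.2 Create the dashboard component

19.3 Check the component status

19.4 Change the svc type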

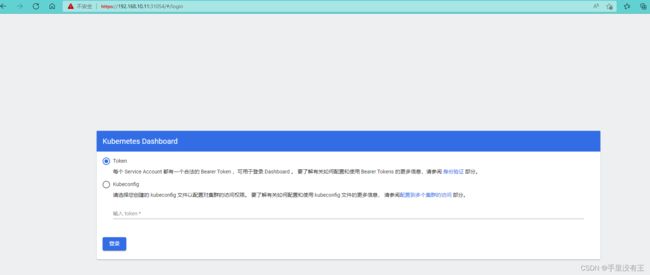

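19.5 Access the web page

19.6 Generate a token

19.7 Log in to the web UI

20. Deploy MetricsServer

20.1 Create the yaml file

20.2 Deploy the MetricsServer component

20.3 Check the component status

20.4 View resource usage

21. Install kuboard

21.1 Deploy kuboard

21.2 Check the component status

21.3 Access Kuboard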

1. Cluster planning

| No. | IP | Role | Hostname | Installed components |
|---|---|---|---|---|
| 1 | 192.168.10.11 | Master, Node | vm01 | Apiserver, ControllerManager, Scheduler, Kubelet, Proxy, Etcd |
| 2 | 192.168.10.12 | Node | vm02 | Kubelet, Proxy, Etcd |
| 3 | 192.168.10.13 | Node | vm03 | Kubelet, Proxy, Etcd |

2. Software versions

| No. | Software | Version |
|---|---|---|
| 1 | CentOS | 7.9.2009, kernel upgraded to 5.17.1-1.el7.elrepo.x86_64 |
| 2 | Docker-ce | v20.10.9 |
| 3 | Etcd | v3.4.9 |
| 4 | Kubernetes | v1.20.14 |
| 5 | cfssl | v1.6.1 |

3. Download URLs

| No. | Software | Download URL |
|---|---|---|
| 1 | cfssl | https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64 |
| 2 | cfssljson | https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64 |
| 3 | cfssl-certinfo | https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64 |
| 4 | etcd | https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz |
| 5 | kubernetes | https://dl.k8s.io/v1.20.14/kubernetes-server-linux-amd64.tar.gz |
| 6 | docker-ce | https://download.docker.com/linux/static/stable/x86_64/docker-20.10.9.tgz |

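4. Initialize the virtual machines

4.1 Install the virtual machines

Perform the following steps on all virtual machines.

4.2 Upgrade the kernel

# Run on all virtual machines

# Update the installed packages from the yum repositories

yum update -y

# Import the public key of the ELRepo repository

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# Install the yum repository definition for ELRepo

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# List the available kernel packages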

[root@vm01 ~]# yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* elrepo-kernel: ftp.yz.yamagata-u.ac.jp

Available Packages

elrepo-release.noarch 7.0-5.el7.elrepo elrepo-kernel

kernel-lt.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-devel.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-doc.noarch 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-headers.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-tools.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs-devel.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-ml-devel.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-doc.noarch 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-headers.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-tools.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs-devel.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

perf.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

python-perf.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

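# Install the latest mainline kernel

yum --enablerepo=elrepo-kernel install -y kernel-ml

# List all kernels available on the system

sudo awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

# Set the default kernel; 0 is the index of the new kernel in the list printed above

grub2-set-default 0

# Regenerate the grub configuration file

grub2-mkconfig -o /boot/grub2/grub.cfg

# Reboot

reboot

# Remove the old kernels (optional)

# List all installed kernel packages

rpm -qa | grep kernel

# Remove the RPM packages of the old kernels; the exact package names depend on the output of the previous command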

yum remove kernel-3.10.0-514.el7.x86_64 \

kernel-tools-libs-3.10.0-862.11.6.el7.x86_64 \

kernel-tools-3.10.0-862.11.6.el7.x86_64 \

kernel-3.10.0-862.11.6.el7.x86_64
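After the reboot it is worth confirming that each node really booted the new kernel. The following is a minimal sketch and not part of the original procedure: the helper name `version_ge` and the 4.19 threshold are assumptions chosen for illustration, and the comparison relies on GNU `sort -V`.

```shell
#!/usr/bin/env bash
# version_ge A B: succeed when version A >= version B (GNU sort -V ordering).
# Hypothetical helper; not part of the original procedure.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Report whether the running kernel is at least 4.19 (assumed threshold).
if version_ge "$(uname -r)" "4.19"; then
    echo "kernel $(uname -r) is new enough"
else
    echo "kernel $(uname -r) is older than 4.19; check the grub default entry"
fi
```

If the old kernel is still reported, the grub default index set with `grub2-set-default` is the first thing to re-check.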

4.3 Load kernel modules

# Run on all virtual machines
# Note: nf_conntrack_ipv4 was merged into nf_conntrack in kernel 4.19, so the "not found" error below is expected on the 5.17 kernel; nf_conntrack is loaded instead, as the lsmod output shows.
[root@vm01 ~]# modprobe -- ip_vs
[root@vm01 ~]# modprobe -- ip_vs_rr
[root@vm01 ~]# modprobe -- ip_vs_wrr
[root@vm01 ~]# modprobe -- ip_vs_sh
[root@vm01 ~]# modprobe -- nf_conntrack_ipv4
modprobe: FATAL: Module nf_conntrack_ipv4 not found.
[root@vm01 ~]# lsmod | grep ip_vs
ip_vs_sh 16384 0
ip_vs_wrr 16384 0
ip_vs_rr 16384 0
ip_vs 159744 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 159744 1 ip_vs
nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs
libcrc32c 16384 3 nf_conntrack,xfs,ip_vs
[root@vm01 ~]# lsmod | grep nf_conntrack_ipv4
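Modules loaded with `modprobe` are gone after the next reboot. One way to make them persistent is a file under `/etc/modules-load.d/`, which systemd reads at boot. The sketch below is an illustration only: the helper name `render_ipvs_modules` and the file name `ipvs.conf` are assumptions, not part of the original steps.

```shell
#!/usr/bin/env bash
# render_ipvs_modules KERNEL_VERSION: print one module name per line.
# nf_conntrack_ipv4 was merged into nf_conntrack in kernel 4.19, so the
# conntrack module name depends on the kernel version.
render_ipvs_modules() {
    local kver="$1" oldest
    printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh
    oldest="$(printf '%s\n%s\n' "$kver" "4.19" | sort -V | head -n 1)"
    if [ "$oldest" = "4.19" ]; then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
}

# Usage (as root on each node); the file name ipvs.conf is an assumption:
#   render_ipvs_modules "$(uname -r)" > /etc/modules-load.d/ipvs.conf
#   systemctl restart systemd-modules-load
render_ipvs_modules "5.17.1-1.el7.elrepo.x86_64"
```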

4.4 System settings

# Stop and disable the firewall
systemctl stop firewalld && systemctl disable firewalld
# Disable SELinux so that containers can read the host file system without problems
sed -i 's/enforcing/disabled/' /etc/selinux/config
setenforce 0
# Turn off swap
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
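The `sed` command that comments out swap in `/etc/fstab` adds another `#` every time it is rerun. A possible idempotent variant is sketched below, assuming GNU `sed`; the function name `comment_out_swap` is hypothetical, and the demonstration works on a scratch file rather than the real `/etc/fstab`.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE: comment out active swap entries in an fstab-style file.
# Lines that are already commented are skipped, so reruns change nothing.
comment_out_swap() {
    sed -ri '/^[[:space:]]*#/!s/^.*[[:space:]]swap[[:space:]].*$/#&/' "$1"
}

# Demonstration on a scratch copy rather than the real /etc/fstab.
demo="$(mktemp)"
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"
comment_out_swap "$demo"
comment_out_swap "$demo"   # second run leaves the file unchanged
cat "$demo"
```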

4.5 Configure hosts

cat >> /etc/hosts << EOF
192.168.10.11 vm01
192.168.10.12 vm02
192.168.10.13 vm03
EOF
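Because `cat >>` appends unconditionally, rerunning the step duplicates the entries. The sketch below shows one way to add each entry only when the hostname is not yet mapped. The helper name `add_host_entry` is an assumption, and the demonstration writes to a scratch file; on a real node the file would be `/etc/hosts`.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME: append "IP NAME" unless NAME is already mapped.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demonstration on a scratch file; point it at /etc/hosts on a real node.
hosts_file="$(mktemp)"
for run in 1 2; do          # running twice still yields exactly three lines
    add_host_entry "$hosts_file" 192.168.10.11 vm01
    add_host_entry "$hosts_file" 192.168.10.12 vm02
    add_host_entry "$hosts_file" 192.168.10.13 vm03
done
cat "$hosts_file"
```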

4.6 Configure IPv4 forwarding

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system
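The file above only sets the bridge netfilter keys. These keys exist only while the `br_netfilter` module is loaded, and IPv4 forwarding itself is controlled by `net.ipv4.ip_forward`. The sketch below is a possible extension under those assumptions. The `net.ipv4.ip_forward` line and the helper name `render_k8s_sysctl` are additions that do not appear in the original file.

```shell
#!/usr/bin/env bash
# render_k8s_sysctl: print sysctl settings for a Kubernetes node.
# net.ipv4.ip_forward is an addition to the original file (assumption: it is
# not already enabled elsewhere, for example by Docker at start-up).
render_k8s_sysctl() {
    cat << 'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Usage (as root on each node):
#   modprobe br_netfilter      # net.bridge.* keys exist only once this is loaded
#   render_k8s_sysctl > /etc/sysctl.d/k8s.conf
#   sysctl --system
render_k8s_sysctl
```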

4.7 Time synchronization

yum -y install chrony
vi /etc/chrony.conf
# Add the following server lines to /etc/chrony.conf
server ntp.aliyun.com iburst
server ntp1.aliyun.com iburst
server ntp2.aliyun.com iburst
server ntp3.aliyun.com iburst
systemctl restart chronyd
[root@vm01 ~]# chronyc -a makestep
200 OK
[root@vm01 ~]# chronyc sourcestats
210 Number of sources = 2
Name/IP Address            NP  NR  Span  Frequency  Freq Skew  Offset  Std Dev
==============================================================================
203.107.6.88               26  13   35m     -0.792      1.898    -24us  1486us
120.25.115.20              23  16   36m     +0.055      0.709   -251us   545us
[root@vm01 ~]# chronyc sources -v
210 Number of sources = 2

  .-- Source mode  '^' = server, '=' = peer, '#' = local clock.
 / .- Source state '*' = current synced, '+' = combined , '-' = not combined,
| /   '?' = unreachable, 'x' = time may be in error, '~' = time too variable.
||                                                 .- xxxx [ yyyy ] +/- zzzz
||      Reachability register (octal) -.           |  xxxx = adjusted offset,
||      Log2(Polling interval) --.      |          |  yyyy = measured offset,
||                                \     |          |  zzzz = estimated error.
||                                 |    |           \
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^* 203.107.6.88                  2   8   377   150  +1569us[+1671us] +/-   22ms
^+ 120.25.115.20                 2   8   377    86   -772us[ -772us] +/-   25ms

4.8 Install dependency packages

yum install -y ipvsadm ipset sysstat conntrack libseccomp wget git

5. Passwordless SSH login

# Run this step on the master host
[root@vm01 ~]# ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Created directory '/root/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:nmsw1JMs6U2M+TE0fh+ZmQrI2doLV5kbpO7X0gutmE4 root@vm01
The key's randomart image is:
+---[RSA 2048]----+
| |
| o . |
| . & = o = |
| X # * * |
| o OSB = . |
| *.*.o.. |
| *E..o. |
| .++ooo |
| o=..... |
+----[SHA256]-----+
# Copy the public key to the other nodes; entering the remote password once enables passwordless access from the master to that node
[root@vm01 ~]# ssh-copy-id vm02
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host 'vm02 (192.168.10.12)' can't be established.
ECDSA key fingerprint is SHA256:pFfmADyl1dFq2Uadp/YwSEe+yW29sxkfzoQD/y6jvts.
ECDSA key fingerprint is MD5:27:53:f0:aa:b8:6c:2c:2e:b7:e5:ef:c7:fb:32:10:6f.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@vm02's password:
Number of key(s) added: 1
Now try logging into the machine, with: "ssh 'vm02'"
and check to make sure that only the key(s) you wanted were added.
[root@vm01 ~]# ssh-copy-id vm03
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host 'vm03 (192.168.10.13)' can't be established.
ECDSA key fingerprint is SHA256:pFfmADyl1dFq2Uadp/YwSEe+yW29sxkfzoQD/y6jvts.
ECDSA key fingerprint is MD5:27:53:f0:aa:b8:6c:2c:2e:b7:e5:ef:c7:fb:32:10:6f.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@vm03's password:
Number of key(s) added: 1
Now try logging into the machine, with: "ssh 'vm03'"
and check to make sure that only the key(s) you wanted were added.

6. Create the working directories

# Run on all virtual machines

mkdir -p /opt/TLS/{download,etcd,k8s}

mkdir -p /opt/TLS/etcd/{cfg,bin,ssl}

mkdir -p /opt/TLS/k8s/{cfg,bin,ssl}

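7. Download the software

# The following steps are performed on the master only

# Change to the download directory

cd /opt/TLS/download

# Download cfssl and make it executable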

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64

chmod +x cfssl*

[root@vm03 download]# ll

total 40232

-rwxr-xr-x 1 root root 16659824 Dec 7 15:36 cfssl_1.6.1_linux_amd64

-rwxr-xr-x 1 root root 13502544 Dec 7 15:35 cfssl-certinfo_1.6.1_linux_amd64

-rwxr-xr-x 1 root root 11029744 Dec 7 15:35 cfssljson_1.6.1_linux_amd64

# Download and extract etcd

wget https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

tar -xvf etcd-v3.4.9-linux-amd64.tar.gz

chmod +x etcd-v3.4.9-linux-amd64/etcd*

[root@vm03 download]# ll etcd-v3.4.9-linux-amd64/

total 40540

drwxr-xr-x 14 630384594 600260513 4096 May 22 2020 Documentation

-rwxr-xr-x 1 630384594 600260513 23827424 May 22 2020 etcd

-rwxr-xr-x 1 630384594 600260513 17612384 May 22 2020 etcdctl

-rw-r--r-- 1 630384594 600260513 43094 May 22 2020 README-etcdctl.md

-rw-r--r-- 1 630384594 600260513 8431 May 22 2020 README.md

-rw-r--r-- 1 630384594 600260513 7855 May 22 2020 READMEv2-etcdctl.md

# Download and extract kubernetes

wget https://dl.k8s.io/v1.20.14/kubernetes-server-linux-amd64.tar.gz

tar zxvf kubernetes-server-linux-amd64.tar.gz

chmod +x kubernetes/server/bin/{kubectl,kubelet,kube-apiserver,kube-controller-manager,kube-scheduler,kube-proxy}

[root@vm03 download]# ll kubernetes/server/bin/

total 1134068

-rwxr-xr-x 1 root root 57724928 Feb 16 20:49 apiextensions-apiserver

-rwxr-xr-x 1 root root 45211648 Feb 16 20:49 kubeadm

-rwxr-xr-x 1 root root 51773440 Feb 16 20:49 kube-aggregator

-rwxr-xr-x 1 root root 131301376 Feb 16 20:49 kube-apiserver

-rw-r--r-- 1 root root 8 Feb 16 20:48 kube-apiserver.docker_tag

-rw------- 1 root root 136526848 Feb 16 20:48 kube-apiserver.tar

-rwxr-xr-x 1 root root 121110528 Feb 16 20:49 kube-controller-manager

-rw-r--r-- 1 root root 8 Feb 16 20:48 kube-controller-manager.docker_tag

-rw------- 1 root root 126336000 Feb 16 20:48 kube-controller-manager.tar

-rwxr-xr-x 1 root root 46592000 Feb 16 20:49 kubectl

-rwxr-xr-x 1 root root 54333584 Feb 16 20:49 kubectl-convert

-rwxr-xr-x 1 root root 124521440 Feb 16 20:49 kubelet

-rwxr-xr-x 1 root root 1507328 Feb 16 20:49 kube-log-runner

-rwxr-xr-x 1 root root 44163072 Feb 16 20:49 kube-proxy

-rw-r--r-- 1 root root 8 Feb 16 20:48 kube-proxy.docker_tag

-rw------- 1 root root 114255872 Feb 16 20:48 kube-proxy.tar

-rwxr-xr-x 1 root root 49618944 Feb 16 20:49 kube-scheduler

-rw-r--r-- 1 root root 8 Feb 16 20:48 kube-scheduler.docker_tag

-rw------- 1 root root 54844416 Feb 16 20:48 kube-scheduler.tar

-rwxr-xr-x 1 root root 1437696 Feb 16 20:49 mounter

# Download and extract docker-ce

wget https://download.docker.com/linux/static/stable/x86_64/docker-20.10.9.tgz

tar -xvf docker-20.10.9.tgz

chmod +x docker/*

[root@vm03 download]# ll docker

total 200840

-rwxr-xr-x 1 1000 1000 33908392 Oct 5 00:08 containerd

-rwxr-xr-x 1 1000 1000 6508544 Oct 5 00:08 containerd-shim

-rwxr-xr-x 1 1000 1000 8609792 Oct 5 00:08 containerd-shim-runc-v2

-rwxr-xr-x 1 1000 1000 21131264 Oct 5 00:08 ctr

-rwxr-xr-x 1 1000 1000 52883616 Oct 5 00:08 docker

-rwxr-xr-x 1 1000 1000 64758736 Oct 5 00:08 dockerd

-rwxr-xr-x 1 1000 1000 708616 Oct 5 00:08 docker-init

-rwxr-xr-x 1 1000 1000 2784145 Oct 5 00:08 docker-proxy

-rwxr-xr-x 1 1000 1000 14352296 Oct 5 00:08 runc

8. Prepare the cfssl tools

cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient to use than openssl.

# Run on the master only
cd /opt/TLS/download
cp cfssl_1.6.1_linux_amd64 /usr/local/bin/cfssl
cp cfssljson_1.6.1_linux_amd64 /usr/local/bin/cfssljson
cp cfssl-certinfo_1.6.1_linux_amd64 /usr/local/bin/cfssl-certinfo
[root@vm03 download]# ll /usr/local/bin/cfssl*
-rwxr-xr-x 1 root root 16659824 Apr 4 08:46 /usr/local/bin/cfssl
-rwxr-xr-x 1 root root 13502544 Apr 4 08:46 /usr/local/bin/cfssl-certinfo
-rwxr-xr-x 1 root root 11029744 Apr 4 08:46 /usr/local/bin/cfssljson

9. Generate the etcd certificates

9.1 Create the self-signed CA request files

cd /opt/TLS/etcd/ssl

cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json << EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

9.2 Generate the self-signed CA certificate

[root@vm03 ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
2022/04/04 08:51:25 [INFO] generating a new CA key and certificate from CSR
2022/04/04 08:51:25 [INFO] generate received request
2022/04/04 08:51:25 [INFO] received CSR
2022/04/04 08:51:25 [INFO] generating key: rsa-2048
2022/04/04 08:51:26 [INFO] encoded CSR
2022/04/04 08:51:26 [INFO] signed certificate with serial number 464748957865402020542542705181876295838207954582
[root@vm03 ssl]# ll
total 20
-rw-r--r-- 1 root root 287 Apr 4 08:51 ca-config.json
-rw-r--r-- 1 root root 956 Apr 4 08:51 ca.csr
-rw-r--r-- 1 root root 209 Apr 4 08:51 ca-csr.json
-rw------- 1 root root 1679 Apr 4 08:51 ca-key.pem
-rw-r--r-- 1 root root 1216 Apr 4 08:51 ca.pem
# The command above generates two files: ca.pem and ca-key.pem

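9.3 Create the etcd certificate request file

cat > server-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.10.11",
    "192.168.10.12",
    "192.168.10.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

# The IPs in the hosts field above are the cluster-internal communication IPs of all etcd nodes; none may be omitted. To simplify later expansion, a few spare IPs can be listed in advance.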
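Since every etcd member IP has to appear in the `hosts` field, generating the request file from a list of addresses avoids hand-editing mistakes when spare IPs are added. The following is only a sketch: the function name `render_etcd_csr` is hypothetical, and `192.168.10.14` is a made-up spare address used for illustration.

```shell
#!/usr/bin/env bash
# render_etcd_csr IP...: print an etcd server CSR with the given IPs as hosts.
render_etcd_csr() {
    local hosts="" ip
    for ip in "$@"; do
        hosts="${hosts:+$hosts, }\"$ip\""
    done
    cat << EOF
{
  "CN": "etcd",
  "hosts": [$hosts],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ]
}
EOF
}

# The three cluster members plus one spare address (192.168.10.14 is hypothetical).
render_etcd_csr 192.168.10.11 192.168.10.12 192.168.10.13 192.168.10.14
```

Redirecting the output to `server-csr.json` would produce a file equivalent in content to the handwritten one, with the extra address included.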

9.4 Issue the etcd HTTPS certificate

[root@vm03 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
2022/04/04 08:55:55 [INFO] generate received request
2022/04/04 08:55:55 [INFO] received CSR
2022/04/04 08:55:55 [INFO] generating key: rsa-2048
2022/04/04 08:55:55 [INFO] encoded CSR
2022/04/04 08:55:55 [INFO] signed certificate with serial number 177379691802225269854687255587397345225756828558
[root@vm03 ssl]# ll
total 36
-rw-r--r-- 1 root root 287 Apr 4 08:51 ca-config.json
-rw-r--r-- 1 root root 956 Apr 4 08:51 ca.csr
-rw-r--r-- 1 root root 209 Apr 4 08:51 ca-csr.json
-rw------- 1 root root 1679 Apr 4 08:51 ca-key.pem
-rw-r--r-- 1 root root 1216 Apr 4 08:51 ca.pem
-rw-r--r-- 1 root root 1013 Apr 4 08:55 server.csr
-rw-r--r-- 1 root root 290 Apr 4 08:55 server-csr.json
-rw------- 1 root root 1675 Apr 4 08:55 server-key.pem
-rw-r--r-- 1 root root 1338 Apr 4 08:55 server.pem
# The command above generates two files: server.pem and server-key.pem

10. Deploy the etcd cluster

10.1 Generate the configuration files

# For convenience, the configuration files for all three etcd nodes are generated here in one go and then distributed to their respective virtual machines, which avoids editing them by hand on each node.
cd /opt/TLS/etcd/cfg
#-------------------------------------
# Generate the configuration file for vm01
#-------------------------------------
cat > etcd01.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.10.11:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.10.11:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.11:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.11:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.10.11:2380,etcd-2=https://192.168.10.12:2380,etcd-3=https://192.168.10.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
#-------------------------------------
# Generate the configuration file for vm02
#-------------------------------------
cat > etcd02.conf << EOF
#[Member]
ETCD_NAME="etcd-2"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.10.12:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.10.12:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.12:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.12:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.10.11:2380,etcd-2=https://192.168.10.12:2380,etcd-3=https://192.168.10.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
#-------------------------------------
# Generate the configuration file for vm03
#-------------------------------------
cat > etcd03.conf << EOF
#[Member]
ETCD_NAME="etcd-3"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.10.13:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.10.13:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.13:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.13:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.10.11:2380,etcd-2=https://192.168.10.12:2380,etcd-3=https://192.168.10.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
# List the generated configuration files
[root@vm03 cfg]# ll
total 16
-rw-r--r-- 1 root root 509 Apr 4 09:05 etcd01.conf
-rw-r--r-- 1 root root 509 Apr 4 09:05 etcd02.conf
-rw-r--r-- 1 root root 509 Apr 4 09:05 etcd03.conf
#---------------------------Notes-------------------------------
# • ETCD_NAME: node name, unique within the cluster
# • ETCD_DATA_DIR: data directory
# • ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) traffic
# • ETCD_LISTEN_CLIENT_URLS: listen address for client traffic
# • ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the rest of the cluster
# • ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients
# • ETCD_INITIAL_CLUSTER: addresses of all cluster members
# • ETCD_INITIAL_CLUSTER_TOKEN: cluster token
# • ETCD_INITIAL_CLUSTER_STATE: state when joining; new creates a new cluster, existing joins an existing cluster
#-----------------------------------------------------------------
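The three configuration files differ only in the member name and the node IP, so they can also be rendered from a single template. The sketch below illustrates that idea. The function name `render_etcd_conf`, the variable `ETCD_IPS`, and the scratch output directory are assumptions, and the values mirror the files above.

```shell
#!/usr/bin/env bash
# Member IPs in order; position N (1-based) becomes member name etcd-N.
ETCD_IPS="192.168.10.11 192.168.10.12 192.168.10.13"

# render_etcd_conf INDEX: print the configuration for member number INDEX.
render_etcd_conf() {
    local idx="$1" ip="" cluster="" i=0 addr
    for addr in $ETCD_IPS; do
        i=$((i + 1))
        cluster="${cluster:+$cluster,}etcd-${i}=https://${addr}:2380"
        if [ "$i" -eq "$idx" ]; then ip="$addr"; fi
    done
    cat << EOF
#[Member]
ETCD_NAME="etcd-${idx}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Write etcd01.conf .. etcd03.conf (into a scratch directory in this sketch;
# on the master the target would be /opt/TLS/etcd/cfg).
out_dir="$(mktemp -d)"
for n in 1 2 3; do
    render_etcd_conf "$n" > "$out_dir/etcd0${n}.conf"
done
ls "$out_dir"
```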

10.2 Generate the etcd service unit file

cd /opt/TLS/etcd/cfg
cat > etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--logger=zap
Restart=on-failure
LimitNOFILE=65536
[Install]
WantedBy=multi-user.target
EOF
# List the generated files
[root@vm03 cfg]# ll
total 16
-rw-r--r-- 1 root root 509 Apr 4 09:05 etcd01.conf
-rw-r--r-- 1 root root 509 Apr 4 09:05 etcd02.conf
-rw-r--r-- 1 root root 509 Apr 4 09:05 etcd03.conf
-rw-r--r-- 1 root root 535 Apr 4 09:05 etcd.service

10.3 Distribute the files

# Create the directories etcd needs at runtime

mkdir -p /var/lib/etcd/default.etcd

ssh vm02 "mkdir -p /var/lib/etcd/default.etcd"

ssh vm03 "mkdir -p /var/lib/etcd/default.etcd"

# Create the etcd configuration directories

mkdir -p /opt/etcd/{bin,cfg,ssl}

ssh vm02 "mkdir -p /opt/etcd/{bin,cfg,ssl}"

ssh vm03 "mkdir -p /opt/etcd/{bin,cfg,ssl}"

# Distribute the etcd executables

scp -r /opt/TLS/download/etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

scp -r /opt/TLS/download/etcd-v3.4.9-linux-amd64/{etcd,etcdctl} vm02:/opt/etcd/bin/

scp -r /opt/TLS/download/etcd-v3.4.9-linux-amd64/{etcd,etcdctl} vm03:/opt/etcd/bin/

# Distribute the etcd configuration files

scp -r /opt/TLS/etcd/cfg/etcd01.conf /opt/etcd/cfg/etcd.conf

scp -r /opt/TLS/etcd/cfg/etcd02.conf vm02:/opt/etcd/cfg/etcd.conf

scp -r /opt/TLS/etcd/cfg/etcd03.conf vm03:/opt/etcd/cfg/etcd.conf

# Distribute the etcd service unit file

scp -r /opt/TLS/etcd/cfg/etcd.service /usr/lib/systemd/system/etcd.service

scp -r /opt/TLS/etcd/cfg/etcd.service vm02:/usr/lib/systemd/system/etcd.service

scp -r /opt/TLS/etcd/cfg/etcd.service vm03:/usr/lib/systemd/system/etcd.service

# Distribute the etcd certificate files

scp -r /opt/TLS/etcd/ssl/*pem /opt/etcd/ssl

scp -r /opt/TLS/etcd/ssl/*pem vm02:/opt/etcd/ssl

scp -r /opt/TLS/etcd/ssl/*pem vm03:/opt/etcd/ssl
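Most of the copy commands above repeat the same source and destination for the local machine and for each remote node. A small helper can express that pattern once. The sketch below is an illustration only: the names `run`, `push`, `NODES` and `DRY_RUN` are assumptions, and by default it only prints the commands it would run. The per-node `etcd0N.conf` files are not covered, because each node receives a different source file.

```shell
#!/usr/bin/env bash
NODES="vm02 vm03"          # remote nodes; vm01 is the local machine
DRY_RUN="${DRY_RUN:-1}"    # 1 = only print the commands (default in this sketch)

# run CMD...: print the command, and execute it unless DRY_RUN=1.
run() {
    echo "+ $*"
    if [ "$DRY_RUN" != "1" ]; then "$@"; fi
}

# push SRC DEST: copy SRC to DEST locally and to the same path on every node.
push() {
    local src="$1" dest="$2" node
    run cp -r "$src" "$dest"
    for node in $NODES; do
        run scp -r "$src" "${node}:${dest}"
    done
}

push /opt/TLS/etcd/cfg/etcd.service /usr/lib/systemd/system/etcd.service
push /opt/TLS/etcd/ssl/ca.pem /opt/etcd/ssl/
```

Setting `DRY_RUN=0` before sourcing the snippet would execute the copies instead of printing them.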

10.4 Verify the files

# Verify the etcd executables
[root@vm01 cfg]# ls -l /opt/etcd/bin/
total 40472
-rwxr-xr-x 1 root root 23827424 Apr 3 12:38 etcd
-rwxr-xr-x 1 root root 17612384 Apr 3 12:38 etcdctl
[root@vm01 cfg]# ssh vm02 "ls -l /opt/etcd/bin/"
total 40472
-rwxr-xr-x 1 root root 23827424 Apr 3 12:38 etcd
-rwxr-xr-x 1 root root 17612384 Apr 3 12:38 etcdctl
[root@vm01 cfg]# ssh vm03 "ls -l /opt/etcd/bin/"
total 40472
-rwxr-xr-x 1 root root 23827424 Apr 3 12:38 etcd
-rwxr-xr-x 1 root root 17612384 Apr 3 12:38 etcdctl
# Verify the etcd configuration files
[root@vm01 cfg]# ls -l /opt/etcd/cfg/
total 4
-rw-r--r-- 1 root root 509 Apr 3 12:38 etcd.conf
[root@vm01 cfg]# ssh vm02 "ls -l /opt/etcd/cfg/"
total 4
-rw-r--r-- 1 root root 509 Apr 3 12:38 etcd.conf
[root@vm01 cfg]# ssh vm03 "ls -l /opt/etcd/cfg/"
total 4
-rw-r--r-- 1 root root 509 Apr 4 09:16 etcd.conf
# Verify the etcd service unit file
[root@vm01 cfg]# ls -l /usr/lib/systemd/system/etcd*
-rw-r--r-- 1 root root 535 Apr 3 12:39 /usr/lib/systemd/system/etcd.service
[root@vm01 cfg]# ssh vm02 "ls -l /usr/lib/systemd/system/etcd*"
-rw-r--r-- 1 root root 535 Apr 3 12:39 /usr/lib/systemd/system/etcd.service
[root@vm01 cfg]# ssh vm03 "ls -l /usr/lib/systemd/system/etcd*"
-rw-r--r-- 1 root root 535 Apr 4 09:17 /usr/lib/systemd/system/etcd.service
# Verify the etcd certificate files
[root@vm01 cfg]# ls -l /opt/etcd/ssl
total 16
-rw------- 1 root root 1679 Apr 3 12:39 ca-key.pem
-rw-r--r-- 1 root root 1216 Apr 3 12:39 ca.pem
-rw------- 1 root root 1675 Apr 3 12:39 server-key.pem
-rw-r--r-- 1 root root 1338 Apr 3 12:39 server.pem
[root@vm01 cfg]# ssh vm02 "ls -l /opt/etcd/ssl"
total 16
-rw------- 1 root root 1679 Apr 3 12:39 ca-key.pem
-rw-r--r-- 1 root root 1216 Apr 3 12:39 ca.pem
-rw------- 1 root root 1675 Apr 3 12:39 server-key.pem
-rw-r--r-- 1 root root 1338 Apr 3 12:39 server.pem
[root@vm01 cfg]# ssh vm03 "ls -l /opt/etcd/ssl"
total 16
-rw------- 1 root root 1679 Apr 4 09:17 ca-key.pem
-rw-r--r-- 1 root root 1216 Apr 4 09:17 ca.pem
-rw------- 1 root root 1675 Apr 4 09:17 server-key.pem
-rw-r--r-- 1 root root 1338 Apr 4 09:17 server.pem

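10.5 Start the etcd cluster

# Run the following commands on vm01, vm02 and vm03 in that order. The command on vm01 appears to hang at first because it is waiting for the other members to come up.

# On vm01: start etcd, enable it at boot, and check its status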

[root@vm01 cfg]# systemctl daemon-reload && systemctl start etcd && systemctl enable etcd && systemctl status etcd

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Sun 2022-04-03 12:52:39 CST; 83ms ago

Main PID: 1281 (etcd)

CGroup: /system.slice/etcd.service

└─1281 /opt/etcd/bin/etcd --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --peer-cert-file=/opt/etcd/ssl/server.pem --peer-key-file=/opt/etcd/ssl/server-key.pem -...

Apr 03 12:52:39 vm01 etcd[1281]: {"level":"info","ts":"2022-04-03T12:52:39.282+0800","caller":"raft/node.go:325","msg":"raft.node: 6571fb7574e87dba elected leader 6571fb7574e87dba at term 4"}

Apr 03 12:52:39 vm01 etcd[1281]: {"level":"info","ts":"2022-04-03T12:52:39.290+0800","caller":"etcdserver/server.go:2036","msg":"published local member to cluster through raft","local-member-id":"6571fb...

Apr 03 12:52:39 vm01 systemd[1]: Started Etcd Server.

Apr 03 12:52:39 vm01 etcd[1281]: {"level":"info","ts":"2022-04-03T12:52:39.299+0800","caller":"embed/serve.go:191","msg":"serving client traffic securely","address":"192.168.10.11:2379"}

Apr 03 12:52:39 vm01 etcd[1281]: {"level":"warn","ts":"2022-04-03T12:52:39.338+0800","caller":"etcdserver/cluster_util.go:315","msg":"failed to reach the peer URL","address":"https://192.168.10.13:2380/...

Apr 03 12:52:39 vm01 etcd[1281]: {"level":"warn","ts":"2022-04-03T12:52:39.338+0800","caller":"etcdserver/cluster_util.go:168","msg":"failed to get version","remote-member-id":"d1fbb74bc6...ction refused"}

Apr 03 12:52:39 vm01 etcd[1281]: {"level":"info","ts":"2022-04-03T12:52:39.338+0800","caller":"etcdserver/server.go:2527","msg":"setting up initial cluster version","cluster-version":"3.0"}

Apr 03 12:52:39 vm01 etcd[1281]: {"level":"info","ts":"2022-04-03T12:52:39.341+0800","caller":"membership/cluster.go:558","msg":"set initial cluster version","cluster-id":"a967fee455377b3...version":"3.0"}

Apr 03 12:52:39 vm01 etcd[1281]: {"level":"info","ts":"2022-04-03T12:52:39.341+0800","caller":"api/capability.go:76","msg":"enabled capabilities for version","cluster-version":"3.0"}

Apr 03 12:52:39 vm01 etcd[1281]: {"level":"info","ts":"2022-04-03T12:52:39.341+0800","caller":"etcdserver/server.go:2559","msg":"cluster version is updated","cluster-version":"3.0"}

Hint: Some lines were ellipsized, use -l to show in full.

# On vm02: start etcd, enable it at boot, and check its status

[root@vm02 ~]# systemctl daemon-reload && systemctl start etcd && systemctl enable etcd && systemctl status etcd

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Sun 2022-04-03 12:52:41 CST; 76ms ago

Main PID: 1188 (etcd)

CGroup: /system.slice/etcd.service

└─1188 /opt/etcd/bin/etcd --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --peer-cert-file=/opt/etcd/ssl/server.pem --peer-key-file=/opt/etcd/ssl/server-key.pem -...

Apr 03 12:52:41 vm02 etcd[1188]: {"level":"info","ts":"2022-04-03T12:52:41.311+0800","caller":"raft/raft.go:811","msg":"9b449b0ff1d4c375 [logterm: 1, index: 3] sent MsgVote request to d1f...e5c at term 2"}

Apr 03 12:52:41 vm02 etcd[1188]: {"level":"info","ts":"2022-04-03T12:52:41.582+0800","caller":"raft/raft.go:859","msg":"9b449b0ff1d4c375 [term: 2] received a MsgVote message with higher t...dba [term: 4]"}

Apr 03 12:52:41 vm02 etcd[1188]: {"level":"info","ts":"2022-04-03T12:52:41.582+0800","caller":"raft/raft.go:700","msg":"9b449b0ff1d4c375 became follower at term 4"}

Apr 03 12:52:41 vm02 etcd[1188]: {"level":"info","ts":"2022-04-03T12:52:41.583+0800","caller":"raft/raft.go:960","msg":"9b449b0ff1d4c375 [logterm: 1, index: 3, vote: 0] cast MsgVote for 6... 3] at term 4"}

Apr 03 12:52:41 vm02 etcd[1188]: {"level":"info","ts":"2022-04-03T12:52:41.588+0800","caller":"raft/node.go:325","msg":"raft.node: 9b449b0ff1d4c375 elected leader 6571fb7574e87dba at term 4"}

Apr 03 12:52:41 vm02 etcd[1188]: {"level":"info","ts":"2022-04-03T12:52:41.601+0800","caller":"etcdserver/server.go:2036","msg":"published local member to cluster through raft","local-member-id":"9b449b...

Apr 03 12:52:41 vm02 systemd[1]: Started Etcd Server.

Apr 03 12:52:41 vm02 etcd[1188]: {"level":"info","ts":"2022-04-03T12:52:41.610+0800","caller":"embed/serve.go:191","msg":"serving client traffic securely","address":"192.168.10.12:2379"}

Apr 03 12:52:41 vm02 etcd[1188]: {"level":"info","ts":"2022-04-03T12:52:41.644+0800","caller":"membership/cluster.go:558","msg":"set initial cluster version","cluster-id":"a967fee455377b3...version":"3.0"}

Apr 03 12:52:41 vm02 etcd[1188]: {"level":"info","ts":"2022-04-03T12:52:41.645+0800","caller":"api/capability.go:76","msg":"enabled capabilities for version","cluster-version":"3.0"}

Hint: Some lines were ellipsized, use -l to show in full.

# On vm03: start etcd, enable it at boot, and check its status

[root@vm03 ~]# systemctl daemon-reload && systemctl start etcd && systemctl enable etcd && systemctl status etcd

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2022-04-04 09:29:12 CST; 90ms ago

Main PID: 1160 (etcd)

CGroup: /system.slice/etcd.service

└─1160 /opt/etcd/bin/etcd --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --peer-cert-file=/opt/etcd/ssl/server.pem --peer-key-file=/opt/etcd/ssl/server-key.pem -...

Apr 04 09:29:12 vm03 etcd[1160]: {"level":"info","ts":"2022-04-04T09:29:12.907+0800","caller":"membership/cluster.go:558","msg":"set initial cluster version","cluster-id":"a967fee455377b3...version":"3.0"}

Apr 04 09:29:12 vm03 etcd[1160]: {"level":"info","ts":"2022-04-04T09:29:12.907+0800","caller":"api/capability.go:76","msg":"enabled capabilities for version","cluster-version":"3.0"}

Apr 04 09:29:12 vm03 etcd[1160]: {"level":"info","ts":"2022-04-04T09:29:12.907+0800","caller":"etcdserver/server.go:2036","msg":"published local member to cluster through raft","local-member-id":"d1fbb7...

Apr 04 09:29:12 vm03 systemd[1]: Started Etcd Server.

Apr 04 09:29:12 vm03 etcd[1160]: {"level":"info","ts":"2022-04-04T09:29:12.915+0800","caller":"embed/serve.go:191","msg":"serving client traffic securely","address":"192.168.10.13:2379"}

Apr 04 09:29:12 vm03 etcd[1160]: {"level":"info","ts":"2022-04-04T09:29:12.916+0800","caller":"etcdserver/server.go:715","msg":"initialized peer connections; fast-forwarding election ticks","local-membe...

Apr 04 09:29:12 vm03 etcd[1160]: {"level":"info","ts":"2022-04-04T09:29:12.932+0800","caller":"rafthttp/stream.go:250","msg":"set message encoder","from":"d1fbb74bc6a61e5c","to":"d1fbb74b...tream Message"}

Apr 04 09:29:12 vm03 etcd[1160]: {"level":"warn","ts":"2022-04-04T09:29:12.933+0800","caller":"rafthttp/stream.go:277","msg":"established TCP streaming connection with remote peer","strea...1fb7574e87dba"}

Apr 04 09:29:12 vm03 etcd[1160]: {"level":"info","ts":"2022-04-04T09:29:12.967+0800","caller":"rafthttp/stream.go:250","msg":"set message encoder","from":"d1fbb74bc6a61e5c","to":"d1fbb74b...eam MsgApp v2"}

Apr 04 09:29:12 vm03 etcd[1160]: {"level":"warn","ts":"2022-04-04T09:29:12.967+0800","caller":"rafthttp/stream.go:277","msg":"established TCP streaming connection with remote peer","strea...1fb7574e87dba"}

Hint: Some lines were ellipsized, use -l to show in full.

10.6 Check the etcd cluster status

# Run the following commands on any node in the cluster; vm01 is used here
ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
--cacert=/opt/etcd/ssl/ca.pem \
--cert=/opt/etcd/ssl/server.pem \
--key=/opt/etcd/ssl/server-key.pem \
--write-out=table \
--endpoints="https://192.168.10.11:2379,https://192.168.10.12:2379,https://192.168.10.13:2379" endpoint health

# Output
+----------------------------+--------+-------------+-------+
|          ENDPOINT          | HEALTH |    TOOK     | ERROR |
+----------------------------+--------+-------------+-------+
| https://192.168.10.11:2379 |   true | 10.702229ms |       |
| https://192.168.10.13:2379 |   true |  18.81801ms |       |
| https://192.168.10.12:2379 |   true | 18.017598ms |       |
+----------------------------+--------+-------------+-------+

ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
--cacert=/opt/etcd/ssl/ca.pem \
--cert=/opt/etcd/ssl/server.pem \
--key=/opt/etcd/ssl/server-key.pem \
--write-out=table \
--endpoints="https://192.168.10.11:2379,https://192.168.10.12:2379,https://192.168.10.13:2379" endpoint status

# Output
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|          ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://192.168.10.11:2379 | 6571fb7574e87dba |   3.4.9 |   20 kB |      true |      false |         4 |          9 |                  9 |        |
| https://192.168.10.12:2379 | 9b449b0ff1d4c375 |   3.4.9 |   25 kB |     false |      false |         4 |          9 |                  9 |        |
| https://192.168.10.13:2379 | d1fbb74bc6a61e5c |   3.4.9 |   25 kB |     false |      false |         4 |          9 |                  9 |        |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

The etcd cluster is now up. According to the table above, vm01 (192.168.10.11) is the leader. If something goes wrong, inspect the system log with "tail -fn 500 /var/log/messages".
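Reading the leader off the status table by eye works for three nodes, but it can also be scripted. The following is a minimal sketch, assuming the table layout shown above (ENDPOINT in the first column, IS LEADER in the fifth); the function name etcd_leader is hypothetical and not part of etcdctl.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): print the endpoint whose
# IS LEADER column is "true" in `etcdctl endpoint status --write-out=table` output.
etcd_leader() {
  # Rows are split on "|": field 2 is ENDPOINT, field 6 is IS LEADER.
  # Border rows ("+----+") contain no "|" and are skipped by the NF check.
  awk -F'|' '
    NF >= 7 {
      endpoint = $2; leader = $6
      gsub(/[ \t]/, "", endpoint); gsub(/[ \t]/, "", leader)
      if (leader == "true") print endpoint
    }'
}
```

To use it, pipe the "endpoint status" command shown above into etcd_leader. If a later etcd release changes the column order, the field numbers would need adjusting.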

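11. Install docker-ce

11.1 Create the docker service files

# Run on vm01. For convenience, the executables and the config files are kept in separate directories

# Executables go in /opt/TLS/download/docker/bin

# Config files go in /opt/TLS/download/docker/cfg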

cd /opt/TLS/download

mkdir -p bin

mv docker/* bin

mv bin docker

mkdir -p docker/cfg

cd /opt/TLS/download/docker/cfg

# Create the config files

cd /opt/TLS/download/docker/cfg

cat > docker.service << EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/local/bin/dockerd

ExecReload=/bin/kill -s HUP \$MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

tee daemon.json << 'EOF'

{

"registry-mirrors": ["https://ung2thfc.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "50m"

},

"storage-driver": "overlay2"

}

EOF

# View the directory layout

[root@vm01 docker]# cd /opt/TLS/download/docker/

[root@vm01 docker]# tree ./

./
├── bin
│   ├── containerd
│   ├── containerd-shim
│   ├── containerd-shim-runc-v2
│   ├── ctr
│   ├── docker
│   ├── dockerd
│   ├── docker-init
│   ├── docker-proxy
│   └── runc
└── cfg
    ├── daemon.json
    └── docker.service

11.2 Distribute the files

# Create the docker directory
mkdir -p /etc/docker
ssh vm02 "mkdir -p /etc/docker"
ssh vm03 "mkdir -p /etc/docker"

# Distribute the docker service file
scp /opt/TLS/download/docker/cfg/docker.service /usr/lib/systemd/system/docker.service
scp /opt/TLS/download/docker/cfg/docker.service vm02:/usr/lib/systemd/system/docker.service
scp /opt/TLS/download/docker/cfg/docker.service vm03:/usr/lib/systemd/system/docker.service

# Distribute the docker config file
scp /opt/TLS/download/docker/cfg/daemon.json /etc/docker/daemon.json
scp /opt/TLS/download/docker/cfg/daemon.json vm02:/etc/docker/daemon.json
scp /opt/TLS/download/docker/cfg/daemon.json vm03:/etc/docker/daemon.json

# Distribute the docker executables
scp /opt/TLS/download/docker/bin/* /usr/local/bin
scp /opt/TLS/download/docker/bin/* vm02:/usr/local/bin
scp /opt/TLS/download/docker/bin/* vm03:/usr/local/bin
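The per-node commands for the remote hosts repeat the same four steps, so they can be folded into a loop. This is only a sketch: the names NODES, SRC, RUN and distribute_docker are illustrative and do not appear in the original guide. By default the function only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch only: NODES, SRC and the RUN switch are illustrative names, not part of the original guide.
NODES="vm02 vm03"
SRC=/opt/TLS/download/docker

# With RUN unset, each command is printed via echo (dry run).
# Call it as `RUN= distribute_docker` to actually execute ssh/scp.
distribute_docker() {
  local run=${RUN-echo} node
  for node in $NODES; do
    $run ssh "$node" "mkdir -p /etc/docker"
    $run scp "$SRC/cfg/docker.service" "$node:/usr/lib/systemd/system/docker.service"
    $run scp "$SRC/cfg/daemon.json" "$node:/etc/docker/daemon.json"
    $run scp "$SRC"/bin/* "$node:/usr/local/bin"
  done
}
```

Reviewing the dry-run output first makes it easier to catch a wrong hostname or path before anything is copied. The local copies on vm01 are not covered by this loop.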

11.3 Verify the files

# Verify the docker service file
[root@vm01 docker]# ls -l /usr/lib/systemd/system/docker.service
-rw-r--r-- 1 root root 456 Apr 3 13:17 /usr/lib/systemd/system/docker.service
[root@vm01 docker]# ssh vm02 "ls -l /usr/lib/systemd/system/docker.service"
-rw-r--r-- 1 root root 456 Apr 3 13:17 /usr/lib/systemd/system/docker.service
[root@vm01 docker]# ssh vm03 "ls -l /usr/lib/systemd/system/docker.service"
-rw-r--r-- 1 root root 456 Apr 4 09:52 /usr/lib/systemd/system/docker.service

# Verify the docker config file
[root@vm01 docker]# ls -l /etc/docker/daemon.json
-rw-r--r-- 1 root root 219 Apr 3 13:17 /etc/docker/daemon.json
[root@vm01 docker]# ssh vm02 "ls -l /etc/docker/daemon.json"
-rw-r--r-- 1 root root 219 Apr 3 13:18 /etc/docker/daemon.json
[root@vm01 docker]# ssh vm03 "ls -l /etc/docker/daemon.json"
-rw-r--r-- 1 root root 219 Apr 4 09:52 /etc/docker/daemon.json

# Verify the docker executables
[root@vm01 docker]# ls -l /usr/local/bin/
total 241072
-rwxr-xr-x 1 root root 16659824 Apr 3 12:34 cfssl
-rwxr-xr-x 1 root root 13502544 Apr 3 12:34 cfssl-certinfo
-rwxr-xr-x 1 root root 11029744 Apr 3 12:34 cfssljson
-rwxr-xr-x 1 root root 33908392 Apr 3 13:19 containerd
-rwxr-xr-x 1 root root 6508544 Apr 3 13:19 containerd-shim
-rwxr-xr-x 1 root root 8609792 Apr 3 13:19 containerd-shim-runc-v2
-rwxr-xr-x 1 root root 21131264 Apr 3 13:19 ctr
-rwxr-xr-x 1 root root 52883616 Apr 3 13:19 docker
-rwxr-xr-x 1 root root 64758736 Apr 3 13:19 dockerd
-rwxr-xr-x 1 root root 708616 Apr 3 13:19 docker-init
-rwxr-xr-x 1 root root 2784145 Apr 3 13:19 docker-proxy
-rwxr-xr-x 1 root root 14352296 Apr 3 13:19 runc
[root@vm01 docker]# ssh vm02 "ls -l /usr/local/bin/"
total 200840
-rwxr-xr-x 1 root root 33908392 Apr 3 13:19 containerd
-rwxr-xr-x 1 root root 6508544 Apr 3 13:19 containerd-shim
-rwxr-xr-x 1 root root 8609792 Apr 3 13:19 containerd-shim-runc-v2
-rwxr-xr-x 1 root root 21131264 Apr 3 13:19 ctr
-rwxr-xr-x 1 root root 52883616 Apr 3 13:19 docker
-rwxr-xr-x 1 root root 64758736 Apr 3 13:19 dockerd
-rwxr-xr-x 1 root root 708616 Apr 3 13:19 docker-init
-rwxr-xr-x 1 root root 2784145 Apr 3 13:19 docker-proxy
-rwxr-xr-x 1 root root 14352296 Apr 3 13:19 runc
[root@vm01 docker]# ssh vm03 "ls -l /usr/local/bin/"
total 200840
-rwxr-xr-x 1 root root 33908392 Apr 4 09:54 containerd
-rwxr-xr-x 1 root root 6508544 Apr 4 09:54 containerd-shim
-rwxr-xr-x 1 root root 8609792 Apr 4 09:54 containerd-shim-runc-v2
-rwxr-xr-x 1 root root 21131264 Apr 4 09:54 ctr
-rwxr-xr-x 1 root root 52883616 Apr 4 09:54 docker
-rwxr-xr-x 1 root root 64758736 Apr 4 09:54 dockerd
-rwxr-xr-x 1 root root 708616 Apr 4 09:54 docker-init
-rwxr-xr-x 1 root root 2784145 Apr 4 09:54 docker-proxy
-rwxr-xr-x 1 root root 14352296 Apr 4 09:54 runc

11.4 Start docker

# On vm01: start docker, enable it at boot, and check its status
[root@vm01 docker]# systemctl daemon-reload && systemctl start docker && systemctl enable docker && systemctl status docker
Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.
● docker.service - Docker Application Container Engine
   Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2022-04-03 13:26:46 CST; 72ms ago
     Docs: https://docs.docker.com
 Main PID: 1466 (dockerd)
   CGroup: /system.slice/docker.service
           ├─1466 /usr/local/bin/dockerd
           └─1471 containerd --config /var/run/docker/containerd/containerd.toml --log-level info

Apr 03 13:26:46 vm01 dockerd[1466]: time="2022-04-03T13:26:46.552291845+08:00" level=info msg="ClientConn switching balancer to \"pick_first\"" module=grpc
Apr 03 13:26:46 vm01 dockerd[1466]: time="2022-04-03T13:26:46.577035980+08:00" level=warning msg="Your kernel does not support cgroup blkio weight"
Apr 03 13:26:46 vm01 dockerd[1466]: time="2022-04-03T13:26:46.577384262+08:00" level=warning msg="Your kernel does not support cgroup blkio weight_device"
Apr 03 13:26:46 vm01 dockerd[1466]: time="2022-04-03T13:26:46.577753307+08:00" level=info msg="Loading containers: start."
Apr 03 13:26:46 vm01 dockerd[1466]: time="2022-04-03T13:26:46.654683641+08:00" level=info msg="Default bridge (docker0) is assigned with an IP address 172.17.0.0/16. Daemon option --bip ca...ed IP address"
Apr 03 13:26:46 vm01 dockerd[1466]: time="2022-04-03T13:26:46.696405877+08:00" level=info msg="Loading containers: done."
Apr 03 13:26:46 vm01 dockerd[1466]: time="2022-04-03T13:26:46.705318380+08:00" level=info msg="Docker daemon" commit=79ea9d3 graphdriver(s)=overlay2 version=20.10.9
Apr 03 13:26:46 vm01 dockerd[1466]: time="2022-04-03T13:26:46.705785575+08:00" level=info msg="Daemon has completed initialization"
Apr 03 13:26:46 vm01 systemd[1]: Started Docker Application Container Engine.
Apr 03 13:26:46 vm01 dockerd[1466]: time="2022-04-03T13:26:46.739607525+08:00" level=info msg="API listen on /var/run/docker.sock"
Hint: Some lines were ellipsized, use -l to show in full.

# On vm02: start docker, enable it at boot, and check its status
[root@vm02 ~]# systemctl daemon-reload && systemctl start docker && systemctl enable docker && systemctl status docker
Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.
● docker.service - Docker Application Container Engine
   Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2022-04-03 13:26:53 CST; 84ms ago
     Docs: https://docs.docker.com
 Main PID: 1301 (dockerd)
   CGroup: /system.slice/docker.service
           ├─1301 /usr/local/bin/dockerd
           └─1307 containerd --config /var/run/docker/containerd/containerd.toml --log-level info

Apr 03 13:26:53 vm02 dockerd[1301]: time="2022-04-03T13:26:53.245105288+08:00" level=info msg="ClientConn switching balancer to \"pick_first\"" module=grpc
Apr 03 13:26:53 vm02 dockerd[1301]: time="2022-04-03T13:26:53.267932539+08:00" level=warning msg="Your kernel does not support cgroup blkio weight"
Apr 03 13:26:53 vm02 dockerd[1301]: time="2022-04-03T13:26:53.268280419+08:00" level=warning msg="Your kernel does not support cgroup blkio weight_device"
Apr 03 13:26:53 vm02 dockerd[1301]: time="2022-04-03T13:26:53.268627605+08:00" level=info msg="Loading containers: start."
Apr 03 13:26:53 vm02 dockerd[1301]: time="2022-04-03T13:26:53.356983369+08:00" level=info msg="Default bridge (docker0) is assigned with an IP address 172.17.0.0/16. Daemon option --bip ca...ed IP address"
Apr 03 13:26:53 vm02 dockerd[1301]: time="2022-04-03T13:26:53.402881653+08:00" level=info msg="Loading containers: done."
Apr 03 13:26:53 vm02 dockerd[1301]: time="2022-04-03T13:26:53.417527585+08:00" level=info msg="Docker daemon" commit=79ea9d3 graphdriver(s)=overlay2 version=20.10.9
Apr 03 13:26:53 vm02 dockerd[1301]: time="2022-04-03T13:26:53.417931806+08:00" level=info msg="Daemon has completed initialization"
Apr 03 13:26:53 vm02 systemd[1]: Started Docker Application Container Engine.
Apr 03 13:26:53 vm02 dockerd[1301]: time="2022-04-03T13:26:53.482157061+08:00" level=info msg="API listen on /var/run/docker.sock"
Hint: Some lines were ellipsized, use -l to show in full.

# On vm03: start docker, enable it at boot, and check its status
[root@vm03 ~]# systemctl daemon-reload && systemctl start docker && systemctl enable docker && systemctl status docker
Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.
● docker.service - Docker Application Container Engine
   Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)
   Active: active (running) since Mon 2022-04-04 10:00:48 CST; 79ms ago
     Docs: https://docs.docker.com
 Main PID: 1260 (dockerd)
   CGroup: /system.slice/docker.service
           ├─1260 /usr/local/bin/dockerd
           └─1266 containerd --config /var/run/docker/containerd/containerd.toml --log-level info

Apr 04 10:00:48 vm03 dockerd[1260]: time="2022-04-04T10:00:48.741931283+08:00" level=info msg="ClientConn switching balancer to \"pick_first\"" module=grpc
Apr 04 10:00:48 vm03 dockerd[1260]: time="2022-04-04T10:00:48.762734549+08:00" level=warning msg="Your kernel does not support cgroup blkio weight"
Apr 04 10:00:48 vm03 dockerd[1260]: time="2022-04-04T10:00:48.763052152+08:00" level=warning msg="Your kernel does not support cgroup blkio weight_device"
Apr 04 10:00:48 vm03 dockerd[1260]: time="2022-04-04T10:00:48.763369435+08:00" level=info msg="Loading containers: start."
Apr 04 10:00:48 vm03 dockerd[1260]: time="2022-04-04T10:00:48.843920653+08:00" level=info msg="Default bridge (docker0) is assigned with an IP address 172.17.0.0/16. Daemon option --bip ca...ed IP address"
Apr 04 10:00:48 vm03 dockerd[1260]: time="2022-04-04T10:00:48.896461096+08:00" level=info msg="Loading containers: done."
Apr 04 10:00:48 vm03 dockerd[1260]: time="2022-04-04T10:00:48.910089764+08:00" level=info msg="Docker daemon" commit=79ea9d3 graphdriver(s)=overlay2 version=20.10.9
Apr 04 10:00:48 vm03 dockerd[1260]: time="2022-04-04T10:00:48.910487468+08:00" level=info msg="Daemon has completed initialization"
Apr 04 10:00:48 vm03 systemd[1]: Started Docker Application Container Engine.
Apr 04 10:00:48 vm03 dockerd[1260]: time="2022-04-04T10:00:48.942539314+08:00" level=info msg="API listen on /var/run/docker.sock"
Hint: Some lines were ellipsized, use -l to show in full.

# Run "docker info" on vm01, vm02 and vm03; output like the following means docker is working
Client:
 Context: default
 Debug Mode: false

Server:
 Containers: 0
  Running: 0
  Paused: 0
  Stopped: 0
 Images: 0
 Server Version: 20.10.9
 Storage Driver: overlay2
  Backing Filesystem: xfs
  Supports d_type: true
  Native Overlay Diff: true
  userxattr: false
 Logging Driver: json-file
 Cgroup Driver: systemd
 Cgroup Version: 1
 Plugins:
  Volume: local
  Network: bridge host ipvlan macvlan null overlay
  Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog
 Swarm: inactive
 Runtimes: io.containerd.runtime.v1.linux runc io.containerd.runc.v2
 Default Runtime: runc
 Init Binary: docker-init
 containerd version: 5b46e404f6b9f661a205e28d59c982d3634148f8
 runc version: v1.0.2-0-g52b36a2d
 init version: de40ad0
 Security Options:
  seccomp
   Profile: default
 Kernel Version: 5.17.1-1.el7.elrepo.x86_64
 Operating System: CentOS Linux 7 (Core)
 OSType: linux
 Architecture: x86_64
 CPUs: 1
 Total Memory: 1.907GiB
 Name: vm01
 ID: 3WPS:FK3T:D5HX:ZSNS:D6NE:NGNQ:TWTO:OE6B:HQYG:SXAQ:6J2V:PA6K
 Docker Root Dir: /var/lib/docker
 Debug Mode: false
 Registry: https://index.docker.io/v1/
 Labels:
 Experimental: false
 Insecure Registries:
  127.0.0.0/8
 Registry Mirrors:
  https://ung2thfc.mirror.aliyuncs.com/
 Live Restore Enabled: false
 Product License: Community Engine

Docker is now deployed on all nodes.

12. Deploy the Master

12.1 Self-signed CA certificate

12.1.1 Generate the CA certificate configuration

cd /opt/TLS/k8s/ssl

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

12.1.2 Generate the CA certificate

# Generate the CA certificate files
[root@vm01 ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
2022/04/03 13:38:51 [INFO] generating a new CA key and certificate from CSR
2022/04/03 13:38:51 [INFO] generate received request
2022/04/03 13:38:51 [INFO] received CSR
2022/04/03 13:38:51 [INFO] generating key: rsa-2048
2022/04/03 13:38:51 [INFO] encoded CSR
2022/04/03 13:38:51 [INFO] signed certificate with serial number 652185253661746806409242928399719456314448070149

# List the generated certificate files
[root@vm01 ssl]# ll
total 20
-rw-r--r-- 1 root root 294 Apr 3 13:37 ca-config.json
-rw-r--r-- 1 root root 1001 Apr 3 13:38 ca.csr
-rw-r--r-- 1 root root 264 Apr 3 13:37 ca-csr.json
-rw------- 1 root root 1675 Apr 3 13:38 ca-key.pem
-rw-r--r-- 1 root root 1310 Apr 3 13:38 ca.pem

# This step produces two files: ca.pem and ca-key.pem

12.2 Deploy the Apiserver

12.2.1 Create the certificate signing request file

cd /opt/TLS/k8s/ssl

cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.10.11",

"192.168.10.12",

"192.168.10.13",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

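# The hosts field in the file above must list the IP of every Master, with none left out. To make later scale-out easier, you can add a few reserved IPs in advance.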
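Since a missing address only shows up later as a TLS error, it can help to check the CSR file before signing it. Below is a minimal sketch, assuming the file uses the quoted-string layout shown above; check_csr_hosts is a hypothetical helper name, and the check is a plain text match rather than a real JSON parse.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of the original guide): confirm that every
# required address appears as a quoted entry in a cfssl CSR file before signing it.
check_csr_hosts() {
  local csr=$1 missing=0 host
  shift
  for host in "$@"; do
    # -F matches the string literally, so the dots in an IP are not treated as wildcards.
    if ! grep -qF "\"$host\"" "$csr"; then
      echo "missing from $csr: $host" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, "check_csr_hosts server-csr.json 192.168.10.11 192.168.10.12 192.168.10.13" returns a non-zero status and names the absent address if one of the Master IPs was left out.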

12.2.2 Issue the apiserver certificate

[root@vm01 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
2022/04/03 13:55:17 [INFO] generate received request
2022/04/03 13:55:17 [INFO] received CSR
2022/04/03 13:55:17 [INFO] generating key: rsa-2048
2022/04/03 13:55:17 [INFO] encoded CSR
2022/04/03 13:55:17 [INFO] signed certificate with serial number 427283511171072372380793803662853692846755378337

# List the generated certificate files
[root@vm01 ssl]# ll
total 36
-rw-r--r-- 1 root root 294 Apr 3 13:37 ca-config.json
-rw-r--r-- 1 root root 1001 Apr 3 13:38 ca.csr
-rw-r--r-- 1 root root 264 Apr 3 13:37 ca-csr.json
-rw------- 1 root root 1675 Apr 3 13:38 ca-key.pem
-rw-r--r-- 1 root root 1310 Apr 3 13:38 ca.pem
-rw-r--r-- 1 root root 1261 Apr 3 13:55 server.csr
-rw-r--r-- 1 root root 557 Apr 3 13:55 server-csr.json
-rw------- 1 root root 1675 Apr 3 13:55 server-key.pem
-rw-r--r-- 1 root root 1627 Apr 3 13:55 server.pem

# This step produces two files: server.pem and server-key.pem

12.2.3 Create the config file

cd /opt/TLS/k8s/cfg
cat > kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--insecure-port=0 \\
--etcd-servers=https://192.168.10.11:2379,https://192.168.10.12:2379,https://192.168.10.13:2379 \\
--bind-address=192.168.10.11 \\
--secure-port=6443 \\
--advertise-address=192.168.10.11 \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-32767 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname,InternalDNS,ExternalDNS \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--service-account-issuer=api \\
--service-account-signing-key-file=/opt/kubernetes/ssl/server-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--proxy-client-cert-file=/opt/kubernetes/ssl/server.pem \\
--proxy-client-key-file=/opt/kubernetes/ssl/server-key.pem \\
--requestheader-allowed-names=kubernetes \\
--requestheader-extra-headers-prefix=X-Remote-Extra- \\
--requestheader-group-headers=X-Remote-Group \\
--requestheader-username-headers=X-Remote-User \\
--enable-aggregator-routing=true \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
EOF

# About the two backslashes (\\) above: the first is the escape character, the second is the line-continuation backslash. The escape is needed because the unquoted EOF here-document would otherwise consume the backslash instead of writing it to the file.
# • --logtostderr: whether to log to stderr (false here, so logs go to --log-dir)
# • --v: log verbosity level
# • --log-dir: log directory
# • --etcd-servers: etcd cluster endpoints
# • --bind-address: listen address
# • --secure-port: HTTPS secure port
# • --advertise-address: address advertised to the cluster
# • --allow-privileged: allow privileged containers
# • --service-cluster-ip-range: virtual IP range for Services
# • --enable-admission-plugins: admission control plugins
# • --authorization-mode: authorization modes; enables RBAC and Node authorization
# • --enable-bootstrap-token-auth: enable the TLS bootstrap mechanism
# • --token-auth-file: bootstrap token file
# • --service-node-port-range: default port range allocated to NodePort Services
# • --kubelet-client-xxx: client certificate the apiserver uses to access the kubelet
# • --tls-xxx-file: apiserver HTTPS certificate
# • Flags that must be set on version 1.20 and later: --service-account-issuer, --service-account-signing-key-file
# • --etcd-xxxfile: certificates for connecting to the etcd cluster
# • --audit-log-xxx: audit log settings
# • Settings for enabling the aggregation layer:
# • --requestheader-client-ca-file, --proxy-client-cert-file, --proxy-client-key-file,
# • --requestheader-allowed-names, --requestheader-extra-headers-prefix,
# • --requestheader-group-headers, --requestheader-username-headers,
# • --enable-aggregator-routing
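The escaping rule for the backslashes is easier to see on a tiny file. The sketch below uses made-up names (demo_heredoc, DEMO_OPTS, /bin/demo) purely for illustration; it writes a three-line file through an unquoted here-document and shows what survives.

```shell
#!/usr/bin/env bash
# Illustration only: demo_heredoc, DEMO_OPTS and /bin/demo are made-up names,
# not files or binaries from this guide.
demo_heredoc() {
  local out=$1
  # Unquoted delimiter (EOF): the shell processes backslashes and variables in the body,
  # so "\\" is written as a single "\" and "\$" is written as a literal "$".
  cat > "$out" << EOF
DEMO_OPTS="--v=2 \\
--secure-port=6443"
ExecStart=/bin/demo \$DEMO_OPTS
EOF
}
```

After running "demo_heredoc /tmp/demo.conf", the first line of the file ends in a single backslash and the last line still contains the literal text $DEMO_OPTS. Without the escapes, the shell would join the two option lines and replace the variable with whatever value (usually empty) it has at that moment.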

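12.2.4 Enable the TLS Bootstrapping mechanism

TLS Bootstrapping: once TLS authentication is enabled on the Master apiserver, the kubelet and kube-proxy on each Node must present a valid certificate signed by the CA in order to talk to kube-apiserver. When there are many Nodes, issuing these client certificates by hand is a lot of work and also makes scaling the cluster more complicated. To simplify the process, Kubernetes introduced the TLS bootstrapping mechanism, which issues client certificates automatically: the kubelet requests a certificate from the apiserver as a low-privilege user, and the certificate is signed dynamically by the cluster CA. This approach is strongly recommended on Nodes. At present it is used mainly for the kubelet; kube-proxy still uses a single certificate that we issue ourselves.

# Create the token file
cat > token.csv << EOF
c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF

# Format: token,user name,UID,user group
# You can also generate your own token and substitute it:
# head -c 16 /dev/urandom | od -An -t x | tr -d ' '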
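The token generation and the CSV line can be combined so that the token is never pasted by hand. This is a rough sketch: gen_bootstrap_token and write_token_csv are illustrative helper names, and "od -t x1" is used here instead of the "-t x" form above so that the bytes are printed one at a time in a fixed order.

```shell
#!/usr/bin/env bash
# Sketch: gen_bootstrap_token and write_token_csv are illustrative helper names,
# not part of the original guide.
gen_bootstrap_token() {
  # 16 random bytes rendered as 32 lowercase hex characters, without spaces or newline.
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

write_token_csv() {
  local file=$1 token
  token=$(gen_bootstrap_token)
  # Same format as above: token,user name,UID,"user group"
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$token" > "$file"
}
```

Calling "write_token_csv token.csv" in /opt/TLS/k8s/cfg would produce a file in the same format as the one above. If you generate a new token, keep a note of it: the kubelet bootstrap kubeconfig created later has to carry the same value.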

12.2.5 Create the service file

cat > kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

# List the files generated by the commands above
[root@vm01 cfg]# ll
total 12
-rw-r--r-- 1 root root 1815 Apr 3 13:57 kube-apiserver.conf
-rw-r--r-- 1 root root 286 Apr 3 14:06 kube-apiserver.service
-rw-r--r-- 1 root root 84 Apr 3 13:57 token.csv

12.2.7 Distribute the files

# Create the kubernetes directories

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

# Copy the certificate files

scp -r /opt/TLS/k8s/ssl/*pem /opt/kubernetes/ssl/

# Copy the config files

scp -r /opt/TLS/k8s/cfg/token.csv /opt/kubernetes/cfg/

scp /opt/TLS/k8s/cfg/kube-apiserver.conf /opt/kubernetes/cfg/kube-apiserver.conf

# Copy the service file

scp /opt/TLS/k8s/cfg/kube-apiserver.service /usr/lib/systemd/system/kube-apiserver.service

# Copy the executables

scp /opt/TLS/download/kubernetes/server/bin/{kube-apiserver,kube-scheduler,kube-controller-manager} /opt/kubernetes/bin

scp /opt/TLS/download/kubernetes/server/bin/kubectl /usr/local/bin/

12.2.8 Verify the files

# Verify the certificate files

[root@vm01 cfg]# ll /opt/kubernetes/ssl/

total 16

-rw------- 1 root root 1675 Apr 3 14:11 ca-key.pem

-rw-r--r-- 1 root root 1310 Apr 3 14:11 ca.pem

-rw------- 1 root root 1675 Apr 3 14:11 server-key.pem

-rw-r--r-- 1 root root 1627 Apr 3 14:11 server.pem

# Verify the config files

[root@vm01 cfg]# ll /opt/kubernetes/cfg/token.csv

-rw-r--r-- 1 root root 84 Apr 3 14:11 /opt/kubernetes/cfg/token.csv

[root@vm01 cfg]# ll /opt/kubernetes/cfg/kube-apiserver.conf

-rw-r--r-- 1 root root 1815 Apr 3 14:12 /opt/kubernetes/cfg/kube-apiserver.conf

# Verify the service file

[root@vm01 cfg]# ll /usr/lib/systemd/system/kube-apiserver.service

-rw-r--r-- 1 root root 286 Apr 3 14:11 /usr/lib/systemd/system/kube-apiserver.service

# Verify the executables

[root@vm01 cfg]# ll /opt/kubernetes/bin/{kube-apiserver,kube-scheduler,kube-controller-manager}

-rwxr-xr-x 1 root root 131301376 Apr 3 14:12 /opt/kubernetes/bin/kube-apiserver

-rwxr-xr-x 1 root root 121110528 Apr 3 14:12 /opt/kubernetes/bin/kube-controller-manager

-rwxr-xr-x 1 root root 49618944 Apr 3 14:12 /opt/kubernetes/bin/kube-scheduler

[root@vm01 cfg]# ll /usr/local/bin/kubectl

-rwxr-xr-x 1 root root 46592000 Apr 3 14:12 /usr/local/bin/kubectl

12.2.9 Start kube-apiserver

[root@vm01 cfg]# systemctl daemon-reload && systemctl start kube-apiserver && systemctl enable kube-apiserver && systemctl status kube-apiserver
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.
● kube-apiserver.service - Kubernetes API Server
   Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2022-04-03 14:14:54 CST; 111ms ago
     Docs: https://github.com/kubernetes/kubernetes
 Main PID: 11765 (kube-apiserver)
   CGroup: /system.slice/kube-apiserver.service
           └─11765 /opt/kubernetes/bin/kube-apiserver --logtostderr=false --v=2 --log-dir=/opt/kubernetes/logs --insecure-port=0 --etcd-servers=https://192.168.10.11:2379,https://192.168.10.12:2379,http...

Apr 03 14:14:54 vm01 systemd[1]: Started Kubernetes API Server.

12.3 Deploy the ControllerManager

12.3.1 Create the config file

cd /opt/TLS/k8s/cfg
cat > kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect=true \\
--kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubeconfig \\
--bind-address=127.0.0.1 \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.244.0.0/16 \\
--service-cluster-ip-range=10.0.0.0/24 \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--cluster-signing-duration=87600h0m0s"
EOF

# • --kubeconfig: kubeconfig file used to connect to the apiserver
# • --leader-elect: elect a leader automatically when several instances of this component run (HA)
# • --cluster-signing-cert-file/--cluster-signing-key-file: the CA that automatically issues kubelet certificates; must be the same CA the apiserver uses

12.3.2 Generate the certificate signing request file

cd /opt/TLS/k8s/ssl

cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

12.3.3 Generate the certificate files

[root@vm01 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2022/04/03 14:19:13 [INFO] generate received request

2022/04/03 14:19:13 [INFO] received CSR

2022/04/03 14:19:13 [INFO] generating key: rsa-2048

2022/04/03 14:19:13 [INFO] encoded CSR

2022/04/03 14:19:13 [INFO] signed certificate with serial number 207379066893533311974100622812990123367796996104

2022/04/03 14:19:13 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@vm01 ssl]# ll kube-controller-manager*

-rw-r--r-- 1 root root 1045 Apr 3 14:19 kube-controller-manager.csr

-rw-r--r-- 1 root root 255 Apr 3 14:18 kube-controller-manager-csr.json

-rw------- 1 root root 1679 Apr 3 14:19 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1436 Apr 3 14:19 kube-controller-manager.pem

# This step produces kube-controller-manager.pem and kube-controller-manager-key.pem

12.3.4 Generate the kubeconfig file

# Set the cluster parameters
kubectl config set-cluster kubernetes \
--certificate-authority=/opt/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=https://192.168.10.11:6443 \
--kubeconfig=/opt/TLS/k8s/cfg/kube-controller-manager.kubeconfig

# Set the client credentials
kubectl config set-credentials kube-controller-manager \
--client-certificate=./kube-controller-manager.pem \
--client-key=./kube-controller-manager-key.pem \
--embed-certs=true \
--kubeconfig=/opt/TLS/k8s/cfg/kube-controller-manager.kubeconfig

# Set the context parameters
kubectl config set-context default \
--cluster=kubernetes \
--user=kube-controller-manager \
--kubeconfig=/opt/TLS/k8s/cfg/kube-controller-manager.kubeconfig

# Select the default context
kubectl config use-context default --kubeconfig=/opt/TLS/k8s/cfg/kube-controller-manager.kubeconfig

12.3.5 Generate the service file

cd /opt/TLS/k8s/cfg
cat > kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

12.3.6 Distribute the files

# Distribute the certificate files
scp -r /opt/TLS/k8s/ssl/kube-controller-manager*.pem /opt/kubernetes/ssl/

# Distribute the config file
scp -r /opt/TLS/k8s/cfg/kube-controller-manager.conf /opt/kubernetes/cfg/

# Distribute the service file
scp /opt/TLS/k8s/cfg/kube-controller-manager.service /usr/lib/systemd/system/kube-controller-manager.service

# Distribute the kubeconfig file
scp /opt/TLS/k8s/cfg/kube-controller-manager.kubeconfig /opt/kubernetes/cfg/kube-controller-manager.kubeconfig

12.3.7 Verify the files

# Verify the certificate files
[root@vm01 cfg]# ll /opt/kubernetes/ssl/kube-controller-manager*.pem
-rw------- 1 root root 1679 Apr 3 14:30 /opt/kubernetes/ssl/kube-controller-manager-key.pem
-rw-r--r-- 1 root root 1436 Apr 3 14:30 /opt/kubernetes/ssl/kube-controller-manager.pem

# Verify the config file
[root@vm01 cfg]# ll /opt/kubernetes/cfg/kube-controller-manager.conf
-rw-r--r-- 1 root root 582 Apr 3 14:30 /opt/kubernetes/cfg/kube-controller-manager.conf

# Verify the service file
[root@vm01 cfg]# ll /usr/lib/systemd/system/kube-controller-manager.service
-rw-r--r-- 1 root root 321 Apr 3 14:30 /usr/lib/systemd/system/kube-controller-manager.service

# Verify the kubeconfig file
[root@vm01 cfg]# ll /opt/kubernetes/cfg/kube-controller-manager.kubeconfig
-rw------- 1 root root 6279 Apr 3 14:30 /opt/kubernetes/cfg/kube-controller-manager.kubeconfig

12.3.8 Start the ControllerManager

[root@vm01 cfg]# systemctl daemon-reload && systemctl start kube-controller-manager && systemctl enable kube-controller-manager && systemctl status kube-controller-manager
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.
● kube-controller-manager.service - Kubernetes Controller Manager
   Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2022-04-03 14:33:09 CST; 111ms ago
     Docs: https://github.com/kubernetes/kubernetes
 Main PID: 11872 (kube-controller)
   CGroup: /system.slice/kube-controller-manager.service
           └─11872 /opt/kubernetes/bin/kube-controller-manager --logtostderr=false --v=2 --log-dir=/opt/kubernetes/logs --leader-elect=true --kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubec...

Apr 03 14:33:09 vm01 systemd[1]: Started Kubernetes Controller Manager.

12.4 Deploy the Scheduler

12.4.1 Generate the config file

cd /opt/TLS/k8s/cfg/
cat > kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect \\
--kubeconfig=/opt/kubernetes/cfg/kube-scheduler.kubeconfig \\
--bind-address=127.0.0.1"
EOF

12.4.2 Generate the certificate signing request file

cd /opt/TLS/k8s/ssl

cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

12.4.3 Generate the certificate files

[root@vm01 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2022/04/03 14:37:29 [INFO] generate received request

2022/04/03 14:37:29 [INFO] received CSR

2022/04/03 14:37:29 [INFO] generating key: rsa-2048

2022/04/03 14:37:29 [INFO] encoded CSR

2022/04/03 14:37:29 [INFO] signed certificate with serial number 270861181620040490080757616258059917703352589307

2022/04/03 14:37:29 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

# List the generated certificate files

[root@vm01 ssl]# ll kube-scheduler*

-rw-r--r-- 1 root root 1029 Apr 3 14:37 kube-scheduler.csr

-rw-r--r-- 1 root root 245 Apr 3 14:37 kube-scheduler-csr.json

-rw------- 1 root root 1675 Apr 3 14:37 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1424 Apr 3 14:37 kube-scheduler.pem

# This step produces kube-scheduler.pem and kube-scheduler-key.pem

12.4.4 Generate the kubeconfig file

# Set the cluster parameters
kubectl config set-cluster kubernetes \
--certificate-authority=/opt/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=https://192.168.10.11:6443 \
--kubeconfig=/opt/TLS/k8s/cfg/kube-scheduler.kubeconfig

# Set the client credentials
kubectl config set-credentials kube-scheduler \
--client-certificate=./kube-scheduler.pem \
--client-key=./kube-scheduler-key.pem \
--embed-certs=true \
--kubeconfig=/opt/TLS/k8s/cfg/kube-scheduler.kubeconfig

# Set the context parameters
kubectl config set-context default \
--cluster=kubernetes \
--user=kube-scheduler \
--kubeconfig=/opt/TLS/k8s/cfg/kube-scheduler.kubeconfig

# Select the default context
kubectl config use-context default --kubeconfig=/opt/TLS/k8s/cfg/kube-scheduler.kubeconfig
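The same four kubectl config calls are used for both kube-controller-manager and kube-scheduler, differing only in the component name. As a sketch, they could be wrapped in one function. The names make_kubeconfig and KUBECTL are assumptions introduced here for illustration; the paths and server address are the ones used in this guide.

```shell
#!/usr/bin/env bash
# Sketch: make_kubeconfig and the KUBECTL override are illustrative, not part of the original guide.
# Run it from the directory that holds <name>.pem and <name>-key.pem (/opt/TLS/k8s/ssl here).
make_kubeconfig() {
  local name=$1
  local kubectl=${KUBECTL:-kubectl}
  local out=/opt/TLS/k8s/cfg/$name.kubeconfig

  # Each step runs only if the previous one succeeded.
  $kubectl config set-cluster kubernetes \
    --certificate-authority=/opt/kubernetes/ssl/ca.pem \
    --embed-certs=true \
    --server=https://192.168.10.11:6443 \
    --kubeconfig="$out" &&
  $kubectl config set-credentials "$name" \
    --client-certificate="./$name.pem" \
    --client-key="./$name-key.pem" \
    --embed-certs=true \
    --kubeconfig="$out" &&
  $kubectl config set-context default \
    --cluster=kubernetes \
    --user="$name" \
    --kubeconfig="$out" &&
  $kubectl config use-context default --kubeconfig="$out"
}
```

With this in place, "make_kubeconfig kube-scheduler" would stand in for the four commands above, and "KUBECTL=echo make_kubeconfig kube-scheduler" prints the commands without touching any file, which is handy for a quick review.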

12.4.5 Generate the service file

cd /opt/TLS/k8s/cfg
cat > kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

12.4.6 Distribute the files

# Distribute the config file
scp /opt/TLS/k8s/cfg/kube-scheduler.conf /opt/kubernetes/cfg/kube-scheduler.conf

# Distribute the certificate files
scp /opt/TLS/k8s/ssl/kube-scheduler*.pem /opt/kubernetes/ssl/

# Distribute the kubeconfig file
scp /opt/TLS/k8s/cfg/kube-scheduler.kubeconfig /opt/kubernetes/cfg/kube-scheduler.kubeconfig

# Distribute the service file
scp /opt/TLS/k8s/cfg/kube-scheduler.service /usr/lib/systemd/system/kube-scheduler.service

12.4.7 Verify the files

# Verify the config file
[root@vm01 cfg]# ll /opt/kubernetes/cfg/kube-scheduler.conf
-rw-r--r-- 1 root root 188 Apr 3 14:44 /opt/kubernetes/cfg/kube-scheduler.conf

# Verify the certificate files
[root@vm01 cfg]# ll /opt/kubernetes/ssl/kube-scheduler*.pem
-rw------- 1 root root 1675 Apr 3 14:45 /opt/kubernetes/ssl/kube-scheduler-key.pem
-rw-r--r-- 1 root root 1424 Apr 3 14:45 /opt/kubernetes/ssl/kube-scheduler.pem

# Verify the kubeconfig file
[root@vm01 cfg]# ll /opt/kubernetes/cfg/kube-scheduler.kubeconfig
-rw------- 1 root root 6241 Apr 3 14:45 /opt/kubernetes/cfg/kube-scheduler.kubeconfig

# Verify the service file
[root@vm01 cfg]# ll /usr/lib/systemd/system/kube-scheduler.service
-rw-r--r-- 1 root root 285 Apr 3 14:45 /usr/lib/systemd/system/kube-scheduler.service

12.4.8 Start the scheduler

[root@vm01 cfg]# systemctl daemon-reload && systemctl start kube-scheduler && systemctl enable kube-scheduler && systemctl status kube-scheduler
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.
● kube-scheduler.service - Kubernetes Scheduler
   Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2022-04-03 14:48:19 CST; 113ms ago
     Docs: https://github.com/kubernetes/kubernetes
 Main PID: 11972 (kube-scheduler)
   CGroup: /system.slice/kube-scheduler.service
           └─11972 /opt/kubernetes/bin/kube-scheduler --logtostderr=false --v=2 --log-dir=/opt/kubernetes/logs --leader-elect --kubeconfig=/opt/kubernetes/cfg/kube-scheduler.kubeconfig --bind-address=12...
Apr 03 14:48:19 vm01 systemd[1]: Started Kubernetes Scheduler.
Apr 03 14:48:19 vm01 kube-scheduler[11972]: Flag --logtostderr has been deprecated, will be removed in a future release, see https://github.com/kubernetes/enhancements/tree/master/keps/sig...k8s-components
Apr 03 14:48:19 vm01 kube-scheduler[11972]: Flag --log-dir has been deprecated, will be removed in a future release, see https://github.com/kubernetes/enhancements/tree/master/keps/sig-ins...k8s-components
Hint: Some lines were ellipsized, use -l to show in full.
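The status above was captured about 113 ms after the start, so it does not show whether the process stays up. One way to confirm this is to poll `systemctl is-active` for a few seconds. The sketch below assumes a hypothetical helper name, `wait_active`, and an arbitrary default of 10 attempts.

```shell
# Sketch: poll a systemd unit until it reports "active" or the attempts run out.
# Arguments: <unit> [attempts, default 10]; one attempt per second.
wait_active() (
  unit="$1"
  tries="${2:-10}"
  while [ "$tries" -gt 0 ]; do
    if systemctl is-active --quiet "$unit"; then
      echo "$unit is active"
      exit 0
    fi
    tries=$((tries - 1))
    sleep 1
  done
  echo "$unit did not become active" >&2
  exit 1
)

# Example call (not executed here):
# wait_active kube-scheduler 15
```

If the unit fails, `journalctl -u kube-scheduler` shows the reason.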

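At this point, the three Master components (Apiserver, ControllerManager, and Scheduler) are deployed and running. Next, check the status of all cluster components.

13. Check cluster component status

13.1 Generate the certificate request for cluster access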

cd /opt/TLS/k8s/ssl
cat > admin-csr.json <

13.2 Generate the access certificate

[root@vm01 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2022/04/03 14:52:53 [INFO] generate received request

2022/04/03 14:52:53 [INFO] received CSR

2022/04/03 14:52:53 [INFO] generating key: rsa-2048

2022/04/03 14:52:53 [INFO] encoded CSR

2022/04/03 14:52:53 [INFO] signed certificate with serial number 544157816284296715610790502652620056833806648888

2022/04/03 14:52:53 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

# List the generated certificate files

[root@vm01 ssl]# ll admin*

-rw-r--r-- 1 root root 1009 Apr 3 14:52 admin.csr

-rw-r--r-- 1 root root 229 Apr 3 14:52 admin-csr.json

-rw------- 1 root root 1679 Apr 3 14:52 admin-key.pem

-rw-r--r-- 1 root root 1399 Apr 3 14:52 admin.pem
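The cfssl warning about the missing "hosts" field is harmless here, because a client certificate does not need host names. What matters for a client certificate is its subject: Kubernetes reads the user name from the CN and the groups from the O fields. A small sketch for inspecting a certificate with `openssl` follows; `cert_summary` is a hypothetical wrapper name.

```shell
# Sketch: print subject, issuer, and expiry date of a PEM certificate.
# Argument: <certificate file>
cert_summary() {
  openssl x509 -in "$1" -noout -subject -issuer -enddate
}

# Example call (not executed here):
# cert_summary /opt/TLS/k8s/ssl/admin.pem
```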

13.3 Generate the kubeconfig file

cd /opt/TLS/k8s/cfg
# Set cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.10.11:6443 \
  --kubeconfig=/opt/TLS/k8s/cfg/config
# Set client authentication parameters
kubectl config set-credentials cluster-admin \
  --client-certificate=/opt/TLS/k8s/ssl/admin.pem \
  --client-key=/opt/TLS/k8s/ssl/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/TLS/k8s/cfg/config
# Set context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=cluster-admin \
  --kubeconfig=/opt/TLS/k8s/cfg/config
# Set the default context
kubectl config use-context default --kubeconfig=/opt/TLS/k8s/cfg/config

13.4 Distribute files

mkdir -p /root/.kube
scp /opt/TLS/k8s/cfg/config /root/.kube/config

13.5 View cluster component status

# Use kubectl to view the current status of the cluster components

[root@vm01 cfg]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

# The output above indicates that the Master components are running normally
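As the warning says, ComponentStatus is deprecated. The apiserver also exposes a `/readyz` endpoint, which can be queried with `kubectl get --raw='/readyz?verbose'` and lists each check with a `[+]` (ok) or `[-]` (failed) prefix. The sketch below filters that output; `report_health` is a hypothetical helper name.

```shell
# Sketch: read "/readyz?verbose" style output on stdin and report failures.
# Prints the failing checks and exits 1, or prints a success message.
report_health() (
  # Failing checks are prefixed with "[-]"; "|| true" keeps grep's
  # "no match" status from being treated as an error.
  bad=$(grep '^\[-\]' || true)
  if [ -n "$bad" ]; then
    printf '%s\n' "$bad"
    exit 1
  fi
  echo "all checks passed"
)

# Example call (not executed here):
# kubectl get --raw='/readyz?verbose' | report_health
```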

14. Authorize the bootstrap user to request certificates

kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

15. Deploy Worker Nodes

Because local resources are limited, the Master node also serves as a Worker Node.

15.1 Create working directories

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

ssh vm02 "mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}"

ssh vm03 "mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}"
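The two `ssh` commands rely on the remote login shell performing brace expansion, which holds for bash. A loop with the paths spelled out avoids that dependency and scales to more nodes. This is a sketch only; `remote_mkdirs` is a hypothetical helper.

```shell
# Sketch: create the Kubernetes working directories on each listed host.
# Arguments: one or more host names reachable over passwordless SSH.
remote_mkdirs() (
  for host in "$@"; do
    # Paths are written out in full, so no brace expansion is needed remotely.
    ssh "$host" "mkdir -p /opt/kubernetes/bin /opt/kubernetes/cfg /opt/kubernetes/ssl /opt/kubernetes/logs" || exit 1
  done
)

# Example call (not executed here):
# remote_mkdirs vm02 vm03
```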

15.2 Distribute files

scp -r /opt/TLS/download/kubernetes/server/bin/{kubelet,kube-proxy} /opt/kubernetes/bin

scp /opt/TLS/download/kubernetes/server/bin/kubelet /usr/local/bin

15.3 Verify files

[root@vm01 cfg]# ll /opt/kubernetes/bin/{kubelet,kube-proxy}

-rwxr-xr-x 1 root root 124521440 Apr 3 15:09 /opt/kubernetes/bin/kubelet

-rwxr-xr-x 1 root root 44163072 Apr 3 15:09 /opt/kubernetes/bin/kube-proxy

[root@vm01 cfg]# ll /usr/local/bin/kubelet

-rwxr-xr-x 1 root root 124521440 Apr 3 15:10 /usr/local/bin/kubelet

15.4 Deploy kubelet

15.4.1 Create the configuration files

For convenience, the kubelet configuration files for all three virtual machines are created in one go; each file is then distributed to its corresponding machine.

cd /opt/TLS/k8s/cfg/
cat > kubelet01.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--hostname-override=vm01 \\
--network-plugin=cni \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=ibmcom/pause-amd64:3.1"
EOF
cat > kubelet02.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--hostname-override=vm02 \\
--network-plugin=cni \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=ibmcom/pause-amd64:3.1"
EOF
cat > kubelet03.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--hostname-override=vm03 \\
--network-plugin=cni \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=ibmcom/pause-amd64:3.1"
EOF
# • --hostname-override: node display name; must be unique within the cluster
# • --network-plugin: enables CNI
# • --kubeconfig: a path that does not exist yet; the file is generated automatically and later used to connect to the apiserver
# • --bootstrap-kubeconfig: used on first start to request a certificate from the apiserver
# • --config: the configuration parameter file
# • --cert-dir: directory where the kubelet certificates are generated
# • --pod-infra-container-image: image of the container that manages the Pod network
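The three files differ only in the value of `--hostname-override`, so they can also be generated from one template. The sketch below assumes host names of the form `vmNN` and derives the file name from the numeric suffix; `write_kubelet_conf` is a hypothetical helper.

```shell
# Sketch: write kubeletNN.conf for one host from a single template.
# Arguments: <host, e.g. vm02> [output directory, default /opt/TLS/k8s/cfg]
write_kubelet_conf() (
  host="$1"
  out_dir="${2:-/opt/TLS/k8s/cfg}"
  # ${host#vm} strips the leading "vm", so vm02 yields kubelet02.conf.
  cat > "$out_dir/kubelet${host#vm}.conf" << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--hostname-override=${host} \\
--network-plugin=cni \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=ibmcom/pause-amd64:3.1"
EOF
)

# Example call (not executed here):
# for h in vm01 vm02 vm03; do write_kubelet_conf "$h"; done
```

Keeping a single template means a flag change has to be made in one place only.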

15.4.2 Create the parameter file

cat > kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: systemd
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF
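The `cgroupDriver` value must match the cgroup driver used by the container runtime, otherwise kubelet fails to start. A sketch for comparing the two before starting kubelet follows; it assumes Docker is the runtime, as in this guide, and the helper names `kubelet_cgroup_driver` and `check_cgroup_driver` are hypothetical.

```shell
# Sketch: print the cgroupDriver value from a KubeletConfiguration file.
# Argument: <path to kubelet-config.yml>
kubelet_cgroup_driver() {
  awk '$1 == "cgroupDriver:" { print $2 }' "$1"
}

# Sketch: compare kubelet's configured driver with the one Docker reports.
# Exits 1 with a message on stderr when they differ.
check_cgroup_driver() (
  want=$(kubelet_cgroup_driver "$1")
  have=$(docker info --format '{{.CgroupDriver}}')
  if [ "$want" = "$have" ]; then
    echo "cgroup driver matches: $want"
  else
    echo "cgroup driver mismatch: kubelet=$want docker=$have" >&2
    exit 1
  fi
)

# Example call (not executed here):
# check_cgroup_driver /opt/kubernetes/cfg/kubelet-config.yml
```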

15.4.3 Create the service unit file

cat > kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

15.4.4 Create the kubeconfig file

# Set cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.10.11:6443 \
  --kubeconfig=/opt/TLS/k8s/cfg/bootstrap.kubeconfig
# Set client authentication parameters
kubectl config set-credentials "kubelet-bootstrap" \
  --token=c47ffb939f5ca36231d9e3121a252940 \
  --kubeconfig=/opt/TLS/k8s/cfg/bootstrap.kubeconfig
# Set context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user="kubelet-bootstrap" \
  --kubeconfig=/opt/TLS/k8s/cfg/bootstrap.kubeconfig
# Set the default context
kubectl config use-context default --kubeconfig=/opt/TLS/k8s/cfg/bootstrap.kubeconfig
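The token passed to `--token` must be identical to the one in the apiserver's static token file created when TLS bootstrapping was enabled; a mismatch makes the kubelet's first request fail with an authorization error. The sketch below compares the two. It assumes the token file uses the standard `token,user,uid,"group"` CSV layout; the path in the example call and the helper names are assumptions.

```shell
# Sketch: print the token (first CSV field) from a static token file.
token_from_csv() {
  cut -d, -f1 "$1" | head -n 1
}

# Sketch: print the first "token:" value found in a kubeconfig file.
token_from_kubeconfig() {
  awk '$1 == "token:" { print $2; exit }' "$1"
}

# Succeeds only when both files carry the same token.
# Arguments: <token.csv> <bootstrap.kubeconfig>
tokens_match() (
  [ "$(token_from_csv "$1")" = "$(token_from_kubeconfig "$2")" ]
)

# Example call (not executed here; the token.csv path is an assumption):
# tokens_match /opt/kubernetes/cfg/token.csv /opt/TLS/k8s/cfg/bootstrap.kubeconfig \
#   && echo "bootstrap token matches"
```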

15.4.5 Distribute files

# Distribute the configuration file
scp /opt/TLS/k8s/cfg/kubelet01.conf /opt/kubernetes/cfg/kubelet.conf
# Distribute the parameter file
scp /opt/TLS/k8s/cfg/kubelet-config.yml /opt/kubernetes/cfg/kubelet-config.yml
# Distribute the kubeconfig file
scp /opt/TLS/k8s/cfg/bootstrap.kubeconfig /opt/kubernetes/cfg/bootstrap.kubeconfig
# Distribute the service unit file
scp /opt/TLS/k8s/cfg/kubelet.service /usr/lib/systemd/system/kubelet.service

15.4.6 Verify files

# Verify the configuration file
[root@vm01 cfg]# ll /opt/kubernetes/cfg/kubelet.conf
-rw-r--r-- 1 root root 382 Apr 3 15:19 /opt/kubernetes/cfg/kubelet.conf
# Verify the parameter file
[root@vm01 cfg]# ll /opt/kubernetes/cfg/kubelet-config.yml
-rw-r--r-- 1 root root 610 Apr 3 15:19 /opt/kubernetes/cfg/kubelet-config.yml
# Verify the kubeconfig file
[root@vm01 cfg]# ll /opt/kubernetes/cfg/bootstrap.kubeconfig
-rw------- 1 root root 2103 Apr 3 15:19 /opt/kubernetes/cfg/bootstrap.kubeconfig
# Verify the service unit file
[root@vm01 cfg]# ll /usr/lib/systemd/system/kubelet.service
-rw-r--r-- 1 root root 246 Apr 3 15:19 /usr/lib/systemd/system/kubelet.service

15.4.7 Start kubelet

[root@vm01 cfg]# systemctl daemon-reload && systemctl start kubelet && systemctl enable kubelet && systemctl status kubelet
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
● kubelet.service - Kubernetes Kubelet
   Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2022-04-03 15:22:33 CST; 113ms ago
 Main PID: 12121 (kubelet)
   CGroup: /system.slice/kubelet.service
           └─12121 /opt/kubernetes/bin/kubelet --logtostderr=false --v=2 --log-dir=/opt/kubernetes/logs --hostname-override=vm01 --network-plugin=cni --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig ...
Apr 03 15:22:33 vm01 systemd[1]: Started Kubernetes Kubelet.

15.4.8 Approve the kubelet certificate request

# View the kubelet certificate request
[root@vm01 cfg]# kubectl get csr
NAME                                                   AGE   SIGNERNAME                                    REQUESTOR           REQUESTEDDURATION   CONDITION
node-csr-6mDDHTg4HuOsVY_7oJRUqtS-6YQFe7JytpYdbRs9kek   57s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   <none>              Pending
# Approve the request
[root@vm01 cfg]# kubectl certificate approve node-csr-6mDDHTg4HuOsVY_7oJRUqtS-6YQFe7JytpYdbRs9kek
certificatesigningrequest.certificates.k8s.io/node-csr-6mDDHTg4HuOsVY_7oJRUqtS-6YQFe7JytpYdbRs9kek approved
# View the status of the certificate request
[root@vm01 cfg]# kubectl get csr
NAME                                                   AGE    SIGNERNAME                                    REQUESTOR           REQUESTEDDURATION   CONDITION
node-csr-6mDDHTg4HuOsVY_7oJRUqtS-6YQFe7JytpYdbRs9kek   111s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   <none>              Approved,Issued
# View the cluster nodes
[root@vm01 cfg]# kubectl get nodes
NAME   STATUS     ROLES    AGE   VERSION
vm01   NotReady   <none>   32s   v1.23.4
# The node stays NotReady because the network plugin has not been deployed yet
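Copying the long request name by hand is error-prone, and each additional node produces another pending request. The sketch below extracts the names of pending requests from `kubectl get csr --no-headers` output so they can be passed to `kubectl certificate approve`; `pending_csrs` is a hypothetical helper, and approving everything that is pending is only appropriate in a lab cluster like this one.

```shell
# Sketch: read "kubectl get csr --no-headers" output on stdin and print
# the names of requests whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
  awk '$NF == "Pending" { print $1 }'
}

# Example call (not executed here; -r makes GNU xargs skip empty input):
# kubectl get csr --no-headers | pending_csrs | xargs -r kubectl certificate approve
```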

15.5 Deploy kube-proxy

15.5.1 Create the configuration file

cd /opt/TLS/k8s/cfg/
cat > kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"
EOF

15.5.2 Create the parameter file