Building a Kubernetes 1.20.5 Cluster (Binary Installation)

This guide uses Kubernetes 1.20.5 as the example.

Unless stated otherwise, run the commands below on all nodes.

I. System Resources and Component Planning

| Node | Hostname | CPU/Memory | NIC | Disk | IP Address | OS |
|---|---|---|---|---|---|---|
| Master | master | 2C/4G | ens33 | 128G | 192.168.0.10 | CentOS7 |
| Worker1 | worker1 | 2C/4G | ens33 | 128G | 192.168.0.11 | CentOS7 |
| Worker2 | worker2 | 2C/4G | ens33 | 128G | 192.168.0.12 | CentOS7 |

II. System Software Installation and Configuration

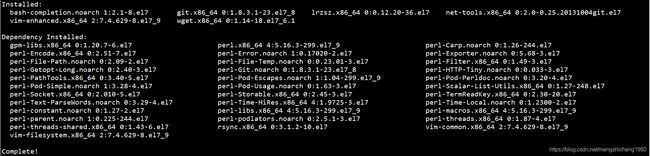

1. Install basic packages

yum -y install vim git lrzsz wget net-tools bash-completion

2. Configure name resolution

echo 192.168.0.10 master >> /etc/hosts

echo 192.168.0.11 worker1 >> /etc/hosts

echo 192.168.0.12 worker2 >> /etc/hosts
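Running the `echo ... >> /etc/hosts` commands twice leaves duplicate entries. The sketch below is one possible way to make the step repeatable; `add_host_entry` is a hypothetical helper introduced here, and the demonstration writes to a scratch file rather than the real /etc/hosts.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME (hypothetical helper, not part of any tool)
# Appends "IP NAME" to FILE unless some line already maps NAME.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # Look for NAME as a whole field after the address column.
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
        echo "$ip $name" >> "$file"
    fi
}

# Demonstration against a scratch file instead of /etc/hosts.
demo_hosts=$(mktemp)
add_host_entry "$demo_hosts" 192.168.0.10 master
add_host_entry "$demo_hosts" 192.168.0.11 worker1
add_host_entry "$demo_hosts" 192.168.0.12 worker2
add_host_entry "$demo_hosts" 192.168.0.10 master   # repeated call adds nothing
cat "$demo_hosts"
```

On a real node the first argument would be /etc/hosts.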


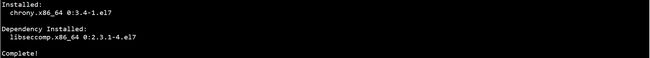

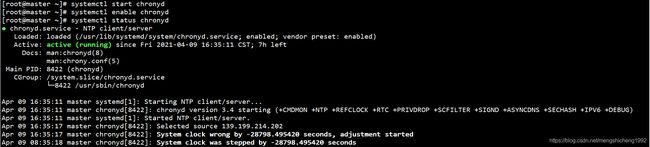

3. Configure NTP

yum -y install chrony

systemctl start chronyd

systemctl enable chronyd

systemctl status chronyd

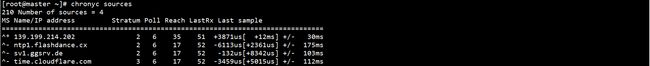

chronyc sources

4. Configure SELinux and the firewall

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

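5. Configure bridge networking

Configure the kernel so that packets forwarded by an L2 bridge are filtered by the iptables FORWARD rules. CNI plugins require this setting.

Create /etc/sysctl.d/k8s.conf with the following content: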

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

Run the following commands to apply the changes:

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

6. Configure swap

Disable the system swap partition:

swapoff -a

yes | cp /etc/fstab /etc/fstab_bak

cat /etc/fstab_bak | grep -v swap > /etc/fstab

rm -rf /etc/fstab_bak

echo vm.swappiness = 0 >> /etc/sysctl.d/k8s.conf

sysctl -p /etc/sysctl.d/k8s.conf
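The `grep -v swap` step above deletes the swap entry from /etc/fstab outright. As an alternative sketch, the entry can be commented out so that it is easy to restore later; `comment_out_swap` is a hypothetical helper, and the demonstration edits a scratch file with made-up device names rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE (hypothetical helper)
# Prefixes every uncommented line that has a whitespace-delimited "swap" field with '#'.
# sed keeps the previous content in FILE.bak.
comment_out_swap() {
    local file=$1
    sed -i.bak -E '/^[[:space:]]*#/! s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}

# Demonstration on a scratch file; the device names are examples only.
demo_fstab=$(mktemp)
cat > "$demo_fstab" << 'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF
comment_out_swap "$demo_fstab"
cat "$demo_fstab"
```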

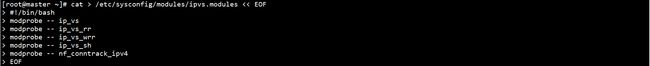

7. Configure IPVS

Install ipvsadm and ipset:

yum -y install ipvsadm ipset

Create the IPVS module-loading script:

cat > /etc/sysconfig/modules/ipvs.modules << EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

Run the script and verify the result:

chmod 755 /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules

lsmod | grep -e ip_vs -e nf_conntrack_ipv4
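The `lsmod | grep` check prints whatever is loaded, but a missing module is easy to overlook. The sketch below reports only the modules that are absent; `missing_modules` is a hypothetical helper, and the sample input is a made-up lsmod excerpt used purely for demonstration.

```shell
#!/usr/bin/env bash
# missing_modules MODULE... (hypothetical helper)
# Reads lsmod-style output on stdin and prints each requested module that is not listed.
missing_modules() {
    local loaded m
    loaded=$(awk 'NR > 1 { print $1 }')   # first column, header skipped
    for m in "$@"; do
        printf '%s\n' "$loaded" | grep -qx -- "$m" || echo "$m"
    done
}

# On a real node:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4
# Demonstration with canned input:
sample_lsmod='Module                  Size  Used by
ip_vs_sh               12688  0
ip_vs_rr               12600  0
ip_vs                 145458  4 ip_vs_rr,ip_vs_sh
nf_conntrack_ipv4      15053  0'
missing=$(printf '%s\n' "$sample_lsmod" | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4)
echo "missing: ${missing:-none}"
```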

III. Kubernetes Cluster Configuration

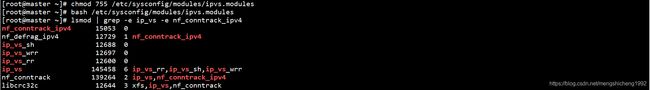

1. Deploy the certificate generation tools

On the Master node, download the certificate generation tools:

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

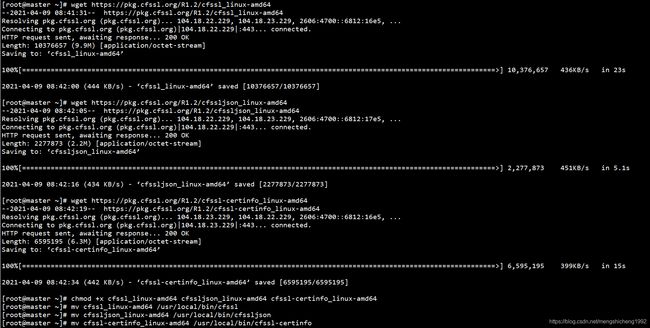

2. Deploy the etcd cluster

On the Master node, create the configuration and certificate directories:

mkdir -p /etc/etcd/ssl/

On the Master node, create the CA CSR file:

cat > /etc/etcd/ssl/ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "system"

}

],

"ca": {

"expiry": "87600h"

}

}

EOF

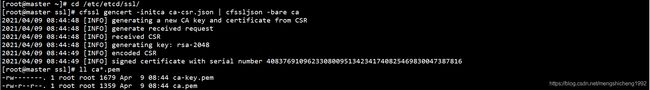

On the Master node, generate the CA certificate:

cd /etc/etcd/ssl/

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

ll ca*.pem

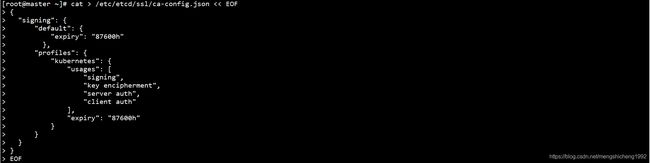

On the Master node, create the certificate signing policy:

cat > /etc/etcd/ssl/ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

On the Master node, create the etcd CSR file:

cat > /etc/etcd/ssl/etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.0.10"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "system"

}]

}

EOF

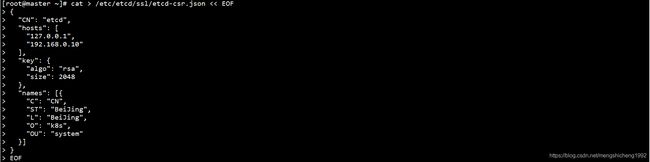

On the Master node, generate the etcd certificate:

cd /etc/etcd/ssl/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

ll etcd*.pem

Download the etcd binaries:

Reference: https://github.com/etcd-io/etcd/releases

Download: https://github.com/etcd-io/etcd/releases/download/v3.4.15/etcd-v3.4.15-linux-amd64.tar.gz

On the Master node, extract the etcd binaries into the system directory:

tar -xf /root/etcd-v3.4.15-linux-amd64.tar.gz -C /root/

mv /root/etcd-v3.4.15-linux-amd64/{etcd,etcdctl} /usr/local/bin/

![]()

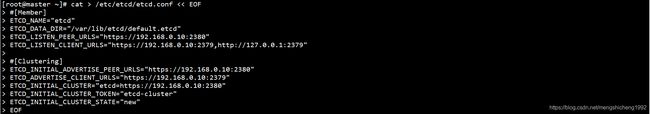

在Master节点上创建ETCD配置文件:

cat > /etc/etcd/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.0.10:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.0.10:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.0.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.0.10:2379"

ETCD_INITIAL_CLUSTER="etcd=https://192.168.0.10:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

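etcd configuration parameters:

ETCD_NAME: member name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS: listen address for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the other members

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of the cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining the cluster; new for a new cluster, existing to join an existing cluster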
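This guide runs a single etcd member, so ETCD_INITIAL_CLUSTER holds one `name=peer-url` pair. With several members the value becomes a comma-separated list of such pairs. The sketch below builds that string; `build_initial_cluster` and the member names etcd1 to etcd3 are hypothetical, and placing members on the worker IPs is only an illustration.

```shell
#!/usr/bin/env bash
# build_initial_cluster NAME=IP... (hypothetical helper)
# Prints the ETCD_INITIAL_CLUSTER value for the given members, using peer port 2380.
build_initial_cluster() {
    local out="" pair name ip
    for pair in "$@"; do
        name=${pair%%=*}
        ip=${pair#*=}
        out="${out:+$out,}${name}=https://${ip}:2380"
    done
    printf '%s\n' "$out"
}

initial_cluster=$(build_initial_cluster etcd1=192.168.0.10 etcd2=192.168.0.11 etcd3=192.168.0.12)
echo "ETCD_INITIAL_CLUSTER=\"${initial_cluster}\""
```

In a multi-member setup each member's IP would also need to appear in the `hosts` list of etcd-csr.json, since the same certificate is used for peer traffic.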

On the Master node, configure systemd to manage etcd:

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/etc/etcd/etcd.conf
ExecStart=/usr/local/bin/etcd \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

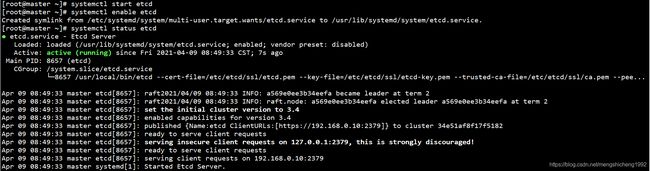

On the Master node, start etcd and enable it at boot:

systemctl start etcd

systemctl enable etcd

systemctl status etcd

On the Master node, check the health of the etcd cluster:

ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem --endpoints=https://192.168.0.10:2379 endpoint health

3. Deploy Docker

Download the Docker binaries:

Reference: https://download.docker.com/linux/static/stable/x86_64/

Download: https://download.docker.com/linux/static/stable/x86_64/docker-20.10.5.tgz

Extract the Docker binaries into the system directory:

tar -xf /root/docker-20.10.5.tgz -C /root/

mv /root/docker/* /usr/local/bin

Configure systemd to manage Docker:

cat > /usr/lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/local/bin/dockerd
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF

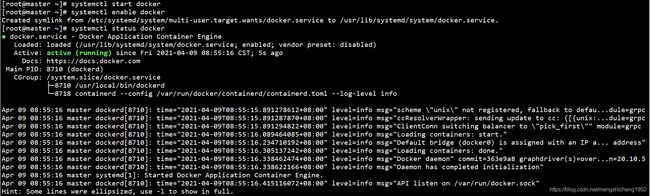

Start Docker and enable it at boot:

systemctl start docker

systemctl enable docker

systemctl status docker

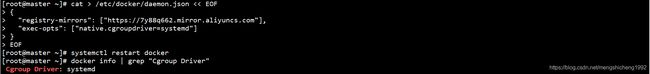

Configure the Docker registry mirror and cgroup driver:

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://7y88q662.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl restart docker

docker info | grep "Cgroup Driver"

4. Deploy the Master node

On the Master node, download the Kubernetes binaries:

Reference: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.20.md

Download: https://dl.k8s.io/v1.20.5/kubernetes-server-linux-amd64.tar.gz

Extract the Kubernetes binaries and copy them into the system directory:

tar -xf /root/kubernetes-server-linux-amd64.tar.gz -C /root/

cp /root/kubernetes/server/bin/{kubectl,kube-apiserver,kube-scheduler,kube-controller-manager} /usr/local/bin/

On all nodes, create the configuration and certificate directories:

mkdir -p /etc/kubernetes/ssl/

On the Master node, create the log directory:

mkdir /var/log/kubernetes/

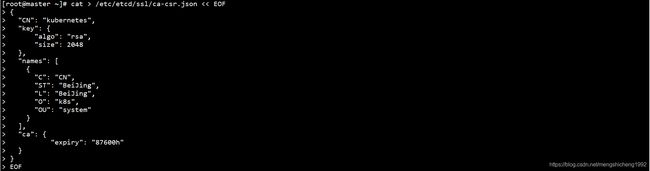

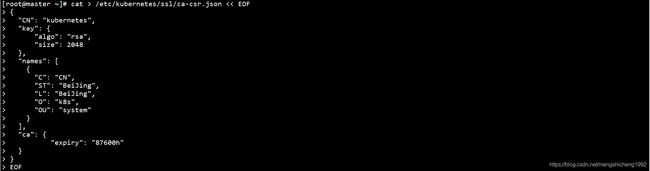

On the Master node, create the CA CSR file:

cat > /etc/kubernetes/ssl/ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "system"

}

],

"ca": {

"expiry": "87600h"

}

}

EOF

On the Master node, generate the CA certificate:

cd /etc/kubernetes/ssl/

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

ll ca*.pem

Copy the CA certificate from the Master node to the Worker nodes:

scp /etc/kubernetes/ssl/ca.pem root@worker1:/etc/kubernetes/ssl/

scp /etc/kubernetes/ssl/ca.pem root@worker2:/etc/kubernetes/ssl/

Deploy Kubernetes on the Master node:

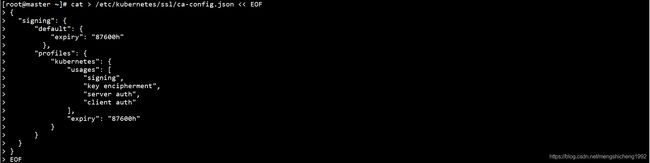

On the Master node, create the certificate signing policy:

cat > /etc/kubernetes/ssl/ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

On the Master node, create the kube-apiserver CSR file:

cat > /etc/kubernetes/ssl/kube-apiserver-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.0.10",

"192.168.0.11",

"192.168.0.12",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "system"

}

]

}

EOF

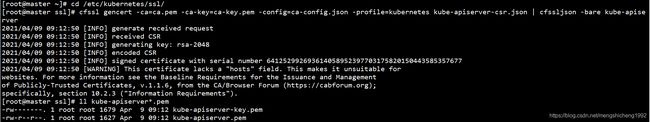

On the Master node, generate the kube-apiserver certificate:

cd /etc/kubernetes/ssl/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

ll kube-apiserver*.pem
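The 10.96.0.1 entry in the certificate's `hosts` list is the first address of the service range 10.96.0.0/24, which is the cluster IP assigned to the built-in `kubernetes` Service. The sketch below shows how that address follows from the CIDR; `first_ip_of_cidr` is a hypothetical helper written for illustration, and it handles IPv4 only.

```shell
#!/usr/bin/env bash
# first_ip_of_cidr CIDR (hypothetical helper, IPv4 only)
# Prints the network address of CIDR plus one.
first_ip_of_cidr() {
    local cidr=$1 addr prefix rest a b c d ip mask first
    addr=${cidr%/*}
    prefix=${cidr#*/}
    # Split the dotted quad into its four octets.
    a=${addr%%.*}; rest=${addr#*.}
    b=${rest%%.*}; rest=${rest#*.}
    c=${rest%%.*}; d=${rest#*.}
    ip=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    first=$(( (ip & mask) + 1 ))
    printf '%d.%d.%d.%d\n' \
        $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
        $(( (first >> 8) & 255 )) $(( first & 255 ))
}

first_ip_of_cidr 10.96.0.0/24
```

If a different service range is chosen, the `hosts` list needs the corresponding first address instead.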

On the Master node, generate the bootstrap token file:

cat > /etc/kubernetes/token.csv << EOF

$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
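Each line of the static token file has the form `token,user,uid,"group"`. The sketch below builds such a line in a variable so the token can be inspected before it is written; `new_bootstrap_token` is a hypothetical helper, and it uses `od -tx1` (one byte per group) instead of the `od -t x` form above, which should yield the same 32 hex characters.

```shell
#!/usr/bin/env bash
# new_bootstrap_token (hypothetical helper)
# Prints 16 random bytes as 32 lowercase hex characters.
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

token=$(new_bootstrap_token)
token_line="${token},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\""
echo "$token_line"
```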

![]()

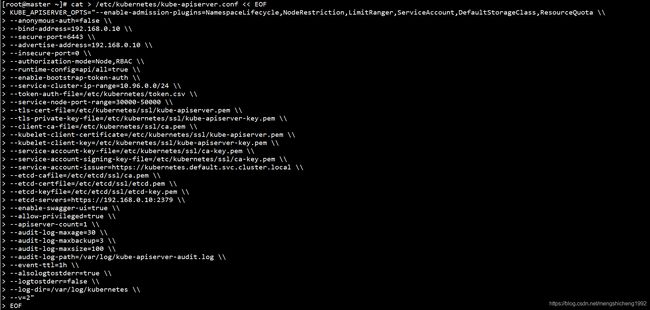

在Master节点上创建kube-apiserver配置文件:

cat > /etc/kubernetes/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\
  --anonymous-auth=false \\
  --bind-address=192.168.0.10 \\
  --secure-port=6443 \\
  --advertise-address=192.168.0.10 \\
  --insecure-port=0 \\
  --authorization-mode=Node,RBAC \\
  --runtime-config=api/all=true \\
  --enable-bootstrap-token-auth \\
  --service-cluster-ip-range=10.96.0.0/24 \\
  --token-auth-file=/etc/kubernetes/token.csv \\
  --service-node-port-range=30000-50000 \\
  --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \\
  --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \\
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \\
  --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \\
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \\
  --etcd-cafile=/etc/etcd/ssl/ca.pem \\
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \\
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \\
  --etcd-servers=https://192.168.0.10:2379 \\
  --enable-swagger-ui=true \\
  --allow-privileged=true \\
  --apiserver-count=1 \\
  --audit-log-maxage=30 \\
  --audit-log-maxbackup=3 \\
  --audit-log-maxsize=100 \\
  --audit-log-path=/var/log/kube-apiserver-audit.log \\
  --event-ttl=1h \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2"
EOF

On the Master node, configure systemd to manage kube-apiserver:

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

EnvironmentFile=/etc/kubernetes/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

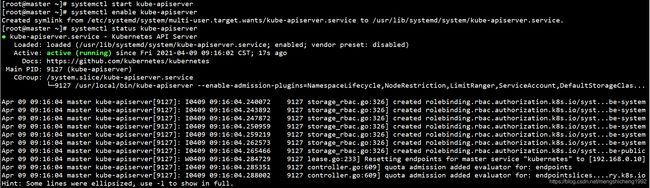

On the Master node, start kube-apiserver and enable it at boot:

systemctl start kube-apiserver

systemctl enable kube-apiserver

systemctl status kube-apiserver

Deploy kubectl on the Master node:

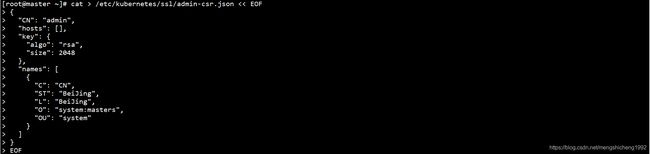

On the Master node, create the kubectl (admin) CSR file:

cat > /etc/kubernetes/ssl/admin-csr.json << EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "system"

}

]

}

EOF

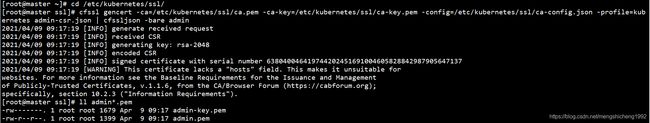

On the Master node, generate the kubectl (admin) certificate:

cd /etc/kubernetes/ssl/

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

ll admin*.pem

On the Master node, generate kube.config:

Set the cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:6443 --kubeconfig=/etc/kubernetes/kube.config

Set the client credentials:

kubectl config set-credentials admin --client-certificate=/etc/kubernetes/ssl/admin.pem --client-key=/etc/kubernetes/ssl/admin-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/kube.config

Set the context:

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=/etc/kubernetes/kube.config

Set the default context:

kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube.config
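The same four `kubectl config` steps are repeated later for kube-controller-manager, kube-scheduler, and kube-proxy. A minimal sketch of a wrapper follows; `make_kubeconfig` and the `KUBECTL` override are hypothetical names introduced here, while the CA path and server URL are the ones used in this guide. Setting `KUBECTL=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# make_kubeconfig OUT CONTEXT USER CERT KEY (hypothetical helper)
# Runs set-cluster, set-credentials, set-context and use-context against OUT.
KUBECTL=${KUBECTL:-kubectl}
make_kubeconfig() {
    local out=$1 context=$2 user=$3 cert=$4 key=$5
    $KUBECTL config set-cluster kubernetes \
        --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true \
        --server=https://192.168.0.10:6443 --kubeconfig="$out" &&
    $KUBECTL config set-credentials "$user" \
        --client-certificate="$cert" --client-key="$key" \
        --embed-certs=true --kubeconfig="$out" &&
    $KUBECTL config set-context "$context" --cluster=kubernetes --user="$user" --kubeconfig="$out" &&
    $KUBECTL config use-context "$context" --kubeconfig="$out"
}

# Dry run: print the four commands for the admin kubeconfig without executing them.
dry_run=$(KUBECTL=echo make_kubeconfig /etc/kubernetes/kube.config kubernetes admin \
    /etc/kubernetes/ssl/admin.pem /etc/kubernetes/ssl/admin-key.pem)
printf '%s\n' "$dry_run"
```

Without the `KUBECTL=echo` prefix the same call would write /etc/kubernetes/kube.config on the Master node.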

Copy kube.config to the default location:

mkdir ~/.kube

cp /etc/kubernetes/kube.config ~/.kube/config

Grant the kubernetes user (the CN of the kube-apiserver certificate) access to the kubelet API:

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

kubectl can now be used to manage the cluster.

Deploy kube-controller-manager on the Master node:

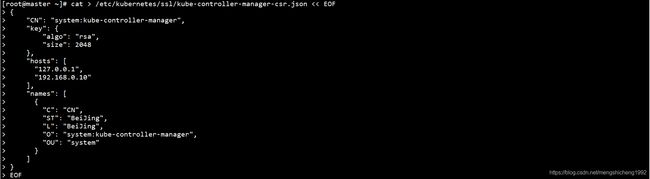

On the Master node, create the kube-controller-manager CSR file:

cat > /etc/kubernetes/ssl/kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.0.10"

],

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-controller-manager",

"OU": "system"

}

]

}

EOF

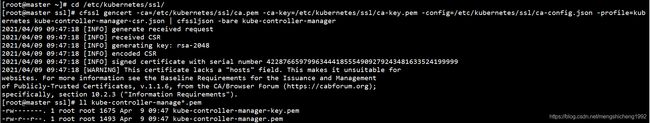

On the Master node, generate the kube-controller-manager certificate:

cd /etc/kubernetes/ssl/

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

ll kube-controller-manager*.pem

On the Master node, generate kube-controller-manager.kubeconfig:

Set the cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:6443 --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig

Set the client credentials:

kubectl config set-credentials system:kube-controller-manager --client-certificate=/etc/kubernetes/ssl/kube-controller-manager.pem --client-key=/etc/kubernetes/ssl/kube-controller-manager-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig

Set the context:

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig

Set the default context:

kubectl config use-context system:kube-controller-manager --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig

![]()

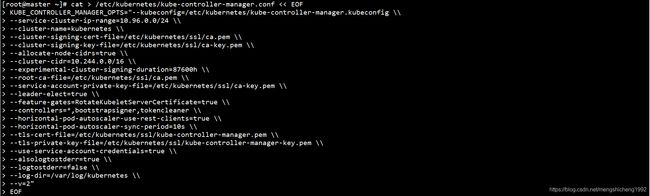

在Master节点上创建kube-controller-manager配置文件:

cat > /etc/kubernetes/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --service-cluster-ip-range=10.96.0.0/24 \\
  --cluster-name=kubernetes \\
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --allocate-node-cidrs=true \\
  --cluster-cidr=10.244.0.0/16 \\
  --experimental-cluster-signing-duration=87600h \\
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --leader-elect=true \\
  --feature-gates=RotateKubeletServerCertificate=true \\
  --controllers=*,bootstrapsigner,tokencleaner \\
  --horizontal-pod-autoscaler-use-rest-clients=true \\
  --horizontal-pod-autoscaler-sync-period=10s \\
  --tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \\
  --tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \\
  --use-service-account-credentials=true \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2"
EOF

On the Master node, configure systemd to manage kube-controller-manager:

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

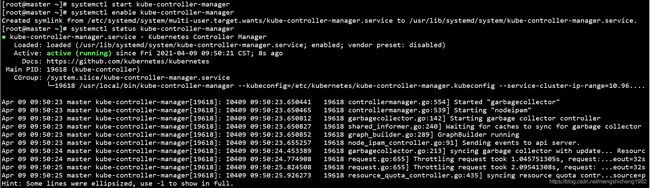

On the Master node, start kube-controller-manager and enable it at boot:

systemctl start kube-controller-manager

systemctl enable kube-controller-manager

systemctl status kube-controller-manager

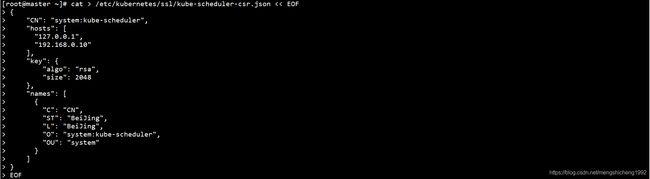

Deploy kube-scheduler on the Master node:

On the Master node, create the kube-scheduler CSR file:

cat > /etc/kubernetes/ssl/kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.0.10"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-scheduler",

"OU": "system"

}

]

}

EOF

On the Master node, generate the kube-scheduler certificate:

cd /etc/kubernetes/ssl/

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

ll kube-scheduler*.pem

On the Master node, generate kube-scheduler.kubeconfig:

Set the cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:6443 --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig

Set the client credentials:

kubectl config set-credentials system:kube-scheduler --client-certificate=/etc/kubernetes/ssl/kube-scheduler.pem --client-key=/etc/kubernetes/ssl/kube-scheduler-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig

Set the context:

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig

Set the default context:

kubectl config use-context system:kube-scheduler --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig

On the Master node, create the kube-scheduler configuration file:

cat > /etc/kubernetes/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--address=127.0.0.1 \\
  --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\
  --leader-elect=true \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2"
EOF

On the Master node, configure systemd to manage kube-scheduler:

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

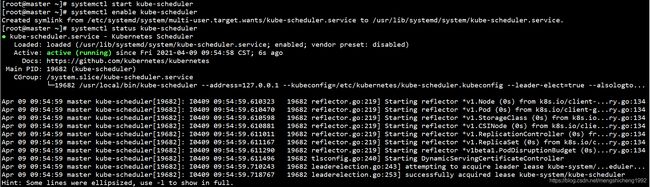

On the Master node, start kube-scheduler and enable it at boot:

systemctl start kube-scheduler

systemctl enable kube-scheduler

systemctl status kube-scheduler

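5. Deploy the Worker nodes

The Master node also acts as a Worker node, so kubelet and kube-proxy must be configured on it as well.

Deploy kubelet on all nodes:

On the Master node, copy the kubelet binary into the system directory: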

cp /root/kubernetes/server/bin/kubelet /usr/local/bin

From the Master node, copy the kubelet binary to the Worker nodes:

scp /root/kubernetes/server/bin/kubelet root@worker1:/usr/local/bin/

scp /root/kubernetes/server/bin/kubelet root@worker2:/usr/local/bin/

On the Master node, generate kubelet-bootstrap.kubeconfig:

Set the cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:6443 --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

Set the client credentials:

BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

Set the context:

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

Set the default context:

kubectl config use-context default --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

Create the cluster role binding:

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

From the Master node, copy kubelet-bootstrap.kubeconfig to the Worker nodes:

scp /etc/kubernetes/kubelet-bootstrap.kubeconfig root@worker1:/etc/kubernetes/

scp /etc/kubernetes/kubelet-bootstrap.kubeconfig root@worker2:/etc/kubernetes/

On the Worker nodes, create the log directory:

mkdir /var/log/kubernetes/

![]()

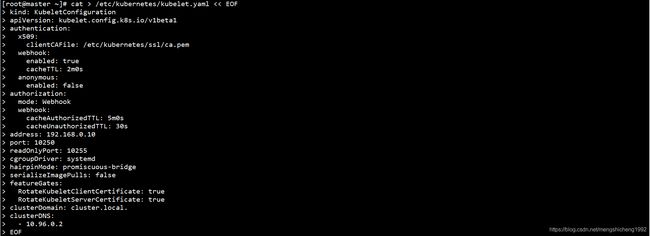

在所有节点上创建kubelet配置文件:

cat > /etc/kubernetes/kubelet.yaml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  x509:
    clientCAFile: /etc/kubernetes/ssl/ca.pem
  webhook:
    enabled: true
    cacheTTL: 2m0s
  anonymous:
    enabled: false
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
address: 192.168.0.10
port: 10250
readOnlyPort: 10255
cgroupDriver: systemd
hairpinMode: promiscuous-bridge
serializeImagePulls: false
featureGates:
  RotateKubeletClientCertificate: true
  RotateKubeletServerCertificate: true
clusterDomain: cluster.local.
clusterDNS:
  - 10.96.0.2
EOF

Change the address value (192.168.0.10 above) to each node's actual IP address.
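Because only the `address` field differs between nodes, the per-node file can be derived from one template instead of being edited by hand. The sketch below is one way to do that; `render_for_node` is a hypothetical helper, and the demonstration uses a two-line scratch file rather than the real kubelet.yaml.

```shell
#!/usr/bin/env bash
# render_for_node TEMPLATE NODE_IP (hypothetical helper)
# Prints TEMPLATE with the value of the top-level "address:" key replaced by NODE_IP.
render_for_node() {
    local template=$1 node_ip=$2
    sed -E "s/^(address:[[:space:]]*).*/\1${node_ip}/" "$template"
}

# Demonstration on a scratch excerpt.
demo_template=$(mktemp)
printf 'address: 192.168.0.10\nport: 10250\n' > "$demo_template"
rendered=$(render_for_node "$demo_template" 192.168.0.11)
printf '%s\n' "$rendered"
```

On the Master node the output could be redirected into a file and copied to the matching Worker with scp, in the same way as the other files in this guide.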

On all nodes, configure systemd to manage kubelet:

cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service
Requires=docker.service

[Service]
ExecStart=/usr/local/bin/kubelet \\
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \\
  --cert-dir=/etc/kubernetes/ssl \\
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\
  --config=/etc/kubernetes/kubelet.yaml \\
  --network-plugin=cni \\
  --pod-infra-container-image=k8s.gcr.io/pause:3.2 \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

k8s.gcr.io/pause:3.2 cannot be pulled directly, so pull it from the Alibaba Cloud registry and retag it:

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

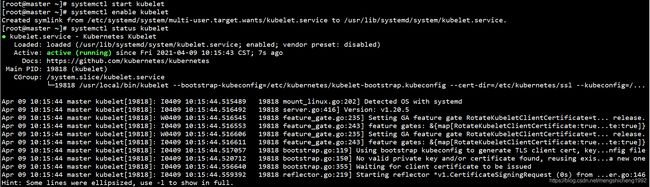

On all nodes, start kubelet and enable it at boot:

systemctl start kubelet

systemctl enable kubelet

systemctl status kubelet

On the Master node, list the kubelet certificate signing requests:

kubectl get csr

On the Master node, approve the kubelet certificate signing requests:

kubectl certificate approve node-csr-xxx
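With three nodes there are three requests to approve, each with a generated name. The sketch below extracts the pending names from the `kubectl get csr` table so they can be passed to `kubectl certificate approve`; `pending_csr_names` is a hypothetical helper, it assumes CONDITION is the last column of the table, and the sample rows are invented for demonstration.

```shell
#!/usr/bin/env bash
# pending_csr_names (hypothetical helper)
# Reads `kubectl get csr` table output on stdin and prints the names whose last column is Pending.
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# On the Master node (GNU xargs assumed for -r):
#   kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
# Demonstration with canned input:
sample_csr='NAME           AGE   SIGNERNAME                                    REQUESTOR           CONDITION
node-csr-aaa   10s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending
node-csr-bbb   5m    kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued'
pending=$(printf '%s\n' "$sample_csr" | pending_csr_names)
echo "$pending"
```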

On the Master node, check the node status:

kubectl get node

Deploy kube-proxy on all nodes:

On the Master node, copy the kube-proxy binary into the system directory:

cp /root/kubernetes/server/bin/kube-proxy /usr/local/bin

From the Master node, copy the kube-proxy binary to the Worker nodes:

scp /root/kubernetes/server/bin/kube-proxy root@worker1:/usr/local/bin/

scp /root/kubernetes/server/bin/kube-proxy root@worker2:/usr/local/bin/

On the Master node, create the kube-proxy CSR file:

cat > /etc/kubernetes/ssl/kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "system"

}

]

}

EOF

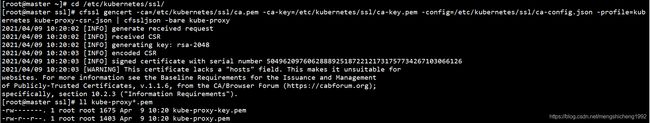

On the Master node, generate the kube-proxy certificate:

cd /etc/kubernetes/ssl/

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

ll kube-proxy*.pem

On the Master node, generate kube-proxy.kubeconfig:

Set the cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:6443 --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Set the client credentials:

kubectl config set-credentials kube-proxy --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Set the context:

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Set the default context:

kubectl config use-context default --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

From the Master node, copy kube-proxy.kubeconfig to the Worker nodes:

scp /etc/kubernetes/kube-proxy.kubeconfig root@worker1:/etc/kubernetes/

scp /etc/kubernetes/kube-proxy.kubeconfig root@worker2:/etc/kubernetes/

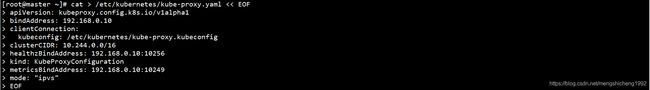

On all nodes, create the kube-proxy configuration file:

cat > /etc/kubernetes/kube-proxy.yaml << EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 192.168.0.10
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: 192.168.0.10:10256
kind: KubeProxyConfiguration
metricsBindAddress: 192.168.0.10:10249
mode: "ipvs"
EOF

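Change the IP address in bindAddress, healthzBindAddress, and metricsBindAddress (192.168.0.10 above) to each node's actual IP address.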

On all nodes, configure systemd to manage kube-proxy:

cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-proxy \\
  --config=/etc/kubernetes/kube-proxy.yaml \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

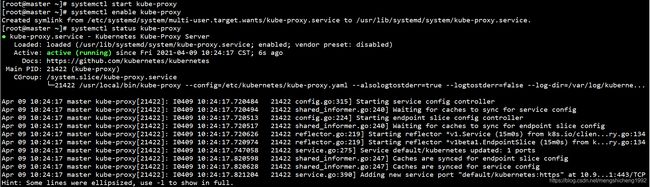

On all nodes, start kube-proxy and enable it at boot:

systemctl start kube-proxy

systemctl enable kube-proxy

systemctl status kube-proxy

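6. Deploy the CNI network

Deploy the CNI network from the Master node:

Download the Calico manifest:

Download: https://docs.projectcalico.org/manifests/calico.yaml

On the Master node, edit calico.yaml:

Add the following environment variables: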

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"
- name: IP_AUTODETECTION_METHOD
  value: "interface=eth.*|en.*"

The CIDR in calico.yaml must match the cluster CIDR configured earlier (--cluster-cidr and clusterCIDR, 10.244.0.0/16).
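A mismatch between the Calico pool and the cluster CIDR is easy to miss in a manifest of several thousand lines. The sketch below reads the pool value back out of the manifest for comparison; `calico_pool_cidr` is a hypothetical helper that assumes the `value:` line directly follows the `- name:` line, and the demonstration uses a small scratch excerpt rather than the real calico.yaml.

```shell
#!/usr/bin/env bash
# calico_pool_cidr MANIFEST (hypothetical helper)
# Prints the value on the line after an uncommented "- name: CALICO_IPV4POOL_CIDR".
calico_pool_cidr() {
    awk '/^[ \t]*- name: CALICO_IPV4POOL_CIDR/ {
             getline
             gsub(/[" \t]/, "")      # drop quotes and whitespace
             sub(/^value:/, "")
             print
             exit
         }' "$1"
}

# Demonstration on a scratch excerpt.
demo_manifest=$(mktemp)
cat > "$demo_manifest" << 'EOF'
            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"
            - name: IP_AUTODETECTION_METHOD
              value: "interface=eth.*|en.*"
EOF
pool=$(calico_pool_cidr "$demo_manifest")
cluster_cidr=10.244.0.0/16
if [ "$pool" = "$cluster_cidr" ]; then
    echo "Calico pool matches cluster CIDR: $pool"
else
    echo "Mismatch: Calico pool is '$pool', cluster CIDR is '$cluster_cidr'"
fi
```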

On the Master node, apply the manifest:

kubectl apply -f calico.yaml

On the Master node, check the Pod status:

kubectl get pod -o wide -n kube-system

On the Master node, check the node status:

kubectl get node

7. Deploy CoreDNS

On the Master node, extract kubernetes-src.tar.gz:

tar -xf /root/kubernetes/kubernetes-src.tar.gz -C /root/kubernetes/

On the Master node, edit /root/kubernetes/cluster/addons/dns/coredns/transforms2sed.sed and replace the $DNS_SERVER_IP, $DNS_DOMAIN, and $DNS_MEMORY_LIMIT variables with concrete values, as follows:

s/__DNS__SERVER__/10.96.0.2/g

s/__DNS__DOMAIN__/cluster.local/g

s/__CLUSTER_CIDR__/$SERVICE_CLUSTER_IP_RANGE/g

s/__DNS__MEMORY__LIMIT__/200Mi/g

s/__MACHINE_GENERATED_WARNING__/Warning: This is a file generated from the base underscore template file: __SOURCE_FILENAME__/g

On the Master node, generate the CoreDNS manifest coredns.yaml from the template:

cd /root/kubernetes/cluster/addons/dns/coredns/


sed -f transforms2sed.sed coredns.yaml.base > coredns.yaml
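`sed -f` applies every substitution in the script file to the template, replacing each `__PLACEHOLDER__` with the value configured for it. The sketch below reproduces the mechanism on a scratch template; the three template lines are an invented excerpt for illustration, not the real coredns.yaml.base.

```shell
#!/usr/bin/env bash
work=$(mktemp -d)

# Substitution script with the values used in this guide.
cat > "$work/transforms.sed" << 'EOF'
s/__DNS__SERVER__/10.96.0.2/g
s/__DNS__DOMAIN__/cluster.local/g
s/__DNS__MEMORY__LIMIT__/200Mi/g
EOF

# Hypothetical template excerpt containing the placeholders.
cat > "$work/template.base" << 'EOF'
clusterIP: __DNS__SERVER__
kubernetes __DNS__DOMAIN__ in-addr.arpa ip6.arpa
memory: __DNS__MEMORY__LIMIT__
EOF

sed -f "$work/transforms.sed" "$work/template.base" > "$work/rendered.yaml"
cat "$work/rendered.yaml"

# Count lines that still contain a placeholder; 0 means every one was substituted.
leftover=$(grep -c '__[A-Z_]*__' "$work/rendered.yaml" || true)
echo "unreplaced placeholders: $leftover"
```

The same `grep` check can be run against the generated coredns.yaml to spot a placeholder that was left untouched.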


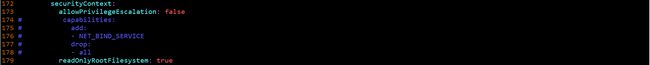

On the Master node, edit the CoreDNS manifest coredns.yaml:

Change the image parameter:

image: coredns/coredns:1.7.0

Remove the capabilities section:

capabilities:
  add:
  - NET_BIND_SERVICE
  drop:
  - all

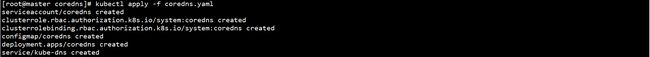

On the Master node, deploy CoreDNS:

kubectl apply -f coredns.yaml

On the Master node, check the Pod status:

kubectl get pod -o wide -n kube-system