PyTorch self-study notes @_@

Course

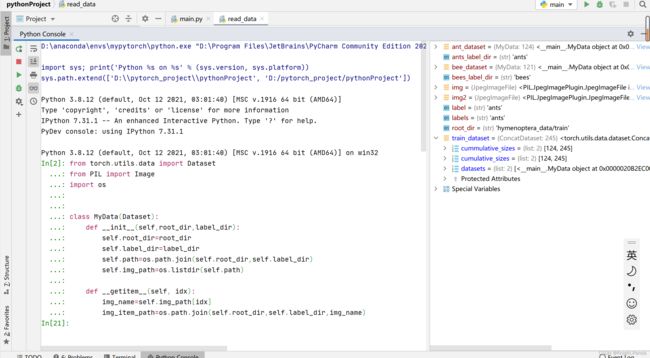

Dataset class

```python
from torch.utils.data import Dataset
from PIL import Image
import os


class MyData(Dataset):
    # root_dir: e.g. "hymenoptera_data/train"; label_dir: sub-folder name, also used as the label
    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.img_path = os.listdir(self.path)  # file names of all images in the folder

    def __getitem__(self, idx):
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        label = self.label_dir
        return img, label

    def __len__(self):
        return len(self.img_path)


root_dir = "hymenoptera_data/train"
ants_label_dir = "ants"
ant_dataset = MyData(root_dir, ants_label_dir)
bees_label_dir = "bees"
bee_dataset = MyData(root_dir, bees_label_dir)
# "+" concatenates the two datasets: ants first, then bees
train_dataset = ant_dataset + bee_dataset
```
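`ant_dataset + bee_dataset` works because `torch.utils.data.Dataset` defines `__add__`, which wraps both datasets in a `ConcatDataset`. The pure-Python sketch below illustrates the idea: a binary search over cumulative lengths maps a global index to one of the sub-datasets. The class `ConcatSketch` and the sample tuples are hypothetical and are not PyTorch code.

```python
import bisect
from itertools import accumulate


class ConcatSketch:
    """Hypothetical, simplified stand-in for torch.utils.data.ConcatDataset."""

    def __init__(self, datasets):
        self.datasets = list(datasets)
        # cumulative_sizes[k] = total number of samples in datasets[0..k]
        self.cumulative_sizes = list(accumulate(len(d) for d in self.datasets))

    def __len__(self):
        return self.cumulative_sizes[-1] if self.cumulative_sizes else 0

    def __getitem__(self, idx):
        if idx < 0:
            idx += len(self)
        if not 0 <= idx < len(self):
            raise IndexError(idx)
        # which sub-dataset does this global index fall into?
        ds_idx = bisect.bisect_right(self.cumulative_sizes, idx)
        start = self.cumulative_sizes[ds_idx - 1] if ds_idx > 0 else 0
        return self.datasets[ds_idx][idx - start]


ants = [("ant_0.jpg", "ants"), ("ant_1.jpg", "ants")]
bees = [("bee_0.jpg", "bees"), ("bee_1.jpg", "bees"), ("bee_2.jpg", "bees")]
train = ConcatSketch([ants, bees])
print(len(train))  # 5
print(train[2])    # ('bee_0.jpg', 'bees')
```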

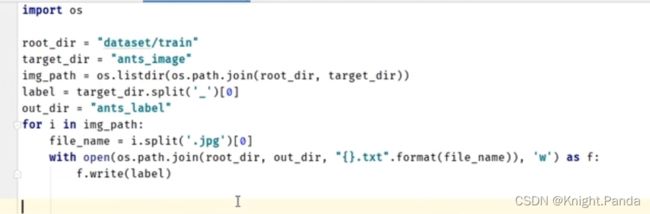

Generating label txt files

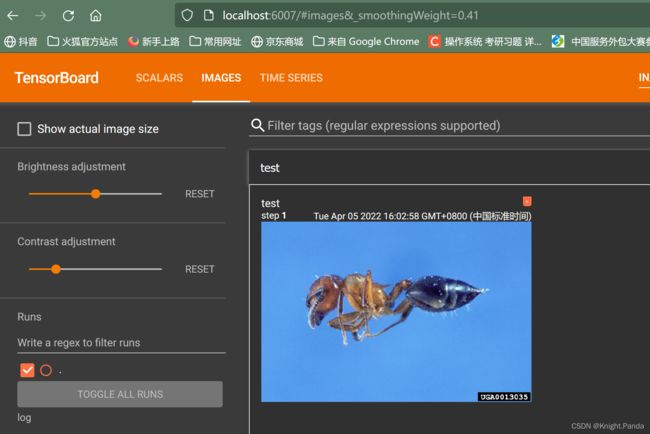
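This step stores each image's label in its own text file. A minimal sketch, assuming a hypothetical layout: every image in `ants_image` gets a matching `<name>.txt` in a sibling `ants_label` folder, and that file contains the label string. The helper `write_label_files` and the folder names are illustrative only.

```python
import os
import tempfile


def write_label_files(root_dir, image_dir, label_dir, label):
    """Hypothetical helper: write <image stem>.txt containing `label` for every image."""
    out_dir = os.path.join(root_dir, label_dir)
    os.makedirs(out_dir, exist_ok=True)
    written = []
    for img_name in sorted(os.listdir(os.path.join(root_dir, image_dir))):
        stem = os.path.splitext(img_name)[0]
        txt_path = os.path.join(out_dir, stem + ".txt")
        with open(txt_path, "w", encoding="utf-8") as f:
            f.write(label)
        written.append(txt_path)
    return written


# usage on a throwaway directory holding two empty fake "images"
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "ants_image"))
    for name in ("a.jpg", "b.jpg"):
        open(os.path.join(root, "ants_image", name), "wb").close()
    paths = write_label_files(root, "ants_image", "ants_label", "ants")
    names = [os.path.basename(p) for p in paths]
    contents = []
    for p in paths:
        with open(p, encoding="utf-8") as f:
            contents.append(f.read())

print(names)     # ['a.txt', 'b.txt']
print(contents)  # ['ants', 'ants']
```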

TensorBoard

writer.add_scalar

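```python
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("log")  # event files are written to ./log

# writer.add_image()
for i in range(100):
    # add_scalar(tag, scalar_value, global_step): plots y = i at step x = i
    writer.add_scalar("y=x", i, i)

writer.close()
```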

```
(mypytorch) D:\pytorch_project\pythonProject>tensorboard --logdir=log
TensorFlow installation not found - running with reduced feature set.
Serving TensorBoard on localhost; to expose to the network, use a proxy or pass --bind_all
TensorBoard 2.8.0 at http://localhost:6006/ (Press CTRL+C to quit)
```

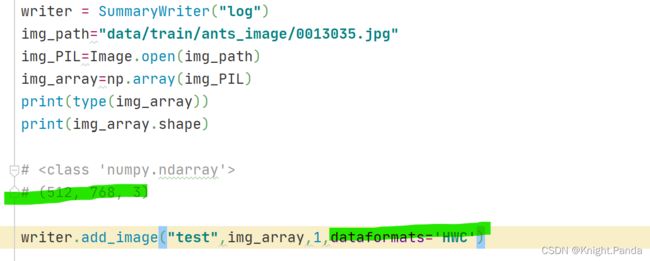

add_image

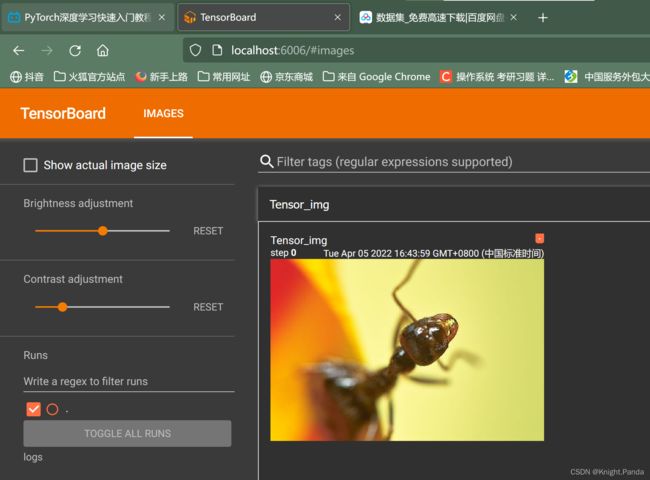

```python
from torch.utils.tensorboard import SummaryWriter
from PIL import Image
import numpy as np

writer = SummaryWriter("log")

img_path = "data/train/bees_image/39747887_42df2855ee.jpg"
img_PIL = Image.open(img_path)
img_array = np.array(img_PIL)
print(type(img_array))  # <class 'numpy.ndarray'>
print(img_array.shape)  # (H, W, 3): channels come last
# a numpy image is HWC, while add_image defaults to CHW, so state the layout
writer.add_image("test", img_array, 1, dataformats="HWC")

# second run: another image logged under the same tag at step 2
img_path = "data/train/bees_image/85112639_6e860b0469.jpg"
img_array = np.array(Image.open(img_path))
print(img_array.shape)
writer.add_image("test", img_array, 2, dataformats="HWC")

writer.close()
```

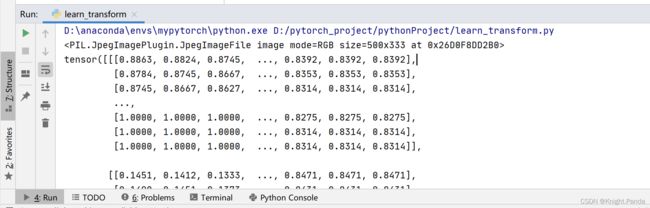

# Transforms

```python
from PIL import Image
from torchvision import transforms

img_path = "data/train/ants_image/7759525_1363d24e88.jpg"
img = Image.open(img_path)
print(img)

tensor_trans = transforms.ToTensor()  # create the transform object
tensor_img = tensor_trans(img)        # then call it on the PIL image
print(tensor_img)
```

```python
from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

img_path = "data/train/ants_image/7759525_1363d24e88.jpg"
img = Image.open(img_path)
print(img)

writer = SummaryWriter("logs")

tensor_trans = transforms.ToTensor()
tensor_img = tensor_trans(img)
print(tensor_img)

writer.add_image("Tensor_img", tensor_img)
writer.close()
```
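`transforms.ToTensor()` converts a PIL image (or an HWC `uint8` numpy array) into a float tensor of shape C x H x W and rescales values from 0..255 to 0.0..1.0. Because CHW is the default layout for `add_image`, `tensor_img` can be logged here without `dataformats`. Below is a plain-Python sketch of the same reordering and scaling on nested lists; `to_tensor_like` is a hypothetical helper.

```python
def to_tensor_like(hwc_pixels):
    """Reorder an H x W x C nested list of 0..255 values to C x H x W floats in 0.0..1.0."""
    height = len(hwc_pixels)
    width = len(hwc_pixels[0])
    channels = len(hwc_pixels[0][0])
    return [
        [[hwc_pixels[h][w][c] / 255.0 for w in range(width)] for h in range(height)]
        for c in range(channels)
    ]


# a 1 x 2 RGB image: one red pixel, one white pixel
img = [[(255, 0, 0), (255, 255, 255)]]
chw = to_tensor_like(img)
print(len(chw), len(chw[0]), len(chw[0][0]))  # 3 1 2
print(chw[0])  # red channel:   [[1.0, 1.0]]
print(chw[1])  # green channel: [[0.0, 1.0]]
```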

Using the datasets in torchvision

Press Ctrl+P (PyCharm) inside a call to see the function's parameters.

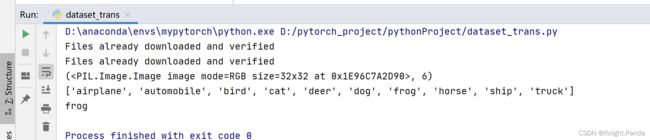

```python
import torchvision

train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, download=True)
test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, download=True)

print(train_set[0])       # (PIL image, class index)
print(train_set.classes)
img, target = train_set[0]
img.show()
print(train_set.classes[target])
```

```python
import torchvision
from torch.utils.tensorboard import SummaryWriter

# apply ToTensor to every image the dataset returns
dataset_transform = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()
])
```

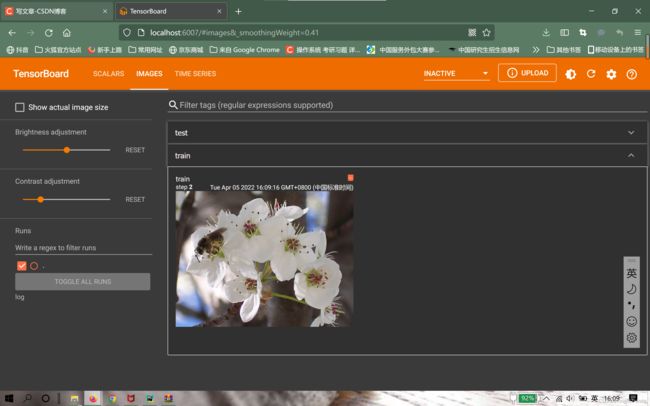

```python
train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=dataset_transform, download=True)
test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=dataset_transform, download=True)

print(test_set[0])  # now (3x32x32 tensor, class index) instead of (PIL image, class index)

writer = SummaryWriter("dataset_trans")
for i in range(10):
    img, target = train_set[i]
    writer.add_image("train", img, i)

writer.close()
```

DataLoader

```python
import torchvision
from torch.utils.data import DataLoader

# prepare the test dataset
test_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_dataset, batch_size=4, shuffle=True, num_workers=0, drop_last=False)

# the first image in the test dataset
img, target = test_dataset[0]
print(img.shape)
print(target)

for data in test_loader:
    imgs, targets = data
    print(imgs.shape)
    print(targets.shape)
    print(targets)

# torch.Size([4, 3, 32, 32])
# torch.Size([4])
# tensor([7, 4, 0, 2])
```

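test_loader batches the images and the targets separately. Each iteration yields `imgs`, the 4 image tensors stacked into shape [4, 3, 32, 32], and `targets`, their 4 class indices in a tensor of shape [4].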
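Whether the leftover samples at the end of an epoch form a smaller final batch depends on `drop_last`. With `drop_last=False` that smaller batch is kept; with `drop_last=True` it is discarded. The small sketch below (hypothetical helper names) counts the batches the CIFAR-10 test set (10000 images) produces for the batch sizes used in these notes.

```python
import math


def num_batches(dataset_len, batch_size, drop_last=False):
    """Number of batches per epoch for a DataLoader using its default sampler."""
    if drop_last:
        return dataset_len // batch_size
    return math.ceil(dataset_len / batch_size)


def last_batch_size(dataset_len, batch_size):
    """Size of the final batch when drop_last=False."""
    remainder = dataset_len % batch_size
    return remainder if remainder else batch_size


print(num_batches(10000, 4))                   # 2500
print(num_batches(10000, 64))                  # 157
print(last_batch_size(10000, 64))              # 16
print(num_batches(10000, 64, drop_last=True))  # 156
```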

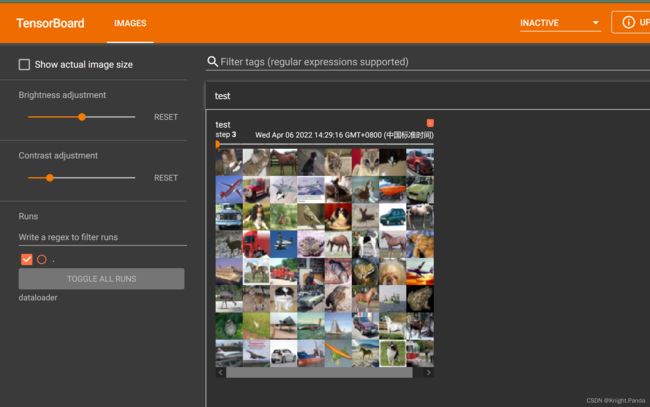

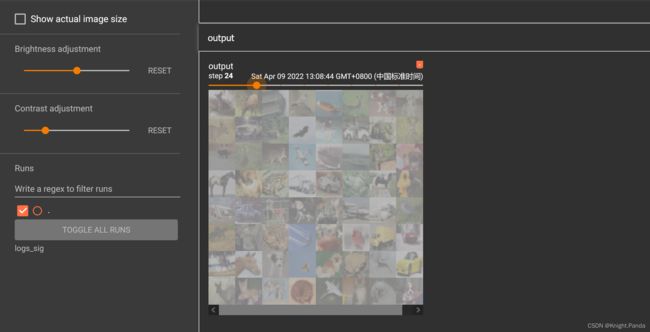

```python
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# prepare the test dataset
test_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_dataset, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

# the first image in the test dataset
img, target = test_dataset[0]
print(img.shape)
print(target)

writer = SummaryWriter("dataloader")
step = 0
for data in test_loader:
    imgs, targets = data
    # add_images (plural) logs a whole batch of shape [N, C, H, W]
    writer.add_images("test", imgs, step)
    step = step + 1

writer.close()
```

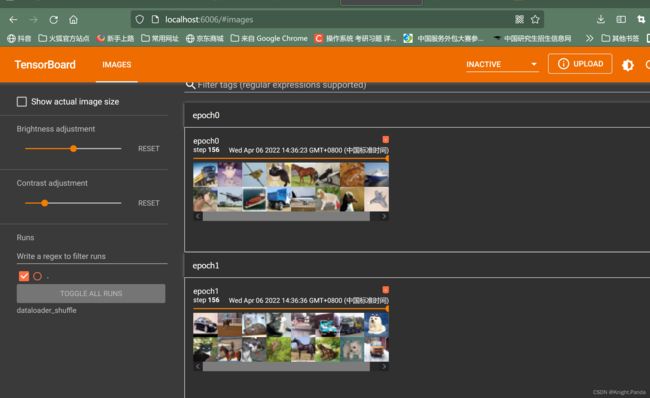

Testing shuffle=True: each epoch visits the samples in a different order, so the batches logged in the two epochs differ.

```python
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# prepare the test dataset
test_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_dataset, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

# the first image in the test dataset
img, target = test_dataset[0]
print(img.shape)
print(target)

writer = SummaryWriter("dataloader_shuffle")
for epoch in range(2):
    step = 0
    for data in test_loader:
        imgs, targets = data
        # one tag per epoch ("epoch0", "epoch1") so the two orders can be compared
        writer.add_images("epoch{}".format(epoch), imgs, step)
        step = step + 1

writer.close()
```
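In concept, `shuffle=True` has the DataLoader draw a fresh random permutation of all sample indices at the start of every epoch. The sketch below imitates that with Python's `random` module rather than PyTorch's own generator; `epoch_orders` is a hypothetical helper.

```python
import random


def epoch_orders(n_samples, n_epochs, seed=0):
    """Sketch of shuffle=True: a new permutation of all indices for every epoch."""
    rng = random.Random(seed)
    orders = []
    for _ in range(n_epochs):
        indices = list(range(n_samples))
        rng.shuffle(indices)  # every sample still appears exactly once per epoch
        orders.append(indices)
    return orders


first, second = epoch_orders(20, 2)
print(first)
print(second)
```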

The basic skeleton of a neural network: nn.Module

```python
import torch
from torch import nn


class Model(nn.Module):
    def __init__(self) -> None:
        super().__init__()

    def forward(self, input):
        output = input + 1
        return output


model = Model()
x = torch.tensor(20.4)
y = model(x)  # calling the module runs forward()
print(y)
```

Convolution with torch.nn.functional (F.conv2d)

```python
import torch
import torch.nn.functional as F

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])
print(input.shape)
print(kernel.shape)

# conv2d expects (N, C, H, W) for the input and (out_C, in_C, kH, kW) for the kernel
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
print(input.shape)
print(kernel.shape)

output = F.conv2d(input, kernel, stride=1)
print(output)

output2 = F.conv2d(input, kernel, stride=2)
print(output2)

output3 = F.conv2d(input, kernel, stride=1, padding=1)
print(output3)
```

```
D:\anaconda\envs\mypytorch\python.exe D:/pytorch_project/pythonProject/nn_conv.py
torch.Size([5, 5])
torch.Size([3, 3])
torch.Size([1, 1, 5, 5])
torch.Size([1, 1, 3, 3])
tensor([[[[10, 12, 12],
          [18, 16, 16],
          [13,  9,  3]]]])
tensor([[[[10, 12],
          [13,  3]]]])
tensor([[[[ 1,  3,  4, 10,  8],
          [ 5, 10, 12, 12,  6],
          [ 7, 18, 16, 16,  8],
          [11, 13,  9,  3,  4],
          [14, 13,  9,  7,  4]]]])

Process finished with exit code 0
```
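`F.conv2d` performs a cross-correlation. The kernel slides over the input without being flipped, and each output value is the sum of element-wise products under the kernel. The pure-Python sketch below handles the single-channel case with a hypothetical `conv2d` helper that zero-pads all four sides, and it reproduces the three outputs printed above.

```python
def conv2d(inp, kernel, stride=1, padding=0):
    """Single-channel 2D cross-correlation with zero padding on all sides."""
    h, w = len(inp), len(inp[0])
    kh, kw = len(kernel), len(kernel[0])
    # build the zero-padded input
    padded = [[0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = inp[i][j]
    ph, pw = len(padded), len(padded[0])
    out = []
    for top in range(0, ph - kh + 1, stride):
        row = []
        for left in range(0, pw - kw + 1, stride):
            acc = 0
            for a in range(kh):
                for b in range(kw):
                    acc += padded[top + a][left + b] * kernel[a][b]
            row.append(acc)
        out.append(row)
    return out


inp = [[1, 2, 0, 3, 1],
       [0, 1, 2, 3, 1],
       [1, 2, 1, 0, 0],
       [5, 2, 3, 1, 1],
       [2, 1, 0, 1, 1]]
ker = [[1, 2, 1],
       [0, 1, 0],
       [2, 1, 0]]
print(conv2d(inp, ker))            # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d(inp, ker, stride=2))  # [[10, 12], [13, 3]]
```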

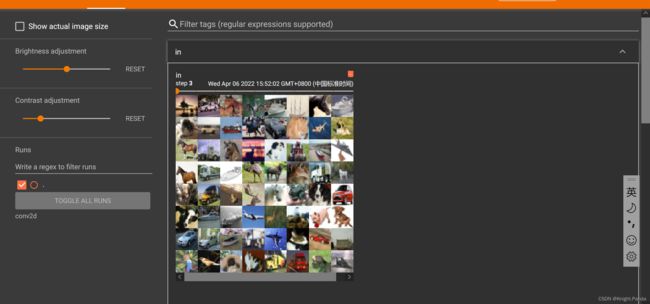

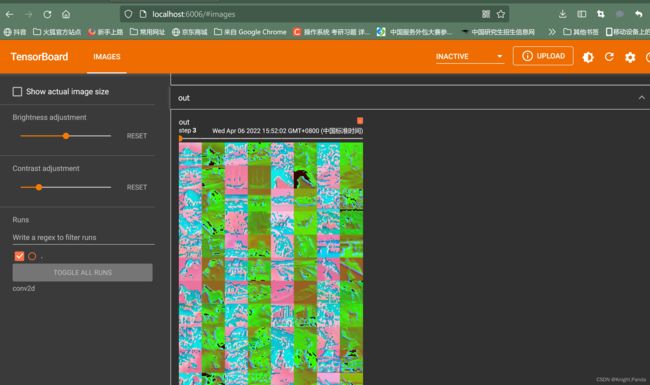

Convolution layer: Conv2d in torch.nn

```python
# -*- coding: utf-8 -*-
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


model = Model()
writer = SummaryWriter("conv2d")
step = 0
for data in dataloader:
    imgs, targets = data
    output = model(imgs)
    print(imgs.shape)    # e.g. torch.Size([64, 3, 32, 32])
    print(output.shape)  # e.g. torch.Size([64, 6, 30, 30])
    writer.add_images("in", imgs, step)
    # 6 channels cannot be shown as an image: reshape to 3 channels (the batch dimension doubles)
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("out", output, step)
    step = step + 1

writer.close()
```

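add_images only displays 3-channel images properly, so `torch.reshape(output, (-1, 3, 30, 30))` turns the 6-channel output into 3-channel images. The -1 lets the batch dimension absorb the extra channels: 64 images with 6 channels become 128 images with 3 channels.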

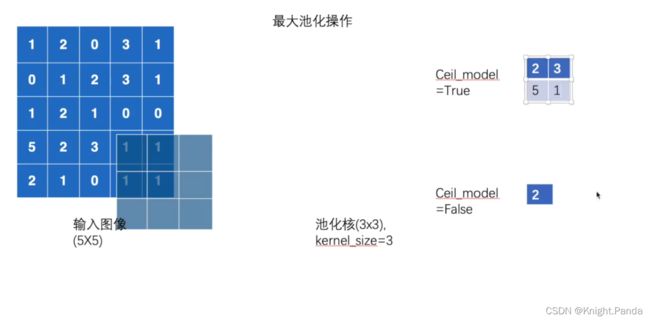
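The PyTorch documentation gives the output size of `Conv2d` as H_out = floor((H_in + 2 * padding - dilation * (kernel_size - 1) - 1) / stride) + 1, and the width follows the same rule. The small helper below (hypothetical name) checks the shapes that appear in these notes.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height (or width) of Conv2d for a given input size."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


print(conv_out_size(32, 3))             # 30: Conv2d(3, 6, kernel_size=3) on 32x32 CIFAR-10
print(conv_out_size(32, 5, padding=2))  # 32: kernel 5 with padding 2 keeps the size
print(conv_out_size(5, 3, stride=2))    # 2: the stride=2 example above
print(conv_out_size(5, 3, padding=1))   # 5: the padding=1 example above
```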

Max pooling layer

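```python
# @File : nn_maxpool.py
import torch
from torch import nn
from torch.nn import MaxPool2d

# float dtype: max pooling is not implemented for integer (Long) tensors
# (at least in the PyTorch version used here)
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
# MaxPool2d expects (N, C, H, W): a batch of 1, 1 channel, 5x5
input = torch.reshape(input, (-1, 1, 5, 5))
print(input.shape)
```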

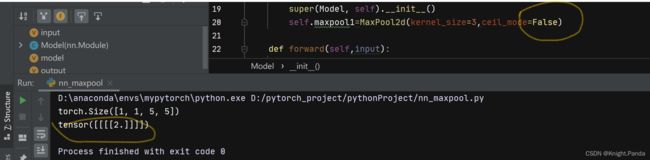

```python
class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        # stride defaults to kernel_size (3); ceil_mode=True keeps partial windows at the edges
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


model = Model()
output = model(input)
print(output)
```

```
torch.Size([1, 1, 5, 5])
tensor([[[[2., 3.],
          [5., 1.]]]])
```
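With `kernel_size=3` the stride also defaults to 3, so a 5x5 input has room for only one full window in each direction. `ceil_mode=True` also keeps the partial windows along the right and bottom edges, which is why the result is 2x2 rather than 1x1. Below is a pure-Python sketch of single-channel max pooling without padding; `maxpool2d` is a hypothetical helper, and its ceil-mode rule (the last window must start inside the input) is meant to mirror PyTorch's.

```python
import math


def maxpool2d(inp, kernel_size, stride=None, ceil_mode=False):
    """Single-channel max pooling without padding; stride defaults to kernel_size."""
    stride = stride or kernel_size
    h, w = len(inp), len(inp[0])

    def out_size(n):
        steps = (n - kernel_size) / stride
        out = (math.ceil(steps) if ceil_mode else math.floor(steps)) + 1
        # the last window must start inside the input
        if ceil_mode and (out - 1) * stride >= n:
            out -= 1
        return out

    result = []
    for i in range(out_size(h)):
        row = []
        for j in range(out_size(w)):
            # clip the window to the input (partial windows in ceil mode)
            window = [inp[r][c]
                      for r in range(i * stride, min(i * stride + kernel_size, h))
                      for c in range(j * stride, min(j * stride + kernel_size, w))]
            row.append(max(window))
        result.append(row)
    return result


inp = [[1, 2, 0, 3, 1],
       [0, 1, 2, 3, 1],
       [1, 2, 1, 0, 0],
       [5, 2, 3, 1, 1],
       [2, 1, 0, 1, 1]]
print(maxpool2d(inp, 3, ceil_mode=True))  # [[2, 3], [5, 1]]
print(maxpool2d(inp, 3))                  # [[2]]
```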

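Non-linear activations: ReLU, Sigmoid

inplace: with inplace=True the result overwrites the input tensor; with the default inplace=False a new output tensor is returned and the input is left unchanged.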

```python
import torch
from torch import nn
from torch.nn import ReLU

input = torch.tensor([[1, -0.5],
                      [-1, 3]])
input = torch.reshape(input, (-1, 1, 2, 2))
print(input.shape)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.relu1 = ReLU()  # inplace defaults to False

    def forward(self, input):
        output = self.relu1(input)
        return output


model = Model()
output = model(input)
print(output)
```

```
D:\anaconda\envs\mypytorch\python.exe D:/pytorch_project/pythonProject/nn_relu.py
torch.Size([1, 1, 2, 2])
tensor([[[[1., 0.],
          [0., 3.]]]])
```
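ReLU works element by element, keeping positive values and setting everything else to 0. Sigmoid maps each value into (0, 1) with 1 / (1 + e^(-x)). The plain-Python sketch below uses hypothetical helpers on lists. It also imitates what `inplace=True` means: the input container is overwritten instead of a new one being returned.

```python
import math


def relu(x):
    return x if x > 0 else 0.0


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relu_(values):
    """In-place variant: overwrite the given list and return that same list."""
    for i, v in enumerate(values):
        values[i] = relu(v)
    return values


inp = [[1, -0.5], [-1, 3]]
out = [[relu(v) for v in row] for row in inp]  # new list; inp is unchanged
print(out)            # [[1, 0.0], [0.0, 3]]
print(sigmoid(0.0))   # 0.5

data = [1, -0.5]
same = relu_(data)    # data itself now holds the result
print(data)           # [1, 0.0]
```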

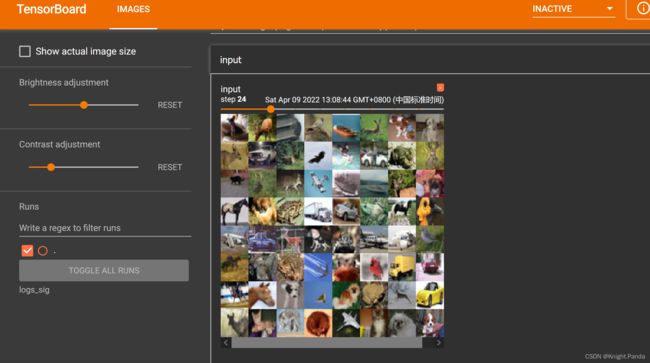

```python
# -*- coding: utf-8 -*-
# @File : nn_relu.py
import torch
import torchvision
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, download=True, transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        # self.relu1 = ReLU()  # inplace defaults to False
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        output = self.sigmoid1(input)
        return output


model = Model()
writer = SummaryWriter("logs_sig")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    output = model(imgs)
    writer.add_images("output", output, step)
    step = step + 1

writer.close()
```

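Linear layers and other layers

- Normalization layers (e.g. BatchNorm2d)
- Recurrent layers (used e.g. for text recognition)
- Linear layers
- Dropout layers: randomly zero elements during training to reduce overfitting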

```python
# @File : nn_liner.py
import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(download=True, root="./dataset",
                                       transform=torchvision.transforms.ToTensor(), train=False)
# drop_last=True: the final batch has only 16 images (10000 % 64), which would not
# flatten to 196608 values and would make linear1 fail with a shape error
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        # 64 * 3 * 32 * 32 = 196608 input features -> 10 outputs
        self.linear1 = Linear(196608, 10)

    def forward(self, input):
        output = self.linear1(input)
        return output


model = Model()

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)
    output = torch.reshape(imgs, (1, 1, 1, -1))  # torch.flatten(imgs) would also work
    print(output.shape)
    output = model(output)
    print(output.shape)

# torch.Size([64, 3, 32, 32])
# torch.Size([1, 1, 1, 196608])
# model output: torch.Size([1, 1, 1, 10])
```
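`nn.Linear(in_features, out_features)` computes y = x W^T + b. Its weight matrix has shape (out_features, in_features), and there is one bias per output, so `Linear(196608, 10)` holds 196608 * 10 + 10 parameters. The plain-Python sketch below spells out that computation using hypothetical helper names.

```python
def linear(x, weight, bias):
    """y = x @ weight.T + bias for a single input vector x.
    weight: out_features rows, each with in_features values."""
    return [sum(xi * wi for xi, wi in zip(x, w_row)) + b
            for w_row, b in zip(weight, bias)]


def linear_param_count(in_features, out_features, bias=True):
    return in_features * out_features + (out_features if bias else 0)


W = [[1.0, 0.0], [0.5, 0.5], [0.0, -1.0]]  # 3 outputs, 2 inputs
b = [0.0, 1.0, 0.0]
print(linear([1.0, 2.0], W, b))          # [1.0, 2.5, -2.0]
print(linear_param_count(196608, 10))    # 1966090
```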

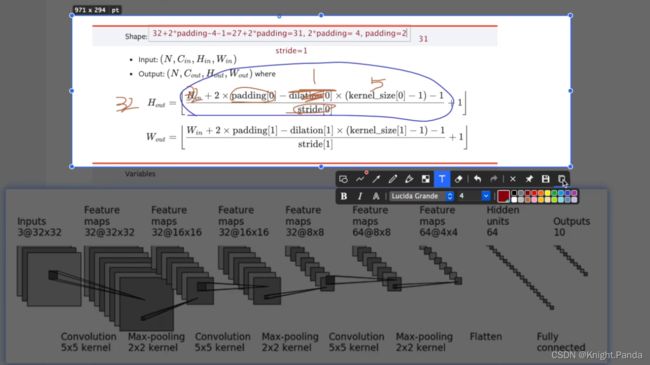

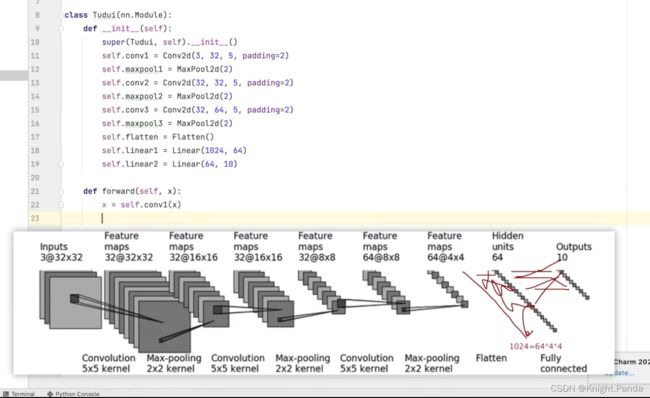

Sequential

```python
# @File : nn_seq.py
import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = Conv2d(3, 32, 5, padding=2)   # 32x32 stays 32x32
        self.maxpool1 = MaxPool2d(2)               # -> 16x16
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.maxpool2 = MaxPool2d(2)               # -> 8x8
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.maxpool3 = MaxPool2d(2)               # -> 4x4
        self.flatten = Flatten()                   # 64 * 4 * 4 = 1024
        self.linear1 = Linear(1024, 64)
        self.linear2 = Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x


model = Model()
print(model)
input = torch.ones((64, 3, 32, 32))  # dummy batch to check the shapes
output = model(input)
print(output.shape)
```

```
D:\anaconda\envs\mypytorch\python.exe D:/pytorch_project/pythonProject/nn_seq.py
Model(
  (conv1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (flatten): Flatten(start_dim=1, end_dim=-1)
  (linear1): Linear(in_features=1024, out_features=64, bias=True)
  (linear2): Linear(in_features=64, out_features=10, bias=True)
)
torch.Size([64, 10])

Process finished with exit code 0
```

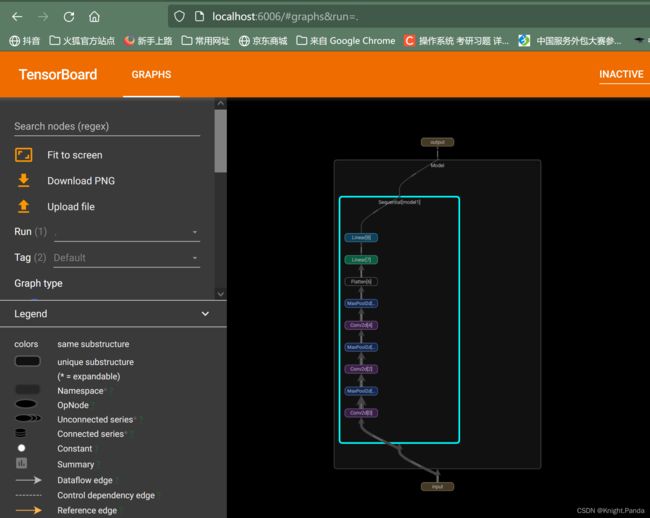

Simplifying with Sequential

```python
# @File : nn_seq.py
import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


model = Model()
print(model)
input = torch.ones((64, 3, 32, 32))
output = model(input)
print(output.shape)

writer = SummaryWriter("logs_seq")
writer.add_graph(model, input)  # shows the network structure in TensorBoard's Graphs tab
writer.close()
```

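Loss functions and backpropagation

- Measure the gap between the actual output and the target
- Provide the basis for updating the parameters: backpropagation computes the gradient (grad) of the loss with respect to each parameter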

L1Loss

```python
# @File : nn_loss.py
import torch
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 5], dtype=torch.float32)
targets = torch.tensor([1, 2, 3], dtype=torch.float32)
inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

loss = L1Loss()  # default reduction='mean'
res = loss(inputs, targets)
print(res)
# tensor(0.6667)
```

```python
# @File : nn_loss.py
import torch
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 5], dtype=torch.float32)
targets = torch.tensor([1, 2, 3], dtype=torch.float32)

loss = L1Loss(reduction='sum')  # add the absolute errors instead of averaging them
res = loss(inputs, targets)
print(res)
```

```
tensor(2.)
```

MSELoss

```python
# @File : nn_loss.py
import torch
from torch.nn import L1Loss, MSELoss

inputs = torch.tensor([1, 2, 5], dtype=torch.float32)
targets = torch.tensor([1, 2, 3], dtype=torch.float32)

loss = L1Loss()
res = loss(inputs, targets)
print(res)

loss_mse = MSELoss()
res_mse = loss_mse(inputs, targets)
print(res_mse)
```

```
tensor(0.6667)
tensor(1.3333)
```
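Both losses average over all elements by default (reduction='mean'), and reduction='sum' adds the per-element values instead. The plain-Python sketch below works on 1-D inputs and covers only these two reductions (PyTorch also offers 'none'). The helpers are hypothetical, and they match the values printed above.

```python
def _reduce(values, reduction):
    if reduction == "mean":
        return sum(values) / len(values)
    if reduction == "sum":
        return sum(values)
    raise ValueError("this sketch only supports 'mean' and 'sum'")


def l1_loss(inputs, targets, reduction="mean"):
    return _reduce([abs(x - y) for x, y in zip(inputs, targets)], reduction)


def mse_loss(inputs, targets, reduction="mean"):
    return _reduce([(x - y) ** 2 for x, y in zip(inputs, targets)], reduction)


inputs = [1.0, 2.0, 5.0]
targets = [1.0, 2.0, 3.0]
print(l1_loss(inputs, targets))          # 0.666...  (|5 - 3| / 3)
print(l1_loss(inputs, targets, "sum"))   # 2.0
print(mse_loss(inputs, targets))         # 1.333...  ((5 - 3)^2 / 3)
```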

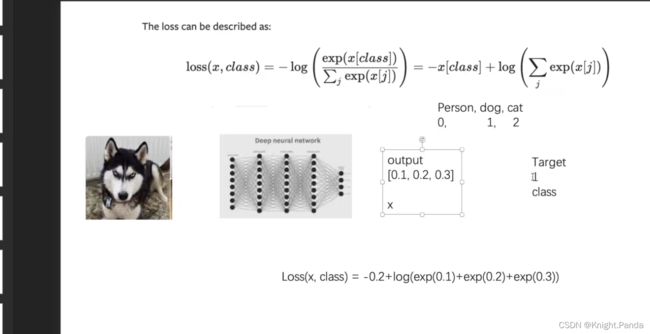

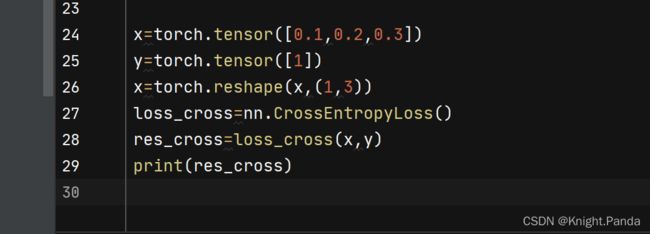

CrossEntropyLoss (cross-entropy)
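Given raw scores (logits) x and a target class index, `CrossEntropyLoss` computes loss(x, class) = -x[class] + log(sum_j exp(x[j])). This amounts to a softmax followed by the negative log-likelihood, and by default the result is averaged over the batch. That is why the model output, shape [64, 10], can be passed in directly alongside targets of shape [64]. The plain-Python sketch below uses hypothetical helpers and the usual max-subtraction trick for numerical stability.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of one sample: raw scores plus the index of the true class."""
    m = max(logits)
    # log(sum(exp(x_j))) computed stably by factoring out exp(m)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return -logits[target] + log_sum_exp


def mean_cross_entropy(batch_logits, targets):
    """Default reduction: average the per-sample losses."""
    losses = [cross_entropy(l, t) for l, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


print(round(cross_entropy([0.1, 0.2, 0.3], 1), 4))  # 1.1019
```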

backward

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


model = Model()
loss_cross = nn.CrossEntropyLoss()
for data in dataloader:
    imgs, targets = data
    output = model(imgs)
    print(output.shape)  # [batch, 10] raw scores
    loss = loss_cross(output, targets)
    print(loss)
    loss.backward()      # fills .grad of every parameter
    print("ok")
```

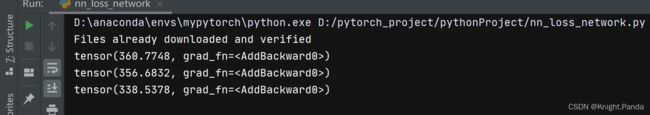

Optimizer

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


model = Model()
loss_cross = nn.CrossEntropyLoss()
optim = torch.optim.SGD(model.parameters(), lr=0.01)

for epoch in range(20):
    run_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        output = model(imgs)
        loss = loss_cross(output, targets)
        optim.zero_grad()  # clear gradients left over from the previous step
        loss.backward()    # compute new gradients
        optim.step()       # update the parameters
        # .item() gives a plain float, so the autograd graph is not kept alive
        run_loss = run_loss + loss.item()
    print(run_loss)
```
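Without momentum or weight decay, `torch.optim.SGD` applies the update w <- w - lr * grad to every parameter inside `optim.step()`. `optim.zero_grad()` is required because `backward()` adds into the existing `.grad` rather than replacing it. The plain-Python sketch below shows both points on a one-parameter function. The helpers are hypothetical and are not the PyTorch implementation.

```python
def sgd_minimize(grad_fn, w0, lr=0.1, steps=100):
    """Plain SGD: repeat w <- w - lr * grad."""
    w = w0
    for _ in range(steps):
        grad = grad_fn(w)  # plays the role of loss.backward()
        w = w - lr * grad  # plays the role of optim.step()
    return w


def accumulated_grad(grad_fn, w, n_backward_calls):
    """Without zero_grad(), each backward() call adds into the stored gradient."""
    g = 0.0
    for _ in range(n_backward_calls):
        g += grad_fn(w)
    return g


# minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
grad_f = lambda w: 2 * (w - 3)
w = sgd_minimize(grad_f, w0=0.0, lr=0.1, steps=100)
print(w)                               # very close to 3.0
print(accumulated_grad(grad_f, 0.0, 3))  # -18.0 instead of -6.0
```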

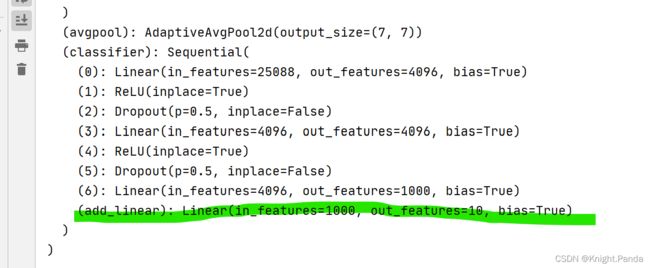

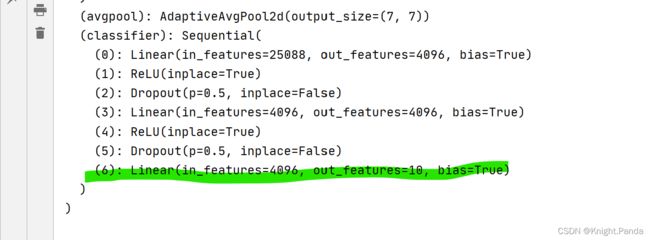

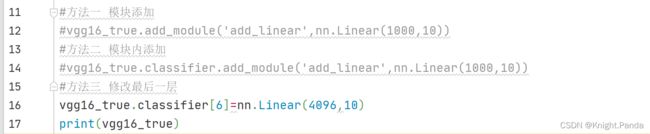

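Using and modifying existing network models

Method 1: append a new module to the end of the whole model

```python
# vgg16_true = torchvision.models.vgg16(pretrained=True)
vgg16_true.add_module('add_linear', nn.Linear(1000, 10))
print(vgg16_true)
```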

```
Files already downloaded and verified
VGG(
  (features): Sequential(
    (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU(inplace=True)
    (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (3): ReLU(inplace=True)
    (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (6): ReLU(inplace=True)
    (7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (8): ReLU(inplace=True)
    (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (11): ReLU(inplace=True)
    (12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (13): ReLU(inplace=True)
    (14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (15): ReLU(inplace=True)
    (16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (18): ReLU(inplace=True)
    (19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (20): ReLU(inplace=True)
    (21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (22): ReLU(inplace=True)
    (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (25): ReLU(inplace=True)
    (26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (27): ReLU(inplace=True)
    (28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (29): ReLU(inplace=True)
    (30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (avgpool): AdaptiveAvgPool2d(output_size=(7, 7))
  (classifier): Sequential(
    (0): Linear(in_features=25088, out_features=4096, bias=True)
    (1): ReLU(inplace=True)
    (2): Dropout(p=0.5, inplace=False)
    (3): Linear(in_features=4096, out_features=4096, bias=True)
    (4): ReLU(inplace=True)
    (5): Dropout(p=0.5, inplace=False)
    (6): Linear(in_features=4096, out_features=1000, bias=True)
  )
  (add_linear): Linear(in_features=1000, out_features=10, bias=True)
)
```

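Method 2: add the layer inside an existing sub-module (here `classifier`)

```python
vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))
print(vgg16_true)
```

Method 3: replace the last layer

```python
# apply this to a fresh vgg16: after method 2, add_linear would expect 1000 inputs, not 10
# classifier[6] was Linear(4096, 1000); swap in a 10-class output layer
vgg16_true.classifier[6] = nn.Linear(4096, 10)
```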

Saving and loading models

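```python
import torchvision
import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
# from nn_loss_network import *

# newer torchvision versions replace pretrained=True with the weights=... argument
vgg16_true = torchvision.models.vgg16(pretrained=True)

# Method 1: save the whole model (structure + parameters)
torch.save(vgg16_true, "vgg_model.pth")
# loading for method 1 (PyTorch 2.6+ needs torch.load(..., weights_only=False) for whole models)
model = torch.load("vgg_model.pth")
# print(model)

# Method 2: save only the parameters, as a dict (state_dict)
torch.save(vgg16_true.state_dict(), "vgg_model2.pth")
model2 = torch.load("vgg_model2.pth")
# print(model2)  # just a dict of tensors, no network structure

# rebuild the network, then load the saved parameters into it
vgg = torchvision.models.vgg16(pretrained=False)
vgg.load_state_dict(model2)
# print(vgg)

# Pitfall 1: loading a custom model saved with method 1 needs its class definition
# 1) either import the file that defines it: from nn_loss_network import *
# 2) or copy the network definition into this script, as below
class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


# "model.pth": a Model instance saved earlier with torch.save(model, "model.pth")
model3 = torch.load("model.pth")
print(model3)
```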

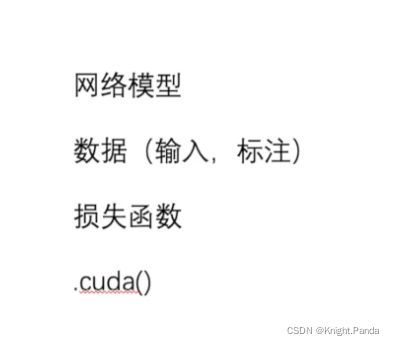

A complete model-training routine

model_tp.py: holds the network definition

```python
# holds the network definition
import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


if __name__ == '__main__':
    # quick shape check; runs only when this file is executed directly, not on import
    model = Model()
    input = torch.ones((64, 3, 32, 32))
    output = model(input)
    print(output.shape)
```

```python
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

from model_tp import *  # provides Model, and also torch and nn

# prepare the datasets
train_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=True,
                                             transform=torchvision.transforms.ToTensor(), download=True)
test_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False,
                                            transform=torchvision.transforms.ToTensor(), download=True)

train_dataset_size = len(train_dataset)
test_dataset_size = len(test_dataset)
print("Training set size: {}".format(train_dataset_size))
print("Test set size: {}".format(test_dataset_size))
# Training set size: 50000
# Test set size: 10000

# load the datasets with DataLoader
train_dataloader = DataLoader(train_dataset, batch_size=64)
test_dataloader = DataLoader(test_dataset, batch_size=64)

# the network is defined in model_tp.py
# create the model
model = Model()

# loss function
loss_fn = nn.CrossEntropyLoss()

# optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of evaluation rounds so far
epoch = 10            # number of epochs
```

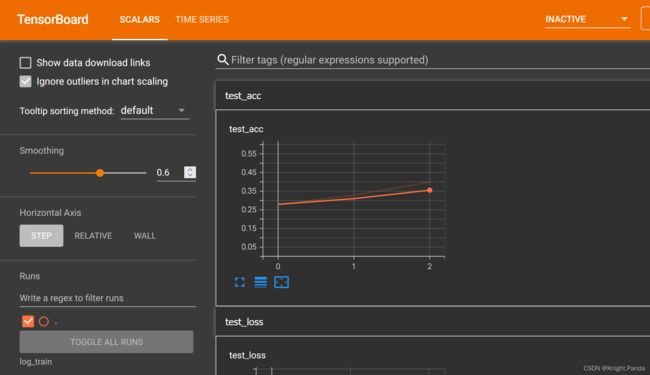

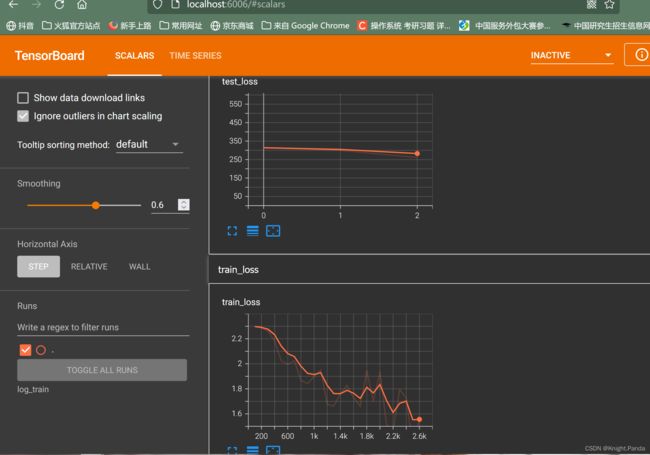

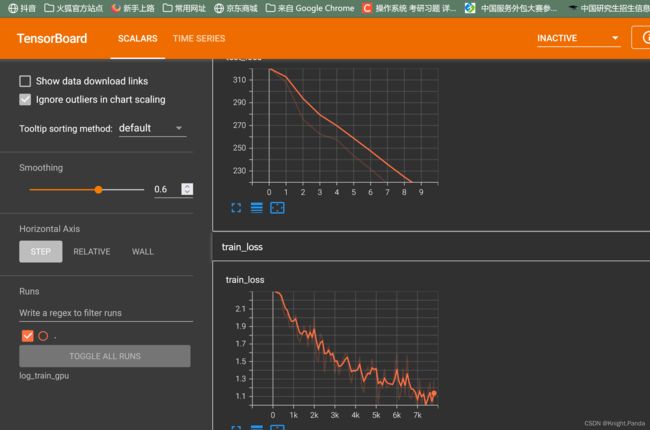

# 添加tensorboard

writer=SummaryWriter("log_train")

for i in range(epoch):

print("=========第{}轮训练========".format(i+1))

#训练步骤开始

for data in train_dataloader:

imgs,targets=data

output=model(imgs)

loss=loss_fn(output,targets)

#优化器优化模型

optimizer.zero_grad()

loss.backward()

optimizer.step()

total_train_step=total_train_step+1

if(total_train_step%100==0):

print("训练次数:{},Loss:{}".format(total_train_step,loss.item()))

writer.add_scalar("train_loss",loss.item(),total_train_step)

#测试步骤

total_test_loss=0

total_accuracy=0

with torch.no_grad():

for data in test_dataloader:

imgs,targets=data

output=model(imgs)

loss=loss_fn(output,targets)

total_test_loss=total_test_loss+loss.item()

accuracy=(output.argmax(1)==targets).sum()

total_accuracy=total_accuracy+accuracy

print("整体测试集上的Loss{}".format(total_test_loss))

print("整体测试集上的Accuracy{}".format(total_accuracy/test_dataset_size))

writer.add_scalar("test_loss",total_test_loss,total_test_step)

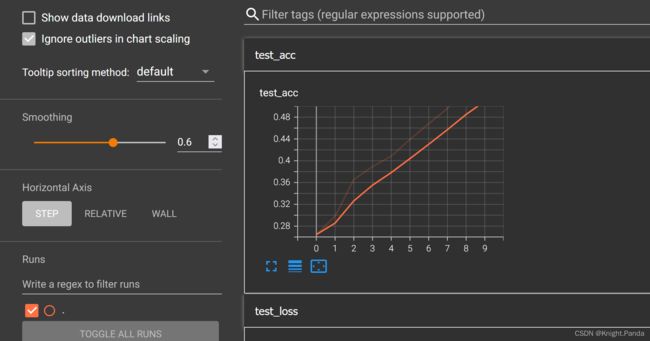

writer.add_scalar("test_acc",total_accuracy/test_dataset_size,total_test_step)

total_test_step=total_test_step+1

torch.save(model,"model_{}.pth".format(i))

print("模型已保存~")

writer.close()
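`output.argmax(1)` takes each row of the [batch, 10] output and returns the index of its highest score, which is the predicted class. Comparing those indices with `targets` and summing the matches gives the number of correct predictions. The plain-Python sketch below repeats that accuracy computation with hypothetical helpers.

```python
def argmax(row):
    """Index of the largest value (the first one if there are ties)."""
    return max(range(len(row)), key=row.__getitem__)


def count_correct(outputs, targets):
    """Same idea as (output.argmax(1) == targets).sum()."""
    return sum(1 for row, t in zip(outputs, targets) if argmax(row) == t)


outputs = [[0.1, 0.2],   # predicts class 1
           [0.3, 0.4]]   # predicts class 1
targets = [0, 1]
print(count_correct(outputs, targets) / len(targets))  # 0.5
```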

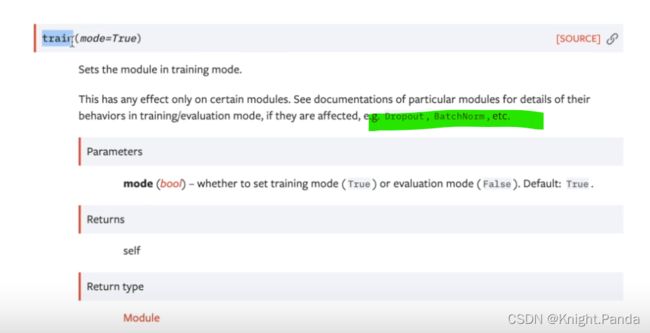

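model.train() and model.eval() switch the network between training and evaluation mode. They only change behavior for networks that contain particular layers (Dropout, BatchNorm, etc.). For this model they have no effect, but calling them is a good habit.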

Training on the GPU

`nvidia-smi` shows GPU information.

.cuda()

```python
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from model_tp import *  # provides Model, and also torch and nn
import time

# prepare the datasets
train_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=True,
                                             transform=torchvision.transforms.ToTensor(), download=True)
test_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False,
                                            transform=torchvision.transforms.ToTensor(), download=True)
train_dataset_size = len(train_dataset)
test_dataset_size = len(test_dataset)
print("Training set size: {}".format(train_dataset_size))
print("Test set size: {}".format(test_dataset_size))
# Training set size: 50000
# Test set size: 10000

# load the datasets with DataLoader
train_dataloader = DataLoader(train_dataset, batch_size=64)
test_dataloader = DataLoader(test_dataset, batch_size=64)

# create the model (defined in model_tp.py)
model = Model()
if torch.cuda.is_available():
    model = model.cuda()  # @@
    print("cuda is available!")

# loss function
loss_fn = nn.CrossEntropyLoss()
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()  # @@

# optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of evaluation rounds so far
epoch = 10            # number of epochs

# add TensorBoard
writer = SummaryWriter("log_train_gpu")
start_time = time.time()

for i in range(epoch):
    print("========= Epoch {} =========".format(i + 1))

    # training step
    for data in train_dataloader:
        imgs, targets = data
        if torch.cuda.is_available():
            imgs = imgs.cuda()  # @@
            targets = targets.cuda()  # @@
        output = model(imgs)
        loss = loss_fn(output, targets)

        # let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Elapsed: {:.1f}s".format(time.time() - start_time))  # compare CPU vs GPU speed
            print("Train step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # evaluation step
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            if torch.cuda.is_available():
                imgs = imgs.cuda()  # @@
                targets = targets.cuda()  # @@
            output = model(imgs)
            loss = loss_fn(output, targets)
            total_test_loss = total_test_loss + loss.item()
            accuracy = (output.argmax(1) == targets).sum().item()
            total_accuracy = total_accuracy + accuracy

    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / test_dataset_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_acc", total_accuracy / test_dataset_size, total_test_step)
    total_test_step = total_test_step + 1

    torch.save(model, "model_{}.pth".format(i))
    print("Model saved")

writer.close()
```

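Note: for an nn.Module, `model.cuda()` moves the parameters in place, so the reassignment in `model = model.cuda()` is optional (the same holds for `loss_fn`). Tensors are different: `imgs.cuda()` returns a new tensor, so `imgs = imgs.cuda()` must be reassigned.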

.to(device)

```python
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from model_tp import *  # provides Model, and also torch and nn
import time

# the training device: the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# prepare the datasets
train_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=True,
                                             transform=torchvision.transforms.ToTensor(), download=True)
test_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False,
                                            transform=torchvision.transforms.ToTensor(), download=True)
train_dataset_size = len(train_dataset)
test_dataset_size = len(test_dataset)
print("Training set size: {}".format(train_dataset_size))
print("Test set size: {}".format(test_dataset_size))
# Training set size: 50000
# Test set size: 10000

# load the datasets with DataLoader
train_dataloader = DataLoader(train_dataset, batch_size=64)
test_dataloader = DataLoader(test_dataset, batch_size=64)

# create the model (defined in model_tp.py)
model = Model()
model = model.to(device)

# loss function
loss_fn = nn.CrossEntropyLoss()
loss_fn = loss_fn.to(device)

# optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of evaluation rounds so far
epoch = 10            # number of epochs

# add TensorBoard
writer = SummaryWriter("log_train_gpu_2")
start_time = time.time()

for i in range(epoch):
    print("========= Epoch {} =========".format(i + 1))

    # training step
    for data in train_dataloader:
        imgs, targets = data
        imgs = imgs.to(device)  # @@
        targets = targets.to(device)  # @@
        output = model(imgs)
        loss = loss_fn(output, targets)

        # let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Elapsed: {:.1f}s".format(time.time() - start_time))
            print("Train step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # evaluation step
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            imgs = imgs.to(device)  # @@
            targets = targets.to(device)  # @@
            output = model(imgs)
            loss = loss_fn(output, targets)
            total_test_loss = total_test_loss + loss.item()
            accuracy = (output.argmax(1) == targets).sum().item()
            total_accuracy = total_accuracy + accuracy

    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / test_dataset_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_acc", total_accuracy / test_dataset_size, total_test_step)
    total_test_step = total_test_step + 1

    # torch.save(model, "model_{}.pth".format(i))
    # print("Model saved")

writer.close()
```


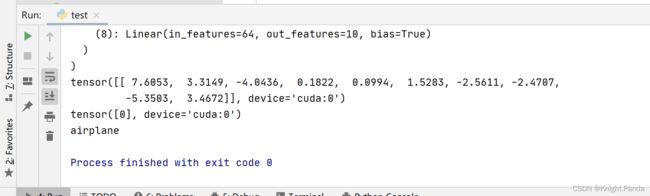

Model validation (inference) routine

test.py

```python
import torchvision
from PIL import Image
from torchvision.transforms.functional import to_pil_image
from model_tp import *
from torchvision.transforms import ToPILImage

img_path = "./img/feiji.png"  # "feiji" = airplane
image = Image.open(img_path)
print(image)
# image.show()

#
```