KinectV2.0 VS2019 Configuration Notes

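Contents

- OpenCV

- VS2019 Configuration (a more portable configuration is given below)

- Basic Kinect Operations

- A More Portable Configuration

- Linking with a Raspberry Pi Using pthread Multithreading

- Upper Computer (PC)

- Upper Computer Environment Requirements (configuring inc, lib, dll, etc.):

- Upper Computer Code:

- Lower Computer (Raspberry Pi)

- Lower Computer Configuration:

- Lower Computer Code: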

Notes from debugging Kinect in VS2019.

Reference: https://blog.csdn.net/baolinq/article/details/52373574

OpenCV

Download OpenCV and run the .exe to extract it to a local folder.

VS2019 Configuration (a more portable configuration is given below)

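- Project -> Properties -> Configuration Manager -> set the platform to x64

- VC++ Directories -> Include Directories -> add:

C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\inc

E:\Download\IDM\opencv\build\include

E:\Download\IDM\opencv\build\include\opencv2

- VC++ Directories -> Library Directories -> add:

C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\Lib\x64 (note: this must be the x64 folder)

E:\Download\IDM\opencv\build\x64\vc15\lib

- Linker -> Input -> Additional Dependencies

Enter kinect20.lib;opencv_world450d.lib (opencv_world450d.lib is the Debug library; a Release build links opencv_world450.lib instead)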

This setting is equivalent to writing the preprocessor directive #pragma comment(lib, "xxx.lib") in the source code.

- Copy opencv_videoio_ffmpeg450_64.dll, opencv_world450.dll and opencv_world450d.dll from the OpenCV install directory (E:\Download\IDM\opencv\build\x64\vc15\bin) to C:\Windows\System32.

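I later learned that a .dll is a dynamic-link library; before this I did not really understand how compiling and running a program work. Instead of copying the DLLs into System32, you can also add this bin folder to the PATH environment variable, or put the DLLs in the Debug directory (the same directory as the executable). Roughly, dynamic linking works like this: the .h file declares the functions; the linker resolves those functions against the .lib import library (the build ends here, and the rest happens at run time); the import library records which .dll provides each function, and when the program starts, the loader finds and loads that .dll, which contains the actual definitions and implementations. I had guessed that the DLL search order was System32, then PATH, then the .exe's folder. In fact, for a desktop application with SafeDllSearchMode enabled (the default), Windows searches the directory the application was loaded from first, then the system directory (System32), the 16-bit system directory, the Windows directory, the current directory, and finally the directories listed in PATH. If the DLL is not found anywhere, the program fails to start with an error.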
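To make the search order concrete, here is a small Python model of the default lookup for a desktop application with SafeDllSearchMode enabled, following Microsoft's "Dynamic-link library search order" documentation. It is only a sketch: it ignores KnownDLLs, DLLs already loaded into the process and other redirection mechanisms, and the function names, folder names and the `present` dictionary are hypothetical stand-ins for what is actually on disk.

```python
import ntpath  # Windows path rules, usable on any OS


def search_order(app_dir, cwd, path_dirs, windows_dir="C:\\Windows"):
    """Directories searched, in order, when a program loads a DLL by name
    (desktop app, SafeDllSearchMode enabled; KnownDLLs and already-loaded
    modules are ignored in this model)."""
    return [
        app_dir,                               # 1. folder the .exe was loaded from
        ntpath.join(windows_dir, "System32"),  # 2. system directory
        ntpath.join(windows_dir, "System"),    # 3. 16-bit system directory
        windows_dir,                           # 4. Windows directory
        cwd,                                   # 5. current working directory
        *path_dirs,                            # 6. PATH entries, left to right
    ]


def resolve(dll, dirs, present):
    """Return the first directory in dirs whose file set contains dll, or None.

    present maps a directory to the lower-cased file names it contains;
    matching is case-insensitive, like the Windows file system.
    """
    for d in dirs:
        if dll.lower() in present.get(d, set()):
            return d
    return None


if __name__ == "__main__":
    exe_dir = "D:\\test\\x64\\Debug"  # hypothetical output folder
    dirs = search_order(exe_dir, exe_dir, ["E:\\Download\\IDM\\opencv\\build\\x64\\vc15\\bin"])
    # Hypothetical layout: the same DLL sits both in System32 and next to the .exe
    present = {
        "C:\\Windows\\System32": {"opencv_world450d.dll"},
        exe_dir: {"opencv_world450d.dll"},
    }
    print(resolve("opencv_world450d.dll", dirs, present))  # prints D:\test\x64\Debug
```

Because the application folder comes first, a DLL placed next to the .exe takes precedence over a copy in System32, which is what makes shipping the DLLs with the program work.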

Reference links for this part: https://www.cnblogs.com/azbane/p/7364060.html

https://www.cnblogs.com/405845829qq/p/4108450.html

You can also refer to my own summary of this topic: https://blog.csdn.net/qq_15036691/article/details/110189014

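Notes (clearing up some confusion):

1. SysWOW64 folder (WOW64 stands for "Windows 32-bit on Windows 64-bit"): on 64-bit Windows, this is where the 32-bit system files are stored.

2. System32 folder:

On 64-bit systems: stores the 64-bit program files. When a 32-bit program loads a DLL from the System32 folder, the operating system automatically redirects it to the corresponding file in the SysWOW64 folder.

On 32-bit systems: stores the 32-bit program files.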
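The redirection rule can be written down as a tiny Python function. It is a simplified model (it ignores exempted subfolders and the Sysnative alias that lets 32-bit programs reach the real System32), `system32_target` is a hypothetical name, and `struct.calcsize("P")` is just a convenient way to check whether the running Python process is itself 32- or 64-bit. For the x64 build used in this article the process is 64-bit, so it sees the DLLs copied into System32.

```python
import struct


def system32_target(os_bits, process_bits, windows_dir="C:\\Windows"):
    """Folder actually read when a process opens <windows_dir>\\System32.

    Simplified WOW64 file system redirection: only a 32-bit process on
    64-bit Windows is redirected, and it goes to SysWOW64.
    """
    if os_bits == 64 and process_bits == 32:
        return windows_dir + "\\SysWOW64"
    return windows_dir + "\\System32"


if __name__ == "__main__":
    # Pointer size in bytes * 8 = bitness of the running interpreter
    print("this Python process is", struct.calcsize("P") * 8, "bit")
    print(system32_target(64, 64))  # an x64 build, like the Kinect project here
    print(system32_target(64, 32))  # a 32-bit program on 64-bit Windows
```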

Basic Kinect Operations

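After a long time debugging, and after reading a lot of code online as well as the Kinect SDK sample source code, I have summarized the basic operations as follows:

The basic logic in Kinect, loosely speaking: the sensor mySensor provides sources such as myColorSource and myBodySource, and each source opens a reader with OpenReader, giving myColorReader, myBodyReader, and so on.

Then a frame, myBodyFrame, is acquired to capture what the reader sees at that moment, and its data is stored in the array myBodyArr for further processing.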

- Define the sensor and open it:

IKinectSensor* mySensor = nullptr;
GetDefaultKinectSensor(&mySensor);
mySensor->Open();

- Get the color image:

IColorFrameSource* myColorSource = nullptr;
mySensor->get_ColorFrameSource(&myColorSource);
IColorFrameReader* myColorReader = nullptr;
myColorSource->OpenReader(&myColorReader);
int colorHeight = 0, colorWidth = 0;
IFrameDescription* myDescription = nullptr;
myColorSource->get_FrameDescription(&myDescription);
myDescription->get_Height(&colorHeight);
myDescription->get_Width(&colorWidth);
IColorFrame* myColorFrame = nullptr;
Mat original(colorHeight, colorWidth, CV_8UC4); // define the matrix: 8-bit, 4 channels per pixel

- Get the skeleton (body) data and read the number of bodies, myBodyCount:

IBodyFrameSource* myBodySource = nullptr;
mySensor->get_BodyFrameSource(&myBodySource);
IBodyFrameReader* myBodyReader = nullptr;
myBodySource->OpenReader(&myBodyReader);
int myBodyCount = 0; // number of bodies
myBodySource->get_BodyCount(&myBodyCount);

- Create the coordinate mapper:

ICoordinateMapper* myMapper = nullptr;
mySensor->get_CoordinateMapper(&myMapper);

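- Acquire the latest frame, i.e. read the image:

IBodyFrame* myBodyFrame = nullptr;
// AcquireLatestFrame returns S_OK only when a frame is available, so check its return value,
// for example by retrying until it succeeds:
while (myBodyReader->AcquireLatestFrame(&myBodyFrame) != S_OK);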
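The `while` loop above keeps a CPU core busy until a frame arrives. A common alternative is to sleep briefly between attempts and give up after a timeout. The sketch below shows that pattern in Python; `acquire` is a hypothetical stand-in for `AcquireLatestFrame` that returns an HRESULT-like integer, and the timeout and interval values are arbitrary assumptions. In C++ the same loop would call `std::this_thread::sleep_for` (or `Sleep`) between attempts.

```python
import time

S_OK = 0  # HRESULT success value


def poll_until_ok(acquire, timeout_s=1.0, interval_s=0.005):
    """Call acquire() until it returns S_OK or timeout_s seconds pass.

    Returns True on success, False on timeout. Sleeping between attempts
    keeps the loop from occupying a whole CPU core.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if acquire() == S_OK:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


if __name__ == "__main__":
    # Hypothetical reader: "no new frame" twice (any non-S_OK value), then a frame
    results = iter([1, 1, S_OK])
    print(poll_until_ok(lambda: next(results)))  # True
```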

- Define the array that stores the body data, myBodyArr:

IBody** myBodyArr = new IBody*[myBodyCount];
for (int i = 0; i < myBodyCount; i++)
    myBodyArr[i] = nullptr;

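- Get the body skeleton data from myBodyFrame into the array myBodyArr, then copy the joints of each body in myBodyArr into the joint array myJointArr:

if (myBodyFrame->GetAndRefreshBodyData(myBodyCount, myBodyArr) == S_OK)
{
    for (int i = 0; i < myBodyCount; i++)
    {
        BOOLEAN isTracked = FALSE;
        myBodyArr[i]->get_IsTracked(&isTracked); // skip slots that hold no tracked person
        if (!isTracked)
            continue;
        Joint myJointArr[JointType_Count];
        if (myBodyArr[i]->GetJoints(JointType_Count, myJointArr) == S_OK)
        {
            // process myJointArr here
        }
    }
}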

- 关节数组

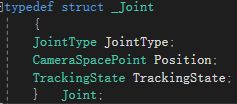

myJointArr的数据结构:

以下的枚举类型告诉了我们,方括号内填相应的枚举类型可以得到相应人体关节的关节结构体,然后调用结构体成员(如Position,即可获得位置)

myJointArr[JointType_HandRight].Position;//Example,调用右手掌心的位置坐标

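- Coordinate space conversion: joint positions are points in camera space, so they must be converted to points in color space:

ColorSpacePoint t_point;
myMapper->MapCameraPointToColorSpace(myJointArr[JointType_HandRight].Position, &t_point);
t_point.X; // horizontal pixel coordinate in the color image
t_point.Y; // vertical pixel coordinate in the color image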
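When the mapped point is used for drawing, it is convenient to round it to integer pixel indices and skip points that fall outside the 1920 x 1080 color frame (for example when a joint is outside the color camera's view). The Python sketch below does that; `to_pixel` and its `scale` parameter (for drawing on a downsized preview) are hypothetical, and treating non-finite coordinates as "not mappable" is a defensive assumption rather than something stated in this article.

```python
import math

COLOR_WIDTH, COLOR_HEIGHT = 1920, 1080  # Kinect v2 color frame size in pixels


def to_pixel(x, y, width=COLOR_WIDTH, height=COLOR_HEIGHT, scale=1.0):
    """Turn a ColorSpacePoint's (X, Y) into integer (col, row), or None if off-frame.

    scale rescales the result for drawing on a resized copy of the frame
    (e.g. 0.5 for a 960 x 540 preview).
    """
    if not (math.isfinite(x) and math.isfinite(y)):
        return None  # defensive: treat non-finite values as "could not be mapped"
    col, row = int(round(x)), int(round(y))
    if 0 <= col < width and 0 <= row < height:
        return int(col * scale), int(row * scale)
    return None


if __name__ == "__main__":
    print(to_pixel(959.6, 540.2))             # (960, 540)
    print(to_pixel(2000.0, 10.0))             # None: right of the frame
    print(to_pixel(100.0, 200.0, scale=0.5))  # (50, 100)
```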

A More Portable Configuration

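- Copy the whole opencv2 folder from (OpenCV install directory)\build\include into the .\inc folder;

copy all files under C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\inc into the .\inc folder;

copy all files under (pthreads install directory)\Pre-built.2\include into the .\inc folder.

- Copy all files under (OpenCV install directory)\build\x64\vc15\lib into the .\lib folder;

copy all files under C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\Lib\x64 into the .\lib folder;

copy all files under (pthreads install directory)\Pre-built.2\lib into the .\lib folder.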

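- In Property Manager, right-click Debug | x64, choose the first item, "Add New Project Property Sheet", and save it in .\test (i.e. the source code folder). This step makes it easy to reuse the property sheet later; it is optional.

Then double-click the newly added property sheet and set the following in its properties:

VC++ Directories -> Include Directories: add .\inc and .\inc\opencv2

VC++ Directories -> Library Directories: add .\lib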

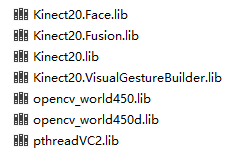

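Linker -> Input -> Additional Dependencies: add

kinect20.lib

opencv_world450d.lib

opencv_world450.lib

pthreadVC2.lib

ws2_32.lib

(ws2_32.lib is the Windows Sockets library used for the socket connection to the Raspberry Pi. opencv_world450d.lib is the Debug build of OpenCV and opencv_world450.lib the Release build; normally only the one matching the current configuration needs to be linked.)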

Alternatively, write the following directives in the code:

#pragma comment(lib, "kinect20.lib")
#pragma comment(lib, "opencv_world450d.lib")
#pragma comment(lib, "opencv_world450.lib")
#pragma comment(lib, "pthreadVC2.lib")
#pragma comment(lib, "ws2_32.lib")

The steps above are really there to make the project easy to compile on other machines.

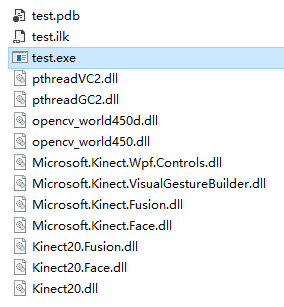

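- The next step is to put the .dll dynamic-link libraries that have the same names as the .lib import libraries into .\x64\Debug, so that running the executable on another computer does not fail with a missing-.dll error.

Assuming the program runs on a computer with VS installed (without VS you would also have to package the DLLs that VS-built programs depend on, or perhaps compile statically; I have not tried either), the DLLs to package are currently those for Kinect, OpenCV and pthread, so I collected the following DLL files from the corresponding install directories:

- With that, it should be possible to copy the whole folder to a computer with a VS environment and run the .exe directly.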
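Collecting the DLLs by hand is easy to get wrong, so the copy step can be scripted. The Python sketch below copies each named DLL from the first folder that contains it into the output folder and returns the names it could not find. The function name `bundle_dlls`, the DLL list and the folders are hypothetical; ws2_32.dll does not need copying because it is a Windows system DLL.

```python
import shutil
from pathlib import Path


def bundle_dlls(names, search_dirs, dest):
    """Copy each DLL in names from the first search dir that has it into dest.

    Returns the names that were not found anywhere, so missing files show up
    before the folder is handed to another machine.
    """
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    missing = []
    for name in names:
        for d in search_dirs:
            src = Path(d) / name
            if src.is_file():
                shutil.copy2(src, dest / name)  # copy2 also keeps timestamps
                break
        else:  # no break: the DLL was in none of the folders
            missing.append(name)
    return missing
```

For example, bundle_dlls(["opencv_world450d.dll", "opencv_videoio_ffmpeg450_64.dll", "pthreadVC2.dll"], [r"E:\Download\IDM\opencv\build\x64\vc15\bin", pthread_dll_dir], r".\x64\Debug"), where pthread_dll_dir is wherever pthreadVC2.dll sits in your pthreads install; a non-empty return value lists the DLLs that are still missing.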

Linking with a Raspberry Pi Using pthread Multithreading

Upper Computer (PC)

Upper Computer Environment Requirements (configuring inc, lib, dll, etc.):

(x64)

opencv

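pthread (the first build may fail because struct timespec is defined twice: Visual Studio 2015 and later already define it in time.h. Fix it by editing pthread.h as follows.)

Below

#if !defined( PTHREAD_H )
#define PTHREAD_H

add

#define HAVE_STRUCT_TIMESPEC

Kinect for Windows SDK 2.0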

Upper Computer Code:

#include

Lower Computer (Raspberry Pi)

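Lower Computer Configuration:

XiaoR Technology (小R科技) Raspberry Pi robot car

Communication uses TCP sockets.

When running, start the script on the lower computer (the server side) first.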
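The server script below reads the first two bytes of each message as a command code (00 to 04 drive the wheels; 10, 11/21, 13/23 and 14/24 move servos) and tries to parse bytes 2 to 5 as an integer argument, falling back to 0. Since the upper computer code is not reproduced here, the Python sketch below shows one way a client could produce messages in that format, plus a mirror of the server's parsing for testing. The fixed 6-byte layout, the helper names and the constants are assumptions for illustration; the host and port come from the server script.

```python
import socket

# Command codes understood by the Raspberry Pi server script
STOP, LEFT, RIGHT, FORWARD, BACK = b"00", b"01", b"02", b"03", b"04"
ARM_UP, ARM_DOWN = b"11", b"21"


def build_command(code, value=0):
    """Pack a 2-byte command code and a 0-9999 argument as b"CCNNNN"."""
    if len(code) != 2:
        raise ValueError("command code must be exactly 2 bytes")
    if not 0 <= value <= 9999:
        raise ValueError("argument must fit in 4 decimal digits")
    return code + b"%04d" % value


def parse_command(msg):
    """Mirror of the server: (msg[0:2], int(msg[2:6])), argument 0 if not numeric."""
    try:
        value = int(msg[2:6])
    except ValueError:
        value = 0
    return msg[0:2], value


def connect(host="192.168.1.1", port=2222, timeout=2.0):
    """Open a TCP connection to the car (address and port from the server script)."""
    return socket.create_connection((host, port), timeout=timeout)


def send_command(sock, code, value=0):
    """Send one command over an already connected socket."""
    sock.sendall(build_command(code, value))


if __name__ == "__main__":
    print(build_command(ARM_UP, 15))  # b'110015'
    print(parse_command(b"110015"))   # (b'11', 15)
    # Real use needs the car on the network:
    # with connect() as sock:
    #     send_command(sock, FORWARD)
```

Because TCP is a byte stream, two commands sent in quick succession can arrive in a single recv() call, and the server as written only acts on the first one; pausing briefly between commands, or having the server split its buffer into 6-byte pieces, avoids that.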

Lower Computer Code:

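# encoding: utf-8
import chardet  # only needed for the commented-out encoding check in the loop
from motol_motion import *  # motion_initial, stop, left_motol, right_motol, set_angle, XRservo
import socket
import time
import os
import subprocess

print("Server Started.")
# Create the TCP socket
mySocket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
# Set the IP and port
host = '192.168.1.1'
port = 2222
# Allow quick restarts on the same port, then bind and listen
mySocket.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
mySocket.bind((host, port))
mySocket.listen(10)
motion_initial()
angle = [0, 130, 175, 90, 100, 0, 0, 90, 0]  ## initial angles, indexed by servo number
XRservo.XiaoRGEEK_ReSetServo()  ## reset the servo angles
try:
    # os.system("cd ...") would only change the directory of a throwaway shell,
    # so change this process's working directory instead
    os.chdir(os.path.expanduser("~/work/mjpg-streamer-experimental-xr"))
    # Start the camera stream without blocking; os.system would wait until mjpg_streamer exits
    subprocess.Popen(["./mjpg_streamer", "-i", "./input_raspicam.so", "-o", "./output_http.so -w ./www"])
except OSError:
    print("video was opened or failed")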

while True:
    # Accept a client connection
    print("Wait For Connect....")
    client, address = mySocket.accept()
    print("Connected.")
    print("IP is %s" % address[0])
    print("port is %d\n" % address[1])
    while True:
        # Read a message; recv() returns b"" once the client has disconnected
        msg = client.recv(1024)
        if not msg:
            client.close()
            break  # go back to waiting for a new connection
        stop()
        # Decode the received bytes for display
        print(msg.decode('utf-8', errors='replace'))
        ##print(chardet.detect(msg))
        msg1 = msg[0:2]  ## xx: 2-character command code
        try:
            msg2 = int(msg[2:6])  ## xxxx: numeric argument
        except ValueError:
            msg2 = 0
        #print("message received")
        if msg == b"hello":
            print("im server")
        elif msg == b"1234":
            print("re1234")
        elif msg1 == b"00":  ## 00: stop
            right_motol(0)
            left_motol(0)
        elif msg1 == b"01":  ## 01: left
            left_motol(-1)
            right_motol(1)
            time.sleep(0.02)
        elif msg1 == b"02":  ## 02: right
            right_motol(-1)
            left_motol(1)
            time.sleep(0.02)
        elif msg1 == b"03":  ## 03: go
            right_motol(1)
            left_motol(1)
            time.sleep(0.02)
            angle[7] = 180
            angle[8] = 25
            set_angle(0x07, angle[7])
            set_angle(0x08, angle[8])
        elif msg1 == b"04":  ## 04: back
            right_motol(-1)
            left_motol(-1)
            time.sleep(0.02)
            angle[7] = 0
            angle[8] = 25
            set_angle(0x07, angle[7])
            set_angle(0x08, angle[8])
        elif msg1 == b"10":
            reset_angle()
        elif msg1 == b"11":  ## up (increase) servos 1 & 2; servo 1: up 130, low 45; servo 2: up 175, low 135
            if angle[2] == 175:  ## servo 2 fully up: move servo 1
                if angle[1] + msg2 < 130:
                    angle[1] = angle[1] + msg2
                else:
                    angle[1] = 130
                angle[8] = 10 + 40 * (angle[1] - 45) / 85
            else:
                if angle[2] + msg2 < 175:
                    angle[2] = angle[2] + msg2
                else:
                    angle[2] = 175
                angle[8] = (angle[2] - 135) / 4
            angle[7] = 180
            set_angle(0x08, angle[8])
            set_angle(0x07, angle[7])
            set_angle(0x01, angle[1])
            set_angle(0x02, angle[2])
        elif msg1 == b"21":  ## down (decrease) servos 1 & 2
            if angle[1] == 45:  ## servo 1 fully down: move servo 2
                if angle[2] - msg2 > 135:
                    angle[2] = angle[2] - msg2
                else:
                    angle[2] = 135
                angle[8] = (angle[2] - 135) / 4
            else:
                if angle[1] - msg2 > 45:
                    angle[1] = angle[1] - msg2
                else:
                    angle[1] = 45
                angle[8] = 10 + 40 * (angle[1] - 45) / 85
            angle[7] = 180
            set_angle(0x08, angle[8])
            set_angle(0x07, angle[7])
            set_angle(0x01, angle[1])
            set_angle(0x02, angle[2])
        elif msg1 == b"13":  ## left (decrease) servo 3
            if (90 - msg2) >= 0:
                angle[3] = 90 - msg2
            else:
                angle[3] = 0
            set_angle(0x03, angle[3])
        elif msg1 == b"23":  ## right (increase) servo 3
            if (90 + msg2) <= 180:
                angle[3] = 90 + msg2
            else:
                angle[3] = 180
            set_angle(0x03, angle[3])
        elif msg1 == b"14":  ## he (close): servo 4 to 150, range 100~150
            angle[4] = 150
            set_angle(0x04, angle[4])
        elif msg1 == b"24":  ## zhang (open): servo 4 to 100
            angle[4] = 100
            set_angle(0x04, angle[4])
        elif msg == b"qq":
            client.close()
            mySocket.close()
            print("end\n")
            raise SystemExit
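The "11" and "21" branches treat servos 1 and 2 as one lifting chain: raising moves servo 2 up to 175 before servo 1 moves (capped at 130), lowering moves servo 1 down to 45 before servo 2 moves (floored at 135), and servo 8 is tilted according to whichever servo just moved. Pulled out into pure functions, that logic can be checked without the car. The function names are hypothetical; the limits and formulas are copied from the script, and under Python 3 the `/` division makes the tilt a float.

```python
ARM1_MIN, ARM1_MAX = 45, 130   # servo 1 limits (from the script's comment)
ARM2_MIN, ARM2_MAX = 135, 175  # servo 2 limits


def arm_up(a1, a2, step):
    """'11' command: return new (servo 1, servo 2, servo 8 tilt)."""
    if a2 == ARM2_MAX:                 # servo 2 already fully up: raise servo 1
        a1 = min(a1 + step, ARM1_MAX)
        tilt = 10 + 40 * (a1 - ARM1_MIN) / 85
    else:                              # otherwise raise servo 2 first
        a2 = min(a2 + step, ARM2_MAX)
        tilt = (a2 - ARM2_MIN) / 4
    return a1, a2, tilt


def arm_down(a1, a2, step):
    """'21' command: return new (servo 1, servo 2, servo 8 tilt)."""
    if a1 == ARM1_MIN:                 # servo 1 already fully down: lower servo 2
        a2 = max(a2 - step, ARM2_MIN)
        tilt = (a2 - ARM2_MIN) / 4
    else:                              # otherwise lower servo 1 first
        a1 = max(a1 - step, ARM1_MIN)
        tilt = 10 + 40 * (a1 - ARM1_MIN) / 85
    return a1, a2, tilt


if __name__ == "__main__":
    a1, a2 = 130, 175                  # initial angles from the script
    for step in (85, 20, 20):
        a1, a2, tilt = arm_down(a1, a2, step)
        print(a1, a2, tilt)            # 45 175 10.0, then 45 155 5.0, then 45 135 0.0
```

Note that both formulas give a tilt of 10 at the hand-over point (servo 1 at 45, servo 2 at 175), so the camera moves continuously as the arm passes from one servo to the other.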

# motol_motion.py
import RPi.GPIO as GPIO
from smbus import SMBus

# I2C bus 1; the XiaoRGEEK_* servo methods are not part of the standard smbus module,
# presumably they come from the car vendor's customized version
XRservo = SMBus(1)

def set_angle(index, angle):
    # Set servo number `index` to `angle` degrees
    XRservo.XiaoRGEEK_SetServo(index, angle)

GPIO.setmode(GPIO.BCM)
GPIO.setwarnings(False)

# Motor driver pins (BCM numbering): ENA/IN1/IN2 drive the left motor, ENB/IN3/IN4 the right motor
ENA = 13
ENB = 20
IN1 = 19
IN2 = 16
IN3 = 21
IN4 = 26

def motion_initial():
    # Configure every driver pin as an output, starting low (motors off)
    for pin in (ENA, ENB, IN1, IN2, IN3, IN4):
        GPIO.setup(pin, GPIO.OUT, initial=GPIO.LOW)

motion_initial()

def gogo():
    ##print("all go")
    GPIO.output(ENA, True)
    GPIO.output(IN1, True)
    GPIO.output(IN2, False)
    GPIO.output(ENB, True)
    GPIO.output(IN3, True)
    GPIO.output(IN4, False)

def back():
    ##print("all back")
    GPIO.output(ENA, True)
    GPIO.output(IN1, False)
    GPIO.output(IN2, True)
    GPIO.output(ENB, True)
    GPIO.output(IN3, False)
    GPIO.output(IN4, True)

def stop():
    ##print("all stop")
    GPIO.output(ENA, False)
    GPIO.output(IN1, False)
    GPIO.output(IN2, False)
    GPIO.output(ENB, False)
    GPIO.output(IN3, False)
    GPIO.output(IN4, False)

def left_motol(x):  ## 0: stop, 1: forward, -1: back
    GPIO.output(ENA, x != 0)  # enable for any non-zero command (avoids passing -1 to GPIO.output)
    if x == 0:
        GPIO.output(IN1, 0)
        GPIO.output(IN2, 0)
        ##print("left stop")
    elif x == 1:
        GPIO.output(IN1, 1)
        GPIO.output(IN2, 0)
        ##print("left go")
    else:
        GPIO.output(IN1, 0)
        GPIO.output(IN2, 1)
        ##print("left back")

def right_motol(x):  ## 0: stop, 1: forward, -1: back
    GPIO.output(ENB, x != 0)  # enable for any non-zero command (avoids passing -1 to GPIO.output)
    if x == 0:
        GPIO.output(IN3, 0)
        GPIO.output(IN4, 0)
        ##print("right stop")
    elif x == 1:
        GPIO.output(IN3, 1)
        GPIO.output(IN4, 0)
        ##print("right go")
    else:
        GPIO.output(IN3, 0)
        GPIO.output(IN4, 1)
        ##print("right back")
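left_motol and right_motol, together with the server's 00 to 04 branches, amount to a small truth table for the two-motor (differential) drive: the car turns by running one wheel backward and the other forward. Writing the table as data makes it easy to check without a Raspberry Pi. This is a sketch: the table and function names are hypothetical, and it assumes the enable pin is driven high for any non-zero command.

```python
# (enable, first input, second input) written by left_motol/right_motol for x in {0, 1, -1}:
# ENA/IN1/IN2 for the left motor, ENB/IN3/IN4 for the right motor
MOTOR_LEVELS = {0: (0, 0, 0), 1: (1, 1, 0), -1: (1, 0, 1)}

# Server command code -> (left_motol argument, right_motol argument)
DRIVE = {
    b"00": (0, 0),    # stop
    b"01": (-1, 1),   # left: left wheel back, right wheel forward
    b"02": (1, -1),   # right: left wheel forward, right wheel back
    b"03": (1, 1),    # forward
    b"04": (-1, -1),  # back
}


def pin_levels(code):
    """Return ((ENA, IN1, IN2), (ENB, IN3, IN4)) for a drive command code."""
    left, right = DRIVE[code]
    return MOTOR_LEVELS[left], MOTOR_LEVELS[right]


if __name__ == "__main__":
    for code in sorted(DRIVE):
        print(code, pin_levels(code))
```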

(To be continued...)