一文看懂pytorch转换ONNX再转换TenserRT

目录

一、运行环境

二、安装CUDA环境

三、安装TensorRT

四、代码验证

一、运行环境

系统:Ubuntu 18.04.4

CUDA:cuda_11.0.2_450.51.05_linux

cuDNN:cudnn-11.1-linux-x64-v8.0.5.39

显卡驱动版本:450.80.02

TensorRT:TensorRT-8.4.0.6.Linux.x86_64-gnu.cuda-11.6.cudnn8.3

二、安装CUDA环境

Ubuntu安装CUDA和cuDNN_小殊小殊的博客-CSDN博客一、本文使用的环境系统:Ubuntu 18.04.4CUDA:cuda_11.1.0_455.23.05_linuxcuDNN:cudnn-11.1-linux-x64-v8.0.5.39显卡驱动版本:470.103.01二、安装CUDA1.下载:CUDA Toolkit 11.1.0 | NVIDIA Developer2.执行cuda_11.1.0_455.23.05_linux.run,注意不安装驱动,假设安装到默认目录/usr/local3. vi ~/.bashrchttps://blog.csdn.net/xian0710830114/article/details/124046512

三、安装TensorRT

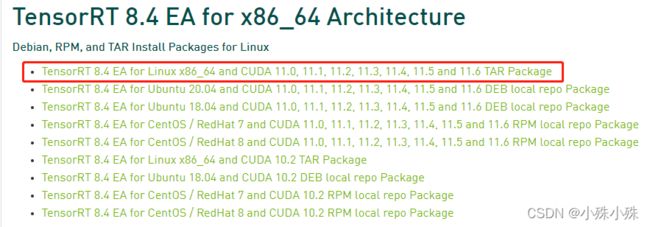

1.下载地址: https://developer.nvidia.com/nvidia-tensorrt-8x-download,一定要下载TAR版本的

2.安装

tar zxvf TensorRT-8.4.0.6.Linux.x86_64-gnu.cuda-11.6.cudnn8.3.tar.gz

cd TensorRT-8.4.0.6/python

# 根据自己的python版本选择

pip install tensorrt-8.4.0.6-cp37-none-linux_x86_64.whl

cd ../graphsurgeon

pip install graphsurgeon-0.4.5-py2.py3-none-any.whl3.配置环境变量,将/data/setup/TensorRT-8.4.0.6/lib加入环境变量

vi ~/.bashrc四、代码验证

我用一个简单的facenet做例子,将pytorch转ONNX再转TensorRT,在验证的时候顺便跑了一下速度,可以看到ONNX速度比pytorch快一倍,TensorRT比ONNX快一倍,好像TensorRT没有传的这么神,我想应该还可以优化。

import torch

from torch.autograd import Variable

import onnx

import traceback

import os

import tensorrt as trt

from torch import nn

# import utils.tensortrt_util as trtUtil

# import pycuda.autoinit

import pycuda.driver as cuda

import cv2

import numpy as np

import onnxruntime

import time

from nets.facenet import Facenet

print(torch.__version__)

print(onnx.__version__)

def torch2onnx(src_path, target_path):

'''

pytorch转换onnx

:param src_path:

:param target_path:

:return:

'''

input_name = ['input']

output_name = ['output']

# input = Variable(torch.randn(1, 3, 32, 32)).cuda()

# model = torchvision.models.resnet18(pretrained=True).cuda()

input = Variable(torch.randn(1, 3, 160, 160))

model = Facenet(backbone="inception_resnetv1", mode="predict").eval()

state_dict = torch.load(src_path, map_location=torch.device('cuda'))

for s_dict in state_dict:

print(s_dict)

model.load_state_dict(state_dict, strict=False)

# torch.onnx.export(model, input, target_path, input_names=input_name, output_names=output_name, verbose=True,

# dynamic_axes={'input' : {0 : 'batch_size'},

# 'output' : {0 : 'batch_size'}})

torch.onnx.export(model, input, target_path, input_names=input_name, output_names=output_name, verbose=True)

test = onnx.load(target_path)

onnx.checker.check_model(test)

print('run success:', target_path)

def run_onnx(model_path):

'''

验证onnx

:param model_path:

:return:

'''

onnx_model = onnxruntime.InferenceSession(model_path, providers=onnxruntime.get_available_providers())

# onnx_model.get_modelmeta()

img = cv2.imread(r'img/002.jpg')

img = cv2.resize(img, (160, 160))

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

img = np.transpose(img, (2, 1, 0))

img = img[np.newaxis, :, :, :]

img = img / 255.

img = img.astype(np.float32)

img = torch.from_numpy(img)

t = time.time()

tt = 0

# for i in range(1):

# img = np.random.rand(1, 3, 160, 160).astype(np.float32)

# # img = torch.rand((1, 3, 224, 224)).cuda()

# results = onnx_model.run(["output"], {"input": img, 'batch'})Z

img = np.random.rand(1, 3, 160, 160).astype(np.float32)

# img = torch.rand((1, 3, 224, 224)).cuda()

results = onnx_model.run(["output"], {"input": img})

print('cost:', time.time() - t)

for i in range(5000):

img = np.random.rand(1, 3, 160, 160).astype(np.float32)

t1 = time.time()

results = onnx_model.run(["output"], {"input": img})

tt += time.time() - t1

# predict = torch.from_numpy(results[0])

print('onnx cost:', time.time() - t, tt)

# print("predict:", results)

def run_torch(src_path):

model = Facenet(backbone="inception_resnetv1", mode="predict").eval()

state_dict = torch.load(src_path, map_location=torch.device('cuda'))

# for s_dict in state_dict:

# print(s_dict)

model.load_state_dict(state_dict, strict=False)

model = model.eval()

model = model.cuda()

img = cv2.imread(r'img/002.jpg')

img = cv2.resize(img, (160, 160))

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

img = np.transpose(img, (2, 1, 0))

img = img[np.newaxis, :, :, :]

img = img / 255.

img = img.astype(np.float32)

img = torch.from_numpy(img)

t = time.time()

tt = 0

for i in range(1):

img = torch.rand((1, 3, 160, 160)).cuda()

results = model(img)

print('cost:', time.time() - t)

for i in range(5000):

img = torch.rand((1, 3, 160, 160)).cuda()

t1 = time.time()

results = model(img)

tt += time.time() - t1

print('torch cost:', time.time() - t, tt)

# print("predict:", results)

def onnx2rt(onnx_file_path, engine_file_path):

'''

ONNX转换TensorRT

:param onnx_file_path: onnx文件路径

:param engine_file_path: TensorRT输出文件路径

:return: engine

'''

# 打印日志

G_LOGGER = trt.Logger(trt.Logger.WARNING)

explicit_batch = 1 << (int)(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)

print('explicit_batch:', explicit_batch)

with trt.Builder(G_LOGGER) as builder, builder.create_network(explicit_batch) as network, trt.OnnxParser(network,

G_LOGGER) as parser:

builder.max_batch_size = 100

builder.max_workspace_size = 1 << 20

print('Loading ONNX file from path {}...'.format(onnx_file_path))

with open(onnx_file_path, 'rb') as model:

print('Beginning ONNX file parsing')

parser.parse(model.read())

print('Completed parsing of ONNX file')

print('Building an engine from file {}; this may take a while...'.format(onnx_file_path))

engine = builder.build_engine(network)

print("Completed creating Engine")

# 保存计划文件

with open(engine_file_path, "wb") as f:

f.write(engine.serialize())

return engine

def check_trt(model_path, image_size):

# 必须导入包,import pycuda.autoinit,否则cuda.Stream()报错

import pycuda.autoinit

"""

验证TensorRT结果

"""

print('[Info] model_path: {}'.format(model_path))

img_shape = (1, 3, image_size, image_size)

print('[Info] img_shape: {}'.format(img_shape))

trt_logger = trt.Logger(trt.Logger.WARNING)

trt_path = model_path # TRT模型路径

with open(trt_path, 'rb') as f, trt.Runtime(trt_logger) as runtime:

engine = runtime.deserialize_cuda_engine(f.read())

for binding in engine:

binding_idx = engine.get_binding_index(binding)

size = engine.get_binding_shape(binding_idx)

dtype = trt.nptype(engine.get_binding_dtype(binding))

print("[Info] binding: {}, binding_idx: {}, size: {}, dtype: {}"

.format(binding, binding_idx, size, dtype))

t = time.time()

tt = 0

tt1 = 0

with engine.create_execution_context() as context:

for i in range(5000):

input_image = np.random.randn(*img_shape).astype(np.float32) # 图像尺寸

t1 = time.time()

input_image = np.ascontiguousarray(input_image)

tt1 += time.time() - t1

# print('[Info] input_image: {}'.format(input_image.shape))

stream = cuda.Stream()

bindings = [0] * len(engine)

for binding in engine:

idx = engine.get_binding_index(binding)

if engine.binding_is_input(idx):

input_memory = cuda.mem_alloc(input_image.nbytes)

bindings[idx] = int(input_memory)

cuda.memcpy_htod_async(input_memory, input_image, stream)

else:

dtype = trt.nptype(engine.get_binding_dtype(binding))

shape = context.get_binding_shape(idx)

output_buffer = np.empty(shape, dtype=dtype)

output_buffer = np.ascontiguousarray(output_buffer)

output_memory = cuda.mem_alloc(output_buffer.nbytes)

bindings[idx] = int(output_memory)

context.execute_async_v2(bindings, stream.handle)

stream.synchronize()

cuda.memcpy_dtoh(output_buffer, output_memory)

tt += time.time() - t1

print('trt cost:', time.time() - t, tt, tt1)

# print("[Info] output_buffer: {}".format(output_buffer))

if __name__ == '__main__':

torch2onnx(r"model_data/facenet_inception_resnetv1.pth", r"model_data/facenet_inception_resnetv1.onnx")

onnx2rt(r"model_data/facenet_inception_resnetv1.onnx", r"model_data/facenet_inception_resnetv1.trt")

run_onnx(r"model_data/facenet_inception_resnetv1.onnx")

run_torch(r"model_data/facenet_inception_resnetv1.pth")

check_trt(r"model_data/facenet_inception_resnetv1.trt", 160)运行结果:ONNX速度比pytorch快一倍,TensorRT比ONNX快一倍

torch cost: 95.99401450157166

onnx cost: 56.98542881011963

trt cost: 26.91579008102417