tensorflow2实现yolov3并使用opencv4.5.5 DNN加载模型预测

目录

- 综述

- 一、什么是YOLO

- 二、YOLOv3 网络

-

- 1、网络结构

- 2、网络输出解读(前向过程)

-

- 2.1、输出特征图尺寸

- 2.2、锚框和预测

- 3、训练策略与损失函数(反向过程)

- 三、tensorflow代码实现

-

- 3.1、YOLOv3 网络结构

-

- 3.1.1、DBL代码实现

- 3.1.2、Residual代码实现

- 3.1.3、ResidualBlock代码实现

- 3.1.4、Darknet53代码实现

- 3.1.5、YoloBlock

- 3.1.6、YoloOutput

- 3.1.7、YoloV3

- 3.2、YOLOV3 loss实现

-

- 3.2.1、iou

- 3.2.2、giou

- 3.2.3、diou

- 3.2.4、ciou

- 3.2.5、Loss

- 3.3、数据输入

-

- 3.3.1、K-meas

- 3.3.2、data_generator

- 3.4、划分训练集和测试集

- 3.5、网络训练

- 3.5、转为graph

- 3.6、网络预测

-

- 3.6.1、加载模型

- 3.6.2、nms

- 3.6.3、画出box

- 四、opencv 代码实现

- 五、问题总结

- 参考

综述

对于那些在GPU平台上运行的检测器,它们的主干网络可能为VGG、ResNet、ResNeXt或DenseNet。

而对于那些在CPU平台上运行的检测器,他们的检测器可能为SqueezeNet ,MobileNet, ShufflfleNet。

最具代表性的二阶段目标检测器R-CNN系列,包括fast R-CNN,faster R-CNN ,R-FCN,Libra R-CNN。也可以使得二阶段目标检测器成为anchor-free目标检测器,例如RepPoints。至于一阶段目标检测器,最具代表性的网络包括YOLO、SSD、RetinaNet。

一阶段的anchor-free目标检测器在不断发展,包括CenterNet、CornerNet、FCOS等。在近些年来的发展,目标检测器通常是在头部和主干网络之间加入一些层,这些层被用来收集不同阶段的特征图,拥有这种机制的网络包括Feature Pyramid Network (FPN),Path Aggregation Network (PAN),BiFPN和NAS-FPN。

除了上述模型外,一些研究人员将重心放在了研究主干网络上(DetNet,DetNAS),而还有一些研究人员则致力于构建用于目标检测的新的模型(SpineNet,HitDetector)。

总的来说,一般的目标检测器由以下几部分组成:

- Input: Image, Patches, Image Pyramid

- Backbones: VGG16, ResNet-50,SpineNet,EffificientNet-B0/B7, CSPResNeXt50, CSPDarknet53

- Neck:

- Additional blocks: SPP,ASPP,RFB,SAM

- Path-aggregation blocks: FPN,PAN,NAS-FPN,Fully-connected FPN,BiFPN,ASFF,SFAM

- Heads:

- Dense Prediction(one-stage):

- RPN,SSD,YOLO,RetinaNet(anchor based)

- CornerNet,CenterNet,MatrixNet,FCOS(anchor free)

- Sparse Prediction(two-stage):

-

- Faster R-CNN,R-FCN,Mask R-CNN(anchor based)

- RepPoints(anchor free)

一、什么是YOLO

YOLO是“You Only Look Once”的简称,它虽然不是最精确的算法,但在精确度和速度之间选择的折中,效果也是相当不错。YOLOv3借鉴了YOLOv1和YOLOv2,虽然没有太多的创新点,但在保持YOLO家族速度的优势的同时,提升了检测精度,尤其对于小物体的检测能力。YOLOv3算法使用一个单独神经网络作用在图像上,将图像划分多个区域并且预测边界框和每个区域的概率。

二、YOLOv3 网络

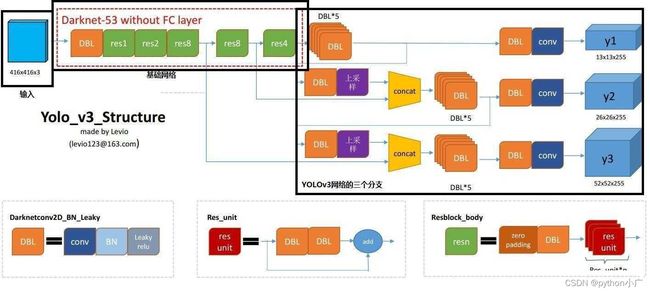

1、网络结构

Yolov3网络中的特征提取部分(backbone)采用Darkenet53,yolov3使用了darknet-53的前面的52层(没有全连接层),yolov3这个网络是一个全卷积网络,大量使用残差的跳层连接,并且为了降低池化带来的梯度负面效果,作者直接摒弃了POOLing,用conv的stride来实现降采样。在这个网络结构中,使用的是步长为2的卷积来进行降采样。

DBL:代码中的Darknetconv2d_BN_Leaky,是yolo_v3的基本组件。就是卷积+BN+Leaky relu。

ResidualBlock * m:m代表数字,表示这个ResidualBlock 里含有多少个Residual

concat:张量拼接。将darknet中间层和后面的某一层的上采样进行拼接。拼接的操作和残差层add的操作是不一样的,拼接会扩充张量的维度,而add只是直接相加不会导致张量维度的改变。

网络结构解析:

-

Yolov3中,只有卷积层,通过调节卷积步长控制输出特征图的尺寸。所以对于输入图片尺寸没有特别限制。

-

Yolov3借鉴了金字塔特征图思想,小尺寸特征图用于检测大尺寸物体,而大尺寸特征图检测小尺寸物体。特征图的输出维度为 N × N ×[3 × (4 + 1 + 80)], N × N为输出特征图格点数,一共3个Anchor框,每个框有4维预测框数值 t x , t y , t w , t h t_x,t_y,t_w,t_h tx,ty,tw,th ,1维预测框置信度,80维物体类别数。所以第一层特征图的输出维度为13×13×255。

-

yolov3总共输出3个特征图,第一个特征图下采样32倍,第二个特征图下采样16倍,第三个下采样8倍。输入图像经过Darknet-53(无全连接层),再经过Yoloblock生成的特征图被当作两用,第一用为经过33卷积层、11卷积之后生成特征图一,第二用为经过1×1卷积层加上采样层,与Darnet-53网络的中间层输出结果进行拼接,产生特征图二。同样的循环之后产生特征图三。

-

concat操作与加和操作的区别:加和操作来源于ResNet思想,将输入的特征图,与输出特征图对应维度进行相加,即 y = f(x) + x;而concat操作源于DenseNet网络的设计思路,将特征图按照通道维度直接进行拼接,例如13×13×16的特征图与13×13×16的特征图拼接后生成13×13×32的特征图。

-

上采样层(upsample):作用是将小尺寸特征图通过插值等方法,生成大尺寸图像。例如使用最近邻插值算法,将13×13的图像变换为26×26。上采样层不改变特征图的通道数。

Yolo的整个网络,吸取了Resnet、Densenet、FPN的精髓,可以说是融合了目标检测当前业界最有效的全部技巧。

2、网络输出解读(前向过程)

2.1、输出特征图尺寸

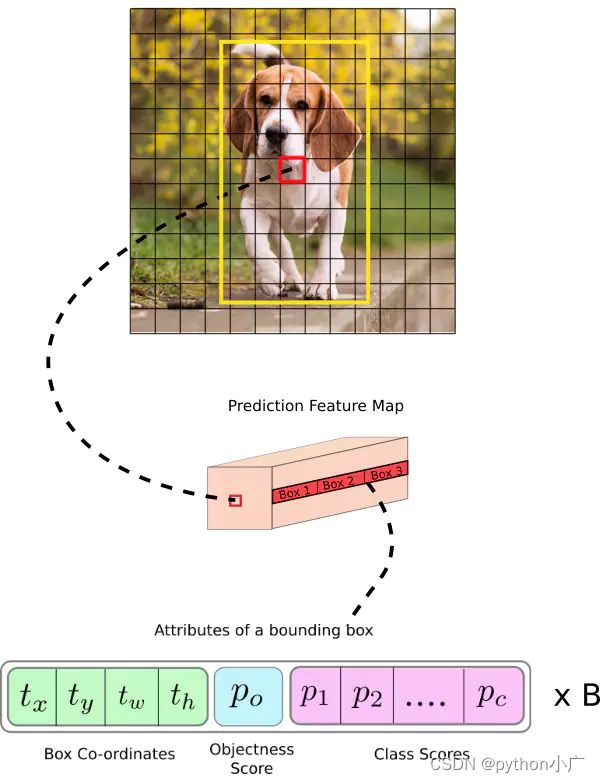

根据不同的输入尺寸,会得到不同大小的输出特征图,输出的特征图为13 × 13 × 255、26 × 26× 255、52 × 52 × 255。在Yolov3的设计中,每个特征图的每个格子中,都配置3个不同的先验框,所以最后三个特征图,这里暂且reshape为13 × 13 × 3 × 85、26 × 26 × 3 × 85、52 × 52 × 3 × 85,这样更容易理解,在代码中也是reshape成这样之后更容易操作。三张特征图就是整个Yolo输出的检测结果,检测框位置(4维)、检测置信度(1维)、类别(80维)都在其中,加起来正好是85维。特征图最后的维度85,代表的就是这些信息,而特征图其他维度N × N × 3,N × N代表了检测框的参考位置信息,3是3个不同尺度的先验框。

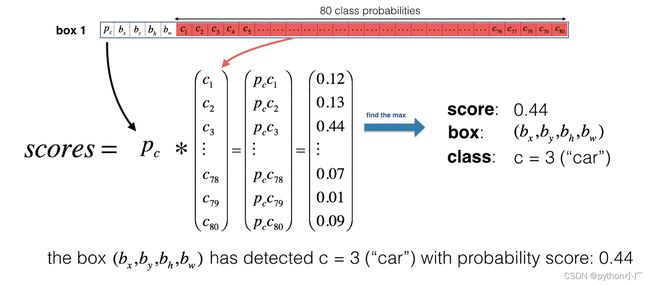

现在,对于每个cell的每个锚框我们计算下面的元素级乘法并且得到锚框包含一个物体类的概率,如下图:

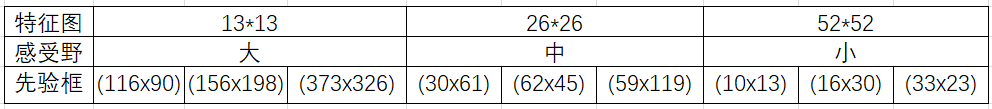

2.2、锚框和预测

- 先验框

在Yolov1中,网络直接回归检测框的宽、高,这样效果有限。所以在Yolov2中,改为了回归基于先验框的变化值,这样网络的学习难度降低,整体精度提升不小。Yolov3沿用了Yolov2中关于先验框的技巧,并且使用k-means对数据集中的标签框进行聚类,得到类别中心点的9个框,作为先验框。在COCO数据集中(原始图片全部resize为416 × 416),九个框分别是 (10×13),(16×30),(33×23),(30×61),(62×45),(59× 119), (116 × 90), (156 × 198),(373 × 326) ,顺序为w × h。

注:先验框只与检测框的w、h有关,与x、y无关。

网络降采样输入图像一直到第一个检测层,步幅是32;然后,将此层上采样2倍与上面的同样大小的特征图进行按通道堆叠,第二个检测层按步幅16形成;同样地,相同的上采样过程,最后的检测层步幅为8。在每个尺度上,每个cell使用三个锚框预测三个边界框,共9个锚框。所有锚框加起来一共个10647

- 检测框解码

有了先验框与输出特征图,就可以解码检测框 x,y,w,h。

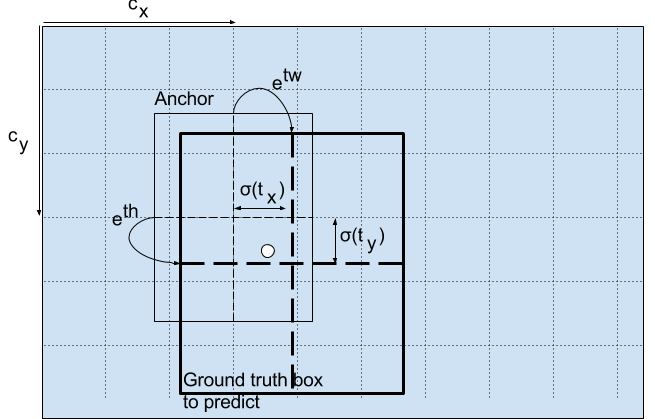

这里 b x , b y , b w , b h b_x, b_y, b_w, b_h bx,by,bw,bh分别是我们预测的中心坐标、宽度和高度。 t x , t y , t w , t h t_x, t_y, t_w, t_h tx,ty,tw,th是网络的输出。 c x , c y c_x, c_y cx,cy是网格从顶左部的坐标。 p w , p h p_w, p_h pw,ph是锚框的维度

如下图所示, 是基于矩形框中心点左上角格点坐标的偏移量, 是激活函数,论文中作者使用sigmoid。 通过sigmoid函数进行中心坐标预测,强制将值限制在0和1之间。YOLO不是预测边界框中心的绝对坐标,它预测的是偏移量:相对于预测对象的网格单元的左上角;通过特征图cell归一化维度。

考虑上面狗的图像。如果预测中心坐标是(0.4, 0.7),意味着中心在(因为红色框左上角坐标是(6,6))。但是如果预测的坐标大于1,例如(1.2,0.7),意味着中心在,现在中心在红色框右边(7.2,6.7),但是我们只能使用红色框对对象预测负责,所以我们添加一个sidmoid函数强制限制在0和1之间。

举个具体的例子,假设对于第二个特征图13 ×13× 3 × 85中的第[5,4,2]维,上图中的 为5, 为4,第二个特征图对应的先验框为(30×61),(62×45),(59× 119),prior_box的index为2,那么取最后一个59,119作为先验w、先验h。这样计算之后的 还需要乘以特征图二的采样率13,得到真实的检测框x,y。

- 检测置信度解码

物体的检测置信度,在Yolo设计中非常重要,关系到算法的检测正确率与召回率。

置信度在输出85维中占固定一位,由sigmoid函数解码即可,解码之后数值区间在[0,1]中。

- 类别解码

COCO数据集有80个类别,所以类别数在85维输出中占了80维,每一维独立代表一个类别的置信度。使用sigmoid激活函数替代了Yolov2中的softmax,取消了类别之间的互斥,可以使网络更加灵活。

三个特征图一共可以解码出13 × 13 × 3 + 26 × 26 × 3 + 52 × 52 × 3 = 10647个box以及相应的类别、置信度。这10647个box,在训练和推理时,使用方法不一样:

- 训练时10647个box全部送入打标签函数,进行后一步的标签以及损失函数的计算。

- 推理时,选取一个置信度阈值,过滤掉低阈值box,再经过nms(非极大值抑制),就可以输出整个网络的预测结果了。

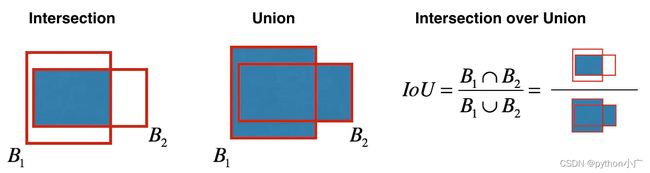

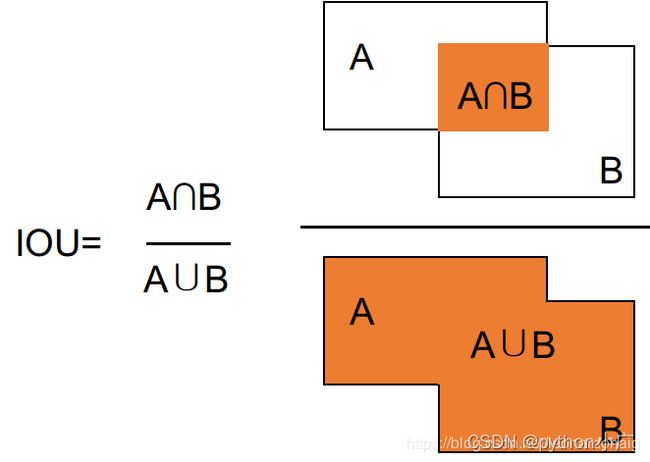

- 实现非极大值抑制,关键在于:选择一个最高分数的框;计算它和其他框的重合度,去除重合度超过IoU(交并比)阈值的框;回到步骤1迭代直到没有比当前所选框低的框。

3、训练策略与损失函数(反向过程)

- 训练策略

训练策略总结如下:

- 预测框一共分为三种情况:正例(positive)、负例(negative)、忽略样例(ignore)。

- 正例:任取一个ground truth,与10647个框全部计算IOU,IOU最大的预测框,即为正例。并且一个预测框,只能分配给一个ground truth。例如第一个ground truth已经匹配了一个正例检测框,那么下一个ground truth,就在余下的10646个检测框中,寻找IOU最大的检测框作为正例。ground truth的先后顺序可忽略。正例产生置信度loss、检测框loss、类别loss。预测框为对应的ground truth box标签;(需要反向编码,使用真实的x、y、w、h计算出类别标签对应tx,ty,tw,th)类别为1,其余为0;置信度标签为1。

- 忽略样例:正例除外,与任意一个ground truth的IOU大于阈值(论文中使用0.5),则为忽略样例。忽略样例不产生任何loss。

- 负例:正例除外(与ground truth计算后IOU最大的检测框,但是IOU小于阈值,仍为正例),与全部ground truth的IOU都小于阈值(0.5),则为负例。负例只有置信度产生loss,置信度标签为0。

- Loss函数

特征图1的Yolov3的损失函数抽象表达式如下:

Yolov3 Loss为三个特征图Loss之和: L o s s = l o s s N 1 + l o s s N 2 + l o s s N 3 Loss=loss_{N_1} + loss_{N_2} + loss_{N_3} Loss=lossN1+lossN2+lossN3

1. λ \lambda λ为权重常数,控制检测框 L o s s o b j Loss_obj Lossobj置信度与 L o s s n o o b j Loss_noobj Lossnoobj置信度 L o s s Loss Loss之间的比例,通常负例的个数是正例的几十倍以上,可以通过权重超参控制检测效果。

2. 1 i j o b j 1_{ij}^{obj} 1ijobj若是正例则输出1,否则为0; 1 i j n o o b j 1_{ij}^{noobj} 1ijnoobj若是负例则输出1,否则为0;忽略样例都输出0。

3. x、y、w、h使用MSE作为损失函数,也可以使用smooth L1 loss(出自Faster R-CNN)作为损失函数。smooth L1可以使训练更加平滑。置信度、类别标签由于是0,1二分类,所以使用交叉熵作为损失函数。

- 训练策略解释:

- ground truth为什么不按照中心点分配对应的预测box?

(1)在Yolov3的训练策略中,不再像Yolov1那样,每个cell负责中心落在该cell中的ground truth。原因是Yolov3一共产生3个特征图,3个特征图上的cell,中心是有重合的。训练时,可能最契合的是特征图1的第3个box,但是推理的时候特征图2的第1个box置信度最高。所以Yolov3的训练,不再按照ground truth中心点,严格分配指定cell,而是根据预测值寻找IOU最大的预测框作为正例。

(2)第一种,ground truth先从9个先验框中确定最接近的先验框,这样可以确定ground truth所属第几个特征图以及第几个box位置,之后根据中心点进一步分配。第二种,全部10647个输出框直接和ground truth计算IOU,取IOU最高的cell分配ground truth。第二种计算方式的IOU数值,往往都比第一种要高,这样wh与xy的loss较小,网络可以更加关注类别和置信度的学习;其次,在推理时,是按照置信度排序,再进行nms筛选,第二种训练方式,每次给ground truth分配的box都是最契合的box,给这样的box置信度打1的标签,更加合理,最接近的box,在推理时更容易被发现。

- Yolov1中的置信度标签,就是预测框与真实框的IOU,Yolov3为什么是1?

(1)置信度意味着该预测框是或者不是一个真实物体,是一个二分类,所以标签是1、0更加合理。

(2)第一种:置信度标签取预测框与真实框的IOU;第二种:置信度标签取1。第一种的结果是,在训练时,有些预测框与真实框的IOU极限值就是0.7左右,置信度以0.7作为标签,置信度学习有一些偏差,最后学到的数值是0.5,0.6,那么假设推理时的激活阈值为0.7,这个检测框就被过滤掉了。但是IOU为0.7的预测框,其实已经是比较好的学习样例了。尤其是coco中的小像素物体,几个像素就可能很大程度影响IOU,所以第一种训练方法中,置信度的标签始终很小,无法有效学习,导致检测召回率不高。而检测框趋于收敛,IOU收敛至1,置信度就可以学习到1,这样的设想太过理想化。而使用第二种方法,召回率明显提升了很高。

- 为什么有忽略样例?

(1)忽略样例是Yolov3中的点睛之笔。由于Yolov3使用了多尺度特征图,不同尺度的特征图之间会有重合检测部分。比如有一个真实物体,在训练时被分配到的检测框是特征图1的第三个box,IOU达0.98,此时恰好特征图2的第一个box与该ground truth的IOU达0.95,也检测到了该ground truth,如果此时给其置信度强行打0的标签,网络学习效果会不理想。

(2)如果给全部的忽略样例置信度标签打0,那么最终的loss函数会变成 L o s s o b j Loss_{obj} Lossobj与 L o s s n o o b j Loss_{noobj} Lossnoobj的拉扯,不管两个loss数值的权重怎么调整,或者网络预测趋向于大多数预测为负例,或者趋向于大多数预测为正例。而加入了忽略样例之后,网络才可以学习区分正负例。

- 优化器

Adam,SGD等都可以用,github上Yolov3项目中,大多使用Adam优化器。

三、tensorflow代码实现

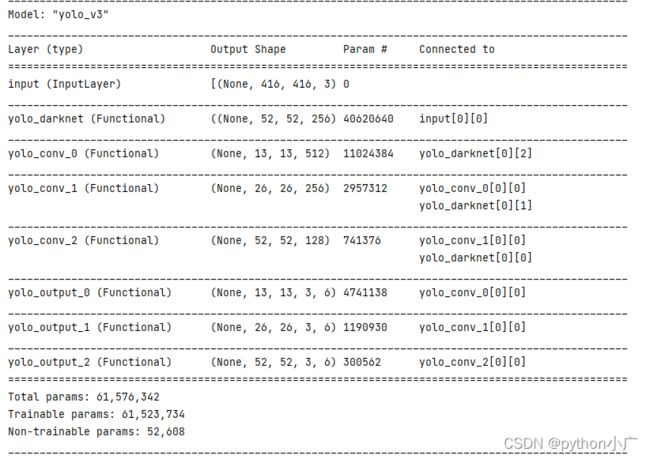

3.1、YOLOv3 网络结构

3.1.1、DBL代码实现

def DBL(x, filters, kernel_size, strides=1, batch_norm=True):

# Darknet conv-bn-LeakyReLU

# 如果步长为1,则使用 same,不为1 则进行下采样

if strides == 1:

padding = 'same'

else:

x = ZeroPadding2D(((1, 0), (1, 0)))(x) # 顶部 和 左边 补0

padding = 'valid'

x = Conv2D(filters=filters, kernel_size=kernel_size,

strides=strides, padding=padding,

use_bias=not batch_norm, kernel_regularizer=l2(0.0005))(x) # l2正则化

if batch_norm:

x = BatchNormalization()(x) # 残差

x = LeakyReLU(alpha=0.1)(x) # LeakyReLU激活函数 alpha为斜率

return x

3.1.2、Residual代码实现

def Residual(x, filters):

# res 自定义残差单元,只需给出通道数,该单元完成两次卷积,并进行加残差后返回相同维度的特征图

prev = x

x = DBL(x, filters // 2, 1)

x = DBL(x, filters, 3)

# Add C维度结果相加

x = Add()([prev, x])

return x

3.1.3、ResidualBlock代码实现

def ResidualBlock(x, filters, blocks):

# res-block 残差块

# 此处DBL 的作用是进行下采样

x = DBL(x, filters, 3, strides=2)

for _ in range(blocks):

x = Residual(x, filters)

return x

3.1.4、Darknet53代码实现

def Darknet53(name=None):

# darkent53网络

def darknet53(x_in):

x = inputs = Input(x_in.shape[1:])

x = DBL(x, 32, 3)

x = ResidualBlock(x, 64, 1)

x = ResidualBlock(x, 128, 2)

res52 = ResidualBlock(x, 256, 8)

res26 = ResidualBlock(res52, 512, 8)

res13 = ResidualBlock(res26, 1024, 4)

return Model(inputs, (res52, res26, res13), name=name)(x_in)

return darknet53

3.1.5、YoloBlock

def YoloBlock(filters, name=None):

def yolo_conv(x_in):

if isinstance(x_in, tuple):

inputs = Input(x_in[0].shape[1:]), Input(x_in[1].shape[1:])

x, x_skip = inputs

x = DBL(x, filters, 1)

x = UpSampling2D(2)(x)

x = Concatenate()([x, x_skip])

else:

x = inputs = Input(x_in.shape[1:])

x = DBL(x, filters, 1)

x = DBL(x, filters * 2, 3)

x = DBL(x, filters, 1)

x = DBL(x, filters * 2, 3)

x = DBL(x, filters, 1)

return Model(inputs, x, name=name)(x_in)

return yolo_conv

3.1.6、YoloOutput

class Reshape_out(Layer):

# 自定义层

def __init__(self, classes, anchors, **kwargs):

super(Reshape_out, self).__init__(**kwargs)

self.classes = classes

self.anchors = anchors

def call(self, inputs, **kwargs):

return array_ops.reshape(inputs, (-1, inputs.shape[1], inputs.shape[2], self.anchors, self.classes + 5))

def YoloOutput(filters, classes, anchors = 3,name=None):

def yolo_output(x_in):

x = inputs = Input(x_in.shape[1:])

x = DBL(x, filters * 2, 3)

# B * (5 + C)

x = DBL(x, anchors*(classes + 5), 1, batch_norm=False)

# x = (batch_size, grid, grid, anchors, (x, y, w, h, obj, ...classes)

x = Reshape_out(classes, anchors)(x)

return Model(inputs, x, name=name)(x_in)

return yolo_output

3.1.7、YoloV3

def YoloV3(size, classes, channels=3, anchors=yolo_anchors,

mask=yolo_anchor_masks, training=False):

x = inputs = Input([size, size, channels], name='input')

x_8, x_16, x_32 = Darknet53(name='yolo_darknet')(x)

x = YoloBlock(512, name='yolo_conv_0')(x_32)

output_0 = YoloOutput(512, name='yolo_output_0', classes=classes)(x)

x = YoloBlock(256, name='yolo_conv_1')((x, x_16))

output_1 = YoloOutput(256, name='yolo_output_1', classes=classes)(x)

x = YoloBlock(128, name='yolo_conv_2')((x, x_8))

output_2 = YoloOutput(128, name='yolo_output_2', classes=classes)(x)

if training:

return Model(inputs, (output_0, output_1, output_2), name='yolo_v3')

else:

boxes_0 = Yolo_Boxes(anchors[mask[0]], classes)(output_0)

boxes_1 = Yolo_Boxes(anchors[mask[1]], classes)(output_1)

boxes_2 = Yolo_Boxes(anchors[mask[2]], classes)(output_2)

outputs = Yolo_NMS(classes)((boxes_0, boxes_1, boxes_2))

return Model(inputs, outputs, name="yolov3")

3.2、YOLOV3 loss实现

iou 可以使用 giou、diou或ciou

3.2.1、iou

def box_iou(box_1, box_2):

"""

:param box_1: (..., (x1, y1, x2, y2))

:param box_2: (N, (x1, y1, x2, y2))

:return: (N, 1)

"""

# broadcast boxes

box_1 = tf.expand_dims(box_1, -2)

box_2 = tf.expand_dims(box_2, 0)

# new_shape: (..., N, (x1, y1, x2, y2))

new_shape = tf.broadcast_dynamic_shape(tf.shape(box_1), tf.shape(box_2))

box_1 = tf.broadcast_to(box_1, new_shape)

box_2 = tf.broadcast_to(box_2, new_shape)

# 计算并集面积

int_w = tf.maximum(tf.minimum(box_1[..., 2], box_2[..., 2]) -

tf.maximum(box_1[..., 0], box_2[..., 0]), 0)

int_h = tf.maximum(tf.minimum(box_1[..., 3], box_2[..., 3]) -

tf.maximum(box_1[..., 1], box_2[..., 1]), 0)

int_area = int_w * int_h

# 计算两个框的面积

box_1_area = (box_1[..., 2] - box_1[..., 0]) * \

(box_1[..., 3] - box_1[..., 1])

box_2_area = (box_2[..., 2] - box_2[..., 0]) * \

(box_2[..., 3] - box_2[..., 1])

# 交集面积为两个框的面积和-并集面积

union_area = box_1_area + box_2_area - int_area

# iou= 交集面积/并集面积,add epsilon in denominator to avoid dividing by 0

iou = int_area / (union_area + tf.keras.backend.epsilon())

return iou

3.2.2、giou

giou解决iou中,两框不相交的情况下(iou衡等于零),无法衡量损失

对于相交的框,IOU可以被反向传播,即它可以直接用作优化的目标函数。但是非相交的,梯度将会为0,无法优化。此时使用GIoU可以完全避免此问题。所以可以作为目标函数

def box_giou(box_1, box_2):

"""

:param box_1: (..., (x1, y1, x2, y2))

:param box_2: (N, (x1, y1, x2, y2))

:return: (N, 1)

"""

# broadcast boxes

box_1 = tf.expand_dims(box_1, -2)

box_2 = tf.expand_dims(box_2, 0)

# new_shape: (..., N, (x1, y1, x2, y2))

new_shape = tf.broadcast_dynamic_shape(tf.shape(box_1), tf.shape(box_2))

box_1 = tf.broadcast_to(box_1, new_shape)

box_2 = tf.broadcast_to(box_2, new_shape)

# 计算 并集面积

intersect_w = tf.maximum(tf.minimum(box_1[..., 2], box_2[..., 2]) -

tf.maximum(box_1[..., 0], box_2[..., 0]), 0)

intersect_h = tf.maximum(tf.minimum(box_1[..., 3], box_2[..., 3]) -

tf.maximum(box_1[..., 1], box_2[..., 1]), 0)

intersect_area = intersect_w * intersect_h

# 计算两个框的面积

box_1_area = (box_1[..., 2] - box_1[..., 0]) * \

(box_1[..., 3] - box_1[..., 1])

box_2_area = (box_2[..., 2] - box_2[..., 0]) * \

(box_2[..., 3] - box_2[..., 1])

# 交集面积为两个框的面积和-并集面积

union_area = box_1_area + box_2_area - intersect_area

# iou= 交集面积/并集面积,add epsilon in denominator to avoid dividing by 0

iou = intersect_area / (union_area + tf.keras.backend.epsilon())

# 计算包围两个框的矩形面积

enclose_w = tf.maximum(tf.maximum(box_1[..., 2], box_2[..., 2]) -

tf.minimum(box_1[..., 0], box_2[..., 0]), 0)

enclose_h = tf.maximum(tf.maximum(box_1[..., 3], box_2[..., 3]) -

tf.minimum(box_1[..., 1], box_2[..., 1]), 0)

enclose_area = enclose_w * enclose_h

#giou = iou - (最小包围矩形面积-交集面积)/ 最小包围矩形面积

giou = iou - 1.0 * (enclose_area - union_area) / (enclose_area + tf.keras.backend.epsilon())

return giou

3.2.3、diou

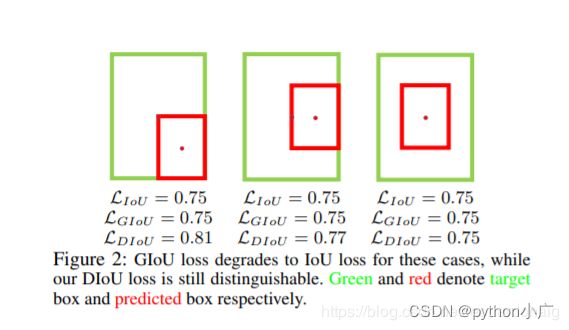

giou也存在问题,如果存在如下图的情况时,giou会退化为iou

考虑换一种方式来衡量两个框之间远近的度量方式

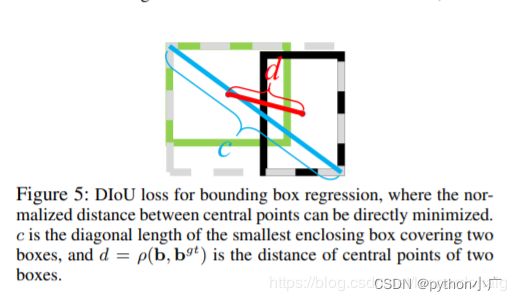

b,bgt分别代表了anchor框和目标框的中心点,且p代表的是计算两个中心点间的欧式距离。c代表的是能够同时覆盖anchor和目标框的最小矩形的对角线距离。

GIoU loss类似,DIoU loss在与目标框不重叠时,仍然可以为边界框提供移动方向。

DIoU loss可以直接最小化两个目标框的距离,而GIOU loss优化的是两个目标框之间的面积

def box_diou(box_1, box_2):

"""

:param box_1: (..., (x1, y1, x2, y2))

:param box_2: (N, (x1, y1, x2, y2))

:return: (N, 1)

"""

# broadcast boxes

box_1 = tf.expand_dims(box_1, -2)

box_2 = tf.expand_dims(box_2, 0)

# new_shape: (..., N, (x1, y1, x2, y2))

new_shape = tf.broadcast_dynamic_shape(tf.shape(box_1), tf.shape(box_2))

box_1 = tf.broadcast_to(box_1, new_shape)

box_2 = tf.broadcast_to(box_2, new_shape)

# 计算并集面积

intersect_w = tf.maximum(tf.minimum(box_1[..., 2], box_2[..., 2]) -

tf.maximum(box_1[..., 0], box_2[..., 0]), 0)

intersect_h = tf.maximum(tf.minimum(box_1[..., 3], box_2[..., 3]) -

tf.maximum(box_1[..., 1], box_2[..., 1]), 0)

intersect_area = intersect_w * intersect_h

# 计算两个框的面积

box_1_area = (box_1[..., 2] - box_1[..., 0]) * \

(box_1[..., 3] - box_1[..., 1])

box_2_area = (box_2[..., 2] - box_2[..., 0]) * \

(box_2[..., 3] - box_2[..., 1])

# 交集面积为两个框的面积和-并集面积

union_area = box_1_area + box_2_area - intersect_area

# iou= 交集面积/并集面积,add epsilon in denominator to avoid dividing by 0

iou = intersect_area / (union_area + tf.keras.backend.epsilon())

# 计算包围两个框的最小矩形w, h 并最小矩形的对角线距离平方 w*w + h*h

enclose_w = tf.maximum(tf.maximum(box_1[..., 2], box_2[..., 2]) -

tf.minimum(box_1[..., 0], box_2[..., 0]), 0)

enclose_h = tf.maximum(tf.maximum(box_1[..., 3], box_2[..., 3]) -

tf.minimum(box_1[..., 1], box_2[..., 1]), 0)

enclose_wh = tf.stack((enclose_w, enclose_h), axis=-1)

enclose_diagonal = tf.keras.backend.sum(tf.square(enclose_wh), axis=-1)

# 计算两个中心点间的欧式距离并平方

box_1_xy = (box_1[..., 0:2] + box_1[..., 2:4]) / 2.0

box_2_xy = (box_2[..., 0:2] + box_2[..., 2:4]) / 2.0

center_distance = tf.keras.backend.sum(tf.square(box_1_xy - box_2_xy), axis=-1)

# diou , add epsilon in denominator to avoid dividing by 0

diou = iou - 1.0 * (center_distance) / (enclose_diagonal + tf.keras.backend.epsilon())

return diou

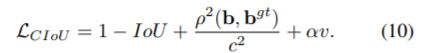

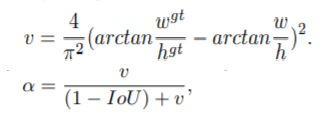

3.2.4、ciou

一个好的目标框回归损失应该考虑三个重要的几何因素:重叠面积、中心点距离、长宽比。 GIoU:为了归一化坐标尺度,利用IoU,并初步解决IoU为零的情况。 DIoU:DIoU损失同时考虑了边界框的重叠面积和中心点距离。 CIOU:Complete-IoU Loss,anchor框和目标框之间的长宽比的一致性也是极其重要的。

CIOU Loss又引入一个box长宽比的惩罚项,该Loss考虑了box的长宽比,定义如下:

其中α是用于平衡比例的参数。v用来衡量anchor框和目标框之间的比例一致性。从α参数的定义可以看出,损失函数会更加倾向于往重叠区域增多方向优化,尤其是IoU为零的时候。

def box_ciou(box_1, box_2):

"""

:param box_1: (..., (x1, y1, x2, y2))

:param box_2: (N, (x1, y1, x2, y2))

:return: (N, 1)

"""

box_1 = tf.expand_dims(box_1, -2)

box_2 = tf.expand_dims(box_2, 0)

# new_shape: (..., N, (x1, y1, x2, y2))

new_shape = tf.broadcast_dynamic_shape(tf.shape(box_1), tf.shape(box_2))

box_1 = tf.broadcast_to(box_1, new_shape)

box_2 = tf.broadcast_to(box_2, new_shape)

# 计算并集面积

intersect_w = tf.maximum(tf.minimum(box_1[..., 2], box_2[..., 2]) -

tf.maximum(box_1[..., 0], box_2[..., 0]), 0)

intersect_h = tf.maximum(tf.minimum(box_1[..., 3], box_2[..., 3]) -

tf.maximum(box_1[..., 1], box_2[..., 1]), 0)

intersect_area = intersect_w * intersect_h

# 计算两个框的面积

box_1_area = (box_1[..., 2] - box_1[..., 0]) * \

(box_1[..., 3] - box_1[..., 1])

box_2_area = (box_2[..., 2] - box_2[..., 0]) * \

(box_2[..., 3] - box_2[..., 1])

# 交集面积为两个框的面积和-并集面积

union_area = box_1_area + box_2_area - intersect_area

# iou= 交集面积/并集面积,add epsilon in denominator to avoid dividing by 0

iou = intersect_area / (union_area + tf.keras.backend.epsilon())

# 两个框的中心点欧式距离平方

box_1_xy = (box_1[..., 0:2] + box_1[..., 2:4]) / 2.0

box_2_xy = (box_2[..., 0:2] + box_2[..., 2:4]) / 2.0

center_distance = tf.keras.backend.sum(tf.square(box_1_xy - box_2_xy), axis=-1)

# 计算包围两个框的最小矩形w, h 并最小矩形的对角线距离 w*w + h*h

enclose_w = tf.maximum(tf.maximum(box_1[..., 2], box_2[..., 2]) -

tf.minimum(box_1[..., 0], box_2[..., 0]), 0)

enclose_h = tf.maximum(tf.maximum(box_1[..., 3], box_2[..., 3]) -

tf.minimum(box_1[..., 1], box_2[..., 1]), 0)

enclose_wh = tf.stack((enclose_w, enclose_h), axis=-1)

# 计算包围两个框的最小矩形w, h 并最小矩形的对角线距离平方 w*w + h*h

enclose_diagonal = tf.keras.backend.sum(tf.square(enclose_wh), axis=-1)

# diou

diou = iou - 1.0 * (center_distance) / (enclose_diagonal + tf.keras.backend.epsilon())

box_1_w = (box_1[..., 2] - box_1[..., 0])

box_1_h = (box_1[..., 3] - box_1[..., 1])

box_2_w = (box_2[..., 2] - box_2[..., 0])

box_2_h = (box_2[..., 3] - box_2[..., 1])

v = 4 * tf.keras.backend.square(

tf.math.atan2(box_1_w, box_1_h) - tf.math.atan2(box_2_w, box_2_h)) / (math.pi * math.pi)

alpha = v / (1.0 - iou + v)

# ciou

ciou = diou - alpha * v

return ciou

3.2.5、Loss

def yolo_boxes(pred, anchors, classes):

# pred: (batch_size, grid, grid, anchors, (x, y, w, h, obj, ...classes)) x,y,w,h预测的中心坐标和长宽

grid_size = tf.shape(pred)[1:3]

# 拆分最后一个维度 xy wh obj classes (2, 2, 1, classes)

box_xy, box_wh, objectness, class_probs = tf.split(pred, (2, 2, 1, classes), axis=-1)

pred_box = tf.concat((box_xy, box_wh), axis=-1)

# grid[x][y] == (y, x)

grid = tf.meshgrid(tf.range(grid_size[1]), tf.range(grid_size[0]))

grid = tf.expand_dims(tf.stack(grid, axis=-1), axis=2)

"""

使用 sidmoid 函数强制限制在0 和 1之间

bx = simmoid(tx) + Cx by = simmoid(ty) + Cy

bw = pw*exp(tw) bh = pw*exp(th)

bx, by, bw, bh 预测的中心坐标和长宽

tx, ty, tw, th 网络的输出中心坐标和长宽

Cx, Cy 网格从顶左部的坐标

ph, pw 先验框的高和宽

"""

box_xy = tf.sigmoid(box_xy)

box_xy = (box_xy + tf.cast(grid, tf.float32))

box_wh = tf.exp(box_wh) * anchors

# 将中心坐标转为相对坐标

box_xy = box_xy / tf.cast(grid_size, tf.float32)

# 计算左上角和右下角坐标

box_x1y1 = box_xy - box_wh / 2

box_x2y2 = box_xy + box_wh / 2

bbox = tf.concat([box_x1y1, box_x2y2], axis=-1)

# 置信度

objectness = tf.sigmoid(objectness)

# 类别

class_probs = tf.sigmoid(class_probs)

return bbox, objectness, class_probs, pred_box

def YoloLoss(anchors, num_classes, ignore_thresh=0.5):

def yolo_loss(y_true, y_pred):

# 1. 转换所有pred输出

# y_pred: (batch_size, grid, grid, anchors, (x, y, w, h, obj, ...cls))

pred_box, pred_obj, pred_class, pred_xywh = yolo_boxes(

y_pred, anchors, num_classes)

pred_xy = pred_xywh[..., 0:2]

pred_wh = pred_xywh[..., 2:4]

# 2. 转换所有true输出

# y_true: (batch_size, grid, grid, anchors, (x1, y1, x2, y2, obj, ...cls)) cls 为one-hot编码

true_box, true_obj, true_class_idx = tf.split(

y_true, (4,1, num_classes), axis=-1)

rue_box, true_obj, true_class_idx = tf.split(

y_true, (4, 1, num_classes), axis=-1)

# 中心点

true_xy = true_box[..., 0:2]

# wh

true_wh = true_box[..., 2:4]

# 添加系数(2 - groundtruth.w * groundtruth.h)用来加大对小框的损失

box_loss_scale = 2 - true_wh[..., 0] * true_wh[..., 1]

grid_size = tf.shape(y_true)[1]

grid = tf.meshgrid(tf.range(grid_size), tf.range(grid_size))

grid = tf.expand_dims(tf.stack(grid, axis=-1), axis=-2)

true_xy = true_xy * tf.cast(grid_size, tf.float32) - \

tf.cast(grid, tf.float32)

true_wh = tf.math.log(true_wh / anchors)

true_wh = tf.where(tf.math.is_inf(true_wh), tf.zeros_like(true_wh), true_wh)

# 4. 计算所有 masks

obj_mask = tf.squeeze(true_obj, -1)

# 5. 计算忽略样例

best_iou = tf.map_fn(

lambda x: tf.reduce_max(box_ciou(x[0], tf.boolean_mask(

x[1], tf.cast(x[2], tf.bool))), axis=-1),

(pred_box, true_box, obj_mask),

tf.float32)

ignore_mask = tf.cast(best_iou < ignore_thresh, tf.float32)

# 5. 计算所有损失

# box 为均方方差

xy_loss = obj_mask * box_loss_scale * \

tf.reduce_sum(tf.square(true_xy - pred_xy), axis=-1)

wh_loss = obj_mask * box_loss_scale * \

tf.reduce_sum(tf.square(true_wh - pred_wh), axis=-1)

# 置信度 二分类交叉熵

obj_loss = binary_crossentropy(true_obj, pred_obj, from_logits=True)

obj_loss = obj_mask * obj_loss + \

(1 - obj_mask) * ignore_mask * obj_loss

# 类别 多分类交叉熵

# true_class_idx 为one-hot编码

class_loss = obj_mask * categorical_crossentropy(

true_class_idx, pred_class, from_logits=True)

# 6. 求和所有loss (batch, gridx, gridy, anchors) => (batch, 1)

xy_loss = tf.reduce_sum(xy_loss, axis=(1, 2, 3))

wh_loss = tf.reduce_sum(wh_loss, axis=(1, 2, 3))

obj_loss = tf.reduce_sum(obj_loss, axis=(1, 2, 3))

class_loss = tf.reduce_sum(class_loss, axis=(1, 2, 3))

return xy_loss + wh_loss + obj_loss + class_loss

return yolo_loss

3.3、数据输入

3.3.1、K-meas

K均值算法需要输入待聚类的数据和欲聚类的簇数K,主要过程如下:

1.随机生成K个初始点作为质心

2.将数据集中的数据按照距离质心的远近分到各个簇中

3.将各个簇中的数据求平均值,作为新的质心,重复上一步,直到所有的簇不再改变

import xml.etree.ElementTree as ET

import numpy as np

import os

class YOLO_Kmeans:

def __init__(self, cluster_number, filename, anchor_save):

self.cluster_number = cluster_number

self.filename = filename

self.anchor_save = anchor_save

def iou(self, boxes, clusters): # 1 box -> k clusters

n = boxes.shape[0]

k = self.cluster_number

box_area = boxes[:, 0] * boxes[:, 1]

box_area = box_area.repeat(k)

box_area = np.reshape(box_area, (n, k))

cluster_area = clusters[:, 0] * clusters[:, 1]

cluster_area = np.tile(cluster_area, [1, n])

cluster_area = np.reshape(cluster_area, (n, k))

box_w_matrix = np.reshape(boxes[:, 0].repeat(k), (n, k))

cluster_w_matrix = np.reshape(np.tile(clusters[:, 0], (1, n)), (n, k))

min_w_matrix = np.minimum(cluster_w_matrix, box_w_matrix)

box_h_matrix = np.reshape(boxes[:, 1].repeat(k), (n, k))

cluster_h_matrix = np.reshape(np.tile(clusters[:, 1], (1, n)), (n, k))

min_h_matrix = np.minimum(cluster_h_matrix, box_h_matrix)

inter_area = np.multiply(min_w_matrix, min_h_matrix)

result = inter_area / (box_area + cluster_area - inter_area)

return result

def avg_iou(self, boxes, clusters):

accuracy = np.mean([np.max(self.iou(boxes, clusters), axis=1)])

return accuracy

def kmeans(self, boxes, k, dist=np.median):

box_number = boxes.shape[0]

distances = np.empty((box_number, k))

last_nearest = np.zeros((box_number,))

np.random.seed()

clusters = boxes[np.random.choice(

box_number, k, replace=False)] # init k clusters

while True:

distances = 1 - self.iou(boxes, clusters)

current_nearest = np.argmin(distances, axis=1)

if (last_nearest == current_nearest).all():

break # clusters won't change

for cluster in range(k):

clusters[cluster] = dist( # update clusters

boxes[current_nearest == cluster], axis=0)

last_nearest = current_nearest

return clusters

def result2txt(self, data):

f = open(self.anchor_save, 'w')

row = np.shape(data)[0]

for i in range(row):

if i == 0:

x_y = "%f,%f" % (data[i][0], data[i][1])

else:

x_y = ", %f,%f" % (data[i][0], data[i][1])

f.write(x_y)

f.close()

def txt2boxes(self):

assert os.path.isdir(self.filename), "The path does not exist"

dirs = [os.path.join(self.filename, filename) for filename in os.listdir(self.filename) if os.path.isdir(self.filename + filename)]

dataSet = []

for dirname in dirs:

for data_dir_file in os.listdir(dirname):

if data_dir_file == "xml":

data_dir_file = os.path.join(dirname, data_dir_file)

xml_filenames = [xml_filename for xml_filename in os.listdir(data_dir_file) if

xml_filename.endswith("xml")]

for xml_filename in xml_filenames:

tree = ET.parse(os.path.join(data_dir_file, xml_filename))

root = tree.getroot()

width = int(root.find("size")[0].text)

height = int(root.find("size")[1].text)

for object in root.findall("object"):

width = (int(object[4][2].text)- int(object[4][0].text)) / width

height = (int(object[4][3].text) - int(object[4][1].text)) / height

dataSet.append([width, height])

result = np.array(dataSet)

return result

def txt2clusters(self):

all_boxes = self.txt2boxes()

result = self.kmeans(all_boxes, k=self.cluster_number)

result = result[np.lexsort(result.T[0, None])]

self.result2txt(result)

print("K anchors:\n {}".format(result))

print("Accuracy: {:.2f}%".format(

self.avg_iou(all_boxes, result) * 100))

def get_anchor(anchors_flie, input_sise):

yolo_anchors = []

yolo_anchor_masks = np.array([[6, 7, 8], [4, 5, 6], [1, 2, 3]])

with open(anchors_flie) as f:

anchors = f.readline()

for anchor in anchors.split(", "):

w = float(anchor.split(",")[0])

h = float(anchor.split(",")[1])

yolo_anchors.append((w, h))

yolo_anchors = np.array(yolo_anchors, np.float32) / input_sise

return yolo_anchors, yolo_anchor_masks

if __name__ == "__main__":

cluster_number = 9

filename = "./data/"

anchor_save = "./data/anchors.txt"

kmeans = YOLO_Kmeans(cluster_number, filename, anchor_save)

kmeans.txt2clusters()

3.3.2、data_generator

我的数据集文件如下:target 为一个目标,里面包含两个文件夹 img用来存放图片, xml保xml文件,label_list.txt 为类别名称

import numpy as np

import tensorflow as tf

import os

import cv2 as cv

import xml.etree.ElementTree as ET

def transform_images(x_train, size):

# 裁剪图片大小,并归一化

x_train = tf.image.resize(x_train, (size, size)) / 255.0

return x_train

def preprocess_true_boxes(true_boxes, input_shape, anchors, num_classes):

"""

Preprocess true boxes to training input format

Args:

true_boxes: array, shape=(m, T, 5) 相对 x_min, y_min, x_max, y_max, class_id .

input_shape: array-like, hw, multiples of 32

anchors: array, shape=(N, 2), wh

num_classes: integer

Returns:

y_true: list of array, shape like yolo_outputs, xywh are reletive value

"""

assert (true_boxes[..., 4] < num_classes).all(), 'class id must be less than num_classes'

num_layers = len(anchors) // 3 # default setting

anchor_mask = [[6, 7, 8], [3, 4, 5], [0, 1, 2]] if num_layers == 3 else [[3, 4, 5], [1, 2, 3]]

true_boxes = np.array(true_boxes, dtype='float32')

input_shape = np.array(input_shape, dtype='int32')

boxes_xy = (true_boxes[..., 0:2] + true_boxes[..., 2:4]) / 2. # 中心点坐标

boxes_wh = true_boxes[..., 2:4] - true_boxes[..., 0:2] # w,h

true_boxes[..., 0:2] = boxes_xy

true_boxes[..., 2:4] = boxes_wh

m = true_boxes.shape[0]

grid_shapes = [input_shape // {0: 32, 1: 16, 2: 8}[l] for l in range(num_layers)]

y_true = [np.zeros((m, grid_shapes[l][0], grid_shapes[l][1], len(anchor_mask[l]), 5 + num_classes),

dtype='float32') for l in range(num_layers)]

# Expand dim to apply broadcasting.

anchors = np.expand_dims(anchors, 0)

anchor_maxes = anchors / 2.

anchor_mins = -anchor_maxes

valid_mask = boxes_wh[..., 0] > 0

for b in range(m):

# Discard zero rows.

wh = boxes_wh[b, valid_mask[b]]

if len(wh) == 0: continue

# Expand dim to apply broadcasting.

wh = np.expand_dims(wh, -2)

box_maxes = wh / 2.

box_mins = -box_maxes

intersect_mins = np.maximum(box_mins, anchor_mins)

intersect_maxes = np.minimum(box_maxes, anchor_maxes)

intersect_wh = np.maximum(intersect_maxes - intersect_mins, 0.)

intersect_area = intersect_wh[..., 0] * intersect_wh[..., 1]

box_area = wh[..., 0] * wh[..., 1]

anchor_area = anchors[..., 0] * anchors[..., 1]

iou = intersect_area / (box_area + anchor_area - intersect_area)

# Find best anchor for each true box

best_anchor = np.argmax(iou, axis=-1)

for t, n in enumerate(best_anchor):

for l in range(num_layers):

if n in anchor_mask[l]:

i = np.floor(true_boxes[b, t, 0] * grid_shapes[l][1]).astype('int32')

j = np.floor(true_boxes[b, t, 1] * grid_shapes[l][0]).astype('int32')

k = anchor_mask[l].index(n)

c = true_boxes[b, t, 4].astype('int32')

y_true[l][b, j, i, k, 0:4] = true_boxes[b, t, 0:4]

y_true[l][b, j, i, k, 4] = 1

# 置信度为1,表示有物体 ont-hot 编码

y_true[l][b, j, i, k, 5 + c] = 1

# 3 x [batchsize, grid, grid, 3, 25]

'''

:return

grid: 在第几个grid输出的什么点位

3 : 第几套anchor,一共三套,每一套3个anchor,grid=13x13,就对应第一套

25 : 0-4表示 以416为基数的相对值

'''

return y_true

def get_data_from_file(path, yolo_anchors, max_box_num, input_szie):

assert os.path.isdir(path), "The path does not exist"

label_list = [label_name.strip() for label_name in open(os.path.join(path, 'label_list.names')).readlines()]

num_classes = len(label_list)

all_data_image = []

all_data_label = []

dirs = [os.path.join(path,filename) for filename in os.listdir(path) if os.path.isdir(os.path.join(path, filename))]

for dirname in dirs:

for data_dir_file in os.listdir(dirname):

if data_dir_file == "img":

data_dir_file = os.path.join(dirname, data_dir_file)

for img_filename in os.listdir(data_dir_file):

img = cv.imread(os.path.join(data_dir_file, img_filename))

# 图片归一化

img = cv.resize(img, (input_szie, input_szie)) / 255.0

all_data_image.append(img)

if data_dir_file == "xml":

data_dir_file = os.path.join(dirname, data_dir_file)

xml_filenames = [xml_filename for xml_filename in os.listdir(data_dir_file) if xml_filename.endswith("xml")]

for xml_filename in xml_filenames:

tree = ET.parse(os.path.join(data_dir_file, xml_filename))

root = tree.getroot()

boxes_list = []

width = int(root.find("size")[0].text)

height = int(root.find("size")[1].text)

for object in root.findall("object"):

labelname = object[0].text

# 转换为相对坐标

xmin = float(object[4][0].text) / width

ymin = float(object[4][1].text) / height

xmax = float(object[4][2].text) / width

ymax = float(object[4][3].text) / height

label = label_list.index(labelname)

boxes_list.append(np.array((xmin, ymin, xmax, ymax, label)).astype(np.float64))

boxes_arr = np.stack(boxes_list)

paddings = [[0, max_box_num - np.shape(boxes_arr)[0]], [0, 0]]

boxes = np.pad(boxes_arr, paddings)

all_data_label.append(boxes)

all_data_image = np.stack(all_data_image, axis=0)

all_data_label = np.stack(all_data_label, axis=0)

# 三个不同尺寸的数据

all_data_label = preprocess_true_boxes(all_data_label, (input_szie, input_szie), yolo_anchors, num_classes)

return all_data_image, all_data_label, num_classes

class DatasetGenerator():

def __init__(self, datas, shuffle, batch_size):

self._shuffle = shuffle

self._batch_size = batch_size

self._indicator = 0

self._data = datas[0]

self._labels_32 = datas[1][0]

self._labels_16 = datas[1][1]

self._labels_8 = datas[1][2]

self.count = self._data.shape[0]

def __iter__(self):

return self

def __next__(self):

return self._next_batch()

def _shuffle_data(self):

p = np.random.permutation(self.count)

self._data = self._data[p]

self._labels_32 = self._labels_32[p]

self._labels_16 = self._labels_16[p]

self._labels_8 = self._labels_8[p]

def _next_batch(self):

end_indicator = self._indicator + self._batch_size

if end_indicator > self.count:

if self._shuffle:

self._shuffle_data()

self._indicator = 0

end_indicator = self._batch_size

else:

self._indicator = 0

end_indicator = self._batch_size

if end_indicator > self.count:

raise StopIteration

batch_data = self._data[self._indicator: end_indicator]

batch_labels_32 = self._labels_32[self._indicator: end_indicator]

batch_labels_16 = self._labels_16[self._indicator: end_indicator]

batch_labels_8 = self._labels_8[self._indicator: end_indicator]

self._indicator = end_indicator

return batch_data, (batch_labels_32, batch_labels_16, batch_labels_8)

3.4、划分训练集和测试集

import numpy as np

def split_data(datas, lables, split_rate):

data_count = len(datas)

split_num = int(data_count * split_rate)

p = np.random.permutation(data_count)

labels_32 = lables[0]

labels_16 = lables[1]

labels_8 = lables[2]

datas = datas[p]

labels_32 = labels_32[p]

labels_16 = labels_16[p]

labels_8 = labels_8[p]

val_dates = datas[:split_num]

val_labels_32 = labels_32[:split_num]

val_labels_16 = labels_16[:split_num]

val_labels_8 = labels_8[:split_num]

tarin_dates = datas[split_num:85]

train_labels_32 = labels_32[split_num:85]

train_labels_16 = labels_16[split_num:85]

train_labels_8 = labels_8[split_num:85]

return tarin_dates, [train_labels_32, train_labels_16, train_labels_8], val_dates, [val_labels_32, val_labels_16, val_labels_8]

3.5、网络训练

os.environ['CUDA_VISIBLE_DEVICES'] = '0, 1, 2, 3'

def setup_model(yolo_anchors, yolo_anchor_masks, num_classes, lreaning_rate):

model = network.YoloV3((416, 416, 3), classes=num_classes)

optimizer = tf.keras.optimizers.Adam(learning_rate=lreaning_rate)

loss = [utils.YoloLoss(yolo_anchors[mask], num_classes=num_classes) for mask in yolo_anchor_masks]

model.compile(optimizer=optimizer, loss=loss)

return model, loss

def train():

input_size = 416 # 输入图片大小

learning_rate = 1e-3 # 学习率

batch_size = 8 # 每批数据大小

epochs = 1 # 训练总轮数

datafile = "./dataset/data" # 数据集路径

max_box_num = 100 # 一张图片中最多目标数

model_filepath = "./model_filepath/" # 模型保存位置

checkpoint_filepath = "checkpoint_filepath/" # 权重保存位置

log_path = model_filepath + "log_path/" # 日志文件保存位置

load_weights = True # 是否加载预训练权重

split_rate = 0.1 # 数据集划分比率

yolo_anchors, yolo_anchor_masks = utils.get_anchor('./dataset/data/anchors.txt', input_size)

all_data_image, all_data_label, num_classes = data_generator.get_data_from_file(datafile, yolo_anchors, max_box_num, input_size)

tarin_data_image, train_data_label, val_data_image, val_data_label = split_data.split_data(all_data_image, all_data_label, split_rate)

trian_Generator = data_generator.DatasetGenerator((tarin_data_image, train_data_label), False, batch_size)

val_Generator = data_generator.DatasetGenerator((val_data_image, val_data_label), False, batch_size)

use_gpu = True

if use_gpu:

gpus = tf.config.experimental.list_physical_devices(device_type='GPU')

if gpus:

for gpu in gpus:

tf.config.experimental.set_memory_growth(device=gpu, enable=True)

tf.print(gpu)

else:

os.environ["CUDA_VISIBLE_DEVICE"] = "-1"

else:

os.environ["CUDA_VISIBLE_DEVICE"] = "-1"

model, loss = setup_model(yolo_anchors, yolo_anchor_masks, num_classes, learning_rate)

model.summary()

if load_weights:

model.load_weights(checkpoint_filepath)

cp_callback = tf.keras.callbacks.ModelCheckpoint(

filepath=checkpoint_filepath, # 文件路径

save_best_only=True, # 保存最好的

save_weights_only=True, # 只保存参数

monitor='val_loss', # 需要监视的值

mode='min', # 模式

save_freq=1, # CheckPoint之间的间隔的epoch数

)

log = tf.keras.callbacks.TensorBoard(log_dir=log_path)

reduce_lr = tf.keras.callbacks.ReduceLROnPlateau(monitor='val_loss', factor=0.1, patience=3, verbose=1)

early_stopping = tf.keras.callbacks.EarlyStopping(monitor='val_loss', min_delta=0, patience=10, verbose=1)

history = model.fit(

trian_Generator,

epochs=epochs,

steps_per_epoch=trian_Generator.count // batch_size + 1,

validation_data=val_Generator,

validation_steps=val_Generator.count // batch_size + 1,

callbacks=[cp_callback, log, reduce_lr, early_stopping]

)

model.save(model_filepath)

export_frozen_graph.export_frozen_graph(model, model_filepath + "frozen_graph.pb", (input_size, input_size, 3))

if __name__ == '__main__':

# try:

# app.run(main)

# except SystemExit:

# pass

main()

TensorBoard是一个可视化工具,它可以用来展示网络图、张量的指标变化、张量的分布情况等。进入logging文件夹的上一层文件夹,在DOS窗口运行命令:

tensorboard --logdir=./log_path

在浏览器输入网址:http://localhost:6006,或者输入上图提示的网址,即可查看生成图。

3.5、转为graph

将模型转为graph,为了后续cv加载模式使用

import tensorflow as tf

from tensorflow.python.framework.convert_to_constants import convert_variables_to_constants_v2

def export_frozen_graph(model, name, input_size) :

f = tf.function(lambda x: model(x))

f = f.get_concrete_function(x=tf.TensorSpec(shape=[None, input_size[0], input_size[1], input_size[2]], dtype=tf.float32))

f2 = convert_variables_to_constants_v2(f)

graph_def = f2.graph.as_graph_def()

# Export frozen graph

with tf.io.gfile.GFile(name, 'wb') as f:

f.write(graph_def.SerializeToString())

3.6、网络预测

3.6.1、加载模型

if __name__ == '__main__':

input_size = 416

learning_rate = 1e-5

batch_size = 8

model_filepath ="./model_filepath/"

checkpoint_filepath = model_filepath + "checkpoint_filepath/"

model = YoloV3(416, 1)

model.load_weights(checkpoint_filepath)

model.summary()

img = cv2.imread("data/target/img/0.jpg")

img = cv2.resize(img, (416, 416))

img = np.expand_dims(img, axis=0)

out = model.predict(img)

3.6.2、nms

def yolo_nms(outputs, classes):

# boxes, conf, type

b, c, t = [], [], []

for o in outputs:

boxes = o[..., 0:4]

b.append(tf.reshape(boxes, (tf.shape(boxes)[0], -1, tf.shape(boxes)[-1])))

conf = o[..., 4:5]

c.append(tf.reshape(conf, (tf.shape(conf)[0], -1, tf.shape(conf)[-1])))

type = o[..., 5:]

t.append(tf.reshape(type, (tf.shape(type)[0], -1, tf.shape(type)[-1])))

bbox = tf.concat(b, axis=1) # (1, 13*13*3+26*26*3+52*52*3, 4)

confidence = tf.concat(c, axis=1) # (1, 13*13*3+26*26*3+52*52*3, 1)

class_probs = tf.concat(t, axis=1) # (1, 13*13*3+26*26*3+52*52*3, 80)

if classes == 1:

scores = confidence

else:

scores = confidence * class_probs # (1, 13*13*3+26*26*3+52*52*3, 80)

# 删除0维

dscores = tf.squeeze(scores, axis=0) # (13*13*3+26*26*3+52*52*3, 80)

scores = tf.reduce_max(dscores,[1]) # (13*13*3+26*26*3+52*52*3)

bbox = tf.reshape(bbox, (-1, 4)) # (13*13*3+26*26*3+52*52*3, 4)

classes = tf.argmax(dscores, 1)

# 索引, 值

selected_indices, selected_scores = tf.image.non_max_suppression_with_scores(

boxes=bbox,

scores=scores,

max_output_size=100,

iou_threshold=0.5,

score_threshold=0.5,

soft_nms_sigma=0.5

)

num_valid_nms_boxes = tf.shape(selected_indices)[0]

selected_indices = tf.concat([selected_indices,tf.zeros(100-num_valid_nms_boxes, dtype=tf.int32)], 0)

selected_scores = tf.concat([selected_scores, tf.zeros(100-num_valid_nms_boxes, dtype=tf.float32)], -1)

boxes = tf.gather(bbox, selected_indices)

boxes = tf.expand_dims(boxes, axis=0)

scores = selected_scores

scores = tf.expand_dims(scores, axis=0)

classes = tf.gather(classes, selected_indices)

classes = tf.expand_dims(classes, axis=0)

valid_detections = num_valid_nms_boxes

valid_detections = tf.expand_dims(valid_detections, axis=0)

return boxes, scores, classes, valid_detections

3.6.3、画出box

def draw_box(img, classes):

boxes, classid = classes[..., 0:4], classes[..., 4:]

img_size = np.array((img.shape[1], img.shape[0]))

for i in range(len(boxes)):

print(boxes[i])

x1y1 = tuple(((np.array(boxes[i][0:2])) * img_size).astype(np.int32))

print(x1y1)

x2y2 = tuple(((np.array(boxes[i][2:4])) * img_size).astype(np.int32))

img = cv.rectangle(img, x1y1, x2y2, (255, 0, 0), 2)

img = cv.putText(img, '{}'.format(classid[i]),x1y1, cv.FONT_HERSHEY_COMPLEX_SMALL, 1, (0, 0, 255), 2)

cv.imshow("box", img)

cv.waitKey()

四、opencv 代码实现

#include 五、问题总结

更新中

参考

论文原文:https://pjreddie.com/media/files/papers/YOLOv3.pdf

yolo详解:https://blog.csdn.net/monk1992/article/details/82346138

YOLOv3网络结构细致解析:https://zhuanlan.zhihu.com/p/162043754

YOLOv3详解:https://www.jianshu.com/p/043966013dde

Yolo三部曲解读——Yolov3:https://zhuanlan.zhihu.com/p/76802514

Darknet53网络结构及代码实现:https://blog.csdn.net/weixin_48167570/article/details/120688156

一阶段目标检测器-RetinaNet网络理解:https://zhuanlan.zhihu.com/p/410436667

锚框(anchor box)/先验框(prior bounding box)概念介绍及其生成:https://blog.csdn.net/qq_46110834/article/details/111410923

YOLOv3数据输入:https://blog.csdn.net/weixin_42078618/article/details/85001224

链接一:https://github.com/xiao9616/yolo4_tensorflow2

链接二:https://github.com/zzh8829/yolov3-tf2