Complete Steps for Installing a Hadoop Cluster on CentOS 7 (Super Detailed, Personally Tested and Working)

1. Install three CentOS 7 servers

1.1. Set the hostnames to hadoop01, hadoop02 and hadoop03 (run each command on its own server)

hostnamectl set-hostname hadoop01

hostnamectl set-hostname hadoop02

hostnamectl set-hostname hadoop03

1.2. Edit the hosts file

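Run vi /etc/hosts and append the following lines to the end of the file, replacing each bracketed placeholder with that server's actual IP address:

[hadoop01 IP] hadoop01

[hadoop02 IP] hadoop02

[hadoop03 IP] hadoop03

1.3. Disable the firewall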

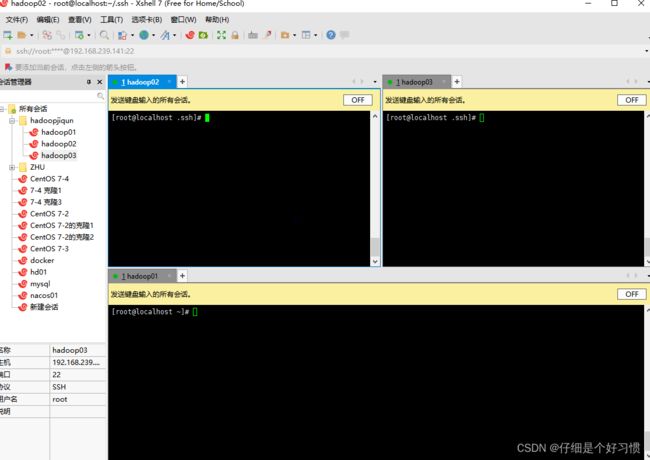

systemctl stop firewalld

systemctl disable firewalld

2. In Xshell, click Tools and select "Send Key Input to All Sessions"

2.1. Change the state of all windows to ON

3. On hadoop01, enter the following commands

3.1. Generate an SSH public/private key pair without a passphrase; just press Enter at every prompt

ssh-keygen -t rsa -P ''

3.2. Copy the public key to hadoop01, hadoop02 and hadoop03; type yes, then enter the password when prompted

ssh-copy-id hadoop01

ssh-copy-id hadoop02

ssh-copy-id hadoop03

4. Verify that the steps above succeeded

4.1. On hadoop02 and hadoop03, enter the following commands; the .ssh directory should now contain an authorized_keys file

cd .ssh/

ls

4.2. On hadoop01, enter the following commands; each login should succeed without asking for a password

ssh hadoop02

exit

ssh hadoop03

exit

5. Building on the setup from step 2, change the state of the hadoop02 and hadoop03 windows to OFF

5.1. Enter the following commands, the same as in step 3

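ssh-keygen -t rsa -P ''

ssh-copy-id hadoop01

ssh-copy-id hadoop02

ssh-copy-id hadoop03

5.2. After all of the above is done, change the state of the hadoop01, hadoop02 and hadoop03 windows to OFF, then press Ctrl+L in any window to clear the screen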
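Later steps depend on passwordless SSH between all three machines. start-dfs.sh starts remote daemons over SSH, and the sshfence method configured in hdfs-site.xml logs in to the other NameNode. You can confirm this with the following minimal Bash sketch. It is not part of the original procedure, and the function name check_passwordless_ssh is hypothetical. It relies only on standard OpenSSH client options: BatchMode makes ssh fail instead of prompting for a password.

```shell
#!/usr/bin/env bash
# check_passwordless_ssh HOST...
# Tries a non-interactive login to each host and prints "OK   host" or "FAIL host".
# BatchMode=yes makes ssh fail instead of prompting, so a host that still asks
# for a password (or whose host key was never accepted) is reported as FAIL.
check_passwordless_ssh() {
  local host failed=0
  for host in "$@"; do
    # -n: do not read stdin; "true" is the remote command and simply exits 0
    if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=$((failed + 1))
    fi
  done
  # exit status is 0 only if every host succeeded
  return $(( failed > 0 ))
}
```

After defining the function, run `check_passwordless_ssh hadoop01 hadoop02 hadoop03` on each of the three machines. Every line should start with OK. A FAIL usually means that ssh-copy-id was skipped for that host or its host key was never accepted with yes.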

6. Install chrony

yum -y install chrony

7. Install wget (together with gcc and vim)

yum install -y gcc vim wget

8. Configure chrony

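vim /etc/chrony.conf

8.1. Comment out the line server 0.centos.pool.ntp.org iburst (and likewise the other default centos.pool.ntp.org server lines), then add the following lines: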

server ntp1.aliyun.com

server ntp2.aliyun.com

server ntp3.aliyun.com

9. Start chrony

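systemctl start chronyd

10. Install psmisc (it provides the fuser command, which the sshfence fencing method configured later relies on)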

yum install -y psmisc

11. Back up the original yum repository file

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

12. Download the Aliyun repository file

wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

13. Clear and rebuild the yum cache

yum clean all

yum makecache

14. Open Xftp and drag the JDK package into the /opt folder on each of the three machines, then run the following commands to install the JDK

cd /opt

tar -zxf jdk-8u111-linux-x64.tar.gz

mkdir soft

mv jdk1.8.0_111/ soft/jdk180

14.1. Configure the environment variables

vim /etc/profile

Append the following lines to the end of the file:

#java env

export JAVA_HOME=/opt/soft/jdk180

export PATH=$JAVA_HOME/bin:$PATH

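export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

Reload the profile and check the Java version:

source /etc/profile

java -version

15. Open Xftp and drag the ZooKeeper package into the /opt folder on each of the three machines, then run the following commands to install ZooKeeper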

tar -zxf zookeeper-3.4.5-cdh5.14.2.tar.gz

mv zookeeper-3.4.5-cdh5.14.2 soft/zk345

15.1. Edit the zoo.cfg file

cd soft/zk345/conf/

cp zoo_sample.cfg zoo.cfg

vim zoo.cfg

Change dataDir to /opt/soft/zk345/datas:

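dataDir=/opt/soft/zk345/datas

Then append the following lines to the end of the file. server.1, server.2 and server.3 must be the IP addresses of hadoop01, hadoop02 and hadoop03 respectively, so replace the example addresses with your own: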

server.1=192.168.239.137:2888:3888

server.2=192.168.239.141:2888:3888

server.3=192.168.239.142:2888:3888

16. Create the datas directory

cd /opt/soft/zk345/

mkdir datas

17. Change the state of the hadoop01, hadoop02 and hadoop03 windows to ON

17.1. In the hadoop01 window, enter the following commands

cd datas

echo "1" > myid

cat myid

17.2. In the hadoop02 window, enter the following commands

cd datas

echo "2" > myid

cat myid

17.3. In the hadoop03 window, enter the following commands

cd datas

echo "3" > myid

cat myid

18. Change the state of the hadoop01, hadoop02 and hadoop03 windows to OFF

18.1. Configure the ZooKeeper environment variables

vim /etc/profile

Append the following lines to the end of the file:

#Zookeeper env

export ZOOKEEPER_HOME=/opt/soft/zk345

export PATH=$PATH:$ZOOKEEPER_HOME/bin

source /etc/profile

19. Start the ZooKeeper cluster

zkServer.sh start

20. Check with the jps command; the QuorumPeerMain process must be present

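jps

21. Open Xftp and drag the Hadoop package into the /opt folder on each of the three machines, then run the following commands to install the Hadoop cluster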

cd /opt

tar -zxf hadoop-2.6.0-cdh5.14.2.tar.gz

mv hadoop-2.6.0-cdh5.14.2 soft/hadoop260

cd soft/hadoop260/etc/hadoop

21.1. Create the required directories

mkdir -p /opt/soft/hadoop260/tmp

mkdir -p /opt/soft/hadoop260/dfs/journalnode_data

mkdir -p /opt/soft/hadoop260/dfs/edits

mkdir -p /opt/soft/hadoop260/dfs/datanode_data

mkdir -p /opt/soft/hadoop260/dfs/namenode_data

21.2. Configure hadoop-env.sh

vim hadoop-env.sh

Set JAVA_HOME and HADOOP_CONF_DIR to the following values:

export JAVA_HOME=/opt/soft/jdk180

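export HADOOP_CONF_DIR=/opt/soft/hadoop260/etc/hadoop

21.3. Configure core-site.xml: press Shift+G to jump to the end of the file and add the following properties inside the `<configuration>` element (remember to change the hostnames to your own!!!)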

vim core-site.xml

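`<property><name>fs.defaultFS</name><value>hdfs://hacluster</value></property>`

`<property><name>hadoop.tmp.dir</name><value>file:///opt/soft/hadoop260/tmp</value></property>`

`<property><name>io.file.buffer.size</name><value>4096</value></property>`

`<property><name>ha.zookeeper.quorum</name><value>hadoop01:2181,hadoop02:2181,hadoop03:2181</value></property>`

`<property><name>hadoop.proxyuser.root.hosts</name><value>*</value></property>`

`<property><name>hadoop.proxyuser.root.groups</name><value>*</value></property>`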
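Editing four XML files by hand on three machines is error-prone. The Bash sketch below is a minimal helper that is not part of the original procedure. It builds a complete `*-site.xml` document from plain "name value" lines. The function name props_to_xml is hypothetical. It assumes that values contain no spaces and no XML special characters (`&`, `<`, `>`), which holds for every value in this guide.

```shell
#!/usr/bin/env bash
# props_to_xml: reads "name value" pairs (one per line) on stdin and prints a
# complete Hadoop *-site.xml document on stdout.
# Assumptions: values contain no spaces and no & < > characters (not escaped);
# blank lines and lines starting with # are skipped.
props_to_xml() {
  local name value
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  # "|| [ -n "$name" ]" also processes a final line that lacks a trailing newline
  while read -r name value || [ -n "$name" ]; do
    case "$name" in
      '' | '#'*) continue ;;
    esac
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$name" "$value"
  done
  echo '</configuration>'
}

# Example: the core-site.xml properties from step 21.3, printed to stdout.
props_to_xml <<'EOF'
fs.defaultFS hdfs://hacluster
hadoop.tmp.dir file:///opt/soft/hadoop260/tmp
io.file.buffer.size 4096
ha.zookeeper.quorum hadoop01:2181,hadoop02:2181,hadoop03:2181
hadoop.proxyuser.root.hosts *
hadoop.proxyuser.root.groups *
EOF
```

To write a real file, redirect the output. For example, `props_to_xml < core-site.props > core-site.xml`, where core-site.props is a hypothetical text file holding the pairs. This overwrites the target, so it only replaces the vim edit if the existing file contains nothing but the empty `<configuration>` element. Back up the original first.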

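21.4. Configure hdfs-site.xml: add the following properties inside the `<configuration>` element at the end of the file (remember to change the hostnames!!!)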

vim hdfs-site.xml

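`<property><name>dfs.block.size</name><value>134217728</value></property>`

`<property><name>dfs.replication</name><value>3</value></property>`

`<property><name>dfs.name.dir</name><value>file:///opt/soft/hadoop260/dfs/namenode_data</value></property>`

`<property><name>dfs.data.dir</name><value>file:///opt/soft/hadoop260/dfs/datanode_data</value></property>`

`<property><name>dfs.webhdfs.enabled</name><value>true</value></property>`

`<property><name>dfs.datanode.max.transfer.threads</name><value>4096</value></property>`

`<property><name>dfs.nameservices</name><value>hacluster</value></property>`

`<property><name>dfs.ha.namenodes.hacluster</name><value>nn1,nn2</value></property>`

`<property><name>dfs.namenode.rpc-address.hacluster.nn1</name><value>hadoop01:9000</value></property>`

`<property><name>dfs.namenode.servicerpc-address.hacluster.nn1</name><value>hadoop01:53310</value></property>`

`<property><name>dfs.namenode.http-address.hacluster.nn1</name><value>hadoop01:50070</value></property>`

`<property><name>dfs.namenode.rpc-address.hacluster.nn2</name><value>hadoop02:9000</value></property>`

`<property><name>dfs.namenode.servicerpc-address.hacluster.nn2</name><value>hadoop02:53310</value></property>`

`<property><name>dfs.namenode.http-address.hacluster.nn2</name><value>hadoop02:50070</value></property>`

`<property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://hadoop01:8485;hadoop02:8485;hadoop03:8485/hacluster</value></property>`

`<property><name>dfs.journalnode.edits.dir</name><value>/opt/soft/hadoop260/dfs/journalnode_data</value></property>`

`<property><name>dfs.namenode.edits.dir</name><value>/opt/soft/hadoop260/dfs/edits</value></property>`

`<property><name>dfs.ha.automatic-failover.enabled</name><value>true</value></property>`

`<property><name>dfs.client.failover.proxy.provider.hacluster</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>`

`<property><name>dfs.ha.fencing.methods</name><value>sshfence</value></property>`

`<property><name>dfs.ha.fencing.ssh.private-key-files</name><value>/root/.ssh/id_rsa</value></property>`

`<property><name>dfs.permissions.enabled</name><value>false</value></property>`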
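The per-NameNode keys in hdfs-site.xml follow one pattern: `dfs.namenode.<kind>.<nameservice>.<namenode-id>`. Typing them by hand is where typos tend to creep in. The following minimal Bash sketch generates those keys, plus the matching `dfs.ha.namenodes.<nameservice>` list, from a nameservice name and a list of id:host pairs. It is an illustration and not part of the original guide. The function name print_nn_props is hypothetical, and the ports 9000, 53310 and 50070 are simply the values this guide uses.

```shell
#!/usr/bin/env bash
# print_nn_props NAMESERVICE ID:HOST...
# Prints dfs.ha.namenodes.<ns> plus the rpc, servicerpc and http address
# properties for each NameNode, one <property> element per line.
print_nn_props() {
  local ns="$1"; shift
  local pair id host kind_port ids=""

  # First pass: collect the NameNode ids in order, e.g. "nn1,nn2".
  for pair in "$@"; do
    ids="${ids:+$ids,}${pair%%:*}"
  done
  printf '<property><name>dfs.ha.namenodes.%s</name><value>%s</value></property>\n' "$ns" "$ids"

  # Second pass: three address properties per NameNode.
  for pair in "$@"; do
    id="${pair%%:*}"    # part before the first colon, e.g. nn1
    host="${pair#*:}"   # part after the first colon, e.g. hadoop01
    for kind_port in rpc-address:9000 servicerpc-address:53310 http-address:50070; do
      printf '<property><name>dfs.namenode.%s.%s.%s</name><value>%s:%s</value></property>\n' \
        "${kind_port%%:*}" "$ns" "$id" "$host" "${kind_port#*:}"
    done
  done
}

# Example: the two NameNodes of the "hacluster" nameservice used in this guide.
print_nn_props hacluster nn1:hadoop01 nn2:hadoop02
```

The output can be pasted inside `<configuration>` in place of the hand-typed lines. Adding a third id:host pair would extend both the id list and the address keys consistently.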

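21.5. Configure mapred-site.xml: add the following properties inside the `<configuration>` element at the end of the file (remember to change the hostnames!!!)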

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

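`<property><name>mapreduce.framework.name</name><value>yarn</value></property>`

`<property><name>mapreduce.jobhistory.address</name><value>hadoop01:10020</value></property>`

`<property><name>mapreduce.jobhistory.webapp.address</name><value>hadoop01:19888</value></property>`

`<property><name>mapreduce.job.ubertask.enable</name><value>true</value></property>`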

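21.6. Configure yarn-site.xml: add the following properties inside the `<configuration>` element at the end of the file (remember to change the hostnames!!!)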

vim yarn-site.xml

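`<property><name>yarn.resourcemanager.ha.enabled</name><value>true</value></property>`

`<property><name>yarn.resourcemanager.cluster-id</name><value>hayarn</value></property>`

`<property><name>yarn.resourcemanager.ha.rm-ids</name><value>rm1,rm2</value></property>`

`<property><name>yarn.resourcemanager.hostname.rm1</name><value>hadoop02</value></property>`

`<property><name>yarn.resourcemanager.hostname.rm2</name><value>hadoop03</value></property>`

`<property><name>yarn.resourcemanager.zk-address</name><value>hadoop01:2181,hadoop02:2181,hadoop03:2181</value></property>`

`<property><name>yarn.resourcemanager.recovery.enabled</name><value>true</value></property>`

`<property><name>yarn.resourcemanager.store.class</name><value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value></property>`

`<property><name>yarn.resourcemanager.hostname</name><value>hadoop03</value></property>`

`<property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property>`

`<property><name>yarn.log-aggregation-enable</name><value>true</value></property>`

`<property><name>yarn.log-aggregation.retain-seconds</name><value>604800</value></property>`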

22. Configure slaves

vim slaves

22.1. Press dd to delete the localhost line, then add the following:

hadoop01

hadoop02

hadoop03

23. Configure the Hadoop environment variables

vim /etc/profile

Append the following lines to the end of the file:

#hadoop env

export HADOOP_HOME=/opt/soft/hadoop260

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export HADOOP_INSTALL=$HADOOP_HOME

source /etc/profile

24. Start the Hadoop cluster

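24.1. Enter the following command on all three machines (a JournalNode must run on every host listed in dfs.namenode.shared.edits.dir)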

hadoop-daemon.sh start journalnode

24.2. Run jps; a new JournalNode process should appear

jps

24.3. Format the NameNode (on hadoop01 only; first change the state of the hadoop02 and hadoop03 windows to ON)

hdfs namenode -format

24.4. Copy the NameNode metadata from hadoop01 to the same location on hadoop02

scp -r /opt/soft/hadoop260/dfs/namenode_data/current/ root@hadoop02:/opt/soft/hadoop260/dfs/namenode_data

24.5. On hadoop01, format the ZooKeeper state for the failover controller (ZKFC)

hdfs zkfc -formatZK

24.6. On hadoop01, start the HDFS services, then run jps to check the processes

start-dfs.sh

jps

24.7. On hadoop03, start the YARN services, then run jps to check the processes

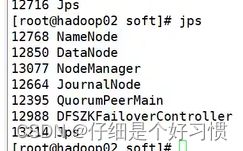

start-yarn.sh

jps

24.8. On hadoop02, run jps to check the processes, as shown in the figure
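Steps 20, 24.2, 24.9 and 24.10 all come down to looking for specific process names in the jps output. The minimal Bash sketch below automates that check. It is not from the original guide, and the function name require_procs is hypothetical. It reads jps output (lines such as "12345 NameNode") on stdin and reports any expected name that is missing.

```shell
#!/usr/bin/env bash
# require_procs NAME...: reads `jps` output on stdin and checks that every
# given process name is present. Prints "present: X" or "MISSING: X" per name
# and returns 0 only if none are missing.
require_procs() {
  local listing name missing=0
  listing=$(cat)   # capture stdin once so it can be searched for each name
  for name in "$@"; do
    # compare the second column exactly, so "NameNode" does not match "SecondaryNameNode"
    if printf '%s\n' "$listing" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "present: $name"
    else
      echo "MISSING: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

Example uses based on the processes named in this guide:

- `jps | require_procs QuorumPeerMain JournalNode` on every machine.
- `jps | require_procs JobHistoryServer` on hadoop01 after step 24.9.
- `jps | require_procs ResourceManager` on hadoop02 after step 24.10.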

24.9. On hadoop01, start the job history server; jps will then show an additional JobHistoryServer process

mr-jobhistory-daemon.sh start historyserver

jps

24.10. On hadoop02, start the ResourceManager; jps will then show an additional ResourceManager process

yarn-daemon.sh start resourcemanager

jps

25. Check the cluster status

25.1. On hadoop01, check the NameNode states: hdfs haadmin -getServiceState nn1 should print active, and nn2 should print standby

hdfs haadmin -getServiceState nn1

hdfs haadmin -getServiceState nn2

25.2. On hadoop03, check the ResourceManager states: yarn rmadmin -getServiceState rm1 should print standby, and rm2 should print active

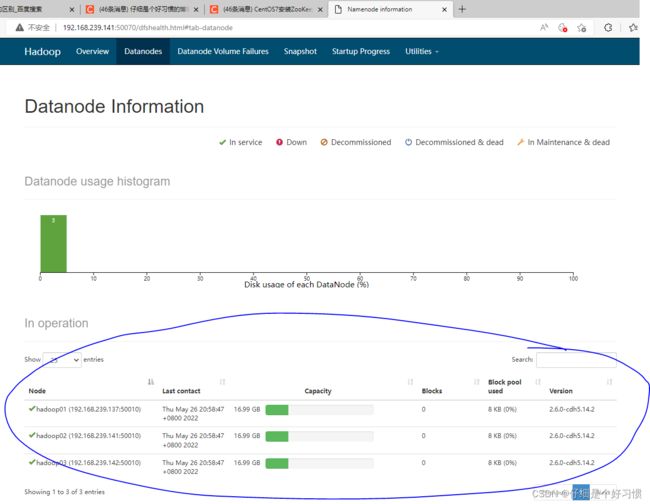

yarn rmadmin -getServiceState rm1

yarn rmadmin -getServiceState rm2

26. In a browser, open each NameNode's IP address on port 50070 (IP:50070) and compare the page with the following figures

26.1. At hadoop01's IP address, check that the state shows "active"

26.2. At hadoop02's IP address, check that the state shows "standby"

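26.3. Finally, click Datanodes in the top menu and check that three nodes are listed. If they are, the high-availability Hadoop cluster has been set up successfully!!!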
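The state checks in steps 25.1 and 25.2 can be scripted. Automatic failover is enabled, so which NameNode or ResourceManager is active can change over time, for example after a failover or restart. The minimal Bash sketch below is therefore less strict than the expected output above. It only requires that exactly one member of each pair reports active and that the others report standby. The function name expect_one_active is hypothetical and not part of the original guide.

```shell
#!/usr/bin/env bash
# expect_one_active STATE...: takes the states reported for the members of one
# HA pair (e.g. nn1 and nn2) and succeeds only if exactly one is "active" and
# every other one is "standby". Any other value (including an empty string
# from an unreachable daemon) is treated as a failure.
expect_one_active() {
  local state active=0 standby=0
  for state in "$@"; do
    case "$state" in
      active)  active=$((active + 1)) ;;
      standby) standby=$((standby + 1)) ;;
      *) echo "unexpected state: '$state'" >&2; return 1 ;;
    esac
  done
  if [ "$active" -eq 1 ]; then
    echo "OK: $active active, $standby standby"
  else
    echo "BAD: $active active, $standby standby" >&2
    return 1
  fi
}
```

Combined with the commands from step 25:

- On hadoop01, run `expect_one_active "$(hdfs haadmin -getServiceState nn1)" "$(hdfs haadmin -getServiceState nn2)"`.
- On hadoop03, run `expect_one_active "$(yarn rmadmin -getServiceState rm1)" "$(yarn rmadmin -getServiceState rm2)"`.

Command substitution captures only stdout, so warnings that these tools print on stderr do not affect the check.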