Python multiprocessing: debugging OSError: [Errno 12] Cannot allocate memory

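I'm facing the following problem. I'm trying to parallelize a function that updates a file, but I can't even start Pool() because of OSError: [Errno 12] Cannot allocate memory. I started looking around on the server, and it's not as if I'm using an old, weak machine or running out of actual memory.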

See htop:

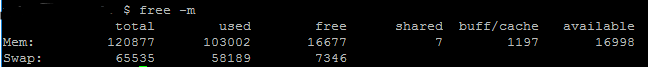

Also, free -m shows that I have plenty of RAM available, on top of roughly 7 GB of swap:

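The files I'm trying to work with aren't that big either. I'll paste my code (and the stack trace) below; the sizes are as follows:

The predictionmatrix DataFrame takes up about 80 MB according to pandas DataFrame.memory_usage()

The file geo.geojson is 2 MB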

How can I go about debugging this? What can I check, and how? Thanks for any tips/tricks!
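As a starting point for "what can I check", here is a minimal sketch that collects the numbers that usually matter when os.fork() is refused. It assumes a Linux server with /proc mounted. The helper names (`parse_kb_fields`, `read_text`, `memory_report`) are made up for illustration. htop and free mostly show resident and cached memory, whereas a fork may be rejected on the basis of committed virtual memory, so the overcommit mode, CommitLimit, Committed_AS and the parent's VmSize are worth a look. A cgroup limit or `ulimit -v` could be responsible as well, and this sketch does not cover those.

```python
import re

def parse_kb_fields(text, wanted):
    """Return {name: kB} for the requested 'Name:   123 kB' lines in text."""
    found = {}
    for line in text.splitlines():
        match = re.match(r"(\w+):\s+(\d+)\s*kB", line)
        if match and match.group(1) in wanted:
            found[match.group(1)] = int(match.group(2))
    return found

def read_text(path):
    """Read a /proc file, returning '' if it cannot be opened (e.g. non-Linux)."""
    try:
        with open(path) as handle:
            return handle.read()
    except OSError:
        return ""

def memory_report():
    """Collect system-wide and per-process figures relevant to a failed fork."""
    report = {
        # 0 = heuristic overcommit, 1 = always allow, 2 = strict accounting
        "overcommit_memory": read_text("/proc/sys/vm/overcommit_memory").strip(),
    }
    report.update(parse_kb_fields(
        read_text("/proc/meminfo"),
        {"MemAvailable", "SwapFree", "CommitLimit", "Committed_AS"},
    ))
    # VmSize is the virtual size of this (parent) process, VmRSS its resident part.
    report.update(parse_kb_fields(
        read_text("/proc/self/status"), {"VmSize", "VmRSS"},
    ))
    return report

if __name__ == "__main__":
    for name, value in memory_report().items():
        print(name, value)
```

Running this in the same interpreter (for example the same notebook kernel) just before calling Pool() gives the parent's VmSize at the moment of the fork. A VmSize far above VmRSS, or a Committed_AS close to CommitLimit with overcommit_memory set to 2, would be consistent with the error.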

Code:

    import json
    from functools import partial
    from multiprocessing import Pool

    def parallelUpdateJSON(paramMatch, predictionmatrix, data):
        for feature in data['features']:
            currentfeature = predictionmatrix[(predictionmatrix['SId']==feature['properties']['cellId']) & paramMatch]
            if (len(currentfeature) > 0):
                feature['properties'].update({"style": {"opacity": currentfeature.AllActivity.item()}})
            else:
                feature['properties'].update({"style": {"opacity": 0}})

    def writeGeoJSON(weekdaytopredict, hourtopredict, predictionmatrix):
        with open('geo.geojson') as f:
            data = json.load(f)
        paramMatch = (predictionmatrix['Hour']==hourtopredict) & (predictionmatrix['Weekday']==weekdaytopredict)
        pool = Pool()
        func = partial(parallelUpdateJSON, paramMatch, predictionmatrix)
        pool.map(func, data)
        pool.close()
        pool.join()
        with open('output.geojson', 'w') as outfile:
            json.dump(data, outfile)
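Separately from the memory error, two details of the map() call are worth checking. json.load returns a dict for a GeoJSON FeatureCollection, and iterating a dict yields its keys, so pool.map(func, data) would hand the workers key strings rather than features. Worker processes also operate on their own copies, so in-place updates to a feature never reach the parent; the values have to be returned. The sketch below illustrates both points without a pool. It uses a plain dict (`lookup`) as a stand-in for the DataFrame, and `opacity_for` and `apply_opacities` are hypothetical helpers, not part of the code above.

```python
def opacity_for(lookup, feature):
    """Return the opacity for one feature; 0 when the cell has no prediction."""
    return lookup.get(feature["properties"]["cellId"], 0)

def apply_opacities(data, opacities):
    """Write the returned opacities into the parent's copy of the features."""
    for feature, opacity in zip(data["features"], opacities):
        feature["properties"]["style"] = {"opacity": opacity}

# A tiny stand-in for the result of json.load() on geo.geojson.
data = {
    "type": "FeatureCollection",
    "features": [
        {"properties": {"cellId": 1}},
        {"properties": {"cellId": 2}},
    ],
}
lookup = {1: 0.7}  # hypothetical cellId -> AllActivity mapping

# Iterating the dict itself yields its key strings; this is what map() would see.
keys_seen = list(data)

# Iterating data["features"] yields the feature dicts. With a pool this line
# would become: pool.map(partial(opacity_for, lookup), data["features"])
opacities = [opacity_for(lookup, feature) for feature in data["features"]]
apply_opacities(data, opacities)
```

Returning a small value per feature also keeps the data sent back from the workers tiny, compared with shipping whole feature dicts in both directions.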

Stack trace:

    ---------------------------------------------------------------------------
    OSError                                   Traceback (most recent call last)
    in ()
    ----> 1 writeGeoJSON(6, 15, baseline)

    in writeGeoJSON(weekdaytopredict, hourtopredict, predictionmatrix)
         14 print("Start loop")
         15 paramMatch = (predictionmatrix['Hour']==hourtopredict) & (predictionmatrix['Weekday']==weekdaytopredict)
    ---> 16 pool = Pool(2)
         17 func = partial(parallelUpdateJSON, paramMatch, predictionmatrix)
         18 print(predictionmatrix.memory_usage())

    /usr/lib/python3.5/multiprocessing/context.py in Pool(self, processes, initializer, initargs, maxtasksperchild)
        116 from .pool import Pool
        117 return Pool(processes, initializer, initargs, maxtasksperchild,
    --> 118 context=self.get_context())
        119
        120 def RawValue(self, typecode_or_type, *args):

    /usr/lib/python3.5/multiprocessing/pool.py in __init__(self, processes, initializer, initargs, maxtasksperchild, context)
        166 self._processes = processes
        167 self._pool = []
    --> 168 self._repopulate_pool()
        169
        170 self._worker_handler = threading.Thread(

    /usr/lib/python3.5/multiprocessing/pool.py in _repopulate_pool(self)
        231 w.name = w.name.replace('Process', 'PoolWorker')
        232 w.daemon = True
    --> 233 w.start()
        234 util.debug('added worker')
        235

    /usr/lib/python3.5/multiprocessing/process.py in start(self)
        103 'daemonic processes are not allowed to have children'
        104 _cleanup()
    --> 105 self._popen = self._Popen(self)
        106 self._sentinel = self._popen.sentinel
        107 _children.add(self)

    /usr/lib/python3.5/multiprocessing/context.py in _Popen(process_obj)
        265 def _Popen(process_obj):
        266 from .popen_fork import Popen
    --> 267 return Popen(process_obj)
        268
        269 class SpawnProcess(process.BaseProcess):

    /usr/lib/python3.5/multiprocessing/popen_fork.py in __init__(self, process_obj)
         18 sys.stderr.flush()
         19 self.returncode = None
    ---> 20 self._launch(process_obj)
         21
         22 def duplicate_for_child(self, fd):

    /usr/lib/python3.5/multiprocessing/popen_fork.py in _launch(self, process_obj)
         65 code = 1
         66 parent_r, child_w = os.pipe()
    ---> 67 self.pid = os.fork()
         68 if self.pid == 0:
         69 try:

    OSError: [Errno 12] Cannot allocate memory

UPDATE

Following @robyschek's solution, I've updated my code to:

    global g_predictionmatrix

    def worker_init(predictionmatrix):
        global g_predictionmatrix
        g_predictionmatrix = predictionmatrix

    def parallelUpdateJSON(paramMatch, data_item):
        for feature in data_item['features']:
            currentfeature = predictionmatrix[(predictionmatrix['SId']==feature['properties']['cellId']) & paramMatch]
            if (len(currentfeature) > 0):
                feature['properties'].update({"style": {"opacity": currentfeature.AllActivity.item()}})
            else:
                feature['properties'].update({"style": {"opacity": 0}})

    def use_the_pool(data, paramMatch, predictionmatrix):
        pool = Pool(initializer=worker_init, initargs=(predictionmatrix,))
        func = partial(parallelUpdateJSON, paramMatch)
        pool.map(func, data)
        pool.close()
        pool.join()

    def writeGeoJSON(weekdaytopredict, hourtopredict, predictionmatrix):
        with open('geo.geojson') as f:
            data = json.load(f)
        paramMatch = (predictionmatrix['Hour']==hourtopredict) & (predictionmatrix['Weekday']==weekdaytopredict)
        use_the_pool(data, paramMatch, predictionmatrix)
        with open('trentino-grid.geojson', 'w') as outfile:
            json.dump(data, outfile)

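I still get the same error. Also, according to the documentation, map() should split my data into chunks, so I don't think it should copy my 80 MB rownum times. I could be wrong, though...

I also noticed that if I use a smaller input (~11 MB instead of 80 MB), I don't get the error. So I guess I'm trying to use too much memory, but I can't imagine how it gets from 80 MB to something that 16 GB of RAM can't handle.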
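On the question of what map() copies: as far as CPython's implementation goes, Pool.map pickles the callable together with each chunk of items, so anything bound into a functools.partial is serialized once per chunk, not once per row and not just once overall. The sketch below only measures pickle sizes and starts no processes. It uses `operator.getitem` and a large list as stand-ins for the worker function and the DataFrame, which is an assumption made purely for illustration.

```python
import operator
import pickle
from functools import partial

# Stand-in for the 80 MB DataFrame: a large list of integers.
big_table = list(range(200_000))

# Like partial(parallelUpdateJSON, paramMatch, predictionmatrix):
# the large object travels inside the callable.
bound = partial(operator.getitem, big_table)

# Like a worker that reads its data from a module-level global set by an
# initializer: only a reference to the function is pickled.
unbound = operator.getitem

bound_bytes = len(pickle.dumps(bound))      # includes the whole table
unbound_bytes = len(pickle.dumps(unbound))  # a few dozen bytes

print(bound_bytes, unbound_bytes)
```

This would explain extra memory traffic once tasks are running, but not the failure itself: the traceback ends in os.fork() while the pool is being created, before any task is pickled. Note also that the initializer approach only avoids the per-chunk copy if the worker reads g_predictionmatrix; the updated parallelUpdateJSON still refers to predictionmatrix.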