k8s resource monitoring: resource limits, Metrics-Server, HPA, Helm

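Table of contents

- I. k8s resource monitoring

- 1. k8s resource limits

- 1) Memory limits

- 2) CPU limits

- 3) Setting resource limits for a namespace

- 4) Setting a resource quota for a namespace

- 5) Configuring a Pod quota for a namespace

- 2. Deploying Metrics-Server

- 3. Deploying Dashboard

- By default Dashboard has no permission to operate on the cluster and must be authorized:

- 4. HPA example

- Creating a Horizontal Pod Autoscaler

- Increasing the load

- 5. Helm

- 1) Installing Helm

- 2) Adding third-party Chart repositories to Helm:

- 3) Building a local charts repository

- 4) Deploying nfs-client-provisioner with Helm

- 5) Deploying nginx-ingress with Helm

- 6) Deploying kubeapps with Helm to provide a web UI for Helm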

I. k8s resource monitoring

## Start the local registry (Harbor)

[root@server1 harbor]# docker-compose ps

[root@server1 harbor]# docker-compose start

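1. k8s resource limits

# Kubernetes allocates resources with two kinds of constraints: a request (the amount the scheduler reserves for the container) and a limit (the maximum the container may use).

1) Memory limits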

[root@server1 harbor]# docker search stress

[root@server1 harbor]# docker pull progrium/stress

[root@server1 harbor]# docker tag progrium/stress reg.westos.org/library/progrium/stress

[root@server1 harbor]# docker push reg.westos.org/library/progrium/stress

[root@server2 ~]# mkdir limit

[root@server2 ~]# cd limit/

[root@server2 limit]# vim pod.yaml    ## start a process that allocates 200M, and requests ...

[root@server2 limit]# kubectl delete -f pod.yaml --force

[root@server2 limit]# vim pod.yaml

memory: 300Mi    ## raise the limit to 300Mi and the pod starts successfully

[root@server2 limit]# kubectl apply -f pod.yaml

[root@server2 limit]# kubectl get pod

NAME READY STATUS RESTARTS AGE

memory-demo 1/1 Running 0 6s

2) CPU limits

[root@server2 limit]# vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: stress
    resources:
      limits:
        cpu: "10"
      requests:
        cpu: "5"
    args:
    - -c
    - "2"

[root@server2 limit]# kubectl apply -f pod.yaml

pod/cpu-demo created

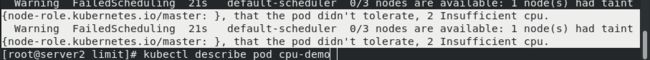

[root@server2 limit]# kubectl get pod    ## the pod requests 5 CPUs, but no schedulable node has more than 2, so scheduling fails

NAME READY STATUS RESTARTS AGE

cpu-demo 0/1 Pending 0 4s

[root@server2 limit]# kubectl describe pod cpu-demo

[root@server2 limit]# vim pod.yaml    ## with limits.cpu "2" and requests.cpu "1" the pod runs successfully

requests:

cpu: "1"

[root@server2 limit]# lscpu    # show CPU information

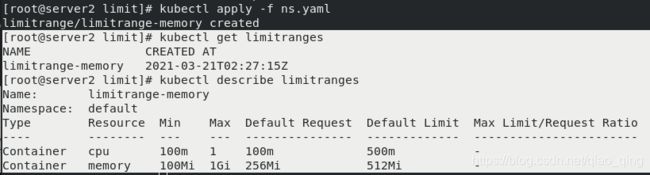

3) Setting resource limits for a namespace

[root@server2 limit]# vim ns.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container

[root@server2 limit]# kubectl apply -f ns.yaml

limitrange/limitrange-memory created

[root@server2 limit]# kubectl get limitranges

NAME CREATED AT

limitrange-memory 2021-03-21T02:27:15Z

[root@server2 limit]# kubectl describe limitranges

[root@server2 limit]# vim pod.yaml

[root@server2 limit]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: nginx

[root@server2 limit]# kubectl apply -f pod.yaml

[root@server2 limit]# kubectl get pod

NAME READY STATUS RESTARTS AGE

cpu-demo 1/1 Running 0 5s

[root@server2 limit]# kubectl describe pod cpu-demo    ## the new pod gets the namespace's default requests and limits

[root@server2 limit]# kubectl delete -f pod.yaml --force

[root@server2 limit]# vim pod.yaml

[root@server2 limit]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: nginx
    resources:
      limits:
        cpu: "2"
      requests:
        cpu: "0.1"

[root@server2 limit]# kubectl apply -f pod.yaml    ## the namespace's max CPU is 1 but the pod asks for a limit of 2, so it is rejected outright

Error from server (Forbidden): error when creating "pod.yaml": pods "cpu-demo" is forbidden: maximum cpu usage per Container is 1, but limit is 2

[root@server2 limit]# vim pod.yaml

[root@server2 limit]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: nginx
    resources:
      limits:
        cpu: "1"
        memory: "1Gi"
      requests:
        cpu: "0.1"

[root@server2 limit]# kubectl apply -f pod.yaml

pod/cpu-demo created

[root@server2 limit]# kubectl describe pod cpu-demo    ## when a pod sets only a limit, its request is set equal to that limit; when it sets neither, the LimitRange defaults apply
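The accept/reject behaviour seen above can be sketched in plain shell. This is only an illustration of the range check for a container's CPU limit, not how the API server implements it; the function names `to_millicores` and `cpu_limit_allowed` are made up for this example, and the real LimitRange admission also validates requests and memory.

```shell
# Convert a Kubernetes CPU quantity ("500m", "0.5", "2") to millicores.
to_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;
    *)  awk -v v="$1" 'BEGIN { printf "%d\n", v * 1000 + 0.5 }' ;;
  esac
}

# Return 0 if LIMIT lies within [MIN, MAX], mirroring the min/max of a
# LimitRange of type Container (hypothetical helper, CPU only).
cpu_limit_allowed() {  # usage: cpu_limit_allowed LIMIT MIN MAX
  lim_m=$(to_millicores "$1")
  min_m=$(to_millicores "$2")
  max_m=$(to_millicores "$3")
  [ "$lim_m" -ge "$min_m" ] && [ "$lim_m" -le "$max_m" ]
}

# The two cases from the walkthrough: min 0.1, max 1.
cpu_limit_allowed 2 0.1 1 || echo "limit 2 exceeds max 1: rejected"
cpu_limit_allowed 1 0.1 1 && echo "limit 1 is within [0.1, 1]: accepted"
```

Working in millicores avoids floating-point comparisons in the shell, which is why quantities are normalised first.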

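4) Setting a resource quota for a namespace

The ResourceQuota object created below adds the following constraints to the default namespace:

Every container must specify a memory request, a memory limit, a CPU request, and a CPU limit.

## If neither the namespace nor the pod sets these values, the pod cannot be created.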

[root@server2 limit]# kubectl delete -f ns.yaml --force

[root@server2 limit]# kubectl delete -f pod.yaml --force

[root@server2 limit]# vim quota.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi

[root@server2 limit]# vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: nginx

[root@server2 limit]# kubectl apply -f pod.yaml

Error from server (Forbidden): error when creating "pod.yaml": pods "cpu-demo" is forbidden: failed quota: mem-cpu-demo: must specify limits.cpu,limits.memory,requests.cpu,requests.memory

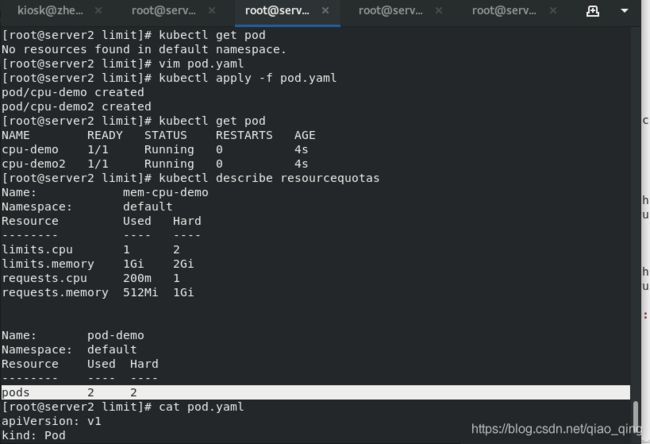
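For contrast with the rejected pod, here is a sketch of a manifest that declares all four values the quota asks for. The file name `quota-pod.yaml` and the specific numbers are assumptions chosen to fit inside the `mem-cpu-demo` quota; they do not come from the original walkthrough.

```shell
# Write a pod manifest whose single container sets requests and limits for
# both CPU and memory (file name and values are illustrative).
cat > quota-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: nginx
    resources:
      requests:
        cpu: 100m
        memory: 128Mi
      limits:
        cpu: 200m
        memory: 256Mi
EOF

# On a cluster where the quota is active, it could then be created with:
#   kubectl apply -f quota-pod.yaml
```

Another way to satisfy the quota is to keep the LimitRange from the previous step in place, so that pods without explicit values inherit the namespace defaults.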

5) Configuring a Pod quota for a namespace

[root@server2 limit]# cat quota.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: pod-demo
spec:
  hard:
    pods: "2"

[root@server2 limit]# kubectl apply -f quota.yaml

resourcequota/mem-cpu-demo created

resourcequota/pod-demo created

[root@server2 limit]# kubectl get resourcequotas

[root@server2 limit]# vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: nginx
---
apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo2
spec:
  containers:
  - name: cpu-demo
    image: nginx

[root@server2 limit]# kubectl apply -f pod.yaml

pod/cpu-demo created

pod/cpu-demo2 created

[root@server2 limit]# kubectl get pod

[root@server2 limit]# kubectl describe resourcequotas    ## 2 pods can run, and no more than 2

#### Clean up the environment

[root@server2 limit]# kubectl delete -f pod.yaml --force

[root@server2 limit]# kubectl delete -f ns.yaml --force

[root@server2 limit]# kubectl delete -f quota.yaml --force

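2. Deploying Metrics-Server

% Download: https://github.com/kubernetes-incubator/metrics-server

Container metrics come mainly from the cAdvisor service built into the kubelet. With Metrics-Server in place, users can access this monitoring data through the standard Kubernetes API.

Metrics Server is not part of kube-apiserver. It is deployed independently and served alongside kube-apiserver through the Aggregator plugin mechanism.

kube-aggregator is essentially a proxy server that selects a concrete API backend based on the URL.

Metrics-Server covers core metrics: it provides the metrics.k8s.io API and reports only CPU and memory usage for Nodes and Pods. Other custom metrics are handled by components such as Prometheus.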
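Because the data is exposed through the aggregated metrics.k8s.io API, it can also be read as raw JSON rather than through `kubectl top`. The sketch below only builds the request paths; `metrics_path` is a helper name invented for this example, and the `v1beta1` group version is the one I would expect for this Metrics-Server release, so treat it as an assumption to verify with `kubectl api-versions`.

```shell
# Build the Metrics API path for nodes, or for pods in a namespace.
metrics_path() {  # usage: metrics_path nodes | metrics_path pods [NAMESPACE]
  case "$1" in
    nodes) echo "/apis/metrics.k8s.io/v1beta1/nodes" ;;
    pods)  echo "/apis/metrics.k8s.io/v1beta1/namespaces/${2:-default}/pods" ;;
    *)     echo "unknown kind: $1" >&2; return 1 ;;
  esac
}

metrics_path nodes
metrics_path pods kube-system

# Once Metrics-Server is running, the raw data could be fetched with:
#   kubectl get --raw "$(metrics_path nodes)"
```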

[root@server1 harbor]# docker search metrics-server

[root@server1 harbor]# docker pull bitnami/metrics-server:0.4.2

[root@server1 harbor]# docker tag bitnami/metrics-server:0.4.2 reg.westos.org/library/metrics-server:0.4.2

[root@server1 harbor]# docker push reg.westos.org/library/metrics-server:0.4.2

[root@server2 ~]# mkdir metrics

[root@server2 ~]# cd metrics/

[root@server2 metrics]# pwd

/root/metrics

[root@server2 metrics]# ls

components.yaml

[root@server2 metrics]# vim components.yaml    # change the image

image: metrics-server:0.4.2

[root@server2 metrics]# kubectl apply -f components.yaml

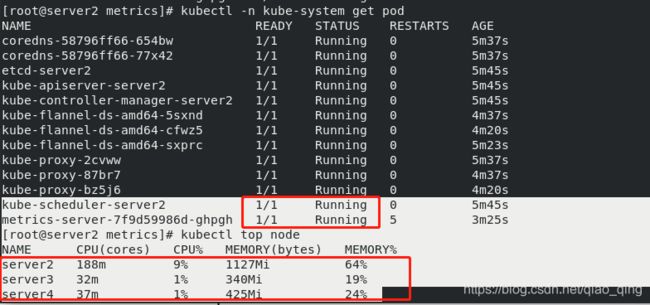

[root@server2 metrics]# kubectl -n kube-system get pod

NAME READY STATUS RESTARTS AGE

metrics-server-7f9d59986d-4mntz 0/1 Running 0 4s

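## The readiness probe does not pass; the logs show a certificate problem

[root@server2 metrics]# kubectl -n kube-system logs metrics-server-7f9d59986d-4mntz

Error: x509: certificate signed by unknown authority

[root@server2 metrics]# vim /var/lib/kubelet/config.yaml

# append at the end to enable serving-certificate bootstrapping for the kubelet

serverTlsBootstrap: true

[root@server2 metrics]# systemctl restart kubelet

## make the same change and restart kubelet on every node (server2, server3, server4)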

[root@server2 metrics]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-8rc7f 2s kubernetes.io/kubelet-serving system:node:server4 Pending

csr-pxr6x 12s kubernetes.io/kubelet-serving system:node:server3 Pending

csr-vqphb 22s kubernetes.io/kubelet-serving system:node:server2 Pending

[root@server2 metrics]# kubectl certificate approve csr-8rc7f

[root@server2 metrics]# kubectl certificate approve csr-pxr6x

[root@server2 metrics]# kubectl certificate approve csr-vqphb

[root@server2 metrics]# kubectl -n kube-system get pod    ## READY 1/1, as below, means the deployment succeeded

NAME READY STATUS RESTARTS AGE

metrics-server-7f9d59986d-ghpgh 1/1 Running 5 3m25s

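[root@server2 metrics]# kubectl top node    ## if this returns node metrics, the deployment succeeded

3. Deploying Dashboard

Dashboard gives users a visual web interface for viewing information about the current cluster. With Kubernetes Dashboard, users can deploy containerized applications, monitor application status, troubleshoot, and manage Kubernetes resources.

# Get the images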

[root@server1 harbor]# docker load -i dashboard-v2.2.0.tar

[root@server1 harbor]# docker load -i metrics-server-0.4.2.tar

[root@server1 harbor]# docker images

[root@server1 harbor]# docker tag kubernetesui/metrics-scraper:v1.0.6 reg.westos.org/kubernetesui/metrics-scraper:v1.0.6

[root@server1 harbor]# docker push reg.westos.org/kubernetesui/metrics-scraper:v1.0.6

[root@server1 harbor]# docker tag kubernetesui/dashboard:v2.2.0 reg.westos.org/kubernetesui/dashboard:v2.2.0

[root@server1 harbor]# docker push reg.westos.org/kubernetesui/dashboard:v2.2.0

[root@server2 metrics]# ls

components.yaml recommended.yaml

[root@server2 metrics]# kubectl apply -f recommended.yaml

[root@server2 metrics]# kubectl get ns

NAME STATUS AGE

default Active 46m

kube-node-lease Active 46m

kube-public Active 46m

kube-system Active 46m

kubernetes-dashboard Active 3s

[root@server2 metrics]# kubectl -n kubernetes-dashboard get pod

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-79c5968bdc-hxl64 1/1 Running 0 2m4s

kubernetes-dashboard-9f9799597-jpdvk 1/1 Running 0 2m4s

[root@server2 metrics]# kubectl -n kubernetes-dashboard describe svc kubernetes-dashboard

[root@server2 metrics]# kubectl -n kubernetes-dashboard edit svc kubernetes-dashboard

type: NodePort

[root@server2 metrics]# kubectl -n kubernetes-dashboard get svc    ## the type is now NodePort

[root@server2 metrics]# kubectl -n kubernetes-dashboard get secrets

kubernetes-dashboard-token-2f5wf kubernetes.io/service-account-token 3 5m32s

[root@server2 metrics]# kubectl -n kubernetes-dashboard describe secrets kubernetes-dashboard-token-2f5wf    ## show the token

%% Open https://172.25.3.2:31859 in a browser and enter the token

By default Dashboard has no permission to operate on the cluster and must be authorized:

[root@server2 metrics]# kubectl -n kubernetes-dashboard get sa

NAME SECRETS AGE

default 1 14m

kubernetes-dashboard 1 14m

[root@server2 metrics]# vim rbac.yaml

[root@server2 metrics]# cat rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

[root@server2 metrics]# kubectl apply -f rbac.yaml

%% Open https://172.25.3.2:31859 in the browser again and enter the token

4. HPA example

https://kubernetes.io/zh/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/

# Pull the images

[root@server1 ~]# docker search hpa-example

[root@server1 ~]# docker pull mirrorgooglecontainers/hpa-example

[root@server1 ~]# docker tag mirrorgooglecontainers/hpa-example:latest reg.westos.org/library/hpa-example:latest

[root@server1 ~]# docker push reg.westos.org/library/hpa-example:latest

[root@server1 ~]# docker pull busybox

[root@server1 ~]# docker tag busybox:latest reg.westos.org/library/busybox:latest

[root@server1 ~]# docker push reg.westos.org/library/busybox:latest

[root@server2 hpa]# vim hpa-apache1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  selector:
    matchLabels:
      run: php-apache
  replicas: 1
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - name: php-apache
        image: hpa-example
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    run: php-apache
spec:
  ports:
  - port: 80
  selector:
    run: php-apache

[root@server2 hpa]# kubectl apply -f hpa-apache1.yaml

[root@server2 hpa]# kubectl get pod

NAME READY STATUS RESTARTS AGE

php-apache-6cc67f7957-x5xht 1/1 Running 0 48s

[root@server2 hpa]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 87m

php-apache ClusterIP 10.102.117.65 <none> 80/TCP 57s

[root@server2 hpa]# curl 10.102.117.65

OK!

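Creating a Horizontal Pod Autoscaler

The HPA increases or decreases the number of Pod replicas (through the Deployment) to keep the average CPU utilization across all Pods at around 50%. Since each Pod requests 200 millicores of CPU, this corresponds to an average CPU usage of 100 millicores.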
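The scaling decision follows the formula given in the Kubernetes documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), bounded by the configured minimum and maximum. A minimal shell sketch of that arithmetic follows; `desired_replicas` is a name made up for this example, metrics are taken as integers (for instance CPU utilization in percent), and the real controller additionally applies a tolerance band and stabilization windows that are not modelled here.

```shell
# desired = ceil(current_replicas * current_metric / target_metric),
# then clamped to [min, max]. All arguments are non-negative integers.
desired_replicas() {  # usage: desired_replicas REPLICAS CURRENT TARGET MIN MAX
  want=$(( ($1 * $2 + $3 - 1) / $3 ))   # integer ceiling division
  if [ "$want" -lt "$4" ]; then want=$4; fi
  if [ "$want" -gt "$5" ]; then want=$5; fi
  echo "$want"
}

# One pod at 250% utilization against a 50% target, bounds 1..10:
desired_replicas 1 250 50 1 10
# Four idle pods at 10% utilization scale back down to the minimum:
desired_replicas 4 10 50 1 10
```

With the values used in this walkthrough (target 50%, min 1, max 10), a single pod running at 250% of its request would be scaled to 5 replicas.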

[root@server2 hpa]# kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10

[root@server2 hpa]# kubectl apply -f hpa-apache2.yaml

error: error validating "hpa-apache2.yaml": error validating data: apiVersion not set; if you choose to ignore these errors, turn validation off with --validate=false

[root@server2 hpa]# kubectl get hpa

No resources found in default namespace.

[root@server2 hpa]# vim hpa-apache2.yaml

[root@server2 hpa]# cat hpa-apache2.yaml

apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: hpa-example
spec:
  maxReplicas: 10
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        averageUtilization: 60
        type: Utilization
  - type: Resource
    resource:
      name: memory
      target:
        averageValue: 50Mi
        type: AverageValue

[root@server2 hpa]# kubectl apply -f hpa-apache2.yaml

horizontalpodautoscaler.autoscaling/hpa-example created

[root@server2 hpa]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-example Deployment/php-apache <unknown>/50Mi, <unknown>/60% 1 10 0 9s

[root@server2 hpa]# kubectl top pod

NAME CPU(cores) MEMORY(bytes)

php-apache-6cc67f7957-x5xht 1m 6Mi

[root@server2 hpa]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-example Deployment/php-apache 6397952/50Mi, 0%/60% 1 10 1 28s

[root@server2 hpa]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-example Deployment/php-apache 6414336/50Mi, 0%/60% 1 10 1 16s

php-apache Deployment/php-apache 0%/50% 1 10 1 3m

Increasing the load

[root@server2 hpa]# kubectl run -i --tty load-generator --rm --image=busybox --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"    ### keeps printing OK!

### In a second session on server2: the load is spread across the pods, at roughly 100m of CPU each

[root@server2 ~]# kubectl top pod

NAME CPU(cores) MEMORY(bytes)

load-generator 12m 0Mi

php-apache-6cc67f7957-48z5f 48m 8Mi

php-apache-6cc67f7957-4srg2 118m 8Mi

php-apache-6cc67f7957-52hml 78m 8Mi

php-apache-6cc67f7957-6d2pw 109m 8Mi

php-apache-6cc67f7957-dll52 130m 8Mi

php-apache-6cc67f7957-tpt5f 121m 8Mi

[root@server2 hpa]# kubectl get hpa

[root@server2 hpa]# kubectl delete -f hpa-apache1.yaml --force

[root@server2 hpa]# kubectl delete -f hpa-apache2.yaml --force

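5. Helm

Helm is the package manager for Kubernetes applications. It mainly manages Charts, much like yum on a Linux system.

The biggest difference between Helm v3 and v2 is that Tiller has been removed.

% Helm official site: https://helm.sh/docs/intro/ (the walkthrough below uses v3.4.1)

1) Installing Helm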

[root@server2 ~]# mkdir heml

[root@server2 ~]# cd heml/

[root@server2 heml]# ls

helm-v3.4.1-linux-amd64.tar.gz

[root@server2 heml]# tar zxf helm-v3.4.1-linux-amd64.tar.gz

[root@server2 heml]# ls

helm-v3.4.1-linux-amd64.tar.gz linux-amd64

[root@server2 heml]# cd linux-amd64/

[root@server2 linux-amd64]# ls

helm LICENSE README.md

[root@server2 linux-amd64]# mv helm /usr/local/bin/

[root@server2 heml]# echo "source <(helm completion bash)" >> ~/.bashrc    ## enable command completion

[root@server2 heml]# cd

[root@server2 ~]# source .bashrc

2) Adding third-party Chart repositories to Helm:

[root@server2 ~]# helm repo add stable https://mirror.azure.cn/kubernetes/charts/

[root@server2 ~]# helm repo add bitnami https://charts.bitnami.com/bitnami

[root@server2 heml]# helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts/

bitnami https://charts.bitnami.com/bitnami

[root@server2 heml]# helm repo remove stable

"stable" has been removed from your repositories

[root@server2 heml]# helm search repo nginx

[root@server2 heml]# helm pull bitnami/nginx

[root@server2 ~]# helm pull bitnami/nginx --version 8.7.0

[root@server2 ~]# ls

dashboard-v2.2.0.tar docker-ce heml kube-flannel.yml limit metrics nginx-8.7.0.tgz pod.yml

[root@server2 ~]# tar zxf nginx-8.7.0.tgz

[root@server2 ~]# ls

dashboard-v2.2.0.tar heml limit nginx pod.yml

docker-ce kube-flannel.yml metrics nginx-8.7.0.tgz

[root@server2 ~]# cd nginx/

[root@server2 nginx]# ls

Chart.lock charts Chart.yaml ci README.md templates values.schema.json values.yaml

[root@server2 nginx]# ls templates/

deployment.yaml hpa.yaml pdb.yaml svc.yaml

extra-list.yaml ingress.yaml server-block-configmap.yaml tls-secrets.yaml

health-ingress.yaml ldap-daemon-secrets.yaml serviceaccount.yaml

_helpers.tpl NOTES.txt servicemonitor.yaml

[root@server2 nginx]# vim values.yaml

tag: 1.19.7-debian-10-r1

service:

## Service type

##

type: ClusterIP

## Create a project named bitnami in the Harbor web UI

[root@server1 ~]# docker pull bitnami/nginx:1.19.7-debian-10-r1

[root@server1 ~]# docker tag bitnami/nginx:1.19.7-debian-10-r1 reg.westos.org/bitnami/nginx:1.19.7-debian-10-r1

[root@server1 ~]# docker push reg.westos.org/bitnami/nginx:1.19.7-debian-10-r1

[root@server2 nginx]# helm install webserver .    # install from the chart in the current directory

[root@server2 nginx]# kubectl get all    ## one Service and one Deployment

[root@server2 nginx]# vim values.yaml

replicaCount: 3

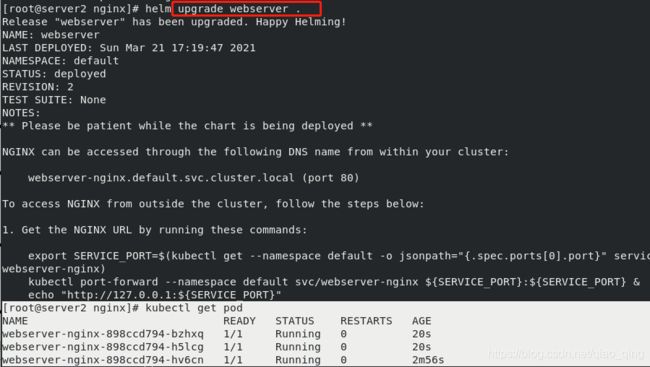

[root@server2 nginx]# helm upgrade webserver .    ## apply the change as an upgrade
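As an alternative to editing the chart's values.yaml in place, an override can be kept in a separate file and passed to Helm with `-f`, or given inline with `--set`. The sketch below only writes such a file; the name `replicas-override.yaml` is an assumption for this example, and the helm commands are shown as comments because they need the cluster from this walkthrough.

```shell
# Keep the override separate from the chart's own values.yaml
# (file name is illustrative).
cat > replicas-override.yaml <<'EOF'
replicaCount: 3
EOF

# From the chart directory, either of these would apply it:
#   helm upgrade webserver . -f replicas-override.yaml
#   helm upgrade webserver . --set replicaCount=3
```

Keeping overrides in their own file leaves the unpacked chart untouched, which makes it easier to pull a newer chart version later.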

[root@server2 nginx]# kubectl get pod

[root@server2 nginx]# helm history webserver    ## list the release revisions

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Sun Mar 21 17:17:12 2021 superseded nginx-8.7.0 1.19.7 Install complete

2 Sun Mar 21 17:19:47 2021 deployed nginx-8.7.0 1.19.7 Upgrade complete

[root@server2 nginx]# helm rollback webserver 1    ## roll back to revision 1

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

webserver-nginx-898ccd794-bzhxq 1/1 Running 0 20s

[root@server2 nginx]# helm uninstall webserver    ## uninstall removes the release and all of its revisions

3) Building a local charts repository

## Build a Helm Chart:

[root@server2 ~]# cd heml/

[root@server2 heml]# ls

helm-v3.4.1-linux-amd64.tar.gz linux-amd64 nginx-8.8.0.tgz

[root@server2 heml]# helm create mychart

Creating mychart

[root@server2 heml]# cd mychart/

[root@server2 mychart]# tree .

-bash: tree: command not found

[root@server2 mychart]# yum install tree -y

[root@server1 harbor]# docker-compose down

[root@server1 harbor]# ./prepare

[root@server1 harbor]# ./install.sh --help

[root@server1 harbor]# ./install.sh --with-chartmuseum

[root@server2 mychart]# vim Chart.yaml    # descriptive metadata for mychart

appVersion: v1

[root@server2 mychart]# vim values.yaml    # deployment settings for the application

image:

repository: myapp

pullPolicy: IfNotPresent

# Overrides the image tag whose default is the chart appVersion.

tag: "v1"

[root@server2 mychart]# helm lint .    # check that dependencies, templates, and syntax are correct

[root@server2 mychart]# cd ..

[root@server2 heml]# ls

helm-v3.4.1-linux-amd64.tar.gz linux-amd64 mychart nginx-8.8.0.tgz

[root@server2 heml]# helm package mychart/    # package the chart

[root@server2 heml]# ls

helm-v3.4.1-linux-amd64.tar.gz linux-amd64 mychart mychart-0.1.0.tgz nginx-8.8.0.tgz

## Create the local chart repository in the Harbor web UI

[root@server2 heml]# cd

[root@server2 ~]# ls

1.tar docker-ce kube-flannel.yml metrics nginx-8.7.0.tgz

dashboard-v2.2.0.tar heml limit nginx pod.yml

[root@server2 ~]# cd /etc/docker/

[root@server2 docker]# cd certs.d/

[root@server2 certs.d]# ls

reg.westos.org

[root@server2 certs.d]# cd reg.westos.org/

[root@server2 reg.westos.org]# ls

ca.crt

[root@server2 reg.westos.org]# cp ca.crt /etc/pki/ca-trust/

[root@server2 reg.westos.org]# cp ca.crt /etc/pki/ca-trust/source/anchors/

[root@server2 reg.westos.org]# update-ca-trust

[root@server2 reg.westos.org]# cd

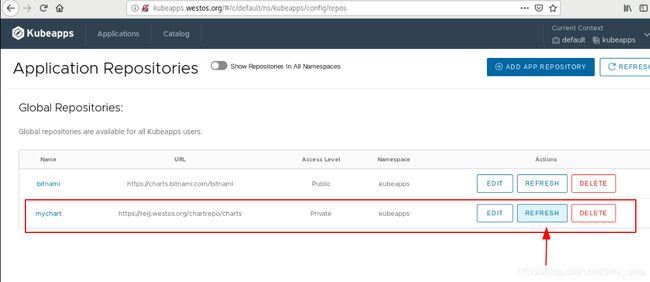

%% Add the local repository

[root@server2 ~]# helm repo add mychart https://reg.westos.org/chartrepo/charts

%% Install the helm-push plugin:

[root@server2 heml]# ls

helm-v3.4.1-linux-amd64.tar.gz

[root@server2 heml]# helm env

[root@server2 heml]# mkdir -p /root/.local/share/helm/plugins/push

[root@server2 heml]# tar zxf helm-v3.4.1-linux-amd64.tar.gz -C /root/.local/share/helm/plugins/push    ## extract into the plugin directory

[root@server2 heml]# helm push mychart-0.1.0.tgz mychart --insecure -u admin -p westos    ## skip certificate verification; pass the user name and password

[root@server2 heml]# helm repo update    ## after pushing the chart, refresh the repository index

[root@server2 heml]# helm install mygame mychart/mychart --debug    ## install with debug output

[root@server2 heml]# cd mychart/

[root@server2 mychart]# ls

charts Chart.yaml templates values.yaml

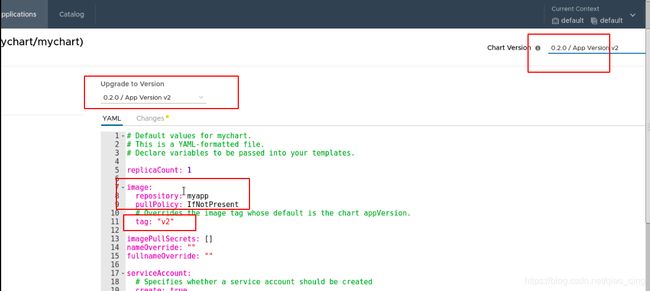

[root@server2 mychart]# vim Chart.yaml

version: 0.2.0

[root@server2 mychart]# vim values.yaml

image:

repository: myapp

pullPolicy: IfNotPresent

# Overrides the image tag whose default is the chart appVersion.

tag: "v2"

[root@server2 mychart]# cd ..

[root@server2 heml]# helm package mychart/

[root@server2 heml]# helm push mychart-0.2.0.tgz mychart --insecure -u admin -p westos

[root@server2 heml]# helm history mygame

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Sat Mar 27 16:01:05 2021 deployed mychart-0.1.0 v1 Install complete

[root@server2 heml]# helm rollback mygame 1

Rollback was a success! Happy Helming!

[root@server2 heml]# helm uninstall mygame

4) Deploying nfs-client-provisioner with Helm

[root@server2 heml]# helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/

[root@server2 heml]# helm pull nfs-subdir-external-provisioner/nfs-subdir-external-provisioner

[root@server2 heml]# ls

nfs-subdir-external-provisioner-4.0.5.tgz

[root@server2 heml]# tar zxf nfs-subdir-external-provisioner-4.0.5.tgz

[root@server2 heml]# ls

[root@server2 heml]# cd nfs-subdir-external-provisioner/

[root@server2 nfs-subdir-external-provisioner]# ls

Chart.yaml ci README.md templates values.yaml

[root@server1 ~]# docker load -i nfs-client-provisioner-v4.0.0.tar

[root@server1 ~]# docker push reg.westos.org/library/nfs-subdir-external-provisioner

[root@zhenji Downloads]# vim /etc/exports

[root@zhenji Downloads]# cat /etc/exports

/nfsdata *(rw,no_root_squash)

[root@zhenji Downloads]# showmount -e

clnt_create: RPC: Program not registered

[root@zhenji Downloads]# mkdir /nfsdata

[root@zhenji Downloads]# cd /nfsdata/

[root@zhenji nfsdata]# cd ..

[root@zhenji /]# chmod 777 -R nfsdata

[root@zhenji /]# systemctl start nfs-server

[root@zhenji /]# showmount -e

Export list for zhenji:

/nfsdata *

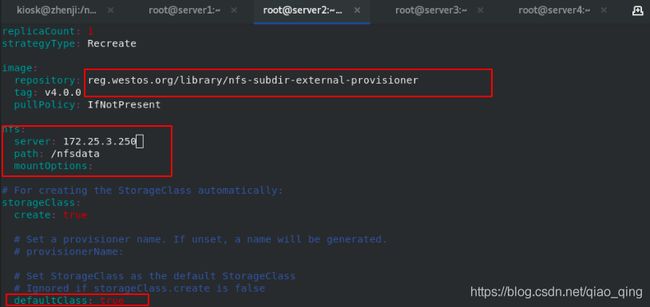

[root@server2 nfs-subdir-external-provisioner]# vim values.yaml

image:

repository: reg.westos.org/library/nfs-subdir-external-provisioner    # point at the local registry

nfs:

server: 172.25.3.250    # the physical host, acting as the NFS server

path: /nfsdata    # the exported directory

defaultClass: true

[root@server2 nfs-subdir-external-provisioner]# kubectl create namespace nfs-provisioner

[root@server2 nfs-subdir-external-provisioner]# helm install nfs-subdir-external-provisioner . -n nfs-provisioner

[root@server2 ~]# yum install -y nfs-utils

[root@server3 ~]# yum install -y nfs-utils

[root@server4 ~]# yum install -y nfs-utils

[root@server2 nfs-subdir-external-provisioner]# kubectl get all -n nfs-provisioner

NAME READY STATUS RESTARTS AGE

pod/nfs-subdir-external-provisioner-7cd5c45954-vh4dv 0/1 Running 0 64s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nfs-subdir-external-provisioner 0/1 1 0 65s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nfs-subdir-external-provisioner-7cd5c45954 1 1 0 65s

[root@server2 nfs-subdir-external-provisioner]# kubectl get sc    ## the StorageClass exists, so the deployment is complete

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client (default) cluster.local/nfs-subdir-external-provisioner Delete Immediate true 75s

[root@server2 heml]# cat pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
spec:
  #storageClassName: managed-nfs-storage
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Mi

[root@server2 heml]# kubectl apply -f pvc.yaml

persistentvolumeclaim/test-claim created

[root@server2 heml]# kubectl get pv

[root@server2 heml]# kubectl get pvc

[root@server2 heml]# kubectl delete -f pvc.yaml --force

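## Check the result

[root@zhenji ~]# cd /nfsdata/

[root@zhenji nfsdata]# ls    ## after the PVC is deleted, the data is kept on the host (archived by default)

default-test-claim-pvc-9a269074-0185-4b9a-b49d-b6c4fa4c3ab6

5) Deploying nginx-ingress with Helm

nginx-ingress implements the Ingress service in Kubernetes: a cluster-wide load-balancing layer that proxies traffic to different backend Services.

Official site: https://kubernetes.github.io/ingress-nginx/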

[root@server2 ~]# yum install ipvsadm -y

[root@server3 ~]# yum install ipvsadm -y

[root@server4 ~]# yum install ipvsadm -y

[root@server1 ~]# docker load -i metallb.tar

[root@server1 ~]# docker load -i nginx-ingress-controller-0.44.0.tar

[root@server1 ~]# docker push reg.westos.org/bitnami/nginx-ingress-controller

[root@server1 ~]# docker tag metallb/controller:v0.9.5 reg.westos.org/metallb/controller:v0.9.5

[root@server1 ~]# docker tag metallb/speaker:v0.9.5 reg.westos.org/metallb/speaker:v0.9.5

[root@server1 ~]# docker push reg.westos.org/metallb/speaker:v0.9.5

[root@server1 ~]# docker push reg.westos.org/metallb/controller:v0.9.5

[root@server2 metallb]# kubectl edit configmaps -n kube-system kube-proxy

strictARP: true

mode: "ipvs"

[root@server2 metallb]# kubectl get pod -n kube-system |grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'

## wget two files: the ingress controller definition and the ingress-service definition; they can be merged into one file

[root@server2 metallb]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/mandatory.yaml

[root@server2 metallb]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/provider/baremetal/service-nodeport.yaml

[root@server2 metallb]# vim metallb.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: metallb-system
  labels:
    app: metallb
---

[root@server2 metallb]# kubectl apply -f metallb.yaml

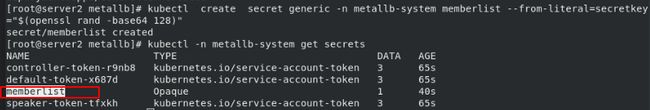

[root@server2 metallb]# kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"

[root@server2 metallb]# kubectl -n metallb-system get secrets

NAME TYPE DATA AGE

memberlist Opaque 1 40s

[root@server2 metallb]# kubectl -n metallb-system get all

[root@server2 metallb]# vim config.yaml

[root@server2 metallb]# cat config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:    # address pool
    - name: default
      protocol: layer2    # layer 2 mode
      addresses:
      - 172.25.3.100-172.25.3.200    # an unused range from your own subnet

[root@server2 metallb]# kubectl apply -f config.yaml

[root@server2 heml]# ls

nginx-ingress-controller-7.5.0.tgz

[root@server2 heml]# tar zxf nginx-ingress-controller-7.5.0.tgz

[root@server2 heml]# cd nginx-ingress-controller/

[root@server2 nginx-ingress-controller]# vim values.yaml

global:

imageRegistry: reg.westos.org

image:

registry: docker.io

repository: bitnami/nginx

tag: 1.19.7-debian-10-r1

[root@server2 nginx-ingress-controller]# kubectl create namespace nginx-ingress-controller

[root@server2 nginx-ingress-controller]# helm install nginx-ingress-controller . -n nginx-ingress-controller

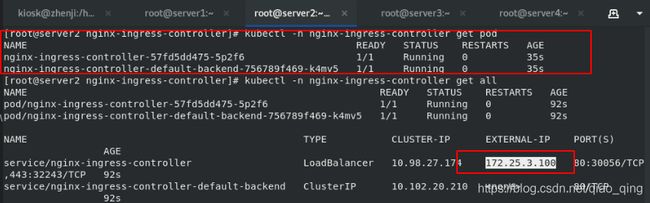

[root@server2 nginx-ingress-controller]# kubectl -n nginx-ingress-controller get pod

[root@server2 nginx-ingress-controller]# kubectl -n nginx-ingress-controller get all

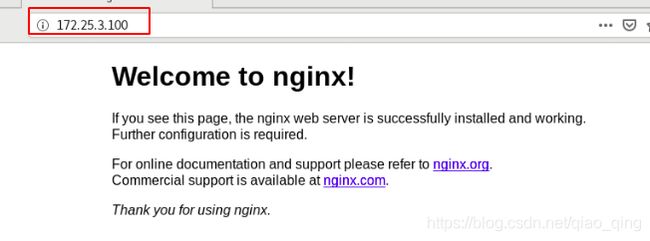

### Open 172.25.3.100 in a browser

6) Deploying kubeapps with Helm to provide a web UI for Helm

[root@server1 ~]# docker load -i kubeapps-5.2.2.tar

[root@server1 ~]# docker images|grep bitnami|awk '{system("docker push "$1":"$2"")}'

[root@server2 heml]# helm pull bitnami/kubeapps --version 5.2.2

[root@server2 heml]# ls

kubeapps-5.2.2.tgz

[root@server2 heml]# tar zxf kubeapps-5.2.2.tgz    ## make sure the chart version matches the loaded images (5.2.2)

[root@server2 heml]# ls

kubeapps

[root@server2 heml]# cd kubeapps/

[root@server2 kubeapps]# vim values.yaml

global:

imageRegistry: reg.westos.org

ingress:

## Set to true to enable ingress record generation

##

enabled: true    ## turn on ingress

hostname: kubeapps.westos.org    ## this name must resolve to the ingress IP

[root@server2 kubeapps]# cd charts/postgresql/

[root@server2 postgresql]# ls

Chart.lock charts Chart.yaml ci files README.md templates values.schema.json values.yaml

[root@server2 postgresql]# vim values.yaml

image:

registry: reg.westos.org

repository: bitnami/postgresql

tag: 11.11.0-debian-10-r0

[root@server2 kubeapps]# kubectl create namespace kubeapps

[root@server2 kubeapps]# helm install kubeapps -n kubeapps .

[root@server2 kubeapps]# kubectl create serviceaccount kubeapps-operator -n kubeapps

[root@server2 kubeapps]# kubectl get ingress -n kubeapps

NAME CLASS HOSTS ADDRESS PORTS AGE

kubeapps <none> kubeapps.westos.org 172.25.3.4 80 27m

[root@server2 kubeapps]# kubectl create clusterrolebinding kubeapps-operator --clusterrole=cluster-admin --serviceaccount=kubeapps:kubeapps-operator

[root@server2 kubeapps]# kubectl -n kubeapps get secrets

kubeapps-operator-token-66tkm kubernetes.io/service-account-token 3 6m45s

[root@server2 kubeapps]# kubectl -n kubeapps describe secrets kubeapps-operator-token-66tkm    ## get the token

[root@server2 kubeapps]# kubectl -n nginx-ingress-controller get all

[root@server2 kubeapps]# kubectl -n nginx-ingress-controller get svc

nginx-ingress-controller LoadBalancer 10.98.27.174 172.25.3.100

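[root@zhenji Desktop]# vim /etc/hosts    # add the name resolution

172.25.3.100 kubeapps.westos.org

## Get the token, then log in at kubeapps.westos.org in a browser

# Add the registry's name resolution to CoreDNS and reload it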

[root@server2 ~]# helm repo list

[root@server2 ~]# kubectl -n kube-system edit cm coredns

ready

hosts {

172.25.3.1 reg.westos.org

fallthrough

}

[root@server2 ~]# kubectl get pod -n kube-system |grep coredns | awk '{system("kubectl delete pod "$1" -n kube-system")}'

[root@server2 ~]# cat /etc/docker/certs.d/reg.westos.org/ca.crt