App automation with OpenCV

Implementing app automation in Python

app_demo.py

# -*- encoding=utf8 -*-
# __author__ = 'Jeff.xie'

import logging
from time import sleep

from appium import webdriver
from appium.webdriver.common.touch_action import TouchAction

from python_project.apptest.myopencv.opencv_utils import get_image_element_point


def appium_desired():
    '''Configure the driver, launch the app, and tap an element located by image.'''
    desired_caps = {}
    # Platform
    desired_caps['platformName'] = 'Android'
    # Android version: on the phone, Settings -> About phone,
    # or from the command line: adb -s da79fc70 shell getprop ro.build.version.release
    desired_caps['platformVersion'] = '10'
    # Device ID, as listed by: adb devices
    desired_caps['deviceName'] = 'emulator-5554'
    # Package name; get the package and launch activity with:
    # adb shell dumpsys window windows | findstr mFocusedApp
    desired_caps['appPackage'] = 'com.android.settings'
    # Launch activity
    desired_caps['appActivity'] = 'com.android.settings.Settings'
    desired_caps["resetKeyboard"] = True  # restore the original input method when the session ends
    desired_caps["noReset"] = True  # do not reset app state (similar to not clearing the cache)
    # desired_caps['automationName']=data['automationName']
    # desired_caps['newCommandTimeout'] =data['newCommandTimeout']

    print("start")
    logging.info('Initializing driver')
    driver = webdriver.Remote('http://127.0.0.1:4723/wd/hub', desired_caps)
    sleep(6)

    window_size = driver.get_window_size()
    print(window_size)
    width = window_size['width']  # screen width
    height = window_size['height']  # screen height
    print("width: ", width)
    print("height: ", height)

    img1 = r'./notifications.png'  # template image of the element to find
    img2 = r'./Setting.png'  # screenshot of the current screen
    driver.get_screenshot_as_file(img2)

    point = get_image_element_point(img1, img2)
    if point is None:
        # get_image_element_point returns None when too few matches are found
        print('element image not found on screen')
        return driver
    x, y = point
    print("x: ", x)
    print("y: ", y)
    sleep(3)
    # Note: TouchAction is deprecated in newer Appium Python client releases
    TouchAction(driver).tap(x=x, y=y).perform()
    print('click success')
    return driver


if __name__ == '__main__':
    # Debug against a local device
    driver = appium_desired()
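The script taps at coordinates found in a screenshot, and get_image_element_point halves those coordinates before returning them. If that halving is meant to compensate for a screenshot that has twice the resolution of the window (an assumption; the source does not say), the same idea can be sketched more generally by scaling with the actual sizes. The helper name `scale_point` is hypothetical and is not part of Appium or OpenCV.

```python
def scale_point(point, image_size, window_size):
    """Map a pixel coordinate in a screenshot to window coordinates.

    point is (x, y); image_size and window_size are (width, height).
    Hypothetical helper for illustration only.
    """
    x, y = point
    image_w, image_h = image_size
    window_w, window_h = window_size
    # Scale each axis by the ratio between window size and screenshot size
    return int(x * window_w / image_w), int(y * window_h / image_h)


if __name__ == "__main__":
    # A 2160x3840 screenshot of a 1080x1920 window: coordinates are halved
    print(scale_point((540, 960), (2160, 3840), (1080, 1920)))
```

In the script above, `window_size` would come from `driver.get_window_size()` and `image_size` from the shape of the saved screenshot.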

The image below is used to locate the element.

opencv_utils.py

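# -*- encoding=utf8 -*-
# __author__ = 'Jeff.xie'

import numpy as np
import cv2 as cv
import matplotlib.pyplot as plt


def get_Middle_Str(content, startStr, endStr):
    """
    Return the substring between a start marker and an end marker.
    :param content: string to search
    :param startStr: start marker
    :param endStr: end marker
    :return: text between startStr and endStr
    """
    # str.index raises ValueError when a marker is missing (it never returns -1)
    startIndex = content.index(startStr) + len(startStr)
    # Look for the end marker only after the start marker
    endIndex = content.index(endStr, startIndex)
    return content[startIndex:endIndex]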

def get_image_element_point(src_path, dst_path):
    """
    Locate the target element in an image and return its center point.
    :param src_path: source image (the template of the element to find)
    :param dst_path: image to search in (for example, a screenshot)
    :return: center (x, y) of the matched element, or None if no reliable match is found
    """
    print('src_path:%s,dst_path:%s' % (src_path, dst_path))
    # Read both images in grayscale mode
    src_img = cv.imread(src_path, cv.IMREAD_GRAYSCALE)
    dst_img = cv.imread(dst_path, cv.IMREAD_GRAYSCALE)
    # Create the SIFT object
    sift = cv.SIFT_create()
    # Use SIFT to find keypoints and descriptors
    kp1, des1 = sift.detectAndCompute(src_img, None)
    kp2, des2 = sift.detectAndCompute(dst_img, None)
    # FLANN matcher parameters
    FLANN_INDEX_KDTREE = 1
    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)  # first argument selects the algorithm
    search_params = dict(checks=50)  # number of times the index trees are traversed recursively
    # FLANN feature matching
    flann = cv.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(des1, des2, k=2)
    # Initialize the match mask
    matchesMask = [[0, 0] for i in range(len(matches))]
    good = []
    # Ratio-test threshold
    for i, (m, n) in enumerate(matches):
        if m.distance < 0.5 * n.distance:
            good.append(m)
            matchesMask[i] = [1, 0]
    # cv.findHomography needs at least 4 point pairs (the original threshold was "more than 2")
    MIN_MATCH_COUNT = 4
    print("len(good): ", len(good))
    if len(good) < MIN_MATCH_COUNT:
        print("Not enough matches are found - {}/{}".format(len(good), MIN_MATCH_COUNT))
        return None
    # Coordinates of the matched keypoints
    src_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
    dst_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)
    # Compute the transformation (homography) matrix M
    M, mask = cv.findHomography(src_pts, dst_pts, cv.RANSAC, 5.0)
    if M is None:
        print("Homography could not be estimated")
        return None
    matchesMask = mask.ravel().tolist()
    # Height and width of the source image
    h, w = src_img.shape
    # Transform the four corners of the source image to get their coordinates in the target image
    pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)
    dst = cv.perspectiveTransform(pts, M)
    # Read the corner coordinates directly from the array instead of parsing str(dst),
    # which breaks when numpy prints values in scientific notation
    cordinate_x1, cordinate_y1 = (int(v) for v in dst[2][0])  # bottom-right corner
    print("cordinate_x1: ", cordinate_x1)
    print("cordinate_y1: ", cordinate_y1)
    cordinate_x2, cordinate_y2 = (int(v) for v in dst[0][0])  # top-left corner
    print("cordinate_x2: ", cordinate_x2)
    print("cordinate_y2: ", cordinate_y2)
    # Center point of the target element
    mid_cordinate_x = (cordinate_x1 - cordinate_x2) / 2 + cordinate_x2
    mid_cordinate_y = (cordinate_y1 - cordinate_y2) / 2 + cordinate_y2
    print(mid_cordinate_x / 2, mid_cordinate_y / 2)
    """
    # Outline the matched region on the target image
    img2 = cv.polylines(dst_img,[np.int32(dst)],True,255,10, cv.LINE_AA)
    ############ draw the matches #################
    draw_params = dict(matchColor=(0, 255, 0),  # draw matches in green color
                       singlePointColor=None,
                       matchesMask=matchesMask,  # draw only inliers
                       flags=2)
    img3 = cv.drawMatches(src_img, kp1, dst_img, kp2, good, None, **draw_params)
    plt.imshow(img3, 'gray'), plt.show()
    """
    # The original halves the center before returning it, presumably to compensate
    # for a 2x scale between image pixels and tap coordinates; kept unchanged
    return int(mid_cordinate_x / 2), int(mid_cordinate_y / 2)

def get_image_element_point2(src_path, dst_path):
    # Source code from the official OpenCV tutorial:
    # https://docs.opencv.org/4.x/dc/dc3/tutorial_py_matcher.html
    """
    Match features between two images and display the matches.
    :param src_path: source image (query image)
    :param dst_path: image to search in (train image)
    :return: None; the matches are shown in a matplotlib window
    """
    img1 = cv.imread(src_path, cv.IMREAD_GRAYSCALE)  # queryImage
    img2 = cv.imread(dst_path, cv.IMREAD_GRAYSCALE)  # trainImage
    # Initiate SIFT detector
    sift = cv.SIFT_create()
    # find the keypoints and descriptors with SIFT
    kp1, des1 = sift.detectAndCompute(img1, None)
    kp2, des2 = sift.detectAndCompute(img2, None)
    # FLANN parameters
    FLANN_INDEX_KDTREE = 1
    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=50)  # or pass empty dictionary
    flann = cv.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(des1, des2, k=2)
    # Need to draw only good matches, so create a mask
    matchesMask = [[0, 0] for i in range(len(matches))]
    # ratio test as per Lowe's paper
    for i, (m, n) in enumerate(matches):
        if m.distance < 0.7 * n.distance:
            matchesMask[i] = [1, 0]
    draw_params = dict(matchColor=(0, 255, 0),
                       singlePointColor=(255, 0, 0),
                       matchesMask=matchesMask,
                       flags=cv.DrawMatchesFlags_DEFAULT)
    img3 = cv.drawMatchesKnn(img1, kp1, img2, kp2, matches, None, **draw_params)
    plt.imshow(img3)
    plt.show()


if __name__ == '__main__':
    img1 = r'D:/Battery1.png'
    img2 = r'D:/Loans2.png'
    # get_image_element_point(img1,img2)
    get_image_element_point2(img1, img2)
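get_image_element_point derives the element center from two opposite corners of the transformed rectangle. A minimal alternative sketch, assuming the four transformed corners are available as (x, y) pairs (for example from `dst.reshape(-1, 2)`), averages all four corners instead, which also behaves sensibly when the matched region is skewed. `quad_center` is a hypothetical helper, not an OpenCV function, and it does not apply the halving that the original code performs.

```python
def quad_center(corners):
    """Return the integer center of a quadrilateral given four (x, y) corners.

    Hypothetical helper: averages the corners rather than using
    only the top-left and bottom-right ones.
    """
    xs = [float(x) for x, _ in corners]
    ys = [float(y) for _, y in corners]
    return int(sum(xs) / len(xs)), int(sum(ys) / len(ys))


if __name__ == "__main__":
    # Axis-aligned rectangle from (10, 20) to (110, 60)
    print(quad_center([(10, 20), (10, 60), (110, 60), (110, 20)]))
```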

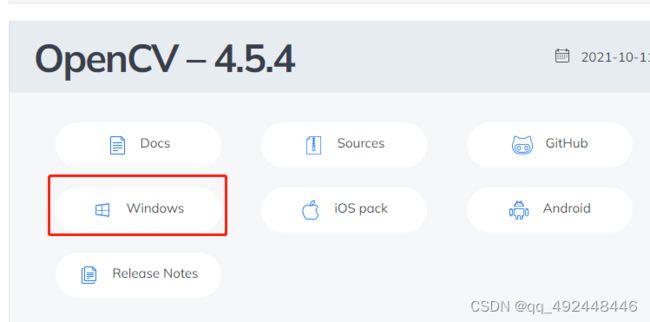
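Both functions in opencv_utils.py keep a match only when its best distance is clearly smaller than the second-best distance (factor 0.5 in the first function, 0.7 in the second). The filter itself can be sketched without OpenCV, assuming each match is reduced to a (best, second_best) distance pair. `ratio_test` is a hypothetical name used only for this illustration.

```python
def ratio_test(distance_pairs, ratio=0.7):
    """Return the indices of matches that pass the ratio test.

    distance_pairs is a list of (best, second_best) descriptor distances.
    A match passes when best < ratio * second_best.
    """
    return [
        i
        for i, (best, second) in enumerate(distance_pairs)
        if best < ratio * second
    ]


if __name__ == "__main__":
    pairs = [(10.0, 100.0), (60.0, 100.0), (80.0, 100.0)]
    print(ratio_test(pairs, ratio=0.7))  # looser threshold
    print(ratio_test(pairs, ratio=0.5))  # stricter threshold
```

A lower ratio keeps fewer but more distinctive matches, which matters because the homography step needs enough surviving matches to be estimated.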

Download OpenCV

Releases - OpenCV

Double-click to extract:

The extracted directory contains the OpenCV jar package

How to install

npm install --save opencv4nodejs

cnpm i -g opencv4nodejs

Native node modules are built via node-gyp, which already comes with npm by default. However, node-gyp requires you to have python installed. If you are running into node-gyp specific issues have a look at known issues with node-gyp first.

Important note: node-gyp won't handle whitespace properly, so make sure that the path to your project directory does not contain any whitespace. Installing opencv4nodejs under "C:\Program Files\some_dir" or similar will not work and will fail with: "fatal error C1083: Cannot open include file: 'opencv2/core.hpp'"!

On Windows you will furthermore need Windows Build Tools to compile OpenCV and opencv4nodejs. If you don't have Visual Studio or Windows Build Tools installed, you can easily install the VS2015 build tools:

npm install --global windows-build-tools

Installing OpenCV Manually

Setting up OpenCV on your own requires you to set an environment variable that prevents the auto build script from running:

# linux and osx:

export OPENCV4NODEJS_DISABLE_AUTOBUILD=1

# on windows:

set OPENCV4NODEJS_DISABLE_AUTOBUILD=1

Windows

You can install any of the OpenCV 3 or OpenCV 4 releases manually or via the Chocolatey package manager:

# to install OpenCV 4.1.0

choco install OpenCV -y -version 4.1.0

Note, this will come without contrib modules. To install OpenCV under windows with contrib modules you have to build the library from source or you can use the auto build script.

Before installing opencv4nodejs with your own installation of OpenCV, you need to expose the following environment variables:

OPENCV_INCLUDE_DIR pointing to the directory with the subfolder opencv2 containing the header files

OPENCV_LIB_DIR pointing to the lib directory containing the OpenCV .lib files

Also you will need to add the OpenCV binaries to your system path:

add an environment variable OPENCV_BIN_DIR pointing to the binary directory containing the OpenCV .dll files

append ;%OPENCV_BIN_DIR%; to your system path variable

Note: Restart your current console session after making changes to your environment.
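Before running the install, it can help to confirm that the three variables named above are actually visible to the current console session. The following is a minimal sketch; `missing_opencv_vars` is a hypothetical helper and not part of opencv4nodejs.

```python
import os

# Variable names taken from the instructions above
REQUIRED_VARS = ("OPENCV_INCLUDE_DIR", "OPENCV_LIB_DIR", "OPENCV_BIN_DIR")


def missing_opencv_vars(env=None):
    """Return the required variable names that are unset or empty.

    env defaults to the current process environment.
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_opencv_vars()
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("All OpenCV environment variables are set")
```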

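import java.io.File;
import java.io.IOException;
import java.net.URISyntaxException;
import java.nio.file.Files;
import java.util.Base64;
import io.appium.java_client.MobileBy;
import io.appium.java_client.MobileElement;
import org.openqa.selenium.By;

// These methods assume an enclosing class with a `driver` field (for example AppiumDriver<MobileElement>).
public MobileElement findMobileElement(By by) {
    return driver.findElement(by);
}

// Click an element located by a reference picture (Base64-encoded image locator).
public void clickMobileElementByPicture(String picPath) throws URISyntaxException, IOException {
    File file = new File(picPath);
    String base64String = Base64.getEncoder().encodeToString(Files.readAllBytes(file.toPath()));
    By by = MobileBy.image(base64String);
    findMobileElement(by).click();
}

picPath = "src/main/java/com/welab/automation/projects/channel/images/cardPic.png";
clickMobileElementByPicture(picPath);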
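The Java method above reads the picture file and Base64-encodes it before building the image locator. For readers following the Python parts of this post, the encoding step can be sketched as below. `image_to_base64` is a hypothetical helper; passing the result to an image locator (for example `AppiumBy.IMAGE`, assuming a client version that provides it) is left out because it needs a running Appium session.

```python
import base64
from pathlib import Path


def image_to_base64(path):
    """Read a file and return its contents as a Base64-encoded ASCII string."""
    return base64.b64encode(Path(path).read_bytes()).decode("ascii")


if __name__ == "__main__":
    # Write a tiny sample file so the example runs without a real picture
    sample = Path("sample_image.bin")
    sample.write_bytes(b"abc")
    print(image_to_base64(sample))
    sample.unlink()
```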