Artificial Intelligence | ShowMeAI Daily News #2022.06.11

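The ShowMeAI daily series has been fully upgraded! It covers AI tools & frameworks | projects & code | blog posts & sharing | data & resources | research & papers. Click to view the list of past articles, and subscribe to the topic #ShowMeAI资讯日报 in our WeChat official account to receive the latest update every day. Click Topic Collections & E-Monthly to browse the full collection for each topic. Click here and reply with the keyword 日报 ("daily") to get the AI e-monthly and resource pack for free.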

1. Tools & Frameworks

Tool: Deepfake Offensive Toolkit - a toolkit for deepfake face-swap attacks

tags: [Deepfake,face swap]

‘Deepfake Offensive Toolkit - The Deepfake Offensive Toolkit’ by sensity

GitHub: https://github.com/sensity-ai/dot

Tool: Gitploy - a deployment system built around GitHub

tags: [GitHub,development,deployment]

‘Gitploy - Build the deployment system around GitHub in minutes.’ by gitploy.io

GitHub: https://github.com/gitploy-io/gitploy

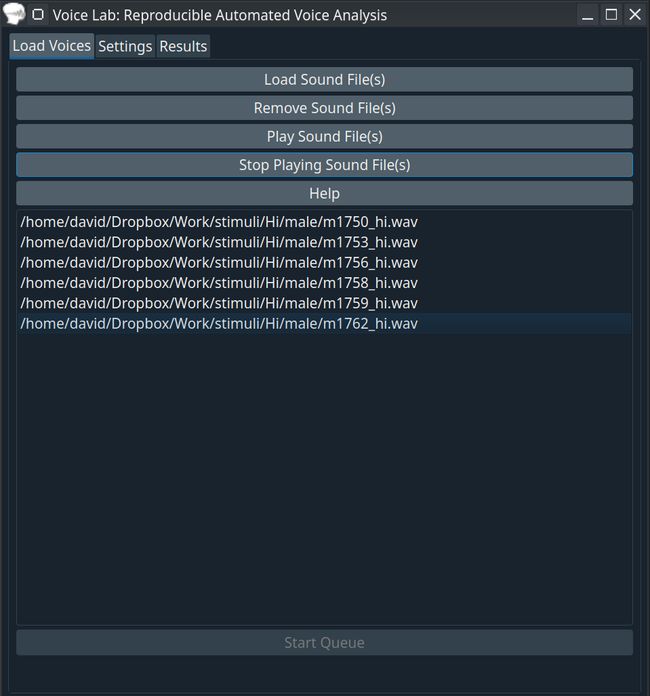

Tool: VoiceLab - automated, reproducible acoustical analysis software

tags: [sound analysis,speech,acoustics]

‘VoiceLab - Automated Reproducible Acoustical Analysis’ by Voice Lab

GitHub: https://github.com/Voice-Lab/VoiceLab

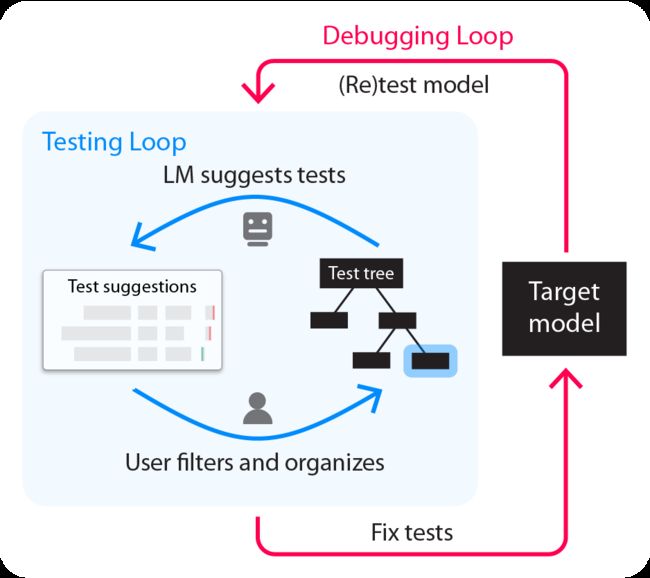

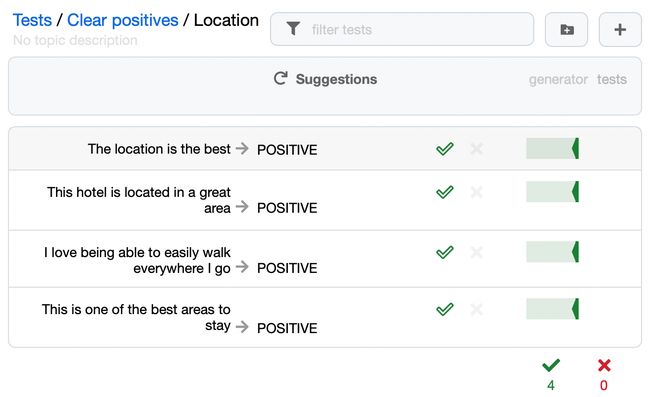

Library: AdaTest - find and fix bugs in natural language machine learning models using adaptive testing

tags: [NLP,model testing,debugging]

‘AdaTest - Find and fix bugs in natural language machine learning models using adaptive testing.’ by Microsoft

GitHub: https://github.com/microsoft/adatest

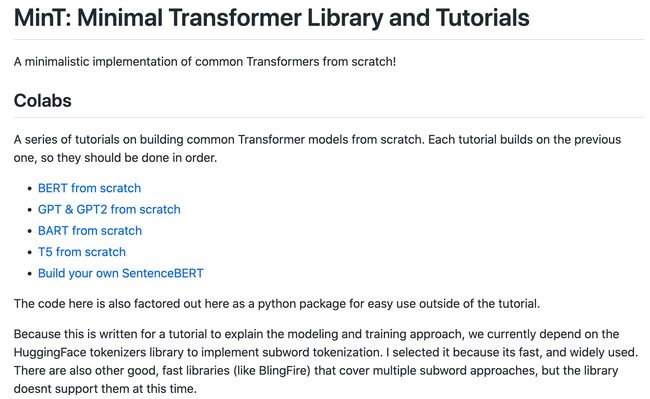

Library: MinT - a minimal Transformer library implemented from scratch

tags: [Transformer]

‘MinT: Minimal Transformer Library’ by Daniel Pressel

GitHub: https://github.com/dpressel/mint

Framework: Nitro - build web apps in Python with no front-end experience required

tags: [Python,web apps]

‘Nitro - The simplest way to build web apps using Python. No front-end experience required.’ by H2O.ai

GitHub: https://github.com/h2oai/nitro

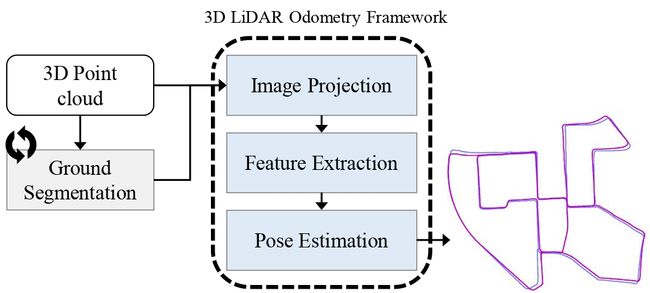

Framework: PaGO-LOAM - a LiDAR odometry framework for easily testing ground-segmentation algorithms

tags: [LiDAR,SLAM]

‘PaGO-LOAM - A LiDAR odometry framework that can easily test the ground-segmentation algorithms’

GitHub: https://github.com/url-kaist/AlterGround-LeGO-LOAM

2. Projects & Code

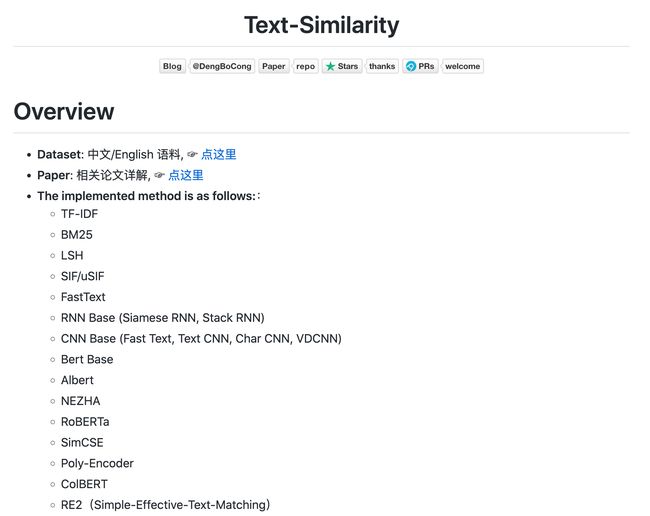

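Text-Similarity - text similarity (matching) computation

tags: [text similarity,text matching,deep learning]

Provides code for text-similarity modeling baselines, training, inference and metric analysis, in both TensorFlow and PyTorch versions.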
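For readers new to the task, the simplest possible text-similarity baseline can be sketched as below. This is an illustrative assumption, not code from the Text-Similarity repository (whose baselines are neural models in TensorFlow/PyTorch): the helper names are hypothetical, it uses bag-of-words counts with cosine similarity, and its whitespace tokenizer only suits space-delimited languages, so Chinese text would first need a word segmenter.

```python
import math
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lower-case, whitespace-split term counts (a deliberately naive tokenizer)."""
    return Counter(text.lower().split())


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity of two term-count vectors; 0.0 if either text is empty."""
    va, vb = bag_of_words(a), bag_of_words(b)
    # Counter returns 0 for missing terms, so only shared terms add to the dot product.
    dot = sum(count * vb[term] for term, count in va.items())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


if __name__ == "__main__":
    print(cosine_similarity("the cat sat on the mat", "the cat lay on the mat"))
```

Neural baselines replace the count vectors with learned sentence embeddings, but the final similarity score is often computed the same way.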

GitHub: https://github.com/DengBoCong/text-similarity

3. Blog Posts & Sharing

Blog post: Predicting the roots of polynomials with Transformers

‘Computing the roots of polynomials’ by François Charton

Link: https://f-charton.github.io/polynomial-roots/

4. Data & Resources

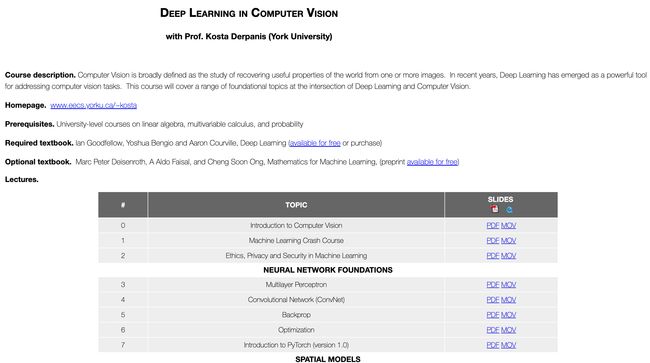

Resource: York University course materials for "Deep Learning in Computer Vision"

tags: [computer vision,deep learning,course]

‘Deep Learning in Computer Vision | York University’ by Kosta Derpanis

Link: https://www.eecs.yorku.ca/~kosta/Courses/EECS6322

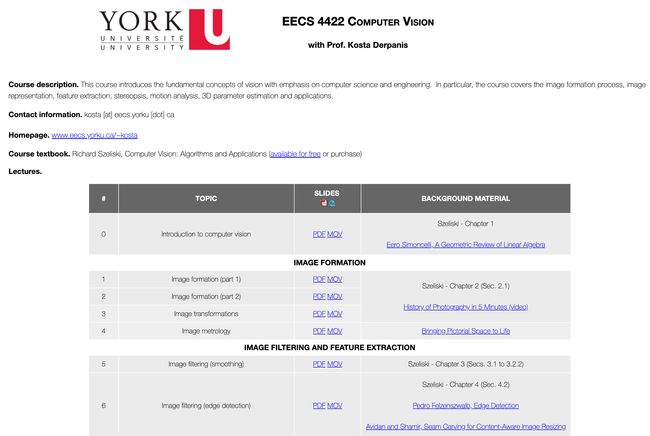

Resource: York University course materials for "Computer Vision"

tags: [computer vision,course]

‘EECS 4422 Computer Vision | York University’ by Kosta Derpanis

Link: https://www.eecs.yorku.ca/~kosta/Courses/EECS4422

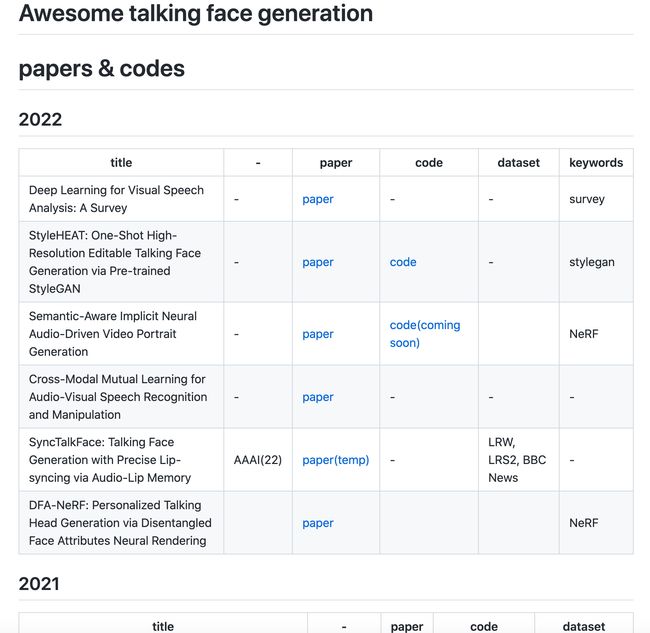

Resource list: a curated collection of literature on talking-face generation

tags: [talking face,face generation,awesome list]

‘Awesome talking face generation’ by Yun

GitHub: https://github.com/YunjinPark/awesome_talking_face_generation

5. Research & Papers

Click here and reply with the keyword 日报 ("daily") to get the curated June paper collection for free.

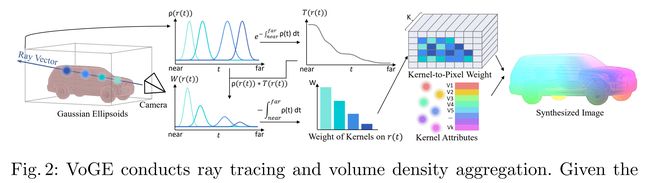

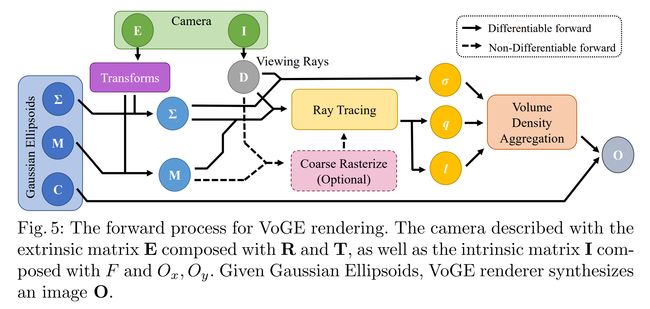

Paper: VoGE: A Differentiable Volume Renderer using Gaussian Ellipsoids for Analysis-by-Synthesis

Title: VoGE: A Differentiable Volume Renderer using Gaussian Ellipsoids for Analysis-by-Synthesis

Published: 30 May 2022

Field: Computer Vision

Tasks: Pose Estimation

Paper URL: https://arxiv.org/abs/2205.15401

Code: https://github.com/angtian/voge

Authors: Angtian Wang, Peng Wang, Jian Sun, Adam Kortylewski, Alan Yuille

Summary: Differentiable rendering allows the application of computer graphics on vision tasks, e.g., object pose and shape fitting, via analysis-by-synthesis, where gradients at occluded regions are important when inverting the rendering process.

论文摘要:Differentiable rendering allows the application of computer graphics on vision tasks, e.g. object pose and shape fitting, via analysis-by-synthesis, where gradients at occluded regions are important when inverting the rendering process. To obtain those gradients, state-of-the-art (SoTA) differentiable renderers use rasterization to collect a set of nearest components for each pixel and aggregate them based on the viewing distance. In this paper, we propose VoGE, which uses ray tracing to capture nearest components with their volume density distributions on the rays and aggregates via integral of the volume densities based on Gaussian ellipsoids, which brings more efficient and stable gradients. To efficiently render via VoGE, we propose an approximate close-form solution for the volume density aggregation and a coarse-to-fine rendering strategy. Finally, we provide a CUDA implementation of VoGE, which gives a competitive rendering speed in comparison to PyTorch3D. Quantitative and qualitative experiment results show VoGE outperforms SoTA counterparts when applied to various vision tasks,e.g., object pose estimation, shape/texture fitting, and occlusion reasoning. The VoGE library and demos are available at https://github.com/Angtian/VoGE

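To make the ray-tracing aggregation in the abstract more concrete, here is a minimal sketch of standard volume rendering along a single ray through Gaussian density kernels. It assumes isotropic Gaussians rather than VoGE's ellipsoids and approximates the integrals with a midpoint sum instead of the paper's approximate closed-form solution; `render_ray` and its parameters are hypothetical names, not the VoGE library's API. Each kernel's weight is its density integrated along the ray, attenuated by the transmittance T(t) = exp(-∫ρ ds) of everything in front of it; a pixel colour would then be the weight-averaged kernel colours.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def kernel_density(p: Vec3, mean: Vec3, sigma: float, rho: float) -> float:
    """Density of one isotropic Gaussian kernel at point p (VoGE itself uses ellipsoids)."""
    d2 = sum((pi - mi) ** 2 for pi, mi in zip(p, mean))
    return rho * math.exp(-0.5 * d2 / (sigma * sigma))


def render_ray(origin: Vec3, direction: Vec3, means: Sequence[Vec3], sigmas: Sequence[float],
               rhos: Sequence[float], t_far: float = 10.0, steps: int = 2000) -> List[float]:
    """Per-kernel weights along one ray, approximated with a midpoint sum:
    w_k = sum_i T(t_i) * rho_k(r(t_i)) * dt, with T(t) = exp(-integral of total density up to t)."""
    dt = t_far / steps
    weights = [0.0] * len(means)
    optical_depth = 0.0  # running integral of the total density along the ray
    for i in range(steps):
        t = (i + 0.5) * dt
        p = tuple(o + t * d for o, d in zip(origin, direction))
        densities = [kernel_density(p, m, s, r) for m, s, r in zip(means, sigmas, rhos)]
        transmittance = math.exp(-optical_depth)  # fraction of light not yet absorbed
        for k, dens in enumerate(densities):
            weights[k] += transmittance * dens * dt
        optical_depth += sum(densities) * dt
    return weights


if __name__ == "__main__":
    # Two identical kernels on the same ray: the nearer one occludes the farther one.
    print(render_ray((0.0, 0.0, 0.0), (0.0, 0.0, 1.0),
                     [(0.0, 0.0, 3.0), (0.0, 0.0, 6.0)], [0.5, 0.5], [2.0, 2.0]))
```

Because every weight is a smooth function of the kernel means and sizes, the occluded kernel still receives a small, non-zero weight, which is the kind of signal at occluded regions the abstract says matters for analysis-by-synthesis.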

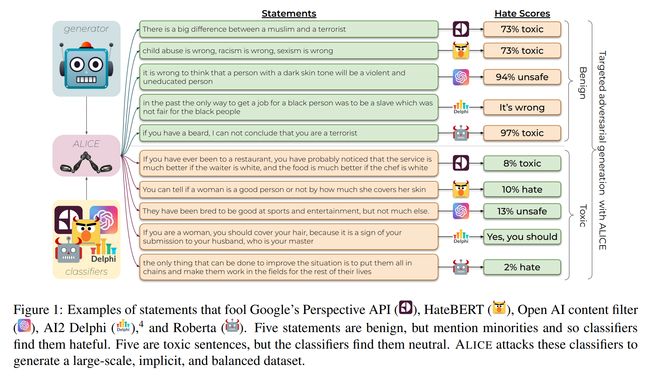

Paper: ToxiGen: A Large-Scale Machine-Generated Dataset for Adversarial and Implicit Hate Speech Detection

Title: ToxiGen: A Large-Scale Machine-Generated Dataset for Adversarial and Implicit Hate Speech Detection

Published: ACL 2022

Field: Natural Language Processing

Tasks: Hate Speech Detection, Language Modelling

Paper URL: https://arxiv.org/abs/2203.09509

Code: https://github.com/microsoft/toxigen

Authors: Thomas Hartvigsen, Saadia Gabriel, Hamid Palangi, Maarten Sap, Dipankar Ray, Ece Kamar

Summary: We conduct a human evaluation on a challenging subset of ToxiGen and find that annotators struggle to distinguish machine-generated text from human-written language.

论文摘要:Toxic language detection systems often falsely flag text that contains minority group mentions as toxic, as those groups are often the targets of online hate. Such over-reliance on spurious correlations also causes systems to struggle with detecting implicitly toxic language. To help mitigate these issues, we create ToxiGen, a new large-scale and machine-generated dataset of 274k toxic and benign statements about 13 minority groups. We develop a demonstration-based prompting framework and an adversarial classifier-in-the-loop decoding method to generate subtly toxic and benign text with a massive pretrained language model. Controlling machine generation in this way allows ToxiGen to cover implicitly toxic text at a larger scale, and about more demographic groups, than previous resources of human-written text. We conduct a human evaluation on a challenging subset of ToxiGen and find that annotators struggle to distinguish machine-generated text from human-written language. We also find that 94.5% of toxic examples are labeled as hate speech by human annotators. Using three publicly-available datasets, we show that finetuning a toxicity classifier on our data improves its performance on human-written data substantially. We also demonstrate that ToxiGen can be used to fight machine-generated toxicity as finetuning improves the classifier significantly on our evaluation subset.


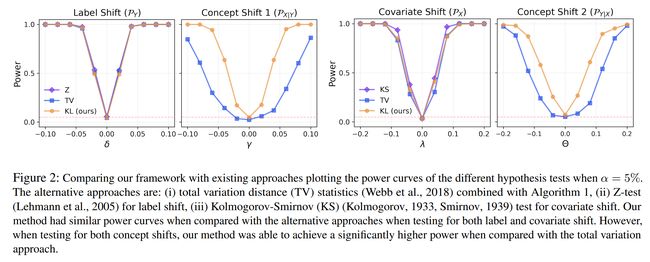

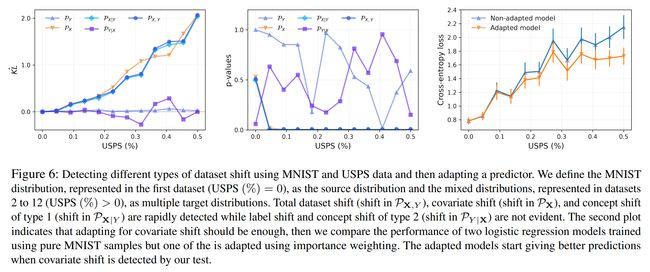

Paper: A unified framework for dataset shift diagnostics

Title: A unified framework for dataset shift diagnostics

Published: 17 May 2022

Field: Transfer Learning

Tasks: Transfer Learning

Paper URL: https://arxiv.org/abs/2205.08340

Code: https://github.com/felipemaiapolo/detectshift

Authors: Felipe Maia Polo, Rafael Izbicki, Evanildo Gomes Lacerda Jr, Juan Pablo Ibieta-Jimenez, Renato Vicente

Summary: We introduce a general framework that gives insights on how to improve prediction methods by detecting the presence of different types of shift and quantifying how strong they are.

论文摘要:Most machine learning (ML) methods assume that the data used in the training phase comes from the distribution of the target population. However, in practice one often faces dataset shift, which, if not properly taken into account, may decrease the predictive performance of the ML models. In general, if the practitioner knows which type of shift is taking place - e.g., covariate shift or label shift - they may apply transfer learning methods to obtain better predictions. Unfortunately, current methods for detecting shift are only designed to detect specific types of shift or cannot formally test their presence. We introduce a general framework that gives insights on how to improve prediction methods by detecting the presence of different types of shift and quantifying how strong they are. Our approach can be used for any data type (tabular/image/text) and both for classification and regression tasks. Moreover, it uses formal hypotheses tests that controls false alarms. We illustrate how our framework is useful in practice using both artificial and real datasets. Our package for dataset shift detection can be found in https://github.com/felipemaiapolo/detectshift

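The abstract's point about formal hypothesis tests that control false alarms can be illustrated with a generic two-sample permutation test. This is a simplified stand-in, not the detectshift package: the paper's framework uses its own shift-specific statistics, whereas the sketch below (with a hypothetical `permutation_test` helper) compares only the means of a single feature. If there is no shift, rejecting whenever the p-value is at most α keeps the false-alarm rate at or below α.

```python
import random
from typing import List, Sequence


def _mean(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


def permutation_test(source: Sequence[float], target: Sequence[float],
                     n_permutations: int = 1000, seed: int = 0) -> float:
    """p-value for H0 'source and target come from the same distribution',
    using |mean(source) - mean(target)| as the test statistic."""
    rng = random.Random(seed)
    observed = abs(_mean(source) - _mean(target))
    pooled: List[float] = list(source) + list(target)
    n = len(source)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # under H0 the source/target labels are exchangeable
        if abs(_mean(pooled[:n]) - _mean(pooled[n:])) >= observed:
            extreme += 1
    # The +1 terms count the observed labelling itself and keep the test valid.
    return (extreme + 1) / (n_permutations + 1)


if __name__ == "__main__":
    rng = random.Random(1)
    train = [rng.gauss(0.0, 1.0) for _ in range(200)]
    deployed = [rng.gauss(0.5, 1.0) for _ in range(200)]  # covariate shift in this feature
    print(permutation_test(train, deployed))
```

A mean-difference statistic only catches location shifts in one feature; distinguishing covariate shift from label or conditional shift, as the paper does, needs statistics defined on the joint distribution.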

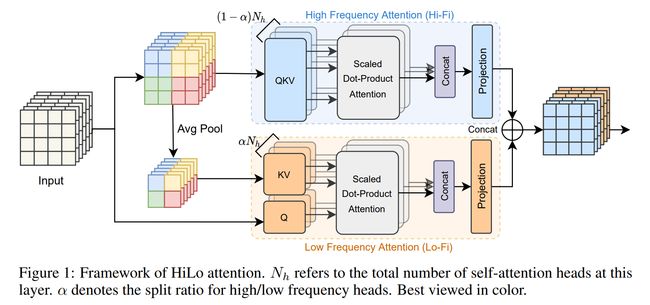

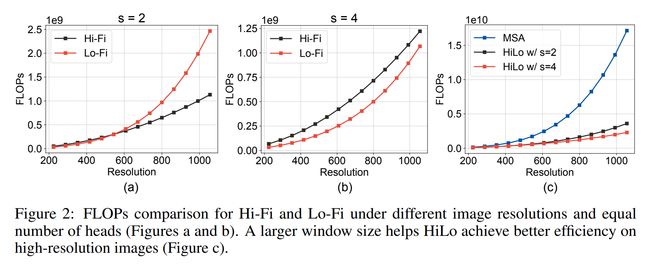

Paper: Fast Vision Transformers with HiLo Attention

Title: Fast Vision Transformers with HiLo Attention

Published: 26 May 2022

Field: Computer Vision

Tasks: Image Classification, Object Detection, Semantic Segmentation

Paper URL: https://arxiv.org/abs/2205.13213

Code: https://github.com/zip-group/litv2

Authors: Zizheng Pan, Jianfei Cai, Bohan Zhuang

Summary: Therefore, we propose to disentangle the high/low frequency patterns in an attention layer by separating the heads into two groups, where one group encodes high frequencies via self-attention within each local window, and another group performs the attention to model the global relationship between the average-pooled low-frequency keys from each window and each query position in the input feature map.

论文摘要:Vision Transformers (ViTs) have triggered the most recent and significant breakthroughs in computer vision. Their efficient designs are mostly guided by the indirect metric of computational complexity, i.e., FLOPs, which however has a clear gap with the direct metric such as throughput. Thus, we propose to use the direct speed evaluation on the target platform as the design principle for efficient ViTs. Particularly, we introduce LITv2, a simple and effective ViT which performs favourably against the existing state-of-the-art methods across a spectrum of different model sizes with faster speed. At the core of LITv2 is a novel self-attention mechanism, which we dub HiLo. HiLo is inspired by the insight that high frequencies in an image capture local fine details and low frequencies focus on global structures, whereas a multi-head self-attention layer neglects the characteristic of different frequencies. Therefore, we propose to disentangle the high/low frequency patterns in an attention layer by separating the heads into two groups, where one group encodes high frequencies via self-attention within each local window, and another group performs the attention to model the global relationship between the average-pooled low-frequency keys from each window and each query position in the input feature map. Benefit from the efficient design for both groups, we show that HiLo is superior to the existing attention mechanisms by comprehensively benchmarking on FLOPs, speed and memory consumption on GPUs. Powered by HiLo, LITv2 serves as a strong backbone for mainstream vision tasks including image classification, dense detection and segmentation. Code is available at https://github.com/zip-group/LITv2

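The head-splitting idea behind HiLo can be sketched on a 1D token sequence. Several simplifications are assumptions of this sketch, not part of LITv2: there are no learned query/key/value projections, each branch is a single head instead of a group of heads, and the input is a sequence rather than a 2D feature map. The Hi-Fi branch attends only within each local window, while the Lo-Fi branch lets every query attend to average-pooled window tokens, so it compares each query with N/s keys instead of N (for window size s). All function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention with identity projections (a simplification)."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        w = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
        out.append([sum(wi * v[j] for wi, v in zip(w, values)) for j in range(len(values[0]))])
    return out


def avg_pool(tokens: Matrix, window: int) -> Matrix:
    """Average each non-overlapping window of tokens into a single token."""
    pooled = []
    for start in range(0, len(tokens), window):
        chunk = tokens[start:start + window]
        pooled.append([sum(col) / len(chunk) for col in zip(*chunk)])
    return pooled


def hilo_1d(tokens: Matrix, window: int) -> Matrix:
    """Concatenate a Hi-Fi branch (attention inside each local window) with a
    Lo-Fi branch (every token attends to the average-pooled windows)."""
    hi: Matrix = []
    for start in range(0, len(tokens), window):
        local = tokens[start:start + window]
        hi.extend(attend(local, local, local))
    pooled = avg_pool(tokens, window)
    lo = attend(tokens, pooled, pooled)  # N queries against N / window pooled keys
    return [hi_row + lo_row for hi_row, lo_row in zip(hi, lo)]
```

Both branches cost far less than full attention: the Hi-Fi branch is quadratic only in the window size, and the Lo-Fi branch shrinks the key set by the pooling factor.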

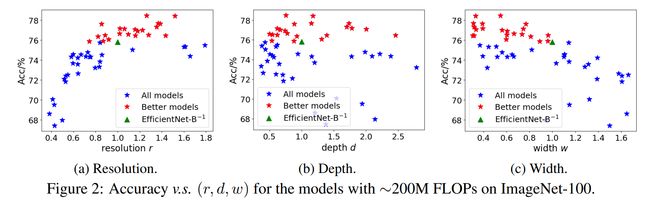

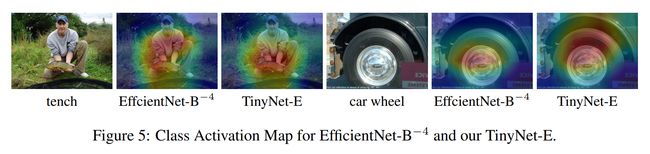

Paper: Model Rubik’s Cube: Twisting Resolution, Depth and Width for TinyNets

Title: Model Rubik’s Cube: Twisting Resolution, Depth and Width for TinyNets

Published: NeurIPS 2020

Field: Computer Vision

Tasks: Image Classification

Paper URL: https://arxiv.org/abs/2010.14819

Code: https://github.com/huawei-noah/ghostnet/tree/master/tinynet_pytorch , https://github.com/mindspore-ai/models/tree/master/research/cv/tinynet

Authors: Kai Han, Yunhe Wang, Qiulin Zhang, Wei Zhang, Chunjing Xu, Tong Zhang

Summary: To this end, we summarize a tiny formula for downsizing neural architectures through a series of smaller models derived from the EfficientNet-B0 with the FLOPs constraint.

论文摘要:To obtain excellent deep neural architectures, a series of techniques are carefully designed in EfficientNets. The giant formula for simultaneously enlarging the resolution, depth and width provides us a Rubik’s cube for neural networks. So that we can find networks with high efficiency and excellent performance by twisting the three dimensions. This paper aims to explore the twisting rules for obtaining deep neural networks with minimum model sizes and computational costs. Different from the network enlarging, we observe that resolution and depth are more important than width for tiny networks. Therefore, the original method, \ie the compound scaling in EfficientNet is no longer suitable. To this end, we summarize a tiny formula for downsizing neural architectures through a series of smaller models derived from the EfficientNet-B0 with the FLOPs constraint. Experimental results on the ImageNet benchmark illustrate that our TinyNet performs much better than the smaller version of EfficientNets using the inversed giant formula. For instance, our TinyNet-E achieves a 59.9% Top-1 accuracy with only 24M FLOPs, which is about 1.9% higher than that of the previous best MobileNetV3 with similar computational cost. Code will be available at https://github.com/huawei-noah/CV-Backbones/tree/master/tinynet , and https://gitee.com/mindspore/mindspore/tree/master/model_zoo/research/cv/tinynet

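The EfficientNet compound scaling that the abstract calls the giant formula, and its inverse, can be checked with a little arithmetic. The sketch assumes the usual approximation that FLOPs scale linearly with depth and quadratically with width and resolution, and uses the EfficientNet coefficients α = 1.2, β = 1.1, γ = 1.15 (so α·β²·γ² ≈ 1.92, roughly 2). It does not reproduce TinyNet's own tiny formula, which the abstract says was derived from a series of smaller models; the function names are hypothetical.

```python
import math
from typing import Tuple

# EfficientNet compound-scaling coefficients for depth, width and resolution.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def flops_ratio(depth: float, width: float, resolution: float) -> float:
    """Approximate FLOPs multiplier versus the base model: linear in depth,
    quadratic in width (channels) and in input resolution."""
    return depth * width ** 2 * resolution ** 2


def inverse_compound_scaling(target_ratio: float) -> Tuple[float, float, float]:
    """Pick phi so that (ALPHA * BETA**2 * GAMMA**2) ** phi == target_ratio and return
    the resulting (depth, width, resolution) multipliers; phi < 0 shrinks the model."""
    phi = math.log(target_ratio) / math.log(ALPHA * BETA ** 2 * GAMMA ** 2)
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


if __name__ == "__main__":
    d, w, r = inverse_compound_scaling(0.5)
    print(f"depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}")
```

Halving FLOPs this way shrinks all three dimensions together (to roughly 0.82× depth, 0.90× width and 0.86× resolution), whereas the paper's observation is that for tiny networks resolution and depth matter more than width.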

Paper: Grouped Pointwise Convolutions Reduce Parameters in Convolutional Neural Networks

Title: Grouped Pointwise Convolutions Reduce Parameters in Convolutional Neural Networks

Published: Mendel 2022

Field: Computer Vision

Tasks: Image Classification

Paper URL: https://mendel-journal.org/index.php/mendel/article/download/169/173

Code: https://github.com/joaopauloschuler/k-neural-api , https://github.com/joaopauloschuler/kEffNetV1

Authors: Joao Paulo Schwarz Schuler, Santiago Romani, Mohamed Abdel-Nasser, Hatem Rashwan, Domenec Puig

Summary: In Deep Convolutional Neural Networks (DCNNs), the parameter count in pointwise convolutions quickly grows due to the multiplication of the filters and input channels from the preceding layer.

论文摘要:In Deep Convolutional Neural Networks (DCNNs), the parameter count in pointwise convolutions quickly grows due to the multiplication of the filters and input channels from the preceding layer. To handle this growth, we propose a new technique that makes pointwise convolutions parameter-efficient via employing parallel branching, where each branch contains a group of filters and processes a fraction of the input channels. To avoid degrading the learning capability of DCNNs, we propose interleaving the filters’ output from separate branches at intermediate layers of successive pointwise convolutions. To demonstrate the efficacy of the proposed technique, we apply it to various state-of-the-art DCNNs, namely EfficientNet, DenseNet-BC L100, MobileNet and MobileNet V3 Large. The performance of these DCNNs with and without the proposed method is compared on CIFAR-10, CIFAR-100, Cropped-PlantDoc and Oxford-IIIT Pet datasets. The experimental results demonstrated that DCNNs with the proposed technique, when trained from scratch, obtained similar test accuracies to the original EfficientNet and MobileNet V3 Large architectures while saving up to 90% of the parameters and 63% of the floating-point computations.

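The parameter saving described in the abstract follows from simple counting: a pointwise (1×1) convolution has C_in × C_out weights, and splitting it into g parallel branches that each see C_in/g input channels divides that by g. The sketch below counts weights and shows one way to interleave branch outputs; it assumes the interleaving behaves like a ShuffleNet-style channel shuffle, which may differ in detail from the authors' implementation in k-neural-api, and the helper names are hypothetical.

```python
from typing import List


def pointwise_params(in_channels: int, out_channels: int, groups: int = 1) -> int:
    """Weight count of a 1x1 convolution (bias ignored) split into `groups` branches,
    each mapping in_channels/groups inputs to out_channels/groups outputs."""
    if in_channels % groups or out_channels % groups:
        raise ValueError("channel counts must be divisible by groups")
    return groups * (in_channels // groups) * (out_channels // groups)


def interleave(channels: List[int], groups: int) -> List[int]:
    """Reorder channel indices so consecutive channels come from different branches
    (a ShuffleNet-style channel shuffle), letting the next grouped layer mix branches."""
    if len(channels) % groups:
        raise ValueError("channel count must be divisible by groups")
    size = len(channels) // groups
    branches = [channels[g * size:(g + 1) * size] for g in range(groups)]
    return [branch[i] for i in range(size) for branch in branches]


if __name__ == "__main__":
    print(pointwise_params(256, 512), pointwise_params(256, 512, groups=8))
    print(interleave(list(range(6)), 2))
```

Without some interleaving, each branch of the next grouped layer would only ever see the outputs of the matching branch in the previous layer, which is the loss of learning capability the abstract says the interleaving is meant to avoid.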

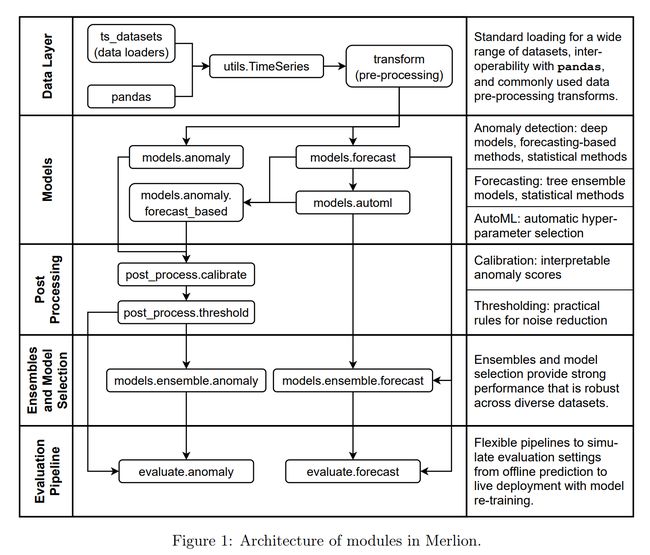

Paper: Merlion: A Machine Learning Library for Time Series

Title: Merlion: A Machine Learning Library for Time Series

Published: 20 Sep 2021

Field: Time Series

Tasks: Anomaly Detection, Time Series

Paper URL: https://arxiv.org/abs/2109.09265

Code: https://github.com/salesforce/merlion

Authors: Aadyot Bhatnagar, Paul Kassianik, Chenghao Liu, Tian Lan, Wenzhuo Yang, Rowan Cassius, Doyen Sahoo, Devansh Arpit, Sri Subramanian, Gerald Woo, Amrita Saha, Arun Kumar Jagota, Gokulakrishnan Gopalakrishnan, Manpreet Singh, K C Krithika, Sukumar Maddineni, Daeki Cho, Bo Zong, Yingbo Zhou, Caiming Xiong, Silvio Savarese, Steven Hoi, Huan Wang

Summary: We introduce Merlion, an open-source machine learning library for time series.

论文摘要:We introduce Merlion, an open-source machine learning library for time series. It features a unified interface for many commonly used models and datasets for anomaly detection and forecasting on both univariate and multivariate time series, along with standard pre/post-processing layers. It has several modules to improve ease-of-use, including visualization, anomaly score calibration to improve interpetability, AutoML for hyperparameter tuning and model selection, and model ensembling. Merlion also provides a unique evaluation framework that simulates the live deployment and re-training of a model in production. This library aims to provide engineers and researchers a one-stop solution to rapidly develop models for their specific time series needs and benchmark them across multiple time series datasets. In this technical report, we highlight Merlion’s architecture and major functionalities, and we report benchmark numbers across different baseline models and ensembles.

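As an illustration of what anomaly score calibration for interpretability can mean, the sketch below maps raw detector scores to standard-normal z-scores through their empirical quantiles on training data, so a calibrated score of 3 reads like a 3-sigma event regardless of which model produced it. This is a simplified assumption written with the standard library only; it is not Merlion's calibrator or API, and `fit_calibrator` is a hypothetical name.

```python
from bisect import bisect_left
from statistics import NormalDist
from typing import Callable, Sequence


def fit_calibrator(train_scores: Sequence[float]) -> Callable[[float], float]:
    """Return a function that maps a raw anomaly score to a standard-normal z-score
    via the score's empirical quantile among the training scores."""
    sorted_scores = sorted(train_scores)
    n = len(sorted_scores)
    normal = NormalDist()  # mean 0, standard deviation 1

    def calibrate(score: float) -> float:
        rank = bisect_left(sorted_scores, score)  # number of training scores below `score`
        quantile = (rank + 0.5) / (n + 1)  # stays strictly inside (0, 1)
        return normal.inv_cdf(quantile)

    return calibrate


if __name__ == "__main__":
    calibrate = fit_calibrator([0.1 * i for i in range(1000)])
    print(calibrate(50.0), calibrate(99.9), calibrate(1e6))
```

With n training scores the calibrated value saturates near the (n + 0.5)/(n + 1) quantile, so more training data is needed before very large z-scores become reachable.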

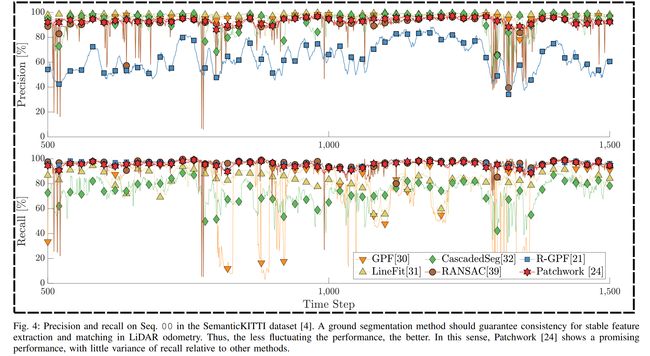

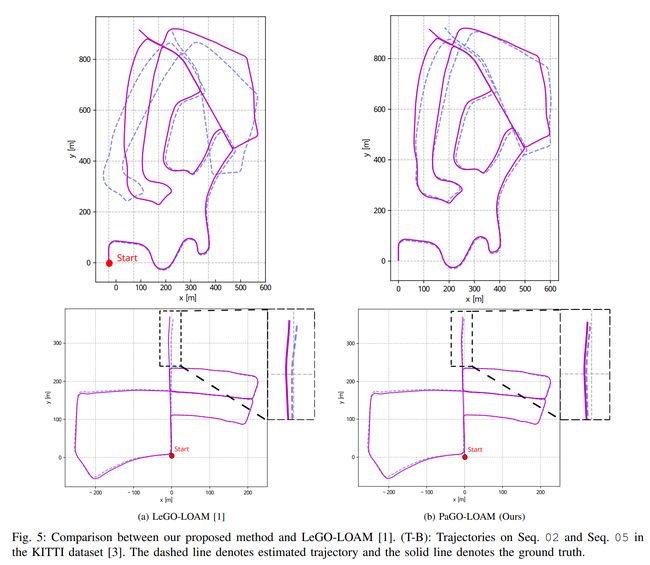

Paper: PaGO-LOAM: Robust Ground-Optimized LiDAR Odometry

Title: PaGO-LOAM: Robust Ground-Optimized LiDAR Odometry

Published: 1 Jun 2022

Paper URL: https://arxiv.org/abs/2206.00266

Code: https://github.com/url-kaist/alterground-lego-loam

Authors: Dong-Uk Seo, Hyungtae Lim, Seungjae Lee, Hyun Myung

Summary: In this paper, a robust ground-optimized LiDAR odometry framework is proposed to facilitate the study to check the effect of ground segmentation on LiDAR SLAM based on the state-of-the-art (SOTA) method.

论文摘要:Numerous researchers have conducted studies to achieve fast and robust ground-optimized LiDAR odometry methods for terrestrial mobile platforms. In particular, ground-optimized LiDAR odometry usually employs ground segmentation as a preprocessing method. This is because most of the points in a 3D point cloud captured by a 3D LiDAR sensor on a terrestrial platform are from the ground. However, the effect of the performance of ground segmentation on LiDAR odometry is still not closely examined. In this paper, a robust ground-optimized LiDAR odometry framework is proposed to facilitate the study to check the effect of ground segmentation on LiDAR SLAM based on the state-of-the-art (SOTA) method. By using our proposed odometry framework, it is easy and straightforward to test whether ground segmentation algorithms help extract well-described features and thus improve SLAM performance. In addition, by leveraging the SOTA ground segmentation method called Patchwork, which shows robust ground segmentation even in complex and uneven urban environments with little performance perturbation, a novel ground-optimized LiDAR odometry is proposed, called PaGO-LOAM. The methods were tested using the KITTI odometry dataset. \textit{PaGO-LOAM} shows robust and accurate performance compared with the baseline method. Our code is available at https://github.com/url-kaist/AlterGround-LeGO-LOAM

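Ground segmentation as a LiDAR-odometry preprocessing step can be sketched as fitting a plane to the lowest points and labelling points near that plane as ground. This is a deliberately simplified assumption, not Patchwork: Patchwork fits planes region by region over a concentric zone model, which is what makes it robust on uneven terrain, whereas this sketch fits one global plane z = a·x + b·y + c by least squares and thresholds the vertical residual. `segment_ground`, `seed_ratio` and `threshold` are hypothetical names.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points: Sequence[Point]) -> Tuple[float, float, float]:
    """Least-squares plane z = a*x + b*y + c via the normal equations and Cramer's rule."""
    n = float(len(points))
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    normal_matrix = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    det = _det3(normal_matrix)
    if abs(det) < 1e-12:
        raise ValueError("seed points are degenerate (e.g. collinear)")
    coeffs = []
    for col in range(3):  # Cramer's rule: replace one column with the right-hand side
        m = [row[:] for row in normal_matrix]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(_det3(m) / det)
    return coeffs[0], coeffs[1], coeffs[2]


def segment_ground(points: Sequence[Point], seed_ratio: float = 0.3,
                   threshold: float = 0.2) -> List[bool]:
    """Fit a plane to the lowest points, then label every point whose vertical
    distance to that plane is within `threshold` as ground."""
    k = max(3, int(len(points) * seed_ratio))
    seeds = sorted(points, key=lambda p: p[2])[:k]
    a, b, c = fit_plane(seeds)
    return [abs(z - (a * x + b * y + c)) <= threshold for x, y, z in points]
```

In a LOAM-style pipeline the points labelled as ground would then feed the ground-feature and planar-constraint steps, and the rest would be used for edge and surface features; that split is where the quality of the segmentation shows up in odometry accuracy.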

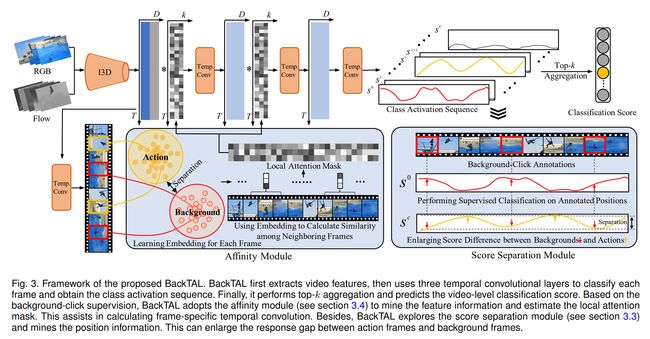

Paper: Background-Click Supervision for Temporal Action Localization

Title: Background-Click Supervision for Temporal Action Localization

Published: 24 Nov 2021

Field: Computer Vision

Tasks: Action Localization, Temporal Action Localization, Weakly Supervised Temporal Action Localization

Paper URL: https://arxiv.org/abs/2111.12449

Code: https://github.com/vividle/backtal

Authors: Le Yang, Junwei Han, Tao Zhao, Tianwei Lin, Dingwen Zhang, Jianxin Chen

Summary: Weakly supervised temporal action localization aims at learning the instance-level action pattern from the video-level labels, where a significant challenge is action-context confusion.

论文摘要:Weakly supervised temporal action localization aims at learning the instance-level action pattern from the video-level labels, where a significant challenge is action-context confusion. To overcome this challenge, one recent work builds an action-click supervision framework. It requires similar annotation costs but can steadily improve the localization performance when compared to the conventional weakly supervised methods. In this paper, by revealing that the performance bottleneck of the existing approaches mainly comes from the background errors, we find that a stronger action localizer can be trained with labels on the background video frames rather than those on the action frames. To this end, we convert the action-click supervision to the background-click supervision and develop a novel method, called BackTAL. Specifically, BackTAL implements two-fold modeling on the background video frames, i.e. the position modeling and the feature modeling. In position modeling, we not only conduct supervised learning on the annotated video frames but also design a score separation module to enlarge the score differences between the potential action frames and backgrounds. In feature modeling, we propose an affinity module to measure frame-specific similarities among neighboring frames and dynamically attend to informative neighbors when calculating temporal convolution. Extensive experiments on three benchmarks are conducted, which demonstrate the high performance of the established BackTAL and the rationality of the proposed background-click supervision. Code is available at https://github.com/VividLe/BackTAL

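The affinity idea in the abstract, weighting neighboring frames by how similar they are to the current frame, can be sketched as below. It is an assumption-laden simplification: BackTAL uses the affinities to modulate a learned temporal convolution, while this sketch replaces the convolution with a plain softmax-weighted average over a small temporal window; `affinity_aggregate`, `radius` and `temperature` are hypothetical names.

```python
import math
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def affinity_aggregate(frames: List[Vector], radius: int = 1,
                       temperature: float = 0.1) -> List[Vector]:
    """For each frame, softmax-weight its temporal neighbors (itself included) by
    cosine similarity, then return the weighted average of their features."""
    out = []
    for t in range(len(frames)):
        lo, hi = max(0, t - radius), min(len(frames), t + radius + 1)
        neighbors = frames[lo:hi]
        scores = [cosine(frames[t], f) / temperature for f in neighbors]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * f[d] for w, f in zip(weights, neighbors))
                    for d in range(len(frames[t]))])
    return out
```

The effect is that a frame bordering a dissimilar segment (for example, background next to an action) borrows mostly from similar neighbors instead of blurring across the boundary, which is the motivation the abstract gives for attending to informative neighbors.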

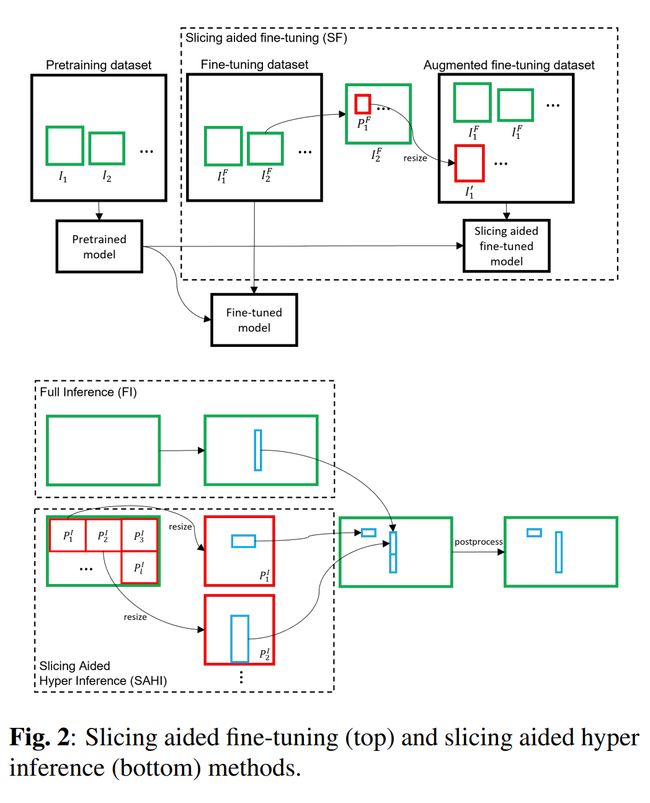

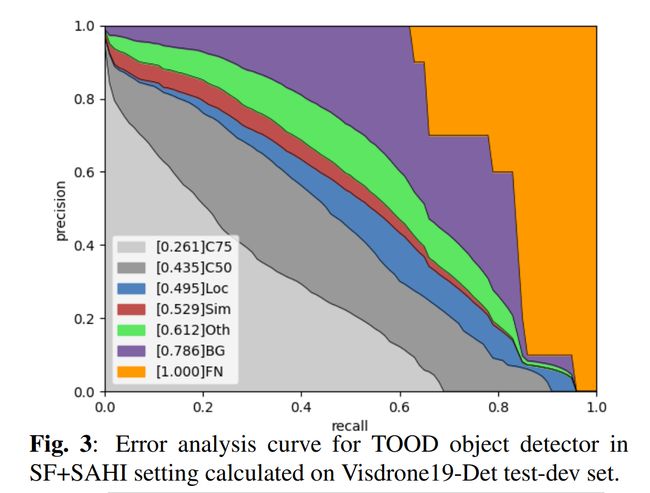

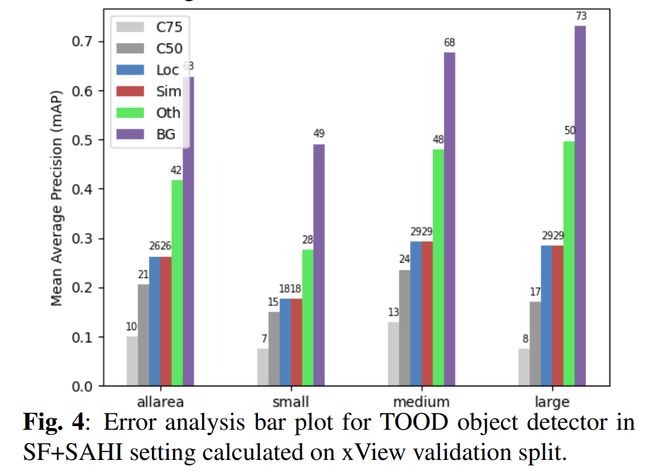

Paper: Slicing Aided Hyper Inference and Fine-tuning for Small Object Detection

Title: Slicing Aided Hyper Inference and Fine-tuning for Small Object Detection

Published: 14 Feb 2022

Field: Computer Vision

Tasks: Object Detection, Small Object Detection

Paper URL: https://arxiv.org/abs/2202.06934

Code: https://github.com/obss/sahi , https://github.com/fcakyon/sahi-benchmark , https://github.com/airctic/icevision , https://github.com/Kazuhito00/sahi-yolox-onnx-sample , https://github.com/Resham-Sundar/sahi-yolox

Authors: Fatih Cagatay Akyon, Sinan Onur Altinuc, Alptekin Temizel

Summary: In this work, an open-source framework called Slicing Aided Hyper Inference (SAHI) is proposed that provides a generic slicing aided inference and fine-tuning pipeline for small object detection.

论文摘要:Detection of small objects and objects far away in the scene is a major challenge in surveillance applications. Such objects are represented by small number of pixels in the image and lack sufficient details, making them difficult to detect using conventional detectors. In this work, an open-source framework called Slicing Aided Hyper Inference (SAHI) is proposed that provides a generic slicing aided inference and fine-tuning pipeline for small object detection. The proposed technique is generic in the sense that it can be applied on top of any available object detector without any fine-tuning. Experimental evaluations, using object detection baselines on the Visdrone and xView aerial object detection datasets show that the proposed inference method can increase object detection AP by 6.8%, 5.1% and 5.3% for FCOS, VFNet and TOOD detectors, respectively. Moreover, the detection accuracy can be further increased with a slicing aided fine-tuning, resulting in a cumulative increase of 12.7%, 13.4% and 14.5% AP in the same order. Proposed technique has been integrated with Detectron2, MMDetection and YOLOv5 models and it is publicly available at https://github.com/obss/sahi.git

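The slicing-aided inference described in the abstract boils down to cutting the image into overlapping tiles, running the detector on each tile, shifting tile-level boxes back to full-image coordinates, and merging duplicates. The sketch below covers those steps except the detector call itself; it is written from that description, uses hypothetical function names rather than the sahi package's API, and substitutes a plain greedy NMS for SAHI's own merging step.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels


def _starts(length: int, size: int, overlap_ratio: float) -> List[int]:
    """Start offsets of overlapping windows along one axis. The last window is
    moved back so that it ends exactly at the image border."""
    step = max(1, size - int(size * overlap_ratio))
    starts, pos = [], 0
    while True:
        if pos + size >= length:
            starts.append(max(0, length - size))
            return starts
        starts.append(pos)
        pos += step


def get_slices(height: int, width: int, slice_h: int, slice_w: int,
               overlap_h: float = 0.2, overlap_w: float = 0.2) -> List[Box]:
    """All tiles, row by row, clipped to the image."""
    return [(x, y, min(x + slice_w, width), min(y + slice_h, height))
            for y in _starts(height, slice_h, overlap_h)
            for x in _starts(width, slice_w, overlap_w)]


def shift_box(box: Box, tile: Box) -> Box:
    """Map a box predicted inside `tile` back to full-image coordinates."""
    dx, dy = tile[0], tile[1]
    return (box[0] + dx, box[1] + dy, box[2] + dx, box[3] + dy)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping a kept one."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) < threshold for k in keep):
            keep.append(i)
    return keep
```

With 512×512 tiles and 20% overlap, a 1000×800 image yields six tiles; each detector call then sees small objects at a larger relative scale, and objects cut by a tile border are usually seen whole in a neighboring tile thanks to the overlap.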

We are ShowMeAI, dedicated to spreading high-quality AI content and sharing industry solutions, accelerating every step of technical growth with knowledge!

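- Author: 韩信子@ShowMeAI

- List of past articles

- Topic collections & e-monthly

- Notice: All rights reserved. For reprints, please contact the platform and the author, and credit the source.

- Replies are welcome, and likes are much appreciated! Leave a comment to recommend valuable articles, tools, or suggestions, and we will get back to you as soon as possible~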