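Scraping Alibaba.com (the international site) with Selenium

The goal is to lighten the workload of a colleague in purchasing.

All of the code is available here: https://github.com/jevy146/selenium_Alibaba

Step 1: collect the product information.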

# -*- coding: utf-8 -*-
# @Time : 2020/6/17 14:09
# @Author : 结尾!!
# @FileName: D01_spider_alibaba_com.py
# @Software: PyCharm
from selenium.webdriver import ChromeOptions
import time
from fake_useragent import UserAgent
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait

# Step 1: open alibaba.com and log in

class Chrome_drive():
    def __init__(self):
        ua = UserAgent()  # only used if the user-agent line below is enabled
        option = ChromeOptions()
        option.add_experimental_option('excludeSwitches', ['enable-automation'])
        option.add_experimental_option('useAutomationExtension', False)
        NoImage = {"profile.managed_default_content_settings.images": 2}  # do not load images
        option.add_experimental_option("prefs", NoImage)
        # option.add_argument(f'user-agent={ua.chrome}')  # set a custom User-Agent header
        # option.add_argument(f"--proxy-server=http://{self.ip}")  # route traffic through a proxy IP
        option.add_argument('--headless')  # headless mode: no browser window; remove this line to log in by hand
        self.browser = webdriver.Chrome(options=option)
        self.browser.execute_cdp_cmd('Page.addScriptToEvaluateOnNewDocument', {
            'source': 'Object.defineProperty(navigator,"webdriver",{get:()=>undefined})'
        })  # hide the navigator.webdriver flag that Selenium sets
        self.browser.set_window_size(1200, 768)
        self.wait = WebDriverWait(self.browser, 12)

    def get_login(self):
        url = 'https://www.alibaba.com/'
        self.browser.get(url)
        # self.browser.maximize_window()  # logged in here with a mainland China postal code
        # Log in manually at this point.
        time.sleep(2)
        self.browser.refresh()  # reload the page
        return

    # Fetch one results page and pass its HTML to the parser:
    def index_page(self, page, wd):
        """
        Scrape one index (search results) page.
        :param page: page number
        :param wd: search keyword
        """
        print('Scraping page', page)
        words = wd.replace(' ', '_')
        url = f'https://www.alibaba.com/products/{words}.html?IndexArea=product_en&page={page}'
        js1 = f" window.open('{url}')"  # JavaScript that opens the URL in a new tab
        print(url)
        self.browser.execute_script(js1)  # open the new tab
        self.browser.switch_to.window(self.browser.window_handles[-1])  # switch to the tab that was just opened
        self.buffer()  # scroll down the page now that the switch succeeded
        # Wait for the pagination element to load
        time.sleep(3)
        self.wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, '#root > div > div.seb-pagination > div > div.seb-pagination__pages > a:nth-child(10)')))
        # Grab the page source
        html = self.browser.page_source
        get_products(wd, html)
        self.close_window()

    def buffer(self):  # scroll the page step by step
        for i in range(20):
            time.sleep(0.8)
            self.browser.execute_script('window.scrollBy(0,380)', '')  # scroll down 380 pixels

    def close_window(self):
        length = self.browser.window_handles
        print('length', length)  # the currently open window handles
        if len(length) > 3:
            # More than three tabs open: close the oldest results tab
            self.browser.switch_to.window(self.browser.window_handles[1])
            self.browser.close()
            time.sleep(1)
            self.browser.switch_to.window(self.browser.window_handles[-1])

import csv

def save_csv(list_line):
    # Append one row to the CSV; the with-block closes the file after each write
    with open("./alibaba_com_img.csv", 'a', newline="", encoding="utf-8") as f:
        csv.writer(f).writerow(list_line)

# Parse the page
from scrapy.selector import Selector

def get_products(wd, html_text):
    """
    Extract the product data.
    """
    select = Selector(text=html_text)
    # about 47 items per page
    items = select.xpath('//*[@id="root"]/div/div[3]/div[2]/div/div/div/*').extract()
    print('Number of products ', len(items))
    for i in range(1, 49):
        # XPath prefix shared by every field of the i-th product card
        card = f'//*[@id="root"]/div/div[3]/div[2]/div/div/div/div[{i}]/div'
        title = select.xpath(f'{card}/div[2]/h4/a/@title').extract()  # product title
        title_href = select.xpath(f'{card}/div[2]/h4/a/@href').extract()  # product detail page
        start_num = select.xpath(f'{card}/div[2]/div[1]/div/p[2]/span/text()').extract()  # minimum order quantity
        price = select.xpath(f'{card}/div[2]/div[1]/div/p[1]/@title').extract()  # product price
        adress_href = select.xpath(f'{card}/div[3]/a/@href').extract()  # supplier link
        adress = select.xpath(f'{card}/div[3]/a/@title').extract()  # supplier address
        Response_Rate = select.xpath(f'{card}/div[3]/div[2]/div[1]/span/span/text()').extract()  # response rate
        Transaction = select.xpath(f'{card}/div[3]/div[2]/div[2]/span//text()').extract()  # transaction volume
        img = select.xpath(f'{card}/div[1]/a/div[2]/div[1]/img/@src').extract()  # image
        all_down = [wd] + title + img + title_href + start_num + price + adress + adress_href + Response_Rate + Transaction
        save_csv(all_down)
        print(title, img, title_href, start_num, price, adress, adress_href, Response_Rate, Transaction)

def main():
    """
    Loop over every keyword and every page.
    """
    run = Chrome_drive()
    run.get_login()  # log in first (scan the QR code)
    wd = ['turkey fryer', 'towel warmer']
    for w in wd:
        for i in range(1, 6):
            run.index_page(i, w)

if __name__ == '__main__':
    csv_title = 'word,title,img,title_href,start_num,price,adress,adress_href,Response_Rate,Transaction,Transactioning'.split(',')
    save_csv(csv_title)
    main()
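
A side note on how index_page builds the search URL: it only replaces spaces with underscores, so a keyword containing characters such as `&` or `/` would end up in the URL unescaped. Below is a minimal sketch of a more defensive builder using only the standard library. The function name `build_search_url` is hypothetical, and whether Alibaba accepts percent-encoded keywords in this path is an assumption I have not verified; for plain keywords like the ones used above it yields the same URL as the original code.

```python
from urllib.parse import quote, urlencode


def build_search_url(keyword: str, page: int) -> str:
    """Build a results-page URL in the same shape index_page uses.

    Hypothetical helper: joins words with underscores (collapsing repeated
    whitespace, unlike str.replace) and percent-encodes anything unsafe.
    """
    slug = quote("_".join(keyword.split()))
    query = urlencode({"IndexArea": "product_en", "page": page})
    return f"https://www.alibaba.com/products/{slug}.html?{query}"


print(build_search_url("turkey fryer", 2))
```
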

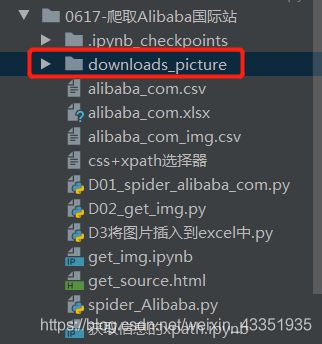
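
One thing to watch in get_products: each `extract()` call returns a list, and the row is built by concatenating those lists. If a card is missing a field (an empty list), every later value shifts one column to the left and no longer lines up with the header. A minimal sketch of one way to keep the columns fixed follows; `pad` and `build_row` are hypothetical helpers, the sample values are made up, and the two-column width for Transaction is an assumption based on the header having both `Transaction` and `Transactioning`.

```python
def pad(values, width=1, fill=""):
    """Return exactly `width` items: drop extras, fill gaps with `fill`."""
    values = list(values)[:width]
    return values + [fill] * (width - len(values))


def build_row(keyword, fields):
    """fields: (extracted_values, column_count) pairs in header order."""
    row = [keyword]
    for values, width in fields:
        row.extend(pad(values, width))
    return row


# Made-up sample: the image is missing, yet later columns stay in place.
row = build_row("turkey fryer", [
    (["Sample fryer title"], 1),        # title
    ([], 1),                            # img (missing on this card)
    (["//example.invalid/item"], 1),    # title_href
    (["10+ orders", "$5,000+"], 2),     # Transaction, Transactioning
])
print(row)
```

With rows built this way, reading the `img` column by name in the next step always returns either an image URL or an empty cell.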

Step 2: download the images by running D02_get_img.py. The images all end up in the ./downloads_picture folder.

# -*- coding: utf-8 -*-
# @Time : 2020/6/17 14:41
# @Author : 结尾!!
# @FileName: D02_get_img.py
# @Software: PyCharm
import os
import requests
import pandas as pd

os.makedirs('./downloads_picture', exist_ok=True)  # make sure the target folder exists

def open_requests(img, img_name):
    img_url = 'https:' + img  # the scraped src has no scheme, so prepend one
    res = requests.get(img_url)
    with open(f"./downloads_picture/{img_name}", 'wb') as fn:
        fn.write(res.content)

df1 = pd.read_csv('./alibaba_com_img.csv')
for img in df1["img"]:
    if pd.isnull(img):
        continue  # this row has no image
    if '@sc01' in img:
        img_name = img[24:]  # drop the 24-character URL prefix, keeping the file name
        print(img, img_name)
        open_requests(img, img_name)
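
The `img[24:]` slice relies on every image URL having a prefix of exactly 24 characters. As a sketch of a less position-dependent alternative, the file name can be taken from the last segment of the URL path with the standard library. The helper name `image_name_from_url` is hypothetical, and the sample URL is made up; its shape is only inferred from the `'@sc01'` check and the 24-character slice in the script above.

```python
import posixpath
from urllib.parse import urlsplit


def image_name_from_url(img_url: str) -> str:
    """Return the last path segment of a (possibly scheme-relative) URL."""
    return posixpath.basename(urlsplit(img_url).path)


# Made-up URL in the assumed shape: 24-character prefix, then the file name.
sample = "//s.alicdn.com/@sc01/kf/Hexample.jpg_300x300.jpg"
print(image_name_from_url(sample))
```

For URLs in that assumed shape this gives the same name as `img[24:]`, and it keeps working if the host or prefix length changes.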

Step 3: insert the images into the corresponding Excel workbook.

The script is D3将图片插入到excel中.py

# -*- coding: utf-8 -*-
# @Time : 2020/1/19 10:17
# @Author : 结尾!!
# @FileName: 4将图片插入到excel中.py
# @Software: PyCharm
from PIL import Image
import os
import xlwings as xw

path = './alibaba_com.xlsx'
app = xw.App(visible=True, add_book=False)
wb = app.books.open(path)
sht = wb.sheets['alibaba_com_img']
img_list = sht.range("D2").expand('down').value  # image URLs, read from column D
print(len(img_list))

def write_pic(cell, img_name):
    path = f'./downloads_picture/{img_name}'
    print(path)
    fileName = os.path.join(os.getcwd(), path)
    img = Image.open(path).convert("RGB")
    print(img.size)
    w, h = img.size
    x_s = 70  # picture width; in Excel I set the cells to a 200x200 format
    y_s = h * x_s / w  # scale the height proportionally
    sht.pictures.add(fileName, left=sht.range(cell).left, top=sht.range(cell).top, width=x_s, height=y_s)

if __name__ == '__main__':
    for index, img in enumerate(img_list):
        cell = "B" + str(index + 2)  # pictures go into column B, starting at row 2
        if '@sc01' in img:
            img_name = img[24:]
            try:
                write_pic(cell, img_name)
                print(cell, img_name)
            except Exception:
                print("No image found for this img_name", img_name)
    wb.save()
    wb.close()
    app.quit()
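
write_pic fixes the picture width at 70 and scales the height to match, so a tall, narrow image can come out taller than its cell and overlap the rows below. Here is a minimal sketch of scaling that bounds both dimensions instead. `fit_in_box` is a hypothetical helper, and the 70x70 box is an assumption chosen for illustration; substitute whatever cell size the sheet actually uses.

```python
def fit_in_box(size, max_width, max_height):
    """Scale (w, h) to fit inside the box while keeping the aspect ratio."""
    w, h = size
    if w <= 0 or h <= 0:
        raise ValueError("image dimensions must be positive")
    scale = min(max_width / w, max_height / h)
    return w * scale, h * scale


# A wide image is limited by the width, a tall one by the height.
wide = fit_in_box((300, 150), 70, 70)
tall = fit_in_box((300, 600), 70, 70)
print(wide, tall)
```

The two returned values could be passed as `width` and `height` to `sht.pictures.add` in place of `x_s` and `y_s`.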