VARIATIONAL IMAGE COMPRESSION WITH A SCALE HYPERPRIOR: Reproducing the Paper's Experiments

Preface

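This paper builds on END-TO-END OPTIMIZED IMAGE COMPRESSION. The main change is an added hyperprior network that models the side information. For environment setup and background, see my earlier post on END-TO-END OPTIMIZED IMAGE COMPRESSION.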

GitHub repository: github

1. Commands

(1) Training

```shell
python bmshj2018.py -V train
```

As before, I added a launch.json to make debugging easier:

```json
{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Python: Current File",
            "type": "python",
            "request": "launch",
            "program": "${file}",
            "console": "integratedTerminal",
            "justMyCode": true,
            // bmshj2018
            "args": ["--verbose", "train"] // train
        }
    ]
}
```

2. Dataset

```python
if args.train_glob:
    train_dataset = get_custom_dataset("train", args)
    validation_dataset = get_custom_dataset("validation", args)
else:
    train_dataset = get_dataset("clic", "train", args)
    validation_dataset = get_dataset("clic", "validation", args)
validation_dataset = validation_dataset.take(args.max_validation_steps)
```

If a dataset path is given (`args.train_glob`), that dataset is used; otherwise the default CLIC dataset is used.

3. Walking Through the Training Process

The code is essentially an extension of bls2017.py, so the overall flow is the same as in bls2017. Below is a brief summary of the training process for this paper.

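- Instantiate the model

`__init__` initializes the parameters required by the two entropy models, LocationScaleIndexedEntropyModel and ContinuousBatchedEntropyModel (see the tfc entropy model documentation for details); specifically, `scale_fn` and the given `num_scales`.

It also sets up the nonlinear analysis transform, the nonlinear synthesis transform, the hyperprior analysis and synthesis transforms, the addition of uniform noise, and the prior probability model.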
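The `scale_fn` mentioned above maps a continuous index to the scale of a Gaussian. A minimal sketch, assuming the log-uniform scale table used in tfc's bmshj2018.py with its defaults scale_min=0.11, scale_max=256 and num_scales=64 (check these against your copy of the script); plain `math` stands in for the TensorFlow ops:

```python
import math


def make_scale_fn(scale_min=0.11, scale_max=256.0, num_scales=64):
    """Return a function mapping an index in [0, num_scales - 1] to a scale.

    Scales are spaced uniformly in the log domain between scale_min and
    scale_max (defaults assumed from tfc's bmshj2018.py).
    """
    offset = math.log(scale_min)
    factor = (math.log(scale_max) - math.log(scale_min)) / (num_scales - 1.0)
    return lambda i: math.exp(offset + factor * i)


scale_fn = make_scale_fn()
print(round(scale_fn(0), 4), round(scale_fn(63), 4))      # endpoints of the table
print(scale_fn(1) / scale_fn(0), scale_fn(2) / scale_fn(1))  # constant ratio
```

As I understand the tfc documentation, the hyperprior synthesis transform outputs continuous indexes, which are rounded to integers when actually compressing, so range-coding tables only have to be built for `num_scales` distinct scales. That is why only `scale_fn` and `num_scales` need to be passed in.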

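`call` computes the following through the two entropy models:

(1) x -> y (nonlinear analysis transform)

(2) y -> z (hyperprior analysis transform; tfc's code applies it to abs(y))

(3) z -> z_hat and the number of side-information bits (ContinuousBatchedEntropyModel)

(4) y -> y_hat and its number of bits (LocationScaleIndexedEntropyModel, whose scale indexes are obtained by applying the hyperprior synthesis transform to z_hat)

(5) y_hat -> x_hat (nonlinear synthesis transform), then bpp, mse and loss (bpp = bits per pixel, where the bit count is the sum of the two bit counts above)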
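Steps (3) to (5) boil down to a few formulas. The pure-Python sketch below assumes: uniform noise in place of rounding during training; a zero-mean Gaussian smoothed by unit-width uniform noise as the likelihood of each latent (my reading of tfc's NoisyNormal), with a 1e-9 probability floor similar to tfc's likelihood bound; and, as in tfc's `call`, `bpp = (bits of y + bits of z) / num_pixels` with `num_pixels` counting every pixel in the batch, and `loss = bpp + lmbda * mse`. All function names are hypothetical:

```python
import math
import random


def add_uniform_noise(values, rng):
    # Training-time stand-in for rounding: y + U(-0.5, 0.5).
    return [v + rng.uniform(-0.5, 0.5) for v in values]


def normal_cdf(t):
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def gaussian_bits(value, scale, likelihood_bound=1e-9):
    # Mass of N(0, scale^2) on [value - 0.5, value + 0.5], i.e. the density
    # of the Gaussian convolved with U(-0.5, 0.5), floored to avoid log(0).
    p = normal_cdf((value + 0.5) / scale) - normal_cdf((value - 0.5) / scale)
    return -math.log2(max(p, likelihood_bound))


def rate_distortion(bits_y, bits_z, num_pixels, mse, lmbda):
    # bpp counts both the latent bits and the side-information bits.
    bpp = (bits_y + bits_z) / num_pixels
    loss = bpp + lmbda * mse  # rate-distortion Lagrangian
    return loss, bpp


rng = random.Random(0)
y_tilde = add_uniform_noise([0.0, 1.0, -3.0], rng)
scales = [0.5, 2.0, 4.0]  # in the model: scale_fn(hyper_synthesis(z_hat))
print(sum(gaussian_bits(v, s) for v, s in zip(y_tilde, scales)))

# A batch of 8 patches of 256 x 256 with made-up bit counts and MSE.
loss, bpp = rate_distortion(70000.0, 6000.0, 8 * 256 * 256, mse=120.0, lmbda=0.0016)
print(bpp, loss)
```

Small scales (confident predictions from the hyperprior) make values near zero almost free, while large values under a small scale cost many bits; this is how the side information z pays for itself.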

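The nonlinear analysis transform is essentially a stack of CNN convolutions. It includes a zero-padding step so that the input and output images have the same size.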
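With zero ("same") padding, the sizes of y and z follow from the strides alone, which is what lets the reconstruction line up with the input. A small arithmetic sketch, assuming the layer layout of bmshj2018.py and the paper (four stride-2 convolutions in the analysis transform; strides 1, 2, 2 in the hyperprior analysis transform) and that each padded strided convolution maps a size n to ceil(n / stride):

```python
def same_conv_out(size, stride):
    # With "same" zero padding, a strided conv maps n -> ceil(n / stride).
    return -(-size // stride)


def latent_shapes(height, width):
    """Spatial sizes of y and z for an input image of height x width."""
    y = (height, width)
    for _ in range(4):  # analysis transform: four stride-2 layers (assumed)
        y = tuple(same_conv_out(s, 2) for s in y)
    z = y
    for stride in (1, 2, 2):  # hyper analysis transform strides (assumed)
        z = tuple(same_conv_out(s, stride) for s in z)
    return y, z


def synthesis_size(y):
    # Four stride-2 upsampling layers multiply each side by 16.
    return tuple(16 * s for s in y)


y, z = latent_shapes(512, 768)   # a Kodak image
print(y, z, synthesis_size(y))   # (32, 48) (8, 12) (512, 768)
y, z = latent_shapes(500, 700)   # not a multiple of 16
print(y, z, synthesis_size(y))   # (32, 44) (8, 11) (512, 704)
```

For sizes that are not multiples of 16 the synthesis output is slightly larger than the input (512x704 for a 500x700 image). My understanding is that the compressed file also stores the original image shape, and decompression crops back to it.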

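- model.compile

`model.compile` configures training: the optimizer that performs gradient descent, and the metrics that average loss, bpp and mse over the training steps.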
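As far as I can tell, `compile` in the tfc scripts replaces Keras's built-in loss and metrics with three `tf.keras.metrics.Mean` objects named loss, bpp and mse, which is why `train_step` calls `update_state` and `result` on them. A minimal pure-Python analogue, assuming Mean behaves as a running total divided by a count (the class is hypothetical, not the Keras one):

```python
class RunningMean:
    """Hypothetical pure-Python analogue of tf.keras.metrics.Mean."""

    def __init__(self, name):
        self.name = name
        self.reset_state()

    def reset_state(self):
        self.total = 0.0
        self.count = 0

    def update_state(self, value):
        self.total += float(value)
        self.count += 1

    def result(self):
        # Like Keras, return 0 instead of dividing by zero before any update.
        return self.total / self.count if self.count else 0.0


loss, bpp, mse = RunningMean("loss"), RunningMean("bpp"), RunningMean("mse")
# Two made-up training steps with loss = bpp + 0.0016 * mse.
for step_loss, step_bpp, step_mse in [(0.504, 0.20, 190.0), (0.404, 0.18, 140.0)]:
    loss.update_state(step_loss)
    bpp.update_state(step_bpp)
    mse.update_state(step_mse)
print({m.name: m.result() for m in (loss, bpp, mse)})
```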

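- Filter and crop the dataset

The arguments determine whether a dataset path was given.

Path given: the images are cropped directly to 256x256 (all training inputs are uniformly cropped to 256x256; images compressed later are not resized).

No path given: the CLIC dataset is used; only 3-channel images of at least 256x256 are kept, and they are then cropped.

The data is split into a training set and a validation set.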
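A NumPy sketch of the filtering and cropping described above, assuming a patch size of 256 and a uniformly random crop position. The script's tf.data pipeline does this with TensorFlow ops; the function names here only mirror what it does:

```python
import numpy as np


def check_image_size(image, patchsize):
    # Keep only 3-channel images that are at least patchsize x patchsize.
    height, width, channels = image.shape
    return height >= patchsize and width >= patchsize and channels == 3


def random_crop(image, patchsize, rng):
    # Pick a random top-left corner so that the patch fits inside the image.
    height, width, _ = image.shape
    top = rng.integers(0, height - patchsize + 1)
    left = rng.integers(0, width - patchsize + 1)
    return image[top:top + patchsize, left:left + patchsize, :]


rng = np.random.default_rng(0)
images = [
    np.zeros((512, 768, 3), dtype=np.uint8),  # kept
    np.zeros((200, 300, 3), dtype=np.uint8),  # too small
    np.zeros((512, 512, 1), dtype=np.uint8),  # not 3-channel
]
patches = [random_crop(im, 256, rng) for im in images if check_image_size(im, 256)]
print(len(patches), patches[0].shape)  # 1 (256, 256, 3)
```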

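- model.fit

Pass in the training dataset, set the relevant parameters (number of epochs, etc.) and train.

`retval = super().fit(*args, **kwargs)` leads into the model's `train_step`. Honestly, this is wrapped so tightly that the jump cannot be found from the single-file code alone: it happens inside Keras's `Model.fit`, which calls `train_step` once per batch.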
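The jump from `super().fit(...)` to `train_step` is the template-method pattern: Keras's `Model.fit` owns the training loop and calls `self.train_step` for each batch, so a subclass only overrides `train_step` (and optionally wraps `fit`). The classes below are hypothetical pure-Python stand-ins that reproduce just this control flow; real Keras also compiles the step with `tf.function`, runs callbacks, and so on. In the tfc scripts, as far as I can tell, the overridden `fit` uses the code after `super().fit(...)` to rebuild the entropy models for range coding.

```python
class MiniModel:
    """Hypothetical stand-in for tf.keras.Model: control flow only, no math."""

    def fit(self, dataset, epochs=1):
        history = []
        for _ in range(epochs):
            logs = {}
            for batch in dataset:
                # The base class calls whatever train_step the subclass defines.
                logs = self.train_step(batch)
            history.append(logs)  # logs of the last batch in each epoch
        return history

    def train_step(self, batch):
        raise NotImplementedError


class MiniCompressor(MiniModel):
    def fit(self, *args, **kwargs):
        # Same shape as the model's fit(): delegate to the base class,
        # then do some post-training work.
        retval = super().fit(*args, **kwargs)
        self.finished = True
        return retval

    def train_step(self, batch):
        self.steps = getattr(self, "steps", 0) + 1
        return {"loss": sum(batch) / len(batch)}


model = MiniCompressor()
print(model.fit([[1.0, 3.0], [2.0, 4.0]], epochs=2), model.steps)
```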

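- train_step

`self.trainable_variables` returns the trainable variables. Given these variables and the loss computed in `call`, a forward pass and backpropagation update the parameters; then the loss, bpp and mse metrics are updated.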

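```python
def train_step(self, x):
    # Forward pass, recorded so that gradients can be computed.
    with tf.GradientTape() as tape:
        loss, bpp, mse = self(x, training=True)
    # Backward pass: gradients of the loss w.r.t. all trainable variables.
    variables = self.trainable_variables
    gradients = tape.gradient(loss, variables)
    self.optimizer.apply_gradients(zip(gradients, variables))
    # Update the running means reported during training.
    self.loss.update_state(loss)
    self.bpp.update_state(bpp)
    self.mse.update_state(mse)
    return {m.name: m.result() for m in [self.loss, self.bpp, self.mse]}
```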

- Training then repeats until a stopping condition is reached (for example, 10000 epochs).

4. Compressing a Single Image

```shell
python bmshj2018.py [options] compress original.png compressed.tfci
```

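Here I debug it through launch.json:

```json
"args": ["--verbose", "compress", "./models/kodak/kodim01.png", "./models/kodim01.tfci"] // compress one image
```

Point the model path to your own trained model:

```python
"--model_path", default="./models/bmshj2018Model/bmshj2018_test",
```

I ran the single-image compression as a debug session in the terminal.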

After a successful compression, the output is:

```
Mean squared error: 208.0925
PSNR (dB): 24.95
Multiscale SSIM: 0.9040
Multiscale SSIM (dB): 10.18
Bits per pixel: 0.2020
```

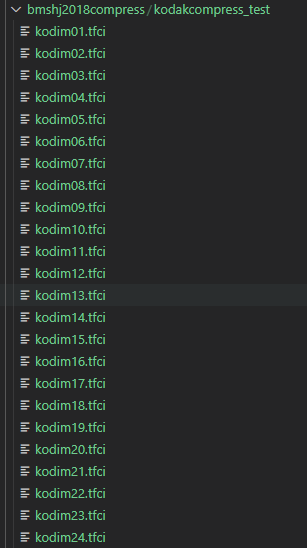
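The printed numbers are tied together by simple formulas, which allows a quick sanity check. The sketch recomputes PSNR from the MSE (PSNR = 10·log10(255²/MSE), the definition behind `tf.image.psnr` with a maximum value of 255), MS-SSIM in dB with the same formula as the compression code, and the approximate .tfci size implied by the bpp for a 512x768 Kodak image. The helper names are my own:

```python
import math


def psnr_from_mse(mse, max_value=255.0):
    # PSNR in dB for images whose pixel values span [0, max_value].
    return 10.0 * math.log10(max_value ** 2 / mse)


def msssim_db(msssim):
    # Same conversion as the compression code: -10 * log10(1 - MS-SSIM).
    return -10.0 * math.log10(1.0 - msssim)


def compressed_bytes(bpp, height, width):
    # bpp = bits / pixels, so bytes = bpp * pixels / 8.
    return bpp * height * width / 8.0


# Values printed above for kodim01 (512 x 768 pixels).
print(f"{psnr_from_mse(208.0925):.2f}")   # matches "PSNR (dB): 24.95"
print(f"{msssim_db(0.9040):.2f}")         # matches "Multiscale SSIM (dB): 10.18"
print(f"{compressed_bytes(0.2020, 512, 768):.0f} bytes (approx.)")
```

Because the printed bpp is rounded to four decimals, the size estimate (about 9.9 KB) is only accurate to a couple of bytes.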

The generated .tfci file is written to the output path given in the arguments (./models/kodim01.tfci).

5. Compressing All Images in a Folder

- Add a subcommand that compresses all images in a folder

```python
# 'compressAll' subcommand.
compressAll_cmd = subparsers.add_parser(
    "compressAll",
    formatter_class=argparse.ArgumentDefaultsHelpFormatter,
    description="Read the files in a folder and compress them.")

# Arguments for 'compressAll'.
compressAll_cmd.add_argument(
    "input_folder",
    help="Input folder.")
compressAll_cmd.add_argument(
    "output_folder",
    help="Output folder.")
```

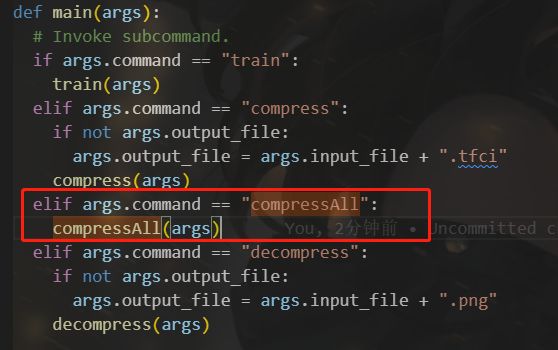

- Dispatch to the new function in the main branch

```python
elif args.command == "compressAll":
    compressAll(args)
```
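A self-contained sketch of how the new subcommand is parsed and dispatched. It rebuilds only the relevant part of the parser (the `--verbose`/`-V` flag and the `compressAll` subcommand); `build_parser`, `main` and the `handlers` dict are hypothetical, the dict standing in for the `elif args.command == ...` chain:

```python
import argparse


def build_parser():
    # Minimal stand-in for the parser in bmshj2018.py.
    parser = argparse.ArgumentParser()
    parser.add_argument("--verbose", "-V", action="store_true")
    subparsers = parser.add_subparsers(title="commands", dest="command")
    compress_all = subparsers.add_parser(
        "compressAll",
        formatter_class=argparse.ArgumentDefaultsHelpFormatter,
        description="Read the files in a folder and compress them.")
    compress_all.add_argument("input_folder", help="Input folder.")
    compress_all.add_argument("output_folder", help="Output folder.")
    return parser


def main(argv, handlers):
    args = build_parser().parse_args(argv)
    # Dispatch on the subcommand name, like the elif chain in main().
    return handlers[args.command](args)


handlers = {"compressAll": lambda a: (a.verbose, a.input_folder, a.output_folder)}
result = main(["--verbose", "compressAll", "./models/kodak", "./out"], handlers)
print(result)  # (True, './models/kodak', './out')
```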

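- Add the function that compresses all images

It runs the compress logic on each image to obtain the metrics. For that, it has to assemble, for every image, the arguments that compress expects (the input file and the output file).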

```python
import copy
import glob
import os

from numpy import mean


def compressAll(args):
    """Compresses every PNG in args.input_folder and prints average metrics."""
    files = sorted(glob.glob(os.path.join(args.input_folder, "*.png")))
    if not files:
        raise RuntimeError(f"No PNG images found in '{args.input_folder}'.")
    os.makedirs(args.output_folder, exist_ok=True)  # open() fails on a missing folder
    perArgs = copy.copy(args)  # shallow copy, so args itself is left unchanged

    bpp_list = []
    mse_list = []
    psnr_list = []
    msssim_list = []
    msssim_db_list = []

    # Loop over the images (e.g. the Kodak dataset)
    for img in files:
        # img is the image's relative path; imgName is the bare file name
        # without its extension, e.g. kodim01, whatever the path separator
        imgName = os.path.splitext(os.path.basename(img))[0]
        perArgs.input_file = img
        perArgs.output_file = os.path.join(args.output_folder, imgName + ".tfci")

        bpp, mse, psnr, msssim, msssim_db = perCompress(perArgs)
        print(bpp, mse, psnr, msssim, msssim_db, 'bpp, mse, psnr, msssim, msssim_db')

        # float() turns scalar tensors into plain Python numbers
        bpp_list.append(float(bpp))
        mse_list.append(float(mse))
        psnr_list.append(float(psnr))
        msssim_list.append(float(msssim))
        msssim_db_list.append(float(msssim_db))

    print(bpp_list, 'bpp_list')
    print(mse_list, 'mse_list')
    print(mean(bpp_list), 'bpp_average')
    print(mean(mse_list), 'mse_average')
    print(mean(psnr_list), 'psnr_average')
    print(mean(msssim_list), 'mssim_average')
    print(mean(msssim_db_list), 'msssim_db_average')
```
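Why the corrected `compressAll` derives `imgName` with `os.path` instead of slicing from `img.find('/kodim')`: the slice only works when the path contains `/kodim`. On Windows, `glob` joins the folder and the file name with a backslash, and non-Kodak file names never contain `kodim`; in both cases `find` returns -1, the slice yields an empty name, and every image would be written to the same `.tfci` file. The comparison below uses `ntpath` (Windows path rules, available on every OS) so that it behaves the same everywhere; the two helper names are hypothetical:

```python
import ntpath


def name_by_find(img):
    # Original approach: slice from '/kodim' up to '.png'.
    return img[img.find('/kodim'):img.find('.png')]


def name_by_path(img, pathmod=ntpath):
    # File name without extension; ntpath accepts both '/' and '\\'.
    return pathmod.splitext(pathmod.basename(img))[0]


for img in ["./models/kodak/kodim01.png",
            "./models/kodak\\kodim01.png",
            "./data/photo01.png"]:
    print(repr(name_by_find(img)), repr(name_by_path(img)))
```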

`perCompress`, shown below, is the original compress function with return values added:

```python
# Compress a single image and return its metrics
def perCompress(args):
    """Compresses an image and returns (bpp, mse, psnr, msssim, msssim_db)."""
    # Load model and use it to compress the image.
    # (Reloaded for every image; loading it once in compressAll would be faster.)
    model = tf.keras.models.load_model(args.model_path)
    x = read_png(args.input_file)  # e.g. shape=(512, 768, 3) for Kodak
    tensors = model.compress(x)

    # Write a binary file with the shape information and the compressed string.
    packed = tfc.PackedTensors()
    packed.pack(tensors)
    with open(args.output_file, "wb") as f:
        f.write(packed.string)

    # Decompress and measure performance. Unlike the original compress(), this
    # always runs, because the caller needs the returned metrics.
    x_hat = model.decompress(*tensors)

    # Cast to float in order to compute metrics.
    x = tf.cast(x, tf.float32)
    x_hat = tf.cast(x_hat, tf.float32)
    mse = tf.reduce_mean(tf.math.squared_difference(x, x_hat))
    psnr = tf.squeeze(tf.image.psnr(x, x_hat, 255))
    msssim = tf.squeeze(tf.image.ssim_multiscale(x, x_hat, 255))
    msssim_db = -10. * tf.math.log(1 - msssim) / tf.math.log(10.)

    # The actual bits per pixel including entropy coding overhead.
    num_pixels = tf.reduce_prod(tf.shape(x)[:-1])
    bpp = len(packed.string) * 8 / num_pixels

    if args.verbose:
        print(f"Mean squared error: {mse:0.4f}")
        print(f"PSNR (dB): {psnr:0.2f}")
        print(f"Multiscale SSIM: {msssim:0.4f}")
        print(f"Multiscale SSIM (dB): {msssim_db:0.2f}")
        print(f"Bits per pixel: {bpp:0.4f}")

    return float(bpp), float(mse), float(psnr), float(msssim), float(msssim_db)
```

- Call the new subcommand from launch.json

```json
"args": ["--verbose", "compressAll", "./models/kodak", "./models/bmshj2018compress/kodakcompress_test"] // compress the images in a folder
```

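- The functions above need a few imports. Importing only `mean` is safer than `from numpy import *`, which pulls every numpy name into the module and shadows built-ins such as `sum`:

```python
import copy
import glob
import os

from numpy import mean
```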

The output is:

```
0.13505808512369794 bpp_average
135.78781 mse_average
27.207735 psnr_average
0.90912575 mssim_average
10.570003 msssim_db_average
```

6. Experimental Results

(1) Results reported in the paper

| bpp | PSNR (dB) |
|---|---|
| 0.115239 | 27.106351 |
| 0.185698 | 28.679134 |
| 0.301804 | 30.616753 |
| 0.468972 | 32.554935 |
| 0.686378 | 34.580960 |
| 0.966864 | 36.720366 |
| 1.307441 | 38.807960 |
| 1.727503 | 40.794920 |

(2) My results

| modelName | lambda | num_filters | bpp | mse | psnr | msssim | msssim_db | learning_rate |
|---|---|---|---|---|---|---|---|---|
| bmshj2018_test | 0.0016 | 128 | 0.1945 | 135.78781 | 27.207735 | 0.90912575 | 10.570003 | 1e-4 |

The first run has clearly not converged. Next, I will keep the other parameters unchanged and try a higher learning rate.
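To put a single run next to the paper's curve, one can interpolate the paper's PSNR at the run's bpp. A rough sketch using linear interpolation between the tabulated points (a simplification; the Bjøntegaard-delta metrics are the standard way to compare whole rate-distortion curves). The helper name is hypothetical:

```python
from bisect import bisect_left

# (bpp, PSNR in dB) points from the paper's table above.
PAPER_RD = [
    (0.115239, 27.106351), (0.185698, 28.679134), (0.301804, 30.616753),
    (0.468972, 32.554935), (0.686378, 34.580960), (0.966864, 36.720366),
    (1.307441, 38.807960), (1.727503, 40.794920),
]


def paper_psnr_at(bpp, points=PAPER_RD):
    """Linearly interpolates the paper's PSNR at `bpp` (inside the table range)."""
    xs = [x for x, _ in points]
    if not xs[0] <= bpp <= xs[-1]:
        raise ValueError(f"bpp={bpp} is outside [{xs[0]}, {xs[-1]}]")
    i = bisect_left(xs, bpp)
    if xs[i] == bpp:
        return points[i][1]
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (bpp - x0) * (y1 - y0) / (x1 - x0)


# PSNR gap to the paper at the same rate (negative = worse than the paper).
my_bpp, my_psnr = 0.13505808512369794, 27.207735
print(f"{my_psnr - paper_psnr_at(my_bpp):+.2f} dB")
```

With the bpp_average and psnr_average printed in section 5, the run sits about 0.34 dB below the paper's curve.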

Notes:

(1) Parameter settings from experience

| num_filters | lambda | bpp |
|---|---|---|
| 128 | 0.0016 | about 0.1 |
| 128 | 0.0032 | |
| 128 | 0.0075 | about 0.3 |
| 192 | 0.015 | |
| 192 | 0.03 | |
| 192 | 0.045 | |
| 256 | 0.06 | |
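To train the settings in this table one after another, a small helper can generate the command lines. A sketch, assuming the `train` subcommand accepts `--lambda` and `--num_filters` and that `--model_path` is a global option placed before the subcommand (as in the tfc scripts I have seen; check `python bmshj2018.py train --help`). The model naming scheme is hypothetical:

```python
# (num_filters, lambda) pairs from the table above.
SETTINGS = [(128, 0.0016), (128, 0.0032), (128, 0.0075),
            (192, 0.015), (192, 0.03), (192, 0.045), (256, 0.06)]


def train_commands(settings, model_dir="./models/bmshj2018Model"):
    """Builds one argv list per (num_filters, lambda) setting."""
    commands = []
    for num_filters, lmbda in settings:
        # Hypothetical naming scheme that encodes both parameters.
        name = f"bmshj2018_nf{num_filters}_lambda{lmbda}"
        commands.append([
            "python", "bmshj2018.py", "--verbose",
            "--model_path", f"{model_dir}/{name}",
            "train", "--lambda", str(lmbda), "--num_filters", str(num_filters),
        ])
    return commands


for argv in train_commands(SETTINGS):
    print(" ".join(argv))
```

Each argv list can be passed to `subprocess.run(argv, check=True)` to train the models sequentially.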