5.2 Binary-based Kubernetes cluster deployment

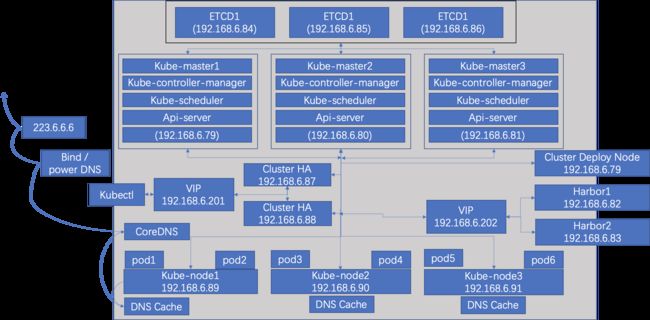

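1. Cluster deployment architecture

2. Configure the base environment

2.1 System configuration

- Hostname

- iptables

- Firewall

- Kernel parameters and resource limit policies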
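The kernel-parameter item above could look like the following minimal sketch. It assumes the commonly used Kubernetes prerequisites (bridged traffic visible to iptables, IP forwarding enabled); the original does not list its exact values, and the function name `render_k8s_sysctl` and the file name in the comment are illustrative only.

```shell
# Print sysctl settings commonly required on Kubernetes hosts.
# Assumption: these three keys are typical prerequisites, not values
# taken from the original section.
render_k8s_sysctl() {
    cat <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}

# Preview only. To apply, redirect the output into a file under
# /etc/sysctl.d/ (hypothetical name: 95-k8s.conf) and run
# `sysctl --system` as root.
render_k8s_sysctl
```

Printing the settings first makes it easy to review them before anything on the host is changed.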

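2.2 Install docker

- Install docker on the master, etcd, and node hosts; see 2.5.1.1 for installation and configuration.

2.3 Install ansible

- Install ansible on the deployment host; master1 is used as the deployment host in this example.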

root@master1:~# apt install python3-pip git

root@master1:~# pip3 install ansible -i https://mirrors.aliyun.com/pypi/simple

root@master1:~# ansible --version

ansible [core 2.11.5]

config file = None

configured module search path = ['/root/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /usr/local/lib/python3.8/dist-packages/ansible

ansible collection location = /root/.ansible/collections:/usr/share/ansible/collections

executable location = /usr/local/bin/ansible

python version = 3.8.10 (default, Jun 2 2021, 10:49:15) [GCC 9.4.0]

jinja version = 2.10.1

libyaml = True

2.4 Configure passwordless SSH and docker login for the nodes

2.4.1 Log in to docker on master1

root@master1:~# mkdir -p /etc/docker/certs.d/harbor.k8s.local/

root@master1:~# scp [email protected]:/data/certs/harbor-ca.crt /etc/docker/certs.d/harbor.k8s.local/

root@master1:~# docker login harbor.k8s.local

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

2.4.2 On master1, use a script to configure passwordless SSH and docker login for the master, etcd, and node hosts

- The script is as follows:

root@master1:~# ssh-keygen

root@master1:~# apt install sshpass

root@master1:~# vim scp-key.sh

#!/bin/bash

IP="

192.168.6.79

192.168.6.80

192.168.6.81

192.168.6.84

192.168.6.85

192.168.6.86

192.168.6.89

192.168.6.90

192.168.6.91

"

for node in ${IP};do

# sshpass supplies the password non-interactively; ssh-copy-id options go before the host

sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no ${node}

if [ $? -eq 0 ];then

echo "${node} ssh-key copy completed"

echo "copy ssl crt for docker"

ssh ${node} "mkdir -p /etc/docker/certs.d/harbor.k8s.local/"

echo "create harbor ssl path success"

scp /etc/docker/certs.d/harbor.k8s.local/harbor-ca.crt ${node}:/etc/docker/certs.d/harbor.k8s.local/harbor-ca.crt

echo "copy harbor ssl crt success"

# single quotes inside the double-quoted remote command keep the hosts entry intact

ssh ${node} "echo '192.168.6.201 harbor.k8s.local' >> /etc/hosts"

echo "add hosts entry complete"

scp -r /root/.docker ${node}:/root/

echo "copy docker login config complete"

else

echo "${node} ssh-key copy failed"

fi

done
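The script above appends the Harbor entry to /etc/hosts on every run, so running it twice leaves duplicate lines. A minimal sketch of an idempotent variant follows; the helper name `add_hosts_entry` is hypothetical and not part of the original script.

```shell
# Append "IP NAME" to a hosts-style file only if NAME is not already
# present as a hostname on a non-comment line.
# Usage: add_hosts_entry FILE IP NAME
add_hosts_entry() (
    file=$1 ip=$2 name=$3
    touch "$file"
    # awk exits 0 when NAME is found as a whole field after the address.
    awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }
    ' "$file" || printf '%s %s\n' "$ip" "$name" >> "$file"
)

# Example (as root): add_hosts_entry /etc/hosts 192.168.6.201 harbor.k8s.local
```

To use it on remote nodes, the function definition would have to be shipped along with the ssh command, for example by copying a small script to each node first.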

2.5 Deploy Harbor and the HA nodes

2.5.1 Deploy Harbor

2.5.1.1 Install docker and docker-compose

- Install with the following script:

#!/bin/bash

User=$1

DIR=$(pwd)

PACKAGE_NAME=$2

DOCKER_FILE=${DIR}/${PACKAGE_NAME}

centos_install_docker(){

grep "Kernel" /etc/issue &> /dev/null

if [ $? -eq 0 ];then

/bin/echo "Current system is $(cat /etc/redhat-release); starting system initialization, configuring docker-compose and installing docker" && sleep 1

systemctl stop firewalld && systemctl disable firewalld && echo "firewalld stopped and disabled" && sleep 1

systemctl stop NetworkManager && systemctl disable NetworkManager && echo "NetworkManager Stopped" && sleep 1

# /etc/sysconfig/selinux is a symlink; sed -i would replace it with a regular file, so edit /etc/selinux/config directly

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config && setenforce 0 && echo "SELinux disabled" && sleep 1

\cp ${DIR}/limits.conf /etc/security/limits.conf

\cp ${DIR}/sysctl.conf /etc/sysctl.conf

/bin/tar xvf ${DOCKER_FILE}

\cp -rf docker/* /usr/bin/

\cp containerd.service /lib/systemd/system/containerd.service

\cp docker.service /lib/systemd/system/docker.service

\cp docker.socket /lib/systemd/system/docker.socket

\cp ${DIR}/docker-compose-Linux-x86_64 /usr/bin/docker-compose

chmod u+x /usr/bin/docker-compose

id -u docker &>/dev/null

if [ $? -ne 0 ];then

groupadd docker && useradd docker -g docker

fi

id -u ${User} &> /dev/null

if [ $? -ne 0 ];then

# create the user once, with docker as a supplementary group

useradd ${User} -G docker

fi

/usr/bin/ls /data &>/dev/null

if [ $? -ne 0 ];then

mkdir /data

fi

mkdir -p /etc/docker && \cp ${DIR}/daemon.json /etc/docker/

systemctl enable containerd.service && systemctl restart containerd.service

systemctl enable docker.service && systemctl restart docker.service

systemctl enable docker.socket && systemctl restart docker.socket

fi

}

ubuntu_install_docker(){

grep "Ubuntu" /etc/issue &> /dev/null

if [ $? -eq 0 ];then

/bin/echo "Current system is $(cat /etc/issue); starting system initialization, configuring docker-compose and installing docker" && sleep 1

\cp ${DIR}/limits.conf /etc/security/limits.conf

\cp ${DIR}/sysctl.conf /etc/sysctl.conf

/bin/tar xvf ${DOCKER_FILE}

\cp -rf docker/* /usr/bin/

\cp containerd.service /lib/systemd/system/containerd.service

\cp docker.service /lib/systemd/system/docker.service

\cp docker.socket /lib/systemd/system/docker.socket

\cp ${DIR}/docker-compose-Linux-x86_64 /usr/bin/docker-compose

chmod u+x /usr/bin/docker-compose

id -u ${User} &> /dev/null

if [ $? -ne 0 ];then

/bin/grep -q docker /etc/group

if [ $? -ne 0 ];then

groupadd -r docker

fi

useradd -r -m -g docker ${User}

fi

/usr/bin/ls /data/docker &>/dev/null

if [ $? -ne 0 ];then

mkdir -p /data/docker

fi

mkdir -p /etc/docker && \cp ${DIR}/daemon.json /etc/docker/

systemctl enable containerd.service && systemctl restart containerd.service

systemctl enable docker.service && systemctl restart docker.service

systemctl enable docker.socket && systemctl restart docker.socket

fi

}

main(){

centos_install_docker

ubuntu_install_docker

}

main

root@harbor1:~# bash docker_install.sh docker docker-19.03.15.tgz
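The install script decides which branch to run by grepping /etc/issue. As an alternative sketch, the distribution can be read from the `ID=` field of /etc/os-release. The helper names `detect_os_id` and `pick_installer` are hypothetical; only the two installer function names come from the script above.

```shell
# Print the distribution ID (for example "ubuntu" or "centos") from an
# os-release file. Usage: detect_os_id [FILE]
detect_os_id() (
    file=${1:-/etc/os-release}
    # The ID= value may or may not be quoted; strip optional double quotes.
    sed -n 's/^ID=//p' "$file" | tr -d '"' | head -n 1
)

# Print the name of the installer function matching the detected ID.
pick_installer() {
    case "$(detect_os_id "$@")" in
        ubuntu) echo ubuntu_install_docker ;;
        centos) echo centos_install_docker ;;
        *)      echo unsupported; return 1 ;;
    esac
}
```

With this in place, `main` could call `"$(pick_installer)"` instead of running both installer functions and relying on each one to skip itself.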

- Related scripts and files:

https://codeup.aliyun.com/614c9d1b3a631c643ae62857/Scripts.git

2.5.1.2 Install Harbor

- After copying the Harbor offline installer to the target host:

root@harbor1:~# tar xf harbor-offline-installer-v2.3.2.tgz

root@harbor1:~# mkdir /data/harbor/

root@harbor1:~# cd harbor

root@harbor1:~/harbor# cp harbor.yml.tmpl harbor.yml

root@harbor1:~/harbor# vim harbor.yml

# Configuration file of Harbor

# The IP address or hostname to access admin UI and registry service.

# DO NOT use localhost or 127.0.0.1, because Harbor needs to be accessed by external clients.

hostname: 192.168.6.82

# http related config

http:

# port for http, default is 80. If https enabled, this port will redirect to https port

port: 80

# https related config

#https:

# https port for harbor, default is 443

# port: 443

# The path of cert and key files for nginx

# certificate: /your/certificate/path

# private_key: /your/private/key/path

# # Uncomment following will enable tls communication between all harbor components

# internal_tls:

# # set enabled to true means internal tls is enabled

# enabled: true

# # put your cert and key files on dir

# dir: /etc/harbor/tls/internal

# Uncomment external_url if you want to enable external proxy

# And when it enabled the hostname will no longer used

# external_url: https://reg.mydomain.com:8433

# The initial password of Harbor admin

# It only works in first time to install harbor

# Remember Change the admin password from UI after launching Harbor.

harbor_admin_password: 123456

# Harbor DB configuration

database:

# The password for the root user of Harbor DB. Change this before any production use.

password: root123

# The maximum number of connections in the idle connection pool. If it <=0, no idle connections are retained.

max_idle_conns: 100

# The maximum number of open connections to the database. If it <= 0, then there is no limit on the number of open connections.

# Note: the default number of connections is 1024 for postgres of harbor.

max_open_conns: 900

# The default data volume

data_volume: /data/harbor

# Harbor Storage settings by default is using /data dir on local filesystem

# Uncomment storage_service setting If you want to using external storage

# storage_service:

# # ca_bundle is the path to the custom root ca certificate, which will be injected into the truststore

# # of registry's and chart repository's containers. This is usually needed when the user hosts a internal storage with self signed certificate.

# ca_bundle:

# # storage backend, default is filesystem, options include filesystem, azure, gcs, s3, swift and oss

# # for more info about this configuration please refer https://docs.docker.com/registry/configuration/

# filesystem:

# maxthreads: 100

# # set disable to true when you want to disable registry redirect

# redirect:

# disabled: false

# Trivy configuration

#

# Trivy DB contains vulnerability information from NVD, Red Hat, and many other upstream vulnerability databases.

# It is downloaded by Trivy from the GitHub release page https://github.com/aquasecurity/trivy-db/releases and cached

# in the local file system. In addition, the database contains the update timestamp so Trivy can detect whether it

# should download a newer version from the Internet or use the cached one. Currently, the database is updated every

# 12 hours and published as a new release to GitHub.

trivy:

# ignoreUnfixed The flag to display only fixed vulnerabilities

ignore_unfixed: false

# skipUpdate The flag to enable or disable Trivy DB downloads from GitHub

#

# You might want to enable this flag in test or CI/CD environments to avoid GitHub rate limiting issues.

# If the flag is enabled you have to download the `trivy-offline.tar.gz` archive manually, extract `trivy.db` and

# `metadata.json` files and mount them in the `/home/scanner/.cache/trivy/db` path.

skip_update: false

#

# insecure The flag to skip verifying registry certificate

insecure: false

# github_token The GitHub access token to download Trivy DB

#

# Anonymous downloads from GitHub are subject to the limit of 60 requests per hour. Normally such rate limit is enough

# for production operations. If, for any reason, it's not enough, you could increase the rate limit to 5000

# requests per hour by specifying the GitHub access token. For more details on GitHub rate limiting please consult

# https://developer.github.com/v3/#rate-limiting

#

# You can create a GitHub token by following the instructions in

# https://help.github.com/en/github/authenticating-to-github/creating-a-personal-access-token-for-the-command-line

#

# github_token: xxx

jobservice:

# Maximum number of job workers in job service

max_job_workers: 10

notification:

# Maximum retry count for webhook job

webhook_job_max_retry: 10

chart:

# Change the value of absolute_url to enabled can enable absolute url in chart

absolute_url: disabled

# Log configurations

log:

# options are debug, info, warning, error, fatal

level: info

# configs for logs in local storage

local:

# Log files are rotated log_rotate_count times before being removed. If count is 0, old versions are removed rather than rotated.

rotate_count: 50

# Log files are rotated only if they grow bigger than log_rotate_size bytes. If size is followed by k, the size is assumed to be in kilobytes.

# If the M is used, the size is in megabytes, and if G is used, the size is in gigabytes. So size 100, size 100k, size 100M and size 100G

# are all valid.

rotate_size: 200M

# The directory on your host that store log

location: /var/log/harbor

# Uncomment following lines to enable external syslog endpoint.

# external_endpoint:

# # protocol used to transmit log to external endpoint, options is tcp or udp

# protocol: tcp

# # The host of external endpoint

# host: localhost

# # Port of external endpoint

# port: 5140

#This attribute is for migrator to detect the version of the .cfg file, DO NOT MODIFY!

_version: 2.3.0

# Uncomment external_database if using external database.

# external_database:

# harbor:

# host: harbor_db_host

# port: harbor_db_port

# db_name: harbor_db_name

# username: harbor_db_username

# password: harbor_db_password

# ssl_mode: disable

# max_idle_conns: 2

# max_open_conns: 0

# notary_signer:

# host: notary_signer_db_host

# port: notary_signer_db_port

# db_name: notary_signer_db_name

# username: notary_signer_db_username

# password: notary_signer_db_password

# ssl_mode: disable

# notary_server:

# host: notary_server_db_host

# port: notary_server_db_port

# db_name: notary_server_db_name

# username: notary_server_db_username

# password: notary_server_db_password

# ssl_mode: disable

# Uncomment external_redis if using external Redis server

# external_redis:

# # support redis, redis+sentinel

# # host for redis: :

# # host for redis+sentinel:

# # :,:,:

# host: redis:6379

# password:

# # sentinel_master_set must be set to support redis+sentinel

# #sentinel_master_set:

# # db_index 0 is for core, it's unchangeable

# registry_db_index: 1

# jobservice_db_index: 2

# chartmuseum_db_index: 3

# trivy_db_index: 5

# idle_timeout_seconds: 30

# Uncomment uaa for trusting the certificate of uaa instance that is hosted via self-signed cert.

# uaa:

# ca_file: /path/to/ca

# Global proxy

# Config http proxy for components, e.g. http://my.proxy.com:3128

# Components doesn't need to connect to each others via http proxy.

# Remove component from `components` array if want disable proxy

# for it. If you want use proxy for replication, MUST enable proxy

# for core and jobservice, and set `http_proxy` and `https_proxy`.

# Add domain to the `no_proxy` field, when you want disable proxy

# for some special registry.

proxy:

http_proxy:

https_proxy:

no_proxy:

components:

- core

- jobservice

- trivy

# metric:

# enabled: false

# port: 9090

# path: /metrics

root@harbor1:~/harbor# ./install.sh --with-trivy

root@harbor1:~/harbor# docker-compose start

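- Repeat the configuration above on the other Harbor node

- Configure replication between the two Harbor nodes

2.5.2 Deploy the HA nodes

2.5.2.1 Generate an SSL certificate with openssl

- Generate the SSL certificate on the HA1 node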

root@ha1:~# mkdir -p /data/certs/ && cd /data/certs/

root@ha1:/data/certs# openssl genrsa -out harbor-ca.key

root@ha1:/data/certs# openssl req -x509 -new -nodes -key harbor-ca.key -subj "/CN=harbor.k8s.local" -days 7120 -out harbor-ca.crt

root@ha1:/data/certs# cat harbor-ca.crt harbor-ca.key | tee harbor-ca.pem

- Copy the certificates to the HA2 node

root@ha2:~# mkdir -p /data/certs/

root@ha1:/data/certs# scp -r * [email protected]:/data/certs

2.5.2.2 Install and configure keepalived

- Apply the same configuration on both HA nodes

root@ha1:~# apt update

root@ha1:~# apt install -y keepalived

root@ha1:~# vim /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 56

priority 100 # set to 90 on the HA2 node

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.6.201 dev eth0 label eth0:0

192.168.6.202 dev eth0 label eth0:1

192.168.6.203 dev eth0 label eth0:2

192.168.6.204 dev eth0 label eth0:3

192.168.6.205 dev eth0 label eth0:4

}

}

root@ha1:~# vim /etc/sysctl.conf

...

net.ipv4.ip_nonlocal_bind = 1

root@ha1:~# sysctl -p

root@ha1:~# systemctl start keepalived

root@ha1:~# systemctl enable keepalived
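After keepalived starts, the virtual addresses should be bound on the node that currently holds the MASTER role. A minimal sketch for checking this is shown below; the helper name `has_vip` is hypothetical, and it only inspects text produced by `ip -o -4 addr show`.

```shell
# Succeed if the given IPv4 address appears in `ip -o -4 addr show`
# output supplied on stdin.
# Usage: ip -o -4 addr show | has_vip 192.168.6.201
has_vip() {
    # Fixed-string match on "inet ADDRESS/" so that 192.168.6.20 does
    # not match 192.168.6.201.
    grep -qF "inet $1/"
}
```

Running the check on both HA nodes should show the VIP on exactly one of them; stopping keepalived on that node should move it to the other.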

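2.5.2.3 Install and configure HAProxy

- Harbor high availability uses HAProxy SSL termination: the client connects to HAProxy over SSL, the SSL connection ends at the load balancer, and HAProxy talks to the backend application over an unencrypted connection.

- Apply the same configuration on both HA nodes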

root@ha1:~# apt install haproxy

root@ha1:~# vim /etc/haproxy/haproxy.cfg

...

listen harbor-443 # Harbor listener

bind 192.168.6.201:80

bind 192.168.6.201:443 ssl crt /data/certs/harbor-ca.pem

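# reqadd was removed in HAProxy 2.1; http-request add-header is the equivalent directive

http-request add-header X-Forwarded-Proto https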

option forwardfor

mode http

balance source

redirect scheme https if !{ ssl_fc }

server harbor1 192.168.6.82:80 check inter 3s fall 3 rise 5

server harbor2 192.168.6.83:80 check inter 3s fall 3 rise 5

listen k8s-6443 # kube-apiserver listener

bind 192.168.6.202:6443

mode tcp

server k8s1 192.168.6.79:6443 check inter 3s fall 3 rise 5

server k8s2 192.168.6.80:6443 check inter 3s fall 3 rise 5

server k8s3 192.168.6.81:6443 check inter 3s fall 3 rise 5

root@ha1:~# systemctl restart haproxy

root@ha1:~# systemctl enable haproxy
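The `server` lines in the configuration above follow a fixed pattern, so they can be generated from a list of backend addresses. A minimal sketch, assuming the same health-check options as above; the helper name `render_servers` is hypothetical.

```shell
# Print one HAProxy `server` line per backend address, using the same
# health-check options as the configuration above.
# Usage: render_servers PREFIX PORT IP...
render_servers() (
    prefix=$1 port=$2
    shift 2
    i=1
    for ip in "$@"; do
        printf 'server %s%d %s:%s check inter 3s fall 3 rise 5\n' \
            "$prefix" "$i" "$ip" "$port"
        i=$((i + 1))
    done
)

render_servers k8s 6443 192.168.6.79 192.168.6.80 192.168.6.81
```

The output can be pasted under the matching `listen` section; the file should still be reviewed by hand before HAProxy is restarted.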

2.5.2.4 Verify Harbor login

# Login test

root@harbor2:~# docker login harbor.k8s.local

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

# Image push test

root@harbor2:~# docker pull calico/cni:v3.15.3

v3.15.3: Pulling from calico/cni

1ff8efc68ede: Pull complete

dbf74493f8ac: Pull complete

6a02335af7ae: Pull complete

a9d90ecd95cb: Pull complete

269efe44f16b: Pull complete

d94997f3700d: Pull complete

8c7602656f2e: Pull complete

34fcbf8be9e7: Pull complete

Digest: sha256:519e5c74c3c801ee337ca49b95b47153e01fd02b7d2797c601aeda48dc6367ff

Status: Downloaded newer image for calico/cni:v3.15.3

docker.io/calico/cni:v3.15.3

root@harbor2:~# docker tag calico/cni:v3.15.3 harbor.k8s.local/k8s/calico-cni:v3.15.3

root@harbor2:~# docker push harbor.k8s.local/k8s/calico-cni:v3.15.3

The push refers to repository [harbor.k8s.local/k8s/calico-cni]

3fbbefdb79e9: Pushed

0cab240caf05: Pushed

ed578a6324e2: Pushed

e00099f6d86f: Pushed

0b954fdf8c09: Pushed

fa6b2b5f2343: Pushed

710ad749cc2d: Pushed

3e05a70dae71: Pushed

v3.15.3: digest: sha256:a559d264c7a75a7528560d11778dba2d3b55c588228aed99be401fd2baa9b607 size: 1997
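The tag used above follows a simple naming pattern: registry, project, then the upstream repository with "/" replaced by "-". A minimal sketch of that mapping is shown below; the helper name `harbor_ref` is hypothetical, and it assumes the upstream reference carries no registry host prefix.

```shell
# Map an upstream image reference to the naming used in this section:
# REGISTRY/PROJECT/<repository with "/" replaced by "-">:TAG
# Usage: harbor_ref REGISTRY PROJECT IMAGE
harbor_ref() (
    registry=$1 project=$2 image=$3
    printf '%s/%s/%s\n' "$registry" "$project" \
        "$(printf '%s' "$image" | tr '/' '-')"
)

harbor_ref harbor.k8s.local k8s calico/cni:v3.15.3
```

The result can be passed to `docker tag` and `docker push`, which keeps the names of all mirrored images consistent.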

2.6 Download the deployment project and components on the deployment node

root@master1:~# export release=3.1.0

root@master1:~# curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

root@master1:~# chmod +x ./ezdown

root@master1:~# ./ezdown -D

root@master1:~# ll /etc/kubeasz/down/

total 1239424

drwxr-xr-x 2 root root 4096 Sep 29 15:41 ./

drwxrwxr-x 11 root root 209 Sep 29 15:36 ../

-rw------- 1 root root 451969024 Sep 29 15:38 calico_v3.15.3.tar

-rw------- 1 root root 42592768 Sep 29 15:38 coredns_1.8.0.tar

-rw------- 1 root root 227933696 Sep 29 15:39 dashboard_v2.2.0.tar

-rw-r--r-- 1 root root 62436240 Sep 29 15:34 docker-19.03.15.tgz

-rw------- 1 root root 58150912 Sep 29 15:39 flannel_v0.13.0-amd64.tar

-rw------- 1 root root 124833792 Sep 29 15:38 k8s-dns-node-cache_1.17.0.tar

-rw------- 1 root root 179014144 Sep 29 15:41 kubeasz_3.1.0.tar

-rw------- 1 root root 34566656 Sep 29 15:40 metrics-scraper_v1.0.6.tar

-rw------- 1 root root 41199616 Sep 29 15:40 metrics-server_v0.3.6.tar

-rw------- 1 root root 45063680 Sep 29 15:40 nfs-provisioner_v4.0.1.tar

-rw------- 1 root root 692736 Sep 29 15:40 pause.tar

-rw------- 1 root root 692736 Sep 29 15:40 pause_3.4.1.tar

3. Configure the deployment tool and the K8S cluster

3.1 Generate a new K8S cluster configuration

root@master1:~# cd /etc/kubeasz/

root@master1:/etc/kubeasz# ./ezctl new k8s-01

3.2 Configure the ansible hosts file

root@master1:/etc/kubeasz# vim clusters/k8s-01/hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd]

192.168.6.84

192.168.6.85

192.168.6.86

# master node(s)

[kube_master]

192.168.6.79

192.168.6.80

# work node(s)

[kube_node]

192.168.6.89

192.168.6.90

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

# 192.168.6.201 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb] # must be enabled; otherwise the deployment tool reports an error

192.168.6.88 LB_ROLE=backup EX_APISERVER_VIP=192.168.6.202 EX_APISERVER_PORT=8443

192.168.6.87 LB_ROLE=master EX_APISERVER_VIP=192.168.6.202 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

# 192.168.6.79

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd

CONTAINER_RUNTIME="docker"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico" # change to the network plugin you need

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.100.0.0/16" # change as needed

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.200.0.0/16" # change as needed

# NodePort Range

NODE_PORT_RANGE="30000-60000" # a larger port range is recommended

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="k8s.local" # change as needed

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/local/bin" # a commonly used directory is recommended

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-01"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"
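The inventory comment above says the etcd group should have an odd number of members. A minimal sketch for checking that before deployment follows; the helper names `count_group_hosts` and `etcd_count_is_odd` are hypothetical and only parse the INI-style file, they do not call ansible.

```shell
# Count host lines in one group of an INI-style inventory.
# Usage: count_group_hosts FILE GROUP
count_group_hosts() {
    awk -v g="[$2]" '
        /^\[/            { in_group = ($1 == g); next }   # section header
        /^[[:space:]]*#/ { next }                          # comment line
        NF == 0          { next }                          # blank line
        in_group         { n++ }
        END              { print n + 0 }
    ' "$1"
}

# Succeed when the etcd group has an odd number of members.
etcd_count_is_odd() {
    [ $(( $(count_group_hosts "$1" etcd) % 2 )) -eq 1 ]
}

# Example: etcd_count_is_odd clusters/k8s-01/hosts && echo "etcd count ok"
```

For the inventory shown above the etcd group has three members, so the check passes.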

3.3 Configure the config file

root@master1:/etc/kubeasz# vim clusters/k8s-01/config.yml

############################

# prepare

############################

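# Optionally install system packages offline (offline|online)

INSTALL_SOURCE: "online"

# Optional OS security hardening, see github.com/dev-sec/ansible-collection-hardening

OS_HARDEN: false

# Time source servers [important: clocks on all cluster machines must be synchronized]

ntp_servers:

- "ntp1.aliyun.com"

- "time1.cloud.tencent.com"

- "0.cn.pool.ntp.org"

# Network range allowed to sync time internally, e.g. "10.0.0.0/8"; all allowed by default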

local_network: "0.0.0.0/0"

############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"

# kubeconfig parameters

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

############################

# role:etcd

############################

# A separate WAL directory avoids disk I/O contention and improves performance

ETCD_DATA_DIR: "/var/lib/etcd"

ETCD_WAL_DIR: ""

############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

# [.] Enable registry mirrors

ENABLE_MIRROR_REGISTRY: true

# [containerd] Sandbox (pause) container image

SANDBOX_IMAGE: "harbor.k8s.local/k8s/easzlab-pause-amd64:3.4.1" # changed to the local Harbor image address

# [containerd] Persistent container storage directory

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

# [docker] Container storage directory

DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] Enable the RESTful API

ENABLE_REMOTE_API: false

# [docker] Trusted HTTP registries

INSECURE_REG: '["127.0.0.1/8"]'

############################

# role:kube-master

############################

# Certificate hosts for the k8s master nodes; multiple IPs and domain names can be added (e.g. public IPs and domains)

MASTER_CERT_HOSTS:

- "10.1.1.1"

- "k8s.test.io"

#- "www.test.com"

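# Pod subnet mask length per node (determines the maximum number of pod IPs each node can allocate)

# If flannel uses the --kube-subnet-mgr flag, it reads this setting to assign a pod subnet to each node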

# https://github.com/coreos/flannel/issues/847

NODE_CIDR_LEN: 24

############################

# role:kube-node

############################

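# Kubelet root directory

KUBELET_ROOT_DIR: "/var/lib/kubelet"

# Maximum number of pods per node

MAX_PODS: 300

# Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)

# For the values, see templates/kubelet-config.yaml.j2

KUBE_RESERVED_ENABLED: "yes"

# Upstream k8s advises against enabling system-reserved casually, unless long-term monitoring has shown how the system uses resources;

# the reservation also needs to grow as uptime increases; for the values, see templates/kubelet-config.yaml.j2

# The system reservation assumes a 4c/8g VM with a minimal set of system services; increase it on high-performance physical machines

# In addition, apiserver and other components briefly use more resources during cluster installation; reserve at least 1g of memory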

SYS_RESERVED_ENABLED: "no"

# haproxy balance mode

BALANCE_ALG: "roundrobin"

############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel] Set the flannel backend: "host-gw", "vxlan", etc.

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

# [flannel] flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"

flannelVer: "v0.13.0-amd64"

flanneld_image: "easzlab/flannel:{{ flannelVer }}"

# [flannel] Offline image tarball

flannel_offline: "flannel_{{ flannelVer }}.tar"

# ------------------------------------------- calico

# [calico] Setting CALICO_IPV4POOL_IPIP="off" can improve network performance; for the restrictions, see docs/setup/calico.md

CALICO_IPV4POOL_IPIP: "Always"

# [calico] Host IP used by calico-node; BGP neighbors peer through this address; it can be set manually or auto-detected

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] Set the calico network backend: bird, vxlan, none

CALICO_NETWORKING_BACKEND: "bird"

# [calico] Supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]

calico_ver: "v3.15.3"

# [calico] calico major version

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# [calico] Offline image tarball

calico_offline: "calico_{{ calico_ver }}.tar"

# ------------------------------------------- cilium

# [cilium] Number of etcd cluster nodes created by CILIUM_ETCD_OPERATOR: 1,3,5,7...

ETCD_CLUSTER_SIZE: 1

# [cilium] Image version

cilium_ver: "v1.4.1"

# [cilium] Offline image tarball

cilium_offline: "cilium_{{ cilium_ver }}.tar"

# ------------------------------------------- kube-ovn

# [kube-ovn] Node for the OVN DB and OVN Control Plane; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] Offline image tarball

kube_ovn_ver: "v1.5.3"

kube_ovn_offline: "kube_ovn_{{ kube_ovn_ver }}.tar"

# ------------------------------------------- kube-router

# [kube-router] Public clouds impose restrictions and usually require ipinip to stay enabled; self-hosted environments can use "subnet"

OVERLAY_TYPE: "full"

# [kube-router] NetworkPolicy support switch

FIREWALL_ENABLE: "true"

# [kube-router] kube-router image version

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

# [kube-router] kube-router offline image tarballs

kuberouter_offline: "kube-router_{{ kube_router_ver }}.tar"

busybox_offline: "busybox_{{ busybox_ver }}.tar"

############################

# role:cluster-addon

############################

# Automatic coredns installation

dns_install: "no" # not installed here; installed separately later

corednsVer: "1.8.0"

ENABLE_LOCAL_DNS_CACHE: false # not installed in the test environment; recommended for production

dnsNodeCacheVer: "1.17.0"

# Local DNS cache address

LOCAL_DNS_CACHE: "169.254.20.10"

# Automatic metrics server installation

metricsserver_install: "no" # not installed

metricsVer: "v0.3.6"

# Automatic dashboard installation (not installed here; installed separately later)

dashboard_install: "no"

dashboardVer: "v2.2.0"

dashboardMetricsScraperVer: "v1.0.6"

# Automatic ingress installation (not installed)

ingress_install: "no"

ingress_backend: "traefik"

traefik_chart_ver: "9.12.3"

# Automatic prometheus installation (not installed)

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "12.10.6"

# Automatic nfs-provisioner installation (not installed)

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.1"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"

############################

# role:harbor

############################

# harbor version, full version number

HARBOR_VER: "v2.1.3"

HARBOR_DOMAIN: "harbor.yourdomain.com"

HARBOR_TLS_PORT: 8443

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: true

# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CLAIR: false

HARBOR_WITH_CHARTMUSEUM: true
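The relationship between CLUSTER_CIDR, NODE_CIDR_LEN, and MAX_PODS in the two files above can be checked with simple arithmetic. The sketch below is illustrative only; the helper names are hypothetical, and it assumes one subnet of length NODE_CIDR_LEN per node, which the config comment describes only for flannel with --kube-subnet-mgr.

```shell
# Number of per-node subnets that fit in the cluster CIDR.
# Usage: node_subnet_count CLUSTER_PREFIX_LEN NODE_CIDR_LEN
node_subnet_count() {
    echo $(( 1 << ($2 - $1) ))
}

# Number of addresses in one per-node subnet.
# Usage: addrs_per_node NODE_CIDR_LEN
addrs_per_node() {
    echo $(( 1 << (32 - $1) ))
}

node_subnet_count 16 24   # CLUSTER_CIDR 10.200.0.0/16, NODE_CIDR_LEN 24
addrs_per_node 24
```

Both calls print 256. Under the stated assumption, a /24 per node holds fewer addresses than MAX_PODS: 300, so one of the two values would need adjusting. Whether the same limit applies with calico should be checked against the Calico IPAM documentation.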

3.4 Initialize the cluster environment

root@master1:/etc/kubeasz# ./ezctl help setup

Usage: ezctl setup <cluster> <step>

available steps:

01 prepare to prepare CA/certs & kubeconfig & other system settings

02 etcd to setup the etcd cluster

03 container-runtime to setup the container runtime(docker or containerd)

04 kube-master to setup the master nodes

05 kube-node to setup the worker nodes

06 network to setup the network plugin

07 cluster-addon to setup other useful plugins

90 all to run 01~07 all at once

10 ex-lb to install external loadbalance for accessing k8s from outside

11 harbor to install a new harbor server or to integrate with an existed one

examples: ./ezctl setup test-k8s 01 (or ./ezctl setup test-k8s prepare)

./ezctl setup test-k8s 02 (or ./ezctl setup test-k8s etcd)

./ezctl setup test-k8s all

./ezctl setup test-k8s 04 -t restart_master

root@master1:/etc/kubeasz# vim playbooks/01.prepare.yml

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 01
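The numbered steps listed by `ezctl help setup` can be driven from a small loop that stops at the first failure. A minimal sketch follows, with a dry-run default so nothing is executed by accident; the function name `run_setup_steps` and the `DRY_RUN` variable are hypothetical.

```shell
# Run kubeasz setup steps 01-07 in order, stopping at the first failure.
# With DRY_RUN=1 (the default here) commands are printed, not executed.
# Usage: run_setup_steps CLUSTER
run_setup_steps() (
    cluster=$1
    for step in 01 02 03 04 05 06 07; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "./ezctl setup $cluster $step"
        else
            ./ezctl setup "$cluster" "$step" || {
                echo "step $step failed" >&2
                exit 1
            }
        fi
    done
)

run_setup_steps k8s-01
```

This walkthrough edits templates between some steps (for example before steps 05 and 06), so running the steps one at a time, as the following sections do, is the safer choice when such edits are needed.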

3.5 Push the pause image

root@master1:/etc/kubeasz# grep SANDBOX_IMAGE ./clusters/* -R

./clusters/k8s-01/config.yml:SANDBOX_IMAGE: "harbor.k8s.local/k8s/easzlab-pause-amd64:3.4.1"

root@master1:/etc/kubeasz# docker pull easzlab/pause-amd64:3.4.1

3.4.1: Pulling from easzlab/pause-amd64

Digest: sha256:9ec1e780f5c0196af7b28f135ffc0533eddcb0a54a0ba8b32943303ce76fe70d

Status: Image is up to date for easzlab/pause-amd64:3.4.1

docker.io/easzlab/pause-amd64:3.4.1

root@master1:/etc/kubeasz# docker tag easzlab/pause-amd64:3.4.1 harbor.k8s.local/k8s/easzlab-pause-amd64:3.4.1

root@master1:/etc/kubeasz# docker push harbor.k8s.local/k8s/easzlab-pause-amd64:3.4.1

The push refers to repository [harbor.k8s.local/k8s/easzlab-pause-amd64]

915e8870f7d1: Pushed

3.4.1: digest: sha256:9ec1e780f5c0196af7b28f135ffc0533eddcb0a54a0ba8b32943303ce76fe70d size: 526

4. Deploy the etcd nodes

4.1 Deploy the nodes

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 02

4.2 Verify the service status on each node

root@etcd1:~# export node_ip='192.168.6.84 192.168.6.85 192.168.6.86'

root@etcd1:~# for i in ${node_ip}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${i}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

https://192.168.6.84:2379 is healthy: successfully committed proposal: took = 31.318624ms

https://192.168.6.85:2379 is healthy: successfully committed proposal: took = 16.456257ms

https://192.168.6.86:2379 is healthy: successfully committed proposal: took = 14.769783ms
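The health output above can be checked automatically by counting the endpoints that report healthy. A minimal sketch, assuming the output format shown above; the helper name `all_etcd_healthy` is hypothetical.

```shell
# Read `etcdctl endpoint health` output on stdin and succeed only if
# exactly EXPECTED endpoints report healthy.
# Usage: <health output> | all_etcd_healthy EXPECTED
all_etcd_healthy() (
    # grep -c prints 0 and returns non-zero when nothing matches.
    healthy=$(grep -c 'is healthy' || true)
    [ "$healthy" -eq "$1" ]
)
```

etcdctl may write its health lines to stderr, so when piping the loop above into this helper it can be necessary to add `2>&1` to the etcdctl command.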

5. Deploy the container runtime

- Docker must be installed on the node hosts. If docker has already been installed manually, this step is optional.

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 03

6. Deploy the master nodes

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 04

# Verify the cluster

root@master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.6.79 Ready,SchedulingDisabled master 59s v1.21.0

192.168.6.80 Ready,SchedulingDisabled master 60s v1.21.0

7. Deploy the worker nodes

7.1 Change the configuration by editing templates (kube-proxy as an example)

root@master1:/etc/kubeasz# vim /etc/kubeasz/roles/kube-node/templates/kube-proxy-config.yaml.j2

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: {{ inventory_hostname }}

clientConnection:

kubeconfig: "/etc/kubernetes/kube-proxy.kubeconfig"

clusterCIDR: "{{ CLUSTER_CIDR }}"

conntrack:

maxPerCore: 32768

min: 131072

tcpCloseWaitTimeout: 1h0m0s

tcpEstablishedTimeout: 24h0m0s

healthzBindAddress: {{ inventory_hostname }}:10256

hostnameOverride: "{{ inventory_hostname }}"

metricsBindAddress: {{ inventory_hostname }}:10249

mode: "{{ PROXY_MODE }}"

ipvs: # newly added: change the scheduler to sh (the default is rr)

scheduler: sh

7.2 Deploy the worker nodes

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 05

7.3 Verification

7.3.1 Check the cluster status on the deployment host

root@master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.6.79 Ready,SchedulingDisabled master 2d16h v1.21.0

192.168.6.80 Ready,SchedulingDisabled master 2d17h v1.21.0

192.168.6.89 Ready node 2d16h v1.21.0

192.168.6.90 Ready node 2d16h v1.21.0
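A quick way to act on the node listing above is to print only the nodes that are not ready. A minimal sketch, assuming the default `kubectl get node` column layout shown above; the helper name `not_ready_nodes` is hypothetical.

```shell
# Read `kubectl get node` output on stdin and print the names of nodes
# whose STATUS column does not start with "Ready".
# Usage: kubectl get node | not_ready_nodes
not_ready_nodes() {
    awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}
```

An empty result means every node reports Ready; masters showing `Ready,SchedulingDisabled` count as ready here.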

7.3.2 Check the ipvs rules on a worker node to confirm the change took effect

root@node1:~# ipvsadm --list

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP node1.k8s.local:https sh # the scheduler is source hashing

-> 192.168.6.79:6443 Masq 1 0 0

-> 192.168.6.80:6443 Masq 1 0 0
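The scheduler column in the listing above can be checked for every virtual service at once. A minimal sketch, assuming the `ipvsadm --list` layout shown above; the helper names `ipvs_schedulers` and `all_services_use` are hypothetical.

```shell
# Read `ipvsadm --list` output on stdin and print "SERVICE SCHEDULER"
# for every virtual service line (lines starting with TCP or UDP).
ipvs_schedulers() {
    awk '$1 == "TCP" || $1 == "UDP" { print $2, $3 }'
}

# Succeed only if every virtual service uses the expected scheduler.
# Usage: ipvsadm --list | all_services_use sh
all_services_use() {
    ipvs_schedulers | awk -v want="$1" '$2 != want { bad = 1 } END { exit bad + 0 }'
}
```

If the check fails after editing the kube-proxy template, the usual cause is that the worker nodes were deployed before the template change was saved.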

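8. Deploy the cluster network

8.1 Edit the configuration template of the chosen network plugin

Taking calico as an example, change the image addresses in the template (calico has 4 images to change) to the local registry to speed up deployment. The images can be pulled in advance and pushed to the local Harbor registry.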

root@master1:/etc/kubeasz# vim roles/calico/templates/calico-v3.15.yaml.j2

...

serviceAccountName: calico-node

# Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force

# deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.

terminationGracePeriodSeconds: 0

priorityClassName: system-node-critical

initContainers:

# This container installs the CNI binaries

# and CNI network config file on each node.

- name: install-cni

image: harbor.k8s.local/k8s/calico-cni:v3.15.3 # changed to the local Harbor image address

command: ["/install-cni.sh"]

env:

# Name of the CNI config file to create.

- name: CNI_CONF_NAME

value: "10-calico.conflist"

# The CNI network config to install on each node.

- name: CNI_NETWORK_CONFIG

valueFrom:

configMapKeyRef:

name: calico-config

key: cni_network_config

# The location of the etcd cluster.

...

...

# Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes

# to communicate with Felix over the Policy Sync API.

- name: flexvol-driver

image: harbor.k8s.local/k8s/calico-pod2daemon-flexvol:v3.15.3 # changed to the local Harbor image address

volumeMounts:

- name: flexvol-driver-host

mountPath: /host/driver

securityContext:

privileged: true

...

...

containers:

# Runs calico-node container on each Kubernetes node. This

# container programs network policy and routes on each

# host.

- name: calico-node

image: harbor.k8s.local/k8s/calico-node:v3.15.3 # changed to the local Harbor image address

env:

# The location of the etcd cluster.

- name: ETCD_ENDPOINTS

valueFrom:

configMapKeyRef:

name: calico-config

key: etcd_endpoints

...

...

priorityClassName: system-cluster-critical

# The controllers must run in the host network namespace so that

# it isn't governed by policy that would prevent it from working.

hostNetwork: true

containers:

- name: calico-kube-controllers

image: harbor.k8s.local/k8s/calico-kube-controllers:v3.15.3 #changed to the local Harbor image address

env:

# The location of the etcd cluster.

- name: ETCD_ENDPOINTS

valueFrom:

configMapKeyRef:

name: calico-config

key: etcd_endpoints

...

######

#Note: in this configuration file:

...

# Set MTU for the Wireguard tunnel device.

- name: FELIX_WIREGUARDMTU

valueFrom:

configMapKeyRef:

name: calico-config

key: veth_mtu

# The default IPv4 pool to create on startup if none exists. Pod IPs will be

# chosen from this range. Changing this value after installation will have

# no effect. This should fall within `--cluster-cidr`.

- name: CALICO_IPV4POOL_CIDR

value: "{{ CLUSTER_CIDR }}" # whichever deployment tool is used, if a custom cluster address range has been defined, this value must be changed to reflect it

# Disable file logging so `kubectl logs` works.

- name: CALICO_DISABLE_FILE_LOGGING

value: "true"

# Set Felix endpoint to host default action to ACCEPT.

- name: FELIX_DEFAULTENDPOINTTOHOSTACTION

value: "ACCEPT"

...
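
The four image edits above can also be applied with a single substitution instead of by hand. The following is a minimal sketch, assuming the template references the upstream images in the form `calico/<name>:<tag>` (optionally with a registry prefix); the function name `rewrite_calico_images` is hypothetical and not part of kubeasz.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite "image: [registry/]calico/<name>:<tag>"
# to "image: <registry>/calico-<name>:<tag>", the naming used in this section.
# Assumption: upstream images appear as calico/<name>:<tag> in the template.
rewrite_calico_images() {
  local registry="$1"   # e.g. harbor.k8s.local/k8s
  sed -E "s#(image:[[:space:]]*)([^[:space:]]*/)?calico/([a-z0-9-]+):#\1${registry}/calico-\3:#"
}

# Demo on sample lines; lines that are already rewritten are left untouched.
printf '%s\n' \
  'image: calico/cni:v3.15.3' \
  'image: calico/pod2daemon-flexvol:v3.15.3' \
  'image: harbor.k8s.local/k8s/calico-node:v3.15.3' \
  | rewrite_calico_images harbor.k8s.local/k8s
```

To use it on the real template, redirect the template file into the function, write the result to a temporary file, and review the diff before replacing the original.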

8.2 Deploy the cluster network plugin

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 06

8.3 Verify the network plugin deployment

root@node1:~# calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+--------------+-------------------+-------+----------+-------------+

| 192.168.6.79 | node-to-node mesh | up | 03:49:14 | Established |

| 192.168.6.80 | node-to-node mesh | up | 03:49:14 | Established |

| 192.168.6.90 | node-to-node mesh | up | 03:49:14 | Established |

+--------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

root@node1:~# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.6.1 0.0.0.0 UG 100 0 0 eth0

10.200.67.0 192.168.6.80 255.255.255.192 UG 0 0 0 tunl0

10.200.99.64 192.168.6.90 255.255.255.192 UG 0 0 0 tunl0

10.200.213.128 192.168.6.79 255.255.255.192 UG 0 0 0 tunl0

10.200.255.128 0.0.0.0 255.255.255.192 U 0 0 0 *

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.6.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

192.168.6.1 0.0.0.0 255.255.255.255 UH 100 0 0 eth0

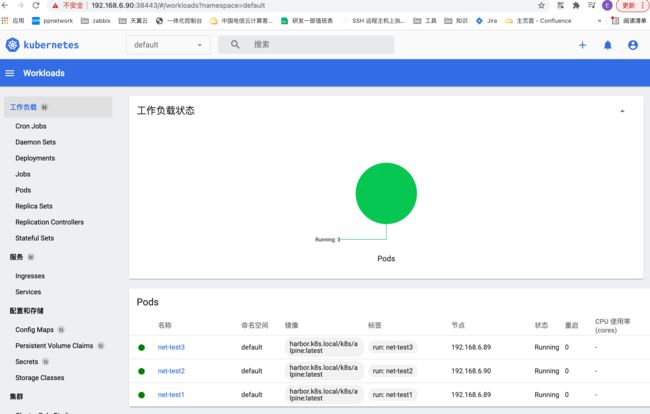
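
The BGP check above can be scripted by counting the peers whose INFO column reads Established. This is a minimal sketch that parses the table format shown in this section; the function name `count_established_peers` is hypothetical, and the sample rows are copied from the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count node-to-node mesh peers in state "Established".
# Reads the output of `calicoctl node status` on stdin.
count_established_peers() {
  # With "|" as separator, field 3 is PEER TYPE and field 6 is INFO.
  awk -F'|' '/node-to-node mesh/ && $6 ~ /Established/ { n++ } END { print n + 0 }'
}

# Demo with the rows shown in this section.
count_established_peers <<'EOF'
| PEER ADDRESS | PEER TYPE         | STATE | SINCE    | INFO        |
| 192.168.6.79 | node-to-node mesh | up    | 03:49:14 | Established |
| 192.168.6.80 | node-to-node mesh | up    | 03:49:14 | Established |
| 192.168.6.90 | node-to-node mesh | up    | 03:49:14 | Established |
EOF
```

On a live node the same function can be fed with `calicoctl node status | count_established_peers`. With a full node-to-node mesh, the expected count is the number of cluster nodes minus one.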

8.4 Create test pods and verify the network

root@master1:/etc/kubeasz# kubectl run net-test --image=harbor.k8s.local/k8s/alpine:latest sleep 300000

root@master1:/etc/kubeasz# kubectl run net-test1 --image=harbor.k8s.local/k8s/alpine:latest sleep 300000

root@master1:/etc/kubeasz# kubectl run net-test2 --image=harbor.k8s.local/k8s/alpine:latest sleep 300000

root@master1:/etc/kubeasz# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default net-test 1/1 Running 0 41s 10.200.255.129 192.168.6.89 <none> <none>

default net-test1 1/1 Running 0 17s 10.200.99.65 192.168.6.90 <none> <none>

default net-test2 1/1 Running 0 8s 10.200.255.130 192.168.6.89 <none> <none>

kube-system calico-kube-controllers-759545cb9c-dr8gz 1/1 Running 0 71m 192.168.6.90 192.168.6.90 <none> <none>

kube-system calico-node-7zlwc 1/1 Running 0 71m 192.168.6.79 192.168.6.79 <none> <none>

kube-system calico-node-k77p7 1/1 Running 0 71m 192.168.6.89 192.168.6.89 <none> <none>

kube-system calico-node-sv98g 1/1 Running 0 71m 192.168.6.90 192.168.6.90 <none> <none>

kube-system calico-node-x9qbn 1/1 Running 0 71m 192.168.6.80 192.168.6.80 <none> <none>

root@node1:~# kubectl exec -it net-test -- sh

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 72:0c:91:dd:a3:6c brd ff:ff:ff:ff:ff:ff

inet 10.200.255.129/32 scope global eth0

valid_lft forever preferred_lft forever

/ # ping 10.200.99.65 #from inside the test container, check that a pod address on another host is reachable

PING 10.200.99.65 (10.200.99.65): 56 data bytes

64 bytes from 10.200.99.65: seq=0 ttl=62 time=1.627 ms

64 bytes from 10.200.99.65: seq=1 ttl=62 time=0.856 ms

64 bytes from 10.200.99.65: seq=2 ttl=62 time=0.914 ms

^C

/ # ping 223.6.6.6 #check that an external address is reachable

PING 223.6.6.6 (223.6.6.6): 56 data bytes

64 bytes from 223.6.6.6: seq=0 ttl=116 time=14.000 ms

64 bytes from 223.6.6.6: seq=1 ttl=116 time=7.925 ms

64 bytes from 223.6.6.6: seq=2 ttl=116 time=16.233 ms

^C

--- 223.6.6.6 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 7.925/12.719/16.233 ms
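
When these ping checks are run from a script rather than interactively, the packet-loss figure in the summary line is the value to test. A minimal sketch, assuming the summary format shown above; the function name `ping_loss_percent` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the packet-loss percentage from ping output on stdin.
# Prints nothing if no summary line is present.
ping_loss_percent() {
  sed -n -E 's/.* ([0-9]+)% packet loss.*/\1/p'
}

# Demo with the summary line from this section.
echo '3 packets transmitted, 3 packets received, 0% packet loss' | ping_loss_percent
```

A non-interactive variant of the test above would pipe `kubectl exec net-test -- ping -c 3 10.200.99.65` into this function and treat any value other than 0 as a failure.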

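9. Deploy cluster add-ons

9.1 Deploy CoreDNS

9.1.1 Main configuration parameters

- errors: error logs are written to stdout

- health: CoreDNS reports its running state at http://localhost:8080/health

- cache: enables DNS caching

- reload: automatically reloads the configuration file; after the ConfigMap is modified, the change takes effect in about two minutes

- loadbalance: when a domain name has multiple records, they are returned in round-robin order

- cache 30: the cache time, in seconds

- kubernetes: CoreDNS resolves names against Kubernetes Services, based on the specified service domain

- forward: queries for names outside the Kubernetes cluster domain are forwarded to the specified servers (/etc/resolv.conf)

- prometheus: CoreDNS metrics can be collected by Prometheus from http://<coredns svc>:9153/metrics

- ready: once CoreDNS has finished starting, the /ready endpoint (port 8181) returns status code 200; otherwise it returns an error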
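
Before a Corefile is applied, it can be checked for the plugins listed above. The following is a minimal sketch that only looks for each plugin name as the first word on a line; it does not validate Corefile syntax, and the function name `missing_corefile_plugins` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every plugin from section 9.1.1 that the
# given Corefile text does not enable (one name per line).
missing_corefile_plugins() {
  local corefile="$1" plugin
  for plugin in errors health ready kubernetes prometheus forward cache reload loadbalance; do
    # A plugin directive is the first word on its line, followed by
    # whitespace, an opening brace, or the end of the line.
    grep -Eq "^[[:space:]]*${plugin}([[:space:]{]|\$)" <<<"$corefile" || echo "$plugin"
  done
}

# Demo: a Corefile without the loadbalance plugin.
sample='.:53 {
    errors
    health {
        lameduck 5s
    }
    ready
    kubernetes k8s.local in-addr.arpa ip6.arpa {
        fallthrough in-addr.arpa ip6.arpa
    }
    prometheus :9153
    forward . /etc/resolv.conf
    cache 30
    loop
    reload
}'
missing_corefile_plugins "$sample"   # prints: loadbalance
```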

9.1.2 Deploy CoreDNS

9.1.2.1 Get the CoreDNS YAML file

- GitHub: https://github.com/coredns/deployment/blob/master/kubernetes/coredns.yaml.sed

- Official Kubernetes: the official release packages contain the relevant YAML files and scripts. There are four packages; choose the matching version, here 1.21.4 as an example:

- GitHub address for this version: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.21.md#downloads-for-v1214

- The four packages:

- kubernetes-node-linux-amd64.tar.gz

- kubernetes-server-linux-amd64.tar.gz

- kubernetes-client-linux-amd64.tar.gz

- kubernetes.tar.gz

Upload them to the server and extract them to get the YAML files, then use these files as the template to modify

root@master1:/usr/local/src# ls

kubernetes-client-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

kubernetes-node-linux-amd64.tar.gz kubernetes.tar.gz

root@master1:/usr/local/src# tar xf kubernetes-client-linux-amd64.tar.gz

root@master1:/usr/local/src# tar xf kubernetes-server-linux-amd64.tar.gz

root@master1:/usr/local/src# tar xf kubernetes-node-linux-amd64.tar.gz

root@master1:/usr/local/src# tar xf kubernetes.tar.gz

root@master1:/usr/local/src# ls

kubernetes kubernetes-node-linux-amd64.tar.gz kubernetes.tar.gz

kubernetes-client-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

root@master1:/usr/local/src/kubernetes/cluster/addons/dns/coredns# ls

Makefile coredns.yaml.base coredns.yaml.in coredns.yaml.sed transforms2salt.sed transforms2sed.sed

# coredns.yaml.base can be used as the template file (note: when deploying CoreDNS from a template obtained this way, make sure the CoreDNS version matches the YAML file)

9.1.2.2 Modify the YAML file

The main parameters are described in 9.1.1. Here the deployment YAML file from the CoreDNS GitHub repository (see 9.1.2.1) is used as the template.

root@master1:~# vim coredns.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

name: coredns

namespace: kube-system

data:

Corefile: |

.:53 {

errors

health {

lameduck 5s

}

#bind 0.0.0.0 #optional; the bind plugin sets the address CoreDNS listens on, left commented out here

ready

kubernetes k8s.local in-addr.arpa ip6.arpa { ##set to the CLUSTER_DNS_DOMAIN value from the kubeasz cluster hosts file, i.e. the cluster domain

fallthrough in-addr.arpa ip6.arpa

}

prometheus :9153

forward . /etc/resolv.conf { #upstream DNS for the cluster DNS

max_concurrent 1000

}

cache 30

loop

reload

loadbalance

}

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/name: "CoreDNS"

spec:

# replicas: not specified here:

# 1. Default is 1.

# 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

selector:

matchLabels:

k8s-app: kube-dns

template:

metadata:

labels:

k8s-app: kube-dns

spec:

priorityClassName: system-cluster-critical

serviceAccountName: coredns

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"

nodeSelector:

kubernetes.io/os: linux

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: k8s-app

operator: In

values: ["kube-dns"]

topologyKey: kubernetes.io/hostname

containers:

- name: coredns

image: harbor.k8s.local/k8s/coredns:1.8.3 #changed to the image in the local registry

imagePullPolicy: IfNotPresent

resources:

limits:

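memory: 170Mi #the memory limit can be adjusted as needed; in production it is usually capped at about 4G, and beyond that more replicas are added to share the load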

requests:

cpu: 100m

memory: 70Mi

args: [ "-conf", "/etc/coredns/Corefile" ]

volumeMounts:

- name: config-volume

mountPath: /etc/coredns

readOnly: true

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- all

readOnlyRootFilesystem: true

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

readinessProbe:

httpGet:

path: /ready

port: 8181

scheme: HTTP

dnsPolicy: Default

volumes:

- name: config-volume

configMap:

name: coredns

items:

- key: Corefile

path: Corefile

---

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

annotations:

prometheus.io/port: "9153"

prometheus.io/scrape: "true"

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

kubernetes.io/name: "CoreDNS"

spec:

type: NodePort #added, to expose the metrics to Prometheus

selector:

k8s-app: kube-dns

clusterIP: 10.100.0.2 #an address within SERVICE_CIDR, usually the second address of the range; to confirm it, enter a test container in the cluster and check the nameserver in /etc/resolv.conf

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

- name: metrics

port: 9153

protocol: TCP

targetPort: 9153 #added, to expose the metrics

nodePort: 30009 #added, to expose the metrics on port 30009 of the host
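
The clusterIP used above follows from the service address range. As a minimal sketch, the second address of a CIDR can be computed as shown below; the value 10.100.0.0/16 for SERVICE_CIDR is an assumption inferred from the clusterIP in this file, and the function name `dns_cluster_ip` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the network address of an IPv4 CIDR plus 2,
# which is the address this section uses for the kube-dns Service.
dns_cluster_ip() {
  local cidr="$1" ip prefix a b c d n mask
  ip="${cidr%/*}"
  prefix="${cidr#*/}"
  IFS=. read -r a b c d <<<"$ip"
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 2 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Assumed SERVICE_CIDR; replace it with the value from the cluster hosts file.
dns_cluster_ip 10.100.0.0/16   # prints: 10.100.0.2
```

The result should still be compared with the nameserver in a pod's /etc/resolv.conf, since the kubelet configuration decides which address pods actually use.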

9.1.2.3 Deploy CoreDNS

root@master1:~# kubectl apply -f coredns.yaml

9.1.2.4 Verify

Enter a test container and ping an external domain name to confirm that name resolution works

root@master1:~# kubectl exec -it net-test1 -- sh

/ # ping www.baidu.com

PING www.baidu.com (110.242.68.4): 56 data bytes

64 bytes from 110.242.68.4: seq=0 ttl=51 time=10.841 ms

64 bytes from 110.242.68.4: seq=1 ttl=51 time=11.695 ms

64 bytes from 110.242.68.4: seq=2 ttl=51 time=21.819 ms

64 bytes from 110.242.68.4: seq=3 ttl=51 time=12.340 ms

^C

--- www.baidu.com ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 10.841/14.173/21.819 ms
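
Pinging an external name only exercises the forward path. Names inside the cluster have the form `<service>.<namespace>.svc.<cluster domain>` and are answered by the kubernetes plugin. A minimal sketch for building such a name to test with; the function name `svc_fqdn` is hypothetical, and k8s.local is the cluster domain configured in the Corefile above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the in-cluster DNS name of a Service.
svc_fqdn() {
  local service="$1" namespace="$2" domain="${3:-k8s.local}"
  echo "${service}.${namespace}.svc.${domain}"
}

svc_fqdn kube-dns kube-system     # prints: kube-dns.kube-system.svc.k8s.local
```

The resulting name can then be looked up from a test pod, for example with `kubectl exec net-test1 -- nslookup "$(svc_fqdn kubernetes default)"`, assuming the image provides nslookup.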

9.2 Deploy the Dashboard

9.2.1 Get the base images and YAML file

- YAML file:

GitHub kubernetes dashboard: choose the Dashboard version that matches the Kubernetes cluster version

https://github.com/kubernetes/dashboard/releases

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

- Base images:

kubernetesui/dashboard:v2.3.1

kubernetesui/metrics-scraper:v1.0.6

#After pulling the images, push them to the private image registry

root@harbor1:~# docker tag kubernetesui/metrics-scraper:v1.0.6 harbor.k8s.local/k8s/kubernetesui-metrics-scraper:v1.0.6

root@harbor1:~# docker tag kubernetesui/dashboard:v2.3.1 harbor.k8s.local/k8s/kubernetesui-dashboard:v2.3.1

root@harbor1:~# docker push harbor.k8s.local/k8s/kubernetesui-metrics-scraper:v1.0.6

root@harbor1:~# docker push harbor.k8s.local/k8s/kubernetesui-dashboard:v2.3.1
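
The tag and push steps above follow one naming rule: the `/` in the upstream image name becomes `-` under the `harbor.k8s.local/k8s` project. The following is a minimal dry-run sketch that only prints the docker commands for a list of images; the function names `harbor_name` and `mirror_commands` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map an upstream image to its name in the local Harbor project.
harbor_name() {
  local image="$1" registry="${2:-harbor.k8s.local/k8s}"
  echo "${registry}/${image//\//-}"
}

# Hypothetical helper: print (not run) the pull, tag and push commands per image.
mirror_commands() {
  local image target
  for image in "$@"; do
    target="$(harbor_name "$image")"
    echo "docker pull ${image}"
    echo "docker tag ${image} ${target}"
    echo "docker push ${target}"
  done
}

mirror_commands kubernetesui/dashboard:v2.3.1 kubernetesui/metrics-scraper:v1.0.6
```

Printing the commands first makes it easy to review the target names; piping the output to `sh` on a host that is logged in to Harbor would execute them.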

9.2.2 Modify the YAML file

root@master1:~# cp recommended.yaml k8s-dashboard.yaml

root@master1:~# vim k8s-dashboard.yaml

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

type: NodePort #added, to expose the Dashboard port

ports:

- port: 443

targetPort: 8443

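nodePort: 38443 #added, to expose the Dashboard port; it must lie within the cluster's NodePort range (the Kubernetes default is 30000-32767, so 38443 requires a wider configured range)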

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

image: harbor.k8s.local/k8s/kubernetesui-dashboard:v2.3.1 #changed to the private image registry

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

spec:

containers:

- name: dashboard-metrics-scraper

image: harbor.k8s.local/k8s/kubernetesui-metrics-scraper:v1.0.6 #changed to the private image registry

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}
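
A nodePort value is only accepted when it falls inside the NodePort range configured for the API server. The following is a minimal sketch for checking a port before applying the file; the default range 30000-32767 is the Kubernetes default, the wider range in the demo is only an example, and the function name `nodeport_in_range` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the port lies within the "low-high" range.
nodeport_in_range() {
  local port="$1" range="${2:-30000-32767}"
  local low="${range%-*}" high="${range#*-}"
  [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}

# Demo: check the two NodePorts used in this chapter against the default range.
for port in 30009 38443; do
  if nodeport_in_range "$port"; then
    echo "${port}: inside 30000-32767"
  else
    echo "${port}: outside 30000-32767"
  fi
done

# With a wider range (example value only), 38443 passes.
nodeport_in_range 38443 30000-65000 && echo "38443: inside 30000-65000"
```

The range actually in effect should be taken from the cluster's own configuration rather than from this example.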

9.2.3 Deploy the Dashboard

kubectl apply -f k8s-dashboard.yaml

9.2.4 Verify and use

9.2.4.1 Create an account

root@master1:~# vim admin-user.yml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

root@master1:~# kubectl apply -f admin-user.yml

9.2.4.2 Get the token and log in to verify

root@master1:~# kubectl get secrets -A | grep admin

kubernetes-dashboard admin-user-token-xwrjl kubernetes.io/service-account-token 3 74s

root@master1:~# kubectl describe secrets admin-user-token-xwrjl -n kubernetes-dashboard

Name: admin-user-token-xwrjl

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: d0286365-2a5e-4955-adcc-f4ec78d13f65

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1350 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InZhRkxvMGNjbHFPQ1A5ak1vd0hfVjM4bTRfNnRHYmt5SmhiR2ZYaDVWM2cifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXh3cmpsIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJkMDI4NjM2NS0yYTVlLTQ5NTUtYWRjYy1mNGVjNzhkMTNmNjUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.GE0fZh7VOvl0yJa6moWP546OEZV_HlA8wn2nlpiDSKIo97UHvPfd6I8hhe-LiPOo9eNWhnTmZqnA3c3_4yvqUMVASUAqykFISs4aOKZFpZ_RpW2QLTTJra04p0vXSOlvprNaxVAQnrCA2oRQYSV_5Y5rS49Lj_YEx9IqOb1PXJSS4Bl5y9YOYjAlVmE31oh2ynSd2G06x9-TUil3vx_8a0UEz74zzpejesen9cF_A9bZwywymw9HU7BWL5EzoSdpN_rHcrXAKzxPne4v_J7320jrghAciPFFRSpgApqmuc9jG-8msy24g-oljmfHg--obkl3y7oNLe1b8RYkbp9qEw
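
The token is a JWT, so its middle segment can be decoded to confirm which service account it belongs to before pasting it into the Dashboard login page. The following is a minimal sketch, assuming GNU coreutils `base64`; the function name `jwt_payload` is hypothetical, and the demo token is built locally for illustration only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
jwt_payload() {
  local payload
  # Take the second dot-separated segment and convert base64url to base64.
  payload="$(cut -d. -f2 <<<"$1" | tr '_-' '/+')"
  # Restore the padding that base64url encoding strips.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  base64 -d <<<"$payload"
}

# Demo with a locally built, unsigned sample token (not a real credential).
claims='{"sub":"system:serviceaccount:kubernetes-dashboard:admin-user"}'
segment="$(printf '%s' "$claims" | base64 | tr -d '\n=' | tr '/+' '_-')"
jwt_payload "header.${segment}.signature"
echo
```

For the token shown above, the decoded `sub` claim should name the admin-user service account in the kubernetes-dashboard namespace.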

10. Add cluster nodes

10.1 Add a master node

root@master1:~# cd /etc/kubeasz/

root@master1:/etc/kubeasz# ./ezctl add-master k8s-01 192.168.6.81

10.2 Add a worker node

root@master1:/etc/kubeasz# ./ezctl add-node k8s-01 192.168.6.91