[OpenCV3 Programming Primer Study Notes] Chapter 2: Preparation Before Setting Out

Chapter 2: Preparation Before Setting Out

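Contents

- Chapter 2: Preparation Before Setting Out
  - Preface
  - 2.1 A Guided Tour of the Official OpenCV Samples
    - 2.1.1 Color Object Tracking: Camshift
    - 2.1.2 Optical Flow
    - 2.1.3 Point Tracking: lkdemo
    - 2.1.4 Face Detection: objectDetection
  - 2.2 The Appeal of Open Source: Compiling the OpenCV Source Code
    - 2.2.1 Downloading and Installing CMake
    - 2.2.2 Using CMake to Generate the Solution for the OpenCV Source Project
    - 2.2.3 Compiling the OpenCV Source Code
  - 2.3 Understanding the "opencv.hpp" Header
  - 2.4 Naming Conventions
    - 2.4.1 Naming Conventions Used in the Book's Examples
    - 2.4.2 Hungarian Notation
  - 2.5 Demystifying the argc and argv Parameters
    - 2.5.1 A First Look at argc and argv in main
    - 2.5.2 What argc and argv Mean in Detail
    - 2.5.3 Ways to Write main in Visual Studio
    - 2.5.4 Summary
  - 2.6 A Brief Look at the Formatted Output Function printf()
    - 2.6.1 Formatted Output: the printf() Function
      - Sample program: using printf
  - 2.7 Automatically Displaying the OpenCV Version in Use
  - 2.8 Chapter Summary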

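Preface

Notes in this series

Reference book: OpenCV3编程入门 (OpenCV3 Programming Primer)

Author: 毛星云 (Mao Xingyun)

Publisher: 电子工业出版社 (Publishing House of Electronics Industry)

Publication date: 2015-02

These notes are for my own reference only and are not meant as a general guide.

All code in these notes is OpenCV + Qt code, not developed with Visual Studio; please don't confuse the two.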

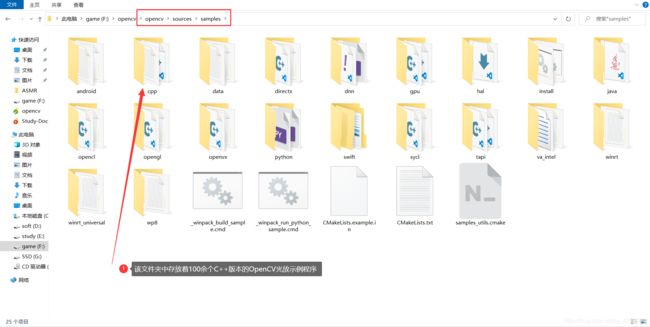

2.1 A Guided Tour of the Official OpenCV Samples

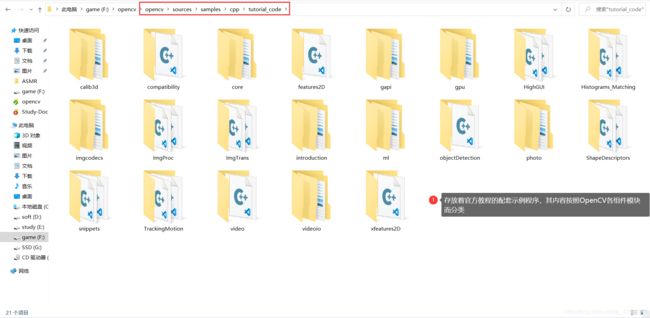

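The official OpenCV sample code can be found in the OpenCV installation directory, under

...\opencv\sources\samples\cpp

The ...\opencv\sources\samples\cpp\tutorial_code directory additionally holds the sample programs that accompany the official tutorials. They are organized by OpenCV module, which makes them well suited to study: beginners can look up whatever they need and work through the modules one at a time.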

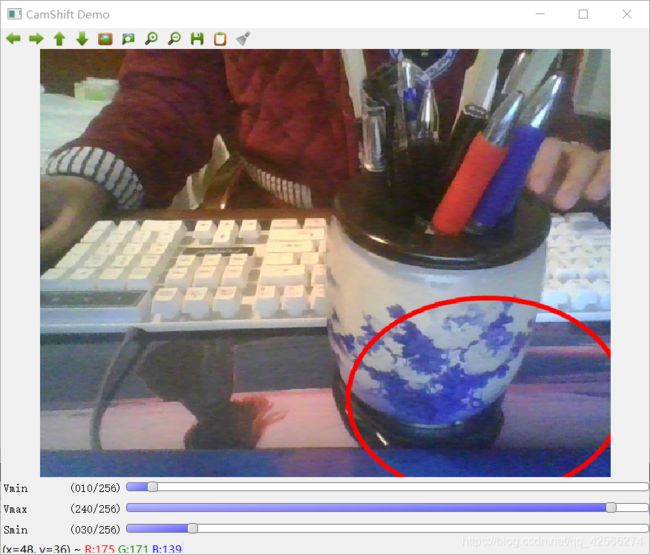

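2.1.1 Color Object Tracking: Camshift

The program tracks an object in the video read from the camera, based on the hue distribution of the region the user selects with the mouse.

It relies mainly on the CamShift algorithm ("Continuously Adaptive Mean-SHIFT"), an improvement on the MeanShift algorithm that re-estimates the size of the search window on every frame, hence the name "continuously adaptive MeanShift".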
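To make the MeanShift step concrete, here is a minimal plain C++ sketch; it is not OpenCV's `cv::meanShift` / `cv::CamShift` implementation. It assumes a hypothetical weight map `weights[y][x]` standing in for the hue back-projection of the selected region, and the names `Window` and `MeanShift` are hypothetical. Each iteration moves the window so that it is centered on the weighted centroid of the pixels it covers. CamShift additionally rescales the window from the zeroth moment `m00` (and estimates its orientation) after each run; that part is left out here.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Search window: top-left corner and size, in pixels.
struct Window { int x; int y; int width; int height; };

// Mean shift on a weight map indexed as weights[y][x] (in CamShift this would be
// the hue back-projection). Repeatedly recentres the window on the weighted
// centroid of the pixels it covers, until the move rounds to zero pixels or
// maxIterations is reached.
Window MeanShift(const std::vector<std::vector<double>>& weights, Window win, int maxIterations)
{
    assert(!weights.empty() && win.width > 0 && win.height > 0);
    const int rows = static_cast<int>(weights.size());
    const int cols = static_cast<int>(weights[0].size());

    for (int iter = 0; iter < maxIterations; ++iter)
    {
        double m00 = 0.0, m10 = 0.0, m01 = 0.0;  // zeroth and first moments
        for (int y = win.y; y < win.y + win.height; ++y)
            for (int x = win.x; x < win.x + win.width; ++x)
            {
                if (y < 0 || y >= rows || x < 0 || x >= cols)
                    continue;  // parts of the window outside the image contribute nothing
                m00 += weights[y][x];
                m10 += x * weights[y][x];
                m01 += y * weights[y][x];
            }
        if (m00 <= 0.0)
            break;  // no weight under the window: nothing to follow

        // Offset from the window centre to the weighted centroid.
        double dx = m10 / m00 - (win.x + (win.width - 1) / 2.0);
        double dy = m01 / m00 - (win.y + (win.height - 1) / 2.0);
        int stepX = static_cast<int>(std::lround(dx));
        int stepY = static_cast<int>(std::lround(dy));
        if (stepX == 0 && stepY == 0)
            break;  // converged to within half a pixel
        win.x += stepX;
        win.y += stepY;
    }
    return win;
}
```

For example, with a 3x3 blob of weight centred at (15, 12) and a 7x7 starting window that only overlaps the blob's left edge, the window ends up centred on (15, 12) after a few iterations.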

Location of the sample program: ...\opencv\sources\samples\cpp\camshiftdemo.cpp

Rebuilt with Qt, it looks like this:

File: main.cpp

#include "mainwindow.h"

The result of running it is shown below:

[1] Screenshot of color object tracking

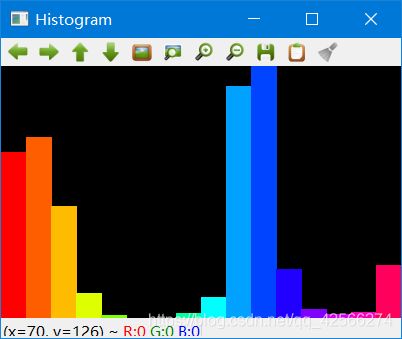

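2.1.2 Optical Flow

Optical flow is currently an important method for analyzing moving images.

Optical flow describes the velocity of patterns in a time-varying image: when an object moves, its corresponding brightness pattern in the image moves as well.

This apparent motion of the image's brightness pattern is what is called optical flow.

Because optical flow captures how the image changes, and therefore carries information about the target's motion, an observer can use it to determine how the target is moving.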
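The idea above can be written as the optical flow constraint equation. A short derivation, assuming a point keeps its brightness $I$ while it moves by $(u\,\delta t, v\,\delta t)$ during a small time step $\delta t$, and that the motion is small enough for a first-order Taylor expansion:

$$
\begin{aligned}
I(x + u\,\delta t,\; y + v\,\delta t,\; t + \delta t) &= I(x, y, t) \\
I(x, y, t) + I_x u\,\delta t + I_y v\,\delta t + I_t\,\delta t &\approx I(x, y, t) \\
I_x u + I_y v + I_t &= 0
\end{aligned}
$$

Here $I_x$, $I_y$, $I_t$ are the partial derivatives of the brightness and $(u, v)$ is the optical flow at the pixel. This is a single equation in two unknowns (the aperture problem), so practical methods add an assumption; Lucas-Kanade, for example, assumes the flow is constant in a small neighborhood of each point.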

示例程序在文件夹中的位置:...\opencv\sources\samples\cpp\tutorial_code\video\optical_flow\optical_flow.cpp

使用Qt重新编译后如下

文件:main.cpp

#include "mainwindow.h"

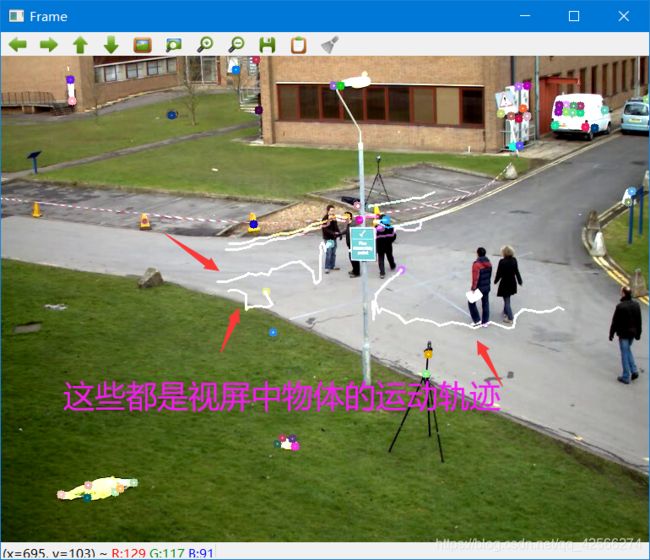

#include 运行结果如下:

【1】光流追踪效果

2.1.3 Point Tracking: lkdemo

Find the lkdemo.cpp file in the ...\opencv\sources\samples\cpp directory (the actual path may differ depending on the OpenCV version and the installation settings).

![image](<image/【OpenCV3编程入门学习笔记】——第2章 启程前的认知准备/image-20210310215410446.png>)

Ported to Qt, the code is as follows:

File: main.cpp

The result of running it is shown below:

[1] Point tracking result
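lkdemo tracks points with OpenCV's pyramidal Lucas-Kanade optical flow (`cv::calcOpticalFlowPyrLK`, the "lk" in the sample's name). The core of Lucas-Kanade at a single pyramid level can be sketched in plain C++ as below. This is not OpenCV's implementation: there is no image pyramid and no iterative refinement, and the names `Image`, `Flow`, and `LucasKanade` are hypothetical. It assumes the flow is constant inside a small window around the point and solves the resulting 2x2 least-squares system built from the spatial gradients Ix, Iy and the temporal difference It.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

using Image = std::vector<std::vector<double>>;  // grayscale image indexed as img[y][x]

struct Flow { double u; double v; bool ok; };

// Single-level Lucas-Kanade estimate of the motion of point (px, py) from prev to next.
// With the flow assumed constant in a (2*half+1) x (2*half+1) window, it solves
//   [sum Ix*Ix  sum Ix*Iy] [u]     [sum Ix*It]
//   [sum Ix*Iy  sum Iy*Iy] [v] = - [sum Iy*It]
Flow LucasKanade(const Image& prev, const Image& next, int px, int py, int half)
{
    assert(!prev.empty() && prev.size() == next.size());
    const int rows = static_cast<int>(prev.size());
    const int cols = static_cast<int>(prev[0].size());
    // Central differences need one extra pixel on every side of the window.
    assert(px - half >= 1 && px + half <= cols - 2);
    assert(py - half >= 1 && py + half <= rows - 2);

    double a = 0.0, b = 0.0, d = 0.0, e = 0.0, f = 0.0;
    for (int y = py - half; y <= py + half; ++y)
        for (int x = px - half; x <= px + half; ++x)
        {
            double ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0;  // spatial gradient in x
            double iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0;  // spatial gradient in y
            double it = next[y][x] - prev[y][x];                  // temporal difference
            a += ix * ix; b += ix * iy; d += iy * iy;
            e += ix * it; f += iy * it;
        }

    double det = a * d - b * b;
    if (std::fabs(det) < 1e-12)
        return {0.0, 0.0, false};  // flat or edge-only window: the aperture problem
    // Cramer's rule on the 2x2 system above.
    return {(-e * d + b * f) / det, (-a * f + b * e) / det, true};
}
```

On a synthetic "bowl" image I = (x-10)^2 + (y-10)^2 shifted by (0.5, -0.25), the estimate at the bowl's centre recovers that shift; on a completely flat image the system is singular and `ok` is false.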

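2.1.4 Face Detection: objectDetection

Face detection is one of the most important applications of image processing and of OpenCV, and the official OpenCV documentation has a dedicated tutorial with code explaining how to implement it.

The sample used here detects faces in the camera's video stream with the objdetect module. It is located under ...\opencv\sources\samples\cpp\tutorial_code\objectDetection.

Note: the program only runs correctly after the two files "haarcascade_eye_tree_eyeglasses.xml" and "haarcascade_frontalface_alt.xml" from ...\opencv\sources\data\haarcascades have been copied into the project folder.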
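The haarcascade_*.xml files describe cascades of Haar-like features (the Viola-Jones approach). Each feature is a difference of rectangle sums, and an integral image makes every rectangle sum cost four lookups regardless of its size. A minimal plain C++ sketch of that building block follows; it is not OpenCV's `cv::CascadeClassifier`, and `IntegralImage`, `RectSum`, and `TwoRectFeature` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Image = std::vector<std::vector<int>>;  // indexed as img[y][x]

// Integral image with an extra leading row and column of zeros:
// ii[y][x] is the sum of img over rows 0..y-1 and columns 0..x-1.
Image IntegralImage(const Image& img)
{
    assert(!img.empty());
    const std::size_t rows = img.size();
    const std::size_t cols = img[0].size();
    Image ii(rows + 1, std::vector<int>(cols + 1, 0));
    for (std::size_t y = 0; y < rows; ++y)
        for (std::size_t x = 0; x < cols; ++x)
            ii[y + 1][x + 1] = img[y][x] + ii[y][x + 1] + ii[y + 1][x] - ii[y][x];
    return ii;
}

// Sum of the w x h rectangle whose top-left pixel is (x, y), using four lookups.
int RectSum(const Image& ii, int x, int y, int w, int h)
{
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x];
}

// Two-rectangle (edge) Haar-like feature: left half minus right half of a w x h region.
int TwoRectFeature(const Image& ii, int x, int y, int w, int h)
{
    assert(w % 2 == 0);
    return RectSum(ii, x, y, w / 2, h) - RectSum(ii, x + w / 2, y, w / 2, h);
}
```

A bright-left / dark-right patch gives a large positive response, while a uniform patch gives zero; a cascade compares many such responses against learned thresholds.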

Ported to Qt, the code is as follows:

File: main.cpp

The result of running it is shown below:

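2.2 The Appeal of Open Source: Compiling the OpenCV Source Code

This section is skipped. For how to compile OpenCV, see the book or the following blog posts:

Qt-OpenCV开发环境搭建(史上最详细) (Setting Up a Qt-OpenCV Development Environment, the Most Detailed Guide Ever)

拜小白教你Qt5.8.0+OpenCV3.2.0配置教程(详细版) (A Qt5.8.0 + OpenCV3.2.0 Configuration Tutorial by 拜小白, Detailed Edition)

The build and installation steps are much the same everywhere, so I won't repeat them here. Most problems that come up during compilation are explained in the two posts above; read them patiently and compare them against the problem on your own machine. Above all, don't rush!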

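2.2.1 Downloading and Installing CMake

Skipped.

2.2.2 Using CMake to Generate the Solution for the OpenCV Source Project

Skipped.

2.2.3 Compiling the OpenCV Source Code

Skipped.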

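2.3 Understanding the "opencv.hpp" Header

In any OpenCV program, using "Go to Definition" on the `#include <opencv2/opencv.hpp>` line shows that the header is defined roughly as follows: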

```cpp
#ifndef OPENCV_ALL_HPP
#define OPENCV_ALL_HPP

// File that defines what modules where included during the build of OpenCV
// These are purely the defines of the correct HAVE_OPENCV_modulename values
#include "opencv2/opencv_modules.hpp"

// Then the list of defines is checked to include the correct headers
// Core library is always included --> without no OpenCV functionality available
#include "opencv2/core.hpp"

// Then the optional modules are checked
#ifdef HAVE_OPENCV_CALIB3D
#include "opencv2/calib3d.hpp"
#endif
#ifdef HAVE_OPENCV_FEATURES2D
#include "opencv2/features2d.hpp"
#endif
#ifdef HAVE_OPENCV_DNN
#include "opencv2/dnn.hpp"
#endif
#ifdef HAVE_OPENCV_FLANN
#include "opencv2/flann.hpp"
#endif
#ifdef HAVE_OPENCV_HIGHGUI
#include "opencv2/highgui.hpp"
#endif
#ifdef HAVE_OPENCV_IMGCODECS
#include "opencv2/imgcodecs.hpp"
#endif
#ifdef HAVE_OPENCV_IMGPROC
#include "opencv2/imgproc.hpp"
#endif
#ifdef HAVE_OPENCV_ML
#include "opencv2/ml.hpp"
#endif
#ifdef HAVE_OPENCV_OBJDETECT
#include "opencv2/objdetect.hpp"
#endif
#ifdef HAVE_OPENCV_PHOTO
#include "opencv2/photo.hpp"
#endif
#ifdef HAVE_OPENCV_SHAPE
#include "opencv2/shape.hpp"
#endif
#ifdef HAVE_OPENCV_STITCHING
#include "opencv2/stitching.hpp"
#endif
#ifdef HAVE_OPENCV_SUPERRES
#include "opencv2/superres.hpp"
#endif
#ifdef HAVE_OPENCV_VIDEO
#include "opencv2/video.hpp"
#endif
#ifdef HAVE_OPENCV_VIDEOIO
#include "opencv2/videoio.hpp"
#endif
#ifdef HAVE_OPENCV_VIDEOSTAB
#include "opencv2/videostab.hpp"
#endif
#ifdef HAVE_OPENCV_VIZ
#include "opencv2/viz.hpp"
#endif

// Finally CUDA specific entries are checked and added
#ifdef HAVE_OPENCV_CUDAARITHM
#include "opencv2/cudaarithm.hpp"
#endif
#ifdef HAVE_OPENCV_CUDABGSEGM
#include "opencv2/cudabgsegm.hpp"
#endif
#ifdef HAVE_OPENCV_CUDACODEC
#include "opencv2/cudacodec.hpp"
#endif
#ifdef HAVE_OPENCV_CUDAFEATURES2D
#include "opencv2/cudafeatures2d.hpp"
#endif
#ifdef HAVE_OPENCV_CUDAFILTERS
#include "opencv2/cudafilters.hpp"
#endif
#ifdef HAVE_OPENCV_CUDAIMGPROC
#include "opencv2/cudaimgproc.hpp"
#endif
#ifdef HAVE_OPENCV_CUDAOBJDETECT
#include "opencv2/cudaobjdetect.hpp"
#endif
#ifdef HAVE_OPENCV_CUDAOPTFLOW
#include "opencv2/cudaoptflow.hpp"
#endif
#ifdef HAVE_OPENCV_CUDASTEREO
#include "opencv2/cudastereo.hpp"
#endif
#ifdef HAVE_OPENCV_CUDAWARPING
#include "opencv2/cudawarping.hpp"
#endif

#endif
```

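As the listing shows, opencv2/opencv.hpp already includes the header of every OpenCV module that was built (each one guarded by its HAVE_OPENCV_<module> macro). In principle, then, OpenCV code only needs this one line:

```cpp
#include <opencv2/opencv.hpp>
```

which keeps the code a little shorter.

As a beginner, however, I still include the header of each module I use by default.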
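The mechanism opencv.hpp relies on is ordinary conditional compilation: opencv_modules.hpp defines one HAVE_OPENCV_<module> macro per built module, and each `#include` sits inside an `#ifdef`. The toy sketch below reproduces that pattern without OpenCV; the macros `HAVE_DEMO_IMGPROC` and `HAVE_DEMO_VIZ` and the function `EnabledModules` are hypothetical.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Stand-in for a generated opencv_modules.hpp: one define per module that was built.
// These macro names are hypothetical; HAVE_DEMO_VIZ is deliberately not defined,
// as if that module had been left out of the build.
#define HAVE_DEMO_IMGPROC

// Mirrors the structure of opencv.hpp: "core" is unconditional, and every other
// module is compiled in only when its HAVE_ macro exists.
std::vector<std::string> EnabledModules()
{
    std::vector<std::string> modules;
    modules.push_back("core");
#ifdef HAVE_DEMO_IMGPROC
    modules.push_back("imgproc");
#endif
#ifdef HAVE_DEMO_VIZ
    modules.push_back("viz");
#endif
    assert(!modules.empty());
    return modules;
}
```

This is also why including opencv.hpp never fails for a module you did not build: its `#include` line is simply skipped by the preprocessor.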

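2.4 Naming Conventions

2.4.1 Naming Conventions Used in the Book's Examples

These conventions are based on the naming rules in Code Complete (2nd Edition), with slight modifications:

| Description | Example |
|---|---|
| Class names use mixed case with a leading capital letter | ClassName |
| Type definitions, including enums and typedefs, use mixed case with a leading capital letter | TypeName |
| Enumerated types use mixed case and, in addition, are written in the plural | EnumeratedTypes |
| Local variables use mixed case with a leading lowercase letter; the name should be independent of the underlying data type and should reflect what the variable represents | localVariable |
| Routine parameters use mixed case with the first letter of every word capitalized; the name should be independent of the underlying data type and should reflect what the variable represents | RoutineParameter |
| Member variables visible to several routines of a class (and only to that class) use the m_ prefix | m_ClassVariable |
| Global variables use the g_ prefix | g_GlobalVariable |
| Named constants are all uppercase | CONSTANT |
| Macros are all uppercase, with words separated by "_" | SCREEN_WIDTH |
| Enumeration members are prefixed with the singular form of their base type, e.g. Color_Red, Color_Blue | Base_EnumeratedType |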
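As an illustration only, here is a small C++ fragment that applies each rule in the table above. All names (`FrameCounter`, `g_FrameCount`, `THRESHOLD`, and so on) are hypothetical; the table does not say how routine names should be written, so `Add`, `Total`, and `IsBright` simply use mixed case with a leading capital.

```cpp
#include <cassert>

#define SCREEN_WIDTH 640                // macro: all uppercase, words separated by "_"
const int THRESHOLD = 128;              // named constant: all uppercase

typedef unsigned char PixelValue;       // type definition: mixed case, leading capital

enum Colors                             // enumerated type: mixed case, plural
{
    Color_Red,                          // members: singular base-type prefix
    Color_Green,
    Color_Blue
};

int g_FrameCount = 0;                   // global variable: g_ prefix

class FrameCounter                      // class name: mixed case, leading capital
{
public:
    void Add(int FramesToAdd)           // routine parameter: every word capitalized
    {
        assert(FramesToAdd >= 0);
        int newTotal = m_Total + FramesToAdd;  // local variable: leading lowercase
        m_Total = newTotal;
        g_FrameCount += FramesToAdd;
    }

    int Total() const { return m_Total; }

private:
    int m_Total = 0;                    // member variable: m_ prefix
};

// True if the pixel value is above THRESHOLD.
bool IsBright(PixelValue Value)
{
    return Value > THRESHOLD;
}
```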

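2.4.2 Hungarian Notation

- Basic principle: variable name = attribute + type + object description
- Variable naming conventions:

| Prefix | Type | Description | Example |
|---|---|---|---|
| ch | char | 8-bit character | chGrade |
| ch | TCHAR | 16-bit character if _UNICODE is defined | chName |
| b | BOOL | Boolean value | bEnable |
| n | int | Integer (size depends on the operating system) | nLength |
| n | UINT | Unsigned value (size depends on the operating system) | nHeight |
| w | WORD | 16-bit unsigned value | wPos |
| l | LONG | 32-bit signed integer | lOffset |
| dw | DWORD | 32-bit unsigned integer | dwRange |
| p | `*` | Pointer | pDoc |
| lp | FAR* | Far pointer | lpszName |
| lpsz | LPSTR | 32-bit string pointer | lpszName |
| lpsz | LPCSTR | 32-bit constant string pointer | lpszName |
| lpsz | LPCTSTR | 32-bit constant TCHAR string pointer (wide characters if _UNICODE is defined) | lpszName |
| h | handle | Handle to a Windows object | hWnd |
| lpfn | callback | Far pointer to a CALLBACK function | lpfnName |

- Key letter combinations:

| Meaning | Letter combination |
|---|---|
| Maximum value | Max |
| Minimum value | Min |
| Initialization | Init |
| Temporary variable | T (or Temp) |
| Source object | Src |
| Destination object | Dst |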
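A short sketch of the same prefixes and key letter combinations in portable C++. Since `<windows.h>` is not assumed, `WORD` and `DWORD` are defined here as stand-ins via `<cstdint>`; the functions `FindMinMax` and `SumBuffer` are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Portable stand-ins for the Windows types in the table (assumption: <windows.h> not used).
typedef std::uint16_t WORD;   // 16-bit unsigned value
typedef std::uint32_t DWORD;  // 32-bit unsigned integer

// Finds the minimum and maximum of an 8-bit source buffer.
// Prefixes: p = pointer, n = int, b = bool; "Src", "Min", "Max", "Temp" are
// key letter combinations from the table.
bool FindMinMax(const std::uint8_t* pSrc, int nLength, int* pnMin, int* pnMax)
{
    bool bValid = pSrc != nullptr && pnMin != nullptr && pnMax != nullptr && nLength > 0;
    if (!bValid)
        return false;

    int nMin = pSrc[0];
    int nMax = pSrc[0];
    for (int nIndex = 1; nIndex < nLength; ++nIndex)
    {
        int nTemp = pSrc[nIndex];  // temporary copy of the current element
        if (nTemp < nMin) nMin = nTemp;
        if (nTemp > nMax) nMax = nTemp;
    }
    *pnMin = nMin;
    *pnMax = nMax;
    return true;
}

// Adds up wCount bytes into a 32-bit total. Prefixes: w = WORD, dw = DWORD.
DWORD SumBuffer(const std::uint8_t* pSrc, WORD wCount)
{
    assert(pSrc != nullptr || wCount == 0);
    DWORD dwTotal = 0;
    for (WORD wPos = 0; wPos < wCount; ++wPos)
        dwTotal += pSrc[wPos];
    return dwTotal;
}
```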

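2.5 Demystifying the argc and argv Parameters

2.5.1 A First Look at argc and argv in main

The "arg" in argc and argv means "argument": argc stands for argument counter and argv for argument vector.

Specifically:

- argc is an integer that counts the command-line arguments passed to main when the program is run
- argv (declared as `char *argv[]`) is an array of pointers to strings; each element points to one argument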

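2.5.2 What argc and argv Mean in Detail

On UNIX and Linux, main is also commonly written as main(int argc, char *argv[], char **env). Strictly speaking, the C and C++ standards only guarantee `int main()` and `int main(int argc, char *argv[])`; the third env parameter is a widely supported extension (Visual C++ accepts it as well).

- argc, of type int
  - An integer
  - Counts the command-line arguments passed to main when the program runs, including the program name itself
  - When the program is started without extra arguments (the default when running from Visual Studio), argc is 1
- argv[], of type char*
  - An array of strings
  - An array of pointers to the argument strings; each element points to one argument
  - The elements mean the following:
    - argv[0] points to the name the program was run with (on Windows, typically its full path)
    - argv[1] points to the first string after the program name on the (DOS) command line
    - argv[2] points to the second string after the program name
    - argv[3] points to the third string after the program name
    - …
    - argv[argc] is NULL
- env, of type char**
  - An array of strings
  - Each element of env[] is a string of the form ENVVAR=value
    - ENVVAR is the name of an environment variable
    - value is the value of ENVVAR
  - This parameter is rarely used in OpenCV programs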
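The layout described above (argv[0] is the program name, argv[argc] is NULL, env[] holds ENVVAR=value strings and ends with a null pointer) can be walked with a few small helpers. A sketch with hypothetical names `CollectArgs`, `CountEnv`, and `SplitEnvEntry`; in a real program you would call them from `main(int argc, char* argv[], char** env)` on platforms that support the third parameter.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Copies argv[0] .. argv[argc - 1] into strings; argv[0] is the program name.
// The C and C++ standards guarantee that argv[argc] is a null pointer, which is checked.
std::vector<std::string> CollectArgs(int argc, char* argv[])
{
    assert(argc >= 0 && argv != nullptr && argv[argc] == nullptr);
    std::vector<std::string> args;
    for (int i = 0; i < argc; ++i)
        args.emplace_back(argv[i]);
    return args;
}

// Counts the entries of a null-terminated env array (main's optional third parameter).
int CountEnv(char** env)
{
    int nCount = 0;
    while (env != nullptr && env[nCount] != nullptr)
        ++nCount;
    return nCount;
}

// Splits one "ENVVAR=value" entry into its name and value.
// An entry without '=' is returned as a name with an empty value.
std::pair<std::string, std::string> SplitEnvEntry(const char* entry)
{
    std::string text(entry);
    std::size_t pos = text.find('=');
    if (pos == std::string::npos)
        return {text, ""};
    return {text.substr(0, pos), text.substr(pos + 1)};
}
```

For instance, running `demo.exe input.jpg 42` would give argc == 3 and CollectArgs returning {"demo.exe", "input.jpg", "42"} (argv[0] may instead be a full path, depending on the platform).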

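2.5.3 Ways to Write main in Visual Studio

The following three forms are all accepted by the Visual Studio compiler:

[1] main returning int and taking parameters

```cpp
int main(int argc, char** argv)
{
    // argc and argv may or may not be used in the body
    // ...
    return 0;  // 0 reports success to the operating system
}
```

[2] main returning int and taking no parameters

```cpp
int main()
{
    // argc and argv are not available in this form
    // ...
    return 0;
}
```

[3] main returning void and taking no parameters

```cpp
void main()  // accepted by Visual C++ as an extension; not valid standard C++
{
    // ...
}
```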

2.5.4 Summary

- int argc is the number of command-line strings (the program name included)
- char* argv[] holds the command-line argument strings

2.6 A Brief Look at the Formatted Output Function printf()

This section is skipped.

2.6.1 Formatted Output: the printf() Function

Sample program: using printf
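A minimal sketch of common printf conversion specifiers (not the book's sample program). It formats with `std::snprintf`, which takes the same format string as printf but writes into a buffer so the result can be inspected; `FormatDemo` and `PrintDemo` are hypothetical names.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Formats values with common printf conversion specifiers:
//   %d    int in decimal
//   %5.2f double, at least 5 characters wide, 2 digits after the point
//   %s    C string
//   %x    unsigned int in lowercase hexadecimal
//   %%    a literal percent sign
std::string FormatDemo(int nCount, double dValue, const char* pszName)
{
    char buffer[128];
    int nWritten = std::snprintf(buffer, sizeof(buffer), "%d|%5.2f|%s|%x|100%%",
                                 nCount, dValue, pszName, static_cast<unsigned>(nCount));
    assert(nWritten >= 0 && nWritten < static_cast<int>(sizeof(buffer)));
    return std::string(buffer);
}

// The same line printed directly to standard output with printf.
void PrintDemo(int nCount, double dValue, const char* pszName)
{
    std::printf("%d|%5.2f|%s|%x|100%%\n", nCount, dValue, pszName, static_cast<unsigned>(nCount));
}
```

`FormatDemo(255, 3.14159, "lena")` yields `"255| 3.14|lena|ff|100%"`: the width of 5 pads "3.14" with one leading space, %x prints 255 as ff, and %% prints a single percent sign.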

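2.7 Automatically Displaying the OpenCV Version in Use

CV_VERSION is a macro that identifies the OpenCV version in use (it expands to a string such as "3.4.1"), so the version can be printed with any of the following:

[1] Using printf

```cpp
printf("\tCurrent OpenCV version: %s\n", CV_VERSION);  // note the comma before CV_VERSION
```

The version of this line I originally wrote had no comma before CV_VERSION, so the version string was pasted onto the format string and %s had no matching argument (undefined behavior). That, rather than the Qt environment, is the likely reason the output did not display properly.

[2] Using cout

```cpp
std::cout << "\tCurrent OpenCV version: " << CV_VERSION << std::endl;
```

[3] Using qDebug() in Qt

```cpp
qDebug() << "\tCurrent OpenCV version:" << CV_VERSION;
```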
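CV_VERSION is a string, so comparing versions at run time means parsing it. A sketch with hypothetical names `Version`, `ParseVersion`, `IsAtLeast`, and `PrintVersion`; the `#ifndef` fallback value "3.4.1" is an assumption so the snippet builds without OpenCV. (OpenCV's version.hpp also defines numeric macros such as CV_VERSION_MAJOR and CV_VERSION_MINOR, which allow the same check at compile time.)

```cpp
#include <cassert>
#include <cstdio>

#ifndef CV_VERSION
#define CV_VERSION "3.4.1"  // assumed fallback when the OpenCV headers are not included
#endif

struct Version { int nMajor; int nMinor; int nPatch; };

// Parses "MAJOR.MINOR.PATCH"; anything after the third number (e.g. "-dev") is ignored.
bool ParseVersion(const char* pszText, Version& out)
{
    assert(pszText != nullptr);
    return std::sscanf(pszText, "%d.%d.%d", &out.nMajor, &out.nMinor, &out.nPatch) == 3;
}

// True if version v is nMajor.nMinor or newer.
bool IsAtLeast(const Version& v, int nMajor, int nMinor)
{
    return v.nMajor > nMajor || (v.nMajor == nMajor && v.nMinor >= nMinor);
}

// Prints the version string; note the comma between the format string and CV_VERSION.
void PrintVersion()
{
    std::printf("\tCurrent OpenCV version: %s\n", CV_VERSION);
}
```

A typical use is a startup check such as `ParseVersion(CV_VERSION, v) && IsAtLeast(v, 3, 0)` before calling APIs that only exist in newer releases.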

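2.8 Chapter Summary

- Looking through several official OpenCV samples gave me an idea of what OpenCV can do
- The book also walks through building the OpenCV source with CMake, but I had already learned this elsewhere, so I only skimmed it
- Took a brief look at the opencv.hpp header
- Code naming conventions, including Hungarian notation
- argc and argv
- Displaying the OpenCV version number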