Implementing Linear Regression in Python

Contents

Ordinary Least Squares

Ridge Regression

Polynomial Regression

Multiple Regression

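This post implements simple linear regression with the sklearn library (ordinary least squares, ridge regression, polynomial regression, and multiple regression); the code follows. Most examples use the diabetes dataset bundled with sklearn, and the polynomial example uses generated data. Because it is a stock dataset, the fit may be poor; the goal is only to learn the method. Corrections are welcome.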

Ordinary Least Squares
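
The script below keeps a single feature with `diabetes_X[:, np.newaxis, 2]`. As a minimal sketch of what that indexing does to array shapes (the toy array `a` is made up for illustration and is not part of the original script):

```python
import numpy as np

# Toy 2D array standing in for a feature matrix: 4 samples, 3 features.
a = np.arange(12).reshape(4, 3)

# np.newaxis inserts a length-1 axis at the position where it appears.
front = a[np.newaxis, :]        # shape (1, 4, 3)
middle = a[:, np.newaxis]       # shape (4, 1, 3)
back = a[:, ..., np.newaxis]    # shape (4, 3, 1)

# Combined with an integer index: pick column 2 but keep the result 2D.
col = a[:, np.newaxis, 2]       # shape (4, 1)

# An equivalent spelling: slice with a length-1 range.
col_alt = a[:, 2:3]             # shape (4, 1)

print(front.shape, middle.shape, back.shape, col.shape)
print(np.array_equal(col, col_alt))
```

scikit-learn estimators expect `X` to be 2D, which is why the column is kept with shape `(n_samples, 1)` rather than flattened to `(n_samples,)`.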

# -*- coding: utf-8 -*-

# @Time : 2022/7/11 14:18

# @Author : 中意灬

# @FileName: 普通最小二乘法.py

# @Software: PyCharm

import matplotlib.pyplot as plt

import numpy as np

from sklearn import datasets, linear_model

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error, r2_score

from sklearn.model_selection import cross_val_score

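# Load the dataset. With return_X_y=True, load_diabetes returns a tuple (data, target): data is a 2D array of shape (n_samples, n_features),

# where each row is a sample and each column is a feature; target is a 1D array of shape (n_samples,)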

diabetes_X, diabetes_y = datasets.load_diabetes(return_X_y=True)

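# Select a single feature

# np.newaxis adds a new axis of length 1: [np.newaxis,:] adds it in front, [:,np.newaxis] adds it in the second position,

# and [:,...,np.newaxis] adds it at the end (the third position for a 2D array)

diabetes_X = diabetes_X[:,np.newaxis, 2]  # keep feature column 2 (the third feature, BMI) as a 2D array of shape (n_samples, 1)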

# Split into training and test sets

X_train,X_test,y_train,y_test=train_test_split(diabetes_X,diabetes_y,test_size=0.3,random_state=0)

# Create a linear regression model object

regr = linear_model.LinearRegression()

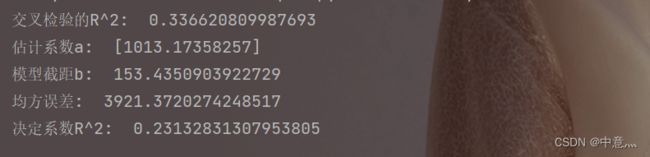

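print('Cross-validated R^2: ',np.mean(cross_val_score(regr,diabetes_X,diabetes_y,cv=3)))

# Fit the model on the training set; fit(X, y, sample_weight=None) accepts an optional array of per-sample weights of shape (n_samples,)

regr.fit(X_train, y_train)

# Predict on the test set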

y_pred = regr.predict(X_test)

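# Estimated coefficient a

print("Estimated coefficient a: ", regr.coef_)

# Model intercept b

print("Model intercept b: ",regr.intercept_)

# Mean squared error, i.e. the mean of (y_test - y_pred)^2

print("Mean squared error: " ,mean_squared_error(y_test, y_pred))

# Coefficient of determination R^2; the closer to 1, the better

print("Coefficient of determination R^2: " ,r2_score(y_test, y_pred))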

# Plot the test points and the fitted line

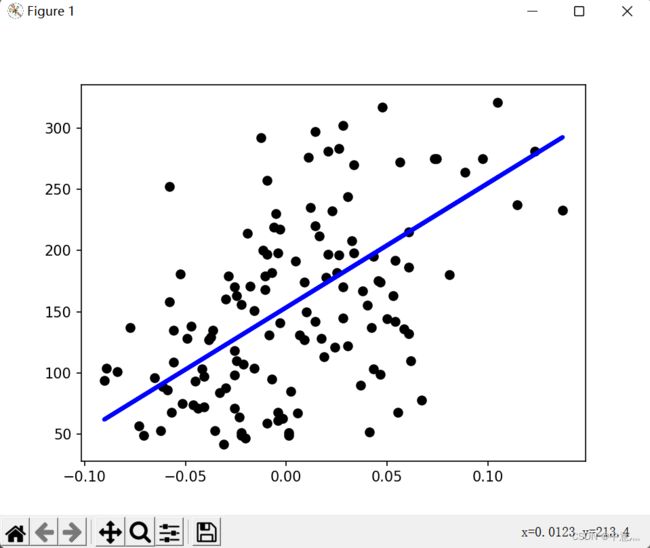

plt.scatter(X_test, y_test, color="black")

plt.plot(X_test, y_pred, color="blue", linewidth=3)

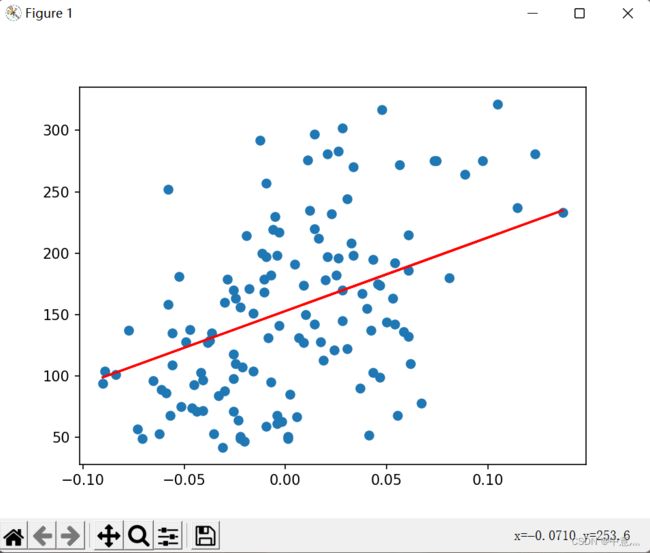

plt.show()

Ridge Regression
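
Ridge regression minimizes the usual squared error plus a penalty `alpha * ||w||^2` on the coefficients, so a larger `alpha` shrinks the coefficients toward zero. A small sketch of that effect on the same single-feature diabetes data; the list of `alpha` values is an arbitrary choice for illustration:

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression, Ridge

X, y = load_diabetes(return_X_y=True)
X = X[:, 2:3]  # feature column 2 (BMI), kept 2D

# Plain least squares corresponds to ridge regression with no penalty.
ols_coef = LinearRegression().fit(X, y).coef_[0]
print("OLS coefficient:", ols_coef)

# Illustrative alpha values (not taken from the original script).
alphas = [0.01, 0.1, 0.5, 1.0, 10.0]
ridge_coefs = [Ridge(alpha=a).fit(X, y).coef_[0] for a in alphas]
for a, c in zip(alphas, ridge_coefs):
    print(f"alpha={a:<5} coefficient={c:.2f}")
```

The diabetes features are pre-scaled so that each column has a sum of squares of 1, which is why even a modest value such as `alpha=0.5` shrinks the coefficient noticeably on this dataset.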

# -*- coding: utf-8 -*-

# @Time : 2022/7/11 17:45

# @Author : 中意灬

# @FileName: 岭回归.py

# @Software: PyCharm

import sklearn.linear_model

import sklearn.datasets

import numpy as np

from sklearn.model_selection import train_test_split,cross_val_score

import matplotlib.pyplot as plt

from sklearn.metrics import mean_squared_error

# Build the ridge regression model

clf=sklearn.linear_model.Ridge(alpha=0.5)

# Load the dataset and keep feature column 2 as a 2D array

X,Y=sklearn.datasets.load_diabetes(return_X_y=True)

X=X[:,np.newaxis,2]

# Split into training and test sets

X_train,X_test,y_train,y_test=train_test_split(X,Y,test_size=0.3,random_state=0)

# Train the model

clf.fit(X_train,y_train)

# Predict on the test set

y_pred=clf.predict(X_test)

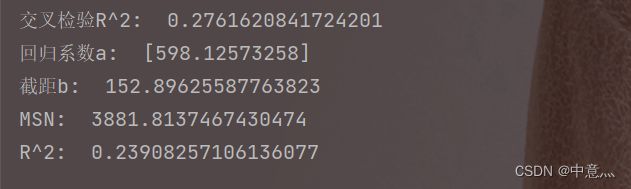

print("Cross-validated R^2: ",np.mean(cross_val_score(clf,X,Y,cv=3)))

# Regression coefficient a

print('Regression coefficient a: ',clf.coef_)

# Intercept b

print('Intercept b: ',clf.intercept_)

# Mean squared error (MSE)

print('MSE: ',mean_squared_error(y_test,y_pred))

# Coefficient of determination R^2

print('R^2: ',clf.score(X_test,y_test))

plt.figure()

plt.scatter(X_test,y_test)

plt.plot(X_test,y_pred,c='r')

plt.show()

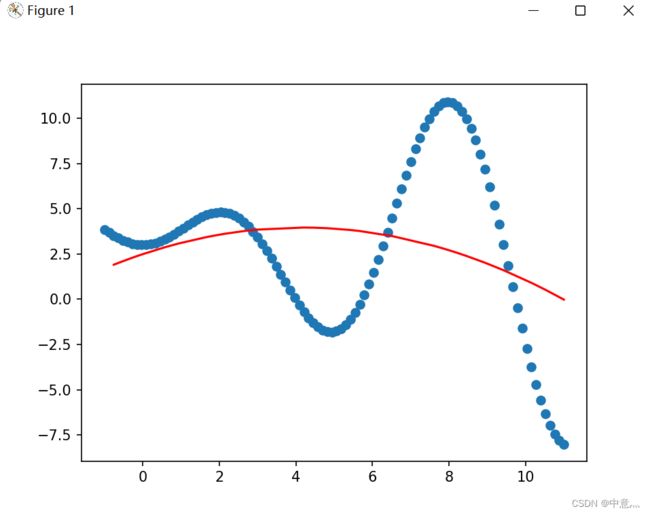
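
The two metrics printed by the scripts above can be reproduced by hand, which makes their definitions concrete: MSE is the mean of the squared residuals, and R^2 is one minus the ratio of the residual sum of squares to the total sum of squares around the mean of `y_test`. A sketch using the same data and split as the ridge script:

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

X, y = load_diabetes(return_X_y=True)
X = X[:, 2:3]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

y_pred = Ridge(alpha=0.5).fit(X_train, y_train).predict(X_test)

# MSE: average squared residual.
residuals = y_test - y_pred
mse_manual = np.mean(residuals ** 2)

# R^2: 1 - (residual sum of squares) / (total sum of squares).
ss_res = np.sum(residuals ** 2)
ss_tot = np.sum((y_test - y_test.mean()) ** 2)
r2_manual = 1.0 - ss_res / ss_tot

print(mse_manual, mean_squared_error(y_test, y_pred))
print(r2_manual, r2_score(y_test, y_pred))
```

Note that R^2 on held-out data can be negative: that happens whenever the model predicts worse than simply using the mean of `y_test`.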

Polynomial Regression
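
`PolynomialFeatures(degree=2)` expands a single input column `x` into the columns `[1, x, x^2]`, and the linear model is then fitted on those expanded columns; that is all "polynomial regression" means here. A short sketch of the expansion, using made-up input values and an exact quadratic as the target:

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Made-up inputs, one feature column.
x = np.array([[1.0], [2.0], [3.0], [4.0]])

# Each row becomes [1, x, x^2].
expanded = PolynomialFeatures(degree=2).fit_transform(x)
print(expanded)

# Target generated from an exact quadratic, so a degree-2 fit can reproduce it.
y = 2.0 + 3.0 * x[:, 0] - 0.5 * x[:, 0] ** 2

model = make_pipeline(PolynomialFeatures(degree=2), LinearRegression())
model.fit(x, y)

# make_pipeline names each step after its lowercased class name.
linreg = model.named_steps["linearregression"]
print(linreg.intercept_, linreg.coef_)
```

A degree-2 curve is only a parabola, so it should not be expected to follow a target like `sin(x)*x` closely over a wide range; raising `degree` gives a more flexible curve at the cost of a higher risk of overfitting.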

# -*- coding: utf-8 -*-

# @Time : 2022/7/11 18:47

# @Author : 中意灬

# @FileName: 多项式回归.py

# @Software: PyCharm

import random

import matplotlib.pyplot as plt

from sklearn.preprocessing import PolynomialFeatures

from sklearn.metrics import mean_squared_error,r2_score

import numpy as np

from sklearn.model_selection import train_test_split,cross_val_score

from sklearn.linear_model import LinearRegression

from sklearn.pipeline import make_pipeline

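# Build the dataset to fit

def f(x):
    # random.randint returns one integer, so this shifts the whole curve by a single random constant (it is not per-point noise)
    return np.sin(x)*x+random.randint(1,10)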

x=np.linspace(-1,11,100)

y=f(x)

x=x[:,np.newaxis]

y=y[:,np.newaxis]

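# Split the dataset

x_train,x_test,y_train,y_test=train_test_split(x,y,test_size=0.3,random_state=0)

# Create the model object

model=make_pipeline(PolynomialFeatures(degree=2),LinearRegression())

# Train the model

model.fit(x_train,y_train)

# Sort the test set by x so the fitted curve plots cleanly; reorder y_test with the same index so the (x, y) pairs stay matched

order=np.argsort(x_test[:,0])

x_test,y_test=x_test[order],y_test[order]

# Predict on the test set

y_pred=model.predict(x_test)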

# 估计系数beta

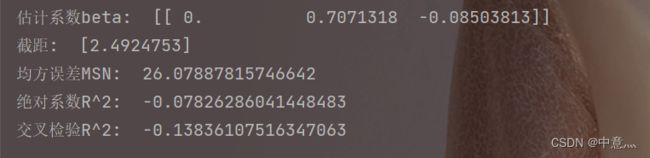

print('估计系数beta: ',model.steps[1][1].coef_)

# 截距a

print("截距: ",model.steps[1][1].intercept_)

# MSN均方误差

print('均方误差MSN: ',mean_squared_error(y_pred,y_test))

# 绝对系数R^2

print('绝对系数R^2: ',model.score(x_test,y_test))

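# Cross-validated R^2 of the whole pipeline (polynomial features + linear model), computed on the training set, which train_test_split has already shuffled

print('Cross-validated R^2: ',np.mean(cross_val_score(model,x_train,y_train,cv=3)))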

# Plot all data points and the fitted curve

plt.figure()

plt.scatter(x,y)

plt.plot(x_test,y_pred)

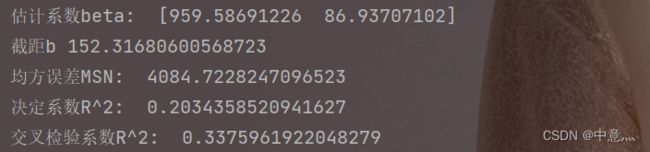

plt.show()

Multiple Regression
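
With two features the model is `y ≈ b + w1*x1 + w2*x2`, and ordinary least squares picks the `b`, `w1`, `w2` that minimize the sum of squared residuals. As a sketch of what `LinearRegression` computes, the same values can be obtained from `np.linalg.lstsq` on a design matrix with an added column of ones (the variable names here are illustrative, not from the original script):

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression

X, y = load_diabetes(return_X_y=True)
X = X[:, [2, 4]]  # two feature columns, as in the script below

# Design matrix: a column of ones for the intercept, then the features.
design = np.column_stack([np.ones(len(X)), X])

# Least-squares solution of design @ [b, w1, w2] ≈ y.
solution, *_ = np.linalg.lstsq(design, y, rcond=None)
intercept_manual, coef_manual = solution[0], solution[1:]

slm = LinearRegression().fit(X, y)
print(intercept_manual, slm.intercept_)
print(coef_manual, slm.coef_)
```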

# -*- coding: utf-8 -*-

# @Time : 2022/7/11 17:26

# @Author : 中意灬

# @FileName: 多元回归.py

# @Software: PyCharm

from sklearn.linear_model import LinearRegression

import sklearn.datasets

from mpl_toolkits.mplot3d import Axes3D

import numpy as np

from sklearn.model_selection import train_test_split,cross_val_score

import matplotlib.pyplot as plt

from sklearn.metrics import mean_squared_error

# Build the linear regression model

slm=LinearRegression()

# Load the dataset and keep feature columns 2 and 4

X,Y=sklearn.datasets.load_diabetes(return_X_y=True)

X=X[:,(2,4)]

# Split into training and test sets

x_train,x_test,y_train,y_test=train_test_split(X,Y,test_size=0.2,random_state=0)

# Train the model

slm.fit(x_train,y_train)

# Predict on the test set

y_pred=slm.predict(x_test)

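# Estimated coefficients beta

print("Estimated coefficients beta: ",slm.coef_)

# Intercept b

print('Intercept b: ',slm.intercept_)

# Mean squared error (MSE)

print('Mean squared error (MSE): ',mean_squared_error(y_test,y_pred))

# Coefficient of determination R^2

print('Coefficient of determination R^2: ',slm.score(x_test,y_test))

# Cross-validated R^2

print('Cross-validated R^2: ',np.mean(cross_val_score(slm,X,Y,cv=3)))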

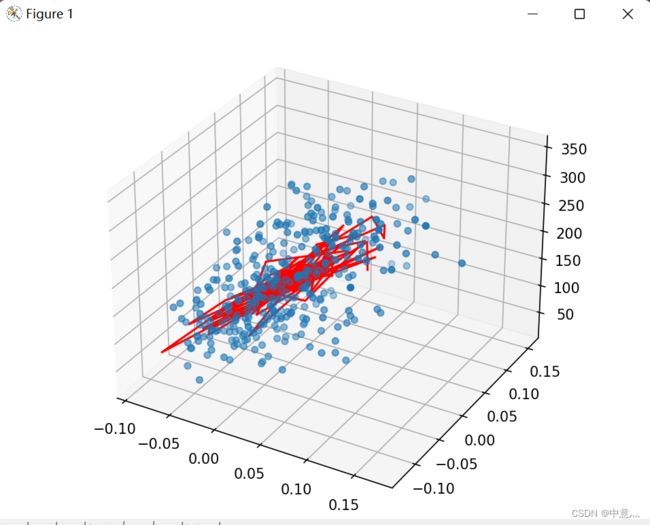

fig=plt.figure()

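# In recent matplotlib versions Axes3D(fig) no longer attaches itself to the figure, so create the 3D axes with add_subplot instead

ax1=fig.add_subplot(projection='3d')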

ax1.scatter3D(x_train[:,0],x_train[:,1],y_train)

ax1.plot3D(x_test[:,0],x_test[:,1],y_pred,c='r')

plt.show()

The fitted model should really be drawn as a plane; the red line here only gives a rough idea, so please picture it as a plane.
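
To actually draw the fitted plane rather than a line through the test predictions, one option is to predict on a regular grid over the two features and pass the result to `plot_surface`. A sketch under the same data and split as above; the grid size of 20 and the helper name `plane_grid` are arbitrary choices for illustration:

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split


def plane_grid(model, X, n=20):
    """Predict on an n x n grid spanning the range of the two feature columns."""
    g0 = np.linspace(X[:, 0].min(), X[:, 0].max(), n)
    g1 = np.linspace(X[:, 1].min(), X[:, 1].max(), n)
    xx, yy = np.meshgrid(g0, g1)
    grid_points = np.column_stack([xx.ravel(), yy.ravel()])
    zz = model.predict(grid_points).reshape(xx.shape)
    return xx, yy, zz


X, Y = load_diabetes(return_X_y=True)
X = X[:, [2, 4]]
x_train, x_test, y_train, y_test = train_test_split(
    X, Y, test_size=0.2, random_state=0
)
slm = LinearRegression().fit(x_train, y_train)

xx, yy, zz = plane_grid(slm, X)

fig = plt.figure()
ax = fig.add_subplot(projection="3d")
ax.scatter(x_test[:, 0], x_test[:, 1], y_test)      # held-out points
ax.plot_surface(xx, yy, zz, color="r", alpha=0.3)   # fitted plane
ax.set_xlabel("feature 2")
ax.set_ylabel("feature 4")
ax.set_zlabel("target")
plt.show()
```

Because the model is linear in both features, the predicted surface is exactly a plane, so a coarse grid is enough.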