数据增强系列(5)PyTorch和Albumentations用于语义分割

这个例子展示了如何使用Albumentations进行二分类语义分割。我们将使用牛津宠物数据集。任务是将输入图像的每个像素分类为宠物或背景。

1.安装所需的库

我们将使用TernausNet,这是一个为语义分割任务提供预训练的UNet模型的库。

pip install ternausnet

pip install albumentations==0.4.6 #下载这个版本的不会报错

2.导入相关的库

from collections import defaultdict

import copy

import random

import os

import shutil

from urllib.request import urlretrieve

import albumentations as A

import albumentations.augmentations.functional as F

from albumentations.pytorch import ToTensorV2

import cv2

import matplotlib.pyplot as plt

import numpy as np

import ternausnet.models

from tqdm import tqdm

import torch

import torch.backends.cudnn as cudnn

import torch.nn as nn

import torch.optim

from torch.utils.data import Dataset, DataLoader

cudnn.benchmark = True

3.定义函数来下载数据集并解压缩它

class TqdmUpTo(tqdm):

def update_to(self, b=1, bsize=1, tsize=None):

if tsize is not None:

self.total = tsize

self.update(b * bsize - self.n)

def download_url(url, filepath):

directory = os.path.dirname(os.path.abspath(filepath))

os.makedirs(directory, exist_ok=True)

if os.path.exists(filepath):

print("Dataset already exists on the disk. Skipping download.")

return

with TqdmUpTo(unit="B", unit_scale=True, unit_divisor=1024, miniters=1, desc=os.path.basename(filepath)) as t:

urlretrieve(url, filename=filepath, reporthook=t.update_to, data=None)

t.total = t.n

def extract_archive(filepath):

extract_dir = os.path.dirname(os.path.abspath(filepath))

shutil.unpack_archive(filepath, extract_dir)

# 为下载的数据集设置根目录

# dataset_directory = os.path.join(os.environ["HOME"], "datasets/oxford-iiit-pet")

dataset_directory = "datasets/oxford-iiit-pet"

# 下载并提取Cats vs. Docs数据集

filepath = os.path.join(dataset_directory, "images.tar.gz")

download_url(

url="https://www.robots.ox.ac.uk/~vgg/data/pets/data/images.tar.gz", filepath=filepath,

)

extract_archive(filepath)

filepath = os.path.join(dataset_directory, "annotations.tar.gz")

download_url(

url="https://www.robots.ox.ac.uk/~vgg/data/pets/data/annotations.tar.gz", filepath=filepath,

)

extract_archive(filepath)

4.将数据集分为训练集和验证集

数据集中的一些文件被破坏了,所以我们将只使用那些OpenCV能够正确加载的图像文件。我们将使用6000张图片进行训练,1374张图片进行验证,10张图片进行测试。

root_directory = os.path.join(dataset_directory)

images_directory = os.path.join(root_directory, "images")

masks_directory = os.path.join(root_directory, "annotations", "trimaps")

images_filenames = list(sorted(os.listdir(images_directory)))

correct_images_filenames = [i for i in images_filenames if cv2.imread(os.path.join(images_directory, i)) is not None]

random.seed(42)

random.shuffle(correct_images_filenames)

train_images_filenames = correct_images_filenames[:6000]

val_images_filenames = correct_images_filenames[6000:-10]

test_images_filenames = images_filenames[-10:]

print(len(train_images_filenames), len(val_images_filenames), len(test_images_filenames)) # 6000 1374 10

5.定义一些与处理函数

5.1定义一个对掩码进行预处理的函数

数据集包含像素级分割。对于每个图像,都有一个带掩码的PNG文件。掩码的大小等于相关图像的大小。掩码图像中的每个像素可以取三个值中的一个:1、2或3。

1表示图像的这个像素属于类别pet, 2 -属于类别背景,3 -属于类别边界。由于本例演示了一个二值分割任务(即为每个像素分配两个类中的一个),

我们将对掩码进行预处理,因此它将只包含两个唯一值:如果像素是背景,则为0.0;如果一个像素是宠物或边框,则为1.0。

def preprocess_mask(mask):

mask = mask.astype(np.float32)

mask[mask == 2.0] = 0.0

mask[(mask == 1.0) | (mask == 3.0)] = 1.0

return mask

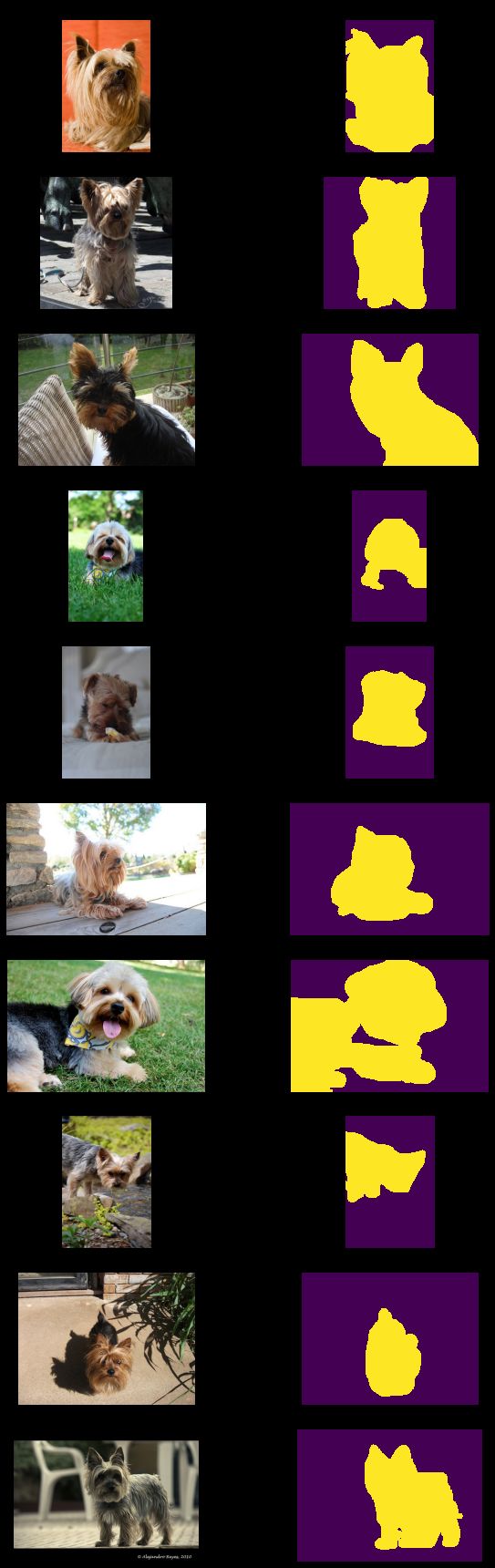

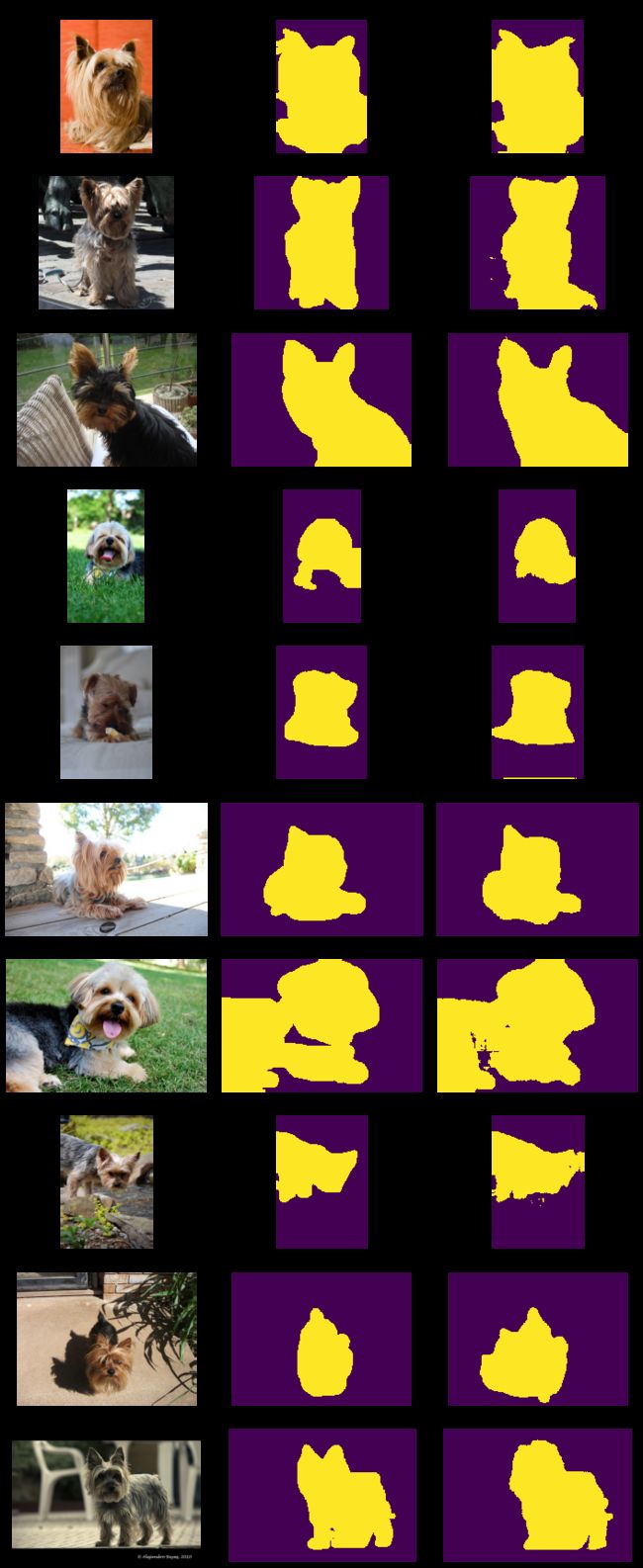

5.2定义一个函数来可视化图像及其标签

让我们定义一个可视化函数,它将获取图像文件名列表、带有图像的目录的路径、带有掩码的目录的路径,以及带有预测掩码的可选参数(稍后我们将使用此参数来显示模型的预测)。

def display_image_grid(images_filenames, images_directory, masks_directory, predicted_masks=None):

cols = 3 if predicted_masks else 2

rows = len(images_filenames)

figure, ax = plt.subplots(nrows=rows, ncols=cols, figsize=(10, 24))

for i, image_filename in enumerate(images_filenames):

image = cv2.imread(os.path.join(images_directory, image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

mask = cv2.imread(os.path.join(masks_directory, image_filename.replace(".jpg", ".png")), cv2.IMREAD_UNCHANGED, )

mask = preprocess_mask(mask)

ax[i, 0].imshow(image)

ax[i, 1].imshow(mask, interpolation="nearest")

ax[i, 0].set_title("Image")

ax[i, 1].set_title("Ground truth mask")

ax[i, 0].set_axis_off()

ax[i, 1].set_axis_off()

if predicted_masks:

predicted_mask = predicted_masks[i]

ax[i, 2].imshow(predicted_mask, interpolation="nearest")

ax[i, 2].set_title("Predicted mask")

ax[i, 2].set_axis_off()

plt.tight_layout()

plt.show()

# 显示

display_image_grid(test_images_filenames, images_directory, masks_directory)

6.用于训练和预测的图像大小

通常,用于训练和推理的图像有不同的高度、宽度和纵横比。这一事实给深度学习渠道带来了两个挑战:

1)PyTorch要求批处理中的所有图像具有相同的高度和宽度。

2)如果一个神经网络不是全卷积的,你必须在训练和推理过程中对所有图像使用相同的宽度和高度。全卷积架构,如UNet,可以处理任何大小的图像。

有三种常见的方法来应对这些挑战:

1)在训练期间调整所有图像和掩码的大小到固定的大小(例如,256x256像素)。当一个模型在推断过程中预测出一个固定大小的掩码后,将掩码调整为原始图像的大小。

这种方法简单,但有一些缺点:预测的掩码比图像小,掩码可能会丢失原始图像的一些上下文和重要细节。

如果数据集中的图像有不同的纵横比,这种方法可能会有问题。例如,假设您正在调整大小为1024x512像素的图像(即长宽比为2:1的图像)到256x256像素(1:1长宽比)。

在这种情况下,这种转换将扭曲图像,也可能影响预测的质量。

2)如果你使用全卷积神经网络,你可以用图像裁剪训练一个模型,但使用原始图像进行推理。这种选择通常提供了质量、训练速度和硬件要求之间的最佳折中。

3)不要改变图像的大小,同时使用源图像进行训练和推断。使用这种方法,您不会丢失任何信息。然而,原始图像可能相当大,所以它们可能需要大量的GPU内存。

而且这种方法需要更多的训练时间才能取得好的效果。

一些架构,比如UNet,要求图像的大小必须能被网络的降采样因子(通常是32)整除,所以你可能还需要用边框填充图像。Albumentations为这种情况提供了一种特殊的转换。

# 下面的示例展示了不同类型预处理的图像。

example_image_filename = correct_images_filenames[0]

image = cv2.imread(os.path.join(images_directory, example_image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

resized_image = F.resize(image, height=256, width=256)

padded_image = F.pad(image, min_height=512, min_width=512)

padded_constant_image = F.pad(image, min_height=512, min_width=512, border_mode=cv2.BORDER_CONSTANT)

cropped_image = F.center_crop(image, crop_height=256, crop_width=256)

figure, ax = plt.subplots(nrows=1, ncols=5, figsize=(18, 10))

ax.ravel()[0].imshow(image)

ax.ravel()[0].set_title("Original image")

ax.ravel()[1].imshow(resized_image)

ax.ravel()[1].set_title("Resized image")

ax.ravel()[2].imshow(cropped_image)

ax.ravel()[2].set_title("Cropped image")

ax.ravel()[3].imshow(padded_image)

ax.ravel()[3].set_title("Image padded with reflection")

ax.ravel()[4].imshow(padded_constant_image)

ax.ravel()[4].set_title("Image padded with constant padding")

plt.tight_layout()

plt.show()

7.在本教程中,我们将探索处理图像大小的所有三种方法。

7.1 方法1:将所有图像和掩码调整为固定大小(例如,256x256像素)。

7.1.1定义一个PyTorch数据集类

class OxfordPetDataset(Dataset):

def __init__(self, images_filenames, images_directory, masks_directory, transform=None):

self.images_filenames = images_filenames

self.images_directory = images_directory

self.masks_directory = masks_directory

self.transform = transform

def __len__(self):

return len(self.images_filenames)

def __getitem__(self, idx):

image_filename = self.images_filenames[idx]

image = cv2.imread(os.path.join(self.images_directory, image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

mask = cv2.imread(

os.path.join(self.masks_directory, image_filename.replace(".jpg", ".png")), cv2.IMREAD_UNCHANGED,

)

mask = preprocess_mask(mask)

if self.transform is not None:

transformed = self.transform(image=image, mask=mask)

image = transformed["image"]

mask = transformed["mask"]

return image, mask

7.1.2接下来,我们为训练和验证数据集创建增强管道。

注意,我们使用A.Resize(256, 256)将输入图像和掩码的大小调整为256x256像素。

train_transform = A.Compose(

[

A.Resize(256, 256),

A.ShiftScaleRotate(shift_limit=0.2, scale_limit=0.2, rotate_limit=30, p=0.5),

A.RGBShift(r_shift_limit=25, g_shift_limit=25, b_shift_limit=25, p=0.5),

A.RandomBrightnessContrast(brightness_limit=0.3, contrast_limit=0.3, p=0.5),

A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),

ToTensorV2(),

]

)

train_dataset = OxfordPetDataset(train_images_filenames, images_directory, masks_directory, transform=train_transform, )

val_transform = A.Compose(

[A.Resize(256, 256), A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)), ToTensorV2()]

)

val_dataset = OxfordPetDataset(val_images_filenames, images_directory, masks_directory, transform=val_transform, )

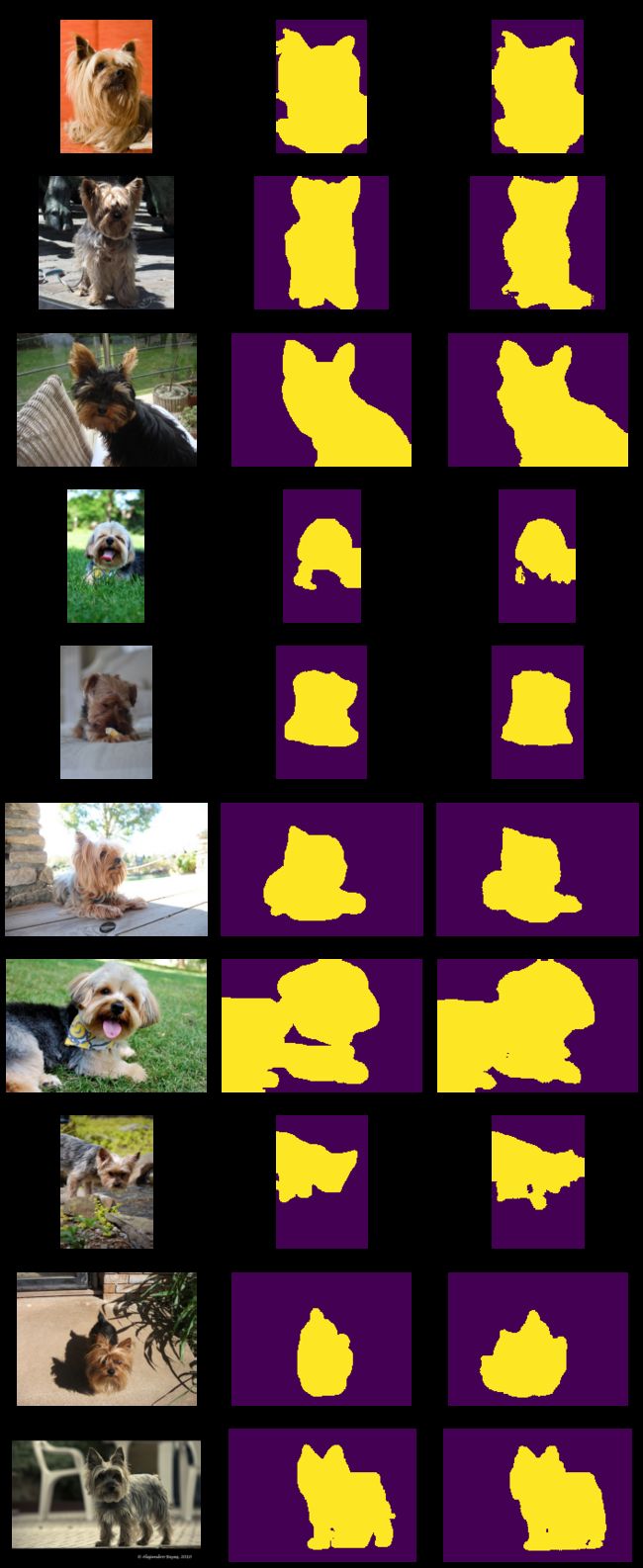

7.1.3让我们定义一个函数

它接受一个数据集,并可视化应用于同一图像和相关掩码的不同增强结果。

def visualize_augmentations(dataset, idx=0, samples=5):

dataset = copy.deepcopy(dataset)

dataset.transform = A.Compose([t for t in dataset.transform if not isinstance(t, (A.Normalize, ToTensorV2))])

figure, ax = plt.subplots(nrows=samples, ncols=2, figsize=(10, 24))

for i in range(samples):

image, mask = dataset[idx]

ax[i, 0].imshow(image)

ax[i, 1].imshow(mask, interpolation="nearest")

ax[i, 0].set_title("Augmented image")

ax[i, 1].set_title("Augmented mask")

ax[i, 0].set_axis_off()

ax[i, 1].set_axis_off()

plt.tight_layout()

plt.show()

random.seed(42)

visualize_augmentations(train_dataset, idx=55)

7.1.4定义训练辅助函数

MetricMonitor帮助跟踪指标,如训练和验证期间的准确性或损失。

class MetricMonitor:

def __init__(self, float_precision=3):

self.float_precision = float_precision

self.reset()

def reset(self):

self.metrics = defaultdict(lambda: {"val": 0, "count": 0, "avg": 0})

def update(self, metric_name, val):

metric = self.metrics[metric_name]

metric["val"] += val

metric["count"] += 1

metric["avg"] = metric["val"] / metric["count"]

def __str__(self):

return " | ".join(

[

"{metric_name}: {avg:.{float_precision}f}".format(

metric_name=metric_name, avg=metric["avg"], float_precision=self.float_precision

)

for (metric_name, metric) in self.metrics.items()

]

)

7.1.5 定义用于训练和验证的函数

def train(train_loader, model, criterion, optimizer, epoch, params):

metric_monitor = MetricMonitor()

model.train()

stream = tqdm(train_loader)

for i, (images, target) in enumerate(stream, start=1):

images = images.to(params["device"], non_blocking=True)

target = target.to(params["device"], non_blocking=True)

output = model(images).squeeze(1)

loss = criterion(output, target)

metric_monitor.update("Loss", loss.item())

optimizer.zero_grad()

loss.backward()

optimizer.step()

stream.set_description(

"Epoch: {epoch}. Train. {metric_monitor}".format(epoch=epoch, metric_monitor=metric_monitor)

)

def validate(val_loader, model, criterion, epoch, params):

metric_monitor = MetricMonitor()

model.eval()

stream = tqdm(val_loader)

with torch.no_grad():

for i, (images, target) in enumerate(stream, start=1):

images = images.to(params["device"], non_blocking=True)

target = target.to(params["device"], non_blocking=True)

output = model(images).squeeze(1)

loss = criterion(output, target)

metric_monitor.update("Loss", loss.item())

stream.set_description(

"Epoch: {epoch}. Validation. {metric_monitor}".format(epoch=epoch, metric_monitor=metric_monitor)

)

def create_model(params):

model = getattr(ternausnet.models, params["model"])(pretrained=True)

model = model.to(params["device"])

return model

def train_and_validate(model, train_dataset, val_dataset, params):

train_loader = DataLoader(

train_dataset,

batch_size=params["batch_size"],

shuffle=True,

num_workers=params["num_workers"],

pin_memory=True,

)

val_loader = DataLoader(

val_dataset,

batch_size=params["batch_size"],

shuffle=False,

num_workers=params["num_workers"],

pin_memory=True,

)

criterion = nn.BCEWithLogitsLoss().to(params["device"])

optimizer = torch.optim.Adam(model.parameters(), lr=params["lr"])

for epoch in range(1, params["epochs"] + 1):

train(train_loader, model, criterion, optimizer, epoch, params)

validate(val_loader, model, criterion, epoch, params)

return model

def predict(model, params, test_dataset, batch_size):

test_loader = DataLoader(

test_dataset, batch_size=batch_size, shuffle=False, num_workers=params["num_workers"], pin_memory=True,

)

model.eval()

predictions = []

with torch.no_grad():

for images, (original_heights, original_widths) in test_loader:

images = images.to(params["device"], non_blocking=True)

output = model(images)

probabilities = torch.sigmoid(output.squeeze(1))

predicted_masks = (probabilities >= 0.5).float() * 1

predicted_masks = predicted_masks.cpu().numpy()

for predicted_mask, original_height, original_width in zip(

predicted_masks, original_heights.numpy(), original_widths.numpy()

):

predictions.append((predicted_mask, original_height, original_width))

return predictions

7.1.6定义训练参数并训练模型

在这里,我们定义了一些训练参数,如模型结构、学习率、批量大小、周期等。

params = {

"model": "UNet11",

"device": "cuda",

"lr": 0.001,

"batch_size": 16,

"num_workers": 4,

"epochs": 10,

}

model = create_model(params)

model = train_and_validate(model, train_dataset, val_dataset, params)

7.1.7预测图像的标签并将这些预测可视化

现在我们有了一个训练过的模型,所以让我们试着预测一些图像的掩码。注意,__getitem__方法不仅返回图像,而且还返回图像的原始高度和宽度。

我们将使用这些值将预测的掩码大小从256x256像素调整为原始图像的大小。

class OxfordPetInferenceDataset(Dataset):

def __init__(self, images_filenames, images_directory, transform=None):

self.images_filenames = images_filenames

self.images_directory = images_directory

self.transform = transform

def __len__(self):

return len(self.images_filenames)

def __getitem__(self, idx):

image_filename = self.images_filenames[idx]

image = cv2.imread(os.path.join(self.images_directory, image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

original_size = tuple(image.shape[:2])

if self.transform is not None:

transformed = self.transform(image=image)

image = transformed["image"]

return image, original_size

test_transform = A.Compose(

[A.Resize(256, 256), A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)), ToTensorV2()]

)

test_dataset = OxfordPetInferenceDataset(test_images_filenames, images_directory, transform=test_transform,)

predictions = predict(model, params, test_dataset, batch_size=16)

# 接下来,我们将把预测掩码的大小调整为256x256像素到原始图像的大小。

predicted_masks = []

for predicted_256x256_mask, original_height, original_width in predictions:

full_sized_mask = F.resize(

predicted_256x256_mask, height=original_height, width=original_width, interpolation=cv2.INTER_NEAREST

)

predicted_masks.append(full_sized_mask)

display_image_grid(test_images_filenames, images_directory, masks_directory, predicted_masks=predicted_masks)

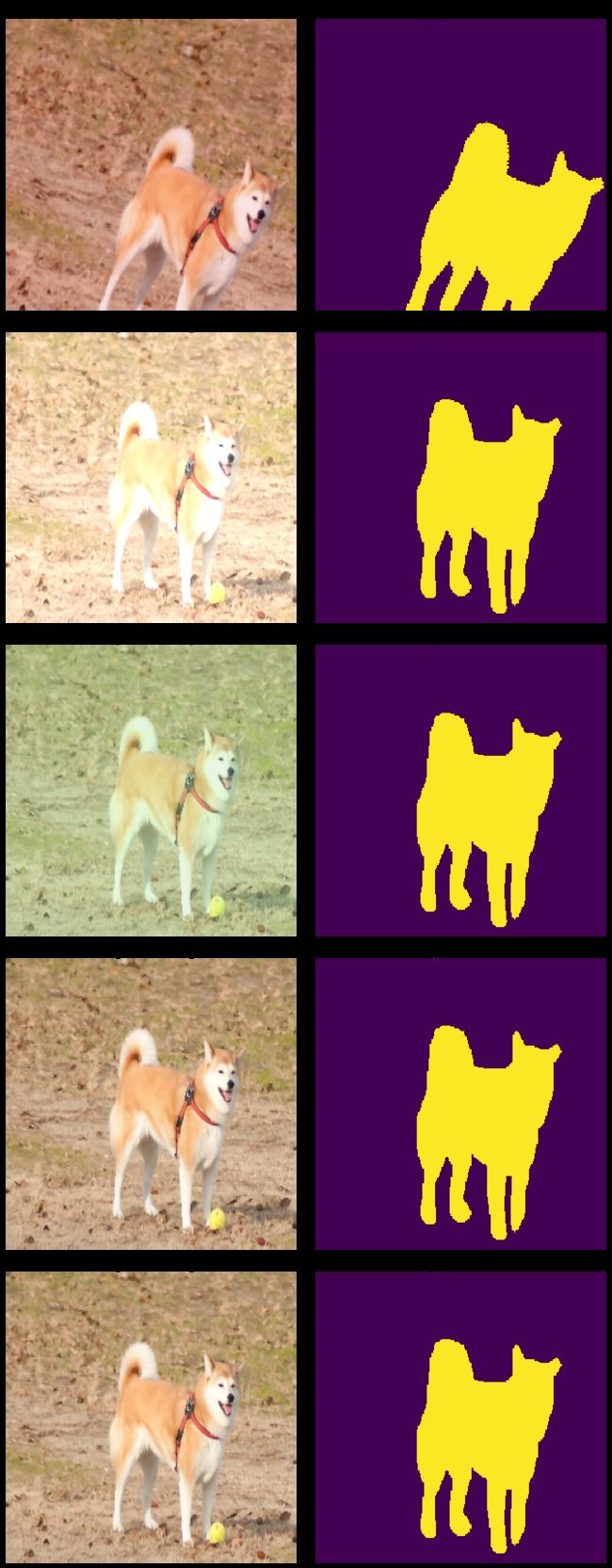

7.2 方法2:训练裁剪图像,预测全尺寸图像的掩码

我们将重用前面示例中的大部分代码。

在数据集中,相同图像的高度和宽度小于裁剪的大小(256x256像素),所以我们首先应用A.PadIfNeeded(min_height=256, min_width=256),

如果它的高度或宽度小于256像素,将填充图像。

train_transform = A.Compose(

[

A.PadIfNeeded(min_height=256, min_width=256),

A.RandomCrop(256, 256),

A.ShiftScaleRotate(shift_limit=0.05, scale_limit=0.05, rotate_limit=15, p=0.5),

A.RGBShift(r_shift_limit=15, g_shift_limit=15, b_shift_limit=15, p=0.5),

A.RandomBrightnessContrast(p=0.5),

A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),

ToTensorV2(),

]

)

train_dataset = OxfordPetDataset(train_images_filenames, images_directory, masks_directory, transform=train_transform,)

val_transform = A.Compose(

[

A.PadIfNeeded(min_height=256, min_width=256),

A.CenterCrop(256, 256),

A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),

ToTensorV2(),

]

)

val_dataset = OxfordPetDataset(val_images_filenames, images_directory, masks_directory, transform=val_transform,)

params = {

"model": "UNet11",

"device": "cuda",

"lr": 0.001,

"batch_size": 16,

"num_workers": 4,

"epochs": 10,

}

model = create_model(params)

model = train_and_validate(model, train_dataset, val_dataset, params)

测试数据集中的所有图像的最大边长为500像素。由于PyTorch要求批处理中的所有图像必须具有相同的尺寸,而且UNet要求图像的大小可以被16整除,

我们将应用A.padifneeded (min_height=512, min_width=512, border_mode=cv2.BORDER_CONSTANT)。

该增强将填充图像边界为0,因此图像大小将成为512x512像素。

test_transform = A.Compose(

[

A.PadIfNeeded(min_height=512, min_width=512, border_mode=cv2.BORDER_CONSTANT),

A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),

ToTensorV2(),

]

)

test_dataset = OxfordPetInferenceDataset(test_images_filenames, images_directory, transform=test_transform,)

predictions = predict(model, params, test_dataset, batch_size=16)

由于我们收到了填充图像的掩码,我们需要从填充的掩码中裁剪一部分原始图像大小。

predicted_masks = []

for predicted_padded_mask, original_height, original_width in predictions:

cropped_mask = F.center_crop(predicted_padded_mask, original_height, original_width)

predicted_masks.append(cropped_mask)

display_image_grid(test_images_filenames, images_directory, masks_directory, predicted_masks=predicted_masks)

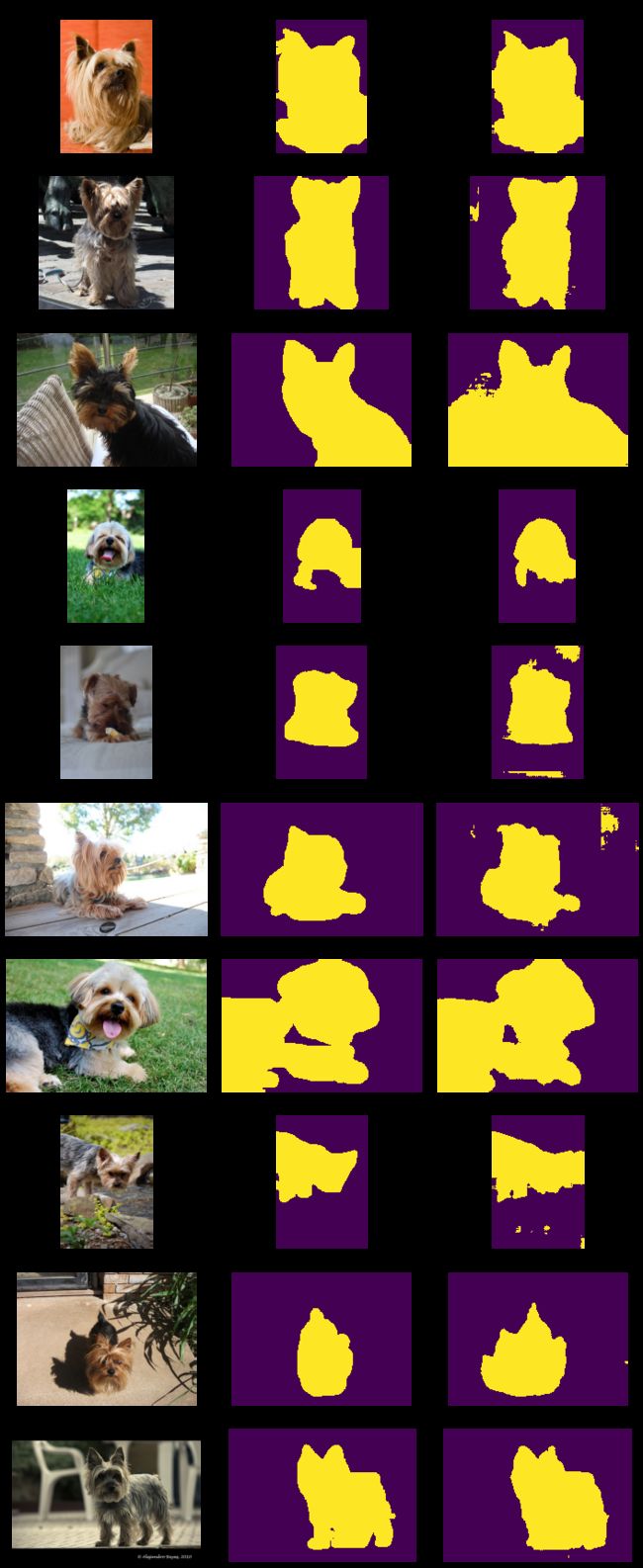

7.3 方法3:使用原始图像。

我们也可以使用原始图像,而不用调整大小或裁剪它们。然而,这个数据集有一个问题。数据集中的一些图像非常大,即使批大小=1,它们也需要超过11Gb的GPU内存来进行训练。因此,作为权衡,我们首先应用A.longestmaxsize(512)增强,以确保图像的最大尺寸不超过512像素。

这种增强将只影响7384个数据集中的137个图像。下一步将使用A.PadIfNeeded(最小高度=512,最小宽度=512),以确保所有图像都为512x512像素。

train_transform = A.Compose(

[

A.LongestMaxSize(512),

A.PadIfNeeded(min_height=512, min_width=512),

A.ShiftScaleRotate(shift_limit=0.05, scale_limit=0.05, rotate_limit=15, p=0.5),

A.RGBShift(r_shift_limit=15, g_shift_limit=15, b_shift_limit=15, p=0.5),

A.RandomBrightnessContrast(p=0.5),

A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),

ToTensorV2(),

]

)

train_dataset = OxfordPetDataset(train_images_filenames, images_directory, masks_directory, transform=train_transform,)

val_transform = A.Compose(

[

A.LongestMaxSize(512),

A.PadIfNeeded(min_height=512, min_width=512, border_mode=cv2.BORDER_CONSTANT),

A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),

ToTensorV2(),

]

)

val_dataset = OxfordPetDataset(val_images_filenames, images_directory, masks_directory, transform=val_transform,)

params = {

"model": "UNet11",

"device": "cuda",

"lr": 0.001,

"batch_size": 8,

"num_workers": 4,

"epochs": 10,

}

model = create_model(params)

model = train_and_validate(model, train_dataset, val_dataset, params)

接下来,我们将使用与方法2中相同的代码来进行预测。

test_transform = A.Compose(

[

A.PadIfNeeded(min_height=512, min_width=512, border_mode=cv2.BORDER_CONSTANT),

A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),

ToTensorV2(),

]

)

test_dataset = OxfordPetInferenceDataset(test_images_filenames, images_directory, transform=test_transform,)

predictions = predict(model, params, test_dataset, batch_size=16)

predicted_masks = []

for predicted_padded_mask, original_height, original_width in predictions:

cropped_mask = F.center_crop(predicted_padded_mask, original_height, original_width)

predicted_masks.append(cropped_mask)

display_image_grid(test_images_filenames, images_directory, masks_directory, predicted_masks=predicted_masks)

参考目录

https://github.com/albumentations-team/albumentations_examples