k8s-9: Kubernetes scheduling, affinity, taints, and access control

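Contents

- 1. Introduction
- 2. Kubernetes scheduling methods
- 2.1 nodeName
- 2.2 nodeSelector
- 3. Affinity and anti-affinity
- 3.1 Node affinity
- 3.2 Pod affinity and anti-affinity
- 4. Taints and tolerations
- 5. Kubernetes access control
- 5.1 ServiceAccount
- 5.2 UserAccount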

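1. Introduction

- The scheduler uses the Kubernetes watch mechanism to discover newly created Pods that have not yet been assigned to a Node. It then places each unscheduled Pod it finds on a suitable Node.

- kube-scheduler is the default scheduler of a Kubernetes cluster and is part of the control plane. It is designed so that, if you have special scheduling needs, you can write your own scheduling component and use it in place of kube-scheduler.

- Factors taken into account in a scheduling decision include individual and collective resource requests, hardware/software/policy constraints, affinity and anti-affinity requirements, data locality, interference between workloads, and so on.

- Official documentation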
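The point about swapping in your own scheduler can be made concrete: a Pod selects its scheduler through the `spec.schedulerName` field. The manifest below is only a sketch; the scheduler name `my-scheduler` and the Pod name are hypothetical, and it assumes such a scheduler is already running in the cluster. If no scheduler with that name exists, the Pod simply stays Pending.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: custom-scheduled          # hypothetical Pod name
spec:
  # Hypothetical scheduler name; leave this field out to use the default kube-scheduler.
  schedulerName: my-scheduler
  containers:
  - name: nginx
    image: nginx
```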

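When a Pod is created, the first step is for the scheduler to choose a node for it.

2. Kubernetes scheduling methods

Official documentation

2.1 nodeName

- nodeName is the simplest form of node selection constraint, but it is generally not recommended. If nodeName is set in the PodSpec, it takes precedence over every other node selection method.

- Limitations of selecting a node with nodeName:

– If the named node does not exist, the Pod will not run

– If the named node does not have enough resources to accommodate the Pod, the Pod fails

– Node names in cloud environments are not always predictable or stable

Example: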

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: myapp:v1
      nodeName: server4   # bind the Pod directly to the node named server4

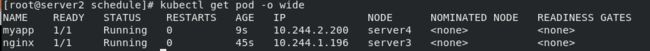

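At this point there is one Pod on each of server3 and server4.

The problem with naming the node by hand is that if no node with that name exists, the Pod cannot be placed.

2.2 nodeSelector

- nodeSelector is the simplest recommended form of node selection constraint

- Add a label to the chosen node:

kubectl label nodes server2 disktype=ssd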

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:
        env: test
    spec:
      containers:
      - name: nginx
        image: nginx
      nodeSelector:
        disktype: ssd   # only nodes labelled disktype=ssd are eligible

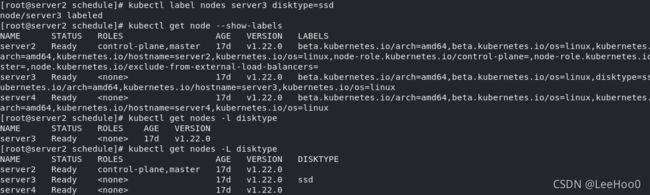

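Label the server3 node: kubectl label nodes server3 disktype=ssd

Show all labels on every node: kubectl get node --show-labels

List only the nodes that carry a given label key: kubectl get nodes -l disktype

List all nodes with the value of a given label key shown as an extra column: kubectl get node -L disktype

Remove the label from a node: kubectl label node server3 disktype-

Apply the manifest; the Pod now runs on server3.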

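3. Affinity and anti-affinity

Affinity and anti-affinity:

- nodeSelector offers a very simple way to constrain Pods to nodes that carry particular labels. The affinity/anti-affinity feature greatly expands the kinds of constraint you can express

- A rule can be declared "soft" (a preference) rather than a hard requirement, so that if the scheduler cannot satisfy it, the Pod is still scheduled

- You can constrain a Pod against the labels of Pods already running on a node, rather than the labels of the node itself, to control which Pods may or may not be placed together

3.1 Node affinity

- requiredDuringSchedulingIgnoredDuringExecution: must be satisfied

- preferredDuringSchedulingIgnoredDuringExecution: satisfied if possible

- IgnoredDuringExecution means that if a Node's labels change while the Pod is running, so that the affinity rule no longer holds, the Pod keeps running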

    apiVersion: v1
    kind: Pod
    metadata:
      name: node-affinity
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: disktype
                operator: In
                values:
                - sata

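Add the label to server4: kubectl label nodes server4 disktype=sata

When scheduling from a manifest, the hard requirements must always be satisfied first; the soft preferences are considered only after that.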
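To show how a hard and a soft rule combine, here is a sketch of a Pod that declares both. It reuses the `disktype` label from this section; the Pod name and the choice of values are assumptions made for illustration. The required rule narrows the candidate nodes, and the preferred rule only ranks the nodes that survive it.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: node-affinity-mixed      # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    nodeAffinity:
      # Hard rule: only nodes with disktype=ssd or disktype=sata are candidates.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
            - sata
      # Soft rule: among those candidates, prefer nodes with disktype=ssd.
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1                # allowed range is 1-100; higher means stronger preference
        preference:
          matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
```

If no node matches the required rule, the Pod stays Pending; if no node matches the preferred rule, it is still scheduled on any node that passes the required one.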

3.2 Pod affinity and anti-affinity

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v1
      affinity:
        podAffinity:
          # myapp must land in the same topology domain (here: the same host)
          # as a Pod labelled app=nginx
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: kubernetes.io/hostname

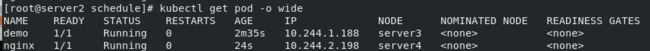

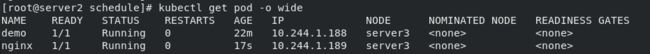

myapp is placed on the same node as nginx.

For anti-affinity, only one keyword needs to change (podAffinity becomes podAntiAffinity):

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v1
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: kubernetes.io/hostname
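The manifests above use hard rules. A common soft variant is to ask the scheduler to spread the replicas of one workload across nodes where it can. The following is a sketch only: the Deployment name `web` and its `app: web` label are hypothetical and do not come from this article.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                      # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:
        podAntiAffinity:
          # Soft rule: try to avoid nodes that already run a Pod labelled app=web.
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - web
              topologyKey: kubernetes.io/hostname
```

Because the rule is only a preference, all three replicas are still scheduled when the cluster has fewer than three usable nodes; with the required form, the surplus replicas would stay Pending.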

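4. Taints and tolerations

- Node affinity is a property defined on a Pod that draws the Pod to particular Nodes. Taints do the opposite: they let a Node refuse to run Pods, or even evict them

- A taint is a property of a Node. Once a Node is tainted, the scheduler no longer places Pods on it. If a Pod declares a toleration that matches the Node's taint, the scheduler may ignore that taint and schedule the Pod there

server2 is the control-plane (master) node and by default carries a taint that keeps it out of ordinary scheduling.

Add a taint: kubectl taint nodes node1 key=value:NoSchedule

Query: kubectl describe nodes server2 | grep Taints

Remove: kubectl taint nodes node1 key:NoSchedule-

The possible taint effects:

- NoSchedule: Pods are not scheduled onto the tainted node

- PreferNoSchedule: the soft version of NoSchedule

- NoExecute: once the taint takes effect, Pods already running on the node that have no matching toleration are evicted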

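The key, value, and effect defined in a toleration must match the taint set on the node:

- If operator is Exists, the value is omitted

- If operator is Equal, the toleration's value must equal the taint's value

- If operator is not specified, it defaults to Equal

- Two special cases

– An empty key combined with operator Exists matches every key and value, and so tolerates all taints

– An empty effect matches all effects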
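The matching rules above can be sketched as follows. The first Pod tolerates the `key=value:NoSchedule` taint from the kubectl taint command shown earlier, once with Equal and once with Exists; the second Pod uses the empty-key special case. Pod names are hypothetical, and the two Pods are alternatives for illustration rather than something you would deploy together.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: toleration-demo          # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  # Equal (the default operator): key, value, and effect must all match the taint.
  - key: "key"
    operator: "Equal"
    value: "value"
    effect: "NoSchedule"
  # Exists: no value is given; with effect left out as well,
  # this tolerates every taint whose key is "key", whatever its effect.
  - key: "key"
    operator: "Exists"
---
apiVersion: v1
kind: Pod
metadata:
  name: tolerate-everything      # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  # Empty key plus Exists: matches all keys, values, and effects.
  - operator: "Exists"
```

A toleration only permits scheduling onto a tainted node; it does not attract the Pod there. To pin a Pod to such a node you still need nodeSelector or node affinity.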

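Mark a node unschedulable so that Pods created afterwards are not placed on it: kubectl cordon server3

Evict all Pods from the node so they are recreated elsewhere, and keep new Pods from being scheduled onto it. The evicted Pods need another node they can be scheduled to, and DaemonSet-managed Pods have to be ignored: kubectl drain server3 --ignore-daemonsets

Delete the node: kubectl delete node server3

Rejoin the cluster (this relies on the kubelet's self-registration): systemctl restart kubelet

The normal maintenance sequence (for example, shutting a node down to upgrade hardware) is to drain first, then delete.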

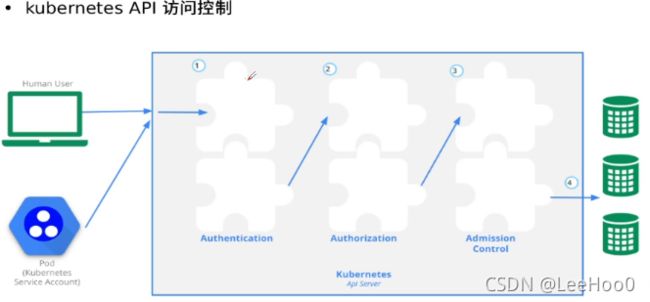

5. Kubernetes access control

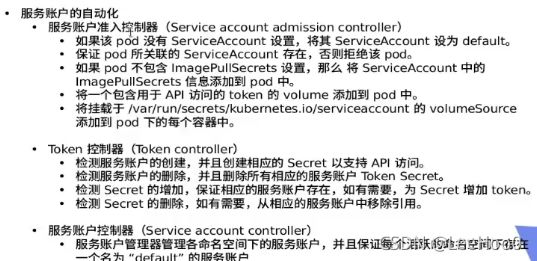

5.1 ServiceAccount

Mainly used inside the cluster. Requests pass through authentication, authorization, and (admission control).

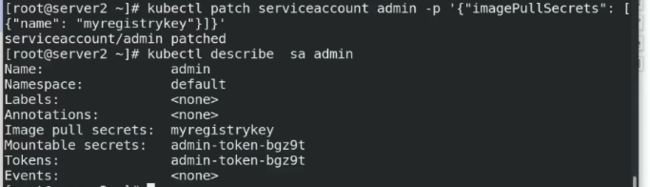

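Bind the image pull secret you created to a ServiceAccount (sa); Pods that run under that ServiceAccount, as set in their manifest, can then pull from the private registry: kubectl patch serviceaccounts default -p '{"imagePullSecrets": [{"name": "myregistrykey"}]}'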
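The same binding can also be written declaratively. This is a sketch under assumptions: the ServiceAccount name `registry-puller`, the Pod name, and the image path are hypothetical, while `myregistrykey` is the secret name from the command above and must already exist in the namespace.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: registry-puller          # hypothetical name
  namespace: default
imagePullSecrets:
- name: myregistrykey            # pull secret from the command above
---
apiVersion: v1
kind: Pod
metadata:
  name: private-image-demo       # hypothetical name
spec:
  # Pods running under this ServiceAccount get its imagePullSecrets added automatically.
  serviceAccountName: registry-puller
  containers:
  - name: app
    image: registry.example.com/project/app:v1   # hypothetical private image
```

Patching the `default` ServiceAccount, as the command does, affects every Pod in the namespace that does not name a ServiceAccount; a dedicated ServiceAccount limits the secret to the Pods that ask for it.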

5.2 UserAccount

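Mainly used for logging in to the cluster.

Go to the directory where Kubernetes stores its certificates: /etc/kubernetes/pki

Generate a private key for the test user: openssl genrsa -out test.key 2048

Create a certificate signing request from test.key: openssl req -new -key test.key -out test.csr -subj "/CN=test"

Sign the CSR with the cluster CA to produce a certificate valid for 365 days: openssl x509 -req -in test.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out test.crt -days 365

The result is test.crt.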

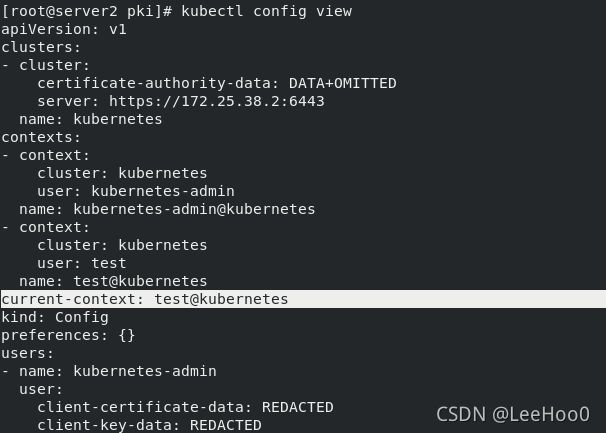

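View the configuration: kubectl config view

For a user named test to access the cluster, its certificate and key must be registered as credentials: kubectl config set-credentials test --client-certificate=/etc/kubernetes/pki/test.crt --client-key=/etc/kubernetes/pki/test.key --embed-certs=true

Besides the default account, the newly created test user now appears.

Set a context for the test user. A context is simply a binding of a user to a cluster, used for switching between them: kubectl config set-context test@kubernetes --cluster=kubernetes --user=test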
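For orientation, the kubeconfig that results from the set-credentials and set-context commands has roughly the shape below. This is an abridged sketch, not output copied from a real cluster: the server address and every quoted placeholder are assumptions, and `kubectl config view` hides the embedded certificate and key data.

```yaml
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    server: https://192.0.2.10:6443                       # placeholder address
    certificate-authority-data: "<base64 CA certificate>" # placeholder
users:
- name: kubernetes-admin
  user:
    client-certificate-data: "<base64 certificate>"       # placeholder
    client-key-data: "<base64 key>"                       # placeholder
- name: test                                              # added by set-credentials
  user:
    # --embed-certs=true stores the file contents here instead of file paths
    client-certificate-data: "<base64 of test.crt>"       # placeholder
    client-key-data: "<base64 of test.key>"               # placeholder
contexts:
- name: kubernetes-admin@kubernetes
  context:
    cluster: kubernetes
    user: kubernetes-admin
- name: test@kubernetes                                   # added by set-context
  context:
    cluster: kubernetes
    user: test
current-context: kubernetes-admin@kubernetes              # changes only when use-context is run
```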

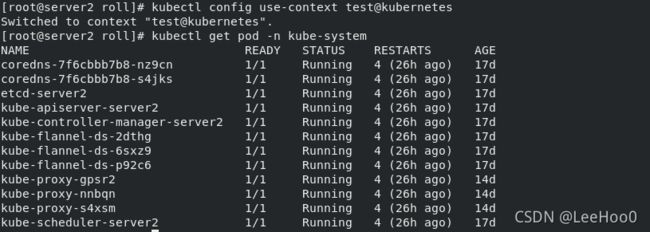

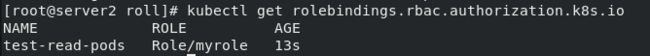

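Switch to the test user: kubectl config use-context test@kubernetes

Authentication for the test user is now in place, but the user has not been authorized yet and so cannot use any cluster resources.

Switch back to the administrator first in order to grant it permissions: kubectl config use-context kubernetes-admin@kubernetes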

RBAC

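The default authorization mode is RBAC (Role-Based Access Control):

- It lets administrators configure authorization policy dynamically through the Kubernetes API; RBAC associates users with permissions by way of roles

- RBAC only grants; there are no deny rules, so you only need to define what a user is allowed to do

- RBAC has four object kinds: Role, ClusterRole, RoleBinding, ClusterRoleBinding

- RBAC binds users to roles, and a role is a set of access permissions on resources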
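Since RBAC has no deny rules, a restricted user is expressed by granting less. As a sketch, a read-only role for Pods lists only the read verbs; the role name `pod-reader` is hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader               # hypothetical name
rules:
- apiGroups: [""]                # "" is the core API group, where Pods live
  resources: ["pods"]
  # Read-only: create, update, patch, and delete are not listed, so they are not allowed.
  verbs: ["get", "list", "watch"]
```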

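The three basic concepts of RBAC:

- Subject: the entity that permissions are granted to. Kubernetes has three kinds of subject: User, Group, and ServiceAccount

- Role: in practice a set of rules that defines a group of permitted operations on Kubernetes API objects

- RoleBinding: defines the relationship between a subject and a role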
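To make the three subject kinds concrete, the sketch below binds one Role to a User, a Group, and a ServiceAccount at once. It assumes a Role named `myrole` exists in the default namespace, as in this article's examples; the binding name and the group name `dev-team` are hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: subjects-demo            # hypothetical name
  namespace: default
subjects:
- kind: User
  name: test
  apiGroup: rbac.authorization.k8s.io
- kind: Group
  name: dev-team                 # hypothetical group name
  apiGroup: rbac.authorization.k8s.io
- kind: ServiceAccount
  name: default
  namespace: default             # ServiceAccounts are namespaced, so a namespace is given
roleRef:
  kind: Role
  name: myrole
  apiGroup: rbac.authorization.k8s.io
```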

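Role and ClusterRole

- A Role is a collection of permissions. It can only grant access to resources within a single namespace, so it is a local rule

- A ClusterRole is similar to a Role but can be used across the whole cluster, so it is a global rule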

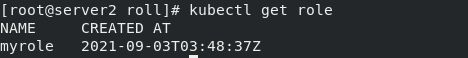

Create a role:

vim roles.yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: default
      name: myrole
    rules:
    - apiGroups: [""]   # "" is the core API group
      resources: ["pods"]
      verbs: ["get","watch","list","create","update","patch","delete"]

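Two kinds of binding: RoleBinding and ClusterRoleBinding

- A RoleBinding grants the permissions defined in a Role to users or groups. It holds a list of subjects (users, groups, service accounts) and a reference to the Role

- A RoleBinding grants permissions within one namespace; a ClusterRoleBinding applies cluster-wide

A RoleBinding must specify a namespace (default here), the subjects (a user account, here the newly created test user), and a roleRef that names the role being bound. The complete manifest for creating the role and binding it follows: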

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: default
      name: myrole
    rules:
    - apiGroups: [""]
      resources: ["pods"]
      verbs: ["get","watch","list","create","update","patch","delete"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: test-read-pods
      namespace: default
    subjects:
    - kind: User
      name: test
      apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: Role
      name: myrole
      apiGroup: rbac.authorization.k8s.io

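Now switch to the test user and try it out. The user can work with cluster resources, but only in the default namespace; it has no permission to view Pods in kube-system.

Switch back to admin and go one step further: add a ClusterRole that allows operations on Pods and Deployments, and bind it to the test user with a RoleBinding: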

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: default
      name: myrole
    rules:
    - apiGroups: [""]
      resources: ["pods"]
      verbs: ["get","watch","list","create","update","patch","delete"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: test-read-pods
      namespace: default
    subjects:
    - kind: User
      name: test
      apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: Role
      name: myrole
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: myclusterrole
    rules:
    - apiGroups: [""]
      resources: ["pods"]
      verbs: ["get","watch","list","create","update"]
    - apiGroups: ["extensions","apps"]
      resources: ["deployments"]
      verbs: ["get","watch","list","create","update","patch","delete"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: rolebind-myclusterrole
      namespace: default
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: myclusterrole
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: User
      name: test

The test user can now work with Deployments, but still cannot view other namespaces.

Finally, add a ClusterRoleBinding:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: clusterrolebinding-myclusterrole
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: myclusterrole
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: User
      name: test

Additional notes: