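Hands-On: Integrating the Seata Distributed Transaction Framework into Spring Cloud Microservices (Solving Distributed Transactions with a Single Annotation)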

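Contents

- 1. Configuring and Starting Seata Server

- 1.1 Environment Setup

- 1) Use Nacos as the config center and registry

- 2) Sync the Seata Server configuration to Nacos

- 3) Start Seata Server

- 2. Integrating Seata into Spring Cloud Microservices

- 2.1 Add the dependencies

- 2.2 Add the undo_log table to each microservice's database

- 2.3 Each microservice proxies its data source with Seata's DataSourceProxy

- 2.4 Add the Seata configuration

- 1) Copy registry.conf into the resources directory, with Nacos as both registry and config center

- 2) Specify the transaction group in yml (maps one-to-one to the service.vgroup_mapping entry in the config center)

- 3) Add the `@GlobalTransactional` annotation to the transaction initiator

- 4) Test whether the distributed transaction works

- 3. Caveats in Practice (Pitfalls)

- 3.1 Distributed transactions failing because of service degradation

- 3.2 Making Seata highly available: what if a server goes down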

一、配置启动Seata Server

1.1 环境准备

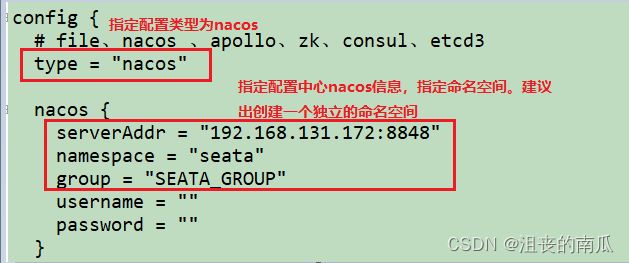

1)指定nacos作为配置中心和注册中心

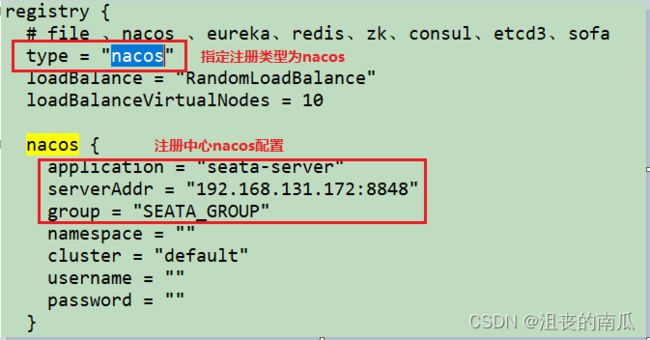

修改registry.conf文件:

需要复制registry.conf文件到每个微服务的resources目录下:

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

application = "seata-server"

serverAddr = "192.168.131.172:8848"

namespace = ""

cluster = "default"

group = "SEATA_GROUP"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3、springCloudConfig

type = "nacos"

nacos {

serverAddr = "192.168.131.172:8848"

namespace = "seata"

group = "SEATA_GROUP"

}

}

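Note that the registry.nacos.application property must be set; otherwise an error may occur.

It is recommended to create a dedicated namespace for Seata's configuration, because Seata has a large number of config entries. Putting them all under public gets messy quickly.

Note: when the client's registry.conf uses Nacos, its group must match the group configured on the Seata Server. The default group is "DEFAULT_GROUP".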

2)同步seata server的配置到nacos

脚本文件下载地址:https://github.com/seata/seata/tree/1.4.0/script

获取/seata/script/config-center/config.txt,修改配置信息:

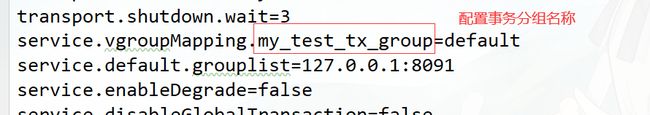

配置事务分组, 要与客户端配置的事务分组一致。(客户端properties配置:spring.cloud.alibaba.seata.tx‐service‐group=my_test_tx_group)

config.txt文件:

transport.type=TCP

transport.server=NIO

transport.heartbeat=true

transport.enableClientBatchSendRequest=false

transport.threadFactory.bossThreadPrefix=NettyBoss

transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker

transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler

transport.threadFactory.shareBossWorker=false

transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector

transport.threadFactory.clientSelectorThreadSize=1

transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread

transport.threadFactory.bossThreadSize=1

transport.threadFactory.workerThreadSize=default

transport.shutdown.wait=3

service.vgroupMapping.my_test_tx_group=default

service.default.grouplist=127.0.0.1:8091

service.enableDegrade=false

service.disableGlobalTransaction=false

client.rm.asyncCommitBufferLimit=10000

client.rm.lock.retryInterval=10

client.rm.lock.retryTimes=30

client.rm.lock.retryPolicyBranchRollbackOnConflict=true

client.rm.reportRetryCount=5

client.rm.tableMetaCheckEnable=false

client.rm.sqlParserType=druid

client.rm.reportSuccessEnable=false

client.rm.sagaBranchRegisterEnable=false

client.tm.commitRetryCount=5

client.tm.rollbackRetryCount=5

client.tm.defaultGlobalTransactionTimeout=60000

client.tm.degradeCheck=false

client.tm.degradeCheckAllowTimes=10

client.tm.degradeCheckPeriod=2000

store.mode=db

store.file.dir=file_store/data

store.file.maxBranchSessionSize=16384

store.file.maxGlobalSessionSize=512

store.file.fileWriteBufferCacheSize=16384

store.file.flushDiskMode=async

store.file.sessionReloadReadSize=100

store.db.datasource=druid

store.db.dbType=mysql

store.db.driverClassName=com.mysql.jdbc.Driver

store.db.url=jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true

store.db.user=root

store.db.password=mysqladmin

store.db.minConn=5

store.db.maxConn=30

store.db.globalTable=global_table

store.db.branchTable=branch_table

store.db.queryLimit=100

store.db.lockTable=lock_table

store.db.maxWait=5000

store.redis.host=127.0.0.1

store.redis.port=6379

store.redis.maxConn=10

store.redis.minConn=1

store.redis.database=0

store.redis.password=null

store.redis.queryLimit=100

server.recovery.committingRetryPeriod=1000

server.recovery.asynCommittingRetryPeriod=1000

server.recovery.rollbackingRetryPeriod=1000

server.recovery.timeoutRetryPeriod=1000

server.maxCommitRetryTimeout=-1

server.maxRollbackRetryTimeout=-1

server.rollbackRetryTimeoutUnlockEnable=false

client.undo.dataValidation=true

client.undo.logSerialization=jackson

client.undo.onlyCareUpdateColumns=true

server.undo.logSaveDays=7

server.undo.logDeletePeriod=86400000

client.undo.logTable=undo_log

client.log.exceptionRate=100

transport.serialization=seata

transport.compressor=none

metrics.enabled=false

metrics.registryType=compact

metrics.exporterList=prometheus

metrics.exporterPrometheusPort=9898

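After editing, the configuration still has to be uploaded to Nacos.

Seata's script directory contains a script that publishes the configuration to Nacos, so we only need to run: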

sh ${SEATAPATH}/script/config-center/nacos/nacos-config.sh -h 192.168.131.172 -p 8848 -g SEATA_GROUP -t seata

Parameters:

-h: host, default localhost

-p: port, default 8848

-g: config group, default 'SEATA_GROUP'

-t: tenant, which corresponds to the Nacos namespace ID field; default is the empty string ''
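To make the effect of these flags more concrete, here is a minimal Java sketch of what the script does as far as I understand it: it walks through config.txt and publishes every key=value line as its own Nacos config entry (dataId = key, content = value) under the given group and namespace. The class `NacosConfigPublishSketch` and its method are hypothetical names for illustration. The sketch only builds the request URLs for the Nacos v1 open API path `/nacos/v1/cs/configs` and never sends anything.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of Seata or Nacos.
class NacosConfigPublishSketch {

    /** Builds one publish URL per "key=value" line; blank lines and lines without '=' are skipped. */
    static List<String> buildPublishUrls(List<String> configLines, String host, int port,
                                         String group, String tenant) {
        List<String> urls = new ArrayList<>();
        for (String line : configLines) {
            String trimmed = line.trim();
            // Split on the FIRST '=' only, because values such as JDBC URLs contain '=' themselves.
            int eq = trimmed.indexOf('=');
            if (trimmed.isEmpty() || eq <= 0) {
                continue;
            }
            String dataId = trimmed.substring(0, eq);
            String content = trimmed.substring(eq + 1);
            urls.add("http://" + host + ":" + port + "/nacos/v1/cs/configs"
                    + "?dataId=" + encode(dataId)
                    + "&group=" + encode(group)      // -g
                    + "&tenant=" + encode(tenant)    // -t (namespace ID)
                    + "&content=" + encode(content));
        }
        return urls;
    }

    private static String encode(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        List<String> lines = List.of(
                "service.vgroupMapping.my_test_tx_group=default",
                "store.mode=db");
        buildPublishUrls(lines, "192.168.131.172", 8848, "SEATA_GROUP", "seata")
                .forEach(System.out::println);
    }
}
```

This is why, after the script has run, the Nacos console shows one entry per line of config.txt rather than a single file.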

On Windows I ran:

D:\Tools-install\Seata\seata-v1.4.0\seata-v1.4.0\script\config-center\nacos>sh D:\Tools-install\Seata\seata-v1.4.0\seata-v1.4.0\script\config-center\nacos\nacos-config.sh -h 192.168.131.172 -p 8848 -g SEATA_GROUP -t seata

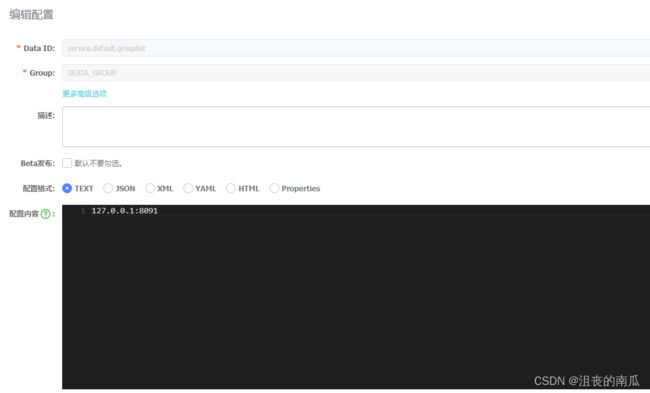

Then open the seata namespace in the Nacos config center.

The configuration has been synchronized to Nacos:

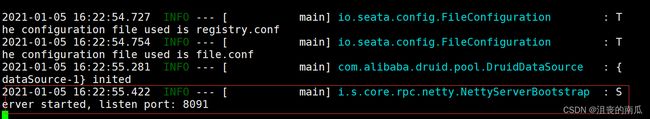

3) Start Seata Server

bin/seata-server.sh

// Start as a cluster (-p: listening port, -n: server node ID)

bin/seata-server.sh -p 8091 -n 1

bin/seata-server.sh -p 8092 -n 2

bin/seata-server.sh -p 8093 -n 3

2. Integrating Seata into Spring Cloud Microservices

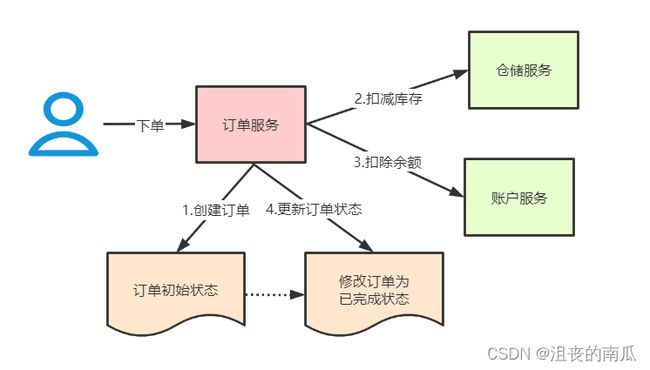

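Business scenario:

A user places an order. The business logic spans three microservices:

- Storage service: deducts stock for a given commodity.

- Order service: creates an order based on the purchase request.

- Account service: deducts the balance from the user's account.

Environment:

Seata: v1.4.0

Spring Cloud and Spring Cloud Alibaba: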

<spring-cloud.version>Greenwich.SR3</spring-cloud.version>

<spring-cloud-alibaba.version>2.1.1.RELEASE</spring-cloud-alibaba.version>

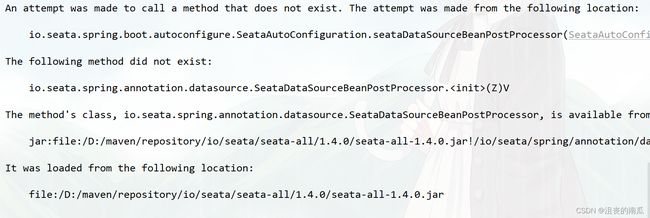

注意版本选择问题:

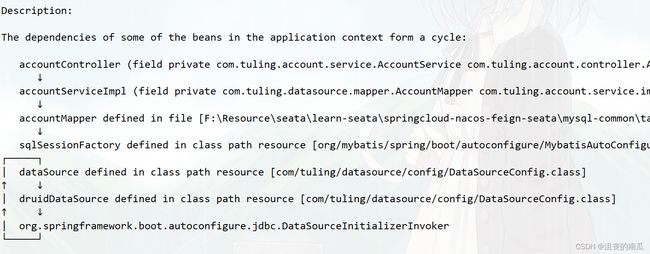

spring cloud alibaba 2.1.2 及其以上版本使用seata1.4.0会出现如下异常 (支持seata 1.3.0)

2.1 导入依赖

<!-- seata-->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-seata</artifactId>

<exclusions>

<exclusion>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

<version>1.4.0</version>

</dependency>

<!-- Nacos registry -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-openfeign</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>1.1.21</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<scope>runtime</scope>

<version>8.0.16</version>

</dependency>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>2.1.1</version>

</dependency>

2.2 Add the undo_log table to each microservice's database

CREATE TABLE `undo_log` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(20) NOT NULL,

`xid` varchar(100) NOT NULL,

`context` varchar(128) NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(11) NOT NULL,

`log_created` datetime NOT NULL,

`log_modified` datetime NOT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

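2.3 Each microservice proxies its data source with Seata's DataSourceProxy

Note: why configure a proxy data source at all?

Because Seata has to capture a before image before the real SQL runs and an after image once it has run, and build the undo log from the two. The proxy wraps the target data source so that this bookkeeping happens around the execution of the actual business SQL.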
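The idea of before image, after image and undo log can be pictured with a small in-memory stand-in. This is only a sketch under simplifying assumptions (a map plays the role of a table, one row per key) and is not how Seata implements it internally. `UndoLogSketch` and `UndoRecord` are hypothetical names. The check in `rollback` mirrors the intent of the `client.undo.dataValidation=true` setting in config.txt: undo is applied only if the row still matches the after image.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical illustration of before/after images; not Seata code.
class UndoLogSketch {

    /** One undo entry: the row value before and after a single business update. */
    static final class UndoRecord {
        final String key;
        final Integer beforeImage;
        final Integer afterImage;

        UndoRecord(String key, Integer beforeImage, Integer afterImage) {
            this.key = key;
            this.beforeImage = beforeImage;
            this.afterImage = afterImage;
        }
    }

    private final Map<String, Integer> table = new HashMap<>();

    UndoLogSketch(Map<String, Integer> initialRows) {
        table.putAll(initialRows);
    }

    /** Plays the proxy's role: snapshot, run the business update, snapshot again. */
    UndoRecord updateWithUndo(String key, int newValue) {
        Integer before = table.get(key);   // before image
        table.put(key, newValue);          // the "real" business SQL
        Integer after = table.get(key);    // after image
        return new UndoRecord(key, before, after);
    }

    /** Restores the before image, but only if the row still equals the after image. */
    boolean rollback(UndoRecord undo) {
        if (!Objects.equals(table.get(undo.key), undo.afterImage)) {
            return false; // someone else changed the row; automatic undo would be unsafe
        }
        if (undo.beforeImage == null) {
            table.remove(undo.key);
        } else {
            table.put(undo.key, undo.beforeImage);
        }
        return true;
    }

    Integer read(String key) {
        return table.get(key);
    }

    public static void main(String[] args) {
        UndoLogSketch db = new UndoLogSketch(Map.of("stock:1001", 10));
        UndoRecord undo = db.updateWithUndo("stock:1001", 8);
        System.out.println("after update:   " + db.read("stock:1001"));
        System.out.println("rolled back:    " + db.rollback(undo));
        System.out.println("after rollback: " + db.read("stock:1001"));
    }
}
```

Without a proxy in front of the data source there is no place to take these two snapshots, which is why every participating service needs one.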

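import javax.sql.DataSource;

import com.alibaba.druid.pool.DruidDataSource;
import io.seata.rm.datasource.DataSourceProxy;
import org.apache.ibatis.session.SqlSessionFactory;
import org.mybatis.spring.SqlSessionFactoryBean;
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;
import org.springframework.core.io.support.PathMatchingResourcePatternResolver;
import org.springframework.core.io.support.ResourcePatternResolver;

/**
 * Every microservice that takes part in a distributed transaction must proxy
 * its own data source with Seata's DataSourceProxy.
 */
@Configuration
@MapperScan("com.jihu.datasource.mapper")
public class MybatisConfig {

    /**
     * Builds the data source from the configuration file. Mind the prefix: Druid is used here,
     * so adjust it to your own setup. A plain data source uses the prefix "spring.datasource".
     */
    @Bean
    @ConfigurationProperties(prefix = "spring.datasource.druid")
    public DataSource druidDataSource() {
        return new DruidDataSource();
    }

    /**
     * Builds the proxy that replaces the original data source.
     *
     * @param druidDataSource the real data source to wrap
     */
    @Primary
    @Bean("dataSource")
    public DataSourceProxy dataSourceProxy(DataSource druidDataSource) {
        return new DataSourceProxy(druidDataSource);
    }

    @Bean(name = "sqlSessionFactory")
    public SqlSessionFactory sqlSessionFactoryBean(DataSourceProxy dataSourceProxy) throws Exception {
        SqlSessionFactoryBean factoryBean = new SqlSessionFactoryBean();
        // Use the proxied data source
        factoryBean.setDataSource(dataSourceProxy);
        ResourcePatternResolver resolver = new PathMatchingResourcePatternResolver();
        factoryBean.setMapperLocations(resolver.getResources("classpath*:mybatis/**/*-mapper.xml"));

        org.apache.ibatis.session.Configuration configuration = new org.apache.ibatis.session.Configuration();
        // Use JDBC getGeneratedKeys to retrieve auto-increment primary keys
        configuration.setUseGeneratedKeys(true);
        // Use column labels (aliases) instead of column names
        configuration.setUseColumnLabel(true);
        // Map underscore column names to camelCase properties, e.g. user_id ---> userId
        configuration.setMapUnderscoreToCamelCase(true);
        factoryBean.setConfiguration(configuration);

        return factoryBean.getObject();
    }
}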

Note: DataSourceAutoConfiguration must be excluded on the application class; otherwise a circular dependency occurs.

@SpringBootApplication(scanBasePackages = "com.jihu",exclude = DataSourceAutoConfiguration.class)

@EnableFeignClients

public class OrderServiceApplication {

public static void main(String[] args) {

SpringApplication.run(OrderServiceApplication.class, args);

}

}


2.4 添加seata的配置

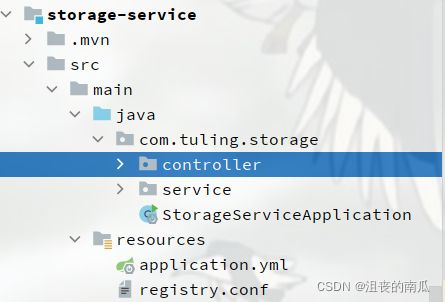

1)将registry.conf文件拷贝到resources目录下,指定注册中心和配置中心都是nacos

注意:需要指定group = "SEATA_GROUP",因为Seata Server端指定了group = "SEATA_GROUP" ,必须保证一致

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

serverAddr = "192.168.131.172:8848"

namespace = ""

cluster = "default"

group = "SEATA_GROUP"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3、springCloudConfig

type = "nacos"

nacos {

serverAddr = "192.168.131.172:8848"

namespace = ""

group = "SEATA_GROUP"

}

}

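In the org.springframework.cloud.alibaba.seata.GlobalTransactionAutoConfiguration class of org.springframework.cloud:spring-cloud-starter-alibaba-seata, ${spring.application.name}-seata-service-group is used by default as the service name registered with Seata Server. If it does not match the service.vgroup_mapping configuration, you get a no available server to connect error.

The group name can also be overridden with spring.cloud.alibaba.seata.tx-service-group, but it must stay consistent with the configuration in file.conf.

If you run into this error, the cause is almost always a configuration mismatch:

1. Check that the running Seata server and the Seata dependency in the project's Maven build are the same version.

2. Check that tx-service-group, nacos.cluster and nacos.group match the Seata Server configuration.

Tracing the source: Seata's discovery module implements RegistryService#lookup, which fetches the server list.

NacosRegistryServiceImpl#lookup

-> String clusterName = getServiceGroup(key); // resolve the Seata Server cluster name

-> List<Instance> firstAllInstances = getNamingInstance().getAllInstances(getServiceName(), getServiceGroup(), clusters)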
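The first step of the lookup chain above (transaction group to cluster name) can be sketched with plain `java.util.Properties`. This is a simplified illustration, not Seata's actual code: `TxGroupLookupSketch` and both of its methods are hypothetical, and the key format `service.vgroupMapping.<group>` is taken from the config.txt shown earlier. It shows why the default group name derived from the application name finds nothing unless a matching mapping exists.

```java
import java.util.Properties;

// Hypothetical illustration of the group -> cluster resolution; not Seata code.
class TxGroupLookupSketch {

    /** Default group name described above: ${spring.application.name}-seata-service-group. */
    static String defaultTxServiceGroup(String applicationName) {
        return applicationName + "-seata-service-group";
    }

    /** Resolves the cluster name for a transaction group, or fails if no mapping exists. */
    static String resolveCluster(Properties config, String txServiceGroup) {
        String key = "service.vgroupMapping." + txServiceGroup;
        String cluster = config.getProperty(key);
        if (cluster == null || cluster.isEmpty()) {
            // In a real client this situation ends up as "no available server to connect".
            throw new IllegalStateException("no mapping found for " + key);
        }
        return cluster;
    }

    public static void main(String[] args) {
        Properties config = new Properties();
        config.setProperty("service.vgroupMapping.my_test_tx_group", "default");

        // Explicitly configured group: a mapping exists.
        System.out.println(resolveCluster(config, "my_test_tx_group"));

        // Default group derived from the application name: no mapping was published for it.
        try {
            resolveCluster(config, defaultTxServiceGroup("order-service"));
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Once the cluster name is known, the client asks Nacos for the instances of the Seata Server application in that cluster and group, which is why nacos.cluster and nacos.group have to match as well.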

2) Specify the transaction group in yml (maps one-to-one to the service.vgroup_mapping entry in the config center)

Configure the Seata transaction service group. It must match the suffix of the service.vgroup_mapping key in the server-side Nacos config center (in the config.txt above, that key is service.vgroupMapping.my_test_tx_group).

server:
  port: 8020
spring:
  application:
    name: order-service
  cloud:
    nacos:
      discovery:
        server-addr: 127.0.0.1:8848
    alibaba:
      seata:
        tx-service-group: my_test_tx_group # Seata transaction service group
  datasource:
    type: com.alibaba.druid.pool.DruidDataSource
    druid:
      driver-class-name: com.mysql.cj.jdbc.Driver
      url: jdbc:mysql://localhost:3306/seata_order?useUnicode=true&characterEncoding=UTF-8&serverTimezone=Asia/Shanghai
      username: root
      password: mysqladmin
      initial-size: 10
      max-active: 100
      min-idle: 10
      max-wait: 60000
      pool-prepared-statements: true
      max-pool-prepared-statement-per-connection-size: 20
      time-between-eviction-runs-millis: 60000
      min-evictable-idle-time-millis: 300000
      test-while-idle: true
      test-on-borrow: false
      test-on-return: false
      stat-view-servlet:
        enabled: true
        url-pattern: /druid/*
      filter:
        stat:
          log-slow-sql: true
          slow-sql-millis: 1000
          merge-sql: false
        wall:
          config:
            multi-statement-allow: true

Source reference:

io.seata.core.rpc.netty.NettyClientChannelManager#getAvailServerList

-> NacosRegistryServiceImpl#lookup

-> String clusterName = getServiceGroup(key); // resolve the Seata Server cluster name

-> List firstAllInstances = getNamingInstance().getAllInstances(getServiceName(), getServiceGroup(), clusters)

Since Spring Cloud Alibaba 2.1.4, Seata properties can be configured in yml, which can replace the registry.conf file.

This support is implemented in seata-spring-boot-starter.jar; you can also add the dependency directly:

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-spring-boot-starter</artifactId>

<version>1.4.0</version>

</dependency>

Configure it in yml:

seata:
  # Seata service group; must match the suffix of service.vgroup_mapping in the server-side nacos config.txt
  tx-service-group: my_test_tx_group
  registry:
    # Use Nacos as the registry
    type: nacos
    nacos:
      server-addr: 192.168.131.172:8848
      namespace: ""
      group: SEATA_GROUP
  config:
    # Use Nacos as the config center
    type: nacos
    nacos:
      server-addr: 192.168.131.172:8848
      namespace: "54433b62-df64-40f1-9527-c907219fc17f"
      group: SEATA_GROUP

3) Add the @GlobalTransactional annotation to the transaction initiator

@Override
//@Transactional
@GlobalTransactional(name = "createOrder")
public Order saveOrder(OrderVo orderVo) {
    log.info("============= Placing order =================");
    log.info("Current XID: {}", RootContext.getXID());

    // Save the order
    Order order = new Order();
    order.setUserId(orderVo.getUserId());
    order.setCommodityCode(orderVo.getCommodityCode());
    order.setCount(orderVo.getCount());
    order.setMoney(orderVo.getMoney());
    order.setStatus(OrderStatus.INIT.getValue());
    Integer saveOrderRecord = orderMapper.insert(order);
    log.info("Save order {}", saveOrderRecord > 0 ? "succeeded" : "failed");

    // Deduct stock
    storageFeignService.deduct(orderVo.getCommodityCode(), orderVo.getCount());

    // Deduct balance
    accountFeignService.debit(orderVo.getUserId(), orderVo.getMoney());

    // Update the order status
    Integer updateOrderRecord = orderMapper.updateOrderStatus(order.getId(), OrderStatus.SUCCESS.getValue());
    log.info("Update order id:{} {}", order.getId(), updateOrderRecord > 0 ? "succeeded" : "failed");

    return order;
}

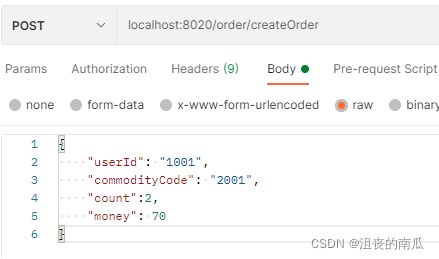

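4) Test whether the distributed transaction works

Success case: simulate a normal order with stock deduction and balance deduction.

Failure case: simulate the order and stock deduction succeeding but the balance deduction failing, and check whether the transaction rolls back.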

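3. Caveats in Practice (Pitfalls)

3.1 Distributed transactions failing because of service degradation

When a call to another service returns a degraded (fallback) result, the TM cannot tell: no exception is thrown, so it goes ahead and commits. The distributed transaction is then effectively defeated, because by business rules it should not have been committed.

The right fix: inspect the result in business code so that the transaction initiator sees the failure and the distributed transaction rolls back.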

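// Deduct the balance; throw if the call was degraded
Boolean debit = accountFeignService.debit(orderVo.getUserId(), orderVo.getMoney());

// Check that the call really succeeded, so a degraded response cannot silently defeat the distributed transaction.
// Boolean.TRUE.equals also treats a null result as a failure instead of throwing a NullPointerException.
if (!Boolean.TRUE.equals(debit)) {
    // Handles Feign + Sentinel degradation that would otherwise make Seata ineffective
    throw new RuntimeException("Account service call was degraded");
}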
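Why the explicit check matters can be shown with a tiny simulation. The sketch below assumes a simplified model in which the transaction manager commits unless the business code throws, which is the behaviour described above. `FallbackCommitSketch`, `runGlobalTx` and `debitWithFallback` are hypothetical names and none of this is Seata API.

```java
// Hypothetical simulation of "commit unless an exception escapes"; not Seata code.
class FallbackCommitSketch {

    enum Outcome { COMMITTED, ROLLED_BACK }

    /** Stand-in for the TM: commits when the business code returns, rolls back when it throws. */
    static Outcome runGlobalTx(Runnable business) {
        try {
            business.run();
            return Outcome.COMMITTED;
        } catch (RuntimeException e) {
            return Outcome.ROLLED_BACK;
        }
    }

    /** Simulates a degraded Feign call: the fallback swallows the failure and returns false. */
    static Boolean debitWithFallback() {
        return Boolean.FALSE;
    }

    /** The degraded result is ignored, so nothing tells the TM that anything went wrong. */
    static Outcome withoutCheck() {
        return runGlobalTx(() -> debitWithFallback());
    }

    /** The result is checked and turned into an exception, which the TM can see. */
    static Outcome withCheck() {
        return runGlobalTx(() -> {
            if (!Boolean.TRUE.equals(debitWithFallback())) {
                throw new RuntimeException("account service degraded");
            }
        });
    }

    public static void main(String[] args) {
        System.out.println("without check: " + withoutCheck());
        System.out.println("with check:    " + withCheck());
    }
}
```

The only difference between the two paths is whether an exception reaches the code that decides between commit and rollback.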

The wrong fix: obtain the XID in the fallback method and roll back from there:

@Component
@Slf4j
public class FallbackAccountFeignServiceFactory implements FallbackFactory<AccountFeignService> {

    @Override
    public AccountFeignService create(Throwable throwable) {
        return new AccountFeignService() {
            @Override
            public Boolean debit(String userId, int money) throws TransactionException {
                log.info("Account service call was degraded");
                // Attempted workaround for Feign + Sentinel degradation defeating Seata. Do NOT use this.
                if (!StringUtils.isEmpty(RootContext.getXID())) {
                    // Reload the GlobalTransaction by XID and call rollback.
                    // This can roll back the storage service, but does it solve the problem? No. Never do this.
                    GlobalTransactionContext.reload(RootContext.getXID()).rollback();
                }
                return false;
            }
        };
    }
}

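This approach looks appealing because it does roll back the storage service, but the transaction initiator itself never learns of the failure, so it does not solve the problem.

3.2 Making Seata highly available: what if a server goes down

After starting several Seata servers, add the server list to /seata/script/config-center/config.txt. If Nacos is the config center, change the value in Nacos: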

service.default.grouplist=127.0.0.1:8091,127.0.0.1:8092,127.0.0.1:8093
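For illustration, here is a minimal Java sketch of how a comma-separated list in this format can be parsed and cycled through when one server is unreachable. `GrouplistSketch` and its methods are hypothetical, and the round-robin pick is an assumption made for the example, not a description of Seata's actual load balancing. As far as I understand Seata's parameter documentation, `service.default.grouplist` is read when the registry type is `file`; with Nacos as the registry, clients discover the server instances from Nacos instead, so it is worth verifying which of the two applies to your setup.

```java
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for the "host:port,host:port" list format; not Seata code.
class GrouplistSketch {

    /** Parses "host:port,host:port,..." into unresolved socket addresses. */
    static List<InetSocketAddress> parse(String grouplist) {
        List<InetSocketAddress> servers = new ArrayList<>();
        for (String entry : grouplist.split(",")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue;
            }
            int colon = trimmed.lastIndexOf(':');
            if (colon <= 0) {
                throw new IllegalArgumentException("expected host:port but got " + trimmed);
            }
            String host = trimmed.substring(0, colon);
            int port = Integer.parseInt(trimmed.substring(colon + 1));
            servers.add(InetSocketAddress.createUnresolved(host, port));
        }
        return servers;
    }

    /** Round-robin pick: a retry with the next attempt number lands on the next server. */
    static InetSocketAddress pick(List<InetSocketAddress> servers, int attempt) {
        return servers.get(Math.floorMod(attempt, servers.size()));
    }

    public static void main(String[] args) {
        List<InetSocketAddress> servers = parse("127.0.0.1:8091,127.0.0.1:8092,127.0.0.1:8093");
        for (int attempt = 0; attempt < 4; attempt++) {
            InetSocketAddress server = pick(servers, attempt);
            System.out.println("attempt " + attempt + " uses port " + server.getPort());
        }
    }
}
```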