Kubernetes搭建

Kubernetes搭建

- Kubernetes搭建

-

- 部署组件介绍

-

- K8s 集群架构图

- k8s控制组件

-

- 控制平面

- kube-apiserver

- kube-scheduler

- kube-controller-manager

- etcd

- k8s运行组件

-

- k8s节点

- 容器集

- 容器运行时引擎

- kubelet

- kube-proxy

- k8s 存储组件

-

- 持久存储

- 容器镜像仓库

- 底层基础架构

- kubeadm部署k8s

-

- kubeadm简介

- kubeadm安装准备

-

- 环境要求

- 准备工作

- Docker环境安装

- 安装kubeadm

-

- 安装组件

- 导入镜像

- 初始化Master

-

- 执行初始化命令

- 创建配置文件

- 添加flannel网络

- 创建网络

- 查看集群状态

- 查看版本信息

- 查看集群信息

- 删除节点

- 开启自动补全

-

- 永久生效

- dashboard

-

- 启动dashboard

Kubernetes搭建

部署组件介绍

我们把一个有效的 Kubernetes 部署称为集群。您可以将 Kubernetes 集群可视化为两个部分:

控制平面与计算设备(或称为节点)。每个节点都是其自己的 Linux环境,并且可以是物理机或虚拟机。每个节点都运行由若干容器组成的容器集。

K8s 集群架构图

以下 K8s 架构图显示了 Kubernetes 集群的各部分之间的联系:

k8s控制组件

控制平面

K8s 集群的神经中枢

让我们从 Kubernetes 集群的神经中枢(即控制平面)开始说起。在这里,我们可以找到用于控制集群的 Kubernetes 组件以及一些有关集群状态和配置的数据。这些核心 Kubernetes 组件负责处理重要的工作,以确保容器以足够的数量和所需的资源运行。

控制平面会一直与您的计算机保持联系。集群已被配置为以特定的方式运行,而控制平面要做的就是确保万无一失。

kube-apiserver

K8s 集群API,如果需要与您的 Kubernetes 集群进行交互,就要通过 API

Kubernetes API 是 Kubernetes 控制平面的前端,用于处理内部和外部请求。API 服务器会确定请求是否有效,如果有效,则对其进行处理。您可以通过 REST 调用、kubectl 命令行界面或其他命令行工具(例如 kubeadm)来访问 API。

kube-scheduler

K8s 调度程序,您的集群是否状况良好?如果需要新的容器,要将它们放在哪里?这些是 Kubernetes 调度程序所要关注的问题。

调度程序会考虑容器集的资源需求(例如 CPU 或内存)以及集群的运行状况。随后,它会将容器集安排到适当的计算节点。

kube-controller-manager

K8s 控制器,控制器负责实际运行集群,而 Kubernetes 控制器管理器则是将多个控制器功能合而为一

控制器用于查询调度程序,并确保有正确数量的容器集在运行。如果有容器集停止运行,另一个控制器会发现并做出响应。控制器会将服务连接至容器集,以便让请求前往正确的端点。还有一些控制器用于创建帐户和 API 访问令牌。

etcd

键值存储数据库

配置数据以及有关集群状态的信息位于 etcd(一个键值存储数据库)中。etcd 采用分布式、容错设计,被视为集群的最终事实来源。

k8s运行组件

k8s节点

Kubernetes 集群中至少需要一个计算节点,但通常会有多个计算节点。

容器集经过调度和编排后,就会在节点上运行。如果需要扩展集群的容量,那就要添加更多的节点。

容器集

容器集是 Kubernetes 对象模型中最小、最简单的单元。

它代表了应用的单个实例。每个容器集都由一个容器(或一系列紧密耦合的容器)以及若干控制容器运行方式的选件组成。容器集可以连接至持久存储,以运行有状态应用。

容器运行时引擎

为了运行容器,每个计算节点都有一个容器运行时引擎。

比如 Docker,但 Kubernetes 也支持其他符合开源容器运动(OCI)标准的运行时,例如 rkt 和 CRI-O。

kubelet

每个计算节点中都包含一个 kubelet,这是一个与控制平面通信的微型应用。

kublet 可确保容器在容器集内运行。当控制平面需要在节点中执行某个操作时,kubelet 就会执行该操作。

kube-proxy

每个计算节点中还包含 kube-proxy,这是一个用于优化 Kubernetes 网络服务的网络代理。

kube-proxy 负责处理集群内部或外部的网络通信——靠操作系统的数据包过滤层,或者自行转发流量。

k8s 存储组件

持久存储

除了管理运行应用的容器外,Kubernetes 还可以管理附加在集群上的应用数据。

Kubernetes 允许用户请求存储资源,而无需了解底层存储基础架构的详细信息。持久卷是集群(而非容器集)所特有的,因此其寿命可以超过容器集。

容器镜像仓库

Kubernetes 所依赖的容器镜像存储于容器镜像仓库中。

这个镜像仓库可以由您自己配置的,也可以由第三方提供。

底层基础架构

您可以自己决定具体在哪里运行 Kubernetes。

答案可以是裸机服务器、虚拟机、公共云提供商、私有云和混合云环境。Kubernetes 的一大优势就是它可以在许多不同类型的基础架构上运行。

kubeadm部署k8s

kubeadm简介

kubeadm是Kubernetes项目自带的及集群构建工具,负责执行构建一个最小化的可用集群以及将其启动等的必要基本步骤,kubeadm是Kubernetes集群全生命周期的管理工具,可用于实现集群的部署、升级、降级及拆除。kubeadm部署Kubernetes集群是将大部分资源以pod的方式运行,例如(kube-proxy、kube-controller-manager、kube-scheduler、kube-apiserver、flannel)都是以pod方式运行。

Kubeadm仅关心如何初始化并启动集群,余下的其他操作,例如安装Kubernetes Dashboard、监控系统、日志系统等必要的附加组件则不在其考虑范围之内,需要管理员自行部署。

Kubeadm集成了Kubeadm init和kubeadm join等工具程序,其中kubeadm init用于集群的快速初始化,其核心功能是部署Master节点的各个组件,而kubeadm join则用于将节点快速加入到指定集群中,它们是创建Kubernetes集群最佳实践的“快速路径”。另外,kubeadm token可于集群构建后管理用于加入集群时使用的认证令牌(token),而kubeadm reset命令的功能则是删除集群构建过程中生成的文件以重置回初始状态。

kubeadm安装准备

环境要求

- 一台兼容的 Linux 主机。Kubernetes 项目为基于 Debian 和 Red Hat 的 Linux

发行版以及一些不提供包管理器的发行版提供通用的指令 - 每台机器 2 GB 或更多的 RAM (如果少于这个数字将会影响你应用的运行内存)

- 2 CPU 核或更多

- 集群中的所有机器的网络彼此均能相互连接(公网和内网都可以)

- 节点之中不可以有重复的主机名、MAC 地址或 product_uuid。请参见这里了解更多详细信息。

- 开启机器上的某些端口。请参见这里 了解更多详细信息。

- 禁用交换分区。为了保证 kubelet 正常工作,你 必须 禁用交换分区。

准备工作

下面初始化环境工作master节点和node节点都需要执行

关闭防火墙

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

ntpdate 0.rhel.pool.ntp.org

vi /etc/hostname

写入

HOSTNAME=yourhostname

保存后执行以下

hostname yourhostname

查看设置后的hostname

hostname

vi /etc/hosts

127.0.0.1 yourhostname localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 yourhostname localhost localhost.localdomain localhost6 localhost6.localdomain6

host绑定

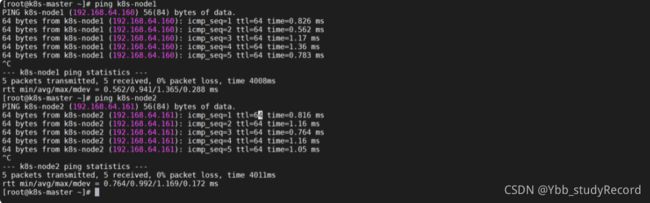

修改其他节点的hostname后,将域名绑定到hostname中保证其他节点能够通过hostname进行访问其他节点

vi /etc/hosts

192.168.64.160 k8s-master

192.168.64.161 k8s-node1

192.168.64.162 k8s-node2

ping k8s-node1

ping k8s-node2

echo 'net.bridge.bridge-nf-call-iptables = 1' >> /etc/sysctl.conf

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

echo "echo 1 > /proc/sys/net/ipv4/ip_forward" >> /etc/rc.d/rc.local

echo 1 > /proc/sys/net/ipv4/ip_forward

-bash: /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

先执行

sudo modprobe br_netfilter

Docker环境安装

参考前面章节的Docker安装

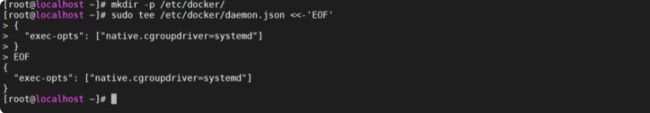

修改daemon.json

修改docker cgroup driver为systemd

根据文档CRI installation中的内容,对于使用systemd作为init system的Linux的发行版,使用systemd作为docker的cgroup driver可以确保服务器节点在资源紧张的情况更加稳定,因此这里修改各个节点上docker的cgroup driver为systemd。

mkdir -p /etc/docker/

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl restart docker #启动docker

systemctl enable docker #开机自启动

docker info |grep Cgroup

安装kubeadm

master节点和所有node节点都需要执行

配置kubeadm安装源

在线安装kubeadm,使用阿里的源,本教程使用1.21 (很慢!)

cat < /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

安装组件

安装kubelat、kubectl、kubeadm

yum install -y kubelet-1.21.0 kubeadm-1.21.0 kubectl-1.21.0 --disableexcludes=kubernetes

rpm -aq kubelet kubectl kubeadm

将kubelet加入开机启动,这里刚安装完成不能直接启动。(因为目前还没有集群还没有建立)

systemctl enable kubelet

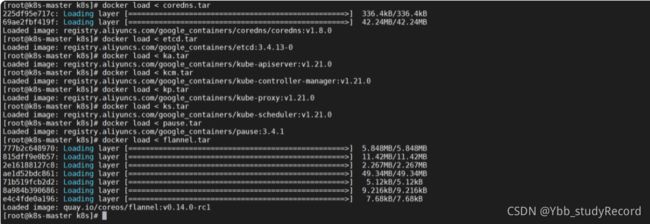

导入镜像

直接使用离线的镜像文件,在资料下的tar文件夹下

链接: https://pan.baidu.com/s/1sC2xhonVPLEmkS53gxm4IQ 提取码: 2833

上传Image镜像

将资料文件夹下的 tar文件上传到centos服务中来

导入镜像

将上传的镜像导入到docker容器中来

docker load < coredns.tar

docker load < etcd.tar

docker load < ka.tar

docker load < kcm.tar

docker load < kp.tar

docker load < ks.tar

docker load < pause.tar

docker load < flannel.tar

初始化Master

注意:在master节点执行

执行初始化命令

kubeadm init \

--kubernetes-version v1.21.0 \

--image-repository registry.aliyuncs.com/google_containers \

--pod-network-cidr=10.244.0.0/16 \

--service-cidr=10.1.0.0/16 \

--ignore-preflight-errors=Swap

执行需要花费一些时间,出现successfully表示初始化成功

创建配置文件

按照上面初始化成功提示创建配置文件

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

#我们是root用户启动,所以还需要下面这句

export KUBECONFIG=/etc/kubernetes/admin.conf

添加flannel网络

创建flannel配置文件

创建网络,flannel.yml文件在”资料“文件夹里

vi flannel.yml

文件内容如下

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN', 'NET_RAW']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.14.0-rc1

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.14.0-rc1

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

创建网络

kubectl apply -f flannel.yml

安装kubeadm

参考《安装kubeadm》也需要在从节点执行一遍

加入集群

主节点输入

kubeadm token create --print-join-command --ttl 0

在子节点上执行加入命令

kubeadm join 192.168.64.159:6443 --token i16hg0.9z9aq1px2mp78fi7 \

--discovery-token-ca-cert-hash sha256:9ec1e3f485191da5a11f42f5d1303fd3f0f3334297e121c62126b72beb6a4643

kubeadm reset

然后再执行加入节点的操作即可

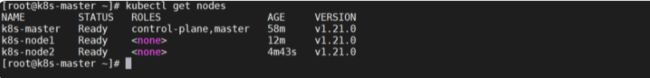

查看集群状态

在master节点输入命令检查集群状态,返回如下结果则集群状态正常

kubectl get nodes

如果报这个错误,先删干净再重新写入配置

rm -rf $HOME/.kube

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

#我们是root用户启动,所以还需要下面这句

export KUBECONFIG=/etc/kubernetes/admin.conf

重点查看STATUS内容为Ready时,则说明集群状态正常。

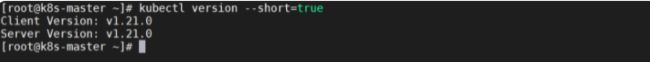

查看版本信息

查看集群客户端和服务端程序版本信息

kubectl version --short=true

查看集群信息

kubectl cluster-info

删除节点

有时节点出现故障,需要删除节点,方法如下

在master节点上执行

kubectl drain --delete-local-data --force --ignore-daemonsets

kubectl delete node

在需要移除的节点上执行

kubeadm reset

开启自动补全

当我们在使用kubectl命令时,如果不能用tab补全,将会非常麻烦,得把命令一个一个敲出来

但是配置自动补全之后就非常方便了

安装命令

安装bash-completion

yum install bash-completion -y

执行命令

执行bash_completion

source /usr/share/bash-completion/bash_completion

重新加载

重新加载kubectl completion

source <(kubectl completion bash)

永久生效

配置永久生效

echo "source <(kubectl completion bash)" >> ~/.bashrc

dashboard

创建配置文件

vi dashboard.yml

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

type: NodePort

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.2.0

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

- --token-ttl=43200

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

spec:

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.6

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

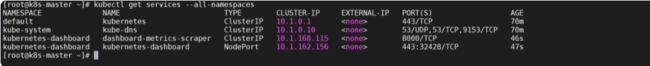

启动dashboard

kubectl apply -f yml/dashboard.yml

kubectl get services --all-namespaces

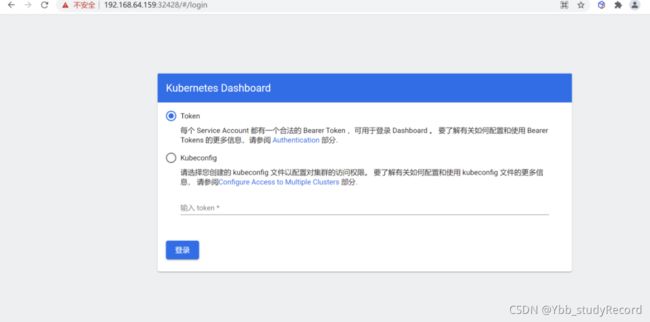

访问测试

访问https://192.168.64.159:32428地址进入dashboard界面,因为没有证书,访问需要输入token

获取Token

在master执行以下命令来获取Token

kubectl -n kube-system describe $(kubectl -n kube-system get secret -n kube-system -o name | grep namespace) | grep token