Combining OpenCV Image Recognition and Mediapipe with the Unity Engine


- Preface
  - Demo
  - Getting to know Mediapipe
  - Project environment
- Body motion capture
  - Body landmarks
  - Core code
- Hand gesture capture
- Afterword
  - About the project

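Preface

This article shows how to use Python with OpenCV image capture and the Mediapipe library to detect and recognize hand gestures and human body movement, and how to synchronize the recognition results to Unity in real time so that hand and character models in Unity move accordingly.

Body structure recognition: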

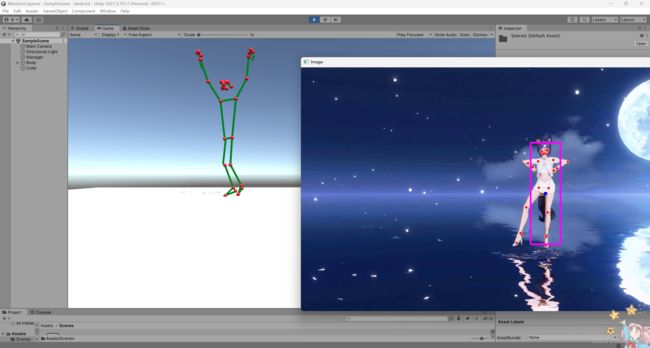

Demo

Video demo: https://hackathon2022.juejin.cn/#/works/detail?unique=WJoYomLPg0JOYs8GazDVrw

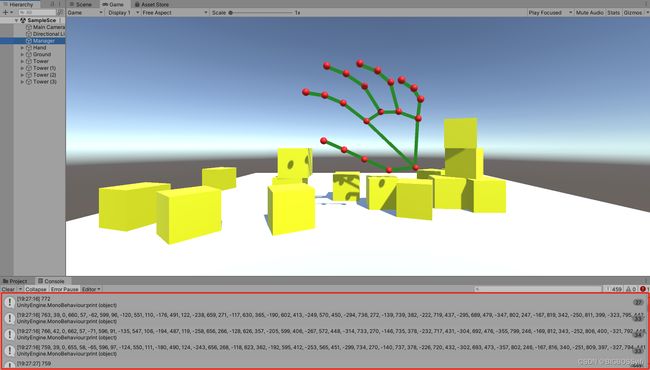

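Grabbing objects in real time with gesture recognition:

Body structure motion capture:

The techniques used in this article will be tidied up and open-sourced; follow the links below for updates:

Unity hand gesture project: https://github.com/BIGBOSS-dedsec/OpenCV-Unity-To-Build-3DHands

Unity character motion project: https://github.com/BIGBOSS-dedsec/OpenCV-Unity-To-Build-3DPerson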

GitHub:https://github.com/BIGBOSS-dedsec

CSDN: https://blog.csdn.net/weixin_50679163?type=blog

The work described in this article also won an Excellence Award in the game track of the Juejin (稀土掘金) 2022 Programming Challenge.


Getting to know Mediapipe

The core of this project is Mediapipe, an open-source project from Google:

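| Feature | Description |
|---|---|
| Face detection (FaceMesh) | Reconstructs a 3D face mesh from images or video |
| Portrait segmentation | Separates people from the rest of an image or video |
| Hand tracking | 3D coordinates of 21 keypoints |
| 3D body recognition | 3D coordinates of 33 keypoints |
| Object color recognition | Can detect hair and recolor it |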

Mediapipe website: https://mediapipe.dev/


These are some of Mediapipe's most commonly used features. We will go through how to implement them in what follows.

Install Mediapipe with pip:

    pip install mediapipe==0.8.9.1

It can also be installed from source using the setup.py in the repository:

https://github.com/google/mediapipe

Project environment

Python 3.7

Mediapipe 0.8.9.1

Numpy 1.21.6

OpenCV-Python 4.5.5.64

OpenCV-contrib-Python 4.5.5.64

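Body motion capture

Body data file:

For this part, we read the person in the video, compute the data for every landmark, and save it. This data matters because it is the motion data that is later imported into Unity.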

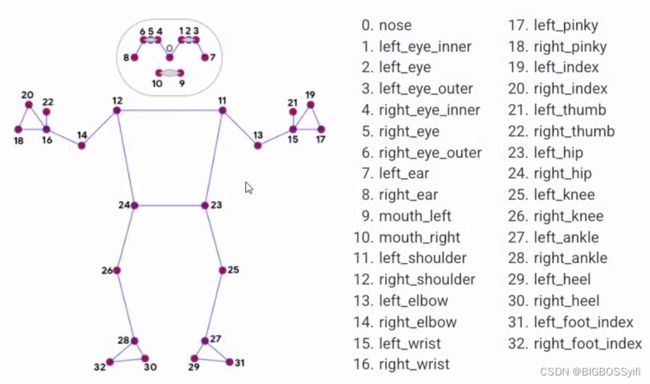

Body landmarks

Core code

Camera capture:

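    import cv2

    # Camera index: 0 = built-in (laptop) camera, 1 = first USB camera, 2 = second USB camera
    cap = cv2.VideoCapture(0)

    while True:
        success, img = cap.read()
        if not success:  # no frame delivered (camera missing or busy)
            break
        imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)  # OpenCV delivers BGR; Mediapipe expects RGB
        cv2.imshow("HandsImage", img)  # preview window
        cv2.waitKey(1)  # wait 1 ms for a key press so the window can refresh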

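Frame rate calculation:

    import time

    # Timestamps of the previous and current frame
    pTime = 0
    cTime = 0

    while True:
        # ... grab a frame into img as in the capture loop ...
        cTime = time.time()
        fps = 1 / (cTime - pTime)
        pTime = cTime
        # Draw the FPS value: position, font, scale, color, thickness
        cv2.putText(img, str(int(fps)), (10, 70), cv2.FONT_HERSHEY_PLAIN, 3,
                    (255, 0, 255), 3)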
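The per-frame value computed this way jumps around from one frame to the next, and the division fails if two timestamps happen to coincide. Below is a minimal sketch of a smoothed counter, assuming an exponential moving average is acceptable for display purposes. The class name `FpsMeter` and the `alpha` parameter are illustrative and not part of the original project.

```python
import time


class FpsMeter:
    """Smoothed frames-per-second estimate (hypothetical helper, not from the project)."""

    def __init__(self, alpha=0.1):
        self.alpha = alpha     # smoothing factor: larger reacts faster, smaller is steadier
        self.prev_time = None  # timestamp of the previous frame
        self.fps = 0.0         # current smoothed estimate

    def tick(self, now=None):
        """Register one frame and return the smoothed FPS."""
        if now is None:
            now = time.time()
        if self.prev_time is not None:
            dt = now - self.prev_time
            if dt > 0:  # skip identical timestamps to avoid dividing by zero
                instant = 1.0 / dt
                if self.fps == 0.0:
                    self.fps = instant  # first measurement: no history to blend with
                else:
                    self.fps = (1 - self.alpha) * self.fps + self.alpha * instant
        self.prev_time = now
        return self.fps
```

Inside the capture loop, `str(int(meter.tick()))` could replace `str(int(fps))` in the `cv2.putText` call, with `meter = FpsMeter()` created once before the loop.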

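Body motion capture:

    while True:
        # lmList and bboxInfo are produced by the pose detection step earlier in the loop (not shown)
        if bboxInfo:
            lmString = ''
            for lm in lmList:
                # x, flipped y (image height minus y), z for each landmark
                lmString += f'{lm[1]},{img.shape[0] - lm[2]},{lm[3]},'
            posList.append(lmString)  # one string per frame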
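The loop above builds one comma-separated string per frame and flips y, presumably because image coordinates grow downward while Unity's y axis points up. Below is a minimal sketch of how such frames could be serialized, written to a text file, and read back. It assumes each landmark is a list `[id, x, y, z]`, as the indices `lm[1]`, `lm[2]`, `lm[3]` suggest. The function names and the one-frame-per-line file layout are assumptions for illustration, not the project's confirmed format.

```python
def frame_to_line(lm_list, img_height):
    """Serialize one frame of landmarks as 'x,y,z,x,y,z,...,'.

    Each entry is assumed to be [id, x, y, z]. y is flipped so that it
    grows upward, and the trailing comma matches the loop above.
    """
    parts = []
    for lm in lm_list:
        parts.extend([str(lm[1]), str(img_height - lm[2]), str(lm[3])])
    return ",".join(parts) + ","


def save_frames(lines, path):
    """Write one frame per line (hypothetical file layout)."""
    with open(path, "w") as f:
        f.writelines(line + "\n" for line in lines)


def load_frames(path):
    """Read the file back as a list of frames, each a list of (x, y, z) tuples."""
    frames = []
    with open(path) as f:
        for line in f:
            # Empty fields (from the trailing comma) are skipped
            values = [float(v) for v in line.strip().split(",") if v]
            frames.append([tuple(values[i:i + 3]) for i in range(0, len(values), 3)])
    return frames
```

A reader on the Unity side would do the equivalent of `load_frames` in C#: split each line on commas and consume the values three at a time.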

Hand gesture capture

Gesture data:

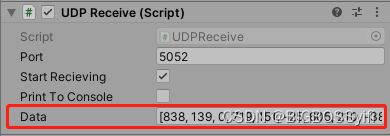

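The hand gesture data is synchronized to Unity in real time. The core mechanism is a socket sending UDP datagrams.

A socket is a software abstraction layer between the application layer and the TCP/IP protocol suite: a set of interfaces. In design-pattern terms it acts as a facade, hiding the complexity of the TCP/IP protocol suite behind the Socket interface. The user sees only a small set of simple calls and lets the socket arrange the data to conform to the chosen protocol.

In code, this looks as follows.

Sending side (Python):

    import socket

    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)  # SOCK_DGRAM selects UDP
    serverAddressPort = ("127.0.0.1", 5052)  # IP and port of the receiver (localhost)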
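The two lines above only create the socket and name the destination; the data still has to be encoded and sent with `sendto`. Below is a minimal sketch of that step. The helper name `send_landmarks` and the bracketed, comma-separated text format are assumptions for illustration; any format works as long as the receiver parses the same one.

```python
import socket


def send_landmarks(sock, address, values):
    """Send a flat list of numbers as one UTF-8 encoded UDP datagram.

    The text format "[v0, v1, ...]" is an assumption for this sketch.
    Returns the bytes that were sent.
    """
    payload = str(list(values)).encode("utf-8")
    sock.sendto(payload, address)  # UDP: no connection setup, no delivery guarantee
    return payload
```

With the `sock` and `serverAddressPort` defined above, each processed frame would call `send_landmarks(sock, serverAddressPort, values)`. UDP suits this kind of stream because a lost datagram is simply superseded by the next frame.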

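UDP receiving side (Unity, C#):

    // Needs: using System; using System.Net; using System.Net.Sockets; using System.Text;
    // client, port, startRecieving, data and printToConsole are fields declared elsewhere in the class.
    private void ReceiveData()
    {
        client = new UdpClient(port);
        while (startRecieving)
        {
            try
            {
                IPEndPoint anyIP = new IPEndPoint(IPAddress.Any, 0);
                byte[] dataByte = client.Receive(ref anyIP);  // blocks until a datagram arrives
                data = Encoding.UTF8.GetString(dataByte);
                if (printToConsole) { print(data); }
            }
            catch (Exception err)
            {
                print(err.ToString());
            }
        }
    }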

Result in Unity:

Without incoming data:

With data being received:

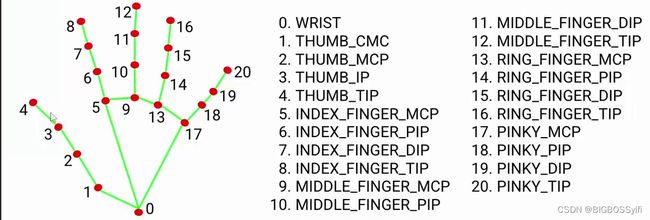

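Hand landmarks

Each landmark's data is mapped onto the corresponding point of the Unity model and used to position it, which produces the real-time hand motion.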
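Before landmarks can be mapped onto a model, the received text has to be split back into one (x, y, z) triple per landmark. Below is a minimal sketch of that parsing step, written in Python for consistency with the sender; the Unity side would do the equivalent in C#. It assumes the payload is a bracketed, comma-separated list of 63 numbers (21 keypoints times 3 coordinates). That format and the function name are assumptions, not the project's confirmed protocol.

```python
HAND_LANDMARK_COUNT = 21  # the Mediapipe hand model has 21 keypoints


def parse_hand_payload(text):
    """Split '[x0, y0, z0, x1, ...]' into a list of 21 (x, y, z) tuples.

    Raises ValueError if the payload does not hold exactly 21 * 3 numbers.
    """
    body = text.strip().lstrip("[").rstrip("]")
    values = [float(v) for v in body.split(",") if v.strip()]
    expected = HAND_LANDMARK_COUNT * 3
    if len(values) != expected:
        raise ValueError("expected %d values, got %d" % (expected, len(values)))
    return [tuple(values[i:i + 3]) for i in range(0, len(values), 3)]
```

Rejecting payloads of the wrong length keeps a truncated or malformed datagram from moving some points of the model while leaving others stale.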

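Hand landmark capture code

    while True:
        # results is assumed to hold the output of the Mediapipe Hands process() call on imgRGB
        if results.multi_hand_landmarks:
            for handLms in results.multi_hand_landmarks:
                for id, lm in enumerate(handLms.landmark):
                    # print(id, lm)
                    h, w, c = img.shape
                    cx, cy = int(lm.x * w), int(lm.y * h)  # normalized -> pixel coordinates
                    print(id, cx, cy)
                    # if id == 4:
                    cv2.circle(img, (cx, cy), 15, (255, 0, 255), cv2.FILLED)
                # Draw the hand landmarks and the connections between them
                mpDraw.draw_landmarks(img, handLms, mpHands.HAND_CONNECTIONS)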
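The commented-out `if id == 4` check hints at working with individual landmarks. In Mediapipe's hand model, index 4 is the thumb tip and index 8 is the index fingertip. Below is a sketch of how a grab or pinch gesture could be detected from the pixel distance between those two points. This is one possible approach and not necessarily how the project detects grabbing; the function names and the 40-pixel threshold are hypothetical.

```python
import math

THUMB_TIP = 4         # Mediapipe hand landmark index
INDEX_FINGER_TIP = 8  # Mediapipe hand landmark index


def to_pixels(landmarks, width, height):
    """Convert normalized (x, y) pairs in [0, 1] to integer pixel coordinates."""
    return [(int(x * width), int(y * height)) for x, y in landmarks]


def is_pinching(pixel_points, threshold=40):
    """Return True when thumb tip and index fingertip are closer than threshold pixels.

    The threshold is an illustrative value and would need tuning.
    """
    tx, ty = pixel_points[THUMB_TIP]
    ix, iy = pixel_points[INDEX_FINGER_TIP]
    return math.hypot(tx - ix, ty - iy) < threshold
```

A fixed pixel threshold depends on how far the hand is from the camera; dividing the distance by a reference length on the same hand would likely be more robust.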

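Afterword

About the project

This project uses Python and OpenCV to capture a person's movement (joint recognition) in real time and drive a model in the Unity engine, so that the character model in Unity stays in sync with the camera image and performs the corresponding actions. With real-time motion sensing, players can steer a character through an adventure in the game. Unlike VR and AR games, the project needs only a single high-definition camera. Whole-body interaction, together with the idol effect of ASOUL, enriches the play experience and increases players' interest and sense of participation.

Keywords: OpenCV; Mediapipe; sense of participation; Unity3D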

Abstract: Isolated at home? Go on a big adventure with the ASOUL members instead: set up a camera, interact with ASOUL using your whole body, and enjoy an unforgettable adventure. You can control the five members of ASOUL and A Cao in the game, with the camera capturing your whole body, so that you "personally" experience the adventure with ASOUL.

This project captures a person's actions in real time through a camera with OpenCV and, combined with Unity, performs real-time action recognition for the character in the game. Players have their whole-body movement scanned and captured in the game (the character model is established through TensorFlow) to build a game character model that they control 1:1. Whole-body interaction, together with the idol effect of ASOUL, enriches the play experience and increases players' interest and sense of participation.