【Cloud Native | Kubernetes Series】Installing and Deploying Prometheus Three Ways

1. Introduction to Prometheus

1.1. Prometheus Overview

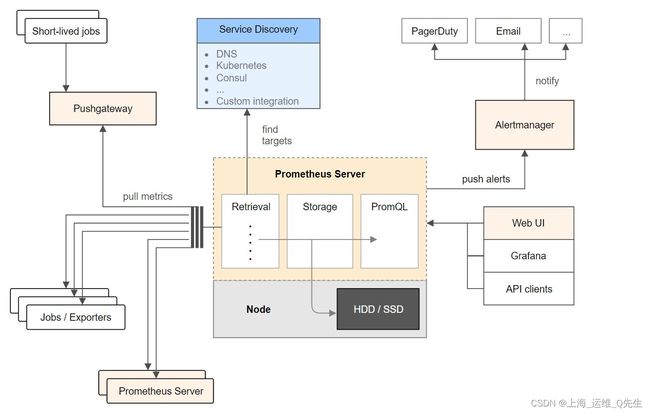

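Prometheus is an open-source monitoring and alerting system built on a time-series database, and it is well suited to monitoring Kubernetes clusters. It works by periodically scraping the state of monitored components over HTTP: any component that exposes a suitable HTTP endpoint can be monitored, with no SDK or other integration work required. This makes it a good fit for virtualized environments such as VMs, Docker and Kubernetes. A program that exposes a component's metrics over such an HTTP endpoint is called an exporter. Ready-made exporters exist for most components commonly used at internet companies, such as Varnish, HAProxy, Nginx, MySQL, and Linux system information (disk, memory, CPU, network, and so on). Prometheus has the following features: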

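- Multi-dimensional data model: time series identified by a metric name and a set of key/value pairs (labels)

- Built-in time-series database (TSDB)

- The PromQL query language, capable of very complex queries and analysis, which drives both dashboards and alerting

- Pull-based collection of time-series data over HTTP

- Collection of data from short-lived jobs through the Pushgateway

- Target discovery through either service discovery or static configuration

- Grafana integration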
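To make the multi-dimensional data model concrete: each time series is identified by a metric name plus a set of labels, and in the text format that exporters serve a sample looks like `name{key="value",...} value`. The bash function below is a hypothetical helper (`format_sample` is not part of any Prometheus tool) that renders one such line; it is a sketch and does not escape quotes or backslashes in label values.

```shell
#!/usr/bin/env bash
# Render one sample in the Prometheus text exposition format:
#   metric_name{label1="v1",label2="v2"} value
# Usage: format_sample <metric> <value> [key=value ...]
# Sketch only: label values are not escaped.
format_sample() {
  local name=$1 value=$2
  shift 2
  local labels="" pair
  for pair in "$@"; do
    # Split each key=value argument at the first '=' and quote the value
    labels+="${labels:+,}${pair%%=*}=\"${pair#*=}\""
  done
  if [ -n "$labels" ]; then
    printf '%s{%s} %s\n' "$name" "$labels" "$value"
  else
    printf '%s %s\n' "$name" "$value"
  fi
}
```

Two samples with the same metric name but different label values (for example `code="200"` and `code="500"`) are two distinct time series, which is what lets PromQL filter and aggregate by label.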

1.2.1. Prometheus Server

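Responsible for collecting and storing data and for serving PromQL queries. It consists of three components:

- Retrieval: collects (scrapes) monitoring data

- TSDB: a Time Series Database. Loosely speaking, it is software optimized for time-series data, in which every data point is indexed by time. Its workload has these characteristics:

  - Most writes are sequential appends; existing data is rarely modified

  - Deletes remove the data for a whole time range rather than scattered individual points

  - Reads usually scan in ascending or descending time order

- HTTP Server: provides the query API used for alerting and graphing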

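1.2.2. Metric Collection

- Exporters: the general name for Prometheus's data-collection components. An exporter gathers data from a target and converts it into a format Prometheus understands. Unlike traditional collection agents, it does not send data to a central server; it waits for the central server to come and scrape it.

- Pushgateway: an intermediate gateway to which short-lived jobs actively push their metrics; Prometheus then scrapes the gateway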
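An exporter is, at its core, something that answers an HTTP GET on `/metrics` with text in the exposition format. As a hedged illustration of that output (not how node_exporter is actually implemented), the hypothetical `loadavg_metrics` function below turns a Linux `/proc/loadavg`-style file into three gauges; the metric names mirror node_exporter's `node_load1`, `node_load5` and `node_load15`, but the function itself is an assumption made for this example.

```shell
#!/usr/bin/env bash
# Emit load averages from a /proc/loadavg-style file as Prometheus gauges.
# Usage: loadavg_metrics [file]   (defaults to /proc/loadavg)
loadavg_metrics() {
  local file=${1:-/proc/loadavg} l1 l5 l15 rest
  # The file starts with the 1-, 5- and 15-minute load averages
  read -r l1 l5 l15 rest < "$file"
  [ -n "$l1" ] || return 1
  printf '# HELP node_load1 1m load average.\n# TYPE node_load1 gauge\nnode_load1 %s\n' "$l1"
  printf '# HELP node_load5 5m load average.\n# TYPE node_load5 gauge\nnode_load5 %s\n' "$l5"
  printf '# HELP node_load15 15m load average.\n# TYPE node_load15 gauge\nnode_load15 %s\n' "$l15"
}
```

Serving text like this under `/metrics` on port 9100 is what node_exporter does for hundreds of metrics. Short-lived jobs that may finish before the next scrape use the opposite direction instead; the Pushgateway README shows a push as `echo "some_metric 3.14" | curl --data-binary @- http://pushgateway.example.org:9091/metrics/job/some_job`.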

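1.2.3. Service Discovery

- kubernetes_sd: automatically discovers services and scrape targets from Kubernetes. By contrast, Zabbix item prototypes are a poor fit for Kubernetes, because Pod names change unpredictably whenever Pods are restarted or upgraded.

- file_sd: discovers targets automatically from configuration files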
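With `file_sd`, Prometheus reads targets from JSON or YAML files listed under `file_sd_configs` and picks up changes to those files without a restart. Each file holds a list of groups with `targets` and optional `labels`. The hypothetical helper below prints one such JSON group; the `env` label is an illustrative assumption, not something Prometheus requires.

```shell
#!/usr/bin/env bash
# Print a file_sd target group as JSON:
#   [{"targets": ["host:port", ...], "labels": {"env": "<env>"}}]
# Usage: file_sd_group <env> <host:port> [host:port ...]
# Sketch only: values are not JSON-escaped.
file_sd_group() {
  local env=$1
  shift
  local targets="" t
  for t in "$@"; do
    targets+="${targets:+, }\"$t\""
  done
  printf '[{"targets": [%s], "labels": {"env": "%s"}}]\n' "$targets" "$env"
}
```

Writing the output to a file such as `/apps/prometheus/targets/nodes.json` (a hypothetical path) and referencing it from a job with `file_sd_configs: [{files: ['/apps/prometheus/targets/*.json']}]` would let you add hosts by editing only that file.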

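1.2.4. Alerting

Based on the configured alerting rules, alerts whose thresholds are crossed are shown in the web UI and sent to operators by SMS and email (notifications are routed by Alertmanager).

1.2.5. Visualization

Metrics are queried with PromQL and displayed in a web page. Prometheus ships with its own UI, but most users build dashboards in Grafana. Third-party tools can also retrieve metrics through the HTTP API.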
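The HTTP API evaluates a PromQL expression at `GET /api/v1/query?query=<expr>`, and the expression must be URL-encoded. Normally `curl -G --data-urlencode 'query=...'` does the encoding; the hypothetical bash helpers below spell it out by hand to show what the final request URL looks like. They are a sketch that only handles ASCII input.

```shell
#!/usr/bin/env bash
# Percent-encode a string; RFC 3986 unreserved characters pass through unchanged.
urlencode() {
  local LC_ALL=C s=$1 out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c=${s:i:1}
    case $c in
      [a-zA-Z0-9.~_-]) out+=$c ;;
      *) out+=$(printf '%%%02X' "'$c") ;;  # e.g. '{' -> %7B
    esac
  done
  printf '%s\n' "$out"
}

# Build an instant-query URL for a Prometheus server.
# Usage: query_url <base-url> <promql>
query_url() {
  printf '%s/api/v1/query?query=%s\n' "${1%/}" "$(urlencode "$2")"
}
```

For example, `query_url http://localhost:9090 'up{job="zookeeper"}'` (the `zookeeper` job configured in 2.3.3) yields `...query=up%7Bjob%3D%22zookeeper%22%7D`. The equivalent real request is `curl -G http://localhost:9090/api/v1/query --data-urlencode 'query=up{job="zookeeper"}'`, which returns JSON with the matching samples under `data.result`.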

2. Deploying Prometheus

Common deployment methods:

- Docker

- Operator

- Binary

2.1 Deploying Prometheus with Docker

https://hub.docker.com/r/prom/prometheus/tags

```shell
docker run -p 9090:9090 prom/prometheus
```
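The command above runs with the configuration baked into the image. To supply your own scrape configuration, the Prometheus documentation bind-mounts a file over `/etc/prometheus/prometheus.yml`. The hypothetical helper below writes a minimal config that only scrapes Prometheus itself; the target directory and the 15s interval are assumptions.

```shell
#!/usr/bin/env bash
# Write a minimal prometheus.yml into <dir> that scrapes Prometheus itself.
# Usage: write_minimal_config <dir>
write_minimal_config() {
  local dir=$1
  mkdir -p "$dir"
  cat > "$dir/prometheus.yml" <<'EOF'
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: "prometheus"
    static_configs:
      - targets: ["localhost:9090"]
EOF
}
```

Then start the container with the file mounted, for example `docker run -d -p 9090:9090 -v /path/to/dir/prometheus.yml:/etc/prometheus/prometheus.yml prom/prometheus`, and open `http://<host>:9090`.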

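2.2 Deploying Prometheus with the Operator

https://github.com/prometheus-operator/kube-prometheus

The Operator approach relies on pre-written YAML manifests to deploy Prometheus server, Alertmanager, Grafana, node-exporter and other components into the Kubernetes cluster in a single batch.

Choose the kube-prometheus release that matches your Kubernetes version: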

| kube-prometheus stack | Kubernetes 1.20 | Kubernetes 1.21 | Kubernetes 1.22 | Kubernetes 1.23 | Kubernetes 1.24 |
|---|---|---|---|---|---|
| release-0.8 | ✔ | ✔ | ✗ | ✗ | ✗ |
| release-0.9 | ✗ | ✔ | ✔ | ✗ | ✗ |
| release-0.10 | ✗ | ✗ | ✔ | ✔ | ✗ |
| release-0.11 | ✗ | ✗ | ✗ | ✔ | ✔ |
| main | ✗ | ✗ | ✗ | ✗ | ✔ |

```text
root@k8s-master-01:/opt/k8s-data/yaml# kubectl get node
NAME             STATUS                     ROLES    AGE    VERSION
192.168.31.101   Ready,SchedulingDisabled   master   123d   v1.22.5
```
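The compatibility table can be encoded as a simple lookup. The hypothetical function below takes a version in `vMAJOR.MINOR.PATCH` form, as printed in the VERSION column, and prints the newest release branch marked ✔ for that minor version; `main` is deliberately left out because it tracks unreleased changes, and versions outside the table return an error.

```shell
#!/usr/bin/env bash
# Map a Kubernetes version (e.g. v1.22.5) to the newest compatible
# kube-prometheus release branch, following the compatibility table.
pick_kube_prometheus_release() {
  local ver=${1#v}        # strip the leading "v"
  local minor=${ver%.*}   # "1.22.5" -> "1.22"
  case $minor in
    1.20) echo release-0.8 ;;
    1.21) echo release-0.9 ;;
    1.22) echo release-0.10 ;;
    1.23|1.24) echo release-0.11 ;;
    *) return 1 ;;
  esac
}
```

For the node above (`v1.22.5`) it prints `release-0.10`, the branch used in the following steps; release-0.9 is also marked compatible with 1.22.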

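2.2.1 Cloning kube-prometheus

The cluster runs Kubernetes v1.22.5, which release-0.10 supports, so download or clone the release-0.10 branch:

```shell
# Option A: download the release-0.10 branch as a zip (unpacks to kube-prometheus-release-0.10/)
wget -O kube-prometheus-release-0.10.zip https://codeload.github.com/prometheus-operator/kube-prometheus/zip/refs/heads/release-0.10
unzip kube-prometheus-release-0.10.zip
# Option B: git clone -b release-0.10 https://github.com/prometheus-operator/kube-prometheus.git kube-prometheus-release-0.10
cd kube-prometheus-release-0.10/manifests/
kubectl create -f setup/   # namespace and CRDs first
```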
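The CRDs created from `setup/` must be registered before the ServiceMonitor and Prometheus objects in the remaining manifests can be created. The kube-prometheus README handles this with an unbounded `until kubectl get servicemonitors --all-namespaces ; do ...; done` loop. Below is a hedged, bounded variant; `retry_until` and the `RETRY_SLEEP` variable are hypothetical names.

```shell
#!/usr/bin/env bash
# Run a command until it succeeds, at most <tries> times.
# Usage: retry_until <tries> <command> [args...]
# RETRY_SLEEP (default 1) is the pause between attempts, in seconds.
retry_until() {
  local tries=$1 n
  shift
  for (( n = 1; n <= tries; n++ )); do
    "$@" && return 0
    # Only sleep if another attempt follows
    (( n < tries )) && sleep "${RETRY_SLEEP:-1}"
  done
  return 1
}
```

For example, `retry_until 60 kubectl get servicemonitors --all-namespaces && kubectl create -f .` waits up to about a minute for the CRDs before creating the remaining manifests.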

2.2.2 Downloading the Required Images

Pull the images and push them to Harbor. If you have no proxy server, fetch them indirectly through an Alibaba Cloud image mirror. The image references in the manifests, pointed at the internal Harbor registry, are:

```text
alertmanager-alertmanager.yaml: image: harbor.intra.com/prometheus/alertmanager:v0.23.0
blackboxExporter-deployment.yaml: image: harbor.intra.com/prometheus/blackbox-exporter:v0.19.0
blackboxExporter-deployment.yaml: image: harbor.intra.com/prometheus/configmap-reload:v0.5.0
blackboxExporter-deployment.yaml: image: harbor.intra.com/prometheus/kube-rbac-proxy:v0.11.0
grafana-deployment.yaml: image: harbor.intra.com/prometheus/grafana:8.3.3
kubeStateMetrics-deployment.yaml: image: harbor.intra.com/prometheus/kube-state-metrics:v2.3.0
kubeStateMetrics-deployment.yaml: image: harbor.intra.com/prometheus/kube-rbac-proxy:v0.11.0
kubeStateMetrics-deployment.yaml: image: harbor.intra.com/prometheus/kube-rbac-proxy:v0.11.0
nodeExporter-daemonset.yaml: image: harbor.intra.com/prometheus/node-exporter:v1.3.1
nodeExporter-daemonset.yaml: image: harbor.intra.com/prometheus/kube-rbac-proxy:v0.11.0
prometheusAdapter-deployment.yaml: image: harbor.intra.com/prometheus/prometheus-adapter:v0.9.1
prometheusOperator-deployment.yaml: image: harbor.intra.com/prometheus/prometheus-operator:v0.53.1
prometheusOperator-deployment.yaml: image: harbor.intra.com/prometheus/kube-rbac-proxy:v0.11.0
prometheus-prometheus.yaml: image: harbor.intra.com/prometheus/prometheus:v2.32.1
```
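The list above appears to be `grep image:` output taken after the manifests were re-pointed at Harbor. A hedged sketch of that rewrite: the hypothetical `retag_images` function keeps only the last path component of each image (`name:tag`) and prefixes the registry you pass. It edits files in place with GNU `sed -i -E`, so back up the manifests first. Images without any `/` are left alone, and image references passed as container arguments rather than `image:` fields (such as the config-reloader image given to prometheus-operator) are not touched.

```shell
#!/usr/bin/env bash
# List the distinct images referenced by image: fields in the given YAML files.
list_images() {
  grep -h 'image:' "$@" | awk '{print $NF}' | sort -u
}

# Rewrite "image: <repo path>/<name>:<tag>" to "image: <registry>/<name>:<tag>".
# Usage: retag_images <registry/project> file.yaml [...]   (GNU sed, edits in place)
retag_images() {
  local registry=$1
  shift
  sed -i -E "s#^([[:space:]]*-?[[:space:]]*image:[[:space:]]*).*/([^/[:space:]]+)[[:space:]]*\$#\1${registry}/\2#" "$@"
}
```

For example, `retag_images harbor.intra.com/prometheus *.yaml` followed by `list_images *.yaml` prints the names each original image must be tagged with (`docker tag`) and pushed to (`docker push`).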

2.2.3 Deploying Prometheus

Modify the Service so that Prometheus is exposed through a NodePort.

If you would rather not modify it, you can temporarily forward the port instead:

```shell
kubectl -n monitoring port-forward svc/prometheus-k8s 9090
```

prometheus-service.yaml

```yaml
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
  - name: reloader-web
    port: 8080
    targetPort: reloader-web
```

Deploy:

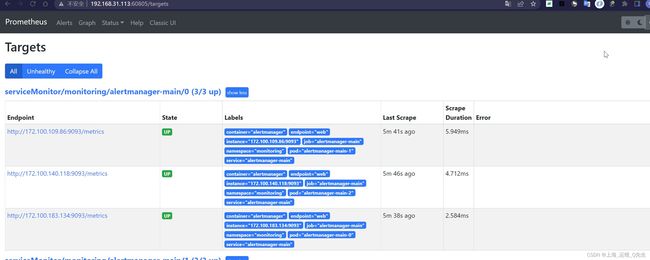

```text
root@k8s-master-01:/opt/k8s-data/yaml/kube-prometheus-release-0.10/manifests# kubectl apply -f .
service/alertmanager-main created
serviceaccount/alertmanager-main created
servicemonitor.monitoring.coreos.com/alertmanager-main created
clusterrole.rbac.authorization.k8s.io/blackbox-exporter created
clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created
configmap/blackbox-exporter-configuration created
deployment.apps/blackbox-exporter created
service/blackbox-exporter created
serviceaccount/blackbox-exporter created
...(remaining output omitted)
```

```text
root@k8s-master-01:/opt/k8s-data/yaml/kube-prometheus-release-0.10/manifests# kubectl get svc -n monitoring
NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                         AGE
alertmanager-main       ClusterIP   10.200.236.235   <none>        9093/TCP,8080/TCP               47m
alertmanager-operated   ClusterIP   None             <none>        9093/TCP,9094/TCP,9094/UDP      47m
blackbox-exporter       ClusterIP   10.200.16.237    <none>        9115/TCP,19115/TCP              47m
grafana                 ClusterIP   10.200.168.96    <none>        3000/TCP                        47m
kube-state-metrics      ClusterIP   None             <none>        8443/TCP,9443/TCP               47m
node-exporter           ClusterIP   None             <none>        9100/TCP                        47m
prometheus-adapter      ClusterIP   10.200.166.31    <none>        443/TCP                         47m
prometheus-k8s          NodePort    10.200.129.185   <none>        9090:60805/TCP,8080:52252/TCP   47m
prometheus-operated     ClusterIP   None             <none>        9090/TCP                        47m
prometheus-operator     ClusterIP   None             <none>        8443/TCP                        47m
```
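In this listing the PORT(S) column for `prometheus-k8s` reads `9090:60805/TCP,8080:52252/TCP`: service port 9090 is exposed on node port 60805. A hypothetical helper that picks out the node port for a given service port from such a value:

```shell
#!/usr/bin/env bash
# Extract the node port for a service port from a PORT(S) value such as
# "9090:60805/TCP,8080:52252/TCP". Returns 1 if there is no node port.
# Usage: nodeport_for <service-port> <ports-column>
nodeport_for() {
  local want=$1 entry
  local IFS=,
  for entry in $2; do
    # Each entry looks like "9090:60805/TCP" (ClusterIP entries lack the ':')
    if [ "${entry%%:*}" = "$want" ]; then
      entry=${entry#*:}
      printf '%s\n' "${entry%%/*}"
      return 0
    fi
  done
  return 1
}
```

With it, Prometheus is reachable at `http://<node-IP>:60805`. kubectl can also return the value directly: `kubectl -n monitoring get svc prometheus-k8s -o jsonpath='{.spec.ports[?(@.name=="web")].nodePort}'`.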

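2.2.4 Deploying Grafana

Modify the Service so that Grafana is exposed through a NodePort. Note that `nodePort: 33000` lies outside the default Kubernetes NodePort range (30000-32767); it is accepted here only because this cluster's kube-apiserver `--service-node-port-range` has been widened, as the node ports 60805 and 52252 in the listing above also show. On a cluster with the default range, choose a port inside it.

grafana-service.yaml

```yaml
spec:
  type: NodePort
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 33000
```

Apply the Grafana Service:

```shell
kubectl apply -f grafana-service.yaml
```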

```text
root@k8s-master-01:/opt/k8s-data/yaml/kube-prometheus-release-0.10/manifests# kubectl get svc -n monitoring
NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                         AGE
alertmanager-main       ClusterIP   10.200.236.235   <none>        9093/TCP,8080/TCP               51m
alertmanager-operated   ClusterIP   None             <none>        9093/TCP,9094/TCP,9094/UDP      51m
blackbox-exporter       ClusterIP   10.200.16.237    <none>        9115/TCP,19115/TCP              51m
grafana                 NodePort    10.200.168.96    <none>        3000:33000/TCP                  51m
kube-state-metrics      ClusterIP   None             <none>        8443/TCP,9443/TCP               51m
node-exporter           ClusterIP   None             <none>        9100/TCP                        51m
prometheus-adapter      ClusterIP   10.200.166.31    <none>        443/TCP                         51m
prometheus-k8s          NodePort    10.200.129.185   <none>        9090:60805/TCP,8080:52252/TCP   51m
prometheus-operated     ClusterIP   None             <none>        9090/TCP                        51m
prometheus-operator     ClusterIP   None             <none>        8443/TCP                        51m
```

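Grafana is now reachable at `http://<node-IP>:33000` (the default login is admin/admin). This completes the Operator-based deployment of Prometheus.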

2.3 Deploying Prometheus from Binaries

2.3.1 Installing Prometheus

2.3.1.1 Download the Binary

```shell
mkdir -p /apps
cd /apps
wget https://github.com/prometheus/prometheus/releases/download/v2.38.0/prometheus-2.38.0.linux-amd64.tar.gz
```
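The Prometheus and node_exporter download URLs in this section follow the same GitHub release naming pattern. A hypothetical helper that builds them from project, version and architecture, so that an upgrade only means changing one argument:

```shell
#!/usr/bin/env bash
# Build a Linux release tarball URL for a project under github.com/prometheus.
# Usage: release_url <project> <version> [arch]   (arch defaults to amd64)
release_url() {
  local project=$1 version=$2 arch=${3:-amd64}
  printf 'https://github.com/prometheus/%s/releases/download/v%s/%s-%s.linux-%s.tar.gz\n' \
    "$project" "$version" "$project" "$version" "$arch"
}
```

For example, `wget "$(release_url prometheus 2.38.0)"` downloads the same tarball as above. Each release also publishes a `sha256sums.txt` file that can be checked against the download with `sha256sum -c --ignore-missing sha256sums.txt`.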

2.3.1.2 Extract Prometheus

```shell
tar xf prometheus-2.38.0.linux-amd64.tar.gz
ln -sf /apps/prometheus-2.38.0.linux-amd64 /apps/prometheus
```

```text
root@prometheus-2:/apps# cd /apps/prometheus
root@prometheus-2:/apps/prometheus# ll
total 207312
drwxr-xr-x 4 3434 3434       169 Aug 29 15:21 ./
drwxr-xr-x 4 root root       118 Aug 29 15:22 ../
-rw-r--r-- 1 3434 3434     11357 Aug 16 21:42 LICENSE
-rw-r--r-- 1 3434 3434      3773 Aug 16 21:42 NOTICE
drwxr-xr-x 2 3434 3434        38 Aug 16 21:42 console_libraries/
drwxr-xr-x 2 3434 3434       173 Aug 16 21:42 consoles/
-rwxr-xr-x 1 3434 3434 110234973 Aug 16 21:26 prometheus*      # Prometheus main binary
-rw-r--r-- 1 3434 3434       934 Aug 16 21:42 prometheus.yml   # Prometheus main configuration file
-rwxr-xr-x 1 3434 3434 102028302 Aug 16 21:28 promtool*        # tool for checking Prometheus configuration files and metrics data
```

2.3.1.3 systemd Service File

/etc/systemd/system/prometheus.service

```ini
[Unit]
Description=Prometheus Server
Documentation=https://prometheus.io/docs/introduction/overview/
After=network.target

[Service]
Restart=on-failure
WorkingDirectory=/apps/prometheus/
ExecStart=/apps/prometheus/prometheus --config.file=/apps/prometheus/prometheus.yml

[Install]
WantedBy=multi-user.target
```

Start Prometheus:

```shell
systemctl daemon-reload
systemctl enable --now prometheus.service
```
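The Prometheus unit above and the node_exporter unit below share the same shape. As a sketch (the function name and its two parameters are hypothetical), both files can be generated from one template:

```shell
#!/usr/bin/env bash
# Print a minimal systemd service unit.
# Usage: render_unit <description> <ExecStart command line>
render_unit() {
  cat <<EOF
[Unit]
Description=$1
After=network.target

[Service]
Restart=on-failure
ExecStart=$2

[Install]
WantedBy=multi-user.target
EOF
}
```

For example, `render_unit "Prometheus Node Exporter" /apps/node_exporter/node_exporter > /etc/systemd/system/node-exporter.service` followed by `systemctl daemon-reload`. The template omits `WorkingDirectory`, which matters for Prometheus: its default `--storage.tsdb.path` is `data/`, relative to the working directory, so either keep `WorkingDirectory=/apps/prometheus/` or pass an absolute `--storage.tsdb.path` in the ExecStart line.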

2.3.2 Installing node-exporter from Binaries

2.3.2.1 Download node_exporter

```shell
mkdir -p /apps
cd /apps
wget https://github.com/prometheus/node_exporter/releases/download/v1.3.1/node_exporter-1.3.1.linux-amd64.tar.gz
```

2.3.2.2 Extract node_exporter

```shell
tar xf node_exporter-1.3.1.linux-amd64.tar.gz
ln -sf /apps/node_exporter-1.3.1.linux-amd64 /apps/node_exporter
```

2.3.2.3 systemd Service File

/etc/systemd/system/node-exporter.service

```ini
[Unit]
Description=Prometheus Node Exporter
After=network.target

[Service]
ExecStart=/apps/node_exporter/node_exporter

[Install]
WantedBy=multi-user.target
```

Start node_exporter:

```text
root@zookeeper-1:/apps/node_exporter# systemctl enable --now node-exporter.service
Created symlink /etc/systemd/system/multi-user.target.wants/node-exporter.service → /etc/systemd/system/node-exporter.service
```

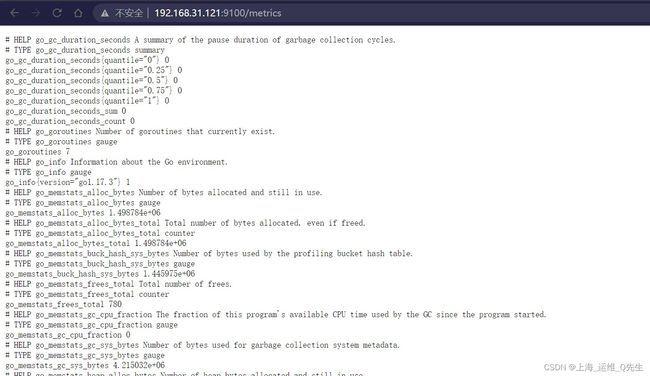

Confirm that node_exporter is listening on port 9100:

```text
ss -ntlup|grep 9100
tcp   LISTEN 0      4096      *:9100      *:*    users:(("node_exporter",pid=123757,fd=3))
```
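Besides checking the listening socket, you can confirm that the endpoint actually serves metrics. The hypothetical `has_metric` function reads exposition-format text on stdin and succeeds if a sample with the given metric name is present, with or without labels.

```shell
#!/usr/bin/env bash
# Succeed if stdin (Prometheus text format) contains a sample of metric <name>.
# Comment lines (# HELP / # TYPE) never match because they start with '#'.
# Usage: ... | has_metric <name>
has_metric() {
  local name=$1
  # The metric name must be followed by '{' (labels) or a space (value)
  grep -Eq "^${name}(\{| )"
}
```

In practice you would run, for example, `curl -s http://192.168.31.121:9100/metrics | has_metric node_load1 && echo ok`.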

The node's metrics can now be viewed in a browser at `http://<node-IP>:9100/metrics`:

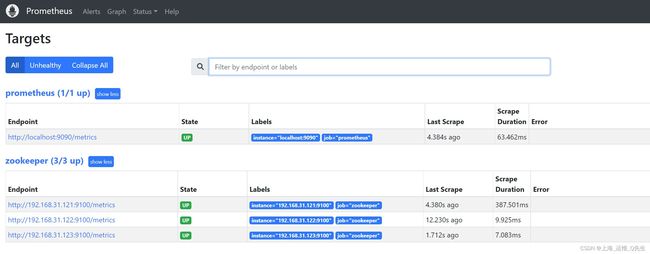

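2.3.3 Configuring Prometheus Monitoring

2.3.3.1 Add the Scrape Targets

```text
root@prometheus-2:/apps/prometheus# vi prometheus.yml
```

Append the following under the existing `scrape_configs:` section:

```yaml
  - job_name: "zookeeper"
    static_configs:
      - targets: ["192.168.31.121:9100","192.168.31.122:9100","192.168.31.123:9100"]
```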
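With many exporters, typing the targets list by hand is error-prone. The hypothetical helper below prints a job entry indented to match the default `scrape_configs` section of prometheus.yml; it is a sketch, so validate the edited file with `promtool check config prometheus.yml` before restarting.

```shell
#!/usr/bin/env bash
# Print a static scrape job for appending under scrape_configs.
# Usage: static_job <job_name> <host:port> [host:port ...]
static_job() {
  local job=$1
  shift
  local targets="" t
  for t in "$@"; do
    targets+="${targets:+,}\"$t\""
  done
  printf '  - job_name: "%s"\n    static_configs:\n      - targets: [%s]\n' "$job" "$targets"
}
```

`static_job zookeeper 192.168.31.12{1,2,3}:9100 >> prometheus.yml` appends the same block as above, assuming `scrape_configs` is still the last section of the file, as it is in the shipped default.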

Restart the service and confirm its status:

```text
root@prometheus-2:/apps/prometheus# systemctl restart prometheus.service
root@prometheus-2:/apps/prometheus# ss -ntlup|grep 9090
tcp   LISTEN 0      4096      *:9090      *:*    users:(("prometheus",pid=5198,fd=8))
```

Prometheus now shows that data is being collected from all three nodes (Status → Targets in the web UI).
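The same check can be scripted against the HTTP API: `GET /api/v1/targets` returns every active target with a `health` field of `up`, `down` or `unknown`. The hypothetical function below counts healthy targets with plain grep; it is a quick sketch, and a real JSON parser such as jq is the safer choice for anything more.

```shell
#!/usr/bin/env bash
# Count targets reporting "health": "up" in a /api/v1/targets JSON response on stdin.
# Prints 0 (and returns non-zero) when no target is healthy.
count_healthy_targets() {
  grep -oE '"health"[[:space:]]*:[[:space:]]*"up"' | grep -c .
}
```

For example, `curl -s http://localhost:9090/api/v1/targets | count_healthy_targets` should print 4 once all three ZooKeeper nodes are being scraped, assuming the default `prometheus` self-scrape job is still in the configuration.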