AudioSource and AudioTrack in WebRTC

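By design, an AudioSource in WebRTC is a source of audio that supplies PCM audio frames to the outside. Microphone capture, for example, produces PCM audio frames, so it ought to be an AudioSource. In WebRTC's actual implementation, however, the role of AudioSource differs from this design in several ways.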

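This article uses the code of WebRTC's sample application peerconnection_client to examine the roles of AudioSource and AudioTrack. When Conductor::InitializePeerConnection() initializes the PeerConnection, the AudioSource and AudioTrack are created and added in Conductor::AddTracks() (webrtc/src/examples/peerconnection/client/conductor.cc):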

void Conductor::AddTracks() {

if (!peer_connection_->GetSenders().empty()) {

return; // Already added tracks.

}

rtc::scoped_refptr<webrtc::AudioTrackInterface> audio_track(

peer_connection_factory_->CreateAudioTrack(

kAudioLabel, peer_connection_factory_->CreateAudioSource(

cricket::AudioOptions())));

auto result_or_error = peer_connection_->AddTrack(audio_track, {kStreamId});

if (!result_or_error.ok()) {

RTC_LOG(LS_ERROR) << "Failed to add audio track to PeerConnection: "

<< result_or_error.error().message();

}

rtc::scoped_refptr<CapturerTrackSource> video_device =

CapturerTrackSource::Create();

if (video_device) {

rtc::scoped_refptr<webrtc::VideoTrackInterface> video_track_(

peer_connection_factory_->CreateVideoTrack(kVideoLabel, video_device));

main_wnd_->StartLocalRenderer(video_track_);

result_or_error = peer_connection_->AddTrack(video_track_, {kStreamId});

if (!result_or_error.ok()) {

RTC_LOG(LS_ERROR) << "Failed to add video track to PeerConnection: "

<< result_or_error.error().message();

}

} else {

RTC_LOG(LS_ERROR) << "OpenVideoCaptureDevice failed";

}

main_wnd_->SwitchToStreamingUI();

}

The call stack that actually creates the LocalAudioSource is as follows:

#0 webrtc::LocalAudioSource::Create(cricket::AudioOptions const*) (audio_options=0x0) at ../../pc/local_audio_source.cc:20

#1 0x0000555556155b79 in webrtc::PeerConnectionFactory::CreateAudioSource(cricket::AudioOptions const&) (this=0x7fffe0004060, options=...)

at ../../pc/peer_connection_factory.cc:182

#2 0x000055555617a478 in webrtc::ReturnType >::Invoke (webrtc::PeerConnectionFactoryInterface::*)(cricket::AudioOptions const&), cricket::AudioOptions const>(webrtc::PeerConnectionFactoryInterface*, rtc::scoped_refptr (webrtc::PeerConnectionFactoryInterface::*)(cricket::AudioOptions const&), cricket::AudioOptions const&&) (this=0x7fffffffba30, c=0x7fffe0004060, m=&virtual table offset 88) at ../../pc/proxy.h:105

#3 0x0000555556175131 in webrtc::MethodCall, cricket::AudioOptions const&>::Invoke<0ul>(std::integer_sequence) (this=0x7fffffffba10) at ../../pc/proxy.h:153

#4 0x0000555556183b9c in webrtc::MethodCall, cricket::AudioOptions const&>::Run() (this=0x7fffffffba10) at ../../pc/proxy.h:146

#5 0x00005555560575f4 in rtc::Thread::QueuedTaskHandler::OnMessage(rtc::Message*) (this=0x5555589fc7a8, msg=0x7fffef7fdab0)

at ../../rtc_base/thread.cc:1042

The header file of LocalAudioSource (webrtc/src/pc/local_audio_source.h) is as follows:

namespace webrtc {

class LocalAudioSource : public Notifier<AudioSourceInterface> {

public:

// Creates an instance of LocalAudioSource.

static rtc::scoped_refptr<LocalAudioSource> Create(

const cricket::AudioOptions* audio_options);

SourceState state() const override { return kLive; }

bool remote() const override { return false; }

const cricket::AudioOptions options() const override { return options_; }

void AddSink(AudioTrackSinkInterface* sink) override {}

void RemoveSink(AudioTrackSinkInterface* sink) override {}

protected:

LocalAudioSource() {}

~LocalAudioSource() override {}

private:

void Initialize(const cricket::AudioOptions* audio_options);

cricket::AudioOptions options_;

};

} // namespace webrtc

The source file of LocalAudioSource (webrtc/src/pc/local_audio_source.cc) is as follows:

using webrtc::MediaSourceInterface;

namespace webrtc {

rtc::scoped_refptr<LocalAudioSource> LocalAudioSource::Create(

const cricket::AudioOptions* audio_options) {

auto source = rtc::make_ref_counted<LocalAudioSource>();

source->Initialize(audio_options);

return source;

}

void LocalAudioSource::Initialize(const cricket::AudioOptions* audio_options) {

if (!audio_options)

return;

options_ = *audio_options;

}

} // namespace webrtc

The header and source files are shown here to make one point: LocalAudioSource really does very little. It can essentially do nothing more than store an audio_options value.

Next, look at the interfaces that LocalAudioSource implements (webrtc/src/api/media_stream_interface.h):

// Base class for sources. A MediaStreamTrack has an underlying source that

// provides media. A source can be shared by multiple tracks.

class RTC_EXPORT MediaSourceInterface : public rtc::RefCountInterface,

public NotifierInterface {

public:

enum SourceState { kInitializing, kLive, kEnded, kMuted };

virtual SourceState state() const = 0;

virtual bool remote() const = 0;

protected:

~MediaSourceInterface() override = default;

};

.......

// Interface for receiving audio data from a AudioTrack.

class AudioTrackSinkInterface {

public:

virtual void OnData(const void* audio_data,

int bits_per_sample,

int sample_rate,

size_t number_of_channels,

size_t number_of_frames) {

RTC_NOTREACHED() << "This method must be overridden, or not used.";

}

// In this method, `absolute_capture_timestamp_ms`, when available, is

// supposed to deliver the timestamp when this audio frame was originally

// captured. This timestamp MUST be based on the same clock as

// rtc::TimeMillis().

virtual void OnData(const void* audio_data,

int bits_per_sample,

int sample_rate,

size_t number_of_channels,

size_t number_of_frames,

absl::optional<int64_t> absolute_capture_timestamp_ms) {

// TODO(bugs.webrtc.org/10739): Deprecate the old OnData and make this one

// pure virtual.

return OnData(audio_data, bits_per_sample, sample_rate, number_of_channels,

number_of_frames);

}

// Returns the number of channels encoded by the sink. This can be less than

// the number_of_channels if down-mixing occur. A value of -1 means an unknown

// number.

virtual int NumPreferredChannels() const { return -1; }

protected:

virtual ~AudioTrackSinkInterface() {}

};

class RTC_EXPORT AudioSourceInterface : public MediaSourceInterface {

public:

class AudioObserver {

public:

virtual void OnSetVolume(double volume) = 0;

protected:

virtual ~AudioObserver() {}

};

// TODO(deadbeef): Makes all the interfaces pure virtual after they're

// implemented in chromium.

// Sets the volume of the source. `volume` is in the range of [0, 10].

// TODO(tommi): This method should be on the track and ideally volume should

// be applied in the track in a way that does not affect clones of the track.

virtual void SetVolume(double volume) {}

// Registers/unregisters observers to the audio source.

virtual void RegisterAudioObserver(AudioObserver* observer) {}

virtual void UnregisterAudioObserver(AudioObserver* observer) {}

// TODO(tommi): Make pure virtual.

virtual void AddSink(AudioTrackSinkInterface* sink) {}

virtual void RemoveSink(AudioTrackSinkInterface* sink) {}

// Returns options for the AudioSource.

// (for some of the settings this approach is broken, e.g. setting

// audio network adaptation on the source is the wrong layer of abstraction).

virtual const cricket::AudioOptions options() const;

};

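The interface reflects the design intent: a LocalAudioSource should be a component that obtains or generates PCM audio data by itself and pushes it out to its sinks.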
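To make that intent concrete, here is a minimal standalone sketch of a source that owns PCM data and pushes it to registered sinks. It does not use the WebRTC headers: `AudioSink`, `PcmAudioSource`, `CountingSink`, and `DemoDeliver` are hypothetical names, and the sink callback is a reduced version of `AudioTrackSinkInterface::OnData()`.

```cpp
#include <stddef.h>
#include <stdint.h>

#include <algorithm>
#include <vector>

namespace sketch_pcm_source {

// Hypothetical, simplified counterpart of AudioTrackSinkInterface.
class AudioSink {
 public:
  virtual ~AudioSink() = default;
  virtual void OnData(const int16_t* audio_data, int sample_rate,
                      size_t number_of_channels, size_t number_of_frames) = 0;
};

// Hypothetical source that generates its own PCM data and pushes it to every
// registered sink, which is what the interface suggests a source should do.
class PcmAudioSource {
 public:
  void AddSink(AudioSink* sink) { sinks_.push_back(sink); }
  void RemoveSink(AudioSink* sink) {
    sinks_.erase(std::remove(sinks_.begin(), sinks_.end(), sink),
                 sinks_.end());
  }
  // Delivers one 10 ms mono frame of silence at `sample_rate` to all sinks.
  void DeliverSilence(int sample_rate) {
    std::vector<int16_t> pcm(static_cast<size_t>(sample_rate / 100), 0);
    for (AudioSink* sink : sinks_) {
      sink->OnData(pcm.data(), sample_rate, 1, pcm.size());
    }
  }

 private:
  std::vector<AudioSink*> sinks_;
};

// Sink that counts the frames (samples per channel) it has received.
class CountingSink : public AudioSink {
 public:
  void OnData(const int16_t*, int, size_t, size_t number_of_frames) override {
    total_frames += number_of_frames;
  }
  size_t total_frames = 0;
};

// The sink is attached for the first delivery only; the second delivery
// happens after RemoveSink() and must not reach it.
inline size_t DemoDeliver() {
  PcmAudioSource source;
  CountingSink sink;
  source.AddSink(&sink);
  source.DeliverSilence(48000);
  source.RemoveSink(&sink);
  source.DeliverSilence(48000);
  return sink.total_frames;
}

}  // namespace sketch_pcm_source
```

Compare this with LocalAudioSource above, whose AddSink() and RemoveSink() are empty: it accepts sinks but never calls them.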

AudioTrack connects an AudioSource to the pipeline. As seen earlier, Conductor::AddTracks() creates an AudioTrack immediately after creating the AudioSource. The call stack for creating the AudioTrack is as follows:

#0 webrtc::AudioTrack::Create(std::__cxx11::basic_string, std::allocator > const&, rtc::scoped_refptr const&)

(id="Dp\033VUU\000\000jp\033VUU\000\000\270p\033VUU\000\000\376p\033VUU\000\000\362j\033VUU\000\000\006k\033VUU\000\000tB\002VUU\000\000\210B\002VUU\000\000\234B\002VUU\000\000Lk\033VUU\000\000`k\033VUU\000\000\032k\033VUU\000\000\070q\033VUU\000\000\320q\033VUU\000\000\226r\033VUU\000\000\370\377\377\377\377\377\377\377\070\067&XUU\000\000\303q\033VUU\000\000\211r\033VUU\000\000\364p\033VUU\000\000-q\033VUU\000\000\372\277qWUU\000\000\373\277qWUU\000\000\000\300qWUU\000\000\b\300qWUU\000\000"..., source=...) at ../../pc/audio_track.cc:21

#1 0x0000555556156c6f in webrtc::PeerConnectionFactory::CreateAudioTrack(std::__cxx11::basic_string, std::allocator > const&, webrtc::AudioSourceInterface*) (this=0x7fffe0004060, id="audio_label", source=0x7fffe000d4f0) at ../../pc/peer_connection_factory.cc:282

#2 0x000055555617a76c in webrtc::ReturnType >::Invoke (webrtc::PeerConnectionFactoryInterface::*)(std::__cxx11::basic_string, std::allocator > const&, webrtc::AudioSourceInterface*), std::__cxx11::basic_string, std::allocator > const, webrtc::AudioSourceInterface*>(webrtc::PeerConnectionFactoryInterface*, rtc::scoped_refptr (webrtc::PeerConnectionFactoryInterface::*)(std::__cxx11::basic_string, std::allocator > const&, webrtc::AudioSourceInterface*), std::__cxx11::basic_string, std::allocator > const&&, webrtc::AudioSourceInterface*&&) (this=0x7fffffffc240, c=0x7fffe0004060, m=&virtual table offset 104) at ../../pc/proxy.h:105

The complete code of AudioTrack (webrtc/src/pc/audio_track.cc) is as follows:

namespace webrtc {

// static

rtc::scoped_refptr<AudioTrack> AudioTrack::Create(

const std::string& id,

const rtc::scoped_refptr<AudioSourceInterface>& source) {

return rtc::make_ref_counted<AudioTrack>(id, source);

}

AudioTrack::AudioTrack(const std::string& label,

const rtc::scoped_refptr<AudioSourceInterface>& source)

: MediaStreamTrack(label), audio_source_(source) {

if (audio_source_) {

audio_source_->RegisterObserver(this);

OnChanged();

}

}

AudioTrack::~AudioTrack() {

RTC_DCHECK_RUN_ON(&thread_checker_);

set_state(MediaStreamTrackInterface::kEnded);

if (audio_source_)

audio_source_->UnregisterObserver(this);

}

std::string AudioTrack::kind() const {

return kAudioKind;

}

AudioSourceInterface* AudioTrack::GetSource() const {

// Callable from any thread.

return audio_source_.get();

}

void AudioTrack::AddSink(AudioTrackSinkInterface* sink) {

RTC_DCHECK_RUN_ON(&thread_checker_);

if (audio_source_)

audio_source_->AddSink(sink);

}

void AudioTrack::RemoveSink(AudioTrackSinkInterface* sink) {

RTC_DCHECK_RUN_ON(&thread_checker_);

if (audio_source_)

audio_source_->RemoveSink(sink);

}

void AudioTrack::OnChanged() {

RTC_DCHECK_RUN_ON(&thread_checker_);

if (audio_source_->state() == MediaSourceInterface::kEnded) {

set_state(kEnded);

} else {

set_state(kLive);

}

}

} // namespace webrtc
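The observer relationship in this file can be reduced to a few lines. The sketch below is standalone and uses hypothetical types (`Source`, `Track`, `Observer`), not the WebRTC classes. It only illustrates how a track registers itself with its source and mirrors the source state in OnChanged().

```cpp
#include <vector>

namespace sketch_track {

enum class SourceState { kLive, kEnded };
enum class TrackState { kLive, kEnded };

// Hypothetical counterpart of ObserverInterface.
class Observer {
 public:
  virtual ~Observer() = default;
  virtual void OnChanged() = 0;
};

// Hypothetical source whose state can change; it notifies its observers.
class Source {
 public:
  void RegisterObserver(Observer* observer) { observers_.push_back(observer); }
  SourceState state() const { return state_; }
  void End() {
    state_ = SourceState::kEnded;
    for (Observer* observer : observers_) observer->OnChanged();
  }

 private:
  SourceState state_ = SourceState::kLive;
  std::vector<Observer*> observers_;
};

// Hypothetical track that follows the source state, in the same way as
// AudioTrack::OnChanged() in the listing above.
class Track : public Observer {
 public:
  explicit Track(Source* source) : source_(source) {
    if (source_) {
      source_->RegisterObserver(this);
      OnChanged();
    }
  }
  void OnChanged() override {
    state_ = source_->state() == SourceState::kEnded ? TrackState::kEnded
                                                      : TrackState::kLive;
  }
  TrackState state() const { return state_; }

 private:
  Source* source_;
  TrackState state_ = TrackState::kLive;
};

// Returns true if the track is live at first and ended once the source ends.
inline bool DemoTrackFollowsSource() {
  Source source;
  Track track(&source);
  bool live_before = track.state() == TrackState::kLive;
  source.End();
  return live_before && track.state() == TrackState::kEnded;
}

}  // namespace sketch_track
```

Unlike the real AudioTrack, this sketch never unregisters the observer, so the track must not outlive its use by the source.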

Conductor::AddTracks() also adds the AudioTrack to the PeerConnection:

#0 webrtc::AudioRtpSender::AttachTrack() (this=0x0) at ../../pc/rtp_sender.cc:501

#1 0x0000555556d7bc40 in webrtc::RtpSenderBase::SetTrack(webrtc::MediaStreamTrackInterface*) (this=0x7fffe0014568, track=0x7fffe000ab70)

at ../../pc/rtp_sender.cc:254

#2 0x0000555556203da4 in webrtc::ReturnType::Invoke(webrtc::RtpSenderInterface*, bool (webrtc::RtpSenderInterface::*)(webrtc::MediaStreamTrackInterface*), webrtc::MediaStreamTrackInterface*&&) (this=0x7fffef7fca20, c=0x7fffe0014568, m=&virtual table offset 32) at ../../pc/proxy.h:105

#3 0x0000555556202fa7 in webrtc::MethodCall::Invoke<0ul>(std::integer_sequence) (this=0x7fffef7fca00) at ../../pc/proxy.h:153

#4 0x0000555556201061 in webrtc::MethodCall::Marshal(rtc::Location const&, rtc::Thread*) (this=0x7fffef7fca00, posted_from=..., t=0x55555852ef30) at ../../pc/proxy.h:136

#5 0x00005555561fe79a in webrtc::RtpSenderProxyWithInternal::SetTrack(webrtc::MediaStreamTrackInterface*)

(this=0x7fffe0007e40, a1=0x7fffe000ab70) at ../../pc/rtp_sender_proxy.h:27

#6 0x0000555556d988ca in webrtc::RtpTransmissionManager::CreateSender(cricket::MediaType, std::__cxx11::basic_string, std::allocator > const&, rtc::scoped_refptr, std::vector, std::allocator >, std::allocator, std::allocator > > > const&, std::vector > const&)

(this=0x7fffe0014150, media_type=cricket::MEDIA_TYPE_AUDIO, id="audio_label", track=..., stream_ids=std::vector of length 1, capacity 1 = {...}, send_encodings=std::vector of length 0, capacity 0) at ../../pc/rtp_transmission_manager.cc:229

#7 0x0000555556d97fc5 in webrtc::RtpTransmissionManager::AddTrackUnifiedPlan(rtc::scoped_refptr, std::vector, std::allocator >, std::allocator, std::allocator > > > const&) (this=0x7fffe0014150, track=..., stream_ids=std::vector of length 1, capacity 1 = {...}) at ../../pc/rtp_transmission_manager.cc:196

#8 0x0000555556d96232 in webrtc::RtpTransmissionManager::AddTrack(rtc::scoped_refptr, std::vector, std::allocator >, std::allocator, std::allocator > > > const&) (this=0x7fffe0014150, track=..., stream_ids=std::vector of length 1, capacity 1 = {...}) at ../../pc/rtp_transmission_manager.cc:112

#9 0x00005555561c0386 in webrtc::PeerConnection::AddTrack(rtc::scoped_refptr, std::vector, std::allocator >, std::allocator, std::allocator > > > const&)

(this=0x7fffe0004590, track=..., stream_ids=std::vector of length 1, capacity 1 = {...}) at ../../pc/peer_connection.cc:834

When RtpSenderBase::SetTrack() runs, it calls SetSend() if sending can start; otherwise it does not:

bool RtpSenderBase::SetTrack(MediaStreamTrackInterface* track) {

TRACE_EVENT0("webrtc", "RtpSenderBase::SetTrack");

if (stopped_) {

RTC_LOG(LS_ERROR) << "SetTrack can't be called on a stopped RtpSender.";

return false;

}

if (track && track->kind() != track_kind()) {

RTC_LOG(LS_ERROR) << "SetTrack with " << track->kind()

<< " called on RtpSender with " << track_kind()

<< " track.";

return false;

}

// Detach from old track.

if (track_) {

DetachTrack();

track_->UnregisterObserver(this);

RemoveTrackFromStats();

}

// Attach to new track.

bool prev_can_send_track = can_send_track();

// Keep a reference to the old track to keep it alive until we call SetSend.

rtc::scoped_refptr<MediaStreamTrackInterface> old_track = track_;

track_ = track;

if (track_) {

track_->RegisterObserver(this);

AttachTrack();

}

// Update channel.

if (can_send_track()) {

SetSend();

AddTrackToStats();

} else if (prev_can_send_track) {

ClearSend();

}

attachment_id_ = (track_ ? GenerateUniqueId() : 0);

return true;

}
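The decision at the end of SetTrack() can be illustrated with a small standalone sketch. It assumes that can_send_track() is true only when the sender has both a track and a non-zero SSRC. That condition is not shown in the listing above, so treat it as an assumption. `Sender` and `DemoSenderLog` are hypothetical names.

```cpp
#include <stdint.h>

#include <string>
#include <vector>

namespace sketch_sender {

// Hypothetical sender that records whether SetSend() or ClearSend() would run.
class Sender {
 public:
  void SetTrack(bool has_track) {
    bool prev_can_send = can_send_track();
    has_track_ = has_track;
    Update(prev_can_send);
  }
  void SetSsrc(uint32_t ssrc) {
    bool prev_can_send = can_send_track();
    ssrc_ = ssrc;
    Update(prev_can_send);
  }
  const std::vector<std::string>& log() const { return log_; }

 private:
  // Assumption: sending needs both a track and a non-zero SSRC.
  bool can_send_track() const { return has_track_ && ssrc_ != 0; }

  // Same branch structure as the "Update channel" step in SetTrack().
  void Update(bool prev_can_send) {
    if (can_send_track()) {
      log_.push_back("SetSend");
    } else if (prev_can_send) {
      log_.push_back("ClearSend");
    }
  }

  bool has_track_ = false;
  uint32_t ssrc_ = 0;
  std::vector<std::string> log_;
};

inline std::vector<std::string> DemoSenderLog() {
  Sender sender;
  sender.SetTrack(true);        // No SSRC yet: neither call is made.
  sender.SetSsrc(1129908154u);  // Track and SSRC present: SetSend.
  sender.SetTrack(false);       // Track removed: ClearSend.
  return sender.log();
}

}  // namespace sketch_sender
```

Under this assumption, adding a track before any SSRC is known only attaches the track. Sending starts later, once the SSRC is set.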

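When sending actually needs to start, AudioRtpSender::SetSend() is called. In the stack below this happens after a session description has been created successfully (Conductor::OnSuccess()) and is applied through SetLocalDescription(), which sets the sender's SSRC: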

#0 webrtc::AudioRtpSender::SetSend() (this=0x3000000020) at ../../pc/rtp_sender.cc:523

#1 0x0000555556d7c3c8 in webrtc::RtpSenderBase::SetSsrc(unsigned int) (this=0x7fffc8013ce8, ssrc=1129908154) at ../../pc/rtp_sender.cc:280

#2 0x000055555624c79a in webrtc::SdpOfferAnswerHandler::ApplyLocalDescription(std::unique_ptr >, std::map, std::allocator >, cricket::ContentGroup const*, std::less, std::allocator > >, std::allocator, std::allocator > const, cricket::ContentGroup const*> > > const&)

(this=0x7fffc8004ae0, desc=std::unique_ptr = {...}, bundle_groups_by_mid=std::map with 2 elements = {...})

at ../../pc/sdp_offer_answer.cc:1438

#3 0x000055555625112c in webrtc::SdpOfferAnswerHandler::DoSetLocalDescription(std::unique_ptr >, rtc::scoped_refptr)

(this=0x7fffc8004ae0, desc=std::unique_ptr = {...}, observer=...) at ../../pc/sdp_offer_answer.cc:1934

#4 0x000055555624a0df in webrtc::SdpOfferAnswerHandler::)>::operator()(std::function)

(__closure=0x7fffcfbf9f90, operations_chain_callback=...) at ../../pc/sdp_offer_answer.cc:1159

#5 0x00005555562816e9 in rtc::rtc_operations_chain_internal::OperationWithFunctor)> >::Run(void) (this=0x7fffc801ba30)

at ../../rtc_base/operations_chain.h:71

#6 0x00005555562787e8 in rtc::OperationsChain::ChainOperation)> >(webrtc::SdpOfferAnswerHandler::)> &&)

(this=0x7fffc8004e00, functor=...) at ../../rtc_base/operations_chain.h:154

#7 0x000055555624a369 in webrtc::SdpOfferAnswerHandler::SetLocalDescription(webrtc::SetSessionDescriptionObserver*, webrtc::SessionDescriptionInterface*)

(this=0x7fffc8004ae0, observer=0x7fffc8018ae0, desc_ptr=0x7fffc801dc70) at ../../pc/sdp_offer_answer.cc:1143

#8 0x00005555561cb811 in webrtc::PeerConnection::SetLocalDescription(webrtc::SetSessionDescriptionObserver*, webrtc::SessionDescriptionInterface*)

(this=0x7fffc8003d10, observer=0x7fffc8018ae0, desc_ptr=0x7fffc801dc70) at ../../pc/peer_connection.cc:1336

#9 0x0000555556178768 in webrtc::ReturnType::Invoke(webrtc::PeerConnectionInterface*, void (webrtc::PeerConnectionInterface::*)(webrtc::SetSessionDescriptionObserver*, webrtc::SessionDescriptionInterface*), webrtc::SetSessionDescriptionObserver*&&, webrtc::SessionDescriptionInterface*&&) (this=0x7fffcfbfa3e0, c=0x7fffc8003d10, m=&virtual table offset 296) at ../../pc/proxy.h:119

#10 0x000055555617412f in webrtc::MethodCall::Invoke<0ul, 1ul>(std::integer_sequence) (this=0x7fffcfbfa3c0) at ../../pc/proxy.h:153

#11 0x000055555616f59d in webrtc::MethodCall::Marshal(rtc::Location const&, rtc::Thread*) (this=0x7fffcfbfa3c0, posted_from=..., t=0x555558758c00) at ../../pc/proxy.h:136

#12 0x0000555556167f64 in webrtc::PeerConnectionProxyWithInternal::SetLocalDescription(webrtc::SetSessionDescriptionObserver*, webrtc::SessionDescriptionInterface*) (this=0x7fffc8004600, a1=0x7fffc8018ae0, a2=0x7fffc801dc70) at ../../pc/peer_connection_proxy.h:109

#13 0x00005555557a5692 in Conductor::OnSuccess(webrtc::SessionDescriptionInterface*) (this=0x5555589cf4e0, desc=0x7fffc801dc70)

at ../../examples/peerconnection/client/conductor.cc:551

#14 0x00005555562848ef in webrtc::CreateSessionDescriptionObserverOperationWrapper::OnSuccess(webrtc::SessionDescriptionInterface*)

(this=0x5555587591e0, desc=0x7fffc801dc70) at ../../pc/sdp_offer_answer.cc:845

#15 0x00005555562ced1f in webrtc::WebRtcSessionDescriptionFactory::OnMessage(rtc::Message*) (this=0x7fffc8005040, msg=0x7fffcfbfaab0)

at ../../pc/webrtc_session_description_factory.cc:306

#16 0x0000555556055398 in rtc::Thread::Dispatch(rtc::Message*) (this=0x555558758c00, pmsg=0x7fffcfbfaab0) at ../../rtc_base/thread.cc:711

The code of AudioRtpSender::SetSend() is as follows:

void AudioRtpSender::SetSend() {

RTC_DCHECK(!stopped_);

RTC_DCHECK(can_send_track());

if (!media_channel_) {

RTC_LOG(LS_ERROR) << "SetAudioSend: No audio channel exists.";

return;

}

cricket::AudioOptions options;

#if !defined(WEBRTC_CHROMIUM_BUILD) && !defined(WEBRTC_WEBKIT_BUILD)

// TODO(tommi): Remove this hack when we move CreateAudioSource out of

// PeerConnection. This is a bit of a strange way to apply local audio

// options since it is also applied to all streams/channels, local or remote.

if (track_->enabled() && audio_track()->GetSource() &&

!audio_track()->GetSource()->remote()) {

options = audio_track()->GetSource()->options();

}

#endif

// `track_->enabled()` hops to the signaling thread, so call it before we hop

// to the worker thread or else it will deadlock.

bool track_enabled = track_->enabled();

bool success = worker_thread_->Invoke<bool>(RTC_FROM_HERE, [&] {

return voice_media_channel()->SetAudioSend(ssrc_, track_enabled, &options,

sink_adapter_.get());

});

if (!success) {

RTC_LOG(LS_ERROR) << "SetAudioSend: ssrc is incorrect: " << ssrc_;

}

}

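AudioRtpSender::SetSend() fetches the audio options from the AudioSource, checks whether the AudioTrack is enabled, and hands these values, together with the audio source (sink_adapter_ in the code above), to the VoiceMediaChannel. As described in the article "WebRTC 音频发送和接收处理过程" (WebRTC audio send and receive processing), the VoiceMediaChannel is actually a WebRtcVoiceMediaChannel. The code of WebRtcVoiceMediaChannel::SetAudioSend() is as follows: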

bool WebRtcVoiceMediaChannel::SetAudioSend(uint32_t ssrc,

bool enable,

const AudioOptions* options,

AudioSource* source) {

RTC_DCHECK_RUN_ON(worker_thread_);

// TODO(solenberg): The state change should be fully rolled back if any one of

// these calls fail.

if (!SetLocalSource(ssrc, source)) {

return false;

}

if (!MuteStream(ssrc, !enable)) {

return false;

}

if (enable && options) {

return SetOptions(*options);

}

return true;

}
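The order of operations in SetAudioSend() can be mimicked with a standalone sketch: attach the source, mute or unmute the stream, and apply the options only when sending is enabled. `VoiceChannel`, `SendStream`, and the single-field `AudioOptions` are hypothetical stand-ins. Failing on an unknown SSRC is an assumption about what SetLocalSource() does.

```cpp
#include <stdint.h>

#include <map>
#include <optional>

namespace sketch_channel {

// Hypothetical stand-in for cricket::AudioOptions with a single field.
struct AudioOptions {
  std::optional<bool> echo_cancellation;
};

struct SendStream {
  const void* source = nullptr;
  bool muted = false;
};

class VoiceChannel {
 public:
  void AddSendStream(uint32_t ssrc) { streams_[ssrc] = SendStream(); }

  // Same order as the listing above: source first, then mute state, then
  // options, which are applied only if `enable` is true.
  bool SetAudioSend(uint32_t ssrc, bool enable, const AudioOptions* options,
                    const void* source) {
    auto it = streams_.find(ssrc);
    if (it == streams_.end()) return false;  // Assumed failure: unknown SSRC.
    it->second.source = source;
    it->second.muted = !enable;
    if (enable && options) options_ = *options;
    return true;
  }

  bool muted(uint32_t ssrc) const { return streams_.at(ssrc).muted; }
  const AudioOptions& options() const { return options_; }

 private:
  std::map<uint32_t, SendStream> streams_;
  AudioOptions options_;
};

}  // namespace sketch_channel
```

With this sketch, calling SetAudioSend() with enable set to false mutes the stream and leaves the channel options untouched. A later call with enable set to true unmutes it and applies the options.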

The SetLocalSource() call seen above executes as follows:

#0 webrtc::LocalAudioSinkAdapter::SetSink(cricket::AudioSource::Sink*) (this=0x7fffc40ace80, sink=0x7fffcebf9700) at ../../pc/rtp_sender.cc:416

#1 0x00005555560f6140 in cricket::WebRtcVoiceMediaChannel::WebRtcAudioSendStream::SetSource(cricket::AudioSource*)

(this=0x7fffc40ace30, source=0x7fffc8007068) at ../../media/engine/webrtc_voice_engine.cc:980

#2 0x00005555560e91fe in cricket::WebRtcVoiceMediaChannel::SetLocalSource(unsigned int, cricket::AudioSource*)

(this=0x7fffc4091b90, ssrc=1129908154, source=0x7fffc8007068) at ../../media/engine/webrtc_voice_engine.cc:2084

#3 0x00005555560e6141 in cricket::WebRtcVoiceMediaChannel::SetAudioSend(unsigned int, bool, cricket::AudioOptions const*, cricket::AudioSource*)

(this=0x7fffc4091b90, ssrc=1129908154, enable=true, options=0x7fffcfbf96d0, source=0x7fffc8007068) at ../../media/engine/webrtc_voice_engine.cc:1905

#4 0x0000555556d7e93f in webrtc::AudioRtpSender::::operator()(void) const (__closure=0x7fffcfbf96b0) at ../../pc/rtp_sender.cc:545

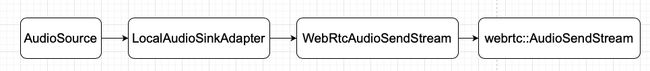

This is where the complete audio data processing pipeline is assembled.

Now look at the code that connects each pair of adjacent nodes in the pipeline. AudioSource and LocalAudioSinkAdapter are connected by:

void AudioRtpSender::AttachTrack() {

RTC_DCHECK(track_);

cached_track_enabled_ = track_->enabled();

audio_track()->AddSink(sink_adapter_.get());

}

LocalAudioSinkAdapter and WebRtcAudioSendStream are connected by:

class WebRtcVoiceMediaChannel::WebRtcAudioSendStream

: public AudioSource::Sink {

public:

. . . . . .

void SetSource(AudioSource* source) {

RTC_DCHECK_RUN_ON(&worker_thread_checker_);

RTC_DCHECK(source);

if (source_) {

RTC_DCHECK(source_ == source);

return;

}

source->SetSink(this);

source_ = source;

UpdateSendState();

}

WebRtcAudioSendStream and webrtc::AudioSendStream are connected by:

class WebRtcVoiceMediaChannel::WebRtcAudioSendStream

: public AudioSource::Sink {

public:

. . . . . .

void OnData(const void* audio_data,

int bits_per_sample,

int sample_rate,

size_t number_of_channels,

size_t number_of_frames,

absl::optional<int64_t> absolute_capture_timestamp_ms) override {

RTC_DCHECK_EQ(16, bits_per_sample);

RTC_CHECK_RUNS_SERIALIZED(&audio_capture_race_checker_);

RTC_DCHECK(stream_);

std::unique_ptr<webrtc::AudioFrame> audio_frame(new webrtc::AudioFrame());

audio_frame->UpdateFrame(

audio_frame->timestamp_, static_cast<const int16_t*>(audio_data),

number_of_frames, sample_rate, audio_frame->speech_type_,

audio_frame->vad_activity_, number_of_channels);

// TODO(bugs.webrtc.org/10739): add dcheck that

// `absolute_capture_timestamp_ms` always receives a value.

if (absolute_capture_timestamp_ms) {

audio_frame->set_absolute_capture_timestamp_ms(

*absolute_capture_timestamp_ms);

}

stream_->SendAudioData(std::move(audio_frame));

}
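The three connections above form a chain of sinks. The following standalone sketch shows the same shape with hypothetical types. A single `Sink` interface stands in for both AudioTrackSinkInterface and cricket::AudioSource::Sink, `SinkAdapter` plays the role of LocalAudioSinkAdapter, and `SendStream` plays the role of WebRtcAudioSendStream, copying each callback's PCM into a simplified `Frame`.

```cpp
#include <stddef.h>
#include <stdint.h>

#include <vector>

namespace sketch_pipeline {

// Hypothetical sink interface shared by all nodes in this sketch.
class Sink {
 public:
  virtual ~Sink() = default;
  virtual void OnData(const int16_t* data, int sample_rate, size_t channels,
                      size_t frames) = 0;
};

// Receives data from the track side and forwards it to whichever sink is
// currently set; data is dropped while no sink is set.
class SinkAdapter : public Sink {
 public:
  void SetSink(Sink* sink) { sink_ = sink; }
  void OnData(const int16_t* data, int sample_rate, size_t channels,
              size_t frames) override {
    if (sink_) sink_->OnData(data, sample_rate, channels, frames);
  }

 private:
  Sink* sink_ = nullptr;
};

// Simplified audio frame: interleaved 16-bit samples plus their layout.
struct Frame {
  int sample_rate = 0;
  size_t channels = 0;
  std::vector<int16_t> samples;
};

// Copies each callback's PCM into a Frame and "sends" it by storing it.
class SendStream : public Sink {
 public:
  void OnData(const int16_t* data, int sample_rate, size_t channels,
              size_t frames) override {
    Frame frame;
    frame.sample_rate = sample_rate;
    frame.channels = channels;
    frame.samples.assign(data, data + frames * channels);
    sent.push_back(frame);
  }
  std::vector<Frame> sent;
};

// Pushes stereo data through adapter -> send stream and returns the number of
// samples in the single frame that should arrive.
inline size_t DemoPipeline() {
  SinkAdapter adapter;
  SendStream stream;
  const int16_t pcm[4] = {1, 2, 3, 4};  // 2 frames x 2 channels
  adapter.OnData(pcm, 48000, 2, 2);     // No sink yet: dropped.
  adapter.SetSink(&stream);
  adapter.OnData(pcm, 48000, 2, 2);     // Forwarded to the send stream.
  return stream.sent.size() == 1 ? stream.sent[0].samples.size() : 0;
}

}  // namespace sketch_pipeline
```

The chain only carries data if something upstream calls OnData() on the adapter.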

Yet we saw earlier that LocalAudioSource provides no data to send at all. What is going on?

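The pipeline shown above is the abstract audio data processing pipeline. When we want to supply our own audio data and send it through WebRTC, for example audio decoded from a media file such as an mp4, we can define a suitable AudioSource implementation ourselves. For the microphone, however, the part of the pipeline before webrtc::AudioSendStream consists of components such as AudioDeviceModule and AudioTransportImpl. The more detailed shape of that pipeline is described in "WebRTC 音频发送和接收处理过程" (WebRTC audio send and receive processing).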

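Returning to the code of WebRtcVoiceMediaChannel::SetAudioSend(), AudioSource clearly does have one important responsibility: passing audio options. The user passes audio options through the AudioSource to WebRtcVoiceEngine to control the behavior of certain modules, such as echo cancellation and noise suppression in the APM. In addition, the AudioSource can be used to control stopping and restarting the sending of the audio stream.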