Building a Logging and Monitoring Platform on K8s

Deploy Grafana, Loki, and Promtail on Kubernetes.

Credit to the original author:

https://zhuanlan.zhihu.com/p/532984838

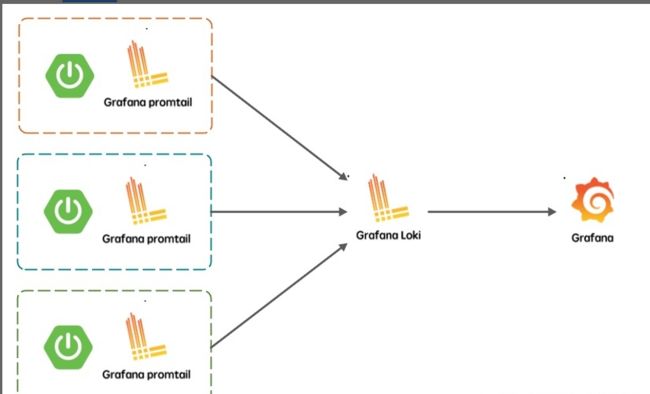

Deployment architecture

- Loki is the main service; it stores logs and handles queries.

- Promtail is the agent; it collects logs and ships them to Loki.

- Grafana provides the UI.

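Install Promtail on each application server to collect logs and send them to Loki for storage. You can then add Loki as a data source in the Grafana UI and query the logs there. If a single Loki server cannot keep up, you can deploy several Loki instances for storage and querying. As a logging system, this stack is not limited to querying and analyzing logs: it can also monitor them and raise alerts.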
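To make the data flow concrete, the sketch below does by hand what Promtail does automatically: it builds a request for Loki's HTTP push endpoint (`/loki/api/v1/push`, the same path passed to Promtail in the DaemonSet arguments) and a `query_range` URL of the kind Grafana issues when you run a LogQL query. The base URL, the `manual-test` label, and the helper names are assumptions chosen for illustration; they are not part of the original deployment scripts.

```python
import json
import time
import urllib.parse
import urllib.request

# Assumption: Loki is reachable on the NodePort used in this post.
LOKI_URL = "http://10.1.2.190:30100"


def build_push_payload(labels, lines, now_ns=None):
    """Return the JSON body Loki expects on /loki/api/v1/push."""
    if now_ns is None:
        now_ns = time.time_ns()
    # Each entry is [<Unix time in nanoseconds, as a string>, <log line>].
    values = [[str(now_ns + i), line] for i, line in enumerate(lines)]
    return {"streams": [{"stream": dict(labels), "values": values}]}


def build_query_url(base_url, logql, limit=10):
    """Return a query_range URL for a LogQL selector."""
    query = urllib.parse.urlencode({"query": logql, "limit": limit})
    return f"{base_url}/loki/api/v1/query_range?{query}"


def push(base_url, payload):
    """POST the payload to Loki and return the HTTP status code."""
    request = urllib.request.Request(
        f"{base_url}/loki/api/v1/push",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Once Loki is running, `push(LOKI_URL, build_push_payload({"job": "manual-test"}, ["hello"]))` should return 204, and the line should then show up in Grafana under the selector `{job="manual-test"}`.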

Deployment scripts

Link: https://pan.baidu.com/s/1CP6OTQZ1EKEhBzrr1h9s4g?pwd=mq57

Extraction code: mq57


First, set up NFS shared storage on the cluster.

Deploy Grafana

Create the namespace

kubectl create ns logging

cd grafana

kubectl apply -f grafana-deploy.yaml

Contents of grafana-deploy.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  labels:
    app: grafana
  namespace: logging
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      containers:
      - name: grafana
        image: grafana/grafana:8.4.7
        imagePullPolicy: IfNotPresent
        env:
        - name: GF_AUTH_BASIC_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "false"
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
          limits:
            cpu: '1'
            memory: 2Gi
        readinessProbe:
          httpGet:
            path: /login
            port: 3000
        volumeMounts:
        - name: storage
          mountPath: /tmp/testnfs/grafana # change this to the local directory you want to share
      volumes:
      - name: storage
        hostPath:
          path: /app/grafana
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  labels:
    app: grafana
  namespace: logging
spec:
  type: NodePort
  ports:
  - port: 3000
    targetPort: 3000
    nodePort: 30030
  selector:
    app: grafana

root@k8s-master1:/lgp/monitor-master/grafana# pwd
/lgp/monitor-master/grafana
root@k8s-master1:/lgp/monitor-master/grafana# ls
grafana-deploy.yaml  nginx.yml
root@k8s-master1:/lgp/monitor-master/grafana# kubectl get pod -n logging
NAME                       READY   STATUS    RESTARTS   AGE
grafana-6d874cf9bf-8wm28   1/1     Running   0          117m

Deploy Loki

root@k8s-master1:/lgp/monitor-master/loki# pwd
/lgp/monitor-master/loki
root@k8s-master1:/lgp/monitor-master/loki# ls
loki-configmap.yaml  loki-rbac.yaml  loki-statefulset.yaml

kubectl apply -f .

Contents of loki-statefulset.yaml:

apiVersion: v1
kind: Service
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
spec:
  type: NodePort
  ports:
  - port: 3100
    protocol: TCP
    name: http-metrics
    targetPort: http-metrics
    nodePort: 30100
  selector:
    app: loki
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
spec:
  podManagementPolicy: OrderedReady
  replicas: 1
  selector:
    matchLabels:
      app: loki
  serviceName: loki
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: loki
    spec:
      serviceAccountName: loki
      initContainers:
      - name: chmod-data
        image: busybox:1.28.4
        imagePullPolicy: IfNotPresent
        command: ["chmod","-R","777","/loki/data"]
        volumeMounts:
        - name: storage
          mountPath: /loki/data
      containers:
      - name: loki
        image: grafana/loki:2.3.0
        imagePullPolicy: IfNotPresent
        args:
        - -config.file=/etc/loki/loki.yaml
        volumeMounts:
        - name: config
          mountPath: /etc/loki
        - name: storage
          mountPath: /data
        ports:
        - name: http-metrics
          containerPort: 3100
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /ready
            port: http-metrics
            scheme: HTTP
          initialDelaySeconds: 45
        readinessProbe:
          httpGet:
            path: /ready
            port: http-metrics
            scheme: HTTP
          initialDelaySeconds: 45
        securityContext:
          readOnlyRootFilesystem: true
      terminationGracePeriodSeconds: 4800
      volumes:
      - name: config
        configMap:
          name: loki
      - name: storage
        hostPath:
          path: /app/loki

Contents of loki-rbac.yaml:

apiVersion: v1
kind: Namespace
metadata:
  name: logging
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: loki
  namespace: logging
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: loki
  namespace: logging
rules:
- apiGroups: ["extensions"]
  resources: ["podsecuritypolicies"]
  verbs: ["use"]
  resourceNames: [loki]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: loki
  namespace: logging
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: loki
subjects:
- kind: ServiceAccount
  name: loki

Contents of loki-configmap.yaml:

apiVersion: v1
kind: ConfigMap
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
data:
  loki.yaml: |
    auth_enabled: false
    ingester:
      chunk_idle_period: 3m
      chunk_block_size: 262144
      chunk_retain_period: 1m
      max_transfer_retries: 0
      lifecycler:
        ring:
          kvstore:
            store: inmemory
          replication_factor: 1
    limits_config:
      enforce_metric_name: false
      reject_old_samples: true
      reject_old_samples_max_age: 168h
    schema_config:
      configs:
      - from: "2022-05-15"
        store: boltdb-shipper
        object_store: filesystem
        schema: v11
        index:
          prefix: index_
          period: 24h
    server:
      http_listen_port: 3100
    storage_config:
      boltdb_shipper:
        active_index_directory: /data/loki/boltdb-shipper-active
        cache_location: /data/loki/boltdb-shipper-cache
        cache_ttl: 24h
        shared_store: filesystem
      filesystem:
        directory: /data/loki/chunks
    chunk_store_config:
      max_look_back_period: 0s
    table_manager:
      retention_deletes_enabled: true
      retention_period: 48h
    compactor:
      working_directory: /data/loki/boltdb-shipper-compactor
      shared_store: filesystem

root@k8s-master1:/lgp/monitor-master/loki# kubectl get pod -n logging
NAME                       READY   STATUS    RESTARTS   AGE
grafana-6d874cf9bf-8wm28   1/1     Running   0          127m
loki-0                     1/1     Running   0          4m32s

Deploy Promtail

root@k8s-master1:/lgp/monitor-master/promtail# pwd
/lgp/monitor-master/promtail
root@k8s-master1:/lgp/monitor-master/promtail# ls
promtail-configmap.yaml  promtail-daemonset.yaml  promtail-rbac.yaml

kubectl apply -f .

Contents of promtail-daemonset.yaml:

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: loki-promtail
  namespace: logging
  labels:
    app: promtail
spec:
  selector:
    matchLabels:
      app: promtail
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: promtail
    spec:
      serviceAccountName: loki-promtail
      containers:
      - name: promtail
        image: grafana/promtail:2.3.0
        imagePullPolicy: IfNotPresent
        args:
        - -config.file=/etc/promtail/promtail.yaml
        - -client.url=http://10.1.2.190:30100/loki/api/v1/push
        env:
        - name: HOSTNAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        volumeMounts:
        - mountPath: /etc/promtail
          name: config
        - mountPath: /run/promtail
          name: run
        - mountPath: /var/lib/docker/containers
          name: docker
          readOnly: true
        - mountPath: /var/log/pods
          name: pods
          readOnly: true
        ports:
        - containerPort: 3101
          name: http-metrics
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsGroup: 0
          runAsUser: 0
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /ready
            port: http-metrics
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
        operator: Exists
      volumes:
      - name: config
        configMap:
          name: loki-promtail
      - name: run
        hostPath:
          path: /run/promtail
          type: ""
      - name: docker
        hostPath:
          path: /var/lib/docker/containers
      - name: pods
        hostPath:
          path: /var/log/pods

Contents of promtail-rbac.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: loki-promtail
  labels:
    app: promtail
  namespace: logging
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    app: promtail
  name: promtail-clusterrole
  namespace: logging
rules:
- apiGroups: [""]
  resources: ["nodes","nodes/proxy","services","endpoints","pods"]
  verbs: ["get", "watch", "list"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: promtail-clusterrolebinding
  labels:
    app: promtail
  namespace: logging
subjects:
- kind: ServiceAccount
  name: loki-promtail
  namespace: logging
roleRef:
  kind: ClusterRole
  name: promtail-clusterrole
  apiGroup: rbac.authorization.k8s.io

Contents of promtail-configmap.yaml:

apiVersion: v1
kind: ConfigMap
metadata:
  name: loki-promtail
  namespace: logging
  labels:
    app: promtail
data:
  promtail.yaml: |
    client:
      backoff_config:
        max_period: 5m
        max_retries: 10
        min_period: 500ms
      batchsize: 1048576
      batchwait: 1s
      external_labels: {}
      timeout: 10s
    positions:
      filename: /run/promtail/positions.yaml
    server:
      http_listen_port: 3101
    target_config:
      sync_period: 10s
    scrape_configs:
    - job_name: kubernetes-pods-name
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels:
        - __meta_kubernetes_pod_label_name
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_uid
        - __meta_kubernetes_pod_container_name
        target_label: __path__
    - job_name: kubernetes-pods-app
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: .+
        source_labels:
        - __meta_kubernetes_pod_label_name
      - source_labels:
        - __meta_kubernetes_pod_label_app
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_uid
        - __meta_kubernetes_pod_container_name
        target_label: __path__
    - job_name: kubernetes-pods-direct-controllers
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: .+
        separator: ''
        source_labels:
        - __meta_kubernetes_pod_label_name
        - __meta_kubernetes_pod_label_app
      - action: drop
        regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
        source_labels:
        - __meta_kubernetes_pod_controller_name
      - source_labels:
        - __meta_kubernetes_pod_controller_name
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_uid
        - __meta_kubernetes_pod_container_name
        target_label: __path__
    - job_name: kubernetes-pods-indirect-controller
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: .+
        separator: ''
        source_labels:
        - __meta_kubernetes_pod_label_name
        - __meta_kubernetes_pod_label_app
      - action: keep
        regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
        source_labels:
        - __meta_kubernetes_pod_controller_name
      - action: replace
        regex: '([0-9a-z-.]+)-[0-9a-f]{8,10}'
        source_labels:
        - __meta_kubernetes_pod_controller_name
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_uid
        - __meta_kubernetes_pod_container_name
        target_label: __path__
    - job_name: kubernetes-pods-static
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: ''
        source_labels:
        - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_label_component
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror
        - __meta_kubernetes_pod_container_name
        target_label: __path__
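Every job in this ConfigMap ends with a rule that joins two source labels with `/` and substitutes the result into `/var/log/pods/*$1/*.log`, and the indirect-controller job uses a capture group to strip the hash suffix from a ReplicaSet name. The sketch below emulates only the `replace` action so you can see what those rules produce. The function name `relabel_replace`, the pod UID, and the controller name `web-5d4f8b7c9d` are hypothetical, and the emulation is simplified (it does not model the other actions or the removal of empty labels).

```python
import re


def relabel_replace(labels, source_labels, target_label,
                    separator=";", regex="(.*)", replacement="$1"):
    """Emulate a Prometheus-style `replace` relabel rule on a label dict."""
    # Missing source labels are treated as empty strings.
    joined = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, joined)  # relabel regexes are fully anchored
    if match is None:
        return dict(labels)  # no match: the rule leaves the labels unchanged
    # Translate $1-style references into Python's \g<1> template syntax.
    template = re.sub(r"\$(\d+)", r"\\g<\1>", replacement)
    result = dict(labels)
    result[target_label] = match.expand(template)
    return result


example = {
    "__meta_kubernetes_pod_uid": "0f1e2d3c",  # hypothetical pod UID
    "__meta_kubernetes_pod_container_name": "grafana",
}
path_rule = relabel_replace(
    example,
    ["__meta_kubernetes_pod_uid", "__meta_kubernetes_pod_container_name"],
    "__path__",
    separator="/",
    replacement="/var/log/pods/*$1/*.log",
)
print(path_rule["__path__"])  # /var/log/pods/*0f1e2d3c/grafana/*.log

service_rule = relabel_replace(
    {"__meta_kubernetes_pod_controller_name": "web-5d4f8b7c9d"},
    ["__meta_kubernetes_pod_controller_name"],
    "__service__",
    regex="([0-9a-z-.]+)-[0-9a-f]{8,10}",
)
print(service_rule["__service__"])  # web
```

The leading `*` in the path is what lets the glob match the kubelet's per-pod log directory, which on recent Kubernetes versions is typically named `<namespace>_<pod>_<uid>`, so only the UID at the end of the directory name has to be known.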

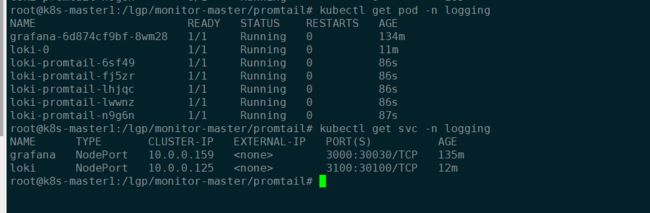

Check the deployment

root@k8s-master1:/lgp/monitor-master/promtail# kubectl get pod -n logging
NAME                       READY   STATUS    RESTARTS   AGE
grafana-6d874cf9bf-8wm28   1/1     Running   0          134m
loki-0                     1/1     Running   0          11m
loki-promtail-6sf49        1/1     Running   0          86s
loki-promtail-fj5zr        1/1     Running   0          86s
loki-promtail-lhjqc        1/1     Running   0          86s
loki-promtail-lwwnz        1/1     Running   0          86s
loki-promtail-n9g6n        1/1     Running   0          87s

Check the exposed ports

root@k8s-master1:/lgp/monitor-master/promtail# kubectl get svc -n logging
NAME      TYPE       CLUSTER-IP   EXTERNAL-IP   PORT(S)          AGE
grafana   NodePort   10.0.0.159   <none>        3000:30030/TCP   135m
loki      NodePort   10.0.0.125   <none>        3100:30100/TCP   12m

Open Grafana in a browser

http://10.1.2.190:30030

Username/password: admin/admin

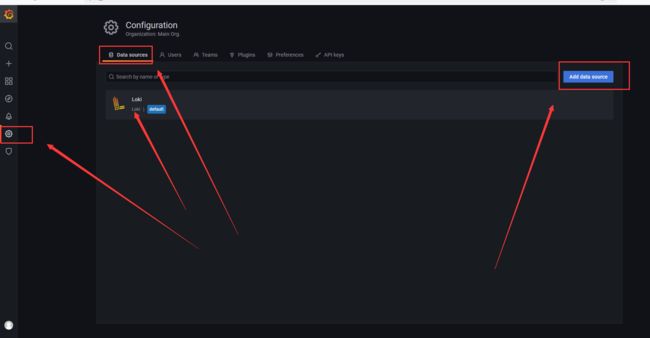

Configure the Loki data source
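As an alternative to clicking through the UI, the data source can also be registered through Grafana's HTTP API (`POST /api/datasources`). The sketch below is a minimal illustration and not part of the original scripts: it assumes the NodePort address and the default `admin/admin` login shown above, and it assumes Grafana reaches Loki through the in-cluster Service name, `http://loki:3100`, which should resolve because both run in the `logging` namespace. The helper names are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: Grafana's NodePort address from this post.
GRAFANA_URL = "http://10.1.2.190:30030"


def loki_datasource(name="Loki", url="http://loki:3100"):
    """Return the JSON body for Grafana's POST /api/datasources."""
    return {"name": name, "type": "loki", "access": "proxy", "url": url}


def build_request(grafana_url, user, password, body):
    """Build an authenticated POST request without sending it."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{grafana_url}/api/datasources",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def create_datasource(grafana_url, user, password, body):
    """Send the request and return Grafana's decoded JSON response."""
    request = build_request(grafana_url, user, password, body)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

Calling `create_datasource(GRAFANA_URL, "admin", "admin", loki_datasource())` should register the data source; basic authentication works here because the Deployment sets `GF_AUTH_BASIC_ENABLED` to `"true"`. If you changed the admin password at first login, pass the new one instead.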
