ELK Cluster Setup / Collecting Logs from a K8S Cluster

Deploying ELK 7.12.0

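- I. Basic Environment Requirements

  - 1. JDK Environment

  - 2. Disk Configuration

  - 3. Raising OS Limits

- II. Deploying ES

  - 1. Creating Directories

  - 2. Editing the Configuration

  - 3. Starting ES

- III. Installing es-head

- IV. Installing Kibana

- V. Installing Filebeat

- Collecting K8S Logs with ELK

  - Configuring ELK in the K8S Cluster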

I. Basic Environment Requirements

Deployment packages

Extraction code: ddc2

1. JDK Environment

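Each version supports a somewhat different range of JDKs, but essentially all of them support JDK 1.8.

Run the following on all three machines: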

[root@node1 /]# mkdir /home/apps

[root@node1 /]# cd /home/apps

[root@node1 apps]# tar -zxf jdk-8u171-linux-x64.tar.gz

[root@node1 apps]# vim /etc/profile.d/jdk.sh

# Add the following content:

#!/bin/bash

export JAVA_HOME=/home/apps/jdk1.8.0_171

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

[root@node1 apps]# source /etc/profile
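After sourcing the profile, it is worth confirming that each machine really picks up JDK 1.8 or later. The sketch below assumes bash; `java_major_version` and `installed_java_version` are hypothetical helper names, not part of any JDK tooling. It treats the legacy `1.x` scheme (`1.8.0_171` means Java 8) separately from the modern one (`11.0.2` means Java 11).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the major Java version for a version string.
# "1.8.0_171" -> 8 (legacy 1.x scheme), "11.0.2" -> 11 (modern scheme).
java_major_version() {
  local v="$1"
  local first="${v%%.*}"        # text before the first dot
  if [ "$first" = "1" ]; then
    local rest="${v#1.}"        # drop the leading "1."
    echo "${rest%%.*}"          # the next component is the major version
  else
    echo "$first"
  fi
}

# Hypothetical helper: extract the quoted version from `java -version`
# (java prints to stderr, e.g.: java version "1.8.0_171").
installed_java_version() {
  java -version 2>&1 | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Usage after `source /etc/profile`:
#   v=$(installed_java_version)
#   [ "$(java_major_version "$v")" -ge 8 ] && echo "JDK $v is OK"
```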

2. Disk Configuration

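# Partition the data disk /dev/vdb; the lines after "parted /dev/vdb" are typed at the (parted) prompt

parted /dev/vdb

mklabel gpt

mkpart primary 0% 100%

p

toggle 1 lvm

quit

# Create an LVM volume on the new partition and format it as XFS

yum -y install lvm2

pvcreate /dev/vdb1

vgcreate -s 16M vgone /dev/vdb1

# 4096G is the size used here; adjust it to your disk, or use -l 100%FREE to take all free space in the VG

lvcreate -L 4096G -n lvnew vgone

mkfs.xfs /dev/vgone/lvnew

# Create and mount the data directory

mkdir -p /data

mount /dev/vgone/lvnew /data

# Mount it at boot; the mount point must be /data, the directory used above

echo "/dev/vgone/lvnew /data xfs defaults 0 0" >> /etc/fstab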
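Re-running the `echo ... >> /etc/fstab` step appends a duplicate line each time. A minimal sketch of an idempotent alternative, assuming bash and awk; `add_fstab_entry` is a hypothetical helper, and it only checks whether the mount point is already listed, not whether the existing line is correct.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an fstab entry only if the mount point is not
# already listed, so re-running the setup does not create duplicate lines.
# Args: device mount_point fs_type [fstab_file, default /etc/fstab]
add_fstab_entry() {
  local dev="$1" mnt="$2" fstype="$3" fstab="${4:-/etc/fstab}"
  # Field 2 of an fstab line is the mount point; comment lines are skipped.
  if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
    echo "entry for $mnt already present in $fstab"
    return 0
  fi
  printf '%s %s %s defaults 0 0\n' "$dev" "$mnt" "$fstype" >> "$fstab"
}

# Usage (as root):
#   add_fstab_entry /dev/vgone/lvnew /data xfs
#   mount -a    # mounts everything listed in fstab; an error points at a bad entry
```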

3. Raising OS Limits

# Raise the maximum number of files a single process can open (nofile), plus the per-user process limit (nproc)

[root@node1 apps]# vim /etc/security/limits.conf

* soft nofile 65535

* hard nofile 65535

* hard nproc 4096

* soft nproc 4096

# Raise the maximum number of memory map areas per process; Elasticsearch requires at least 262144

[root@node1 apps]# vi /etc/sysctl.conf

vm.max_map_count=262144

[root@node1 apps]# sysctl -p
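Elasticsearch refuses to start in production mode when these limits are too low: its bootstrap checks cover the open-file limit, the thread/process limit and `vm.max_map_count`. A small sketch for checking the values after logging in again, assuming bash; `require_at_least` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail with a message when a current value is below
# the minimum Elasticsearch needs. Args: label current minimum
require_at_least() {
  local label="$1" current="$2" min="$3"
  if [ "$current" = "unlimited" ] || [ "$current" -ge "$min" ]; then
    echo "OK   $label=$current (>= $min)"
  else
    echo "FAIL $label=$current, need at least $min" >&2
    return 1
  fi
}

# Usage: run as the user that will start Elasticsearch. limits.conf is
# applied at login, so open a new session after editing it.
#   require_at_least vm.max_map_count "$(cat /proc/sys/vm/max_map_count)" 262144
#   require_at_least nofile "$(ulimit -n)" 65535
#   require_at_least nproc "$(ulimit -u)" 4096
```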

II. Deploying ES

1. Creating Directories

# Download the ES package

[root@master ~]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.12.0-linux-x86_64.tar.gz

# Create the user that will run ES

[root@master ~]# adduser es_user

[root@master ~]# passwd es_user

[root@master ~]# mkdir /usr/local/es/

# 192.168.75.121

[root@master ~]# tar -zxf elasticsearch-7.12.0-linux-x86_64.tar.gz

[root@master ~]# mv elasticsearch-7.12.0 /usr/local/es/elasticsearch-7.12.0-node-1

[root@master ~]# chmod u+x /usr/local/es/elasticsearch-7.12.0-node-1/bin

[root@master ~]# chown -R es_user /usr/local/es/

[root@master ~]# mkdir -p /data/es/es-node-1/

[root@master ~]# chown -R es_user /data/es/es-node-1/

[root@master ~]# mkdir -p /data/log/es/es-node-1/

[root@master ~]# chown -R es_user /data/log/es/es-node-1/

# 192.168.75.120

[root@master ~]# tar -zxf elasticsearch-7.12.0-linux-x86_64.tar.gz

[root@master ~]# mv elasticsearch-7.12.0 /usr/local/es/elasticsearch-7.12.0-node-2

[root@master ~]# chmod u+x /usr/local/es/elasticsearch-7.12.0-node-2/bin

[root@master ~]# chown -R es_user /usr/local/es/

[root@master ~]# mkdir -p /data/es/es-node-2/

[root@master ~]# chown -R es_user /data/es/es-node-2/

[root@master ~]# mkdir -p /data/log/es/es-node-2/

[root@master ~]# chown -R es_user /data/log/es/es-node-2/

# 192.168.65.80

[root@master ~]# tar -zxf elasticsearch-7.12.0-linux-x86_64.tar.gz

[root@master ~]# mv elasticsearch-7.12.0 /usr/local/es/elasticsearch-7.12.0-node-3

[root@master ~]# chmod u+x /usr/local/es/elasticsearch-7.12.0-node-3/bin

[root@master ~]# chown -R es_user /usr/local/es/

[root@master ~]# mkdir -p /data/es/es-node-3/

[root@master ~]# chown -R es_user /data/es/es-node-3/

[root@master ~]# mkdir -p /data/log/es/es-node-3/

[root@master ~]# chown -R es_user /data/log/es/es-node-3/
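The data and log directory steps above differ between nodes only in the node number. A sketch that wraps them in one function, assuming bash; `prepare_es_dirs` is a hypothetical name, the optional root prefix exists only so the function can be tried in a scratch directory, and extracting and moving the tarball is still done by hand.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the data and log directories for ES node N and
# hand them to the ES user, mirroring the per-node commands above.
# Args: node_number [user, default es_user] [root prefix, default empty]
prepare_es_dirs() {
  local n="$1" user="${2:-es_user}" root="${3:-}"
  local data_dir="$root/data/es/es-node-$n"
  local log_dir="$root/data/log/es/es-node-$n"
  mkdir -p "$data_dir" "$log_dir"
  chown -R "$user" "$data_dir" "$log_dir"
  # Print the paths so they can be copied into elasticsearch.yml.
  echo "$data_dir"
  echo "$log_dir"
}

# Usage on 192.168.75.120 (node 2), as root:
#   prepare_es_dirs 2
```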

2. Editing the Configuration

192.168.75.121-node-1

# Change the following settings

[root@node1 es]# vim elasticsearch-7.12.0-node-1/config/elasticsearch.yml

# Cluster name

cluster.name: my-application

# Node name

node.name: node-1

# This machine will also run Docker, which adds extra network interfaces, so the IP is set explicitly

network.host: 192.168.75.121

# HTTP port, 9200 by default; in a pseudo-cluster (all nodes on one server) each node needs its own port

http.port: 9200

path.data: /data/es/es-node-1/

path.logs: /data/log/es/es-node-1/

# Addresses (transport port 9300) used to discover the other nodes

discovery.seed_hosts: ["192.168.75.121:9300","192.168.75.120:9300","192.168.65.80:9300"]

# Master-eligible nodes used to bootstrap the cluster the first time it starts

cluster.initial_master_nodes: ["node-1", "node-2","node-3"]

# Cross-origin (CORS) access, needed by es-head

http.cors.enabled: true

http.cors.allow-origin: "*"

192.168.75.120-node-2

[root@node1 es]# vim elasticsearch-7.12.0-node-2/config/elasticsearch.yml

cluster.name: my-application

node.name: node-2

network.host: 0.0.0.0

http.port: 9200

path.data: /data/es/es-node-2/

path.logs: /data/log/es/es-node-2/

discovery.seed_hosts: ["192.168.75.121:9300","192.168.75.120:9300","192.168.65.80:9300"]

cluster.initial_master_nodes: ["node-1", "node-2","node-3"]

# Cross-origin (CORS) access, needed by es-head

http.cors.enabled: true

http.cors.allow-origin: "*"

192.168.65.80-node-3

[root@node1 es]# vim elasticsearch-7.12.0-node-3/config/elasticsearch.yml

cluster.name: my-application

node.name: node-3

network.host: 0.0.0.0

http.port: 9200

path.data: /data/es/es-node-3/

path.logs: /data/log/es/es-node-3/

discovery.seed_hosts: ["192.168.75.121:9300","192.168.75.120:9300","192.168.65.80:9300"]

cluster.initial_master_nodes: ["node-1", "node-2","node-3"]

# Cross-origin (CORS) access, needed by es-head

http.cors.enabled: true

http.cors.allow-origin: "*"
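The three `elasticsearch.yml` files differ only in `node.name`, `network.host` and the paths. A sketch that prints the settings used above for a given node, assuming bash; `render_es_config` is hypothetical, and redirecting its output over the stock file replaces that file's commented defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print elasticsearch.yml for node N with the settings
# used in this guide. Args: node_number network_host
render_es_config() {
  local n="$1" host="$2"
  cat <<EOF
cluster.name: my-application
node.name: node-$n
network.host: $host
http.port: 9200
path.data: /data/es/es-node-$n/
path.logs: /data/log/es/es-node-$n/
discovery.seed_hosts: ["192.168.75.121:9300","192.168.75.120:9300","192.168.65.80:9300"]
cluster.initial_master_nodes: ["node-1","node-2","node-3"]
http.cors.enabled: true
http.cors.allow-origin: "*"
EOF
}

# Usage on 192.168.75.120, from /usr/local/es:
#   render_es_config 2 0.0.0.0 > elasticsearch-7.12.0-node-2/config/elasticsearch.yml
```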

3. Starting ES

Do the same on all three machines, each in its own node directory:

[root@node1 es]# cd elasticsearch-7.12.0-node-1

[root@node1 elasticsearch-7.12.0-node-1]# su es_user

[es_user@node1 elasticsearch-7.12.0-node-1]$ ./bin/elasticsearch -d # -d starts ES in the background; leave it off the first time so you can watch the output for errors
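Once all three nodes are running, the cluster health API shows whether they formed a single cluster. A sketch assuming bash and sed; `cluster_status` is a hypothetical helper that pulls the `status` field out of the `_cluster/health` JSON without needing `jq`. `green` means all shards are allocated; `yellow` means some replicas are not.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a _cluster/health JSON response on stdin and
# print its "status" field (green, yellow or red).
cluster_status() {
  sed -n 's/.*"status" *: *"\([a-z]*\)".*/\1/p'
}

# Usage once all three nodes are up:
#   curl -s http://192.168.75.121:9200/_cluster/health | cluster_status
#   curl -s 'http://192.168.75.121:9200/_cat/nodes?v'   # should list node-1..node-3
```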

III. Installing es-head

docker pull mobz/elasticsearch-head:5

docker run -itd --name=es-head -p 9100:9100 mobz/elasticsearch-head:5
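es-head runs in the browser and calls Elasticsearch directly, which is why the `http.cors.*` settings were added to every node. One way to check them is to send a request with an `Origin` header and look for `Access-Control-Allow-Origin` in the response. A sketch assuming bash and curl; `has_cors_header` is a hypothetical helper. es-head itself is then opened on port 9100 and pointed at one of the nodes, e.g. `http://192.168.75.121:9200`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read HTTP response headers on stdin and succeed if
# they contain an Access-Control-Allow-Origin header (case-insensitive).
has_cors_header() {
  grep -qi '^access-control-allow-origin:'
}

# Usage: send an Origin header the way a browser-based client such as es-head does.
#   curl -s -o /dev/null -D - -H 'Origin: http://192.168.75.121:9100' \
#     http://192.168.75.121:9200/ | has_cors_header && echo "CORS enabled"
```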

IV. Installing Kibana

# Download

curl -L -O https://artifacts.elastic.co/downloads/kibana/kibana-7.12.0-linux-x86_64.tar.gz

tar xzvf kibana-7.12.0-linux-x86_64.tar.gz

cd kibana-7.12.0-linux-x86_64/

# Edit the configuration file

vim config/kibana.yml

# Kibana listens on port 5601 by default; change this setting to use another port

server.port: 5601

# Address Kibana binds to: 0.0.0.0 is fine on a single-NIC server; on a multi-NIC server set the specific IP

server.host: "0.0.0.0"

# Server name

server.name: my-kibana

# Addresses of the ES cluster nodes

elasticsearch.hosts: ["http://192.168.75.121:9200","http://192.168.75.120:9200","http://192.168.65.80:9200"]

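# Index in which Kibana stores its own data (defaults to .kibana)

# kibana.index: ".kibana"

# Kibana's log file; the directory must exist before Kibana starts

logging.dest: /var/log/kibana/kibana.log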

i18n.locale: "zh-CN"

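# Create the log directory, then run; --allow-root is required when running Kibana as root

mkdir -p /var/log/kibana

nohup ./bin/kibana --allow-root &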
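Kibana started with `nohup` returns immediately, so a script that continues afterwards needs to wait for it. A sketch of a generic polling loop, assuming bash and curl; `wait_for_http` is a hypothetical helper, `<kibana-host>` in the usage line is a placeholder for the machine Kibana runs on, and Kibana's `/api/status` endpoint is used only as a convenient URL to poll.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until curl gets a successful response.
# -f makes curl treat HTTP error codes as failures.
# Args: url [attempts, default 30] [delay_seconds, default 5]
wait_for_http() {
  local url="$1" attempts="${2:-30}" delay="${3:-5}" i
  for ((i = 1; i <= attempts; i++)); do
    if curl -sf -o /dev/null "$url"; then
      echo "up: $url"
      return 0
    fi
    sleep "$delay"
  done
  echo "gave up on $url after $attempts attempts" >&2
  return 1
}

# Usage: Kibana can take a minute or more to start.
#   wait_for_http http://<kibana-host>:5601/api/status 30 5
```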

V. Installing Filebeat

# Download the package

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.12.0-linux-x86_64.tar.gz

# Extract it and rename the extracted directory

tar xzvf filebeat-7.12.0-linux-x86_64.tar.gz -C /usr/local/

mv /usr/local/filebeat-7.12.0-linux-x86_64 /usr/local/filebeat

cd /usr/local/filebeat

Create a test configuration; an nginx access log is used as the example.

vim test.yml

# Input type to collect; log is used here

filebeat.inputs:

- type: log

  enabled: true

  paths:

    - /tmp/access.log

# Print events to the console

output.console:

  pretty: true

# Run in the foreground

./filebeat -c ./test.yml
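The test configuration can also be written and syntax-checked from the shell before Filebeat is started. A sketch assuming bash; `write_filebeat_test_config` is a hypothetical helper, while `filebeat test config` is Filebeat's own subcommand for validating a configuration file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the console-output test config for a given
# log file. Args: output_path log_file
write_filebeat_test_config() {
  local out="$1" log="$2"
  cat > "$out" <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - $log
output.console:
  pretty: true
EOF
}

# Usage from /usr/local/filebeat:
#   write_filebeat_test_config ./test.yml /tmp/access.log
#   ./filebeat test config -c ./test.yml    # syntax check only
#   ./filebeat -c ./test.yml                # then run in the foreground
```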

# Enable a module, nginx in this example

[root@master filebeat]# ./filebeat -c ./filebeat.yml modules enable nginx

Enabled nginx

# Edit the nginx module settings

[root@master filebeat]# vim modules.d/nginx.yml

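# Set the log paths; access and error are separate filesets, each with its own var.paths

- module: nginx

  access:

    enabled: true

    var.paths: ["/home/apps/access.log"]

  error:

    enabled: true

    var.paths: ["/home/apps/error.log"]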

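# Start it. -e sends Filebeat's own log output to stderr

# The modules enabled under modules.d/ are loaded via filebeat.config.modules in filebeat.yml; make sure output.elasticsearch.hosts there points at the ES cluster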

[root@master filebeat]# ./filebeat -c ./filebeat.yml -e
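`filebeat modules enable nginx` works by renaming `modules.d/nginx.yml.disabled` to `modules.d/nginx.yml`, so the enabled modules are simply the plain `.yml` files in that directory. A sketch assuming bash; `enabled_modules` is a hypothetical helper, and `./filebeat modules list` reports the same information.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the modules enabled in a modules.d directory,
# i.e. the files ending in plain .yml (disabled ones end in .yml.disabled).
enabled_modules() {
  local dir="${1:-modules.d}" f
  for f in "$dir"/*.yml; do
    [ -e "$f" ] || continue     # no match: the glob stays literal
    basename "$f" .yml
  done
}

# Usage from /usr/local/filebeat:
#   enabled_modules modules.d
```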

Collecting K8S Logs with ELK

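Container characteristics that make log collection harder:

- K8s elastic scaling: the collection targets cannot be known in advance

- Container isolation: a container's file system is isolated from the host, so a log collector cannot simply read the log files

Applications record logs in one of two ways:

- Standard output: written to the console and visible with kubectl logs

- Log files: written to files in the container's file system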

# Look at the logs of an nginx Pod

kubectl run nginx --image=nginx

kubectl exec -it nginx -- bash

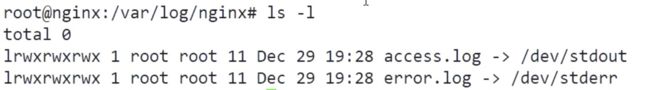

root@nginx:/# cd /var/log/nginx/

root@nginx:/var/log/nginx/# ls -l

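As you can see, nginx writes its logs to standard output and standard error (in the official nginx image, access.log and error.log are symlinks to /dev/stdout and /dev/stderr), so they can be viewed with kubectl logs.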

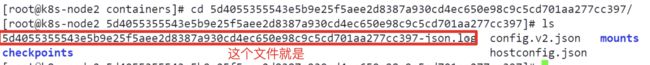

This log file was found on the node under Docker's storage path, by looking up the container ID.

The flow behind kubectl logs is:

kubectl logs -> apiserver -> kubelet -> Docker API -> <container-id>-json.log

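Docker stores every container's logs in a directory on the host (/var/lib/docker/containers by default; the location can differ, so check it with docker info), so a collector can read /var/lib/docker/containers/*/*-json.log, where * is the container ID.

What we have seen so far is the log file for standard output; the other kind is log files written inside the container.

How can both kinds of logs be collected?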
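Because the file name embeds the container ID, each log file on the node can be mapped back to its container. A sketch assuming bash; `container_id_from_log` is a hypothetical helper that relies on Docker's default json-file naming `<id>/<id>-json.log`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given a Docker json-file log path of the form
# /var/lib/docker/containers/<id>/<id>-json.log, print the container ID.
container_id_from_log() {
  local name="${1##*/}"         # strip the directory part
  echo "${name%-json.log}"      # strip the "-json.log" suffix
}

# Usage on a node: find Docker's root directory, then list each container's
# stdout/stderr log file with its ID (compare with `docker ps --no-trunc`).
#   docker info --format '{{.DockerRootDir}}'
#   for f in /var/lib/docker/containers/*/*-json.log; do
#     echo "$(container_id_from_log "$f")  $f"
#   done
```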

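Configuring ELK in the K8S Cluster

Set up dynamic PV provisioning first; the PVC below uses the managed-nfs-storage StorageClass.

This is a single-node ES, not a cluster.

ES YAML: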

# Create the ops namespace

apiVersion: v1

kind: Namespace

metadata:

  name: ops

---

# Elasticsearch

apiVersion: apps/v1

kind: Deployment

metadata:

  name: elasticsearch

  # Deployed in the ops namespace

  namespace: ops

  labels:

    k8s-app: elasticsearch

spec:

  replicas: 1

  selector:

    matchLabels:

      k8s-app: elasticsearch

  template:

    metadata:

      labels:

        k8s-app: elasticsearch

    spec:

      containers:

      - image: elasticsearch:7.9.2

        name: elasticsearch

        resources:

          limits:

            cpu: 2

            memory: 3Gi

          requests:

            cpu: 0.5

            memory: 500Mi

        env:

        # Predefined setting passed as an environment variable: run as a single node

        - name: "discovery.type"

          value: "single-node"

        - name: ES_JAVA_OPTS

          value: "-Xms512m -Xmx2g"

        ports:

        - containerPort: 9200

          name: db

          protocol: TCP

        volumeMounts:

        - name: elasticsearch-data

          mountPath: /usr/share/elasticsearch/data

      volumes:

      - name: elasticsearch-data

        persistentVolumeClaim:

          claimName: es-pvc

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: es-pvc

  namespace: ops

spec:

  storageClassName: "managed-nfs-storage"

  accessModes:

    - ReadWriteMany

  resources:

    requests:

      storage: 10Gi

---

apiVersion: v1

kind: Service

metadata:

  name: elasticsearch

  namespace: ops

spec:

  ports:

  - port: 9200

    protocol: TCP

    targetPort: 9200

  selector:

    k8s-app: elasticsearch
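Kibana and Filebeat reach this Service as `elasticsearch.ops:9200`: inside the cluster, `<service>.<namespace>` resolves through cluster DNS. A sketch assuming bash and kubectl access; `service_url` is a hypothetical helper, `es.yaml` is a hypothetical file name for the manifest above, and `curlimages/curl` is just one public image that contains curl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the in-cluster URL of a Service from its name,
# namespace and port ("<service>.<namespace>" resolves via cluster DNS).
service_url() {
  local svc="$1" ns="$2" port="$3"
  echo "http://${svc}.${ns}:${port}"
}

# Usage: apply the manifest, wait for the Deployment, then query ES from a
# throwaway Pod inside the cluster.
#   kubectl apply -f es.yaml
#   kubectl -n ops rollout status deployment/elasticsearch
#   kubectl -n ops run es-check --rm -it --restart=Never --image=curlimages/curl -- \
#     curl -s "$(service_url elasticsearch ops 9200)/_cluster/health"
```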

kibana.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: kibana

  namespace: ops

  labels:

    k8s-app: kibana

spec:

  replicas: 1

  selector:

    matchLabels:

      k8s-app: kibana

  template:

    metadata:

      labels:

        k8s-app: kibana

    spec:

      containers:

      - name: kibana

        image: kibana:7.9.2

        resources:

          limits:

            cpu: 2

            memory: 2Gi

          requests:

            cpu: 0.5

            memory: 500Mi

        env:

          - name: ELASTICSEARCH_HOSTS

            value: http://elasticsearch.ops:9200

          - name: I18N_LOCALE

            value: zh-CN

        ports:

        - containerPort: 5601

          name: ui

          protocol: TCP

---

apiVersion: v1

kind: Service

metadata:

  name: kibana

  namespace: ops

spec:

  type: NodePort

  ports:

  - port: 5601

    protocol: TCP

    targetPort: ui

    nodePort: 30601

  selector:

    k8s-app: kibana

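filebeat.yaml

In a conventional deployment, Filebeat can be configured to tag each log with the project or service it belongs to, but in K8s that information is not known in advance. To solve this, deploy the official K8s manifest below, which gives Filebeat service discovery for K8s.

Using the K8s API (the add_kubernetes_metadata processor), Filebeat can tell which workload, which Pod and which namespace each log line belongs to.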

---

apiVersion: v1

kind: ConfigMap

metadata:

  name: filebeat-config

  namespace: ops

  labels:

    k8s-app: filebeat

data:

  filebeat.yml: |-

    filebeat.config:

      inputs:

        # Mounted `filebeat-inputs` configmap:

        path: ${path.config}/inputs.d/*.yml

        # Reload inputs configs as they change:

        reload.enabled: false

      modules:

        path: ${path.config}/modules.d/*.yml

        # Reload module configs as they change:

        reload.enabled: false

    output.elasticsearch:

      hosts: ['elasticsearch.ops:9200']

---

apiVersion: v1

kind: ConfigMap

metadata:

  name: filebeat-inputs

  namespace: ops

  labels:

    k8s-app: filebeat

data:

  kubernetes.yml: |-

    - type: docker

      containers.ids:

      - "*"

      processors:

        - add_kubernetes_metadata:

            in_cluster: true

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

  name: filebeat

  namespace: ops

  labels:

    k8s-app: filebeat

spec:

  selector:

    matchLabels:

      k8s-app: filebeat

  template:

    metadata:

      labels:

        k8s-app: filebeat

    spec:

      serviceAccountName: filebeat

      terminationGracePeriodSeconds: 30

      containers:

      - name: filebeat

        image: elastic/filebeat:7.9.2

        args: [

          "-c", "/etc/filebeat.yml",

          "-e",

        ]

        securityContext:

          runAsUser: 0

          # If using Red Hat OpenShift uncomment this:

          #privileged: true

        resources:

          limits:

            memory: 200Mi

          requests:

            cpu: 100m

            memory: 100Mi

        volumeMounts:

        - name: config

          mountPath: /etc/filebeat.yml

          readOnly: true

          subPath: filebeat.yml

        - name: inputs

          mountPath: /usr/share/filebeat/inputs.d

          readOnly: true

        - name: data

          mountPath: /usr/share/filebeat/data

        - name: varlibdockercontainers

          mountPath: /var/lib/docker/containers

          readOnly: true

      volumes:

      - name: config

        configMap:

          defaultMode: 0600

          name: filebeat-config

      - name: varlibdockercontainers

        hostPath:

          path: /var/lib/docker/containers

      - name: inputs

        configMap:

          defaultMode: 0600

          name: filebeat-inputs

      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart

      - name: data

        hostPath:

          path: /var/lib/filebeat-data

          type: DirectoryOrCreate

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: filebeat

subjects:

- kind: ServiceAccount

  name: filebeat

  namespace: ops

roleRef:

  kind: ClusterRole

  name: filebeat

  apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

  name: filebeat

  labels:

    k8s-app: filebeat

rules:

- apiGroups: [""] # "" indicates the core API group

  resources:

  - namespaces

  - pods

  verbs:

  - get

  - watch

  - list

---

apiVersion: v1

kind: ServiceAccount

metadata:

  name: filebeat

  namespace: ops

  labels:

    k8s-app: filebeat
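Once the DaemonSet is running, Filebeat should start creating `filebeat-*` indices in Elasticsearch, which is also the index pattern to create in Kibana. A sketch assuming bash and kubectl access; `filebeat_indices` is a hypothetical helper and `filebeat.yaml` stands for wherever the manifests above were saved.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `_cat/indices?h=index` output (one index name per
# line) on stdin and print only Filebeat's indices, sorted.
filebeat_indices() {
  grep '^filebeat-' | sort
}

# Usage: apply the manifests, check one Filebeat Pod per node, then list indices.
#   kubectl apply -f filebeat.yaml
#   kubectl -n ops get pods -l k8s-app=filebeat -o wide
#   kubectl -n ops port-forward svc/elasticsearch 9200:9200 &
#   curl -s 'http://127.0.0.1:9200/_cat/indices?h=index' | filebeat_indices
```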

K8S-ELK cluster deployment

Official documentation