【论文翻译】FCOS3D: Fully Convolutional One-Stage Monocular 3D Object Detection

文章目录

- PaperInfo

- Abstract

- 1 Introduction

- 2 Related Work

-

- 2D Object Detection

- Monocular 3D Object Detection

- Methods involving sub-networks

- Transform to 3D representations

- End-to-end design like 2D detection

- 3 Approach

-

- 3.1 Framework Overview

-

- Backbone

- Neck

- Detection Head

- Regression Targets

- Loss

- Inference

- 3.2 2D Guided Multi-Level 3D Prediction

- 3.3 3D Center-ness with 2D Gaussian Distribution

- 4 Experimental Setup

-

- 4.1 Dataset

- 4.2 Evaluation Metrics

-

- Average Precision metric

- True Positive metrics

- NuScenes Detection Score

- 4.3 Implementation Details

-

- Network Architectures

- Training Parameters

- Data Augmentation

- 5 Results

-

- 5.1 Quantitative Analysis

- 5.2 Qualitative Analysis

- Ablation Studies

- 6 Conclusion

- Appendix

-

- 1 Failure Cases

- 2 Results on the KITTI Benchmark

- References

PaperInfo

| 属性 | 属性值 |

|---|---|

| FCOS3D论文名称 | FCOS3D: Fully Convolutional One-Stage Monocular 3D Object Detection |

| FCOS3D论文地址 | https://arxiv.org/abs/2104.10956 |

| FCOS3D论文代码 | https://github.com/open-mmlab/mmdetection3d |

| FCOS2D论文名称 | FCOS: Fully Convolutional One-Stage Object Detection |

| FCOS2D论文地址 | https://arxiv.org/abs/1904.01355 |

| FCOS2D论文代码 | https://tinyurl.com/FCOSv1 |

Abstract

Monocular 3D object detection is an important task for autonomous driving considering its advantage of low cost. It is much more challenging than conventional 2D cases due to its inherent ill-posed property, which is mainly reflected in the lack of depth information. Recent progress on 2D detection offers opportunities to better solving this problem. However, it is non-trivial to make a general adapted 2D detector work in this 3D task. In this paper, we study this problem with a practice built on a fully convolutional single-stage detector and propose a general framework FCOS3D. Specifically, we first transform the commonly defined 7-DoF 3D targets to the image domain and decouple them as 2D and 3D attributes. Then the objects are distributed to different feature levels with consideration

of their 2D scales and assigned only according to the projected 3D-center for the training procedure. Furthermore, the center-ness is redefined with a 2D Gaussian distribution based on the 3D-center to fit the 3D target formulation. All of these make this framework simple yet effective, getting rid of any 2D detection or 2D-3D correspondence priors. Our solution achieves 1st place out of all the vision-only methods in the nuScenes 3D detection challenge of NeurIPS 2020. Code and models are released at

https://github.com/open-mmlab/mmdetection3d.

单目三维目标检测具有低成本的优点,是自动驾驶的重要任务。由于其固有的不适定特性,它比传统的二维情况更具挑战性,这主要反映在缺乏深度信息上。二维检测的最新进展为更好地解决这个问题提供了机会。然而,在这个3D任务中使一个通用的2D检测器工作是很重要的。在本文中,我们用一个建立在全卷积单级检测器上的实践来研究这个问题,并提出了一个通用的框架FCOS3D。具体来说,我们首先将通常定义的7-DoF 3D目标转换到图像域,并将它们解耦为二维和三维属性。然后将对象分配到不同的特征层次,考虑到它们的二维尺度,并只根据训练过程中的3D-center投影进行分配。此外,以基于3D-center的二维高斯分布重新定义中心度,以拟合三维目标公式。所有这些都使这个框架简单而有效,摆脱了任何2D检测或2D-3D对应先验。在NeurIPS 2020的nuScenes 3D检测挑战中,我们的解决方案获得了所有纯视觉方法中的第一名。代码和模型发布在 https://github.com/open-mmlab/mmdetection3d

1 Introduction

Object detection is a fundamental problem in computer vision. It aims to identify objects of interest in the image and predict their categories with corresponding 2D bounding boxes. With the rapid progress of deep learning, 2D object detection has been well explored in recent years. Various models such as Faster R-CNN [27], RetinaNet [18], and FCOS [31] significantly promote the progress of the field and benefit various applications like autonomous driving.

目标检测是计算机视觉中的一个基本问题。它的目的是识别图像中感兴趣的对象,并通过相应的二维边界框预测其类别。随着深度学习的快速发展,二维目标检测近年来得到了很好的探索。各种车型,如更快的R-CNN [27]、RetinaNet [18]和FCOS [31],显著促进了该领域的发展,并有利于自动驾驶等各种应用。

However, 2D information is not enough for an intelligent agent to perceive the 3D real world. For example, when an autonomous vehicle needs to run smoothly and safely on the road, it must have accurate 3D information of objects around it to make secure decisions. Therefore, 3D object detection is becoming increasingly important in these robotic applications. Most state-of-the-art methods [39, 14, 29, 32, 41, 42] rely on the accurate 3D information provided by LiDAR point clouds, but it is a heavy burden to install expensive LiDARs on each vehicle. So monocular 3D object detection, as a simple and cheap setting for deployment, becomes a much meaningful research problem nowadays.

然而,2D信息并不足以让智能代理感知3D真实世界。例如,当自动驾驶汽车需要在道路上平稳、安全地运行时,它必须拥有周围物体的准确3D信息,才能做出安全的决策。因此,3D目标检测在这些机器人应用中变得越来越重要。大多数最先进的方法[39,14,29,32,41,42]依赖于激光雷达点云提供的精确的3D信息,但在每辆车上安装昂贵的激光雷达是一个沉重的负担。因此,单目三维目标检测作为一种简单、廉价的部署环境,成为当今一个非常有意义的研究问题。

Considering monocular 2D and 3D object detection have the same input but different outputs, a straightforward solution for monocular 3D object detection is following the practices in the 2D domain but adding extra components to predict the additional 3D attributes of the objects. Some previous work [30, 20] keeps predicting 2D boxes and further regresses 3D attributes on top of 2D centers and regions of interest. Others [1, 9, 2] simultaneously predict 2D and 3D boxes with 3D priors corresponding to each 2D anchor. Another stream of methods based on redundant 3D information [13, 16] predicts extra keypoints for optimized results ultimately. In a word, the fundamental underlying problem is how to assign 3D targets to the 2D domain with the 2D-3D correspondence and predict them afterward.

考虑到单目2D目标检测和3D目标检测具有相同的输入但不同的输出,一种单目3D目标检测的简单解决方案是遵循2D的实践的基础上再添加额外的组件来预测目标的3D属性。[30,20]之前的一些工作继续预测2D boxes,并在2D中心和感兴趣区域的基础上进一步回归3D属性。其他[1,9,2]同时预测2D boxes和3D boxes(带有每个 2D anchor 对应的3D先验)。另一种基于冗余3D信息的方法流[13,16]最终预测了优化结果的额外关键点。总之,最基本的潜在问题是如何将具有2D-3D对应关系的3D目标分配到2D域,并在之后进行预测。

In this paper, we adopt a simple yet efficient method to enable a 2D detector to predict 3D localization. We first project the commonly defined 7-DoF 3D locations onto the 2D image and get the projected center point, which we name as 3D-center compared to the previous 2D-center. With this projection, the 3D-center contains 2.5D information, i.e., 2D location and its corresponding depth. The 2D location can be further reduced to the 2D offset from a certain point on the image, which serves as the only 2D attribute that can be normalized among different feature levels like in the 2D detection. In comparison, depth, 3D size, and orientation are regarded as 3D attributes after decoupling. In this way, we transform the 3D targets with a center-based paradigm and avoid any necessary 2D detection or 2D-3D correspondence priors.

在本文中,我们采用了一种简单而有效的方法,使2D检测器能够预测3D定位。我们首先将通常定义的7-DoF 3D位置投影到2D图像上,并得到投影的中心点,我们将其命名为3D-center,而不是之前的2D-center。通过这个投影,3D-center包含了2.5D的信息,即2D的位置及其对应的深度。2D位置可以进一步减少为从图像上的某一点的2D偏移,这是唯一可以在如二维检测中的不同特征级别之间归一化的二维属性。相比之下,我们将深度、三维尺寸和方向作为解耦后的三维属性。通过这种方式,我们用基于中心的范式来转换3D目标,并避免任何必要的2D检测或2D-3D对应先验。

As a practical implementation, we build our method on FCOS [31], a simple anchor-free fully convolutional single-stage detector. We first distribute the objects to different feature levels with consideration of their 2D scales. Then the regression targets of each training sample are assigned only according to the projected 3D centers. In contrast to FCOS that denotes the center-ness with distances to boundaries, we represent the 3D center-ness with a 2D Gaussian distribution based on the 3D-center.

作为一个可行的实现,我们在FCOS [31]上建立了我们的方法,这是一个简单的无anchor的全卷积一阶段检测器。我们首先考虑目标的2D尺度将目标分配到不同的特征级别。然后,仅根据投影的3D中心来分配每个训练样本的回归目标。与FCOS表示距离到边界的中心度相比,我们用基于3D-center的二维高斯分布来表示3D center-ness。

We evaluate our method on a popular large-scale dataset, nuScenes [3], and achieved 1st place on the camera track of this benchmark without any prior information. Moreover, we only need 2x less computing resources to train a baseline model with performance comparable to the previous best open-source method, CenterNet [38], in one day, also 3x faster than it. Both show that our framework is simple and efficient. Detailed ablation studies show the importance of each component.

我们在一个流行的大规模数据集nuScenes [3]上评估了我们的方法,并在没有任何先验信息的情况下,在这个基准测试的摄像机跟踪上取得了第一名。此外,我们只需要少2倍的计算资源来训练一个基准模型,其性能与之前最好的开源方法CenterNet [38]相当,在一天内也比它快3倍。两者都表明了我们的框架是简单和有效的。详细的消融研究显示了每种成分的重要性。

2 Related Work

2D Object Detection

Research on 2D object detection has made great progress with the breakthrough of deep learning approaches. According to the base of initial guesses, modern methods can be divided into two branches: anchor-based and anchor-free. Anchor-based methods [10, 27, 19, 26] benefit from the predefined anchors in terms of much easier regression while having many hyper-parameters to tune. In contrast, anchor-free methods [12, 25, 31, 15, 38] do not need these prior settings and are thus neater with better universality. For simplicity, this paper takes FCOS, a representative anchor-free detector, as the baseline considering its capability of handling overlapped ground truths and scale variance problem.

随着深度学习方法的突破,2D目标检测的研究取得了巨大的进展。根据初步猜测的基础,现代方法可以分为基于anchor和无anchor两个分支。基于anchor的方法[10,27,19,26]受益于预定义的anchor,因为它更容易回归,同时有许多超参数来调整。相比之下,无anchor方法[12,25,31,15,38]不需要这些先前的设置,因此更整洁,具有更好的泛化性。为简单起见,考虑到代表性的无anchor检测器FCOS处理重叠的GTBox和尺度方差问题的能力,本文以FOCS为基准。

From another perspective, monocular 3D detection is a more difficult task closely related to 2D detection. But there is few work investigating the connection and difference between them, which makes them isolated and not able to benefit from the advancement of each other. This paper aims to adapt FCOS as the example and further build a closer connection between these two tasks.

从另一个角度来看,单目3D检测是一项与2D检测密切相关的更加困难的任务。但很少有研究人员在调查它们之间的联系和差异,这使得它们被孤立起来,不能从彼此的进步中获益。本文旨在以FCOS为例,进一步建立这两个任务之间更紧密的联系。

Monocular 3D Object Detection

Monocular 3D detection is more complex than conventional 2D detection. The underlying key problem is the inconsistency of input 2D data modal and the output 3D predictions.

单目三维检测比传统的二维检测更为复杂。潜在的关键问题是输入的二维数据模态和输出的三维预测的不一致。

Methods involving sub-networks

The first batch of works resorts to sub-networks to assist 3D detection. To mention only a few, 3DOP [4] and MLFusion [36] use a depth estimation network, while Deep3DBox [21] uses a 2D object detector. They heavily rely on the performance of subnetworks, even external data and pre-trained models, making the entire system complex and inconvenient to train.

第一批论文采用子网络来协助3D检测。另外,3DOP [4]和MLFusion [36]使用深度估计网络,而Deep3DBox [21]使用2D目标检测器。它们严重依赖子网络的性能,甚至外部数据和预先训练的模型,使整个系统变得复杂和不方便训练。

Transform to 3D representations

Another category of methods converts the input RGB image to other 3D representations, such as voxels [28] and point clouds [35]. Recent work [37, 23, 34, 24] has made great progress following this approach and shown promising performance. However, they still rely on dense depth labels and thus are not regarded as pure monocular approaches. There are also domain gaps between different depth sensors and LiDARs, making them hard to generalize to new practice settings smoothly. In addition, it is difficult to process a large number of point clouds when applying these methods to the real-world scenarios.

另一类方法将输入的RGB图像转换为其他3D表示,如体素[28]和点云[35]。在最近的工作中,[37,23,34,24]按照这种方法取得了很大的进展,并显示出了良好的性能。然而,它们仍然依赖于密集的深度标签,因此不被认为是纯单目方法。在不同的深度传感器和激光雷达之间也存在着领域上的差距,这使得它们很难顺利地推广到新的实践设置中。此外,当将这些方法应用于实际场景时,很难处理大量的点云。

End-to-end design like 2D detection

Recent work notices these drawbacks and begins to design end-to-end frameworks like 2D detectors. For example, M3D-RPN [1] proposes a single-stage detector with an end-to-end region proposal network and depth-aware convolution. SS3D [13] detects 2D key points and further predicts object characteristics with uncertainties. MonoDIS [30] improves the multitask learning with a disentangling loss. These methods follow the anchor-based manners and are thus required to define consistent 2D and 3D anchors. Some of them also need multiple training stages or hand-crafted post-optimization phases. In contrast, anchor-free methods [38, 16, 5] do not need to make statistics on the given data. It is more convenient to generalize their simple designs to more complex cases with more various classes or different intrinsic settings. Hence, we choose to follow this paradigm.

最近的工作注意到了这些缺点,并开始设计像2D检测器中的端到端框架。例如,M3D-RPN [1]提出了一个具有端到端区域建议网络和深度感知卷积的一阶段检测器。SS3D [13]检测2D关键点,并进一步预测具有不确定性的物体特征。MonoDIS [30] 用disentangling loss改进了多任务学习。这些方法遵循了基于anchor的方法,要求定义2D与3D一致的anchors。其中一些还需要多个训练阶段或手动的后优化阶段。相比之下,无anchor方法[38,16,5]不需要对给定的数据进行统计。将它们的简单设计推广到具有更多不同的类或不同的内在设置的更复杂的情况下会更方便。因此,我们选择遵循这个范式。

Disentangling的原理

将Disentangling Loss应用于2D目标框和3D目标框的回归损失中,目的是将多组参数对于损失函数的贡献隔离开,同时保留这些参数的原始含义,利于网络训练时的优化过程。在2D目标框的回归损失中,包含用于确定目标框的2组参数,一组用于确定目标框的长和宽;

另外一组用于确定目标框的中心点位置。在3D目标框的回归损失中,包含用于确定目标框的4组参数,它们分别是与深度有关的参数、与3D目标框中心在图像平面投影有关的参数、与旋转角度有关的参数和与目标长宽高有关的参数。

————————————————

版权声明:本文为CSDN博主「我爱计算机视觉」的原创文章,遵循CC 4.0 BY-SA版权协议,转载请附上原文出处链接及本声明。

原文链接:https://blog.csdn.net/moxibingdao/article/details/115327965

Nevertheless, these works hardly study the key difficulty when applying a general 2D detector to monocular 3D detection. What should be kept or adjusted therein is seldom

discussed when proposing their new frameworks. In contrast, this paper concentrates on this point, which could provide a reference when applying a typical 2D detector framework to a closely related task. On this basis, a more indepth understanding of the connection and difference between these two tasks will also benefit further research of both communities.

然而,这些工作几乎没有研究将通用的2D检测器应用于单目3D检测的关键困难。在提出新框架时,很少讨论其中应该保留或调整什么。相反,本文主要关注这一点,这可以为将一个典型的2D检测器框架应用于一个密切相关的任务提供参考。在此基础上,更深入地了解这两个任务之间的联系和差异,也将有助于对这两个社区的进一步研究。

3 Approach

Object detection is one of the most fundamental and challenging problems for scene understanding. The goal of conventional 2D object detection is to predict 2D bounding boxes and category labels for each object of interest. In comparison, monocular 3D detection needs us to predict 3D bounding boxes instead, which need to be decoupled and transformed to the 2D image plane. This section will first present an overview of our framework with our adopted reformulation of 3D targets, and then elaborate on two corresponding technical designs, 2D guided multi-level 3D prediction and 3D center-ness with 2D Gaussian distribution, tailored to this task. These technical designs work together to equip the 2D detector FCOS with the capability of detecting 3D objects.

目标检测是场景理解中最基本和最具挑战性的问题之一。传统的2D目标检测的目标是预测每个感兴趣目标的2D边界框和类别标签。相比之下,单目3D检测需要我们预测3D边界盒,这需要解耦并转换到2D图像平面。本节将首先概述我们采用的对3D目标重新制定的框架,然后详细阐述两个相应的技术设计,2D引导的多层次3D预测和使用2D高斯分布的3D center-ness。这些技术设计共同作用,使2D检测器FCOS具有检测3D物体的能力。

3.1 Framework Overview

A fully convolutional one-stage detector typically consists of three components: a backbone for feature extraction, necks for multi-level branches construction and detection heads for dense predictions. Then we briefly introduce each of them.

一个全卷积的一阶段检测器通常由三个组件组成:用于特征提取的主干,用于多层次分支构建的颈部和用于密集预测的检测头。然后我们简要介绍它们每一个。

Backbone

We use the pretrained ResNet101 [11, 8] with deformable convolutions [7] for feature extraction. It achieves a good trade-off between accuracy and efficiency in our experiments. We fixed the parameters of the first convolutional block to avoid more memory overhead.

我们使用预训练的ResNet101 [11,8]与可变形卷积[7]进行特征提取。在我们的实验中,它很好地在精度和效率之间取得了平衡。我们固定了第一个卷积块的参数,以避免更多的内存开销。

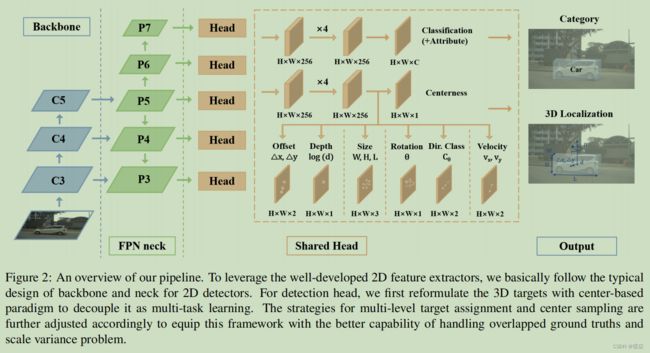

Neck

The second module is the Feature Pyramid Network [17], a primary component for detecting objects at different scales. For precise clarification, we denote feature maps from level 3 to 7 as P3 to P7, as shown in Fig. 2. We follow the original FCOS to obtain P3 to P5 and downsample P5 with two convolutional blocks to obtain P6 and P7. All of these five feature maps are responsible for predictions of different scales afterward.

第二个模块是特征金字塔网络[17],一个用于在不同尺度上检测目标的主要组件。为了精确的说明,我们将从第3级到第7级的特征图表示为第P3级到第P7级,如图2所示。我们按照原始的FCOS,得到P3到P5,然后用两个卷积块对P5下采样,得到P6和P7。所有这五种特征图都负责对之后不同尺度的预测。

Detection Head

Finally, for shared detection heads, we need to deal with two critical issues. The first is how to distribute targets to different feature levels and different points. It is one of the core problems for different detectors and will be presented in Sec. 3.2. The second is how to design thearchitecture. We follow the conventional design of RetinaNet [18] and FCOS [31]. Each shared head consists of 4 shared convolutional blocks and small heads for different targets. It is empirically more effective to build extra disentangled heads for regression targets with different measurements, so we set one small head for each of them (Fig. 2).

最后,对于共享的检测头,我们需要处理两个关键问题。第一个问题是如何将目标分配到不同的特性级别和不同的点。这是不同检测器的核心问题之一,将在Sec.3.2.中提出。二是如何设计架构。我们遵循RetinaNet [18]和FCOS [31]的传统设计。每个共享的头由4个共享的卷积块和针对不同目标的小头组成。在经验上,为不同测量值的回归目标建立额外的 disentangled 头更有效,因此我们为每个目标设置一个小头(图2)。

So far, we have introduced the overall design of our network architecture. Next, we will formulate this problem more formally and present the detailed training and inference procedure.

到目前为止,我们已经介绍了我们的网络架构的总体设计。接下来,我们将更正式地阐述这个问题,并给出详细的训练和推理过程。

Regression Targets

To begin with, we first recall the formulation of anchor-free manners for object detection in FCOS. Given a feature map at layer i i i of the backbone, denoted as F i ∈ R H × W × C F_i ∈ R^{H×W×C} Fi∈RH×W×C , we need to predict objects based on each point on this feature map, which corresponds to uniformly distributed points on the original input image. Formally, for each location ( x , y ) (x, y) (x,y) on the feature map F i F_i Fi, suppose the total stride until layer i i i is s s s, then the corresponding location on the original image should be ( x s + ⌊ s 2 ⌋ , y s + ⌊ s 2 ⌋ ) (xs+⌊\frac {s}{2}⌋,ys+⌊\frac {s}{2}⌋) (xs+⌊2s⌋,ys+⌊2s⌋). Unlike anchor-based detectors regressing targets by taking predefined anchors as a reference, we directly predict objects based on these locations. Moreover, because we do not rely on anchors, the criterion for judging whether a point is from the foreground or not will no longer be the IoU (Intersection over Union) between anchors and ground truths. Instead, as long as the point is near the box center enough, it could be a foreground point.

首先,我们首先回顾了FCOS中目标检测的无anchor方式的公式。给定backbone第 i i i层的特征图,记为 F i ∈ R H × W × C F_i∈R^{H×W×C} Fi∈RH×W×C,我们需要根据该特征图上的每个点预测对象,它对应于原始输入图像上均匀分布的点。形式上,对于特征图 F i F_i Fi上的每个位置 ( x , y ) (x,y) (x,y),假设层 i i i之前的总步幅是 s s s,那么原始图像上的相应位置应该是 ( ( ({2},+ys\frac{s}{2})$。与基于anchor检测器通过以预定义的anchor作为参考来回归目标不同,我们直接基于这些位置来预测目标。此外,由于我们不依赖anchor,判断一个点是否来自前景的标准将不再是anchor box 和GTbox之间的IoU(交集/并集)。相反,只要这个点足够靠近box的中心,它就可以是一个前景点。

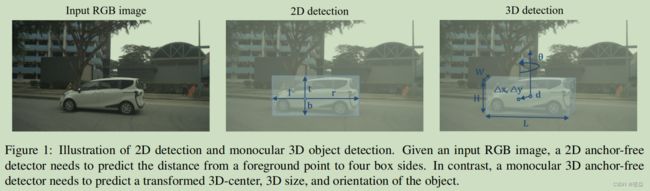

In the 2D case, the model needs to regress the distance of the point to the top/bottom/left/right side, denoted as t, b, l, r in Fig. 1. However, in the 3D case, it is non-trivial to regress the distance to six faces of the 3D bounding box. Instead, a more straightforward implementation is to convert the commonly defined 7-DoF regression targets to the 2.5D center and 3D size. The 2.5D center can be easily transformed back to 3D space with a camera intrinsic matrix. Regressing the 2.5D center could be further reduced to regressing the offset from the center to a specific foreground point, ∆x, ∆y, and its corresponding depth d respectively. In addition, to predict the allocentric orientation of the object, we divide it into two parts: angle θ with period π and 2-bin direction classification. The first component naturally models the IOU of our predictions with the ground truth boxes, while the second component focuses on the adversarial case where two boxes have opposite orientations. Benefiting from this angle encoding, our method surpasses another center-based framework, CenterNet, in terms of orientation accuracy, which will be compared in the experiments. The rotation encoding scheme is illustrated in Fig. 3.

在2D情况下,模型需要回归该点到上/下/左/右边的距离,在图1中记为t、b、l、r。然而,在3D边界盒的情况下,将距离回归到3D边界盒的六个面并不是很平凡的。相反,一个更直接的实现是将通常定义的7-DoF回归目标转换为2.5D center 和3D大小。2.5D center 可以很容易地用相机固有矩阵转换回3D空间。回归2.5D中心可以进一步减少,回归从中心到一个特定的前景点的偏移,∆x、∆y和相应的深度d。此外,为了预测物体的异中心方向,我们将其分为两部分:周期为π的角θ和2-bin方向分类。第一个部分用GTBox来模拟我们预测的IOU,而第二个部分则集中于两个box有相反方向的对抗性情况。得益于这个角度编码,我们的方法在定向精度方面超过了另一个基于中心的框架CenterNet,这将在实验中进行比较。旋转编码方案如图3所示。

In addition to these regression targets related to the location and orientation of objects, we also regress a binary target center-ness c like FCOS. It serves as a soft binary classifier to determine which points are closer to centers, and helps suppress those low-quality predictions far away from object centers. More details are presented in Sec. 3.3.

除了这些与目标的位置和方向相关的回归目标外,我们还回归了一个二元目标center-ness c,如FCOS。它作为一个软二进制分类器来确定哪些点更接近中心,并有助于抑制那些远离物体中心的低质量预测。更多的细节在Sec. 3.3. 中的介绍。

To sum up, the regression branch needs to predict δ x , δ y , d , w , l , h , θ , v x , v y , \delta x, \delta y, d, w, l, h, θ, v_x, v_y, δx,δy,d,w,l,h,θ,vx,vy, direction class C θ C_θ Cθ and centerness c while the classification branch needs to output the class label of the object and its attribute label (Fig. 2).

综上所述,回归分支需要预测 Δ x , Δ y , d , w , l , h , θ , v x , v y , \Delta x, \Delta y, d, w, l, h, θ, v_x, v_y, Δx,Δy,d,w,l,h,θ,vx,vy, 方向类 C θ C_θ Cθ和中心度c,而分类分支需要输出目标的类标签及其属性标签(图2)。

Loss

For classification and different regression targets, we define their loss respectively and take their weighted summation as the total loss.

对于分类和不同的回归目标,我们分别定义它们的损失,并将它们的加权和作为总损失。

Firstly, for classification branch, we use the commonly used focal loss [18] for object classification loss:

L c l s = − α ( 1 − p ) γ l o g p (1) L_{cls} = −\alpha (1 − p)^\gamma logp \tag{1} Lcls=−α(1−p)γlogp(1)

where p p p is the class probability of a predicted box. We follow the settings, α = 0.25 \alpha = 0.25 α=0.25 and γ = 2 \gamma = 2 γ=2, of the original paper. For attribute classification, we use a simple softmax classification loss, denoted as L a t t r L_{attr} Lattr.

首先,对于分类分支,我们使用常用的focal loss[18]来进行目标分类损失:

L c l s = − α ( 1 − p ) γ l o g p (1) L_{cls} = −\alpha (1 − p)^\gamma logp \tag{1} Lcls=−α(1−p)γlogp(1)

其中p是预测一个盒子所属类的概率。我们遵循原论文的设置, α = 0.25 \alpha = 0.25 α=0.25 和 γ = 2 \gamma = 2 γ=2。对于属性分类,我们使用一个简单的softmax classification loss ,记为 L a t t r L_{attr} Lattr。

For regression branch, we use smooth L1 loss for each regression targets except center-ness with corresponding weights considering their scales:

L l o c = ∑ b ∈ ( Δ x , Δ y , d , w , l , h , θ , v x , v y ) S m o o t h L 1 ( Δ b ) (2) L_{loc} =\sum_{b∈(\Delta x, \Delta y, d, w, l, h, θ, v_x, v_y)} SmoothL1(\Delta b) \tag{2} Lloc=b∈(Δx,Δy,d,w,l,h,θ,vx,vy)∑SmoothL1(Δb)(2)

where the weight of ∆x, ∆y, w, l, h, θ error is 1, the weight of d is 0.2 and the weight of v x , v y v_x, v_y vx,vy is 0.05. Note that although we employ e x p ( x ) exp(x) exp(x) for depth prediction, we still compute the loss in the original depth space instead of the log space. It empirically results in more accurate depth estimation ultimately. We use the softmax classification loss and binary cross entropy (BCE) loss for direction classification and center-ness regression, denoted as L d i r L_{dir} Ldir and L c t L_{ct} Lct respectively. Finally, the total loss is:

L = 1 N p o s ( β c l s L c l s + β a t t r L a t t r + β l o c L l o c + β d i r L d i r + β c t L c t ) (3) L = \frac{1} {N_{pos}} (\beta_{cls}L_{cls} + \beta_{attr}L_{attr} + \beta_{loc}L_{loc} + \beta_{dir}L_{dir} + \beta_{ct}L_{ct}) \tag{3} L=Npos1(βclsLcls+βattrLattr+βlocLloc+βdirLdir+βctLct)(3)

where N p o s N_{pos} Npos is the number of positive predictions and β c l s = β a t t r = β l o c = β d i r = β c t = 1 \beta_{cls} = \beta_{attr} = \beta_{loc} = \beta_{dir} = \beta_{ct} = 1 βcls=βattr=βloc=βdir=βct=1.

对于回归分支,除了center-ness考虑它们的尺度有相应的权重外,我们对每个回归目标使用SmoothL1损失,:

L l o c = ∑ b ∈ ( Δ x , Δ y , d , w , l , h , θ , v x , v y ) S m o o t h L 1 ( Δ b ) (2) L_{loc} =\sum_{b∈(\Delta x, \Delta y, d, w, l, h, θ, v_x, v_y)} SmoothL1(\Delta b) \tag{2} Lloc=b∈(Δx,Δy,d,w,l,h,θ,vx,vy)∑SmoothL1(Δb)(2)

其中∆x、∆y、w、l、h、θ误差为1,d的权重为0.2, v x v_x vx的权重为0.05, v y v_y vy的权重为0.05。注意,虽然我们使用 e x p ( x ) exp (x) exp(x)进行深度预测,但我们仍然计算原始深度空间的损失,而不是对数空间。通过经验,最终可以得到更准确的深度估计。我们使用softmax分类损失和二元交叉熵(BCE)损失进行方向分类和center-ness回归,分别记为 L d i r L_{dir} Ldir和 L c t L_{ct} Lct。最后,总损失为:

L = 1 N p o s ( β c l s L c l s + β a t t r L a t t r + β l o c L l o c + β d i r L d i r + β c t L c t ) (3) L = \frac{1} {N_{pos}} (\beta_{cls}L_{cls} + \beta_{attr}L_{attr} + \beta_{loc}L_{loc} + \beta_{dir}L_{dir} + \beta_{ct}L_{ct}) \tag{3} L=Npos1(βclsLcls+βattrLattr+βlocLloc+βdirLdir+βctLct)(3)

其中 N p o s N_{pos} Npos为正样本预测的数量并且 β c l s = β a t t r = β l o c = β d i r = β c t = 1 \beta_{cls} = \beta_{attr} = \beta_{loc} = \beta_{dir} = \beta_{ct} = 1 βcls=βattr=βloc=βdir=βct=1。

Inference

During inference, given an input image, we forward it through the framework and obtain bounding boxes with their class scores, attribute scores, and center-ness predictions. We multiply the class score and center-ness as the confidence for each prediction and conduct rotated Non-Maximum Suppression (NMS) in the bird view as most 3D detectors to get the final results.

在推理过程中,给定一个输入图像,我们将其通过框架前向传播,并获得包含类分数、属性分数和center-ness预测的边界框。我们将类得分和center-ness的乘积作为每个预测的置信度,并像大多数3D检测器一样,在鸟视图中进行旋转非最大抑制(NMS)以得到最终结果。

3.2 2D Guided Multi-Level 3D Prediction

As mentioned previously, to train a detector with pyramid networks, we need to devise a strategy to distribute targets to different feature levels. FCOS [31] has discussed two crucial issues therein: 1) How to enable anchor-free detectors to achieve similar Best Possible Recall (BPR) compared to anchor-based methods, 2) Intractable ambiguity problem caused by overlaps of ground-truth boxes. The comparison in the original paper has well addressed the

first problem. It shows that multi-level prediction through FPN can improve BPR and even achieve better results than anchor-based methods. Similarly, the conclusion of this problem is also applicable in our adapted framework. The second question will involve the specific setting of the regression target, which we will discuss next.

如前所述,为了用金字塔网络训练检测器,我们需要设计一种策略来将目标分配到不同的特征级别。FCOS [31]在其中讨论了两个关键问题: 1) 如何得到与基于anchor的检测器相同的最佳可能召回(BPR);2)由GTBox重叠引起的难以处理的不确定性问题。FOCS 2D目标检测论文中的比较已经很好地解决了第一个问题。结果表明,通过FPN的多层次预测可以提高BPR,甚至获得更好的效果。同样,这个问题的结论也适用于我们所改写的框架。第二个问题将涉及回归目标的具体设置,我们将在接下来讨论。

The original FCOS detects objects of different sizes in different levels of feature maps. Different from anchor-based methods, instead of assigning anchors with different sizes, it directly assigns ground-truth boxes with different sizes to different levels of feature maps. Formally, it first computes the 2D regression targets, l ∗ , r ∗ , t ∗ , b ∗ l^∗, r^∗, t^∗, b^∗ l∗,r∗,t∗,b∗ for each location at each feature level. Then locations satisfying m a x ( l ∗ , r ∗ , t ∗ , b ∗ ) > m i max(l^∗, r^∗, t^∗, b^∗) > m_i max(l∗,r∗,t∗,b∗)>mi or m a x ( l ∗ , r ∗ , t ∗ , b ∗ ) < m i − 1 max(l^∗, r^∗, t^∗, b^∗) < m_{i−1} max(l∗,r∗,t∗,b∗)<mi−1 would be regarded as a negative sample, where m i m_i mi denotes

the maximum regression range for feature level i i i(We set the regression range as (0, 48, 96, 192, 384, ∞) for m2 to m7 in our experiments respectively.). In comparison, we also follow this criterion in our implementation, considering that the scale of 2D detection is directly consistent with how large a region we need to focus on. However, we only use 2D detection for filtering meaningless targets in this assignment step. After completing the target assignment, our regression targets only include 3D-related ones. Here we generate the 2D bounding boxes by computing the exterior rectangle of projected 3D bounding boxes, so we do not need any 2D detection annotations or priors.

原始的FCOS在不同级别的特征映射中检测不同大小的目标。与基于anchor的方法不同,它不分配不同大小的anchor,而是直接将不同大小的GTBox分配给不同级别的特征图。在形式上,它首先计算每个特征层次上每个位置的2D回归目标, l ∗ , r ∗ , t ∗ , b ∗ l^∗,r^∗,t^∗,b^∗ l∗,r∗,t∗,b∗。然后满足 m a x ( l ∗ , r ∗ , t ∗ , b ∗ ) > m i max(l^∗,r^∗,t^∗,b^∗)>m_i max(l∗,r∗,t∗,b∗)>mi或 m a x ( l ∗ , r ∗ , t ∗ , b ∗ ) < m i − 1 max(l^∗,r^∗,t^∗,b^∗)

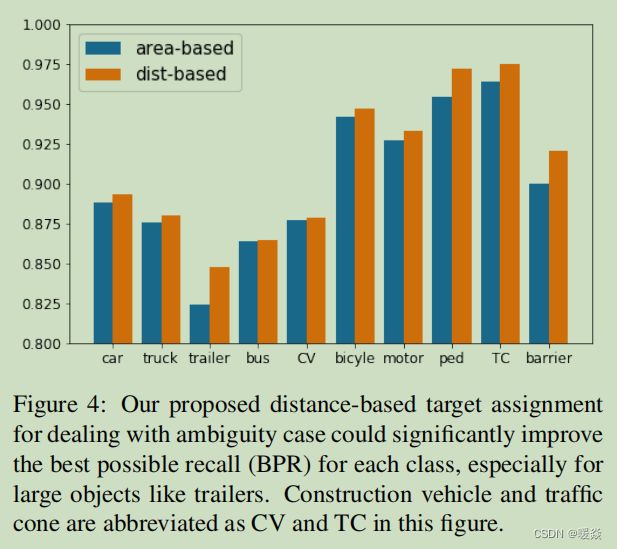

Next, we will discuss how to deal with the ambiguity problem. Specifically, when a point is inside multiple ground truth boxes in the same feature level, which box should be assigned to it? The usual way is to select according to the area of the 2D bounding box. The box with a smaller area is selected as the target box for this point. We call this scheme the area-based criterion. This scheme has an obvious drawback: Large objects will be paid less attention by such processing, which is also verified by our experiments (Fig. 4). Taking this into account, we instead propose a distance-based criterion, i.e., select the box with closer center as the regression target. This scheme is consistent with the adapted center-based mechanism for defining regression targets. Furthermore, it is also reasonable because the points closer to the object’s center can obtain more comprehensive and balanced local region features, thus easily producing higher-quality predictions. Through simple verification (Fig. 4), we find that this scheme significantly improves the best possible recall (BPR) and mAP of large objects and also improves the overall mAP (about 1%), which will be presented in the ablation study.

接下来,我们将讨论如何处理不确定性问题。具体而言,当一个位置处于相同特征级别的多个GTBoxes中时,应该指定哪个box给它?通常的方法是根据2D边界框的面积进行选择。选择较小面积的box作为该位置的目标框。我们称这个方案为基于面积的准则。这个方案有一个很明显的缺点:这种处理对大目标的关注较少,我们的实验也证实了这一点(如图4所示)。考虑到这一点,我们提出了一个基于距离的准则,即选择中心较近的方框作为回归目标。这一方案与以基于中心的结构确定回归目标的机制是一致的。此外,这也是合理的,因为更靠近物体中心的点可以获得更全面和平衡的局部区域特征,从而很容易产生更高质量的预测。通过简单的验证(图4),我们发现该方案显著提高了大物体的最佳召回率(BPR)和mAP,也提高了整体mAP(约1%),这将在消融研究中提出。

In addition to the center-based approach to deal with ambiguity, we also use the 3D-center to determine foreground points, i.e., only the points near the center enough will be regarded as positive samples. We define a hyper-parameter, radius, to measure this central portion. The points with a distance smaller than radius×stride to the object center would be considered positive, where the radius is set to 1.5 in our experiments.

除了基于中心的方法来处理不确定性之外,我们还使用3D-center来确定前景点,即只有足够靠近中心的点才被视为正样本。我们定义了一个超参数,半径,来测量这个中心部分。距离小于半径×步长的点被认为是正的,在我们的实验中半径设置为1.5。

Finally, we replace each output x of different regression branches with six to distinguish shared heads for different feature levels. Here s i s_i si is a trainable scalar used to adjust the exponential function base for feature level i. It brings a minor improvement in terms of detection performance.

最后,我们将不同回归分支的每个输出x替换为6个区分不同特征级别的共享头。这里的 s i s_i si是一个可训练的标量,用于调整特征级 i i i的指数函数基。它在检测性能方面有了一个小的改进。

3.3 3D Center-ness with 2D Gaussian Distribution

In the original design of FCOS, center-ness c is defined by 2D regression targets, l ∗ , r ∗ , t ∗ , b ∗ l^∗, r^∗, t^∗, b^∗ l∗,r∗,t∗,b∗ :

c e n t e r n e s s ∗ = m i n ( l ∗ , r ∗ ) m a x ( l ∗ , r ∗ ) × m i n ( t ∗ , b ∗ ) m a x ( t ∗ , b ∗ ) (4) centerness^*=\sqrt{\frac {min(l^*,r^*)}{max(l^*,r^*)} \times \frac {min(t^*,b^*)}{max(t^*,b^*)}} \tag{4} centerness∗=max(l∗,r∗)min(l∗,r∗)×max(t∗,b∗)min(t∗,b∗)(4)

Because our regression targets are changed to the 3D center-based paradigm, we define the center-ness by 2D Gaussian distribution with the projected 3D-center as the origin. The 2D Gaussian distribution is simplified as:

c = e − α ( ( Δ x ) 2 + ( Δ y ) 2 ) (5) c = e^{-\alpha ((\Delta x)^2+(\Delta y)^2)} \tag{5} c=e−α((Δx)2+(Δy)2)(5)

Here α \alpha α is used to adjust the intensity attenuation from the center to the periphery and set to 2.5 in our experiments. We take it as the ground truth of center-ness and predict it from the regression branch for filtering low-quality predictions later. As mentioned earlier, this center-ness target ranges from 0 to 1, so we use the Binary Cross Entropy (BCE) loss for training that branch.

在FCOS的原始设计中,center-ness c由二维回归目标定义, l ∗ , r ∗ , t ∗ , b ∗ l^∗, r^∗, t^∗, b^∗ l∗,r∗,t∗,b∗ :

c e n t e r n e s s ∗ = m i n ( l ∗ , r ∗ ) m a x ( l ∗ , r ∗ ) × m i n ( t ∗ , b ∗ ) m a x ( t ∗ , b ∗ ) (4) centerness^*=\sqrt{\frac {min(l^*,r^*)}{max(l^*,r^*)} \times \frac {min(t^*,b^*)}{max(t^*,b^*)}} \tag{4} centerness∗=max(l∗,r∗)min(l∗,r∗)×max(t∗,b∗)min(t∗,b∗)(4)

因为我们的回归目标被更改为基于 3D center-based 的范式,所以我们以投影的 3D-center 为原点,用二维高斯分布来定义center-ness。将二维高斯分布简化为:

c = e − α ( ( Δ x ) 2 + ( Δ y ) 2 ) (5) c = e^{-\alpha ((\Delta x)^2+(\Delta y)^2)} \tag{5} c=e−α((Δx)2+(Δy)2)(5)

在这里, α \alpha α用于调整从中心到外围的衰减强度,并在我们的实验中设置为2.5。我们将其作为center-ness的GT,从回归分支进行预测,以便过滤低质量的预测。如前所述,这个center-ness目标的范围从0到1,所以我们使用二元交叉熵(BCE)损失来训练该分支。

4 Experimental Setup

4.1 Dataset

We evaluate our framework on a large-scale, commonly used dataset, nuScenes [3]. It consists of multi-modal data collected from 1000 scenes, including RGB images from 6 surround-view cameras, points from 5 Radars and 1 LiDAR. It is split into 700/150/150 scenes for training/validation/testing. There are overall 1.4M annotated 3D bounding boxes from 10 categories. Due to its variety of scenes and ground truths, it is becoming one of the authoritative benchmarks for 3D object detection. Therefore, we take it as the platform to validate the efficacy of our method.

我们在一个大规模的、常用的数据集nuScenes [3]上评估我们的框架。它由从1000个场景收集的多模态数据组成,包括6个环绕视野摄像机的RGB图像,5个雷达和1个激光雷达。它被分成700/150/150个场景,用于训练/验证/测试。总共有来自10个类别的140万个带注释的3D边界框。由于其场景和标签的多样性,它正成为3D目标检测的权威基准之一。因此,我们将其作为验证我们方法的有效性平台。

4.2 Evaluation Metrics

We use the official metrics, distance-based mAP, and NDS for a fair comparison with other methods. Next, we briefly introduce these two kinds of metrics as follows.

我们使用官方度量、基于距离的mAP和NDS与其他方法进行公平的比较。接下来,我们将简要介绍以下这两种指标。

Average Precision metric

The Average Precision (AP) metric is generally used when evaluating the performance

of object detectors. Instead of using 3D Intersection over Union (IoU) for thresholding, nuScenes defines the match by 2D center distance d d d on the ground plane for decoupling detection from object size and orientation. On this basis, we calculate AP by computing the normalized area under the precision-recall curve for recall and precision over 10%. Finally, mAP is computed over all matching thresholds, D = 0.5 , 1 , 2 , 4 \mathbb D = {0.5, 1, 2, 4} D=0.5,1,2,4 meters, and all categories C C C:

m A P = 1 ∣ C ∣ ∣ D ∣ ∑ c ∈ C ∑ d ∈ D A P c , d (6) mAP=\frac {1}{|\mathbb C||\mathbb D|}\sum_{c \in \mathbb C}\sum_{d \in \mathbb D}AP_{c,d}\tag{6} mAP=∣C∣∣D∣1c∈C∑d∈D∑APc,d(6)

平均精度(AP)度量指标通常用于评估目标检测器的性能。nuScenes定义了基平面上的2D中心距离 d d d的匹配,以解耦目标大小和方向的检测。在此基础上,我们通过计算召回率和精度超过10%的P-R曲线下的归一化面积来计算AP。最后,在所有匹配的阈值、 D = 0.5 , 1 , 2 , 4 \mathbb D = {0.5, 1, 2, 4} D=0.5,1,2,4米和所有类别 C C C上计算mAP

m A P = 1 ∣ C ∣ ∣ D ∣ ∑ c ∈ C ∑ d ∈ D A P c , d (6) mAP=\frac {1}{|\mathbb C||\mathbb D|}\sum_{c \in \mathbb C}\sum_{d \in \mathbb D}AP_{c,d}\tag{6} mAP=∣C∣∣D∣1c∈C∑d∈D∑APc,d(6)

True Positive metrics

Apart from Average Precision, we also calculate five kinds of True Positive metrics, Average

Translation Error (ATE), Average Scale Error (ASE), Average Orientation Error (AOE), Average Velocity Error (AVE) and Average Attribute Error (AAE). To obtain these measurements, we firstly define that predictions with center distance from the matching ground truth d ≤ 2m will be regarded as true positives (TP). Then matching and scoring are conducted independently for each class of objects, and each metric is the average cumulative mean at each recall level above 10%. ATE is the Euclidean center distance in 2D (m). ASE is equal to 1 − IOU, IOU is calculated between predictions and labels after aligning their translation and orientation. AOE is the smallest yaw angle difference between predictions and labels (radians). Note that different from other classes measured on the entire 360◦ period, barriers are measured on 180◦ period. AVE is the L2-Norm of the absolute velocity error in 2D (m/s). AAE is defined as 1−acc, where acc refers to the attribute classification accuracy. Finally, given these metrics, we compute the mean TP metric (mTP) overall all categories:

m T P = 1 c ∑ c ∈ C T P c (7) mTP=\frac {1}{\mathbb c}\sum_{c\in\mathbb C}TP_c\tag{7} mTP=c1c∈C∑TPc(7)

Note that not well-defined metrics will be omitted, like AVE for cones and barriers, considering they are stationary.

除了AP,我们还需要计算5种真正例指标,Average Translation Error (ATE), Average Scale Error (ASE), Average Orientation Error (AOE), Average Velocity Error (AVE) and Average Attribute Error (AAE)。为了获得这些测量值,我们首先定义与中心距离匹配的ground truth d≤2m的预测值将被视为真正例(TP)。然后对每一类对象独立进行匹配和评分,每个度量是每个10%以上召回率的平均累积平均值。ATE是2D(m)中的欧氏中心距离。ASE等于1−IOU,IOU是在预测和标签对准其转换和方向后计算。AOE是预测和标签之间最小的偏航角差(弧度)。请注意,与在整个360◦周期上测量的其他类别不同,barriers是在180◦周期上测量的。AVE是二维(m/s)中绝对速度误差的L2-范数。AAE定义为1−acc,其中acc为属性分类精度。最后,给定这些指标,我们计算了总体上所有类别的平均TP指标(mTP):

m T P = 1 c ∑ c ∈ C T P c (7) mTP=\frac {1}{\mathbb c}\sum_{c\in\mathbb C}TP_c\tag{7} mTP=c1c∈C∑TPc(7)

请注意,没有明确定义的指标将被省略,如cones和 barriers的AVE,考虑到它们是静止的。

NuScenes Detection Score

The conventional mAP couples the evaluation of locations, sizes, and orientations of

detections and also could not capture some aspects in this setting like velocity and attributes, so this benchmark proposes a more comprehensive, decoupled but simple metric,

nuScenes detection score (NDS):

N D S = 1 10 [ 5 m A P + ∑ m T P ∈ T P ( 1 − m i n ( 1 , m T P ) ) ] (8) NDS=\frac{1}{10}[5mAP+\sum_{mTP\in\mathbb T\mathbb P}(1-min(1,mTP))]\tag{8} NDS=101[5mAP+mTP∈TP∑(1−min(1,mTP))](8)

where mAP is mean Average Precision (mAP) and TP is the set composed of five True Positive metrics. Considering mAVE, mAOE and mATE can be larger than 1, a bound is applied to limit them between 0 and 1.

传统的mAP结合了检测的位置、大小和方向的评估,这个设置环境下也不能捕获一些方面,如速度和属性,因此这个基准提出了一个更全面、解耦但简单的度量,nuScenes检测分数(NDS):

N D S = 1 10 [ 5 m A P + ∑ m T P ∈ T P ( 1 − m i n ( 1 , m T P ) ) ] (8) NDS=\frac{1}{10}[5mAP+\sum_{mTP\in\mathbb T\mathbb P}(1-min(1,mTP))]\tag{8} NDS=101[5mAP+mTP∈TP∑(1−min(1,mTP))](8)

其中,mAP是平均平均精度(mAP),TP是由5个真正例指标组成的集合。考虑到mAVE,mAOE和mATE可以大于1,则应用一个界将它们限制在0和1之间。

4.3 Implementation Details

Network Architectures

As shown in Fig. 2, our framework follows the design of FCOS. Given the input image, we utilize ResNet101 as the feature extraction backbone followed by Feature Pyramid Networks (FPN) for generating multi-level predictions. Detection heads are shared among multi-level feature maps except that three scale factors are used to differentiate some of their final regressed results, including offsets, depths, and sizes, respectively. All the convolutional modules are made up of basic convolution, batch normalization, and activation layers, and normal distribution is leveraged for weights initialization. The overall framework is built on top of MMDetection3D [6].

如图2所示,我们的框架遵循了FCOS的设计。给定输入的图像,利用ResNet101作为特征提取的backbone,然后使用特征金字塔网络(FPN)来生成多层次的预测。在多层特征图中共享检测头,除了使用三个尺度因子来区分它们的一些最终回归结果,分别包括偏移量、深度和大小。所有的卷积模块都由基本的卷积、批处理归一化和激活层组成,并利用正态分布进行权值初始化。整个框架建立在MMDetection3D[6]之上。

Training Parameters

For all experiments, we trained randomly initialized networks from scratch following end-to-end manners. Models are trained with an SGD optimizer. Gradient clip and warm-up policy are exploited with the learning rate 0.002, the number of warm-up iterations 500, warm-up ratio 0.33, and batch size 32 on 16 GTX 1080Ti GPUs. We apply a weight of 0.2 for depth regression to train our baseline model to make the training more stable. For a more competitive performance and a more accurate detector, we finetune our model with this weight switched to 1. Related results are presented in the ablation study.

在所有实验中,我们从端到端方式从头训练随机初始化的网络。模型用一个SGD优化器进行训练。在16个GTX 1080Ti GPU上,学习率为0.002,预热迭代次数为500,预热率为0.33,批量大小为32。我们应用0.2的权重进行深度回归来训练我们的基准模型,使训练更稳定。为了获得更有竞争力的性能和更精确的检测器,我们将这个权重切换为1来调整我们的模型。在消融的研究中显示了相关的结果。

Data Augmentation

Like previous work, we only implement image flip for data augmentation both when training

and testing. Note that only the offset is needed to be flipped as 2D attributes and 3D boxes need to be transformed correspondingly in 3D space when flipping images. For test time augmentation, we average the score maps output by the detection heads except rotation and velocity related scores due to their inaccuracy. It is empirically a more efficient approach for augmentation than merging boxes at last.

与之前的工作一样,我们只在训练和测试时实现图像翻转的数据增强。请注意,当翻转图像时,只需要将偏移量作为2D属性进行翻转,而3D边框需要在3D空间中相应地进行转换。对于测试时间的增加,我们将检测头输出的分数图进行平均(除了旋转和速度相关的分数,因为它们的不准确性)。在经验上,这是一种比最终合并boxes更有效的增强方法。

5 Results

In this section, we present quantitative and qualitative results and make a detailed ablation study on essential factors in pushing our method towards the state-of-the-art.

在本节中,我们将介绍定量和定性的结果,并对推动我们的方法走向最先进水平的基本因素进行了详细的消融研究。

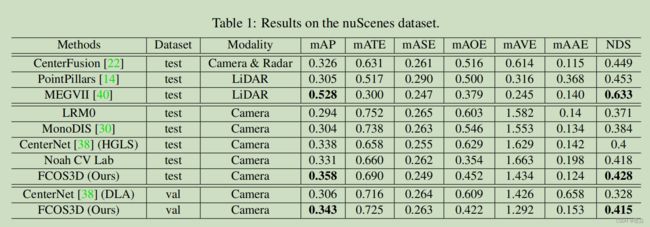

5.1 Quantitative Analysis

First, we show the results of quantitative analysis in Tab. 1. We compare the results on the test set and validation set, respectively. We first compared all the methods using RGB images as the input data on the test set. We achieved the best performance among them with mAP 0.358 and NDS 0.428. In particular, our method exceeded the previous best one by more than 2% in terms of mAP. Benchmarks using LiDAR data as the input include PointPillars [14], which are faster and lighter, and CBGS [40] (MEGVII in the Tab. 1) with relatively high performance. For the approaches which use the input of RGB image and Radar data, we select CenterFusion [22] as the benchmark. It can be seen that although our method has a certain gap with the high-performance CBGS, it even surpasses PointPillars and CenterFusion on mAP. It shows that we can solve this ill-posed problem decently with enough data. At the same time, it can be seen that the methods using other modals of data have relatively better NDS, mainly because the mAVE is smaller. The reason is that other methods introduce continuous multi-frame data, such as point cloud data from consecutive frames, to predict the speed of objects. In addition, Radars can measure the velocity, so CenterFusion can achieve reasonable speed prediction even with a single frame image. However, these can not be achieved with only a single image, so how to mine the speed information from consecutive frame images will be one of the directions that can be explored in the future. For detailed mAP for each category, please refer to Tab. 2 and the official benchmark.

首先,我们在表1中显示了定量分析的结果。 我们分别比较了在测试集和验证集上的结果。我们首先使用RGB图像作为测试集上的输入数据来比较所有的方法。其中mAP为0.358,NDS为0.428。特别是,我们的方法在mAP方面比之前的最佳方法多出了2%以上。使用激光雷达数据作为输入的基准测试包括更快更轻PointPillars[14],和具有相对较高性能的CBGS[40](Tab中的MEGVII。1)。对于使用RGB图像和雷达数据输入的方法,我们选择CenterFusion [22]作为基准。可以看出,虽然我们的方法与高性能的CBGS有一定的差距,但它的mAP甚至超过了PointPillars和CenterFusion。这表明,我们可以用足够的数据体面地解决这个不适定的问题。同时,也可以看出,使用其他数据模式的方法有相对较好的NDS,这主要是因为mAVE较小。其原因是其他方法也引入了连续的多帧数据,如来自连续帧的点云数据,来预测物体的速度。此外,雷达可以测量速度,因此CenterFusion即使只有单帧图像也可以实现合理的速度预测。然而,这并不能仅仅用一幅图像来实现,所以如何从连续的帧图像中挖掘速度信息将是未来可以探索的方向之一。有关每个类别的详细mAP,请参考表2和官方基准。

On the validation set, we compare our method with the best open-source detector, CenterNet. Their method not only takes about three days to train (compared with our only one day to achieve comparable performance, possibly thanks to our pre-trained backbone) but also is inferior to our method except for mATE. In particular, thanks to our rotation encoding scheme, we achieved a significant improvement in the accuracy of angle prediction. The significant improvement of mAP reflects the superiority of our multi-level prediction. Based on all the improvements in these aspects, we finally achieved a gain of about 9% on NDS.

在验证集上,我们将我们的方法与最佳的开源检测器CenterNet进行了比较。他们的方法不仅需要大约三天的训练时间(而我们只有一天就能达到类似的性能,这可能要归功于我们的预先训练过的骨干),而且除了mATE之外,它也不如我们的方法。特别是,由于我们的旋转编码方案,我们在角度预测的精度上取得了显著的提高。mAP的显著改进反映了我们的多层预测的优越性。基于这些方面的所有改进,我们最终在NDS上实现了约9%的收益。

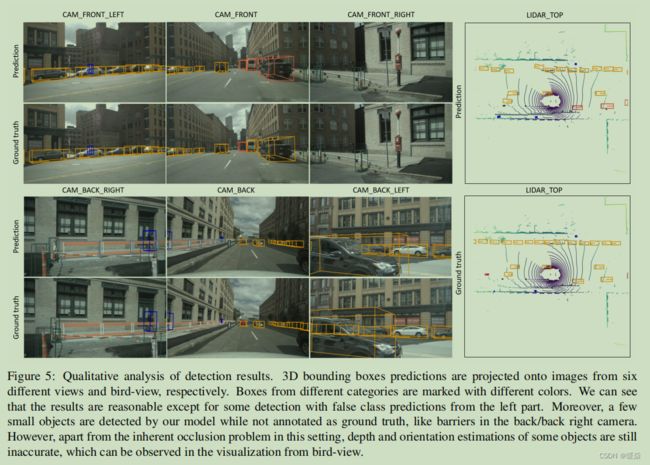

5.2 Qualitative Analysis

Then we show some qualitative results in Fig. 5 to give an intuitive understanding of the performance of our model. First of all, in Fig. 5, we draw the predicted 3D bounding boxes in the six-view images and the top-view point clouds. For example, the barriers in the camera at the rear right are not labeled but detected by our model. However, at the same time, we should also see that our method still has apparent problems in the depth estimation and identification of occluded objects. For example, it is difficult to detect the blocked car in the left rear image. Moreover, from the top view, especially in terms of depth estimation, results are not as good as those shown in the image. This is also in line with our expectation that depth estimation is still the core challenge in this ill-posed problem.

然后,我们在图5中给出了一些定性的结果,以直观地理解我们的模型的性能。首先,在图5中,我们在六视图图像和顶视图点云中绘制了预测的三维边界框。例如,右后部摄像头中的障碍物没有被标记,而是被我们的模型检测到。然而,与此同时,我们也应该看到,我们的方法在被遮挡物体的深度估计和识别方面仍然存在明显的问题。例如,在左后图像中很难检测到被堵塞的汽车。此外,从俯视图来看,特别是在深度估计方面,结果不如图像中所显示的结果好。这也与我们的预期相一致,即深度估计仍然是这个不适定问题的核心挑战。

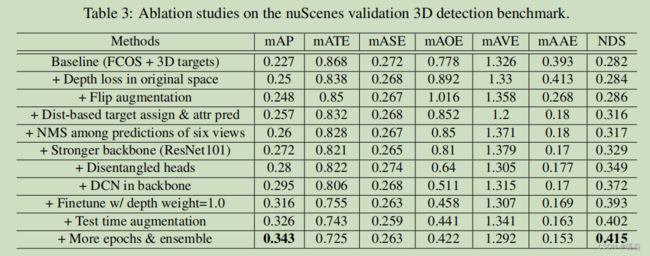

Ablation Studies

Finally, we show some critical factors in the whole process of studying in Tab. 3. It can be seen that in the prophase process, transforming depth back to the original space to compute loss is an essential factor to improve mAP, and distance-based target assignment is an essential factor to improve the overall NDS. The stronger backbone, such as replacing the original ResNet50 with ResNet101 and using DCN, is crucial in the later promotion process. At the same time, due to the difference in scales and measurements, using disentangled heads for different regression targets is also a meaningful way to improve the accuracy of angle prediction and NDS. Finally, we achieve the current state-of-the-art through simple augmentation, more training epochs, and a basic model ensemble.

最后,我们在表3中展示了整个研究过程中的一些关键因素。 可以看出,在前期过程中,将深度转换回原始空间来计算损失是提高mAP的重要因素,而基于距离的目标分配是提高整体NDS的重要因素。更强的backbone,比如用ResNet101取代原来的ResNet50和使用DCN,在以后的推广过程中是至关重要的。同时,由于尺度和测量值的差异,对不同的回归目标使用解纠缠头也是提高角度预测和NDS精度的一种有意义的方法。最后,我们通过简单的增强、更多的训练时代和一个基本的模型集成来实现当前最先进的技术。

6 Conclusion

This paper proposes a simple yet efficient one-stage framework, FCOS3D, for monocular 3D object detection without any 2D detection or 2D-3D correspondence priors. In the framework, we first transform the commonly defined 7-DoF 3D targets to the image domain and decouple them as 2D and 3D attributes to fit the 3D setting. On this basis, the objects are distributed to different feature levels considering their 2D scales and further assigned only according to the 3D centers. In addition, the center-ness is redefined with a 2D Gaussian distribution based on the 3D-center to be compatible with our target formulation. Experimental results with detailed ablation studies show the efficacy of our approach. For future work, a promising direction is how to better tackle the difficulty of depth and orientation estimation in this ill-posed setting.

本文提出了一种简单而有效的一阶段框架FCOS3D,用于没有任何2D检测或2D-3D对应先验的单目3D目标检测。在该框架中,我们首先将通常定义的7-DoF 3D目标转换到图像域,并将它们解耦为二维和三维属性,以适应三维设置。在此基础上,将物体的二维尺度分布到不同的特征层次上,并仅根据三维中心进行进一步分配。此外,采用基于三维中心的二维高斯分布重新定义center-ness,以与我们的目标公式兼容。经过详细的消融研究的实验结果表明了我们的方法的有效性。在未来的工作中,一个很有前途的方向是如何更好地解决深度和方向估计在这个不适定条件下的困难。

Appendix

1 Failure Cases

In Fig. 6, we show some failure cases, mainly focused on the detection of large objects and occluded objects. In the camera view and top view, yellow dotted circles are used to mark the blocked objects that are not successfully detected. Red dotted circles are used to mark the detected large objects with noticeable deviation. The former is mainly manifest in the failure to find the objects behind, while the latter is mainly manifest in the inaccurate estimation of the size and orientation of the objects. The reasons behind the two failure cases are also different. The former is due to the inherent property of the current setting, which is difficult to solve; the latter may be because the receptive field of convolution kernel of the current model is not large enough, resulting in low performance of large object detection. Therefore, the future research direction may be more focused on the solution of the latter.

在图6中,我们展示了一些失败案例,主要集中在大物体和遮挡物体的检测。在摄影机视图和顶视图中,黄色虚线圆圈用于标记未成功检测到的被阻止的对象。红色虚线圈是用来标记检测到的有明显偏差的大物体。前者主要表现在找到后面的物体,而后者主要表现在对物体大小和方向的不准确估计。这两个失败案例背后的原因也有所不同。前者是由于当前设置的固有特性,难以解决;后者可能是由于当前模型的卷积核的感受野不够大,导致大目标检测的性能较低。因此,未来的研究方向可能会更多地关注后者的解决方案。

2 Results on the KITTI Benchmark

We provide FCOS3D baseline results on the KITTI benchmark in the follow-up work, PGD [33]. Since the number of samples on KITTI is limited, vanilla FCOS3D cannot achieve outstanding performance. With the basic enhancement of local geometric constraints and customized designs for depth estimation, PGD (can also be termed as FCOS3D++) finally achieves state-of-the-art or competitive

performance on various benchmarks under different evaluation metrics. Please refer to the paper [33] for more details.

我们在后续工作中,PGD [33]中提供了关于KITTI基准的FCOS3D基准结果。由于KITTI上的样本数量有限,香草FCOS3D无法达到出色的性能。通过局部几何约束的基本增强和深度估计的定制设计,PGD(也可以称为FCOS3D++)最终在不同的评估指标下的各种基准上实现了最先进的或有竞争力的性能。详情请参考论文[33]。

References

[1] Garrick Brazil and Xiaoming Liu. M3d-rpn: Monocular 3d region proposal network for object detection. In IEEE International Conference on Computer Vision, 2019. 1, 2

[2] Garrick Brazil, Gerard Pons-Moll, Xiaoming Liu, and Bernt Schiele. Kinematic 3d object detection in monocular video. In Proceedings of the European Conference on Computer Vision, 2020. 1

[3] Holger Caesar, Varun Bankiti, Alex H. Lang, Sourabh Vora, Venice Erin Liong, Qiang Xu, Anush Krishnan, Yu Pan, Giancarlo Baldan, and Oscar Beijbom. nuscenes: A multimodal dataset for autonomous driving. CoRR, abs/1903.11027, 2019. 2, 5

[4] Xiaozhi Chen, Kaustav Kundu, Yukun Zhu, Andrew G. Berneshawi, Huimin Ma, Sanja Fidler, and Raquel Urtasun. 3d object proposals for accurate object class detection. In Conference on Neural Information Processing Systems, 2015. 2

[5] Yongjian Chen, Lei Tai, Kai Sun, and Mingyang Li. Monopair: Monocular 3d object detection using pairwise spatial relationships. In IEEE Conference on Computer Vision and Pattern Recognition, 2020. 2

[6] MMDetection3D Contributors. MMDetection3D: OpenMMLab next-generation platform for general 3D object detection. https://github.com/open-mmlab/mmdetection3d, 2020. 6

[7] Jifeng Dai, Haozhi Qi, Yuwen Xiong, Yi Li, Guodong Zhang, Han Hu, and Yichen Wei. Deformable convolutional networks. In IEEE International Conference on Computer Vision, 2017. 3

[8] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Fei-Fei Li. Imagenet: A large-scale hierarchical image database. In IEEE Conference on Computer Vision and Pattern Recognition, 2009. 3

[9] Mingyu Ding, Yuqi Huo, Hongwei Yi, Zhe Wang, Jianping Shi, Zhiwu Lu, and Ping Luo. Learning depth-guided convolutions for monocular 3d object detection. In IEEE Conference on Computer Vision and Pattern Recognition, 2020.1

[10] Ross Girshick. Fast r-cnn. In IEEE International Conference on Computer Vision, 2015. 2

[11] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In IEEE Conference on Computer Vision and Pattern Recognition, 2016.3

[12] Lichao Huang, Yi Yang, Yafeng Deng, and Yinan Yu. Densebox: Unifying landmark localization with end to end object detection. In IEEE Conference on Computer Vision and Pattern Recognition, 2015. 2

[13] Eskil J¨orgensen, Christopher Zach, and Fredrik Kahl. Monocular 3d object detection and box fitting trained end-to-end using intersection-over-union loss. CoRR, abs/1906.08070, 2019. 1, 2

[14] Alex H. Lang, Sourabh Vora, Holger Caesar, Lubing Zhou, Jiong Yang, and Oscar Beijbom. Pointpillars: Fast encoders for object detection from point clouds. In IEEE Conference

on Computer Vision and Pattern Recognition, 2019. 1, 6, 7

[15] Hei Law and Jia Deng. Cornernet: Detecting objects as paired keypoints. In European Conference on Computer Vision, 2018. 2

[16] Peixuan Li, Huaici Zhao, Pengfei Liu, and Feidao Cao. Rtm3d: Real-time monocular 3d detection from object keypoints for autonomous driving. In European Conference on Computer Vision, 2020. 1, 2

[17] Tsung-Yi Lin, Piotr Doll´ar, Ross Girshick, Kaiming He, Bharath Hariharan, and Serge Belongie. Feature pyramid networks for object detection. In IEEE Conference on Computer Vision and Pattern Recognition, 2017. 3

[18] Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Doll´ar. Focal loss for dense object detection. In IEEE Conference on Computer Vision and Pattern Recognition,

2017. 1, 3, 4

[19] Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, and Alexander C. Berg. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, 2016. 2

[20] Fabian Manhardt, Wadim Kehl, and Adrien Gaidon. Roi-10d: Monocular lifting of 2d detection to 6d pose and metric shape. In IEEE Conference on Computer Vision and Pattern

Recognition, 2019. 1

[21] Arsalan Mousavian, Dragomir Anguelov, John Flynn, and Jana Kosecka. 3d bounding box estimation using deep learning and geometry. In IEEE Conference on Computer Vision and Pattern Recognition, 2017. 2

[22] Ramin Nabati and Hairong Qi. Centerfusion: Center-based radar and camera fusion for 3d object detection. In IEEE Winter Conference on Applications of Computer Vision, 2020. 6, 7

[23] Rui Qian, Divyansh Garg, Yan Wang, Yurong You, Serge Belongie, Bharath Hariharan, Mark Campbell, Kilian Q Weinberger, and Wei-Lun Chao. End-to-end pseudo-lidar for image-based 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5881–5890, 2020. 2

[24] Cody Reading, Ali Harakeh, Julia Chae, and Steven L. Waslander. Categorical depth distributionnetwork for monocular 3d object detection. CVPR, 2021. 2

[25] Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi. You only look once: Unified, real-time object detection. In IEEE Conference on Computer Vision and Pattern

Recognition, 2016. 2

[26] Joseph Redmon and Ali Farhadi. Yolo9000: Better, faster, stronger. In IEEE Conference on Computer Vision and Pattern Recognition, 2017. 2

[27] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster r-cnn: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems, 2015. 1, 2

[28] Thomas Roddick, Alex Kendall, and Roberto Cipolla. Orthographic feature transform for monocular 3d object detection. CoRR, abs/1811.08188, 2018. 2

[29] Shaoshuai Shi, Xiaogang Wang, and Hongsheng Li. Pointrcnn: 3d object proposal generation and detection from point cloud. In IEEE Conference on Computer Vision and Pattern Recognition, 2019. 1

[30] Andrea Simonelli, Samuel Rota Rota Bul`o, Lorenzo Porzi, Manuel L´opez-Antequera, and Peter Kontschieder. Disentangling monocular 3d object detection. In IEEE International Conference on Computer Vision, 2019. 1, 2, 6, 7

[31] Zhi Tian, Chunhua Shen, Hao Chen, and Tong He. Fcos: Fully convolutional one-stage object detection. In IEEE Conference on Computer Vision and Pattern Recognition, 2019.

1, 2, 3, 4

[32] Tai Wang, Xinge Zhu, and Dahua Lin. Reconfigurable voxels: A new representation for lidar-based point clouds. In Conference on Robot Learning, 2020. 1

[33] Tai Wang, Xinge Zhu, Jiangmiao Pang, and Dahua Lin. Probabilistic and geometric depth: Detecting objects in perspective. In Conference on Robot Learning, 2021. 11

[34] Xinlong Wang, Wei Yin, Tao Kong, Yuning Jiang, Lei Li, and Chunhua Shen. Task-aware monocular depth estimation for 3d object detection. In AAAI Conference on Artificial Intelligence, 2020. 2

[35] Yan Wang, Wei-Lun Chao, Divyansh Garg, Bharath Hariharan, Mark Campbell, and Kilian Q. Weinberger. Pseudo-lidar from visual depth estimation: Bridging the gap in 3d object

detection for autonomous driving. In IEEE Conference on Computer Vision and Pattern Recognition, 2019. 2

[36] Bin Xu and Zhenzhong Chen. Multi-level fusion based 3d object detection from monocular images. In IEEE Conference on Computer Vision and Pattern Recognition, 2018. 2

[37] Yurong You, Yan Wang, Wei-Lun Chao, Divyansh Garg, Geoff Pleiss, Bharath Hariharan, Mark Campbell, and Kilian Q Weinberger. Pseudo-lidar++: Accurate depth for 3d object detection in autonomous driving. In ICLR, 2020. 2

[38] Xingyi Zhou, Dequan Wang, and Philipp Kr¨ahenb¨uhl. Objects as points. CoRR, abs/1904.07850, 2019. 2, 6, 7

[39] Yin Zhou and Oncel Tuzel. Voxelnet: End-to-end learning for point cloud based 3d object detection. In IEEE Conference on Computer Vision and Pattern Recognition, 2018. 1

[40] Benjin Zhu, Zhengkai Jiang, Xiangxin Zhou, Zeming Li, and Gang Yu. Class-balanced grouping and sampling for point cloud 3d object detection. CoRR, abs/1908.09492, 2019. 6, 7

[41] Xinge Zhu, Yuexin Ma, Tai Wang, Yan Xu, Jianping Shi, and Dahua Lin. Ssn: Shape signature networks for multiclass object detection from point clouds. In Proceedings of

the European Conference on Computer Vision, 2020. 1

[42] Xinge Zhu, Hui Zhou, Tai Wang, Fangzhou Hong, Yuexin Ma, Wei Li, Hongsheng Li, and Dahua Lin. Cylindrical and asymmetrical 3d convolution networks for lidar segmentation. In Proceedings of the European Conference on Computer Vision, 2021. 1