Learning Kubernetes - K8S Installation - Installing a Highly Available K8S Cluster with Kubeadm


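- 1. Kubernetes High-Availability Installation

- 1.1 Installing a highly available k8s 1.23.1 cluster with kubeadm

- 1.1.1 Basic environment configuration

- 1.1.2 Kernel configuration

- 1.1.3 Basic component installation

- 1.1.4 High-availability component installation

- 1.1.5 Calico installation

- 1.1.6 High-availability masters

- 1.1.7 Node configuration

- 1.1.8 Metrics deployment

- 1.1.9 Dashboard deployment

- 1.1.10 Some required configuration changes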

1. Kubernetes High-Availability Installation

1.1 Installing a highly available k8s 1.23.1 cluster with kubeadm

1.1.1 Basic environment configuration

- kubectl debug: setting up an ephemeral container

- Sidecar

- Volume: changing directory permissions with fsGroup

- ConfigMap and Secret

Official K8S documentation: https://kubernetes.io/docs/setup/

Latest HA installation guide: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/

HA Kubernetes cluster plan:

| Hostname | IP address | Description |
|---|---|---|
| k8s-master01 | 10.0.0.100 | master node 01 |
| k8s-master02 | 10.0.0.101 | master node 02 |
| k8s-master03 | 10.0.0.102 | master node 03 |
| k8s-master-lb | 10.0.0.200 | keepalived virtual IP (VIP) |
| k8s-node01 | 10.0.0.103 | worker node 01 |
| k8s-node02 | 10.0.0.104 | worker node 02 |

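The VIP (virtual IP) must not collide with any IP already used on the company network: ping it first and use it only if there is no reply. The VIP must also be on the same LAN as the hosts.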
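A minimal sketch of the two VIP checks, assuming a /24 prefix as used by the hosts in this plan (check the real prefix with ip a). The helper names ip_to_int, same_subnet and vip_is_free are hypothetical. A host that drops ICMP will not answer ping, so a missing reply does not prove the address is unused.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for vetting a candidate keepalived VIP (pure bash + ping).

# ip_to_int: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# same_subnet IP1 IP2 PREFIX: succeed if both addresses are in the same network.
same_subnet() {
  local prefix=$3 mask
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}

# vip_is_free IP: succeed if nothing answers a single ping within one second.
vip_is_free() {
  ! ping -c 1 -W 1 "$1" >/dev/null 2>&1
}
```

For example: same_subnet 10.0.0.200 10.0.0.100 24 && vip_is_free 10.0.0.200 && echo "10.0.0.200 looks usable".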

Server environment:

| IP address | OS version | Kernel version | CPU / Memory / Disk |
|---|---|---|---|
| 10.0.0.100 | CentOS 8.4.2105 | 4.18.0-305.3.1.el8.x86_64 | 2 CPU / 2 GB / 30 GB |
| 10.0.0.101 | CentOS 8.4.2105 | 4.18.0-305.3.1.el8.x86_64 | 2 CPU / 2 GB / 30 GB |
| 10.0.0.102 | CentOS 8.4.2105 | 4.18.0-305.3.1.el8.x86_64 | 2 CPU / 2 GB / 30 GB |
| 10.0.0.103 | CentOS 8.4.2105 | 4.18.0-305.3.1.el8.x86_64 | 2 CPU / 2 GB / 30 GB |
| 10.0.0.104 | CentOS 8.4.2105 | 4.18.0-305.3.1.el8.x86_64 | 2 CPU / 2 GB / 30 GB |

Set the hostname on every node (run the matching command on each node):

[root@localhost ~]# hostnamectl set-hostname k8s-master01

[root@localhost ~]# bash

[root@k8s-master01 ~]#

[root@localhost ~]# hostnamectl set-hostname k8s-master02

[root@localhost ~]# bash

[root@k8s-master02 ~]#

[root@localhost ~]# hostnamectl set-hostname k8s-master03

[root@localhost ~]# bash

[root@k8s-master03 ~]#

[root@localhost ~]# hostnamectl set-hostname k8s-node01

[root@localhost ~]# bash

[root@k8s-node01 ~]#

[root@localhost ~]# hostnamectl set-hostname k8s-node02

[root@localhost ~]# bash

[root@k8s-node02 ~]#

Configure hosts on all nodes by editing /etc/hosts as follows:

[root@k8s-master01 ~]# vi /etc/hosts

[root@k8s-master01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.100 k8s-master01

10.0.0.101 k8s-master02

10.0.0.102 k8s-master03

10.0.0.200 k8s-master-lb

10.0.0.103 k8s-node01

10.0.0.104 k8s-node02
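Editing /etc/hosts by hand on five nodes is error-prone. A sketch of an idempotent alternative, assuming the addresses from the plan above; add_cluster_hosts is a hypothetical helper that appends an entry only if its hostname is not already present, so it can be re-run safely.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the cluster's host entries to a hosts file,
# skipping any hostname that is already present, so re-running is harmless.

CLUSTER_HOSTS='10.0.0.100 k8s-master01
10.0.0.101 k8s-master02
10.0.0.102 k8s-master03
10.0.0.200 k8s-master-lb
10.0.0.103 k8s-node01
10.0.0.104 k8s-node02'

add_cluster_hosts() {
  local file=${1:-/etc/hosts} ip name
  while read -r ip name; do
    # The hostname must appear as a whole field, not as part of a longer name.
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
      printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
  done <<< "$CLUSTER_HOSTS"
}
```

Run add_cluster_hosts /etc/hosts as root on each node.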

Disable firewalld, dnsmasq, SELinux, and swap on all nodes (the dnsmasq error below only means that it is not installed):

[root@k8s-master01 ~]# systemctl disable --now firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@k8s-master01 ~]# systemctl disable --now dnsmasq

Failed to disable unit: Unit file dnsmasq.service does not exist.

[root@k8s-master01 ~]# sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

[root@k8s-master01 ~]# sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

[root@k8s-master01 ~]# reboot

...

[root@k8s-master03 ~]# setenforce 0

setenforce: SELinux is disabled

[root@k8s-master01 ~]# sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

[root@k8s-master01 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Sat Jan 1 13:29:34 2022

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/cl-root / xfs defaults 0 0

UUID=011be562-0336-49cc-a85e-71e7a4ea93a8 /boot xfs defaults 0 0

/dev/mapper/cl-home /home xfs defaults 0 0

#/dev/mapper/cl-swap none swap defaults 0 0

[root@k8s-master01 ~]# swapoff -a && sysctl -w vm.swappiness=0

vm.swappiness = 0

Install ntpdate (provided here by the wntp package from the wlnmp repository):

[root@k8s-master01 ~]# rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

Retrieving http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

Verifying... ################################# [100%]

Preparing... ################################# [100%]

Updating / installing...

1:wlnmp-release-centos-2-1 ################################# [100%]

[root@k8s-master01 ~]# yum install wntp -y

CentOS Linux 8 - AppStream 6.5 MB/s | 8.4 MB 00:01

CentOS Linux 8 - BaseOS 107 kB/s | 4.6 MB 00:43

...

Installed:

wntp-4.2.8p15-1.el8.x86_64

Complete!

Synchronize the time on all nodes as follows:

[root@k8s-master01 ~]# ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

[root@k8s-master01 ~]# echo 'Asia/Shanghai' >/etc/timezone

[root@k8s-master01 ~]# ntpdate time2.aliyun.com

1 Jan 16:24:32 ntpdate[1179]: step time server 203.107.6.88 offset -28798.560596 sec

# Add to crontab

[root@k8s-master01 ~]# crontab -e

no crontab for root - using an empty one

crontab: installing new crontab

[root@k8s-master01 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com

# To also sync at boot, add the ntpdate command to /etc/rc.local

[root@k8s-master01 ~]# ntpdate time2.aliyun.com

1 Jan 16:25:32 ntpdate[1184]: adjust time server 203.107.6.88 offset -0.000941 sec

[root@k8s-master01 ~]#
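Running crontab -e on every node and pasting the job by hand can leave duplicate entries. A sketch that rewrites the crontab non-interactively, assuming the ntpdate path and time server used above; merge_ntp_job is a hypothetical helper that drops any existing ntpdate line and appends a single job.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the current crontab on stdin and print it with
# every ntpdate line replaced by exactly one ntpdate job.
NTP_JOB='*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com'

merge_ntp_job() {
  grep -v 'ntpdate' || true   # keep other entries; grep -v exits 1 if it selects no lines
  printf '%s\n' "$NTP_JOB"
}
```

Usage: crontab -l 2>/dev/null | merge_ntp_job | crontab -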

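Configure resource limits on all nodes. Note that a soft limit cannot exceed its hard limit: below, the soft nofile value (655360) is larger than the hard value (131072), so consider raising the hard nofile value to at least 655360: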

[root@k8s-master01 ~]# ulimit -SHn 65535

[root@k8s-master01 ~]# vi /etc/security/limits.conf

# Append the following at the end of the file

* soft nofile 655360

* hard nofile 131072

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

[root@k8s-master01 ~]# cat /etc/security/limits.conf

...

#@student - maxlogins 4

# End of file

* soft nofile 655360

* hard nofile 131072

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited
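A sketch of a sanity check for limits.conf, assuming the simple "domain type item value" layout used above; check_limits is a hypothetical helper. It reports any item whose soft value is greater than its hard value (treating unlimited as infinite) and exits non-zero, which flags the nofile pair above.

```shell
#!/usr/bin/env bash
# Hypothetical checker: report limits.conf entries whose soft value exceeds the
# hard value for the same domain and item ("unlimited"/"infinity" = infinite).
check_limits() {
  awk '
    $1 !~ /^#/ && NF >= 4 && ($2 == "soft" || $2 == "hard") {
      key = $1 " " $3
      v = ($4 == "unlimited" || $4 == "infinity") ? -1 : $4 + 0
      if ($2 == "soft") soft[key] = v; else hard[key] = v
    }
    END {
      bad = 0
      for (k in soft) {
        if (!(k in hard)) continue
        s = soft[k]; h = hard[k]
        # -1 means infinite: an infinite hard limit never conflicts.
        if (h != -1 && (s == -1 || s > h)) { print "soft > hard: " k; bad = 1 }
      }
      exit bad
    }' "${1:-/etc/security/limits.conf}"
}
```

Usage: check_limits /etc/security/limits.conf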

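Set up passwordless SSH from Master01 to the other nodes. During installation, configuration files and certificates are generated on Master01, and the cluster is also managed from Master01. On Alibaba Cloud or AWS, a separate kubectl server is needed. Configure the keys as follows: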

[root@k8s-master01 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:vfVM2Ko2K08tlgait/2qldXg1tf1jjPbXGGIooB5A1s root@k8s-master01

The key's randomart image is:

+---[RSA 3072]----+

| |

| |

| . E . .|

| * o +.o..o|

| + +. S.=.=.+oo|

| ..o..=.= *.o.|

| . ..o B o * o|

| . +.+oo *.|

| o.o*=o . o|

+----[SHA256]-----+

[root@k8s-master01 ~]# for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'k8s-master01 (10.0.0.100)' can't be established.

ECDSA key fingerprint is SHA256:hiGOVC01QsC2Mno7Chucvj2lYf8vRIVrQ2AlMn96pxM.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-master01's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-master01'"

and check to make sure that only the key(s) you wanted were added.

...

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host 'k8s-node02 (10.0.0.104)' can't be established.

ECDSA key fingerprint is SHA256:qqhCI3qRQrs1+3jcovSkU947ZDcc7QEBiq9lUfVTfxs.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-node02's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-node02'"

and check to make sure that only the key(s) you wanted were added.
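The loop above spells out every hostname. A sketch that derives the list from /etc/hosts instead, assuming every cluster node name starts with k8s- as in this plan; k8s_nodes is a hypothetical helper that skips k8s-master-lb, since that name only points at whichever master currently holds the VIP.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list cluster node names from a hosts file, skipping the
# keepalived VIP entry, so loops such as ssh-copy-id need no hand-typed list.
k8s_nodes() {
  awk '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i ~ /^k8s-/ && $i != "k8s-master-lb") print $i }' \
    "${1:-/etc/hosts}"
}
```

For example: for i in $(k8s_nodes); do ssh-copy-id -i ~/.ssh/id_rsa.pub "$i"; done, then confirm that ssh -o BatchMode=yes "$i" hostname succeeds for each node without a password prompt.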

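The repo directory in the source repository is configured to use package mirrors in China; copy it to all nodes.

In this walkthrough the repository was downloaded in advance and uploaded to all nodes: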

git clone https://github.com/dotbalo/k8s-ha-install.git

[root@k8s-master01 ~]# ls

anaconda-ks.cfg k8s-ha-install

Configure the CentOS 8 repositories as follows:

[root@k8s-master01 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-8.repo

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 2595 100 2595 0 0 8034 0 --:--:-- --:--:-- --:--:-- 8034

[root@k8s-master01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

Repository extras is listed more than once in the configuration

CentOS-8 - Base - mirrors.aliyun.com 9.7 MB/s | 4.6 MB 00:00

CentOS-8 - Extras - mirrors.aliyun.com 34 kB/s | 10 kB 00:00

...

Upgraded:

device-mapper-8:1.02.177-10.el8.x86_64 device-mapper-event-8:1.02.177-10.el8.x86_64 device-mapper-event-libs-8:1.02.177-10.el8.x86_64 device-mapper-libs-8:1.02.177-10.el8.x86_64

device-mapper-persistent-data-0.9.0-4.el8.x86_64 dnf-plugins-core-4.0.21-3.el8.noarch lvm2-8:2.03.12-10.el8.x86_64 lvm2-libs-8:2.03.12-10.el8.x86_64

python3-dnf-plugins-core-4.0.21-3.el8.noarch

Installed:

yum-utils-4.0.21-3.el8.noarch

Complete!

[root@k8s-master01 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Repository extras is listed more than once in the configuration

Adding repo from: https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-master01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@k8s-master01 ~]# sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

[root@k8s-master01 ~]#

Install the required tools:

[root@k8s-master01 ~]# yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 -y

Repository extras is listed more than once in the configuration

CentOS-8 - Base - mirrors.aliyun.com 21 kB/s | 3.9 kB 00:00

CentOS-8 - Extras - mirrors.aliyun.com 9.9 kB/s | 1.5 kB 00:00

...

telnet-1:0.17-76.el8.x86_64

vim-common-2:8.0.1763-16.el8.x86_64

vim-enhanced-2:8.0.1763-16.el8.x86_64

vim-filesystem-2:8.0.1763-16.el8.noarch

wget-1.19.5-10.el8.x86_64

Complete!

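Update the system on all nodes and reboot. The kernel is excluded here; it is upgraded separately in the next section:

yum update -y --exclude='kernel*' && reboot # required on CentOS 7, not needed on CentOS 8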

1.1.2 Kernel configuration

On CentOS 7, the kernel must be upgraded to 4.18 or later:

https://www.kernel.org/ and https://elrepo.org/linux/kernel/el7/x86_64/

On CentOS 7, installing the kernel with dnf may not work:

dnf --disablerepo=\* --enablerepo=elrepo -y install kernel-ml kernel-ml-devel

grubby --default-kernel

Install the latest kernel as follows instead:

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

List the latest available kernels:

[root@k8s-node01 ~]# yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* elrepo-kernel: mirrors.neusoft.edu.cn

elrepo-kernel | 2.9 kB 00:00:00

elrepo-kernel/primary_db | 1.9 MB 00:00:00

Available Packages

elrepo-release.noarch 7.0-5.el7.elrepo elrepo-kernel

kernel-lt.x86_64 4.4.229-1.el7.elrepo elrepo-kernel

kernel-lt-devel.x86_64 4.4.229-1.el7.elrepo elrepo-kernel

kernel-lt-doc.noarch 4.4.229-1.el7.elrepo elrepo-kernel

kernel-lt-headers.x86_64 4.4.229-1.el7.elrepo elrepo-kernel

kernel-lt-tools.x86_64 4.4.229-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs.x86_64 4.4.229-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs-devel.x86_64 4.4.229-1.el7.elrepo elrepo-kernel

kernel-ml.x86_64 5.7.7-1.el7.elrepo elrepo-kernel

kernel-ml-devel.x86_64 5.7.7-1.el7.elrepo elrepo-kernel

kernel-ml-doc.noarch 5.7.7-1.el7.elrepo elrepo-kernel

kernel-ml-headers.x86_64 5.7.7-1.el7.elrepo elrepo-kernel

kernel-ml-tools.x86_64 5.7.7-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs.x86_64 5.7.7-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs-devel.x86_64 5.7.7-1.el7.elrepo elrepo-kernel

perf.x86_64 5.7.7-1.el7.elrepo elrepo-kernel

python-perf.x86_64 5.7.7-1.el7.elrepo elrepo-kernel

Install the latest mainline kernel:

yum --enablerepo=elrepo-kernel install kernel-ml kernel-ml-devel -y

Reboot after the installation completes.

Change the default boot kernel:

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg && grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)" && reboot

After rebooting, check the kernel version:

[appadmin@k8s-node01 ~]$ uname -a

Linux k8s-node01 5.7.7-1.el7.elrepo.x86_64 #1 SMP Wed Jul 1 11:53:16 EDT 2020 x86_64 x86_64 x86_64 GNU/Linux
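To check the 4.18+ requirement in a script rather than by eye, a sketch using GNU sort -V for version ordering; kernel_at_least is a hypothetical helper that strips the distribution suffix (everything after the first "-") before comparing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if kernel release $2 (default: the running
# kernel) is at least version $1, using GNU sort's version ordering.
kernel_at_least() {
  local want=$1 have=${2:-$(uname -r)}
  have=${have%%-*}   # drop the distribution suffix, e.g. "-305.3.1.el8.x86_64"
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}
```

Usage: kernel_at_least 4.18 || echo "kernel too old, upgrade first"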

On CentOS 8, upgrade only if needed:

Use dnf, or follow the same steps as above (in that case, use the elrepo-release-8.1 package):

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

dnf install https://www.elrepo.org/elrepo-release-8.1-1.el8.elrepo.noarch.rpm

dnf --disablerepo=\* --enablerepo=elrepo -y install kernel-ml kernel-ml-devel

grubby --default-kernel && reboot

Install ipvsadm on all nodes:

[root@k8s-master01 ~]# yum install ipvsadm ipset sysstat conntrack libseccomp -y

Repository extras is listed more than once in the configuration

Last metadata expiration check: -1 day, 16:38:19 ago on Sun 02 Jan 2022 12:20:14 AM CST.

Package ipset-7.1-1.el8.x86_64 is already installed.

Package libseccomp-2.5.1-1.el8.x86_64 is already installed.

...

Installed:

conntrack-tools-1.4.4-10.el8.x86_64 ipvsadm-1.31-1.el8.x86_64

libnetfilter_cthelper-1.0.0-15.el8.x86_64 libnetfilter_cttimeout-1.0.0-11.el8.x86_64

libnetfilter_queue-1.0.4-3.el8.x86_64 lm_sensors-libs-3.4.0-23.20180522git70f7e08.el8.x86_64

sysstat-11.7.3-6.el8.x86_64

Complete!

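Configure the IPVS modules on all nodes. In kernel 4.19 and later, nf_conntrack_ipv4 was merged into nf_conntrack. This example runs kernel 4.18, but as the modprobe error below shows, the CentOS 8 kernel (4.18.0-305) already provides only nf_conntrack, so load nf_conntrack instead: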

[root@k8s-master01 ~]# modprobe -- ip_vs

[root@k8s-master01 ~]# modprobe -- ip_vs_rr

[root@k8s-master01 ~]# modprobe -- ip_vs_wrr

[root@k8s-master01 ~]# modprobe -- ip_vs_sh

[root@k8s-master01 ~]# modprobe -- nf_conntrack_ipv4

modprobe: FATAL: Module nf_conntrack_ipv4 not found in directory /lib/modules/4.18.0-305.3.1.el8.x86_64

[root@k8s-master01 ~]# modprobe -- nf_conntrack

[root@k8s-master01 ~]# vim /etc/modules-load.d/ipvs.conf

[root@k8s-master01 ~]# cat /etc/modules-load.d/ipvs.conf

# Add the following content

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

ip_vs_sh

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip
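A sketch that writes the same module list without the duplicated ip_vs_sh entry and picks the conntrack module name automatically, assuming modinfo is available (if it is not, the helper falls back to nf_conntrack). IPVS_MODULES, conntrack_module and write_ipvs_conf are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the IPVS module list to a modules-load.d file,
# choosing the conntrack module name that exists for this kernel.

IPVS_MODULES="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr
ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp
ip_tables ip_set xt_set ipt_set ipt_rpfilter ipt_REJECT ipip"

conntrack_module() {
  # Older kernels ship nf_conntrack_ipv4; newer ones (and the CentOS 8
  # kernel used here) only have nf_conntrack.
  if modinfo nf_conntrack_ipv4 >/dev/null 2>&1; then
    echo nf_conntrack_ipv4
  else
    echo nf_conntrack
  fi
}

write_ipvs_conf() {
  local out=${1:-/etc/modules-load.d/ipvs.conf}
  # One module per line; awk drops any repeated name while keeping the order.
  { printf '%s\n' $IPVS_MODULES; conntrack_module; } | awk '!seen[$0]++' > "$out"
}
```

Usage: write_ipvs_conf /etc/modules-load.d/ipvs.conf && systemctl restart systemd-modules-load.service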

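Then run systemctl enable --now systemd-modules-load.service. The message below is harmless: systemd-modules-load.service is a static unit that systemd already runs at boot.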

[root@k8s-master01 ~]# systemctl enable --now systemd-modules-load.service

The unit files have no installation config (WantedBy, RequiredBy, Also, Alias

settings in the [Install] section, and DefaultInstance for template units).

This means they are not meant to be enabled using systemctl.

Possible reasons for having this kind of units are:

1) A unit may be statically enabled by being symlinked from another unit's

.wants/ or .requires/ directory.

2) A unit's purpose may be to act as a helper for some other unit which has

a requirement dependency on it.

3) A unit may be started when needed via activation (socket, path, timer,

D-Bus, udev, scripted systemctl call, ...).

4) In case of template units, the unit is meant to be enabled with some

instance name specified.

Check that the modules are loaded:

[root@k8s-master01 ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 1 ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 3 nf_conntrack,xfs,ip_vs

[root@k8s-master01 ~]# lsmod | grep -e ip_vs -e nf_conntrack

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 1 ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 3 nf_conntrack,xfs,ip_vs

Enable the kernel parameters a k8s cluster requires, on all nodes:

[root@k8s-master01 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

[root@k8s-master01 ~]# sysctl --system

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-coredump.conf ...

kernel.core_pattern = |/usr/lib/systemd/systemd-coredump %P %u %g %s %t %c %h %e

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.all.promote_secondaries = 1

net.core.default_qdisc = fq_codel

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /usr/lib/sysctl.d/50-libkcapi-optmem_max.conf ...

net.core.optmem_max = 81920

* Applying /usr/lib/sysctl.d/50-pid-max.conf ...

kernel.pid_max = 4194304

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.ipv4.ip_forward = 1

vm.overcommit_memory = 1

vm.panic_on_oom = 0

fs.inotify.max_user_watches = 89100

fs.file-max = 52706963

fs.nr_open = 52706963

net.netfilter.nf_conntrack_max = 2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

* Applying /etc/sysctl.conf ...
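In the sysctl --system output above, the net.bridge.bridge-nf-call-* keys, fs.may_detach_mounts and net.ipv4.ip_conntrack_max do not appear, and net.ipv4.tcp_max_syn_backlog is applied twice because it is defined twice in k8s.conf. The net.bridge.* keys exist only after the br_netfilter module is loaded (for example with modprobe br_netfilter), so that module may need to be loaded and listed in a modules-load.d file as well. A hedged sketch of a checker, assuming keys map to files under /proc/sys with dots replaced by slashes; sysctl_keys and check_sysctl_conf are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical checker for a sysctl.d file: reports keys defined more than once
# and keys with no matching file under the proc root (default /proc/sys).

sysctl_keys() {
  # Print the key of every "key = value" or "key=value" line; skip comments.
  sed -n 's/^\([^#;[:space:]][^=[:space:]]*\)[[:space:]]*=.*/\1/p' "$1"
}

check_sysctl_conf() {
  local conf=$1 root=${2:-/proc/sys} key path status=0
  for key in $(sysctl_keys "$conf" | sort | uniq -d); do
    echo "duplicate: $key"
    status=1
  done
  for key in $(sysctl_keys "$conf" | sort -u); do
    # net.ipv4.ip_forward is exposed as <root>/net/ipv4/ip_forward.
    path=$root/$(printf '%s' "$key" | tr . /)
    [ -e "$path" ] || { echo "missing: $key"; status=1; }
  done
  return "$status"
}
```

Usage: check_sysctl_conf /etc/sysctl.d/k8s.conf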

After configuring the kernel on all nodes, reboot the servers and verify that the modules are still loaded after the reboot:

[root@k8s-master01 ~]# reboot

...

[root@k8s-master01 ~]# lsmod | grep --color=auto -e ip_vs -e nf_conntrack

ip_vs_ftp 16384 0

nf_nat 45056 1 ip_vs_ftp

ip_vs_sed 16384 0

ip_vs_nq 16384 0

ip_vs_fo 16384 0

ip_vs_sh 16384 0

ip_vs_dh 16384 0

ip_vs_lblcr 16384 0

ip_vs_lblc 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs_wlc 16384 0

ip_vs_lc 16384 0

ip_vs 172032 24 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp

nf_conntrack 172032 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs
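Grepping lsmod only shows what matches the pattern. A sketch that compares the module list in ipvs.conf against lsmod instead; missing_modules is a hypothetical helper. Modules built into the kernel, or names that are aliases of another module (such as ipt_set, which is normally loaded as xt_set), will be reported even though they work, so treat the output as a list to double-check.

```shell
#!/usr/bin/env bash
# Hypothetical checker: print modules named in a modules-load.d file that do
# not appear in the lsmod output read from stdin.
missing_modules() {
  local conf=${1:-/etc/modules-load.d/ipvs.conf} loaded m
  # Module names from the first column of lsmod (header line skipped).
  loaded=$(awk 'NR > 1 { print $1 }')
  grep -Ev '^[[:space:]]*(#|$)' "$conf" | sort -u | while read -r m; do
    printf '%s\n' "$loaded" | grep -qxF -- "$m" || echo "$m"
  done
}
```

Usage: lsmod | missing_modules /etc/modules-load.d/ipvs.conf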

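1.1.3 Basic component installation

This section installs the components used by the cluster, such as Docker CE and the Kubernetes components.

List the available docker-ce versions: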

[root@k8s-master01 ~]# yum list docker-ce.x86_64 --showduplicates | sort -r

Repository extras is listed more than once in the configuration

Last metadata expiration check: 0:57:48 ago on Sun 02 Jan 2022 12:20:14 AM CST.

docker-ce.x86_64 3:20.10.9-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.8-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.7-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.6-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.5-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.4-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.3-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.2-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.1-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.12-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.11-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.10-3.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.0-3.el8 docker-ce-stable

docker-ce.x86_64 3:19.03.15-3.el8 docker-ce-stable

docker-ce.x86_64 3:19.03.14-3.el8 docker-ce-stable

docker-ce.x86_64 3:19.03.13-3.el8 docker-ce-stable

Available Packages
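sort -r compares the lines as plain text, which is why 3:20.10.9 is listed above 3:20.10.12 even though 20.10.12 is newer (and is the version yum installs below). A sketch that orders versions with GNU sort -V instead; versions_newest_first is a hypothetical helper that keeps only the three-column package lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "yum list <pkg> --showduplicates" output on stdin
# and print the package versions newest first, using version-aware sorting.
versions_newest_first() {
  # Package lines look like "name.arch version repo"; print the version column.
  awk 'NF == 3 && $1 ~ /\./ && $2 ~ /^[0-9]/ { print $2 }' | sort -Vr -u
}
```

Usage: yum list docker-ce.x86_64 --showduplicates | versions_newest_first | head -n 3. The same helper works for the kubeadm listing below.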

Install the latest Docker version:

[root@k8s-master01 ~]# yum install docker-ce -y

Repository extras is listed more than once in the configuration

Last metadata expiration check: 1:00:16 ago on Sun 02 Jan 2022 12:20:14 AM CST.

Dependencies resolved.

===========================================================================================================================================================================================================

Package Architecture Version Repository Size

===========================================================================================================================================================================================================

Installing:

docker-ce x86_64 3:20.10.12-3.el8 docker-ce-stable 22 M

...

Upgraded:

policycoreutils-2.9-16.el8.x86_64

Installed:

checkpolicy-2.9-1.el8.x86_64 container-selinux-2:2.167.0-1.module_el8.5.0+911+f19012f9.noarch containerd.io-1.4.12-3.1.el8.x86_64

docker-ce-3:20.10.12-3.el8.x86_64 docker-ce-cli-1:20.10.12-3.el8.x86_64 docker-ce-rootless-extras-20.10.12-3.el8.x86_64

docker-scan-plugin-0.12.0-3.el8.x86_64 fuse-overlayfs-1.7.1-1.module_el8.5.0+890+6b136101.x86_64 fuse3-3.2.1-12.el8.x86_64

fuse3-libs-3.2.1-12.el8.x86_64 libcgroup-0.41-19.el8.x86_64 libslirp-4.4.0-1.module_el8.5.0+890+6b136101.x86_64

policycoreutils-python-utils-2.9-16.el8.noarch python3-audit-3.0-0.17.20191104git1c2f876.el8.x86_64 python3-libsemanage-2.9-6.el8.x86_64

python3-policycoreutils-2.9-16.el8.noarch python3-setools-4.3.0-2.el8.x86_64 slirp4netns-1.1.8-1.module_el8.5.0+890+6b136101.x86_64

Complete!

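Possible issue: if installing docker-ce fails on the containerd.io dependency, download and install containerd.io manually: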

[root@k8s-master01 k8s-ha-install]# wget https://download.docker.com/linux/centos/7/x86_64/edge/Packages/containerd.io-1.2.13-3.2.el7.x86_64.rpm

[root@k8s-master01 k8s-ha-install]# yum install containerd.io-1.2.13-3.2.el7.x86_64.rpm -y

Install a specific Docker version:

yum -y install docker-ce-17.09.1.ce-1.el7.centos

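Tip:

Newer kubelet versions recommend the systemd cgroup driver, so Docker's cgroup driver can be changed to systemd. If you do this, restart Docker and give kubelet the same --cgroup-driver value; this walkthrough keeps the default cgroupfs: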

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

Install the k8s components:

[root@k8s-master01 ~]# yum list kubeadm.x86_64 --showduplicates | sort -r

Repository extras is listed more than once in the configuration

Last metadata expiration check: 1:09:19 ago on Sun 02 Jan 2022 12:20:14 AM CST.

kubeadm.x86_64 1.9.9-0 kubernetes

...

kubeadm.x86_64 1.23.1-0 kubernetes

kubeadm.x86_64 1.23.0-0 kubernetes

kubeadm.x86_64 1.22.5-0 kubernetes

kubeadm.x86_64 1.22.4-0 kubernetes

kubeadm.x86_64 1.22.3-0 kubernetes

...

Install the latest kubeadm on all nodes; kubelet and kubectl are installed as dependencies:

[root@k8s-master01 ~]# yum install kubeadm -y

Repository extras is listed more than once in the configuration

Last metadata expiration check: 1:11:16 ago on Sun 02 Jan 2022 12:20:14 AM CST.

Dependencies resolved.

===================================================================================================

Package Architecture Version Repository Size

===================================================================================================

Installing:

kubeadm x86_64 1.23.1-0 kubernetes 9.0 M

Installing dependencies:

cri-tools x86_64 1.19.0-0 kubernetes 5.7 M

kubectl x86_64 1.23.1-0 kubernetes 9.5 M

kubelet x86_64 1.23.1-0 kubernetes 21 M

kubernetes-cni x86_64 0.8.7-0 kubernetes 19 M

socat x86_64 1.7.4.1-1.el8 AppStream 323 k

Transaction Summary

===================================================================================================

Install 6 Packages

...

Installed:

cri-tools-1.19.0-0.x86_64 kubeadm-1.23.1-0.x86_64 kubectl-1.23.1-0.x86_64

kubelet-1.23.1-0.x86_64 kubernetes-cni-0.8.7-0.x86_64 socat-1.7.4.1-1.el8.x86_64

Complete!

To install a specific version of the k8s components on all nodes (1.12.3 below is only an example of the syntax):

yum install -y kubeadm-1.12.3-0.x86_64 kubectl-1.12.3-0.x86_64 kubelet-1.12.3-0.x86_64
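kubeadm, kubelet and kubectl are normally kept at the same version, and the Kubernetes version skew policy does not allow a kubelet newer than the API server. A sketch that builds the pinned package names from a single version string, assuming the -0 release suffix shown in the repository listing above; kube_packages is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print pinned package names for one Kubernetes version,
# e.g. "kubeadm-1.23.1-0 kubelet-1.23.1-0 kubectl-1.23.1-0".
kube_packages() {
  local version=$1 pkgs
  # Refuse anything that is not MAJOR.MINOR.PATCH so nothing unpinned slips in.
  if ! [[ $version =~ ^[0-9]+\.[0-9]+\.[0-9]+$ ]]; then
    echo "invalid version: $version" >&2
    return 1
  fi
  pkgs=$(printf '%s-%s-0 ' kubeadm "$version" kubelet "$version" kubectl "$version")
  echo "${pkgs% }"   # drop the trailing space
}
```

Usage: yum install -y $(kube_packages 1.23.1)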

Enable Docker at boot and start it on all nodes:

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl enable --now docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

Check Docker on all nodes. The end of the docker info output must show no warnings or errors:

[root@k8s-master01 ~]# docker info

Client:

Context: default

Debug Mode: false

Plugins:

app: Docker App (Docker Inc., v0.9.1-beta3)

buildx: Docker Buildx (Docker Inc., v0.7.1-docker)

scan: Docker Scan (Docker Inc., v0.12.0)

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 20.10.12

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 1

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: io.containerd.runc.v2 io.containerd.runtime.v1.linux runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 7b11cfaabd73bb80907dd23182b9347b4245eb5d

runc version: v1.0.2-0-g52b36a2

init version: de40ad0

Security Options:

seccomp

Profile: default

Kernel Version: 4.18.0-305.3.1.el8.x86_64

Operating System: CentOS Linux 8

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 1.905GiB

Name: k8s-master01

ID: WTOL:FBKY:MYQY:5O32:2MUV:KFFW:CVXJ:FASZ:YPJB:YNOQ:MYZ3:GEC7

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false
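A sketch that turns the "no warnings or errors" rule into a check, assuming Docker prints such messages as lines beginning with WARNING: or ERROR: (on stderr, hence the 2>&1 in the usage line); docker_problems is a hypothetical helper that prints those lines and fails if there are any.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read docker info output on stdin, print any WARNING or
# ERROR lines, and succeed only if there were none.
docker_problems() {
  ! grep -E '^[[:space:]]*(WARNING|ERROR):'
}
```

Usage: docker info 2>&1 | docker_problems && echo "docker info is clean"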

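The default pause image is pulled from gcr.io, which may be unreachable from mainland China, so configure kubelet to use the pause image from the Alibaba Cloud registry instead. The --cgroup-driver value must match Docker's cgroup driver:

[root@k8s-master01 ~]# DOCKER_CGROUPS=$(docker info --format '{{.CgroupDriver}}')

[root@k8s-master01 ~]# echo $DOCKER_CGROUPS

cgroupfs

[root@k8s-master01 ~]# cat >/etc/sysconfig/kubelet <<EOF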

KUBELET_EXTRA_ARGS="--cgroup-driver=cgroupfs --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"

EOF

[root@k8s-node02 ~]# cat /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=cgroupfs --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
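A sketch that writes the same KUBELET_EXTRA_ARGS but takes the cgroup driver from docker info, so the two stay in sync if Docker is switched to systemd. It matches only the "Cgroup Driver:" line, because a looser match on "Cgroup" also catches "Cgroup Version:". write_kubelet_sysconfig and PAUSE_IMAGE are hypothetical names; the image is the one used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write KUBELET_EXTRA_ARGS using the cgroup driver reported
# in "docker info" output read on stdin.
PAUSE_IMAGE=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1

write_kubelet_sysconfig() {
  local out=${1:-/etc/sysconfig/kubelet} driver
  # Only the "Cgroup Driver:" line, not "Cgroup Version:".
  driver=$(awk -F': ' '/^[[:space:]]*Cgroup Driver:/ { print $2; exit }')
  case $driver in
    cgroupfs|systemd) ;;
    *) echo "unexpected cgroup driver: '$driver'" >&2; return 1 ;;
  esac
  printf 'KUBELET_EXTRA_ARGS="--cgroup-driver=%s --pod-infra-container-image=%s"\n' \
    "$driver" "$PAUSE_IMAGE" > "$out"
}
```

Usage: docker info 2>/dev/null | write_kubelet_sysconfig /etc/sysconfig/kubelet && systemctl daemon-reload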

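Enable and start kubelet on all nodes. Until kubeadm init or kubeadm join has run on the node, kubelet restarts every few seconds; this is expected: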

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

1.1.4 High-availability component installation

Install HAProxy and Keepalived with yum on all master nodes:

[root@k8s-master01 ~]# yum install keepalived haproxy -y

Repository extras is listed more than once in the configuration

Last metadata expiration check: 1:33:58 ago on Sun 02 Jan 2022 12:20:14 AM CST.

Dependencies resolved.

===================================================================================================

Package Architecture Version Repository Size

===================================================================================================

Installing:

haproxy x86_64 1.8.27-2.el8 AppStream 1.4 M

keepalived x86_64 2.1.5-6.el8 AppStream 537 k

Installing dependencies:

mariadb-connector-c x86_64 3.1.11-2.el8_3 AppStream 200 k

mariadb-connector-c-config noarch 3.1.11-2.el8_3 AppStream 15 k

net-snmp-agent-libs x86_64 1:5.8-22.el8 AppStream 748 k

net-snmp-libs x86_64 1:5.8-22.el8 base 827 k

Transaction Summary

===================================================================================================

Install 6 Packages

...

Installed:

haproxy-1.8.27-2.el8.x86_64 keepalived-2.1.5-6.el8.x86_64

mariadb-connector-c-3.1.11-2.el8_3.x86_64 mariadb-connector-c-config-3.1.11-2.el8_3.noarch

net-snmp-agent-libs-1:5.8-22.el8.x86_64 net-snmp-libs-1:5.8-22.el8.x86_64

Complete!

Configure HAProxy on all master nodes; the configuration is identical on every master (see the HAProxy documentation for details on each option):

[root@k8s-master01 ~]# cd /etc/haproxy/

[root@k8s-master01 haproxy]# ls

haproxy.cfg

[root@k8s-master01 haproxy]# mv haproxy.cfg haproxy.cfg.bak

[root@k8s-master01 haproxy]# vi haproxy.cfg

[root@k8s-master01 haproxy]# cat haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 10.0.0.100:6443 check

server k8s-master02 10.0.0.101:6443 check

server k8s-master03 10.0.0.102:6443 check
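The backend server lines repeat the master table from the cluster plan. A sketch that generates them from "name ip" pairs; haproxy_servers is a hypothetical helper and 6443 is the kube-apiserver port used above. After editing, haproxy -c -f /etc/haproxy/haproxy.cfg checks the file's syntax before the service is started.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print HAProxy "server" lines for the kube-apiserver
# backend from "name ip" pairs on stdin; the port defaults to 6443.
haproxy_servers() {
  local port=${1:-6443} name ip
  while read -r name ip _; do
    case $name in ''|'#'*) continue ;; esac   # skip blank and comment lines
    [ -n "$ip" ] || continue
    printf '    server %s %s:%s check\n' "$name" "$ip" "$port"
  done
}
```

Usage: printf '%s\n' 'k8s-master01 10.0.0.100' 'k8s-master02 10.0.0.101' 'k8s-master03 10.0.0.102' | haproxy_servers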

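Configure Keepalived on all master nodes. The configuration differs per node, so edit /etc/keepalived/keepalived.conf on each master separately.

Check each node's IP address (mcast_src_ip) and network interface (the interface parameter).

Configuration on Master01: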

[root@k8s-master01 haproxy]# cd /etc/keepalived/

[root@k8s-master01 keepalived]# ls

keepalived.conf

[root@k8s-master01 keepalived]# mv keepalived.conf keepalived.conf.bak

[root@k8s-master01 keepalived]# vi keepalived.conf

[root@k8s-master01 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens160

mcast_src_ip 10.0.0.100

virtual_router_id 51

priority 101

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.200

}

# track_script {

# chk_apiserver

# }

}

Configuration on Master02:

[root@k8s-master02 ~]# cd /etc/keepalived/

[root@k8s-master02 keepalived]# ls

keepalived.conf

[root@k8s-master02 keepalived]# mv keepalived.conf keepalived.conf.bak

[root@k8s-master02 keepalived]# vi keepalived.conf

[root@k8s-master02 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens160

mcast_src_ip 10.0.0.101

virtual_router_id 51

priority 100

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.200

}

# track_script {

# chk_apiserver

# }

}

Configuration on Master03:

[root@k8s-master03 ~]# cd /etc/keepalived/

[root@k8s-master03 keepalived]# ls

keepalived.conf

[root@k8s-master03 keepalived]# mv keepalived.conf keepalived.conf.bak

[root@k8s-master03 keepalived]# vi keepalived.conf

[root@k8s-master03 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens160

mcast_src_ip 10.0.0.102

virtual_router_id 51

priority 100

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.200

}

# track_script {

# chk_apiserver

# }

}
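Only state, mcast_src_ip and priority differ between the three files above (plus interface, if a node's NIC has another name). A sketch that renders the file from those values; render_keepalived and its argument order are hypothetical, and every other setting is copied from the configurations above, with track_script still commented out. Keepalived's documentation notes that nopreempt only takes effect when the initial state is BACKUP, so on Master01 (state MASTER) it is likely ignored.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render keepalived.conf for one master node.
# Arguments: STATE SRC_IP PRIORITY [INTERFACE (default ens160)] [VIP (default 10.0.0.200)]
render_keepalived() {
  local state=$1 src_ip=$2 priority=$3 iface=${4:-ens160} vip=${5:-10.0.0.200}
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    mcast_src_ip ${src_ip}
    virtual_router_id 51
    priority ${priority}
    nopreempt
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        ${vip}
    }
#    track_script {
#        chk_apiserver
#    }
}
EOF
}
```

Usage: render_keepalived MASTER 10.0.0.100 101 > /etc/keepalived/keepalived.conf on Master01, and render_keepalived BACKUP 10.0.0.101 100 (or 10.0.0.102 on Master03) on the other masters.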

Note that the health check above is commented out; enable it by uncommenting the following block once the cluster is up:

# track_script {

# chk_apiserver

# }

Create the Keepalived health-check script on all master nodes:

[root@k8s-master01 keepalived]# vi /etc/keepalived/check_apiserver.sh

[root@k8s-master01 keepalived]# cat /etc/keepalived/check_apiserver.sh

#!/bin/bash

err=0

for k in $(seq 1 3)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$((err + 1))

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

[root@k8s-master01 keepalived]# chmod +x /etc/keepalived/check_apiserver.sh

[root@k8s-master01 keepalived]#

Start haproxy and keepalived on all master nodes:

[root@k8s-master01 keepalived]# systemctl enable --now haproxy

Created symlink /etc/systemd/system/multi-user.target.wants/haproxy.service → /usr/lib/systemd/system/haproxy.service.

[root@k8s-master01 keepalived]# systemctl enable --now keepalived

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

Important: once keepalived and haproxy are installed, test that keepalived is working properly.

# The VIP is bound on master01

[root@k8s-master01 keepalived]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:64:93:f2 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.100/24 brd 10.0.0.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet 10.0.0.200/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe64:93f2/64 scope link noprefixroute

valid_lft forever preferred_lft forever

...

# From another node, ping the VIP to check that it is reachable

[root@k8s-master02 keepalived]# ping 10.0.0.200

PING 10.0.0.200 (10.0.0.200) 56(84) bytes of data.

64 bytes from 10.0.0.200: icmp_seq=1 ttl=64 time=0.698 ms

64 bytes from 10.0.0.200: icmp_seq=2 ttl=64 time=0.417 ms

^C

--- 10.0.0.200 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1027ms

rtt min/avg/max/mdev = 0.417/0.557/0.698/0.142 ms

telnet 10.0.0.200 16443

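If the VIP cannot be pinged, or telnet does not show the ] prompt (from "Escape character is '^]'"), the VIP is not usable and you must not continue. Troubleshoot keepalived first: the firewall and SELinux, the status of haproxy and keepalived, the listening ports, and so on.

On all nodes, firewalld must be disabled and inactive: systemctl status firewalld

On all nodes, SELinux must be disabled: getenforce

On the master nodes, check the status of haproxy and keepalived: systemctl status keepalived haproxy

On the master nodes, check the listening ports: netstat -lntp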
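A minimal CentOS 8 install often does not include telnet. The sketch below runs the same checks in plain bash. It relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command, both present on a stock CentOS system, and uses this article's VIP 10.0.0.200 and haproxy port 16443 as defaults. The names `port_open` and `vip_checks` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers replacing "telnet 10.0.0.200 16443" with bash's /dev/tcp.

# Returns 0 if a TCP connection to HOST:PORT succeeds within 3 seconds.
port_open() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Prints the results of the checks listed above for the current node.
vip_checks() {
  local vip="${1:-10.0.0.200}" port="${2:-16443}"
  echo "firewalld: $(systemctl is-active firewalld 2>/dev/null)"   # expect: inactive
  echo "selinux: $(getenforce 2>/dev/null)"                        # expect: Disabled
  if ping -c 2 -W 1 "$vip" >/dev/null 2>&1; then echo "ping $vip: ok"; else echo "ping $vip: FAILED"; fi
  if port_open "$vip" "$port"; then echo "tcp $vip:$port: ok"; else echo "tcp $vip:$port: FAILED"; fi
}
# Usage: vip_checks 10.0.0.200 16443
```

Any FAILED line, or a firewalld/SELinux state other than the expected one, means the VIP should be fixed before going on.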

Official documentation: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/

The kubeadm-config.yaml configuration file for the Master nodes is as follows:

Master01:

daocloud.io/daocloud

[root@k8s-master01 ~]# vi kubeadm-config.yaml

[root@k8s-master01 ~]# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: 7t2weq.bjbawausm0jaxury
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.100
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:
  - 10.0.0.200
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 10.0.0.200:16443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.23.1
networking:
  dnsDomain: cluster.local
  podSubnet: 172.168.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

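Migrate the kubeadm config file to the newer API version. kubeadm v1.23 still accepts v1beta2 but has deprecated it in favour of v1beta3. If you migrate, use new.yaml instead of kubeadm-config.yaml in the commands below: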

kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

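Pull the images in advance on all Master nodes to save initialization time. The other Master nodes need a copy of the config file in /root for this command: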

[root@k8s-master01 ~]# kubeadm config images pull --config /root/kubeadm-config.yaml

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.23.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.23.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.6

[root@k8s-master01 ~]#

Enable kubelet to start on boot on all nodes:

systemctl enable --now kubelet

Initialize Master01. Initialization generates the certificates and configuration files under /etc/kubernetes. After that, the other Master nodes only need to join Master01:

[root@k8s-master01 ~]# kubeadm init --config /root/kubeadm-config.yaml --upload-certs

[init] Using Kubernetes version: v1.23.1

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.0.100 10.0.0.200]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master01 localhost] and IPs [10.0.0.100 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master01 localhost] and IPs [10.0.0.100 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 51.922117 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.23" in namespace kube-system with the configuration for the kubelets in the cluster

NOTE: The "kubelet-config-1.23" naming of the kubelet ConfigMap is deprecated. Once the UnversionedKubeletConfigMap feature gate graduates to Beta the default name will become just "kubelet-config". Kubeadm upgrade will handle this transition transparently.

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

ecef976dec14d9ea041938ee3e1dee670fee35041fa91258424d50973784ff02

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 7t2weq.bjbawausm0jaxury

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 10.0.0.200:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:6a259f0b91a7412345ee5c182db413a61b5afc93a411e7b949fbc297201594cf \

--control-plane --certificate-key ecef976dec14d9ea041938ee3e1dee670fee35041fa91258424d50973784ff02

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.200:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:6a259f0b91a7412345ee5c182db413a61b5afc93a411e7b949fbc297201594cf

[root@k8s-master01 ~]# mkdir -p $HOME/.kube

[root@k8s-master01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane,master 4m15s v1.23.1

k8s-master02 NotReady control-plane,master 3m15s v1.23.1

k8s-master03 NotReady control-plane,master 2m23s v1.23.1

k8s-node01 NotReady <none> 103s v1.23.1

k8s-node02 NotReady <none> 70s v1.23.1

[root@k8s-master01 ~]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-65c54cc984-dzwtd 0/1 Pending 0 3m15s

coredns-65c54cc984-gl9qp 0/1 Pending 0 3m15s

etcd-k8s-master01 1/1 Running 0 3m28s

kube-apiserver-k8s-master01 1/1 Running 0 3m28s

kube-controller-manager-k8s-master01 1/1 Running 0 3m28s

kube-proxy-gwdsv 1/1 Running 0 3m15s

kube-scheduler-k8s-master01 1/1 Running 0 3m28s

....

[root@k8s-master01 ~]# tail -f /var/log/messages

Jan 2 04:45:43 k8s-master01 kubelet[12498]: I0102 04:45:43.259976 12498 cni.go:240] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Jan 2 04:45:45 k8s-master01 kubelet[12498]: E0102 04:45:45.695262 12498 kubelet.go:2347] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"

Jan 2 04:45:48 k8s-master01 kubelet[12498]: I0102 04:45:48.261617 12498 cni.go:240] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Jan 2 04:45:50 k8s-master01 kubelet[12498]: E0102 04:45:50.702817 12498 kubelet.go:2347] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"

Jan 2 04:45:53 k8s-master01 kubelet[12498]: I0102 04:45:53.262294 12498 cni.go:240] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Jan 2 04:45:55 k8s-master01 kubelet[12498]: E0102 04:45:55.711591 12498 kubelet.go:2347] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"

Jan 2 04:45:58 k8s-master01 kubelet[12498]: I0102 04:45:58.263726 12498 cni.go:240] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Jan 2 04:46:00 k8s-master01 kubelet[12498]: E0102 04:46:00.726013 12498 kubelet.go:2347] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"

Jan 2 04:46:03 k8s-master01 kubelet[12498]: I0102 04:46:03.265447 12498 cni.go:240] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Jan 2 04:46:05 k8s-master01 kubelet[12498]: E0102 04:46:05.734212 12498 kubelet.go:2347] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"

Jan 2 04:46:08 k8s-master01 kubelet[12498]: I0102 04:46:08.265555 12498 cni.go:240] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Jan 2 04:46:10 k8s-master01 kubelet[12498]: E0102 04:46:10.742864 12498 kubelet.go:2347] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"

^C

To initialize without a config file:

kubeadm init --control-plane-endpoint "LOAD_BALANCER_DNS:LOAD_BALANCER_PORT" --upload-certs

If initialization fails, reset and then initialize again:

kubeadm reset

A successful initialization prints a token that the other nodes use to join the cluster, so record the token printed on success.
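If the printed join command gets lost, you can recreate the token with `kubeadm token create --print-join-command`, covered in section 1.1.6. The `--discovery-token-ca-cert-hash` value can also be recomputed from the cluster CA on master01. The pipeline below is the one given in the kubeadm reference documentation, wrapped in a hypothetical `ca_cert_hash` function. It assumes an RSA CA key, which is kubeadm's default.

```shell
#!/usr/bin/env bash
# sha256 of the CA certificate's DER-encoded public key, i.e. the value used in
# "kubeadm join ... --discovery-token-ca-cert-hash sha256:<hash>".
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
# Usage on master01: echo "sha256:$(ca_cert_hash)"
```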

On all Master nodes, set the environment variable used to access the Kubernetes cluster:

cat <<EOF >> /root/.bashrc

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

source /root/.bashrc

Check the node status (this sample output, showing v1.12.3, was captured on an older install):

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady master 14m v1.12.3

With the kubeadm installation method, all system components run as containers in the kube-system namespace. Check their Pod status:

[root@k8s-master01 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

coredns-777d78ff6f-kstsz 0/1 Pending 0 14m

coredns-777d78ff6f-rlfr5 0/1 Pending 0 14m

etcd-k8s-master01 1/1 Running 0 14m 10.0.0.100 k8s-master01

kube-apiserver-k8s-master01 1/1 Running 0 13m 10.0.0.100 k8s-master01

kube-controller-manager-k8s-master01 1/1 Running 0 13m 10.0.0.100 k8s-master01

kube-proxy-8d4qc 1/1 Running 0 14m 10.0.0.100 k8s-master01

kube-scheduler-k8s-master01 1/1 Running 0 13m 10.0.0.100 k8s-master01

1.1.5 Installing the Calico component

Download the calico yaml file to the master01 node:

[root@k8s-master01 ~]# curl https://docs.projectcalico.org/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 212k 100 212k 0 0 26954 0 0:00:08 0:00:08 --:--:-- 47391

[root@k8s-master01 ~]# vim calico.yaml

...

# Uncomment these two lines and set the value; it must match podSubnet in kubeadm-config.yaml

- name: CALICO_IPV4POOL_CIDR
  value: "172.168.0.0/16"

...
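The pool CIDR has to agree with `podSubnet` in kubeadm-config.yaml, or Pods get addresses outside the range the cluster expects. The check below is a small sketch. It assumes both files are in the current directory and that the two Calico lines were uncommented as described. A still-commented entry is treated as missing. `calico_cidr_matches` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical check: compare kubeadm's podSubnet with Calico's CALICO_IPV4POOL_CIDR.
calico_cidr_matches() {
  local kubeadm_cfg="${1:-kubeadm-config.yaml}" calico="${2:-calico.yaml}"
  local pod_cidr pool_cidr
  # "podSubnet: 172.168.0.0/16" -> 172.168.0.0/16
  pod_cidr=$(awk '$1 == "podSubnet:" {print $2}' "$kubeadm_cfg")
  # Only an uncommented "- name: CALICO_IPV4POOL_CIDR" counts; its value is on the next line.
  pool_cidr=$(grep -A1 '^[[:space:]]*- name: CALICO_IPV4POOL_CIDR' "$calico" \
    | awk -F'"' '/^[[:space:]]*value:/ {print $2}')
  echo "podSubnet=${pod_cidr:-<none>} CALICO_IPV4POOL_CIDR=${pool_cidr:-<none>}"
  [ -n "$pod_cidr" ] && [ "$pod_cidr" = "$pool_cidr" ]
}
# Usage: calico_cidr_matches kubeadm-config.yaml calico.yaml && kubectl apply -f calico.yaml
```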

[root@k8s-master01 ~]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

[root@k8s-master01 ~]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-647d84984b-lfnj5 0/1 Pending 0 100s

calico-node-glmkj 0/1 Init:1/3 0 100s

coredns-65c54cc984-dzwtd 0/1 Pending 0 19m

coredns-65c54cc984-gl9qp 0/1 Pending 0 19m

etcd-k8s-master01 1/1 Running 0 19m

kube-apiserver-k8s-master01 1/1 Running 0 19m

kube-controller-manager-k8s-master01 1/1 Running 0 19m

kube-proxy-gwdsv 1/1 Running 0 19m

kube-scheduler-k8s-master01 1/1 Running 0 19m

[root@k8s-master01 ~]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-647d84984b-lfnj5 0/1 ContainerCreating 0 2m43s

calico-node-glmkj 0/1 Init:2/3 0 2m43s

coredns-65c54cc984-dzwtd 0/1 ContainerCreating 0 20m

coredns-65c54cc984-gl9qp 0/1 ContainerCreating 0 20m

etcd-k8s-master01 1/1 Running 0 20m

kube-apiserver-k8s-master01 1/1 Running 0 20m

kube-controller-manager-k8s-master01 1/1 Running 0 20m

kube-proxy-gwdsv 1/1 Running 0 20m

kube-scheduler-k8s-master01 1/1 Running 0 20m

[root@k8s-master01 ~]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-647d84984b-lfnj5 0/1 ContainerCreating 0 7m41s

calico-node-glmkj 1/1 Running 0 7m41s

coredns-65c54cc984-dzwtd 1/1 Running 0 25m

coredns-65c54cc984-gl9qp 1/1 Running 0 25m

etcd-k8s-master01 1/1 Running 0 25m

kube-apiserver-k8s-master01 1/1 Running 0 25m

kube-controller-manager-k8s-master01 1/1 Running 0 25m

kube-proxy-gwdsv 1/1 Running 0 25m

kube-scheduler-k8s-master01 1/1 Running 0 25m

[root@k8s-master01 ~]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-647d84984b-lfnj5 1/1 Running 0 10m

calico-node-glmkj 1/1 Running 0 10m

coredns-65c54cc984-dzwtd 1/1 Running 0 28m

coredns-65c54cc984-gl9qp 1/1 Running 0 28m

etcd-k8s-master01 1/1 Running 0 28m

kube-apiserver-k8s-master01 1/1 Running 0 28m

kube-controller-manager-k8s-master01 1/1 Running 0 28m

kube-proxy-gwdsv 1/1 Running 0 28m

kube-scheduler-k8s-master01 1/1 Running 0 28m

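Note: if downloading Calico is slow for users in mainland China, configure registry mirrors on all nodes. If this file already contains other settings, remember to keep them: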

vim /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": [

"https://registry.docker-cn.com",

"http://hub-mirror.c.163.com",

"https://docker.mirrors.ustc.edu.cn"

]

}

systemctl daemon-reload

systemctl restart docker
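A malformed daemon.json stops dockerd from starting. A common cause is a missing comma after merging the mirror list into existing settings. The sketch below validates the file before restarting and assumes python3 is installed; on CentOS 8 it may first need `dnf install python3`. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: validate /etc/docker/daemon.json before restarting docker.
daemon_json_ok() {
  local f="${1:-/etc/docker/daemon.json}"
  python3 -m json.tool "$f" >/dev/null   # non-zero exit (and a message on stderr) if invalid
}
restart_docker_safely() {
  if daemon_json_ok "$@"; then
    systemctl daemon-reload && systemctl restart docker
  else
    echo "invalid JSON in ${1:-/etc/docker/daemon.json}, docker not restarted" >&2
    return 1
  fi
}
# Usage: restart_docker_safely
```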

Calico: https://www.projectcalico.org/

https://docs.projectcalico.org/getting-started/kubernetes/self-managed-onprem/onpremises

1.1.6 High-availability Master

[root@k8s-master01 ~]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

attachdetach-controller-token-lzrnd kubernetes.io/service-account-token 3 28m

bootstrap-signer-token-hzgrs kubernetes.io/service-account-token 3 28m

bootstrap-token-7t2weq bootstrap.kubernetes.io/token 6 28m

bootstrap-token-9yqdd5 bootstrap.kubernetes.io/token 4 28m

calico-kube-controllers-token-xxxmn kubernetes.io/service-account-token 3 11m

calico-node-token-q264q kubernetes.io/service-account-token 3 11m

certificate-controller-token-xjcls kubernetes.io/service-account-token 3 28m

clusterrole-aggregation-controller-token-vdhnt kubernetes.io/service-account-token 3 28m

coredns-token-ndkm2 kubernetes.io/service-account-token 3 28m

cronjob-controller-token-9s4hg kubernetes.io/service-account-token 3 28m

daemon-set-controller-token-fv8rs kubernetes.io/service-account-token 3 28m

default-token-5wnhm kubernetes.io/service-account-token 3 28m

deployment-controller-token-6bl57 kubernetes.io/service-account-token 3 28m

disruption-controller-token-xvtv7 kubernetes.io/service-account-token 3 28m

endpoint-controller-token-gc8qs kubernetes.io/service-account-token 3 28m

endpointslice-controller-token-tkfm9 kubernetes.io/service-account-token 3 28m

endpointslicemirroring-controller-token-k66nr kubernetes.io/service-account-token 3 28m

ephemeral-volume-controller-token-4tzxh kubernetes.io/service-account-token 3 28m

expand-controller-token-hztsb kubernetes.io/service-account-token 3 28m

generic-garbage-collector-token-9zmrv kubernetes.io/service-account-token 3 28m

horizontal-pod-autoscaler-token-h6ct5 kubernetes.io/service-account-token 3 28m

job-controller-token-bsh9b kubernetes.io/service-account-token 3 28m

kube-proxy-token-j45xs kubernetes.io/service-account-token 3 28m

kubeadm-certs Opaque 8 28m

namespace-controller-token-tmt8l kubernetes.io/service-account-token 3 28m

node-controller-token-j444g kubernetes.io/service-account-token 3 28m

persistent-volume-binder-token-2v79v kubernetes.io/service-account-token 3 28m

pod-garbage-collector-token-ksh2r kubernetes.io/service-account-token 3 28m

pv-protection-controller-token-2np6q kubernetes.io/service-account-token 3 28m

pvc-protection-controller-token-4jdwq kubernetes.io/service-account-token 3 28m

replicaset-controller-token-b2zjz kubernetes.io/service-account-token 3 28m

replication-controller-token-c8jwk kubernetes.io/service-account-token 3 28m

resourcequota-controller-token-2rm87 kubernetes.io/service-account-token 3 28m

root-ca-cert-publisher-token-lc4xc kubernetes.io/service-account-token 3 28m

service-account-controller-token-wflvt kubernetes.io/service-account-token 3 28m

service-controller-token-9555f kubernetes.io/service-account-token 3 28m

statefulset-controller-token-nhklv kubernetes.io/service-account-token 3 28m

token-cleaner-token-x42xx kubernetes.io/service-account-token 3 28m

ttl-after-finished-controller-token-nppn2 kubernetes.io/service-account-token 3 28m

ttl-controller-token-79wqf kubernetes.io/service-account-token 3 28m

[root@k8s-master01 ~]# kubectl get secret -n kube-system bootstrap-token-7t2weq -o yaml

apiVersion: v1

data:

auth-extra-groups: c3lzdGVtOmJvb3RzdHJhcHBlcnM6a3ViZWFkbTpkZWZhdWx0LW5vZGUtdG9rZW4=

expiration: MjAyMi0wMS0wMlQyMDo0MjowNlo=

token-id: N3Qyd2Vx

token-secret: YmpiYXdhdXNtMGpheHVyeQ==

usage-bootstrap-authentication: dHJ1ZQ==

usage-bootstrap-signing: dHJ1ZQ==

kind: Secret

metadata:

creationTimestamp: "2022-01-01T20:42:06Z"

name: bootstrap-token-7t2weq

namespace: kube-system

resourceVersion: "223"

uid: 55d2933f-0ee2-4a54-9f02-0c105fe97be1

type: bootstrap.kubernetes.io/token

[root@k8s-master01 ~]# echo "MjAyMi0wMS0wMlQyMDo0MjowNlo=" |base64 --decode

2022-01-02T20:42:06Z[root@k8s-master01 ~]#
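The Secret's `token-id` and `token-secret` fields, joined by a dot, form the join token. `expiration` holds its expiry time. The sketch below decodes all three from the YAML above. The helper names are hypothetical. `kubeadm token list` also shows tokens with their TTL and expiry directly.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: decode a bootstrap-token Secret (kubectl ... -o yaml) read from stdin.
b64_field() {   # b64_field <yaml-text> <key>: print the base64-decoded value of "key:"
  printf '%s\n' "$1" | awk -v k="$2:" '$1 == k {print $2}' | base64 --decode
}
bootstrap_token_info() {
  local yaml
  yaml=$(cat)
  printf 'token=%s.%s\nexpiration=%s\n' \
    "$(b64_field "$yaml" token-id)" \
    "$(b64_field "$yaml" token-secret)" \
    "$(b64_field "$yaml" expiration)"
}
# Usage: kubectl get secret -n kube-system bootstrap-token-7t2weq -o yaml | bootstrap_token_info
```

For the Secret shown above, this should print the token `7t2weq.bjbawausm0jaxury` from kubeadm-config.yaml and the expiry `2022-01-02T20:42:06Z`.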

[root@k8s-master01 ~]# kubectl delete secret -n kube-system bootstrap-token-7t2weq

secret "bootstrap-token-7t2weq" deleted

[root@k8s-master01 ~]# kubectl delete secret -n kube-system bootstrap-token-9yqdd5

secret "bootstrap-token-9yqdd5" deleted

[root@k8s-master01 ~]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

attachdetach-controller-token-lzrnd kubernetes.io/service-account-token 3 32m

bootstrap-signer-token-hzgrs kubernetes.io/service-account-token 3 32m

calico-kube-controllers-token-xxxmn kubernetes.io/service-account-token 3 14m

calico-node-token-q264q kubernetes.io/service-account-token 3 14m

certificate-controller-token-xjcls kubernetes.io/service-account-token 3 32m

clusterrole-aggregation-controller-token-vdhnt kubernetes.io/service-account-token 3 32m

coredns-token-ndkm2 kubernetes.io/service-account-token 3 32m

cronjob-controller-token-9s4hg kubernetes.io/service-account-token 3 32m

daemon-set-controller-token-fv8rs kubernetes.io/service-account-token 3 32m

default-token-5wnhm kubernetes.io/service-account-token 3 32m

deployment-controller-token-6bl57 kubernetes.io/service-account-token 3 32m

disruption-controller-token-xvtv7 kubernetes.io/service-account-token 3 32m

endpoint-controller-token-gc8qs kubernetes.io/service-account-token 3 32m

endpointslice-controller-token-tkfm9 kubernetes.io/service-account-token 3 32m

endpointslicemirroring-controller-token-k66nr kubernetes.io/service-account-token 3 32m

ephemeral-volume-controller-token-4tzxh kubernetes.io/service-account-token 3 32m

expand-controller-token-hztsb kubernetes.io/service-account-token 3 32m

generic-garbage-collector-token-9zmrv kubernetes.io/service-account-token 3 32m

horizontal-pod-autoscaler-token-h6ct5 kubernetes.io/service-account-token 3 32m

job-controller-token-bsh9b kubernetes.io/service-account-token 3 32m

kube-proxy-token-j45xs kubernetes.io/service-account-token 3 32m

namespace-controller-token-tmt8l kubernetes.io/service-account-token 3 32m

node-controller-token-j444g kubernetes.io/service-account-token 3 32m

persistent-volume-binder-token-2v79v kubernetes.io/service-account-token 3 32m

pod-garbage-collector-token-ksh2r kubernetes.io/service-account-token 3 32m

pv-protection-controller-token-2np6q kubernetes.io/service-account-token 3 32m

pvc-protection-controller-token-4jdwq kubernetes.io/service-account-token 3 32m

replicaset-controller-token-b2zjz kubernetes.io/service-account-token 3 32m

replication-controller-token-c8jwk kubernetes.io/service-account-token 3 32m

resourcequota-controller-token-2rm87 kubernetes.io/service-account-token 3 32m

root-ca-cert-publisher-token-lc4xc kubernetes.io/service-account-token 3 32m

service-account-controller-token-wflvt kubernetes.io/service-account-token 3 32m

service-controller-token-9555f kubernetes.io/service-account-token 3 32m

statefulset-controller-token-nhklv kubernetes.io/service-account-token 3 32m

token-cleaner-token-x42xx kubernetes.io/service-account-token 3 32m

ttl-after-finished-controller-token-nppn2 kubernetes.io/service-account-token 3 32m

ttl-controller-token-79wqf kubernetes.io/service-account-token 3 32m

Once a token has expired (simulated above by deleting the bootstrap-token secrets), generate a new one:

[root@k8s-master01 ~]# kubeadm token create --print-join-command

kubeadm join 10.0.0.200:16443 --token bq1cbw.dv5jsa9z52bwgny5 --discovery-token-ca-cert-hash sha256:6a259f0b91a7412345ee5c182db413a61b5afc93a411e7b949fbc297201594cf

# Joining as a Master also requires a --certificate-key; generate one:

[root@k8s-master01 ~]# kubeadm init phase upload-certs --upload-certs

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

7a6cebd73a46feaf355660104e261534786f5984ebfacdd720bbadf385c1bfb4
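The control-plane join command is the worker join command plus `--control-plane --certificate-key <key>`. The sketch below assembles it from the two outputs above. It assumes the certificate key is the only 64-hex-character line in the upload-certs output. `cp_join_command` is a hypothetical name. Recent kubeadm releases should also print the full command directly with `kubeadm token create --print-join-command --certificate-key <key>`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a control-plane join command from the worker join
# command and the output of "kubeadm init phase upload-certs --upload-certs".
cp_join_command() {
  local worker_join="$1" upload_out="$2" key
  # The certificate key is printed on its own line as 64 hex characters.
  key=$(printf '%s\n' "$upload_out" | grep -Ex '[0-9a-f]{64}' | tail -n 1)
  [ -n "$key" ] || { echo "no certificate key found" >&2; return 1; }
  printf '%s --control-plane --certificate-key %s\n' "$worker_join" "$key"
}
# Usage on master01:
#   cp_join_command "$(kubeadm token create --print-join-command)" \
#                   "$(kubeadm init phase upload-certs --upload-certs)"
```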

# Join the other masters to the cluster, adding --control-plane --certificate-key

[root@k8s-master02 ~]# kubeadm join 10.0.0.200:16443 --token bq1cbw.dv5jsa9z52bwgny5 --discovery-token-ca-cert-hash sha256:6a259f0b91a7412345ee5c182db413a61b5afc93a411e7b949fbc297201594cf \

--control-plane --certificate-key 7a6cebd73a46feaf355660104e261534786f5984ebfacdd720bbadf385c1bfb4

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "apiserver" certificate and key

...

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

[root@k8s-master02 ~]# mkdir -p $HOME/.kube

[root@k8s-master02 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master02 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master02 ~]#

1.1.7 Node configuration

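Worker nodes mainly run the company's business applications. In production, Master nodes should not run any Pods other than the system components. In a test environment, Master nodes can be allowed to run Pods to save resources.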
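For a test environment, you can remove the taint kubeadm adds to the masters, `node-role.kubernetes.io/master:NoSchedule` (see the `[mark-control-plane]` line in the init output), with `kubectl taint` and a trailing `-`. The sketch below does this for a list of nodes. The function name and the `DRY_RUN` variable are hypothetical; `DRY_RUN` only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove the v1.23 kubeadm master taint so ordinary Pods
# can be scheduled on the given nodes. Set DRY_RUN=1 to only print the commands.
allow_pods_on_masters() {
  local node runner=""
  [ -n "${DRY_RUN:-}" ] && runner="echo"
  for node in "$@"; do
    $runner kubectl taint node "$node" node-role.kubernetes.io/master:NoSchedule-
  done
}
# Usage (test clusters only): allow_pods_on_masters k8s-master01 k8s-master02 k8s-master03
```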

[root@k8s-master01 ~]# kubeadm token create --print-join-command

kubeadm join 10.0.0.200:16443 --token bq1cbw.dv5jsa9z52bwgny5 --discovery-token-ca-cert-hash sha256:6a259f0b91a7412345ee5c182db413a61b5afc93a411e7b949fbc297201594cf

[root@k8s-node02 ~]# kubeadm join 10.0.0.200:16443 --token bq1cbw.dv5jsa9z52bwgny5 --discovery-token-ca-cert-hash sha256:6a259f0b91a7412345ee5c182db413a61b5afc93a411e7b949fbc297201594cf

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Checking from the master node, all nodes have joined the cluster:

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane,master 12h v1.23.1

k8s-master02 Ready control-plane,master 36m v1.23.1

k8s-master03 Ready control-plane,master 28m v1.23.1

k8s-node01 Ready <none> 10m v1.23.1

k8s-node02 Ready <none> 19m v1.23.1
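Nodes stay NotReady until the CNI plugin (Calico here) is running on them, as in the first `kubectl get nodes` output. `kubectl wait --for=condition=Ready node --all --timeout=300s` should block until every node is Ready. The sketch below is a hypothetical filter that lists the stragglers from the table output.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read "kubectl get nodes" output on stdin and print the names
# of nodes whose STATUS is not exactly "Ready" (e.g. NotReady, Ready,SchedulingDisabled).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" {print $1}'
}
# Usage: kubectl get nodes | not_ready_nodes
```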

1.1.8 Metrics deployment

In newer Kubernetes versions, system resource metrics are collected by Metrics-server, which replaces the older Heapster. It reports the CPU and memory usage of nodes and Pods.

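Change the certificate in the metrics deployment file: in metrics-server-3.6.1/metrics-server-deployment.yaml, change front-proxy-ca.pem to front-proxy-ca.crt. Then change the apiVersion in metrics-apiservice.yaml to apiregistration.k8s.io/v1, because v1beta1 is no longer served (kubectl api-versions lists only apiregistration.k8s.io/v1):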
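The two edits can also be scripted instead of done in vi. The sketch below assumes it is run from the metrics-server-3.6.1 directory or given that directory as its argument. It only touches the `apiVersion:` line, so `name: v1beta1.metrics.k8s.io` and `version: v1beta1` stay as they are. `patch_metrics_manifests` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper applying the two edits above to the metrics-server-3.6.1 manifests.
patch_metrics_manifests() {
  local dir="${1:-.}"
  # kubeadm names the front-proxy CA front-proxy-ca.crt, not .pem
  sed -i 's/front-proxy-ca\.pem/front-proxy-ca.crt/g' "$dir/metrics-server-deployment.yaml" || return 1
  # apiregistration.k8s.io/v1beta1 is not served by v1.22+; switch the APIService to v1
  sed -i 's#^apiVersion: apiregistration\.k8s\.io/v1beta1$#apiVersion: apiregistration.k8s.io/v1#' \
    "$dir/metrics-apiservice.yaml"
}
# Usage: patch_metrics_manifests ~/k8s-ha-install/metrics-server-3.6.1
```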

[root@k8s-master01 ~]# cd k8s-ha-install/

[root@k8s-master01 k8s-ha-install]# ls

k8s-deployment-strategies.md metrics-server-0.3.7 metrics-server-3.6.1 README.md

[root@k8s-master01 k8s-ha-install]# cd metrics-server-3.6.1/

[root@k8s-master01 metrics-server-3.6.1]# ls

aggregated-metrics-reader.yaml auth-delegator.yaml auth-reader.yaml metrics-apiservice.yaml metrics-server-deployment.yaml metrics-server-service.yaml resource-reader.yaml

[root@k8s-master01 metrics-server-3.6.1]# vi metrics-server-deployment.yaml

[root@k8s-master01 metrics-server-3.6.1]# cat metrics-server-deployment.yaml

---

apiVersion: v1

...

- --kubelet-insecure-tls

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt # change to front-proxy-ca.crt for kubeadm

- --requestheader-username-headers=X-Remote-User

...

[root@k8s-master01 metrics-server-3.6.1]# kubectl api-versions

admissionregistration.k8s.io/v1

apiextensions.k8s.io/v1

apiregistration.k8s.io/v1

apps/v1

authentication.k8s.io/v1

authorization.k8s.io/v1

autoscaling/v1

autoscaling/v2

autoscaling/v2beta1

autoscaling/v2beta2

batch/v1

batch/v1beta1

certificates.k8s.io/v1

coordination.k8s.io/v1

crd.projectcalico.org/v1

discovery.k8s.io/v1

discovery.k8s.io/v1beta1

events.k8s.io/v1

events.k8s.io/v1beta1

flowcontrol.apiserver.k8s.io/v1beta1

flowcontrol.apiserver.k8s.io/v1beta2

networking.k8s.io/v1

node.k8s.io/v1

node.k8s.io/v1beta1

policy/v1

policy/v1beta1

rbac.authorization.k8s.io/v1

scheduling.k8s.io/v1

storage.k8s.io/v1

storage.k8s.io/v1beta1

v1

[root@k8s-master01 metrics-server-3.6.1]# vi metrics-apiservice.yaml

[root@k8s-master01 metrics-server-3.6.1]# cat metrics-apiservice.yaml

---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
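The two vi edits above can also be applied non-interactively. This is a minimal sketch, assuming GNU sed (sed -i with no backup suffix, as shipped with CentOS 8). It also assumes that metrics-apiservice.yaml originally declared apiregistration.k8s.io/v1beta1, which the original transcript does not show. That API version is no longer served from Kubernetes 1.22 onward, and only apiregistration.k8s.io/v1 appears in the api-versions list above. The function name patch_metrics_manifests is hypothetical.

```shell
#!/usr/bin/env bash
# Apply the two manual edits with sed instead of vi.
# ASSUMPTION: the APIService still declares apiregistration.k8s.io/v1beta1;
# only the apiVersion line is touched, so "name: v1beta1.metrics.k8s.io"
# and "version: v1beta1" (the metrics API itself) stay unchanged.
patch_metrics_manifests() {
  local dir="${1:-.}"
  # Point the request-header CA at the file kubeadm actually generates.
  sed -i 's#front-proxy-ca\.pem#front-proxy-ca.crt#g' "$dir/metrics-server-deployment.yaml"
  # Switch the APIService object to the GA apiregistration API.
  sed -i 's#^apiVersion: apiregistration\.k8s\.io/v1beta1$#apiVersion: apiregistration.k8s.io/v1#' "$dir/metrics-apiservice.yaml"
}

# Example:
#   cd ~/k8s-ha-install/metrics-server-3.6.1 && patch_metrics_manifests .
#   kubectl api-versions | grep -x 'apiregistration.k8s.io/v1'
```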

Install metrics-server:

[root@k8s-master01 metrics-server-3.6.1]# kubectl apply -f .

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

Warning: spec.template.spec.nodeSelector[beta.kubernetes.io/os]: deprecated since v1.14; use "kubernetes.io/os" instead

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

[root@k8s-master01 metrics-server-3.6.1]#

Copy front-proxy-ca.crt from the Master01 node to every worker node:

scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node01:/etc/kubernetes/pki/front-proxy-ca.crt

scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node02:/etc/kubernetes/pki/front-proxy-ca.crt

(Repeat the same command for any other worker nodes.)
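With more workers, a loop avoids typing one scp per node. This sketch assumes passwordless SSH from k8s-master01 to the workers, as the plain scp commands above already require. The default node list is taken from the planning table. The function name copy_front_proxy_ca and the DRY_RUN switch are hypothetical conveniences.

```shell
#!/usr/bin/env bash
# Copy the front-proxy CA from k8s-master01 to each worker node.
# Node names default to the two workers from the planning table;
# set DRY_RUN=1 to print the scp commands instead of running them.
copy_front_proxy_ca() {
  local src=/etc/kubernetes/pki/front-proxy-ca.crt
  local nodes=("$@")
  [ "${#nodes[@]}" -eq 0 ] && nodes=(k8s-node01 k8s-node02)
  local node
  for node in "${nodes[@]}"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp "$src" "$node:$src"
    else
      # Stop at the first failed copy so a missing node is noticed.
      scp "$src" "$node:$src" || return 1
    fi
  done
}

# Example:
#   copy_front_proxy_ca                 # k8s-node01 and k8s-node02
#   copy_front_proxy_ca k8s-node03      # an extra worker (hypothetical)
```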

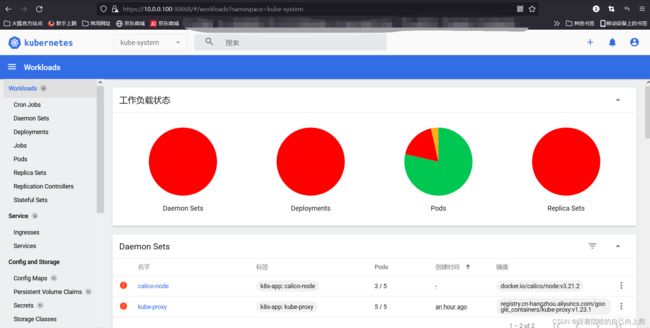

1.1.9 Dashboard deployment

Official GitHub: https://github.com/kubernetes/dashboard

The Dashboard displays the various resources in the cluster. You can also use it to view Pod logs in real time and to run commands inside containers.

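The latest Dashboard release is listed on the official GitHub page. To install directly from a published manifest (v2.0.3 in this example):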

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml
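The URL above and the recommended-2.0.4.yaml file used next differ only in the release tag. This small sketch builds the manifest URL for any tag and saves the file under the same naming pattern, which is handy when the nodes cannot reach GitHub and the file must be uploaded. It assumes the v2.x repository layout (aio/deploy/recommended.yaml), and that path may not exist for later major releases. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Raw GitHub URL of the Dashboard "recommended" manifest for a release tag
# (ASSUMPTION: v2.x layout, e.g. tag v2.0.4).
dashboard_manifest_url() {
  echo "https://raw.githubusercontent.com/kubernetes/dashboard/$1/aio/deploy/recommended.yaml"
}

# Local file name: strip a leading "v" from the tag, e.g. recommended-2.0.4.yaml.
dashboard_manifest_file() {
  echo "recommended-${1#v}.yaml"
}

# Download on a machine with Internet access, then upload to k8s-master01.
fetch_dashboard_manifest() {
  local tag="$1"
  curl -fsSL -o "$(dashboard_manifest_file "$tag")" "$(dashboard_manifest_url "$tag")"
}

# Example:
#   fetch_dashboard_manifest v2.0.4
```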

Alternatively, upload a downloaded manifest (here recommended-2.0.4.yaml) and create it with kubectl create:

[root@k8s-master01 ~]# kubectl create -f recommended-2.0.4.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

Warning: spec.template.metadata.annotations[seccomp.security.alpha.kubernetes.io/pod]: deprecated since v1.19, non-functional in v1.25+; use the "seccompProfile" field instead

deployment.apps/dashboard-metrics-scraper created

[root@k8s-master01 ~]# kubectl get po -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-79459f84f-s4qgd 1/1 Running 0 118s

kubernetes-dashboard-5fc4c598cf-zqft2 1/1 Running 0 118s

Cluster verification

[root@k8s-master01 ~]# kubectl top po -n kube-system

[root@k8s-master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 58m

[root@k8s-master01 ~]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 58m

metrics-server ClusterIP 10.100.137.143 <none> 443/TCP 2m40s

[root@k8s-master01 ~]# telnet 10.96.0.1 443

Trying 10.96.0.1...

Connected to 10.96.0.1.

Escape character is '^]'.

^CConnection closed by foreign host.

[root@k8s-master01 ~]# telnet 10.96.0.10 53

Trying 10.96.0.10...

Connected to 10.96.0.10.

Escape character is '^]'.

^CConnection closed by foreign host.
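The telnet checks above need the telnet client, which a minimal CentOS install may not include. The sketch below does the same TCP reachability test using only bash's /dev/tcp redirection and coreutils timeout. The function name check_tcp and the 3-second default are assumptions.

```shell
#!/usr/bin/env bash
# TCP reachability check without telnet: bash opens /dev/tcp/HOST/PORT
# as a socket. Returns 0 if the connection succeeds within the timeout
# (default 3 s), non-zero on refusal, timeout (124) or other errors.
check_tcp() {
  local host="$1" port="$2" secs="${3:-3}"
  timeout "$secs" bash -c ": </dev/tcp/${host}/${port}" 2>/dev/null
}

# Example: the kubernetes Service VIP and the kube-dns Service from above.
#   check_tcp 10.96.0.1 443 && echo "apiserver service reachable"
#   check_tcp 10.96.0.10 53 && echo "kube-dns reachable over TCP"
```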

[root@k8s-master01 ~]# kubectl get po --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-647d84984b-bpghh 1/1 Running 0 28m 172.168.85.194 k8s-node01 <none> <none>

kube-system calico-kube-controllers-647d84984b-rqhx4 0/1 Terminating 0 51m <none> k8s-node02 <none> <none>

kube-system calico-node-74s8h 1/1 Running 0 51m 10.0.0.100 k8s-master01 <none> <none>

kube-system calico-node-8bm2m 0/1 Init:0/3 0 51m 10.0.0.102 k8s-master03 <none> <none>

kube-system calico-node-lxkph 1/1 Running 0 51m 10.0.0.103 k8s-node01 <none> <none>

kube-system calico-node-rzbst 0/1 PodInitializing 0 51m 10.0.0.104 k8s-node02 <none> <none>

kube-system calico-node-wgzfm 1/1 Running 0 51m 10.0.0.101 k8s-master02 <none> <none>

kube-system coredns-65c54cc984-9jmf4 1/1 Running 0 60m 172.168.85.193 k8s-node01 <none> <none>

kube-system coredns-65c54cc984-w64kz 1/1 Running 0 28m 172.168.122.129 k8s-master02 <none> <none>

kube-system coredns-65c54cc984-xcc9t 0/1 Terminating 0 60m <none> k8s-node02 <none> <none>

kube-system etcd-k8s-master01 1/1 Running 3 (35m ago) 60m 10.0.0.100 k8s-master01 <none> <none>

kube-system etcd-k8s-master02 1/1 Running 2 (37m ago) 59m 10.0.0.101 k8s-master02 <none> <none>

kube-system etcd-k8s-master03 1/1 Running 0 58m 10.0.0.102 k8s-master03 <none> <none>

kube-system kube-apiserver-k8s-master01 1/1 Running 3 (35m ago) 60m 10.0.0.100 k8s-master01 <none> <none>

kube-system kube-apiserver-k8s-master02 1/1 Running 2 (37m ago) 59m 10.0.0.101 k8s-master02 <none> <none>

kube-system kube-apiserver-k8s-master03 1/1 Running 1 (<invalid> ago) 58m 10.0.0.102 k8s-master03 <none> <none>

kube-system kube-controller-manager-k8s-master01 1/1 Running 4 (35m ago) 60m 10.0.0.100 k8s-master01 <none> <none>

kube-system kube-controller-manager-k8s-master02 1/1 Running 3 (37m ago) 59m 10.0.0.101 k8s-master02 <none> <none>

kube-system kube-controller-manager-k8s-master03 1/1 Running 0 57m 10.0.0.102 k8s-master03 <none> <none>

kube-system kube-proxy-28lj7 1/1 Running 0 57m 10.0.0.104 k8s-node02 <none> <none>

kube-system kube-proxy-2tttp 1/1 Running 0 58m 10.0.0.102 k8s-master03 <none> <none>

kube-system kube-proxy-49cs9 1/1 Running 2 (35m ago) 60m 10.0.0.100 k8s-master01 <none> <none>

kube-system kube-proxy-j6rrq 1/1 Running 1 (37m ago) 59m 10.0.0.101 k8s-master02 <none> <none>

kube-system kube-proxy-z75kb 1/1 Running 1 (37m ago) 57m 10.0.0.103 k8s-node01 <none> <none>

kube-system kube-scheduler-k8s-master01 1/1 Running 4 (35m ago) 60m 10.0.0.100 k8s-master01 <none> <none>

kube-system kube-scheduler-k8s-master02 1/1 Running 3 (37m ago) 59m 10.0.0.101 k8s-master02 <none> <none>

kube-system kube-scheduler-k8s-master03 1/1 Running 0 57m 10.0.0.102 k8s-master03 <none> <none>

kube-system metrics-server-6bcff985dc-tsjsj 0/1 CrashLoopBackOff 5 (61s ago) 4m 172.168.85.200 k8s-node01 <none> <none>

kubernetes-dashboard dashboard-metrics-scraper-79459f84f-s4qgd 1/1 Running 0 18m 172.168.85.197 k8s-node01 <none> <none>

kubernetes-dashboard kubernetes-dashboard-5fc4c598cf-zqft2 1/1 Running 0 18m 172.168.85.196 k8s-node01 <none> <none>
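Not every pod in this snapshot is healthy. metrics-server is in CrashLoopBackOff, calico-node on k8s-master03 and k8s-node02 is still initializing, and two pods on k8s-node02 are Terminating. The sketch below filters such pods out of a long listing. It prints a pod when its READY count is below the desired count or its STATUS is not Running, and it skips Completed pods. The function name list_unhealthy_pods is hypothetical.

```shell
#!/usr/bin/env bash
# Print pods that are not fully ready. Reads `kubectl get po -A --no-headers`
# on stdin (columns: NAMESPACE NAME READY STATUS RESTARTS AGE ...).
# Only the first four columns are used, so "3 (35m ago)" in RESTARTS is harmless.
list_unhealthy_pods() {
  awk '{
    split($3, r, "/")             # READY "0/1" -> r[1]="0", r[2]="1"
    if ($4 != "Completed" && (r[1] != r[2] || $4 != "Running"))
      print $1, $2, $3, $4
  }'
}

# Example:
#   kubectl get po -A --no-headers | list_unhealthy_pods
# Then inspect a failing pod, e.g.:
#   kubectl -n kube-system logs deploy/metrics-server
#   kubectl -n kube-system describe po <pod-name>
```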

# Change the dashboard Service type from ClusterIP to NodePort (skip this step if it is already NodePort):

[root@k8s-master01 ~]# kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

...

sessionAffinity: None