Pod Controllers Explained


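I. Introduction to Pod Controllers

1. The Pod is the smallest unit of management in Kubernetes. Depending on how they are created, pods fall into two categories:

- Standalone pods: pods created directly by Kubernetes. Once deleted, they are gone and are not recreated.

- Controller-managed pods: pods that Kubernetes creates through a controller. If such a pod is deleted, it is automatically recreated.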

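2. Pod Controllers

- A pod controller is an intermediate layer for managing pods. With a pod controller, you only declare how many pods of what kind you want. The controller creates pods that meet those conditions and makes sure each one stays in the target state the user expects. If a pod fails while running, the controller reschedules pods according to the configured policy: it deletes surplus pods and creates missing ones. Rolling updates build on the same idea. If the rule asks for 3 pods, a 4th pod with the new version is started, then one old-version pod is killed, and so on until every pod runs the new version.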
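The "delete surplus, create missing" behavior described above can be sketched as a single reconcile step. This is a minimal illustration, not the real controller code. The pod names and the `names` generator are hypothetical, and a real controller works through the API server rather than a local list.

```python
import itertools
from typing import Iterator, List


def reconcile(desired: int, pods: List[str], name_gen: Iterator[str]) -> List[str]:
    """One reconcile pass: create missing pods or delete surplus ones.

    `pods` is the list of pod names the controller currently owns.
    """
    diff = desired - len(pods)
    if diff > 0:
        # Too few pods: create new ones (here just fresh names from the generator).
        return pods + [next(name_gen) for _ in range(diff)]
    # Too many pods (or exactly the right number): keep only `desired` of them.
    return pods[:desired]


# Hypothetical name generator mimicking "<controller-name>-<suffix>".
names = (f"pc-replicaset-{i}" for i in itertools.count())
pods = reconcile(3, [], names)    # initial creation: 3 pods
pods.pop(0)                       # one pod fails or is deleted
pods = reconcile(3, pods, names)  # the controller creates a replacement
print(pods)  # ['pc-replicaset-1', 'pc-replicaset-2', 'pc-replicaset-3']
```

A real ReplicaSet chooses which pods to delete based on criteria such as pod state and age, not list order. The sketch only shows the counting logic.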

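3. Types

- Kubernetes has many types of pod controllers, each suited to its own scenarios. The common ones are:

- ReplicationController: a fairly primitive pod controller that is deprecated and replaced by ReplicaSet

- ReplicaSet: keeps the number of replicas at the desired value, and supports scaling the pod count and upgrading the image version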

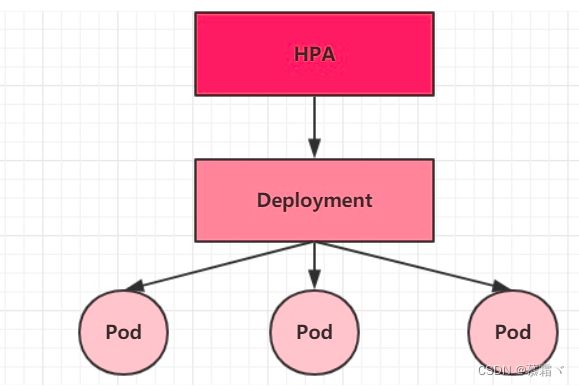

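- Deployment: controls pods by controlling ReplicaSets, and supports rolling upgrades and version rollback

- Horizontal Pod Autoscaler: automatically adjusts the number of pods horizontally based on cluster load, smoothing out peaks and troughs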

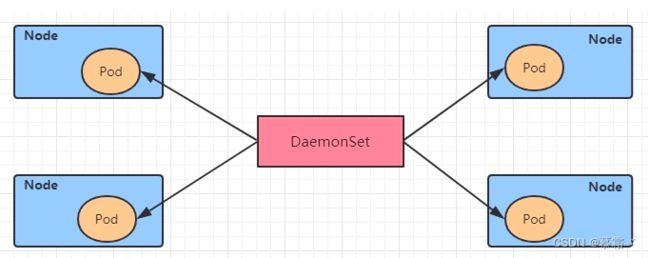

- DaemonSet: runs exactly one replica on every node in the cluster (or on selected nodes); typically used for daemon-style tasks

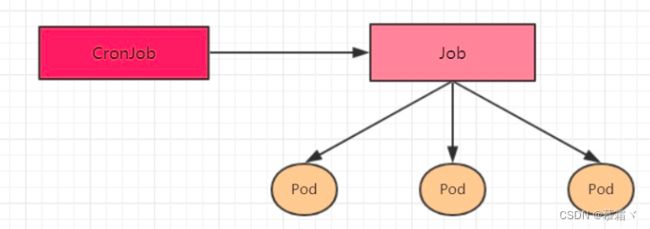

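- Job: the pods it creates exit as soon as their task completes and are neither restarted nor recreated; used for one-off tasks

- CronJob: the pods it creates run periodic tasks and do not need to keep running in the background

- StatefulSet: manages stateful applications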

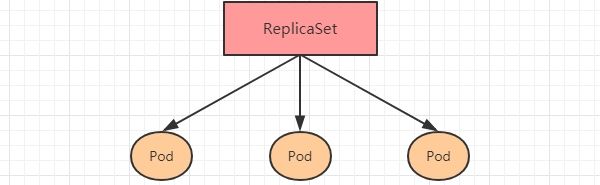

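II. ReplicaSet (RS)

- The main job of a ReplicaSet is to keep a given number of pods running normally. It continuously watches the status of these pods and restarts or recreates them as soon as one fails.

- It also supports scaling the number of pods and upgrading or downgrading the image version.

- ReplicaSet resource manifest: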

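apiVersion: apps/v1 # API version
kind: ReplicaSet # resource type
metadata: # metadata
  name: # RS name
  namespace: # namespace the RS belongs to
  labels: # labels
    controller: rs
spec: # detailed description
  replicas: 3 # number of replicas
  selector: # selector: specifies which pods this controller manages
    matchLabels: # label matching rules
      app: nginx-pod
    matchExpressions: # expression matching rules; usable alone or together with matchLabels (all rules are ANDed)
      - {key: app, operator: In, values: [nginx-pod]}
  template: # template: when there are too few replicas, pod replicas are created from this template
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
        ports:
        - containerPort: 80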

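- The configuration items worth understanding are these options under spec:

- replicas: the number of replicas, i.e. the number of pods this RS creates; defaults to 1

- selector: establishes the association between the pod controller and its pods, using the label selector mechanism

- Define labels in the pod template and a selector in the controller; the selector then determines which pods the controller manages

- template: the template the controller uses to create pods; its content is simply the pod definition covered in the previous chapter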
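A small matching function shows how a selector decides whether a pod belongs to the controller. This sketch assumes labels are plain string dictionaries and covers the four matchExpressions operators (In, NotIn, Exists, DoesNotExist). As in Kubernetes, matchLabels and matchExpressions are ANDed.

```python
from typing import Dict, List, Optional


def selector_matches(labels: Dict[str, str],
                     match_labels: Optional[Dict[str, str]] = None,
                     match_expressions: Optional[List[dict]] = None) -> bool:
    """Return True if a pod with `labels` is selected by the given selector."""
    # matchLabels: every key/value pair must be present on the pod.
    for key, value in (match_labels or {}).items():
        if labels.get(key) != value:
            return False
    # matchExpressions: every expression must also hold (logical AND).
    for expr in match_expressions or []:
        key, op = expr["key"], expr["operator"]
        values = expr.get("values", [])
        if op == "In":
            ok = labels.get(key) in values
        elif op == "NotIn":
            ok = labels.get(key) not in values  # a missing key also satisfies NotIn
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unsupported operator: {op}")
        if not ok:
            return False
    return True


pod_labels = {"app": "nginx-pod"}
print(selector_matches(pod_labels, match_labels={"app": "nginx-pod"}))  # True
print(selector_matches(
    pod_labels,
    match_expressions=[{"key": "app", "operator": "In", "values": ["nginx-pod"]}],
))  # True
```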

1. Create the ReplicaSet

- Create the file

[root@k8s-master manifest]# cat pc-replicaset.yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: pc-replicaset
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
[root@k8s-master manifest]#

- Run

# Create the RS
[root@k8s-master manifest]# kubectl apply -f pc-replicaset.yaml
replicaset.apps/pc-replicaset created
# View the RS
# DESIRED: desired number of replicas
# CURRENT: current number of replicas
# READY: number of replicas ready to serve requests
[root@k8s-master manifest]# kubectl get -f pc-replicaset.yaml -o wide
NAME            DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES         SELECTOR
pc-replicaset   3         3         3       54s   nginx        nginx:1.17.1   app=nginx-pod
[root@k8s-master manifest]#
# View the pods created by this controller
# The pod names are the controller name followed by a random -xxxxx suffix
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                  READY   STATUS    RESTARTS   AGE
pc-replicaset-4qpfc   1/1     Running   0          6m46s
pc-replicaset-6kbnw   1/1     Running   0          6m46s
pc-replicaset-qfbxf   1/1     Running   0          6m46s

2. Scaling

# Specify the resource type, the controller name, and its namespace
# In the editor, change spec.replicas to 6, then save and exit
[root@k8s-master manifest]# kubectl edit rs pc-replicaset -n dev
...(content omitted)...
  creationTimestamp: "2022-09-13T13:49:08Z"
  generation: 2
  name: pc-replicaset
  namespace: dev
  resourceVersion: "273468"
  uid: 93a19817-6d84-447e-b1c5-50a5e7d299f0
spec:
  replicas: 6
  selector:
    matchLabels:
      app: nginx-pod
  template:
[root@k8s-master manifest]# kubectl edit rs pc-replicaset -n dev
replicaset.apps/pc-replicaset edited
[root@k8s-master manifest]#

Scale-out result

[root@k8s-master manifest]# kubectl get pods -n dev
NAME                  READY   STATUS    RESTARTS   AGE
pc-replicaset-4qpfc   1/1     Running   0          13m
pc-replicaset-6kbnw   1/1     Running   0          13m
pc-replicaset-7p6fp   1/1     Running   0          94s
pc-replicaset-dplzm   1/1     Running   0          25s
pc-replicaset-qfbxf   1/1     Running   0          13m
pc-replicaset-xnms7   1/1     Running   0          94s
[root@k8s-master manifest]# kubectl get -f pc-replicaset.yaml -o wide
NAME            DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES         SELECTOR
pc-replicaset   6         6         6       13m   nginx        nginx:1.17.1   app=nginx-pod
[root@k8s-master manifest]#

# Of course, this can also be done directly with a command
# Use the scale command to scale; give the target count with --replicas=n
[root@k8s-master manifest]# kubectl scale rs pc-replicaset -n dev --replicas=2
replicaset.apps/pc-replicaset scaled
# After a short wait, only 2 pods are left
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                  READY   STATUS    RESTARTS   AGE
pc-replicaset-4qpfc   1/1     Running   0          22m
pc-replicaset-qfbxf   1/1     Running   0          22m
# Delete a pod, and a replacement is started automatically
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                  READY   STATUS    RESTARTS   AGE
pc-replicaset-llh9p   1/1     Running   0          25s
pc-replicaset-qfbxf   1/1     Running   0          33m
[root@k8s-master manifest]# kubectl delete pod -n dev pc-replicaset-qfbxf
pod "pc-replicaset-qfbxf" deleted
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                  READY   STATUS    RESTARTS   AGE
pc-replicaset-llh9p   1/1     Running   0          51s
pc-replicaset-pnlwk   1/1     Running   0          3s
[root@k8s-master manifest]#

3. Image Upgrade

# Edit the RS's container image: - image: nginx:1.17.2
[root@k8s-master manifest]# kubectl edit rs pc-replicaset -n dev
replicaset.apps/pc-replicaset edited
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx-pod
    spec:
      containers:
      - image: nginx:1.17.2
        imagePullPolicy: IfNotPresent
        name: nginx
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
# Check again: the image version has changed
[root@k8s-master ~]# kubectl get rs -n dev -o wide
NAME            DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES         SELECTOR
pc-replicaset   2         2         2       44m   nginx        nginx:1.17.2   app=nginx-pod
[root@k8s-master ~]#
# Likewise, this can be done with a command
# kubectl set image rs <rs-name> <container>=<image:version> -n <namespace>
[root@k8s-master ~]# kubectl set image rs pc-replicaset -n dev nginx=nginx:1.17.1
replicaset.apps/pc-replicaset image updated
# Check again: the image version has changed
[root@k8s-master ~]# kubectl get rs -n dev -o wide
NAME            DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES         SELECTOR
pc-replicaset   2         2         2       45m   nginx        nginx:1.17.1   app=nginx-pod
[root@k8s-master ~]#

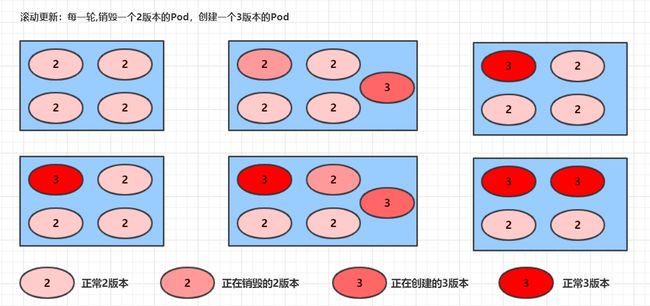

Rolling the new image out to pods

Changing the RS template does not replace the pods that are already running. Only pods created afterwards use the new image. The steps below show this by deleting a pod.

1. Check:

[root@k8s-master manifest]# kubectl get pods -n dev
NAME                  READY   STATUS    RESTARTS   AGE
pc-replicaset-6fqtd   1/1     Running   0          23s
pc-replicaset-79pk6   1/1     Running   0          23s
pc-replicaset-llh9p   1/1     Running   0          16m
pc-replicaset-pnlwk   1/1     Running   0          15m
[root@k8s-master manifest]# kubectl describe pod pc-replicaset-6fqtd -n dev|grep -i image
Image: nginx:1.17.1

2. Upgrade the version

[root@k8s-master ~]# kubectl set image rs pc-replicaset -n dev nginx=nginx:1.17.2
replicaset.apps/pc-replicaset image updated
[root@k8s-master ~]#

3. Delete a pod

[root@k8s-master manifest]# kubectl delete pod pc-replicaset-6fqtd -n dev
pod "pc-replicaset-6fqtd" deleted
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                  READY   STATUS              RESTARTS   AGE
pc-replicaset-79pk6   1/1     Running             0          3m57s
pc-replicaset-htxzm   0/1     ContainerCreating   0          5s
pc-replicaset-llh9p   1/1     Running             0          20m
pc-replicaset-pnlwk   1/1     Running             0          19m

4. Check

[root@k8s-master manifest]# kubectl describe pod pc-replicaset-htxzm -n dev|grep -i image
Image: nginx:1.17.2

The newly created replacement pod runs the new image; the pods that were already running are not replaced automatically.

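4. Delete the ReplicaSet

# kubectl delete removes this RS together with the pods it manages
# (Older kubectl versions first scaled the RS's replicas to 0, waited for all pods to be deleted, and then deleted the RS object; current versions rely on cascading deletion.)
[root@k8s-master ~]# kubectl delete rs pc-replicaset -n dev
replicaset.apps "pc-replicaset" deleted
# To delete only the RS object and keep its pods, add the cascade option to kubectl delete (not recommended).
# kubectl 1.20 and later use --cascade=orphan; older versions use --cascade=false, which is now a deprecated alias.
kubectl delete rs pc-replicaset -n dev --cascade=orphan
# You can also delete it directly with the YAML file (recommended)
kubectl delete -f pc-replicaset.yaml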

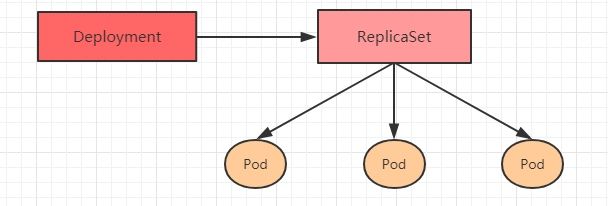

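III. Deployment (Deploy)

- To better solve service orchestration problems, Kubernetes introduced the Deployment controller in v1.2.

- This controller does not manage pods directly. It manages them indirectly through ReplicaSets: the Deployment manages ReplicaSets, and the ReplicaSets manage pods. That is why a Deployment is more powerful than a ReplicaSet.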

1. Main features of Deployment:

- Supports all ReplicaSet features

- Supports pausing and resuming a rollout

- Supports rolling upgrades and version rollback

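2. Deployment resource manifest

apiVersion: apps/v1 # API version
kind: Deployment # resource type
metadata: # metadata
  name: # Deployment name
  namespace: # namespace it belongs to
  labels: # labels
    controller: deploy
spec: # detailed description
  replicas: 3 # number of replicas (pods)
  revisionHistoryLimit: 3 # number of old revisions (ReplicaSets) to keep; default 10
  paused: false # pause the rollout; default false
  progressDeadlineSeconds: 600 # rollout timeout in seconds; default 600
  strategy: # update strategy
    type: RollingUpdate # rolling update strategy
    rollingUpdate: # rolling update settings
      maxSurge: 30% # maximum number of extra pods allowed above the desired count; a percentage or an integer
      maxUnavailable: 30% # maximum number of pods that may be unavailable; a percentage or an integer
  selector: # selector: specifies which pods this controller manages
    matchLabels: # label matching rules
      app: nginx-pod
    matchExpressions: # expression matching rules
      - {key: app, operator: In, values: [nginx-pod]}
  template: # template: when there are too few replicas, pod replicas are created from this template
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
        ports:
        - containerPort: 80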

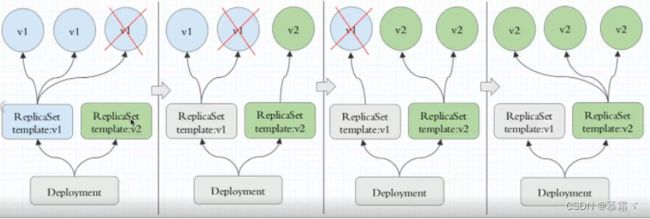

- Rolling update

- Stop one old pod, start one new pod

3. Create the Deployment

[root@k8s-master manifest]# cat pc-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pc-deployment
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
[root@k8s-master manifest]#

- Create the deploy

# Create the deployment
[root@k8s-master manifest]# kubectl apply -f pc-deployment.yaml
deployment.apps/pc-deployment created
# View the deployment
# UP-TO-DATE: number of pods running the latest version
# AVAILABLE: number of pods currently available
[root@k8s-master manifest]# kubectl get -f pc-deployment.yaml
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
pc-deployment   3/3     3            3           42s
# View the RS
# The RS name is the deployment name followed by a hash suffix (the pod-template-hash, here 10 characters)
[root@k8s-master manifest]# kubectl get rs -n dev
NAME                       DESIRED   CURRENT   READY   AGE
pc-deployment-6f6bc8fc5f   3         3         3       101s
# View the pods
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                             READY   STATUS    RESTARTS   AGE
pc-deployment-6f6bc8fc5f-2k5jz   1/1     Running   0          2m14s
pc-deployment-6f6bc8fc5f-xbfm4   1/1     Running   0          2m13s
pc-deployment-6f6bc8fc5f-xpmd9   1/1     Running   0          2m13s
[root@k8s-master manifest]#

4. Scaling

# Change the number of replicas to 4
[root@k8s-master ~]# kubectl get deploy -n dev
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
pc-deployment   3/3     3            3           4m40s
[root@k8s-master ~]# kubectl scale deploy pc-deployment -n dev --replicas 4
deployment.apps/pc-deployment scaled
# View the pods
[root@k8s-master ~]# kubectl get pods -n dev
NAME                             READY   STATUS    RESTARTS   AGE
pc-deployment-6f6bc8fc5f-2k5jz   1/1     Running   0          5m36s
pc-deployment-6f6bc8fc5f-xbfm4   1/1     Running   0          5m35s
pc-deployment-6f6bc8fc5f-xpmd9   1/1     Running   0          5m35s
pc-deployment-6f6bc8fc5f-xvq8j   1/1     Running   0          7s
[root@k8s-master ~]#
# View the deployment
[root@k8s-master ~]# kubectl get deploy -n dev
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
pc-deployment   4/4     4            4           7m31s
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl scale deploy pc-deployment -n dev --replicas 2
deployment.apps/pc-deployment scaled
[root@k8s-master ~]# kubectl get deploy -n dev
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
pc-deployment   2/2     2            2           8m39s
[root@k8s-master ~]#
# Edit the deployment's replica count: just change spec.replicas to 4
[root@k8s-master ~]# kubectl edit deploy pc-deployment -n dev
deployment.apps/pc-deployment edited
[root@k8s-master ~]# kubectl get deploy -n dev
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
pc-deployment   4/4     4            4           11m
[root@k8s-master ~]#
# The edited part of the spec:
spec:
  progressDeadlineSeconds: 600
  replicas: 4
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx-pod

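5. Image Update

- Deployment supports two update strategies, recreate and rolling update, selected through the strategy field:

strategy: the policy for replacing old pods with new pods. It supports two attributes:

type: the strategy type. Two strategies are supported:

Recreate: kill all existing pods before creating any new ones

RollingUpdate: rolling update, i.e. kill some pods and start some new ones; two versions of the pod coexist during the update

rollingUpdate: takes effect when type is RollingUpdate and sets its parameters. It supports two attributes:

maxUnavailable: the maximum number of pods that may be unavailable during the upgrade; default 25% (25% of 4 pods is 1 pod)

maxSurge: the maximum number of pods that may exist above the desired count during the upgrade; default 25%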
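The arithmetic behind "25% of 4 pods is 1 pod" can be sketched as follows. The rounding rules match the Kubernetes documentation: a percentage maxSurge rounds up and a percentage maxUnavailable rounds down. The fallback that allows one unavailable pod when both values round to 0 is an assumption of this sketch, modeled on the Deployment controller's behavior.

```python
from typing import Tuple, Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Turn an integer or a percentage such as "25%" into a pod count."""
    if isinstance(value, int):
        return value
    scaled = replicas * int(value.rstrip("%"))  # integer math avoids float rounding issues
    return -(-scaled // 100) if round_up else scaled // 100


def rolling_bounds(replicas: int, max_surge: Union[int, str] = "25%",
                   max_unavailable: Union[int, str] = "25%") -> Tuple[int, int]:
    """Return (max pods during the update, min available pods during the update)."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Assumption: if both round to 0, allow one unavailable pod so the update can progress.
        unavailable = 1
    return replicas + surge, replicas - unavailable


print(rolling_bounds(4))                # (5, 3): 25% of 4 is 1 in both directions
print(rolling_bounds(3, "30%", "30%"))  # (4, 3): 0.9 rounds up to 1 surge, down to 0 unavailable
```

The second call shows why the 30% values in the manifest above behave differently for small replica counts. For 3 replicas, the rollout may add one extra pod but may not take any pod offline.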

5.1 Recreate Update

- Edit the deployment (here with kubectl edit) and add the update strategy under the spec node

[root@k8s-master ~]# kubectl edit deploy pc-deployment -n dev
deployment.apps/pc-deployment edited
spec:
  progressDeadlineSeconds: 600
  replicas: 4
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx-pod
  strategy:
    type: Recreate
  template:

spec:
  strategy: # strategy
    type: Recreate # recreate update

- Verify with the deployment

# Change the image
[root@k8s-master ~]# kubectl set image deploy pc-deployment -n dev nginx=nginx:1.17.2
deployment.apps/pc-deployment image updated

All old pods are killed first, then the new ones are started:

[root@k8s-master ~]# kubectl get pods -n dev
NAME                            READY   STATUS              RESTARTS   AGE
pc-deployment-59577f776-6djw4   0/1     ContainerCreating   0          41s
pc-deployment-59577f776-d45rw   0/1     ContainerCreating   0          41s
pc-deployment-59577f776-dtwqf   0/1     ContainerCreating   0          41s
pc-deployment-59577f776-wk74b   0/1     ContainerCreating   0          41s
[root@k8s-master ~]#

5.2 Rolling Update

- Edit the deployment and add the update strategy under the spec node

spec:
  strategy: # strategy
    type: RollingUpdate # rolling update strategy
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%

[root@k8s-master ~]# kubectl edit deploy pc-deployment -n dev
deployment.apps/pc-deployment edited
spec:
  progressDeadlineSeconds: 600
  replicas: 4
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx-pod
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  template:

- Verify with the deployment

Change the image

[root@k8s-master ~]# kubectl set image deployment pc-deployment nginx=nginx:1.17.3 -n dev
deployment.apps/pc-deployment image updated
[root@k8s-master ~]# kubectl get pods -n dev
NAME                             READY   STATUS              RESTARTS   AGE
pc-deployment-59577f776-6djw4    0/1     ContainerCreating   0          5m31s
pc-deployment-59577f776-d45rw    0/1     Terminating         0          5m31s
pc-deployment-59577f776-dtwqf    0/1     ContainerCreating   0          5m31s
pc-deployment-59577f776-wk74b    0/1     ContainerCreating   0          5m31s
pc-deployment-6ccc4766df-8rd58   0/1     ContainerCreating   0          6s
pc-deployment-6ccc4766df-97gst   0/1     ContainerCreating   0          6s
# When the update finishes, all new-version pods have been created and all old-version pods destroyed
# The process in between is rolling, i.e. old pods are destroyed while new ones are created

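- The rolling update process

- Remove one old pod, run one new pod

- How the RSs change during the image update

# The RSs show that the original RS still exists, but its pod count has dropped to 0, and a new RS with 4 pods has been created
# This is the secret behind deployment rollbacks, explained in detail later
[root@k8s-master01 ~]# kubectl get rs -n dev
NAME                       DESIRED   CURRENT   READY   AGE
pc-deployment-6696798b78   0         0         0       7m37s
pc-deployment-6696798b11   0         0         0       5m37s
pc-deployment-c848d76789   4         4         4       72s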
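An idealized simulation shows how the old and new RS counts move during a rolling update. It assumes every new pod becomes Ready immediately. A real Deployment waits for readiness (and minReadySeconds), so actual steps are slower and may be split differently. `max_total` and `min_available` correspond to replicas + maxSurge and replicas - maxUnavailable.

```python
from typing import List, Tuple


def simulate_rolling_update(replicas: int, max_total: int,
                            min_available: int) -> List[Tuple[int, int]]:
    """Return the (old RS, new RS) replica counts after each scaling action.

    Simplifying assumption: every new pod is Ready immediately, so all
    old + new pods count as available.
    """
    if max_total <= replicas and min_available >= replicas:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Scale up the new ReplicaSet without exceeding max_total pods overall.
        target_new = min(replicas, max_total - old)
        if target_new != new:
            new = target_new
            steps.append((old, new))
        # Scale down the old ReplicaSet while keeping at least min_available pods.
        target_old = min(old, max(0, min_available - new))
        if target_old != old:
            old = target_old
            steps.append((old, new))
    return steps


# 4 replicas, maxSurge=1, maxUnavailable=1 -> at most 5 pods, at least 3 available
for old, new in simulate_rolling_update(4, 5, 3):
    print(f"old RS: {old}  new RS: {new}")
```

At the end, the old RS sits at 0 replicas but is not deleted, which matches the `kubectl get rs` output above.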

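5.3 Version Rollback

- Deployment supports pausing and resuming an upgrade in progress, rolling back versions, and more

- kubectl rollout: rollout-related functions; it supports the following subcommands:

- status: show the current rollout status

- history: show the rollout history

- pause: pause the rollout

- resume: resume a paused rollout

- restart: restart the resource, i.e. trigger a new rollout that replaces all pods

- undo: roll back to the previous revision (use --to-revision to roll back to a specific revision)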

# Check the status of the current rollout
# kubectl rollout status deploy pc-deployment -n dev
[root@k8s-master ~]# kubectl get deploy -n dev
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
pc-deployment   3/3     1            3           9m30s
[root@k8s-master ~]# kubectl rollout status deploy pc-deployment -n dev
# View the rollout history
[root@k8s-master ~]# kubectl rollout history deploy pc-deployment -n dev
deployment.apps/pc-deployment
REVISION  CHANGE-CAUSE
1         <none>
2         <none>
3         <none>
[root@k8s-master ~]#
# There are three revision records, which means two upgrades have been completed
# Roll back
# Here --to-revision=1 rolls back directly to revision 1; if the option is omitted, the rollback goes to the previous revision, i.e. revision 2
[root@k8s-master ~]# kubectl rollout undo deploy pc-deployment -n dev --to-revision=1
deployment.apps/pc-deployment rolled back
[root@k8s-master ~]#
# The nginx image version shows that we are back at the first revision
[root@k8s-master ~]# kubectl get pods -n dev -o wide
NAME                             READY   STATUS        RESTARTS   AGE    IP       NODE   NOMINATED NODE   READINESS GATES
pc-deployment-6ccc4766df-rm5c2   0/1     Terminating   0          4m2s   <none>
[root@k8s-master ~]# kubectl get deploy -n dev -o wide
NAME            READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES         SELECTOR
pc-deployment   3/3     3            3           21m   nginx        nginx:1.17.1   app=nginx-pod
[root@k8s-master ~]#
# The RSs show that the first RS now runs 3 pods, while the RSs of the two later revisions run none
# Deployment can roll back precisely because it keeps the historical RSs:
# to roll back to a revision, it only needs to scale the current revision's pods down to 0 and the target revision's pods up to the desired count
[root@k8s-master ~]# kubectl get rs -n dev
NAME                       DESIRED   CURRENT   READY   AGE
pc-deployment-59577f776    0         0         0       19m
pc-deployment-6ccc4766df   0         0         0       18m
pc-deployment-6f6bc8fc5f   3         3         3       22m
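This toy model shows the "keep old RSs, rescale on rollback" idea. It reduces the pod template to an image string, whereas real Deployments hash the whole template into the pod-template-hash suffix. It also assumes that a rolled-back template gets a new revision number (its old number disappears from the history). The `ToyDeployment` class is a hypothetical illustration, not a Kubernetes API, and it omits revisionHistoryLimit.

```python
from typing import Dict, Optional


class ToyDeployment:
    """Toy model: one ReplicaSet per pod template (here just an image)."""

    def __init__(self, replicas: int, image: str):
        self.replicas = replicas
        self.revisions: Dict[int, str] = {}    # revision number -> image of that RS
        self.rs_replicas: Dict[str, int] = {}  # image -> replicas of that RS
        self.rollout(image)

    def current_image(self) -> Optional[str]:
        return self.revisions[max(self.revisions)] if self.revisions else None

    def rollout(self, image: str) -> None:
        if image == self.current_image():
            return  # unchanged template: no new revision
        next_revision = max(self.revisions, default=0) + 1
        # An old RS with the same template is reused and gets the new revision number.
        self.revisions = {r: img for r, img in self.revisions.items() if img != image}
        self.revisions[next_revision] = image
        # End state of a rollout: the active RS has all replicas, every other RS has 0.
        for img in self.rs_replicas:
            self.rs_replicas[img] = 0
        self.rs_replicas[image] = self.replicas

    def undo(self, to_revision: Optional[int] = None) -> None:
        """Like `kubectl rollout undo`: the default target is the previous revision."""
        revisions = sorted(self.revisions)
        target = to_revision if to_revision is not None else revisions[-2]
        self.rollout(self.revisions[target])


d = ToyDeployment(3, "nginx:1.17.1")
d.rollout("nginx:1.17.2")
d.rollout("nginx:1.17.3")
d.undo(to_revision=1)
print(d.revisions)    # {2: 'nginx:1.17.2', 3: 'nginx:1.17.3', 4: 'nginx:1.17.1'}
print(d.rs_replicas)  # {'nginx:1.17.1': 3, 'nginx:1.17.2': 0, 'nginx:1.17.3': 0}
```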

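5.4 Canary Release

- The Deployment controller lets you control the update process, for example by pausing or resuming an update.

- For example, you can pause the update right after a batch of new pods has been created. At that point only part of the application runs the new version, while most of it still runs the old version. You then route a small share of user requests to the new-version pods and watch whether they run stably as expected. If everything is fine, you resume and finish the rolling update for the remaining pods; otherwise you roll back immediately. This is a canary release. (In short: update part of the pods, pause the rest, send user traffic to the updated part for testing, and after a while, if it works, update the remaining part.)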

# Update the deployment's image and pause the deployment right away
[root@k8s-master ~]# kubectl set image deploy pc-deployment -n dev nginx=nginx:1.17.4 && kubectl rollout pause deploy pc-deployment -n dev
deployment.apps/pc-deployment image updated
deployment.apps/pc-deployment paused
[root@k8s-master ~]#
# Watch the rollout status
[root@k8s-master ~]# kubectl rollout status deploy pc-deployment -n dev
Waiting for deployment "pc-deployment" rollout to finish: 1 out of 3 new replicas have been updated...
# Monitor the update: one new pod has been added, but no old pod has been deleted as it otherwise would be, because of the pause command
[root@k8s-master ~]# kubectl get pods -n dev
NAME                             READY   STATUS        RESTARTS   AGE
pc-deployment-59577f776-bfvt6    0/1     Terminating   0          5m26s
pc-deployment-6f6bc8fc5f-4p2xv   1/1     Running       0          68s
pc-deployment-6f6bc8fc5f-d57nd   1/1     Running       0          67s
pc-deployment-6f6bc8fc5f-jw7gg   1/1     Running       0          69s
pc-deployment-7c88cdf554-ljvj5   1/1     Running       0          29s
[root@k8s-master ~]# kubectl get rs -n dev
NAME                       DESIRED   CURRENT   READY   AGE
pc-deployment-59577f776    0         0         0       5m39s
pc-deployment-6ccc4766df   0         0         0       2m30s
pc-deployment-6f6bc8fc5f   3         3         3       6m24s
pc-deployment-7c88cdf554   1         1         1       42s
[root@k8s-master ~]#
# Once the updated pod has been confirmed to work, resume the update
[root@k8s-master ~]# kubectl rollout resume deploy pc-deployment -n dev
deployment.apps/pc-deployment resumed
[root@k8s-master ~]#
# Check the final result of the update
[root@k8s-master ~]# kubectl get pods -n dev
NAME                             READY   STATUS        RESTARTS   AGE
pc-deployment-59577f776-bfvt6    0/1     Terminating   0          7m51s
pc-deployment-7c88cdf554-2b8c9   1/1     Running       0          25s
pc-deployment-7c88cdf554-ljvj5   1/1     Running       0          2m54s
pc-deployment-7c88cdf554-smwkq   1/1     Running       0          26s
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl get rs -n dev -o wide
NAME                       DESIRED   CURRENT   READY   AGE     CONTAINERS   IMAGES         SELECTOR
pc-deployment-59577f776    0         0         0       8m37s   nginx        nginx:1.17.2   app=nginx-pod,pod-template-hash=59577f776
pc-deployment-6ccc4766df   0         0         0       5m28s   nginx        nginx:1.17.3   app=nginx-pod,pod-template-hash=6ccc4766df
pc-deployment-6f6bc8fc5f   0         0         0       9m22s   nginx        nginx:1.17.1   app=nginx-pod,pod-template-hash=6f6bc8fc5f
pc-deployment-7c88cdf554   3         3         3       3m40s   nginx        nginx:1.17.4   app=nginx-pod,pod-template-hash=7c88cdf554
[root@k8s-master ~]#

5.5 Delete the Deployment

# Deleting the deployment also deletes its RSs and pods
[root@k8s-master manifest]# kubectl delete -f pc-deployment.yaml
deployment.apps "pc-deployment" deleted
[root@k8s-master manifest]# kubectl get rs -n dev -o wide
No resources found in dev namespace.
[root@k8s-master manifest]# kubectl get deloy -n dev -o wide
error: the server doesn't have a resource type "deloy"
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                            READY   STATUS        RESTARTS   AGE
pc-deployment-59577f776-bfvt6   0/1     Terminating   0          12m
[root@k8s-master manifest]#

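IV. Horizontal Pod Autoscaler (HPA)

- HPA scales pods horizontally and automatically.

- We can already scale pods out or in by running the kubectl scale command by hand, but that clearly does not fit Kubernetes' goals of automation and intelligence.

- Kubernetes aims to adjust the number of pods automatically by monitoring pod usage, and that is why the HPA controller exists.

- HPA obtains the utilization of each pod, compares it with the metrics defined in the HPA, calculates by how much to scale, and finally adjusts the number of pods.

- Like a Deployment, an HPA is a Kubernetes resource object. It tracks and analyzes the load changes of all target pods managed by its scale target (for example an RC or a Deployment) to decide whether the target's replica count needs adjusting. This is the principle behind HPA. (It uses an algorithm to compute how many pod replicas are needed, removing surplus pods and adding missing ones.)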
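The "algorithm" mentioned above is, at its core, the ratio formula from the Kubernetes HPA documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The controller skips scaling when the ratio is within a tolerance (0.1 by default), and the result is clamped to [minReplicas, maxReplicas]. This sketch leaves out stabilization windows, missing metrics, and not-yet-ready pods.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_utilization: float,
                         target_utilization: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # The result is always clamped to [minReplicas, maxReplicas].
    return max(min_replicas, min(max_replicas, desired))


print(hpa_desired_replicas(1, 200, 50))  # 4: usage is 4x the target
print(hpa_desired_replicas(4, 52, 50))   # 4: within the 10% tolerance
print(hpa_desired_replicas(1, 90, 3))    # 10: capped at maxReplicas
```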

1. Experiment

1.1 Install metrics-server

# Install git
[root@k8s-master ~]# yum -y install git
# Get metrics-server; note the version used
[root@k8s-master ~]# git clone -b v0.6.1 https://github.com/kubernetes-incubator/metrics-server
Cloning into 'metrics-server'...
remote: Enumerating objects: 14773, done.
remote: Counting objects: 100% (270/270), done.
remote: Compressing objects: 100% (163/163), done.
remote: Total 14773 (delta 124), reused 199 (delta 102), pack-reused 14503
Receiving objects: 100% (14773/14773), 13.37 MiB | 1.91 MiB/s, done.
Resolving deltas: 100% (7811/7811), done.
Note: switching to '9c9d712d31742f7c32c023d7b36c49c2dc7033b5'.
You are in 'detached HEAD' state. You can look around, make experimental
changes and commit them, and you can discard any commits you make in this
state without impacting any branches by switching back to a branch.
If you want to create a new branch to retain commits you create, you may
do so (now or later) by using -c with the switch command. Example:
  git switch -c <new-branch-name>
Or undo this operation with:
  git switch -
Turn off this advice by setting config variable advice.detachedHead to false
[root@k8s-master ~]# ls
anaconda-ks.cfg  kube-flannel.yml  metrics-server
k8s.txt  manifest
[root@k8s-master ~]#

Download components.yaml

[root@k8s-master ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml
# Edit components.yaml and change the image:
image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.1
imagePullPolicy: Always

Remove the health checks:

livenessProbe:
  failureThreshold: 3
  httpGet:
    path: /livez
    port: https
    scheme: HTTPS
  periodSeconds: 10
readinessProbe:
  failureThreshold: 3
  httpGet:
    path: /readyz
    port: https
    scheme: HTTPS
  initialDelaySeconds: 20
  periodSeconds: 10

Add:

args:
- --kubelet-insecure-tls

The modified parts of components.yaml:

spec:
  containers:
  - args:
    - --cert-dir=/tmp
    # - --secure-port=4443
    - --kubelet-insecure-tls
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: http
  selector:
    k8s-app: metrics-server
---
imagePullPolicy: Always
name: metrics-server
ports:
- containerPort: 80
  name: http
  protocol: TCP

# Install metrics-server
[root@k8s-master ~]# kubectl apply -f components.yaml
serviceaccount/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
service/metrics-server created
deployment.apps/metrics-server created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
[root@k8s-master ~]#
# Check that the pods are running
[root@k8s-master ~]# kubectl get pods -n kube-system
NAME                                 READY   STATUS    RESTARTS        AGE
coredns-c676cc86f-44bsf              1/1     Running   11 (156m ago)   8d
coredns-c676cc86f-fbb7f              1/1     Running   11 (156m ago)   8d
etcd-k8s-master                      1/1     Running   11 (156m ago)   8d
kube-apiserver-k8s-master            1/1     Running   11 (156m ago)   8d
kube-controller-manager-k8s-master   1/1     Running   14 (156m ago)   8d
kube-proxy-65lcn                     1/1     Running   11 (156m ago)   8d
kube-proxy-lw4z2                     1/1     Running   11 (155m ago)   8d
kube-proxy-zskvf                     1/1     Running   12 (155m ago)   8d
kube-scheduler-k8s-master            1/1     Running   15 (156m ago)   8d
metrics-server-6d67df7d45-jpjll      1/1     Running   0               55s
[root@k8s-master ~]#
# Use kubectl top node to view resource usage
[root@k8s-master01 1.8+]# kubectl top node
NAME           CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master01   289m         14%    1582Mi          54%
k8s-node01     81m          4%     1195Mi          40%
k8s-node02     72m          3%     1211Mi          41%
[root@k8s-master01 1.8+]# kubectl top pod -n kube-system
NAME                       CPU(cores)   MEMORY(bytes)
coredns-6955765f44-7ptsb   3m           9Mi
coredns-6955765f44-vcwr5   3m           8Mi
etcd-master                14m          145Mi
...
# metrics-server is now installed

1.2 Prepare the Deployment and Service

- Create the pc-hpa-pod.yaml file

[root@k8s-master manifest]# cat pc-hpa-pod.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: dev
spec:
  strategy: # strategy
    type: RollingUpdate # rolling update strategy
  replicas: 1
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
        resources: # resource quota
          limits: # resource limits (upper bound)
            cpu: "1" # CPU limit, in cores
          requests: # resource requests (lower bound)
            cpu: "100m" # CPU request, in cores (100m = 0.1 core); HPA utilization is computed relative to this value
[root@k8s-master manifest]#
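HPA's CPU utilization is the pod's CPU usage as a percentage of its CPU request. That is why the container above sets `requests`: without a request, HPA cannot compute a utilization value for the target. This sketch only handles plain numbers and the `m` (millicore) suffix, not the full Kubernetes quantity grammar.

```python
def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "1", "0.5" or "100m" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def cpu_utilization_percent(usage: str, request: str) -> float:
    """CPU utilization as HPA sees it: usage as a percentage of the request."""
    return 100 * cpu_to_millicores(usage) / cpu_to_millicores(request)


print(cpu_to_millicores("1"))                 # 1000
print(cpu_utilization_percent("3m", "100m"))  # 3.0, i.e. the 3% target in pc-hpa.yaml
print(cpu_utilization_percent("50m", "100m")) # 50.0
```

With a 100m request and a 3% target, the average usage of only about 3 millicores per pod is already "on target", so even light load triggers scaling in this experiment.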

# Create the pod
[root@k8s-master manifest]# kubectl apply -f pc-hpa-pod.yaml
deployment.apps/nginx created
[root@k8s-master manifest]#
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                     READY   STATUS    RESTARTS   AGE
nginx-57797f84cb-njnhz   1/1     Running   0          48s
# Create the service
[root@k8s-master ~]# kubectl expose deploy nginx --type=NodePort --port=80 -n dev
service/nginx exposed
[root@k8s-master ~]# kubectl get svc -n dev
NAME           TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
containerlab   NodePort   10.99.32.163   <none>        80:30514/TCP   5d12h
nginx          NodePort   10.111.65.78   <none>        80:31876/TCP   13s
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl get pods,svc,deploy -n dev
NAME                         READY   STATUS    RESTARTS   AGE
pod/nginx-57797f84cb-njnhz   1/1     Running   0          3m34s

NAME                   TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
service/containerlab   NodePort   10.99.32.163   <none>        80:30514/TCP   5d12h
service/nginx          NodePort   10.111.65.78   <none>        80:31876/TCP   83s

NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx   1/1     1            1           3m34s
[root@k8s-master ~]#

1.3 Deploy the HPA

- Create the pc-hpa.yaml file

[root@k8s-master manifest]# cat pc-hpa.yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: pc-hpa
  namespace: dev
spec:
  minReplicas: 1 # minimum number of pods
  maxReplicas: 10 # maximum number of pods
  targetCPUUtilizationPercentage: 3 # target CPU utilization (percent)
  scaleTargetRef: # the target to control (the nginx Deployment)
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
[root@k8s-master manifest]#

- Create the HPA

[root@k8s-master manifest]# kubectl apply -f pc-hpa.yaml
horizontalpodautoscaler.autoscaling/pc-hpa created
# View the HPA
[root@k8s-master manifest]# kubectl get -f pc-hpa.yaml
NAME     REFERENCE          TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
pc-hpa   Deployment/nginx   <unknown>/3%    1         10        1          31s
[root@k8s-master manifest]#

1.4 Test

- Load-test the service address 192.168.232.128:31876 with a load-testing tool, then watch how the HPA and the pods change from the console

- HPA changes

[root@k8s-master ~]# kubectl get hpa -n dev -w
NAME     REFERENCE          TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
pc-hpa   Deployment/nginx   <unknown>/3%    1         10        1          2m5s

- Deployment changes

[root@k8s-master ~]# kubectl get deploy -n dev -w
NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   1/1     1            1           15m
^C[root@k8s-master ~]#

- Pod changes

[root@k8s-master ~]# kubectl get pods -n dev -w
NAME                     READY   STATUS    RESTARTS   AGE
nginx-57797f84cb-njnhz   1/1     Running   0          15m
^C[root@k8s-master ~]#

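V. DaemonSet (DS)

- A DaemonSet controller ensures that one replica runs on every node (or on specified nodes) in the cluster.

- It is generally used for log collection, node monitoring, and similar scenarios. In other words, if a pod provides node-level functionality (every node needs exactly one), it is a good fit for a DaemonSet controller.

1. Characteristics of the DaemonSet controller

- Whenever a node is added to the cluster, the specified pod replica is added to that node as well

- When a node is removed from the cluster, its pod is garbage-collected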
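The choice of which nodes get a DaemonSet pod can be sketched as a filter over the node list. This is a heavy simplification that models only a nodeSelector and taint keys, not taint effects or other scheduling constraints. It also assumes the control-plane node carries the `node-role.kubernetes.io/control-plane` taint that kubeadm applies. That assumption would be consistent with the DESIRED value of 2 on this 3-node cluster in the output below.

```python
from typing import Dict, List, Optional, Sequence


def daemonset_nodes(nodes: List[dict], node_selector: Optional[Dict[str, str]] = None,
                    tolerated_taint_keys: Sequence[str] = ()) -> List[str]:
    """Return the names of the nodes that should each run one DaemonSet pod."""
    eligible = []
    for node in nodes:
        labels = node.get("labels", {})
        # nodeSelector: every key/value pair must match the node's labels.
        if any(labels.get(k) != v for k, v in (node_selector or {}).items()):
            continue
        # Simplification: any taint whose key is not tolerated blocks the node.
        if any(t not in tolerated_taint_keys for t in node.get("taints", [])):
            continue
        eligible.append(node["name"])
    return eligible


# Hypothetical 3-node cluster: the control-plane node carries a NoSchedule taint.
cluster = [
    {"name": "k8s-master", "taints": ["node-role.kubernetes.io/control-plane"]},
    {"name": "k8s-node1"},
    {"name": "k8s-node2"},
]
print(daemonset_nodes(cluster))  # ['k8s-node1', 'k8s-node2']
```

Adding a node to `cluster` and calling the function again yields one more name. This corresponds to the "a pod is added whenever a node joins" behavior listed above.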

2. DaemonSet resource manifest

apiVersion: apps/v1 # API version
kind: DaemonSet # resource type
metadata: # metadata
  name: # DaemonSet name
  namespace: # namespace it belongs to
  labels: # labels
    controller: daemonset
spec: # detailed description
  revisionHistoryLimit: 3 # number of old revisions to keep
  updateStrategy: # update strategy
    type: RollingUpdate # rolling update strategy
    rollingUpdate: # rolling update settings
      maxUnavailable: 1 # maximum number of unavailable pods; a percentage or an integer
  selector: # selector: specifies which pods this controller manages
    matchLabels: # label matching rules
      app: nginx-pod
    matchExpressions: # expression matching rules
      - {key: app, operator: In, values: [nginx-pod]}
  template: # template: when there are too few replicas, pod replicas are created from this template
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
        ports:
        - containerPort: 80

3. Create the File

- pc-daemonset.yaml

[root@k8s-master manifest]# cat pc-daemonset.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: pc-daemonset
  namespace: dev
spec:
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
[root@k8s-master manifest]#

- Run

# Create the daemonset
[root@k8s-master manifest]# kubectl apply -f pc-daemonset.yaml
daemonset.apps/pc-daemonset created
# View the daemonset
[root@k8s-master manifest]# kubectl get ds -n dev -o wide
NAME           DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE   CONTAINERS   IMAGES         SELECTOR
pc-daemonset   2         2         2       2            2           <none>          39s   nginx        nginx:1.17.1   app=nginx-pod
[root@k8s-master manifest]#
# View the pods: one pod runs on each worker node
[root@k8s-master manifest]# kubectl get pods -n dev -o wide
NAME                 READY   STATUS    RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
pc-daemonset-f5nmb   1/1     Running   0          56s   10.244.2.106   k8s-node2   <none>           <none>
pc-daemonset-xt22t   1/1     Running   0          56s   10.244.1.90    k8s-node1   <none>           <none>
[root@k8s-master manifest]#
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                 READY   STATUS    RESTARTS   AGE
pc-daemonset-f5nmb   1/1     Running   0          81s
pc-daemonset-xt22t   1/1     Running   0          81s
[root@k8s-master manifest]#
# Delete the daemonset
[root@k8s-master manifest]# kubectl delete -f pc-daemonset.yaml
daemonset.apps "pc-daemonset" deleted

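VI. Job

1. Job Definition

- Mainly used for **batch (processing a specified number of tasks at once), short-lived, one-off (each task runs only once and then ends)** tasks.

2. Job Characteristics

- When a pod created by the Job finishes successfully, the Job records the number of successfully finished pods

- When the number of successfully finished pods reaches the specified count, the Job is complete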

3. Job resource manifest:

apiVersion: batch/v1 # API version
kind: Job # resource type
metadata: # metadata
  name: # Job name
  namespace: # namespace it belongs to
  labels: # labels
    controller: job
spec: # detailed description
  completions: 1 # number of pods that must run successfully for the job; default 1
  parallelism: 1 # number of pods the job should run concurrently at any time; default 1
  activeDeadlineSeconds: 30 # time limit for the job; if it has not finished by then, the system tries to terminate it
  backoffLimit: 6 # number of retries after the job fails; default 6
  manualSelector: true # whether pods may be selected with the selector; default false
  selector: # selector: specifies which pods this controller manages
    matchLabels: # label matching rules
      app: counter-pod
    matchExpressions: # expression matching rules
      - {key: app, operator: In, values: [counter-pod]}
  template: # template: when there are too few replicas, pod replicas are created from this template
    metadata:
      labels:
        app: counter-pod
    spec:
      restartPolicy: Never # the restart policy can only be Never or OnFailure
      containers:
      - name: counter
        image: busybox:1.30
        command: ["/bin/sh","-c","for i in 9 8 7 6 5 4 3 2 1; do echo $i;sleep 2;done"]

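- About the restart policy setting:

If set to OnFailure, when the pod fails the job restarts its container instead of creating a new pod, and the failed count does not change.

If set to Never, when the pod fails the job creates a new pod; the failed pod neither disappears nor restarts, and the failed count increases by 1.

If set to Always, the container would be restarted forever, which means the job's task would be executed over and over. That is clearly wrong, so Always is not allowed.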
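With restartPolicy Never, each failure produces a new pod. The Kubernetes documentation describes these retries as following an exponential back-off delay (10s, 20s, 40s, ...) capped at six minutes, until backoffLimit is reached. This minimal sketch computes that schedule. It ignores details such as when the back-off is reset, so treat the numbers as illustrative.

```python
from typing import List


def job_retry_delays(backoff_limit: int = 6, base_seconds: int = 10,
                     cap_seconds: int = 360) -> List[int]:
    """Delays before each retry: 10s, 20s, 40s, ... capped at 6 minutes."""
    return [min(base_seconds * 2 ** attempt, cap_seconds) for attempt in range(backoff_limit)]


print(job_retry_delays())   # [10, 20, 40, 80, 160, 320]
print(job_retry_delays(8))  # [10, 20, 40, 80, 160, 320, 360, 360]
```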

4. Create the File

- Create pc-job.yaml

[root@k8s-master manifest]# cat pc-job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pc-job
  namespace: dev
spec:
  manualSelector: true
  selector:
    matchLabels:
      app: counter-pod
  template:
    metadata:
      labels:
        app: counter-pod
    spec:
      restartPolicy: Never
      containers:
      - name: counter
        image: busybox:1.30
        command: ["/bin/sh","-c","for i in 9 8 7 6 5 4 3 2 1; do echo $i;sleep 3;done"]
[root@k8s-master manifest]#

- Create the job

# Create the job
[root@k8s-master manifest]# kubectl apply -f pc-job.yaml
job.batch/pc-job created
# View the job
[root@k8s-master manifest]# kubectl get -f pc-job.yaml
NAME     COMPLETIONS   DURATION   AGE
pc-job   0/1           27s        27s
[root@k8s-master manifest]# kubectl get -f pc-job.yaml -o wide
NAME     COMPLETIONS   DURATION   AGE   CONTAINERS   IMAGES         SELECTOR
pc-job   1/1           31s        48s   counter      busybox:1.30   app=counter-pod
[root@k8s-master manifest]#
# Watching the pod status shows that once the pod finishes its task, it changes to the Completed status
[root@k8s-master manifest]# kubectl get pods -n dev -w
NAME           READY   STATUS      RESTARTS   AGE
pc-job-lwcqr   0/1     Completed   0          2m44s
^C[root@k8s-master manifest]# kubectl get pods -n dev
NAME           READY   STATUS      RESTARTS   AGE
pc-job-lwcqr   0/1     Completed   0          2m54s
[root@k8s-master manifest]#

# Next, adjust the total number of pods and the number run in parallel by setting these two options under spec:
# completions: 6 # the job must run pods successfully 6 times
# parallelism: 3 # the job runs 3 pods concurrently
# Then rerun the job and observe: the job runs 3 pods at a time, 6 pods in total
[root@k8s-master manifest]# cat pc-job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pc-job
  namespace: dev
spec:
  manualSelector: true
  completions: 6
  parallelism: 3
  selector:
    matchLabels:
      app: counter-pod
  template:
    metadata:
      labels:
        app: counter-pod
    spec:
      restartPolicy: Never
      containers:
      - name: counter
        image: busybox:1.30
        command: ["/bin/sh","-c","for i in 9 8 7 6 5 4 3 2 1; do echo $i;sleep 3;done"]
[root@k8s-master manifest]#
[root@k8s-master manifest]# kubectl apply -f pc-job.yaml
job.batch/pc-job created
[root@k8s-master manifest]#

[root@k8s-master ~]# kubectl get job -n dev
NAME     COMPLETIONS   DURATION   AGE
pc-job   0/6           17s        17s
[root@k8s-master ~]# kubectl get job -n dev -w
NAME     COMPLETIONS   DURATION   AGE
pc-job   0/6           30s        30s
pc-job   0/6           31s        31s
pc-job   3/6           31s        31s
pc-job   3/6           32s        32s
pc-job   3/6           34s        34s
pc-job   3/6           60s        60s
pc-job   3/6           63s        63s
pc-job   6/6           63s        63s
[root@k8s-master ~]# kubectl get pods -n dev
NAME           READY   STATUS      RESTARTS   AGE
pc-job-8x47s   0/1     Completed   0          102s
pc-job-dmnxz   0/1     Completed   0          71s
pc-job-frsfr   0/1     Completed   0          102s
pc-job-rcrfs   0/1     Completed   0          71s
pc-job-t7bwz   0/1     Completed   0          102s
pc-job-xlnv2   0/1     Completed   0          71s
[root@k8s-master ~]#
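The "3 at a time, 6 in total" pattern above can be sketched as waves of pod launches. This assumes every pod succeeds and all pods in a wave take the same time, as the fixed-length counter script does here. In general, the Job controller starts a new pod as soon as a running one finishes, so real launches need not line up in clean waves.

```python
from typing import List


def job_waves(completions: int, parallelism: int) -> List[int]:
    """Number of pods started in each wave, assuming every pod succeeds and
    all pods in a wave finish at the same time."""
    waves, started = [], 0
    while started < completions:
        batch = min(parallelism, completions - started)
        waves.append(batch)
        started += batch
    return waves


print(job_waves(6, 3))  # [3, 3]: two waves of 3 pods, as in the run above
print(job_waves(5, 2))  # [2, 2, 1]
```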

# Delete the job
[root@k8s-master manifest]# kubectl delete -f pc-job.yaml
job.batch "pc-job" deleted
[root@k8s-master manifest]#

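VII. CronJob (CJ)

- A CronJob controller manages Job resources and, through them, pod resources. A task defined by a Job runs as soon as the Job is created, whereas a CronJob controls when and how often its jobs run, much like periodic scheduled tasks in Linux.

- A CronJob can run job tasks (repeatedly) at specific points in time.

- A Job manages pods; a CronJob manages Jobs.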

1. CronJob resource manifest

apiVersion: batch/v1 # API version (batch/v1beta1 on clusters older than v1.21; it was removed in v1.25)
kind: CronJob # resource type
metadata: # metadata
  name: # CronJob name
  namespace: # namespace it belongs to
  labels: # labels
    controller: cronjob
spec: # detailed description
  schedule: # cron-format schedule that controls when the task runs
  concurrencyPolicy: # concurrency policy: whether and how to run the next job if the previous run has not finished yet
  failedJobsHistoryLimit: # number of failed job runs to keep in history; default 1
  successfulJobsHistoryLimit: # number of successful job runs to keep in history; default 3
  startingDeadlineSeconds: # deadline in seconds for starting a job that missed its scheduled time
  jobTemplate: # job template the cronjob uses to generate Job objects; what follows is simply a job definition
    metadata:
    spec:
      completions: 1
      parallelism: 1
      activeDeadlineSeconds: 30
      backoffLimit: 6
      manualSelector: true
      selector:
        matchLabels:
          app: counter-pod
        matchExpressions: # expression matching rules
          - {key: app, operator: In, values: [counter-pod]}
      template:
        metadata:
          labels:
            app: counter-pod
        spec:
          restartPolicy: Never
          containers:
          - name: counter
            image: busybox:1.30
            command: ["/bin/sh","-c","for i in 9 8 7 6 5 4 3 2 1; do echo $i;sleep 20;done"]

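Several options need particular explanation:

schedule: a cron expression that specifies when the task runs

*/1 * * * *

<minute> <hour> <day of month> <month> <day of week>

minute: values 0 to 59

hour: values 0 to 23

day of month: values 1 to 31

month: values 1 to 12

day of week: values 0 to 6, where 0 means Sunday

Multiple values are separated by commas; ranges are given with a hyphen; * is a wildcard; / means "every ..." (for example, */1 in the minute field means every minute)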
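A small matcher shows how the five fields above are read. It supports `*`, steps (`*/15`, `5/15`), ranges (`1-5`), and lists (`0,30`). It is a simplification: all five fields are ANDed, whereas standard cron ORs day-of-month and day-of-week when both are restricted. Macros such as `@hourly` are not handled.

```python
from datetime import datetime
from typing import Set

# (min, max) for: minute, hour, day of month, month, day of week
FIELD_RANGES = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]


def expand_field(field: str, low: int, high: int) -> Set[int]:
    """Expand one cron field ("*", "*/15", "1-5", "0,30", "5") into its values."""
    values: Set[int] = set()
    for part in field.split(","):
        base, _, step = part.partition("/")
        step_size = int(step) if step else 1
        if base == "*":
            start, end = low, high
        elif "-" in base:
            start, end = (int(x) for x in base.split("-"))
        else:
            start = int(base)
            end = high if step else start  # "5/15" means 5, 20, 35, 50
        values.update(range(start, end + 1, step_size))
    return values


def cron_matches(expression: str, when: datetime) -> bool:
    """True if `when` (minute precision) matches the 5-field cron expression.

    Simplification: all five fields are ANDed; standard cron instead ORs
    day-of-month and day-of-week when both are restricted.
    """
    fields = expression.split()
    if len(fields) != 5:
        raise ValueError("expected 5 fields")
    cron_weekday = (when.weekday() + 1) % 7  # Python: Monday=0; cron: Sunday=0
    actual = [when.minute, when.hour, when.day, when.month, cron_weekday]
    return all(value in expand_field(f, low, high)
               for f, (low, high), value in zip(fields, FIELD_RANGES, actual))


print(cron_matches("*/1 * * * *", datetime(2022, 9, 19, 10, 7)))   # True: every minute
print(cron_matches("*/15 * * * *", datetime(2022, 9, 19, 10, 7)))  # False
print(cron_matches("0 9 * * 1-5", datetime(2022, 9, 19, 9, 0)))    # True: a Monday
```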

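concurrencyPolicy:

Allow: allow Jobs to run concurrently (default)

Forbid: forbid concurrent runs; if the previous run has not finished yet, the next run is skipped, so a new task runs only after the previous one has completed

Replace: replace the currently running job with a new one; if the previous run has not finished, it is cancelled and the new task runs instead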
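The three policies differ only in what happens when a scheduled time fires while an earlier Job is still running. This sketch models that decision with hypothetical job names. It ignores timing details such as startingDeadlineSeconds.

```python
from typing import List


def on_schedule(policy: str, running_jobs: List[str], new_job: str) -> List[str]:
    """Jobs left running after a scheduled time fires under a concurrencyPolicy."""
    if policy == "Allow":
        return running_jobs + [new_job]  # old and new jobs run side by side
    if policy == "Forbid":
        # Skip this run while an earlier job is still running.
        return running_jobs if running_jobs else [new_job]
    if policy == "Replace":
        return [new_job]  # running jobs are cancelled and replaced by the new one
    raise ValueError(f"unknown concurrencyPolicy: {policy}")


print(on_schedule("Allow", ["job-1"], "job-2"))    # ['job-1', 'job-2']
print(on_schedule("Forbid", ["job-1"], "job-2"))   # ['job-1']
print(on_schedule("Replace", ["job-1"], "job-2"))  # ['job-2']
```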

2. Create the File

- Create pc-cronjob.yaml

[root@k8s-master manifest]# cat pc-cronjob.yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: pc-cronjob
  namespace: dev
  labels:
    controller: cronjob
spec:
  schedule: "*/1 * * * *"
  jobTemplate:
    metadata:
    spec:
      template:
        spec:
          restartPolicy: Never
          containers:
          - name: counter
            image: busybox:1.30
            command: ["/bin/sh","-c","for i in 9 8 7 6 5 4 3 2 1; do echo $i;sleep 3;done"]
[root@k8s-master manifest]#

- Create the cronjob

# Create the cronjob
[root@k8s-master manifest]# kubectl apply -f pc-cronjob.yaml
cronjob.batch/pc-cronjob created
[root@k8s-master manifest]#
# View the cronjob
[root@k8s-master manifest]# kubectl get -f pc-cronjob.yaml
NAME         SCHEDULE      SUSPEND   ACTIVE   LAST SCHEDULE   AGE
pc-cronjob   */1 * * * *   False     0        <none>          23s
[root@k8s-master manifest]#
# View the pod created by the first job
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                        READY   STATUS    RESTARTS   AGE
pc-cronjob-27719561-rddql   1/1     Running   0          27s
[root@k8s-master manifest]#
# View the pods: a new job (and pod) is started every minute
[root@k8s-master manifest]# kubectl get pods -n dev
NAME                        READY   STATUS      RESTARTS   AGE
pc-cronjob-27719561-rddql   0/1     Completed   0          2m6s
pc-cronjob-27719562-zxx9m   0/1     Completed   0          66s
pc-cronjob-27719563-hwqdh   1/1     Running     0          6s
[root@k8s-master manifest]#
# Delete the cronjob
[root@k8s-master manifest]# kubectl delete -f pc-cronjob.yaml
cronjob.batch "pc-cronjob" deleted
[root@k8s-master manifest]#