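MindSpore Ascend Memory Management

When debugging a network, we often run into OutOfMemory errors. Based on the MindSpore r1.5 branch code, this article describes how MindSpore manages memory on Ascend devices.

Memory management overview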

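On Ascend, memory is divided into static memory and dynamic memory:

- Static memory:

  - Parameter - the weights of the network

  - Value Node - the constants in the network

  - Output - the output of the network

- Dynamic memory:

  - Output Tensor - the output tensors of the operators in the network

  - Workspace Tensor - temporary buffers that some operators use during computation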

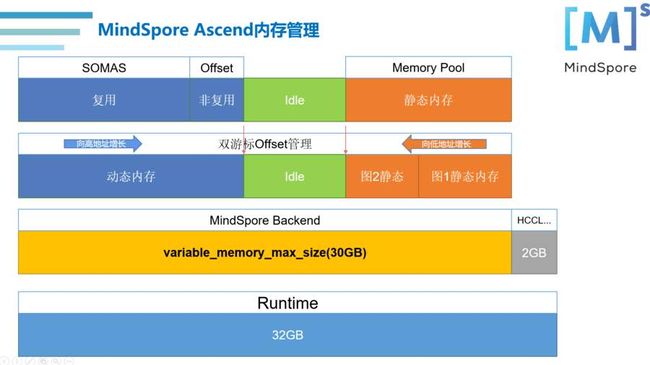

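MindSpore manages Ascend device memory in four layers:

Layer 1: Runtime

The Runtime directly manages the 32 GB of on-board memory.

Layer 2: Context

If the network script uses Context to configure how much memory the MindSpore backend may use, MindSpore requests that amount from the Runtime layer beneath it. If the user sets nothing, MindSpore requests 30 GB by default and leaves 2 GB for HCCL and other modules in the system.

The following code sets the MindSpore backend memory size to 25 GB:

context.set_context(variable_memory_max_size='25GB')

Layer 3: Dual cursors

To adjust the sizes of dynamic and static memory at run time, MindSpore keeps one cursor for dynamic memory and one for static memory. Memory is exhausted when the two cursors meet: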

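- Dynamic memory cursor

  Dynamic memory addresses grow from low addresses to high addresses. In GRAPH_MODE, the offset of every computational graph starts from 0.

- Static memory cursor

  Static memory addresses grow from high addresses to low addresses. In GRAPH_MODE, the static memory of all computational graphs exists at the same time.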
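The dual-cursor idea can be illustrated with a toy model. This is a minimal sketch, not MindSpore code: the class and method names are hypothetical, and it assumes that because every graph starts at offset 0, the dynamic cursor only needs to track the largest graph.

```python
class DualCursorMemory:
    """Toy model of one memory region shared by two cursors (hypothetical names)."""

    def __init__(self, total_size):
        self.total_size = total_size
        self.dynamic_offset = 0           # grows upward from the low address
        self.static_offset = total_size   # grows downward from the high address

    def free_size(self):
        # Bytes left between the two cursors.
        return self.static_offset - self.dynamic_offset

    def malloc_static(self, size):
        """Reserve `size` bytes below the static cursor and return the start offset."""
        if size > self.free_size():
            raise MemoryError("cursors would cross: static request does not fit")
        self.static_offset -= size
        return self.static_offset

    def malloc_dynamic(self, size):
        """Reserve `size` bytes for one graph's dynamic memory, starting at offset 0."""
        if size > self.static_offset:
            raise MemoryError("cursors would cross: dynamic request does not fit")
        # Assumption: graphs overlap from offset 0, so keep the largest extent.
        self.dynamic_offset = max(self.dynamic_offset, size)
        return 0


mem = DualCursorMemory(total_size=100)
mem.malloc_static(30)    # static cursor moves from 100 down to 70
mem.malloc_dynamic(50)   # graph 1 uses offsets [0, 50)
mem.malloc_dynamic(60)   # graph 2 also starts at 0 and uses [0, 60)
print(mem.free_size())   # 10
```

In this model a request fails as soon as it would push one cursor past the other, which is the "cursors meet" condition described above.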

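Layer 4: Memory reuse

To use memory more efficiently, SOMAS and the Memory Pool are added on top of Layer 3:

- SOMAS (Safe Optimized Memory Allocation Solver)

  "Constraint programming - parallel topological memory optimization" (SOMAS): the parallel streams and the data dependencies of the computational graph are analyzed together to obtain the ancestor relationships between operators, from which global lifetime mutual-exclusion constraints on tensors are built. Several heuristic algorithms then solve for the best static memory plan, reaching memory reuse close to the theoretical limit and thereby raising the training batch size and the training throughput (FPS).

  In GRAPH_MODE, the dynamic memory of a computational graph (the Output Tensors and Workspace Tensors of all operators) is allocated by SOMAS. Once SOMAS has computed the total size required, it requests dynamic memory of that size for the current graph from Layer 3 in a single call.

- Memory Pool

  The Memory Pool allocates and frees the memory used by tensors by analyzing the reference relationships between them, and assigns memory with the BestFit (best-fit) allocation algorithm.

  In GRAPH_MODE, the static memory of a computational graph (weights, constants and outputs) is allocated from the Memory Pool. The Memory Pool requests one unit-sized block from Layer 3 at a time and reuses it; when the pool has no free block left, it requests another unit-sized block from Layer 3.

  In PYNATIVE_MODE, all memory of the computational graph is allocated from the Memory Pool, which requests and reuses unit-sized blocks from Layer 3 in the same way.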
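A best-fit pool over unit-sized blocks can be sketched in a few lines. This is an illustration of the general technique only, not the MindSpore implementation: `BestFitPool`, `request_from_lower_layer` and the rule of rounding large requests up to whole units are assumptions made for the example, and merging of adjacent free chunks is left out.

```python
class BestFitPool:
    """Toy best-fit pool that grows in unit-sized blocks (hypothetical names)."""

    def __init__(self, unit_size, request_from_lower_layer):
        self.unit_size = unit_size
        # Callable taking a size and returning the base address of a new block.
        self.request_from_lower_layer = request_from_lower_layer
        self.free_chunks = []  # list of (address, size)

    def alloc(self, size):
        # Best fit: consider only chunks that are large enough.
        candidates = [c for c in self.free_chunks if c[1] >= size]
        if not candidates:
            # Nothing fits: fetch a new block sized in whole units.
            units = -(-size // self.unit_size)  # ceiling division
            block_size = units * self.unit_size
            base = self.request_from_lower_layer(block_size)
            self.free_chunks.append((base, block_size))
            candidates = [(base, block_size)]
        # Pick the smallest chunk that still fits.
        addr, chunk_size = min(candidates, key=lambda c: c[1])
        self.free_chunks.remove((addr, chunk_size))
        if chunk_size > size:
            # Return the unused tail of the chunk to the free list.
            self.free_chunks.append((addr + size, chunk_size - size))
        return addr

    def free(self, addr, size):
        # A real pool would also merge adjacent free chunks; omitted here.
        self.free_chunks.append((addr, size))


next_base = [0]

def lower_layer(size):
    """Stand-in for Layer 3: hands out consecutive address ranges."""
    base = next_base[0]
    next_base[0] += size
    return base

pool = BestFitPool(unit_size=1024, request_from_lower_layer=lower_layer)
first = pool.alloc(300)    # triggers one 1024-byte block request
second = pool.alloc(200)   # served from the tail of the same block
pool.free(first, 300)
third = pool.alloc(250)    # best fit is the freed 300-byte chunk
print(first, second, third, next_base[0])
```

Only one block is requested from the lower layer in this run, because the freed chunk is reused for the third allocation.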

Computing model memory size

In GRAPH_MODE, the memory used by a model is calculated as its static memory plus its dynamic memory.

Log analysis

Layer 2: Context

[INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:49:39.370.492 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:89] GetDeviceMemSizeFromContext] context variable_memory_max_size:25 * 1024 * 1024 * 1024

[INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:49:39.370.504 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:96] GetDeviceMemSizeFromContext] variable_memory_max_size(GB):25

[INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:49:39.436.350 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:71] MallocDeviceMemory] Call rtMalloc to allocate device memory Success, size : 26843545600 bytes , address : 0x108800000000

- The user sets the MindSpore backend memory to 25 GB through context.

- MindSpore requests 25 GB from the Layer 1 Runtime; the start address is 0x108800000000.
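The byte count in the rtMalloc line can be reproduced from the setting. The helper below is a hypothetical convenience function, not part of MindSpore; it assumes the value always has the form `'<integer>GB'` and that 1 GB means 1024**3 bytes, as the `25 * 1024 * 1024 * 1024` in the log suggests.

```python
def memory_setting_to_bytes(setting):
    """Convert a string such as '25GB' to bytes, using 1 GB = 1024**3 bytes."""
    if not setting.endswith("GB"):
        raise ValueError("expected a value like '25GB'")
    return int(setting[:-2]) * 1024 ** 3


print(memory_setting_to_bytes("25GB"))  # 26843545600, the size in the rtMalloc log line
print(memory_setting_to_bytes("30GB"))  # 32212254720, the default request
```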

Layer 3: Dual cursors

- Static memory:

  [INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:51:01.685.566 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:143] MallocStaticMem] Malloc Memory for Static: size[147968], Memory statistics: total[26843545600] dynamic [0] static [8388608], Pool statistics: pool total size [8388608] used [220160] communication_mem:0

  This is a request for 147968 bytes of static memory. The memory allocation state at this point is:

  - Total memory size: 26843545600 bytes
  - Dynamic memory size: 0 bytes
  - Static memory size: 8388608 bytes

  The state of the memory pool is:

  - Pool total size: 8388608 bytes
  - Pool used size: 220160 bytes

- Dynamic memory:

  [INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:51:01.950.824 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:177] MallocDynamicMem] Malloc Memory for Dynamic: size[1513511424], Memory statistics: total[26843545600] dynamic[0] static[209715200] communication_mem: 0

  This is a request for 1513511424 bytes of dynamic memory. The memory allocation state at this point is:

  - Total memory size: 26843545600 bytes
  - Dynamic memory size: 0 bytes
  - Static memory size: 209715200 bytes

The sum of the static memory size and the dynamic memory size in the last memory request log is the total memory occupied by the model.
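Reading these fields by hand gets tedious on long logs. The sketch below extracts them with a regular expression written against the two sample lines above; the function names are hypothetical, and the pattern may need adjusting for other MindSpore versions. Note that in the sample the dynamic counter is still 0 on the line that requests 1513511424 bytes, so it is worth looking at the `size` field of that line as well.

```python
import re

STATS = re.compile(
    r"Malloc Memory for (?P<kind>Static|Dynamic): size\[(?P<size>\d+)\], "
    r"Memory statistics: total\[(?P<total>\d+)\] "
    r"dynamic\s*\[(?P<dynamic>\d+)\] static\s*\[(?P<static>\d+)\]"
)


def parse_malloc_line(line):
    """Return the fields of a MallocStaticMem/MallocDynamicMem log line, or None."""
    match = STATS.search(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Keep 'kind' as text and convert every size field to int.
    return {k: (v if k == "kind" else int(v)) for k, v in fields.items()}


def model_memory(lines):
    """Static plus dynamic size reported by the last matching line, or None."""
    parsed = [p for p in map(parse_malloc_line, lines) if p]
    if not parsed:
        return None
    last = parsed[-1]
    return last["static"] + last["dynamic"]


sample = (
    "MallocDynamicMem] Malloc Memory for Dynamic: size[1513511424], "
    "Memory statistics: total[26843545600] dynamic[0] static[209715200] "
    "communication_mem: 0"
)
print(parse_malloc_line(sample))
```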

Layer 4: Memory reuse

- SOMAS:

  [INFO] PRE_ACT(60236,7fe82e1cb700,python):2021-11-09-10:51:01.950.806 [mindspore/ccsrc/backend/optimizer/somas/somas.cc:1732] GenGraphStatisticInfo] Total Dynamic Size (Upper Bound): 3512785408 Theoretical Optimal Size (Lower Bound): 1509126144 Total Workspace Size: 0 Total Communication Input Tensor Size: 0 Total Communication Output Tensor Size: 0 Total LifeLong All Tensor Size: 19268608 Total LifeLong Start Tensor Size: 0 Total LifeLong End Tensor Size: 0 Reused Size(Allocate Size): 1513510912

  - Total Dynamic Size (Upper Bound): the total memory size of the Output Tensors and Workspace Tensors of all operators in the network
  - Theoretical Optimal Size (Lower Bound): the theoretical optimum if the outputs of all operators in the network were fully reused
  - Reused Size(Allocate Size): the memory the current network needs after reuse by the SOMAS algorithm

  [INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:51:01.950.815 [mindspore/ccsrc/runtime/device/memory_manager.cc:48] MallocSomasDynamicMem] Graph 1: TotalSomasReuseDynamicSize [1513510912]

  [INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:51:01.950.824 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:177] MallocDynamicMem] Malloc Memory for Dynamic: size[1513511424], Memory statistics: total[26843545600] dynamic[0] static[209715200] communication_mem: 0

  [INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:51:01.950.831 [mindspore/ccsrc/runtime/device/memory_manager.cc:51] MallocSomasDynamicMem] Somas Reuse Memory Base Address [0x108800000000], End Address [0x10885a365800]

  - After SOMAS reuse, the graph needs 1513510912 bytes (TotalSomasReuseDynamicSize).
  - 1513511424 bytes of dynamic memory are requested from Layer 3, 512 bytes more than the SOMAS size.
  - The memory managed by SOMAS starts at 0x108800000000 and ends at 0x10885a365800.

- Memory Pool

  [INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:51:01.692.943 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_pool.cc:80] AllocDeviceMem] Malloc Memory: Pool, total[26843545600] (dynamic[0] memory pool[142606336]) malloc [16777216]

  This is a request for 16777216 bytes of memory for the pool. The backend has 26843545600 bytes in total, the dynamic memory size is 0, and the memory pool size is 142606336 bytes.
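The SOMAS figures in the logs above can be cross-checked with simple arithmetic. The numbers below are copied from the sample log lines; the variable names are chosen only for this illustration.

```python
upper_bound = 3512785408   # Total Dynamic Size (Upper Bound)
lower_bound = 1509126144   # Theoretical Optimal Size (Lower Bound)
reused_size = 1513510912   # Reused Size(Allocate Size)

base_address = 0x108800000000   # Somas Reuse Memory Base Address
end_address = 0x10885a365800    # End Address

# The address range handed to SOMAS spans exactly the reused size.
assert end_address - base_address == reused_size

saved_fraction = 1 - reused_size / upper_bound   # share of memory saved by reuse
overhead = reused_size - lower_bound             # bytes above the theoretical optimum
print(f"saved {saved_fraction:.1%} of the upper bound")
print(f"{overhead} bytes above the lower bound")
```

For this network the plan found by SOMAS sits only a few megabytes above the theoretical lower bound, which is the kind of check that helps decide whether poor reuse is a plausible cause of an out-of-memory error.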

Static memory usage

[INFO] DEVICE(60236,7fe82e1cb700,python):2021-11-09-10:51:01.692.840 [mindspore/ccsrc/runtime/device/kernel_runtime.cc:621] AssignStaticMemoryInput] Assign Static Memory for Input node, size:2097152 node:moments.layer4.0.conv1.weight index: 0

Static memory of 2097152 bytes is assigned to output 0 of the graph node moments.layer4.0.conv1.weight.
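To find which nodes take the most static memory, the AssignStaticMemoryInput lines can be collected and sorted. This is a minimal sketch: the function name is hypothetical and the regular expression is written against the sample line above, so it may need adjusting for other versions.

```python
import re

ASSIGN = re.compile(
    r"Assign Static Memory for Input node, size:(\d+) node:(\S+) index: (\d+)"
)


def largest_static_inputs(lines, top=5):
    """Return the `top` largest (size, node, index) entries found in the log lines."""
    entries = []
    for line in lines:
        match = ASSIGN.search(line)
        if match:
            size, node, index = match.groups()
            entries.append((int(size), node, int(index)))
    # Tuples sort by size first, so reverse order puts the largest first.
    return sorted(entries, reverse=True)[:top]


log_lines = [
    "AssignStaticMemoryInput] Assign Static Memory for Input node, "
    "size:2097152 node:moments.layer4.0.conv1.weight index: 0",
]
for size, node, index in largest_static_inputs(log_lines):
    print(f"{size:>12} bytes  {node} (output {index})")
```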

Common problems

Device occupied: Malloc device memory failed

[EXCEPTION] DEVICE(98186,7f40043f8700,python):2021-11-09-11:39:49.758.665 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:64] MallocDeviceMemory] Malloc device memory failed, size[32212254720], ret[207001], Device 2 may be other processes occupying this card, check as: ps -ef|grep python

- Analysis:

  MindSpore failed to obtain 30 GB of memory from the Layer 1 Runtime.

  At initialization MindSpore requests 30 GB directly from the Ascend Runtime and holds it from then on, so a later process that also requests 30 GB from the Runtime fails.

- Solution:

  Use ps -ef|grep python to find the running process, then either wait for it to finish and release the device, or kill it to release the device.

Static memory allocation failure: Memory not enough

[INFO] DEVICE(95511,7fc881aed700,python):2021-11-09-15:39:06.632.247 [mindspore/ccsrc/runtime/device/kernel_runtime.cc:621] AssignStaticMemoryInput] Assign Static Memory for Input node, size:16777216 node:lamb_v.bert.bert.bert_encoder.layers.11.intermediate.weight index: 0

[INFO] DEVICE(95511,7fc881aed700,python):2021-11-09-15:39:06.632.254 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:143] MallocStaticMem] Malloc Memory for Static: size[16777728], Memory statistics: total[4294967296] dynamic [0] static [4286578688], Pool statistics: pool total size [4286578688] used [3272974848] communication_mem:0

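[EXCEPTION] DEVICE(95511,7fc881aed700,python):2021-11-09-15:39:06.632.266 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_pool.cc:53] CalMemBlockAllocSize] Memory not enough: current free memory size[8388608] is smaller than required size[16777728], dynamic offset [0] memory pool offset[4286578688])

- Analysis:

  The static memory request failed. The log shows that the backend has 4294967296 bytes in total, 0 bytes of dynamic memory are in use, and the memory pool holds 4286578688 bytes of static memory. The current request is for 16777728 bytes of static memory. Used memory plus the requested memory exceeds the total, so Memory not enough is reported.

- Solution:

  - Use context to increase the memory available to the MindSpore backend; the maximum setting is 31 GB.

    context.set_context(variable_memory_max_size='31GB')

  - Check whether an abnormally large Parameter or Value Node has been introduced. For example, a single tensor with shape [640,1024,80,81] and data type float32 takes more than 15 GB (640*1024*80*81*4 bytes). Adding two tensors of about this size takes more than 3*15 GB, which easily exhausts memory, so node parameters of this kind need checking.

  - Use the visualization tool or the static memory usage logs to analyze memory usage and find abnormal nodes.

  - Post the problem on the official forum, where dedicated technical staff will help resolve it.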
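Both pieces of arithmetic in this case can be checked in a few lines. This is a small sketch with hypothetical helper names; the figures come from the exception message and from the shape example above.

```python
def free_memory(total, dynamic_offset, pool_offset):
    """Bytes left between the dynamic cursor and the memory pool."""
    return total - dynamic_offset - pool_offset


def tensor_bytes(shape, itemsize):
    """Size of a dense tensor: number of elements times bytes per element."""
    count = 1
    for dim in shape:
        count *= dim
    return count * itemsize


free = free_memory(total=4294967296, dynamic_offset=0, pool_offset=4286578688)
print(free, free < 16777728)   # 8388608 True, matching the exception message

big = tensor_bytes([640, 1024, 80, 81], itemsize=4)   # float32 is 4 bytes
print(big, big / 1024 ** 3)    # about 15.8 GB for a single tensor
```

Running `tensor_bytes` over the shapes of suspicious Parameters is a quick way to spot a single node that cannot fit no matter how the limit is set.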

Dynamic memory allocation failure: Out of memory!!!

[INFO] DEVICE(61069,7f89c77cd700,python):2021-11-09-14:49:54.398.228 [mindspore/ccsrc/runtime/device/memory_manager.cc:48] MallocSomasDynamicMem] Graph 1: TotalSomasReuseDynamicSize [28929622528]

[INFO] DEVICE(61069,7f89c77cd700,python):2021-11-09-14:49:54.398.238 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:177] MallocDynamicMem] Malloc Memory for Dynamic: size[28929623040], Memory statistics: total[32212254720] dynamic[0] static[5142216704] communication_mem: 0

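[EXCEPTION] DEVICE(61069,7f89c77cd700,python):2021-11-09-14:49:54.398.250 [mindspore/ccsrc/runtime/device/ascend/ascend_memory_manager.cc:183] MallocDynamicMem] Out of memory!!! total[32212254720] (dynamic[0] memory pool[5142216704]) malloc [28929623040] failed! Please try to reduce 'batch_size' or check whether exists extra large shape. More details can be found in mindspore's FAQ

- Analysis:

  The dynamic memory request failed. The log shows that the backend has 32212254720 bytes in total, 0 bytes of dynamic memory are in use, and the memory pool holds 5142216704 bytes of static memory. The current request is for 28929623040 bytes. The dynamic memory demand is too large: used memory plus the requested memory exceeds the total, so Out of memory!!! is reported.

- Solution:

  Excessive dynamic memory usage usually has one of the following causes:

  - The batch size is too large, so more data is processed and more memory is used.

  - Communication operators take a lot of memory, which lowers the overall memory reuse rate.

  - The memory reuse algorithm is inefficient for the current network.

  - The memory reuse module is malfunctioning.

  When this happens, work through the following steps in order:

  - Use context to increase the memory available to the MindSpore backend; the maximum setting is 31 GB.

    context.set_context(variable_memory_max_size='31GB')

  - Reduce the batch size to lower the network's memory usage.

  - Use the visualization tool to analyze memory usage and adjust the network structure to shorten tensor lifetimes: have each tensor consumed as soon as possible after it is produced, so that its memory can be released to later tensors.

  - Post the problem on the official forum, where dedicated technical staff will help resolve it.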
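Before choosing among these steps, it helps to know how far the request overshoots. The sketch below parses the Out of memory!!! line and reports the shortfall, following the analysis above (used memory plus the request, minus the total). The function name is hypothetical and the pattern is written against the sample line only.

```python
import re

OOM = re.compile(
    r"Out of memory!!! total\[(\d+)\] \(dynamic\[(\d+)\] "
    r"memory pool\[(\d+)\]\) malloc \[(\d+)\] failed"
)


def oom_shortfall(line):
    """Return how many bytes the failed request exceeds the free memory by, or None."""
    match = OOM.search(line)
    if match is None:
        return None
    total, dynamic, pool, request = map(int, match.groups())
    return request + dynamic + pool - total


message = (
    "Out of memory!!! total[32212254720] (dynamic[0] memory pool[5142216704]) "
    "malloc [28929623040] failed!"
)
missing = oom_shortfall(message)
print(missing, round(missing / 1024 ** 3, 2))   # shortfall in bytes and in GB
```

For the sample log the shortfall is about 1.7 GB, more than the 1 GB gained by raising the limit from 30 GB to 31 GB, so in that particular case the later steps would still be needed.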

HCCL memory allocation failure: Device malloc failed

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.236.063 [ascend][curpid: 132896, 133393][drv][devmm][devmm_ioctl_advise 166] Ioctl device error! ptr=0x1080d0000000, count=209747968, advise=0x9c, device=7.

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.238.839 [ascend][curpid: 132896, 133393][drv][devmm][devmm_ioctl_alloc_and_advise 205] advise mem error! ret=6

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.238.849 [ascend][curpid: 132896, 133393][drv][devmm][devmm_virt_heap_alloc_device 421] devmm_ioctl_alloc error. ptr=0x1080d0000000.

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.238.857 [ascend][curpid: 132896, 133393][drv][devmm][devmm_virt_heap_alloc 394] alloc phy addr failed.

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.238.867 [ascend][curpid: 132896, 133393][drv][devmm][devmm_alloc_from_normal_heap 896] heap_alloc_managed out of memory, pp=0x1, bytesize=209747968.

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.238.877 [ascend][curpid: 132896, 133393][drv][devmm][devmm_alloc_managed 134] heap_alloc_managed out of memory, pp=0x1, bytesize=209747968.

[ERROR] RUNTIME(132896,python3.7):2021-10-30-10:22:16.238.890 [npu_driver.cc:691]133393 DevMemAllocHugePageManaged:[driver interface] halMemAlloc failed: device_id=7, size=209747968, type=16, env_type=3, drvRetCode=6!

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.370.700 [ascend][curpid: 132896, 133393][drv][devmm][devmm_ioctl_advise 166] Ioctl device error! ptr=0x108050000000, count=209747968, advise=0x98, device=7.

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.863.258 [ascend][curpid: 132896, 133393][drv][devmm][devmm_ioctl_alloc_and_advise 205] advise mem error! ret=6

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.863.277 [ascend][curpid: 132896, 133393][drv][devmm][devmm_virt_heap_alloc_device 421] devmm_ioctl_alloc error. ptr=0x108050000000.

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.863.287 [ascend][curpid: 132896, 133393][drv][devmm][devmm_virt_heap_alloc 394] alloc phy addr failed.

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.863.301 [ascend][curpid: 132896, 133393][drv][devmm][devmm_alloc_from_normal_heap 896] heap_alloc_managed out of memory, pp=0x1, bytesize=209747968.

[ERROR] DRV(132896,python3.7):2021-10-30-10:22:16.863.311 [ascend][curpid: 132896, 133393][drv][devmm][devmm_alloc_managed 134] heap_alloc_managed out of memory, pp=0x1, bytesize=209747968.

[ERROR] RUNTIME(132896,python3.7):2021-10-30-10:22:16.863.324 [npu_driver.cc:726]133393 DevMemAllocManaged:[driver interface] halMemAlloc failed: size=209747968, deviceId=7, type=16, env_type=3, drvRetCode=6!

[ERROR] RUNTIME(132896,python3.7):2021-10-30-10:22:16.863.358 [npu_driver.cc:802]133393 DevMemAllocOnline:DevMemAlloc huge page failed: deviceId=7, type=16, size=209747968, retCode=117571606!

[ERROR] RUNTIME(132896,python3.7):2021-10-30-10:22:16.863.376 [logger.cc:349]133393 DevMalloc:Device malloc failed, size=209747968, type=16.

[ERROR] RUNTIME(132896,python3.7):2021-10-30-10:22:16.863.405 [api_c.cc:801]133393 rtMalloc:ErrCode=207001, desc=[driver error:out of memory], InnerCode=0x7020016

[ERROR] RUNTIME(132896,python3.7):2021-10-30-10:22:16.863.418 [error_message_manage.cc:41]133393 ReportFuncErrorReason:rtMalloc execute failed, reason=[driver error:out of memory]

[ERROR] HCCL(132896,python3.7):2021-10-30-10:22:16.863.488 [adapter.cc:182][hccl-132896-1-1635560488-hccl_world_group][1][Malloc][Mem]errNo[0x000000000500000f] rtMalloc failed, return[207001], para: devPtrAddr[(nil)], size[209747968].

[ERROR] HCCL(132896,python3.7):2021-10-30-10:22:16.863.499 [mem_device.cc:45][hccl-132896-1-1635560488-hccl_world_group][1][DeviceMem][Alloc]rt_malloc error, ret[15], size[209747968]

[ERROR] HCCL(132896,python3.7):2021-10-30-10:22:16.863.508 [op_base.cc:971][hccl-132896-1-1635560488-hccl_world_group][1][Create][OpBasedResources]In create workspace mem, malloc failed.

[ERROR] HCCL(132896,python3.7):2021-10-30-10:22:16.863.517 [op_base.cc:408][hccl-132896-1-1635560488-hccl_world_group][1]call trace: ret -> 3

[ERROR] KERNEL(132896,ffff25ffb160,python3.7):2021-10-30-10:22:16.863.551 [mindspore/ccsrc/backend/kernel_compiler/hccl/hcom_all_reduce_scatter.cc:39] Launch] HcclReduceScatter faled, ret:3

[ERROR] DEVICE(132896,ffff25ffb160,python3.7):2021-10-30-10:22:16.863.576 [mindspore/ccsrc/runtime/device/kernel_runtime.cc:1521] LaunchKernelMod] Launch kernel failed.

[ERROR] DEVICE(132896,ffff25ffb160,python3.7):2021-10-30-10:22:16.863.603 [mindspore/ccsrc/runtime/device/kernel_runtime.cc:1563] LaunchKernels] LaunchKernelMod failed!

[CRITICAL] SESSION(132896,ffff25ffb160,python3.7):2021-10-30-10:22:16.863.640 [mindspore/ccsrc/backend/session/ascend_session.cc:1359] Execute] run task error!

[INFO] DEBUG(132896,ffff25ffb160,python3.7):2021-10-30-10:22:16.863.672 [mindspore/ccsrc/debug/trace.cc:121] TraceGraphEval] Length of analysis graph stack is empty.

[INFO] DEBUG(132896,ffff25ffb160,python3.7):2021-10-30-10:22:16.863.685 [mindspore/ccsrc/debug/trace.cc:385] GetEvalStackInfo] Get graph analysis information begin

[INFO] DEBUG(132896,ffff25ffb160,python3.7):2021-10-30-10:22:16.863.694 [mindspore/ccsrc/debug/trace.cc:388] GetEvalStackInfo] Length of analysis information stack is empty.

[ERROR] SESSION(132896,ffff25ffb160,python3.7):2021-10-30-10:22:16.863.883 [mindspore/ccsrc/backend/session/ascend_session.cc:1758] ReportErrorMessage] Ascend error occurred, error message:

EI0007: Failed to allocate resource [DeviceMemory], with info [size:209747968].

[driver interface] halMemAlloc failed: device_id=7, size=209747968, type=16, env_type=3, drvRetCode=6![FUNC:DevMemAllocHugePageManaged][FILE:npu_driver.cc][LINE:691]

[driver interface] halMemAlloc failed: size=209747968, deviceId=7, type=16, env_type=3, drvRetCode=6![FUNC:DevMemAllocManaged][FILE:npu_driver.cc][LINE:726]

DevMemAlloc huge page failed: deviceId=7, type=16, size=209747968, retCode=117571606![FUNC:DevMemAllocOnline][FILE:npu_driver.cc][LINE:802]

Device malloc failed, size=209747968, type=16.[FUNC:DevMalloc][FILE:logger.cc][LINE:349]

rtMalloc execute failed, reason=[driver error:out of memory][FUNC:ReportFuncErrorReason][FILE:error_message_manage.cc][LINE:41]

[INFO] SESSION(132896,ffff25ffb160,python3.7):2021-10-30-10:22:16.863.908 [mindspore/ccsrc/backend/session/executor.cc:146] Run] End run graph 0

- Analysis:

  As described earlier, when the user does not use Context to set the memory size of the MindSpore backend, MindSpore requests 30 GB and leaves 2 GB for HCCL and other components. The log shows that it is the HCCL memory request that failed here:

  [ERROR] HCCL(132896,python3.7):2021-10-30-10:22:16.863.488 [adapter.cc:182][hccl-132896-1-1635560488-hccl_world_group][1][Malloc][Mem]errNo[0x000000000500000f] rtMalloc failed, return[207001], para: devPtrAddr[(nil)], size[209747968].

  The 2 GB left for HCCL and other components is therefore not enough in this case; HCCL needs more memory.

- Solution:

  Use Context to reduce the memory used by the MindSpore backend, which increases the memory left for HCCL and other components:

  context.set_context(variable_memory_max_size='25GB')
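The effect of this setting on the memory left outside the MindSpore backend is plain subtraction. The sketch below assumes the 32 GB board described earlier; the function and constant names are hypothetical.

```python
GB = 1024 ** 3
BOARD_MEMORY = 32 * GB   # on-board memory managed by the Runtime


def reserved_for_other_modules(backend_gb):
    """Bytes left outside the MindSpore backend for a given variable_memory_max_size."""
    return BOARD_MEMORY - backend_gb * GB


for setting in (30, 25):
    left = reserved_for_other_modules(setting)
    print(f"variable_memory_max_size='{setting}GB' leaves {left // GB} GB for HCCL and others")
```

Lowering the setting from the default 30 GB to 25 GB raises the reserve from 2 GB to 7 GB; how much HCCL actually needs depends on the job, so the right value has to be found by trial.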

Visualization tool

MindInsight provides a visualization tool for analyzing device memory usage.

Official documentation: MindInsight memory usage analysis