K8S Deployment & DevOps

k8s+kubesphere+devops

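I. k8s cluster deployment

1. k8s quick start

1) Introduction

Kubernetes, abbreviated k8s, is an open-source system for automating the deployment, scaling, and management of containerized applications.

Official site (Chinese): https://kubernetes.io/zh/

Chinese community: https://www.kubernetes.org.cn/

Official documentation: https://kubernetes.io/zh/docs/home/

Community documentation: http://docs.kubernetes.org.cn/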

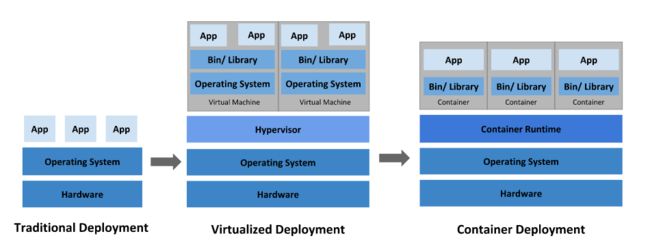

- The evolution of deployment approaches

https://kubernetes.io/zh/docs/concepts/overview/

2)、架构

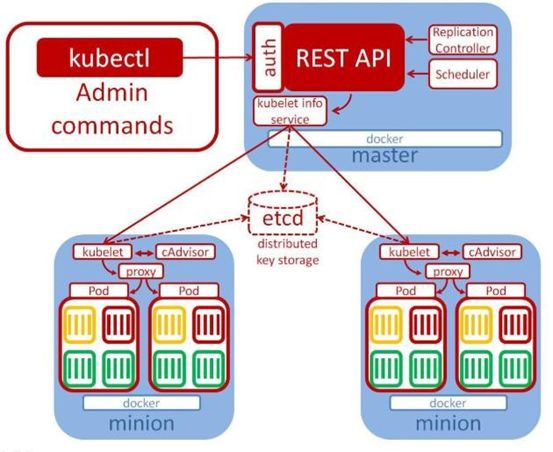

1、整体主从方式

一个服务器为主节点,其他的为node节点

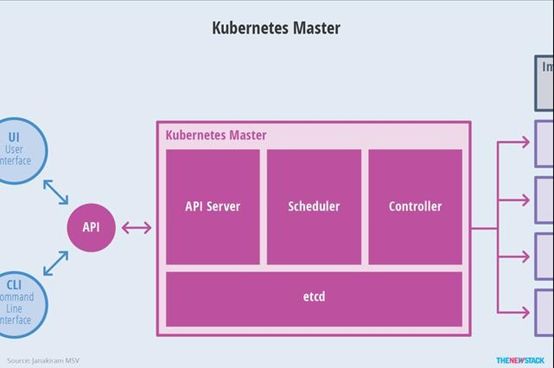

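2. Master node architecture

- kube-apiserver
  - Exposes the K8S API; it is the only entry point through which the outside world operates on resources
  - Provides authentication, authorization, access control, and API registration and discovery
- etcd
  - etcd is a consistent and highly available key-value store, used as the backing store for all Kubernetes cluster data.
  - The etcd database of a Kubernetes cluster usually needs a backup plan
- kube-scheduler
  - A master component that watches for newly created Pods with no assigned node and selects a node for them to run on.
  - All scheduling decisions for the k8s cluster are made on the master node
- kube-controller-manager
  - The master component that runs the controllers
  - These controllers include:
    - Node Controller: notices and responds when nodes go down.
    - Replication Controller: maintains the correct number of Pods for every replication controller object in the system.
    - Endpoints Controller: populates Endpoints objects (that is, joins Services and Pods).
    - Service Account & Token Controllers: create default accounts and API access tokens for new namespaces.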

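3. Node architecture

- kubelet
  - An agent that runs on every node in the cluster. It makes sure that containers are running in a Pod.
  - Manages the container lifecycle, as well as volumes (CSI) and networking (CNI)
- kube-proxy
  - Provides in-cluster service discovery and load balancing for Services
- Container Runtime
  - The container runtime is the software responsible for running containers.
  - Kubernetes supports several container runtimes: Docker, containerd, cri-o, rktlet, and any implementation of the Kubernetes CRI (Container Runtime Interface).
- fluentd
  - A daemon that helps provide cluster-level logging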

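3) Concepts

- Container: a container, for example one started by docker

- Pod:
  - k8s uses Pods to group a set of containers
  - All containers in a Pod share the same network.
  - A Pod is the smallest deployable unit in k8s

- Volume
  - Declares a directory that is accessible to the containers in a Pod
  - Can be mounted at a specified path in one or more containers of the Pod
  - Supports several storage backend abstractions (local storage, distributed storage, cloud storage, ...)

- Controllers: higher-level objects that deploy and manage Pods:
  - ReplicaSet: ensures the expected number of Pod replicas
  - Deployment: deployment of stateless applications
  - StatefulSet: deployment of stateful applications
  - DaemonSet: ensures every Node runs a copy of a given Pod
  - Job: one-off task
  - CronJob: scheduled task

- Deployment:
  - Defines the replica count, version, etc. of a set of Pods
  - Maintains the Pod count through a controller (failed Pods are recovered automatically)
  - Controls versions through the controller with a specified strategy (rolling update, rollback, etc.)

- Service
  - Defines the access policy for a set of Pods
  - Load-balances across Pods, providing a stable address for one or more Pods
  - Supports several types (ClusterIP, NodePort, LoadBalancer)

- Label: labels, used to query and filter resource objects

- Namespace: namespaces, for logical isolation
  - A logical isolation mechanism inside one cluster (authorization, resources)
  - Every resource belongs to a namespace
  - Resource names must be unique within a namespace
  - Different namespaces may reuse the same resource names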

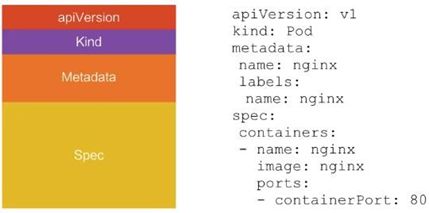
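Services and controllers find their Pods through labels. As a rough illustration of equality-based label selection (a simplified sketch, not how the API server implements it; the function name selector_matches is hypothetical), a selector matches an object when every key=value pair in the selector also appears among the object's labels:

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns 0 if every "key=value" pair in the selector
# (comma-separated) is present in the object's labels (also comma-separated).
# Only equality-based selectors are handled; set-based ones (In, NotIn) are not.
selector_matches() {
  local selector="$1" labels="$2" pair
  local IFS=','
  for pair in $selector; do
    # Wrap both sides in commas so "app=web" does not match "app=web2".
    case ",$labels," in
      *",$pair,"*) ;;
      *) return 1 ;;
    esac
  done
  return 0
}
```

For example, selector_matches "app=tomcat6" "app=tomcat6,pod-template-hash=abc" succeeds, which is why a Service whose selector is app=tomcat6 picks up every Pod of a Deployment labelled that way, whatever extra labels the Pods carry.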

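API:

We operate the whole cluster through the Kubernetes API.

Whether you use kubectl, a UI, or curl, every request ultimately reaches the API Server as an HTTP request with a JSON/YAML body, and the API Server then controls the k8s cluster. Every resource object in k8s can be defined or described in a YAML or JSON file.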

4) Quick hands-on

1. Install minikube

https://github.com/kubernetes/minikube/releases

Download minikube-windows-amd64.exe, rename it to minikube.exe, start VirtualBox, then open cmd and run:

minikube start --vm-driver=virtualbox --registry-mirror=https://registry.docker-cn.com

This takes about 20 minutes.

2. Try deploying and upgrading nginx

Submit an nginx Deployment:

kubectl apply -f https://k8s.io/examples/application/deployment.yaml

Upgrade the nginx Deployment:

kubectl apply -f https://k8s.io/examples/application/deployment-update.yaml

Scale the nginx Deployment:

kubectl apply -f https://k8s.io/examples/application/deployment-scale.yaml

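5) Walkthrough of the flow

1. kubectl submits a request to create an RC (Replication Controller); the request goes through the API Server and is written to etcd.

2. The Controller Manager, watching resource changes through the API Server, sees this RC event.

3. After analysis it finds that the cluster does not yet have a Pod instance for this RC,

4. so it creates a Pod object from the Pod template in the RC and writes it to etcd through the API Server.

5. The Scheduler notices this event, immediately runs its (fairly complex) scheduling process to pick a Node for the new Pod, and writes the result to etcd through the API Server.

6. The kubelet running on the target Node detects the "newborn" Pod through the API Server, starts it according to its definition, and looks after it for the rest of its life, until the Pod ends.

7. Next, we use kubectl to submit a request to create a Service that maps to this Pod.

8. The Controller Manager finds the associated Pod instances through their labels, generates the Service's Endpoints, and writes them to etcd through the API Server.

9. Finally, the kube-proxy process on every Node queries and watches the Service objects and their Endpoints through the API Server, and sets up a software load balancer that forwards Service traffic to the backend Pods.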
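Steps 2 to 4 describe a control loop: the controller compares the desired replica count with the Pods that actually exist and acts on the difference. A minimal sketch of that comparison (the function name reconcile is hypothetical; a real controller watches the API Server instead of taking numbers as arguments):

```shell
#!/usr/bin/env bash
# Hypothetical sketch of one reconciliation step of a replica controller.
# $1 = desired replicas (from the RC/ReplicaSet spec)
# $2 = Pods that currently exist and match the selector
# Prints the action the controller would take.
reconcile() {
  local desired="$1" actual="$2"
  if (( actual < desired )); then
    echo "create $(( desired - actual ))"
  elif (( actual > desired )); then
    echo "delete $(( actual - desired ))"
  else
    echo "noop"
  fi
}
```

For the RC in step 1, reconcile 1 0 prints "create 1", matching step 4; when a Pod later dies, the same comparison sees the shortfall again and a replacement is created.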


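2. k8s cluster installation

1. kubeadm

kubeadm is the official community tool for quickly deploying a Kubernetes cluster. It can deploy a cluster with two commands:

```shell
# Create a Master node
$ kubeadm init

# Join a Node to the current cluster
$ kubeadm join <Master node IP and port>
```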

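2. Prerequisites

One or more machines running CentOS 7.x x86_64

Hardware: 2 GB or more RAM, 2 or more CPUs, 30 GB or more of disk

Full network connectivity between all machines in the cluster

Internet access, to pull images

Swap disabled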
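The hardware minimums above can be checked roughly before starting. This is a sketch under assumptions: it reads the Linux /proc/meminfo format, the default memory threshold of 1700 MB (slightly under 2 GB, since the kernel reports a little less than the installed RAM) is an assumed value, and check_prereqs is a hypothetical name. kubeadm runs its own, more thorough preflight checks during init.

```shell
#!/usr/bin/env bash
# Sketch: check CPU count and memory against the minimums listed above.
# Arguments (all optional, so the function can be tried on fake inputs):
#   $1 meminfo file (default /proc/meminfo)
#   $2 CPU count    (default: output of nproc)
#   $3 minimum MB   (default 1700, an assumed threshold just under 2 GB)
check_prereqs() {
  local meminfo="${1:-/proc/meminfo}"
  local cpus="${2:-$(nproc)}"
  local min_mb="${3:-1700}"
  local mem_kb ok=0
  # MemTotal is reported in kB, e.g. "MemTotal:        3880412 kB"
  mem_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
  mem_kb=${mem_kb:-0}
  if (( cpus < 2 )); then
    echo "FAIL: need at least 2 CPUs, found $cpus"
    ok=1
  fi
  if (( mem_kb / 1024 < min_mb )); then
    echo "FAIL: need at least ${min_mb} MB RAM, found $(( mem_kb / 1024 )) MB"
    ok=1
  fi
  return "$ok"
}
```

On a node, running check_prereqs with no arguments checks the real machine and prints nothing when both minimums are met.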

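3. Deployment steps

1. Install Docker and kubeadm on all nodes

2. Deploy the Kubernetes Master

3. Deploy the container network plugin

4. Deploy the Kubernetes Nodes, joining them to the Kubernetes cluster

5. Deploy the Dashboard web UI to view Kubernetes resources visually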

4、环境准备

1、准备工作

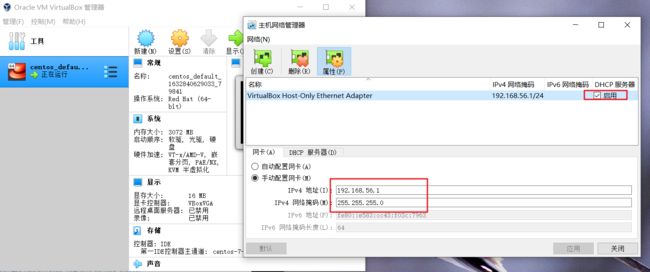

- 我们可以使用 vagrant 快速创建三个虚拟机。虚拟机启动前先设置 virtualbox 的主机网络。现全部统一为 192.168.56.1,以后所有虚拟机都是 56.x 的 ip 地址

- 设置虚拟机存储目录,防止硬盘空间不足

2. Start the three virtual machines

Vagrantfile:

```ruby
Vagrant.configure("2") do |config|
  (1..3).each do |i|
    config.vm.define "k8s-node#{i}" do |node|
      # Box used by the VM
      node.vm.box = "centos/7"
      # Hostname of the VM
      node.vm.hostname = "k8s-node#{i}"
      # IP of the VM: 192.168.56.100, .101, .102
      node.vm.network "private_network", ip: "192.168.56.#{99+i}", netmask: "255.255.255.0"
      # Shared folder between host and VM
      # node.vm.synced_folder "~/Documents/vagrant/share", "/home/vagrant/share"
      # VirtualBox-specific settings
      node.vm.provider "virtualbox" do |v|
        # Name of the VM
        v.name = "k8s-node#{i}"
        # Memory size in MB
        v.memory = 4096
        # Number of CPUs
        v.cpus = 4
      end
    end
  end
end
```

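- Copy the provided Vagrantfile to a directory whose path contains no Chinese characters and no spaces, then run vagrant up to start the three VMs. (Vagrant can in fact deploy a whole k8s cluster in one step; see https://github.com/rootsongjc/kubernetes-vagrant-centos-cluster and http://github.com/davidkbainbridge/k8s-playground.)

- Log in to each of the three VMs and enable password login for root.

Enter the system, for example the first VM, k8s-node1:

```shell
vagrant ssh k8s-node1
su root                   # the password is vagrant
vi /etc/ssh/sshd_config
```

Change this setting:

PasswordAuthentication yes

Restart the service:

systemctl restart sshd.service

Give every VM 4 cores and 4 GB of memory.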

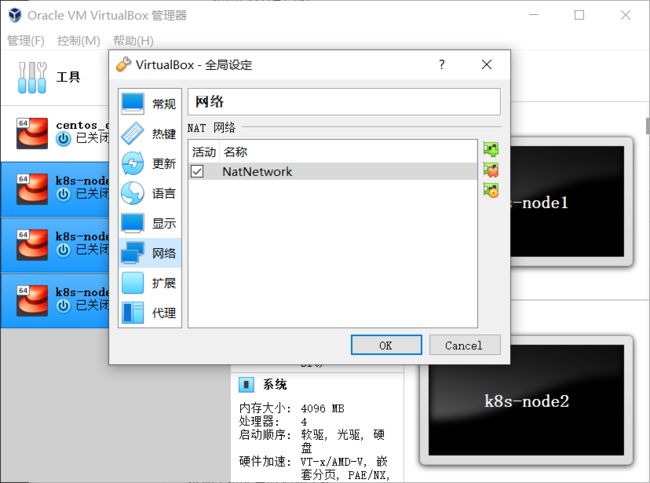

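Set up the NAT network

Shut down all machines and create a NAT network in VirtualBox's global settings.

For each machine, set the network attachment to NAT Network, select the NatNetwork created above as its name, and be sure to regenerate a random MAC address for each one.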

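3. Set up the Linux environment (run on all three nodes)

Disable the firewall:

```shell
systemctl stop firewalld
systemctl disable firewalld
```

Disable SELinux:

```shell
sed -i 's/enforcing/disabled/' /etc/selinux/config
setenforce 0
```

Disable swap:

```shell
# Disable the swap partition (or keep it and pass --ignore-preflight-errors=swap)
# Temporarily
swapoff -a
# Permanently
sed -ri 's/.*swap.*/#&/' /etc/fstab
# Verify: swap must show 0
free -g
```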
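To confirm swap is really off before running kubeadm, one option is to read /proc/swaps, which contains only its header line when no swap area is active. A small sketch (swap_is_off is a hypothetical name; the file argument exists only so it can be tried on a fake file):

```shell
#!/usr/bin/env bash
# Sketch: succeed (return 0) when no swap area is active.
# /proc/swaps always has one header line ("Filename Type Size Used Priority");
# every additional line is an active swap area.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  local lines
  lines=$(wc -l < "$swaps_file")
  (( lines <= 1 ))
}
```

Typical use on a node: swap_is_off || echo "swap is still on, run swapoff -a".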

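Add hostname-to-IP mappings (use each node's own address on your NAT network):

```shell
# Append (>>) so the existing localhost entries are kept
cat >> /etc/hosts << EOF
10.0.2.15 k8s-node1
10.0.2.4 k8s-node2
10.0.2.5 k8s-node3
EOF

# Set the new hostname
hostnamectl set-hostname <newhostname>
```

Then run su so the shell picks up the new hostname.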
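Because /etc/hosts is edited on three nodes and the step may be re-run, an idempotent variant can help. A sketch (add_host is a hypothetical name) that appends an entry only when the hostname is not already present, leaving the localhost lines alone:

```shell
#!/usr/bin/env bash
# Sketch: append "IP HOSTNAME" to a hosts file unless HOSTNAME is already listed.
# $1 = IP, $2 = hostname, $3 = hosts file (default /etc/hosts; needs root)
add_host() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # -w matches whole words (so k8s-node1 does not match k8s-node10),
  # -F treats the hostname as a fixed string rather than a regex.
  if grep -qwF -- "$name" "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example: add_host 10.0.2.15 k8s-node1, add_host 10.0.2.4 k8s-node2, add_host 10.0.2.5 k8s-node3, again with your own addresses.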

Pass bridged IPv4 traffic to the iptables chains:

```shell
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system
```

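Troubleshooting:

If you are told the file system is read-only, run:

mount -o remount,rw /

Check the time with date (optional), then install ntpdate:

yum install -y ntpdate

Sync to the current time:

ntpdate time.windows.com

Keep the time in sync with a cron job:

```shell
echo '*/5 * * * * /usr/sbin/ntpdate -u ntp.api.bz' >>/var/spool/cron/root
systemctl restart crond.service
crontab -l
```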

5. Install Docker, kubeadm, kubelet, and kubectl on all nodes

The default CRI (container runtime) of Kubernetes is Docker, so install Docker first.

1. Install docker

1. Remove any Docker previously installed on the system

```shell
sudo yum remove docker \
                docker-client \
                docker-client-latest \
                docker-common \
                docker-latest \
                docker-latest-logrotate \
                docker-logrotate \
                docker-engine
```

2. Install Docker-CE

Add the package repositories:

```shell
# Add the repositories
wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/repo/epel-7.repo
wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
yum clean all
yum install -y bash-completion.noarch
```

Install the required dependencies and system tools:

sudo yum install -y yum-utils

Point yum at the docker repo:

```shell
sudo yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo
```

List the available versions:

yum list docker-ce --showduplicates | sort -r

Install docker and docker-cli; you can pin a version:

sudo yum install -y docker-ce-18.09.9-3.el7 docker-ce-cli-18.09.9-3.el7 containerd.io

3. Configure the docker registry mirror

```shell
sudo mkdir -p /etc/docker
# sudo tee writes the file as root (a plain "> file" redirect would run as the current user)
sudo tee /etc/docker/daemon.json <<'EOF'
{
  "registry-mirrors": ["https://5955cm2y.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
```
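The exec-opts entry switches Docker to the systemd cgroup driver, which Kubernetes recommends on systemd hosts so that Docker and the kubelet use the same driver (kubeadm init warns when Docker still uses cgroupfs). To check that the change took effect after the restart, you can read the Cgroup Driver line from docker info; a small parsing sketch (cgroup_driver is a hypothetical name):

```shell
#!/usr/bin/env bash
# Sketch: print the cgroup driver reported by `docker info`, read from stdin.
# Usage: docker info 2>/dev/null | cgroup_driver
cgroup_driver() {
  # docker info prints a line such as "Cgroup Driver: systemd"
  awk -F': ' '/Cgroup Driver/ {print $2; exit}'
}
```

After the restart above, docker info 2>/dev/null | cgroup_driver should print systemd; if it prints cgroupfs, daemon.json was not picked up.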

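4. Start docker and enable it at boot

systemctl enable docker

With the base environment ready, it is a good idea to back up the three VMs. Allocate 16 GB to node3 and 3 GB to each of the others, for later testing.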

2. Add the Alibaba Cloud yum repository

```shell
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

Pull the flannel image:

docker pull lizhenliang/flannel:v0.11.0-amd64

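3. Install kubeadm, kubelet, and kubectl

```shell
yum list | grep kube    # check that the kube repo is available
yum install -y kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3
systemctl enable kubelet    # start at boot
systemctl start kubelet     # start now
```

Check the kubelet with systemctl status kubelet: it will not stay up yet, because kubeadm init has not generated its configuration. Ignore this for now.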

6、部署k8s-master

1、master 节点初始化

先查看要成为master节点的默认网卡地址:ip addr

如图下面的设置为–apiserver-advertise-address=10.0.2.15

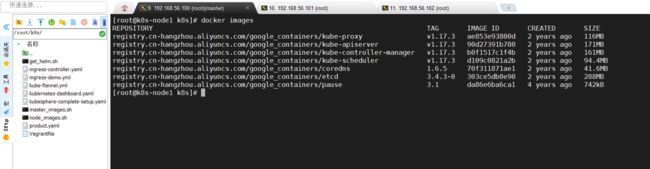

执行命令前可能失败先复制我们准备的master_images.sh文件

master_images.sh:

#!/bin/bash

images=(

kube-apiserver:v1.17.3

kube-proxy:v1.17.3

kube-controller-manager:v1.17.3

kube-scheduler:v1.17.3

coredns:1.6.5

etcd:3.4.3-0

pause:3.1

)

for imageName in ${images[@]} ; do

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

done

复制到这个要成为master节点的机器中

当前文件可能没有执行的权限使用命令

chmod 700 master_images.sh

执行这个文件下砸镜像

./master_images.sh

等待几分钟下载完成,执行docker images查看下载情况
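The commented-out docker tag line is only needed if kubeadm is left to look for images under k8s.gcr.io; with --image-repository (used in the init command) the Aliyun names are used directly. A slightly more defensive variant of the script, as a sketch: pull_images, the DRY_RUN switch, and the --retag option are hypothetical additions, while the image list is the one from master_images.sh.

```shell
#!/usr/bin/env bash
# Sketch of master_images.sh as a function. Set DRY_RUN=1 to print the
# docker commands instead of running them. Pass --retag to also tag each
# image as k8s.gcr.io/<name> (only needed without --image-repository).
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
IMAGES=(
  kube-apiserver:v1.17.3
  kube-proxy:v1.17.3
  kube-controller-manager:v1.17.3
  kube-scheduler:v1.17.3
  coredns:1.6.5
  etcd:3.4.3-0
  pause:3.1
)

pull_images() {
  local retag=0 run=() image
  [[ "${1:-}" == "--retag" ]] && retag=1
  # In dry-run mode every command is prefixed with echo.
  [[ "${DRY_RUN:-0}" == "1" ]] && run=(echo)
  for image in "${IMAGES[@]}"; do
    "${run[@]}" docker pull "$MIRROR/$image" || return 1
    if (( retag )); then
      "${run[@]}" docker tag "$MIRROR/$image" "k8s.gcr.io/$image" || return 1
    fi
  done
}
```

DRY_RUN=1 pull_images --retag shows what would run; pull_images alone performs the pulls and stops at the first failure.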

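Then run:

```shell
kubeadm init \
  --apiserver-advertise-address=10.0.2.15 \
  --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
  --kubernetes-version v1.17.3 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=10.244.0.0/16
```

It may report a swap error; just turn swap off.

Or it may complain that bridge-nf-call-iptables is not set to 1; in that case set these to 1:

```shell
echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables
echo "1" > /proc/sys/net/bridge/bridge-nf-call-ip6tables
```

Initialization succeeds.

Because the default image registry k8s.gcr.io is not reachable from mainland China, the Alibaba Cloud registry is specified here. You can also pull the images manually beforehand with master_images.sh; using registry.aliyuncs.com/google_containers as the address also works.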

Background: Classless Inter-Domain Routing (CIDR) is a way of grouping IP addresses, used to allocate addresses to users and to route IP packets efficiently on the Internet.
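As a worked example of the notation: a /16 prefix fixes the first 16 of the 32 address bits, leaving 2^16 = 65536 addresses, so --service-cidr=10.96.0.0/16 and --pod-network-cidr=10.244.0.0/16 each cover 65536 addresses. The two ranges must not overlap each other or the node network. A small bash sketch to compute a block's size and test membership (ip_to_int, cidr_size, and ip_in_cidr are hypothetical helper names):

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Number of addresses in a CIDR block, e.g. 10.244.0.0/16 -> 65536.
cidr_size() {
  local prefix="${1#*/}"
  echo $(( 1 << (32 - prefix) ))
}

# Succeed if the IP ($1) lies inside the CIDR block ($2).
ip_in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix="${2#*/}"
  # Netmask with "prefix" leading 1-bits inside a 32-bit word.
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}
```

For instance, ip_in_cidr 10.0.2.15 10.244.0.0/16 fails, confirming the master's address sits outside the pod range.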

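Pulling may fail, in which case the images need to be downloaded first.

When the command finishes, copy the kubeadm join command (with the cluster join token) printed at the end right away, and do not clear that output until the nodes have joined.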

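2. Test kubectl (run on the master)

After successful initialization you are prompted to run the following commands to set up the config file:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

At this point the master is NotReady; it becomes Ready once the pod network has been installed.

To inspect the kubelet logs:

journalctl -u kubelet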

7. Install the Pod network plugin (CNI)

```shell
$ kubectl apply -f \
    https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```

The download may fail, so here I use my own copy:

kube-flannel.yml

```yaml
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
```

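The address above may be blocked by the firewall. In that case upload the kube-flannel.yml we downloaded and apply it. If the images referenced in kube-flannel.yml cannot be pulled either, find a replacement image on Docker Hub (for example the lizhenliang/flannel:v0.11.0-amd64 image pulled earlier).

Then use vi to change every amd64 image address in the yml.

Run:

kubectl apply -f kube-flannel.yml

Wait about 3 minutes.

```shell
kubectl get pods -n kube-system      # pods in a specific namespace
kubectl get pods --all-namespaces    # pods in all namespaces
```

If the network misbehaves, bring cni0 down and reboot the VM before testing again:

$ ip link set cni0 down

Run watch kubectl get pod -n kube-system -o wide to monitor the pods' progress.

Wait 3-10 minutes until everything is Running, then continue.

List all nodes:

kubectl get nodes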

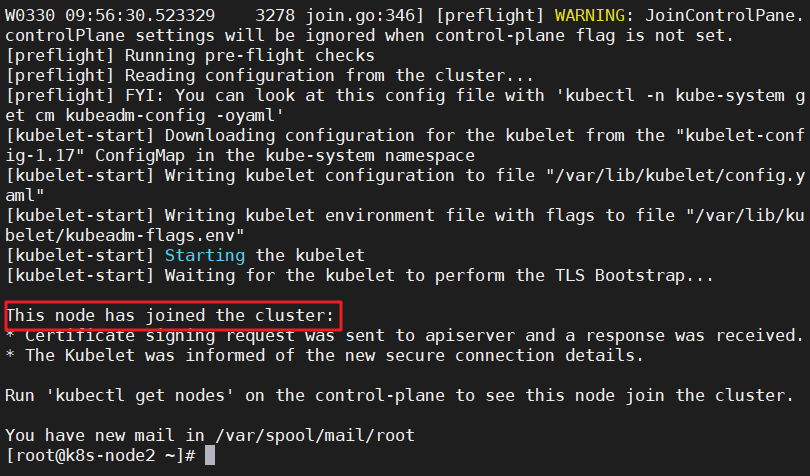
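Instead of re-running kubectl get nodes by hand, the wait can be scripted. A sketch (all_nodes_ready is a hypothetical name) that reads the table printed by kubectl get nodes on stdin and succeeds only when every node's STATUS column is exactly Ready:

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get nodes` output on stdin; succeed only if there is
# at least one node and every STATUS (2nd column) is exactly "Ready".
# Note: a cordoned node shows "Ready,SchedulingDisabled" and counts as not ready.
all_nodes_ready() {
  awk 'NR > 1 { total++; if ($2 != "Ready") notready++ }
       END { exit (total == 0 || notready > 0) }'
}
```

Usage: until kubectl get nodes | all_nodes_ready; do sleep 10; done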

8. Join Kubernetes Nodes

Run on each Node.

To add a new node to the cluster, run the kubeadm join command printed by kubeadm init:

```shell
kubeadm join 10.0.2.15:6443 --token 9g1a61.2a0407fj52gdfvfz \
    --discovery-token-ca-cert-hash sha256:c3fdaf1e1c9e7230e3809a8a57223419f2cafddedc98085d8df3dbd1586bb59a
```

Restart the service:

systemctl restart docker.service

Make sure the node joined successfully.

If the token has expired:

```shell
# Create a new one
kubeadm token create --print-join-command
# Or create a token that never expires
kubeadm token create --ttl 0 --print-join-command
```

Run watch kubectl get pod -n kube-system -o wide to monitor the pods' progress.

Wait 3-10 minutes until everything is Running, then check the status with kubectl get nodes.

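9. Getting started with the kubernetes cluster

1. Deploy a tomcat

kubectl create deployment tomcat6 --image=tomcat:6.0.53-jre8

See the tomcat Pod and which node it runs on:

kubectl get pods -o wide

In this example it was deployed on node3. If node3 goes down, k8s detects it and redeploys the Pod onto another node, such as node2.

2. Expose tomcat6 for access

kubectl expose deployment tomcat6 --port=80 --target-port=8080 --type=NodePort

The Service listens on port 80 and forwards traffic to port 8080 of the container; because the type is NodePort, it is also exposed on a port of every node.

Show the details:

kubectl get svc -o wide

Test access using the NodePort from that output; the IP of any node works: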

http://192.168.56.102:30560/
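The NodePort in that URL (30560 here) is chosen by Kubernetes from the API server's node port range, which defaults to 30000-32767 and can be changed with the kube-apiserver flag --service-node-port-range. Three ports are involved: nodePort on every node, the Service port (80), and targetPort in the container (8080). A tiny sketch (valid_nodeport is a hypothetical helper) to check a port you intend to pin explicitly, such as the 30001 used for the dashboard:

```shell
#!/usr/bin/env bash
# Sketch: succeed if $1 is inside the NodePort range.
# The range defaults to 30000-32767; pass $2/$3 if the API server was started
# with a different --service-node-port-range.
valid_nodeport() {
  local port="$1" lo="${2:-30000}" hi="${3:-32767}"
  [[ "$port" =~ ^[0-9]+$ ]] && (( port >= lo && port <= hi ))
}
```

To read the port Kubernetes actually assigned, kubectl get svc tomcat6 -o jsonpath='{.spec.ports[0].nodePort}' prints just the number.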

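3. Dynamic scaling test

kubectl get deployment

Upgrade the application:

kubectl set image    (run kubectl set image --help for usage)

Scale out:

kubectl scale --replicas=3 deployment tomcat6

With several replicas running, tomcat6 is reachable on the specified port of any node.

4. Getting the YAML for the operations above

See the k8s details section.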
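The YAML behind the two imperative commands can be printed without creating anything: on kubectl 1.17, kubectl create deployment tomcat6 --image=tomcat:6.0.53-jre8 --dry-run -o yaml prints the Deployment (newer kubectl versions spell the flag --dry-run=client). For reference, a hand-written sketch of roughly equivalent manifests, emitted from a bash function so they can be piped to kubectl apply -f -. The function name and its parameters are hypothetical, and kubectl's generated output contains additional default fields.

```shell
#!/usr/bin/env bash
# Sketch: print a Deployment + NodePort Service roughly equivalent to
#   kubectl create deployment tomcat6 --image=tomcat:6.0.53-jre8
#   kubectl expose deployment tomcat6 --port=80 --target-port=8080 --type=NodePort
# $1 = name, $2 = image, $3 = replicas (all optional)
tomcat_manifests() {
  local name="${1:-tomcat6}" image="${2:-tomcat:6.0.53-jre8}" replicas="${3:-1}"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
  labels:
    app: ${name}
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: tomcat
        image: ${image}
---
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: NodePort
  selector:
    app: ${name}
  ports:
  - protocol: TCP
    port: 80         # Service port inside the cluster
    targetPort: 8080 # container port Tomcat listens on
EOF
}
```

tomcat_manifests | kubectl apply -f - would create the same kind of objects declaratively, and tomcat_manifests tomcat6 tomcat:6.0.53-jre8 3 corresponds to the kubectl scale step.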

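5. Delete

```shell
kubectl get all
kubectl delete deploy/tomcat6
kubectl delete service/tomcat6
```

How it works: a Deployment manages a ReplicaSet, the ReplicaSet keeps the number of Pods at the desired replica count, and a failed Pod is automatically replaced by a new one.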

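10. Install the default dashboard (not used for now)

1. Deploy the dashboard

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommende

This may fail because of the firewall; in that case upload the file we prepared. For any image in the file that cannot be pulled, find a replacement on Docker Hub.

kubernetes-dashboard.yaml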

```yaml
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
```

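2. Expose the dashboard for public access

By default the Dashboard is only reachable from inside the cluster. Change its Service to type NodePort to expose it externally:

```yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
```

Access URL: https://NodeIP:30001 (the dashboard serves HTTPS with a self-generated certificate, so the browser will show a certificate warning)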

3. Create an authorized account

```shell
$ kubectl create serviceaccount dashboard-admin -n kube-system
$ kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
$ kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
```

Log in to the Dashboard with the token from the output.

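II. KubeSphere

The default dashboard is of limited use; with KubeSphere we can connect the entire DevOps pipeline. KubeSphere bundles many components, so its cluster requirements are fairly high.

https://kubesphere.io/

Kuboard is also quite good, and its cluster requirements are modest.

https://kuboard.cn/support/

1. Introduction

KubeSphere is an open-source project designed for cloud native. It is a distributed, multi-tenant container management platform built on top of Kubernetes, today's mainstream container orchestration platform. It offers an easy-to-use interface and wizard-style workflows, which lower the learning cost of the container platform and greatly reduce the complexity of day-to-day development, testing, and operations work.

2. Installation

1. Prerequisites

https://kubesphere.io/docs/v2.1/zh-CN/installation/prerequisites/

2. Prerequisite environment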

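1. Install helm (run on the master node)

Helm is the package manager for Kubernetes. Like apt on Ubuntu, yum on CentOS, or pip for Python, it lets you quickly find, download, and install software packages. Helm (v2, used here) consists of the client component helm and the server component Tiller. It packages a set of K8S resources for unified management and is the best way to find, share, and use software built for Kubernetes.

1) Install

curl -L https://git.io/get_helm.sh | bash

Because of the firewall, an offline installation is used here.

Get the package from this mirror, which downloads faster:

https://mirrors.huaweicloud.com/helm/v2.16.3/

Extract it and put the two files linux-amd64/helm and linux-amd64/tiller into /usr/local/bin/.

chmod 700 xxx    # if a file (helm or tiller) is not executable, fix its permissions with this command

2) Verify the version

helm version    # ignore the error for now (Tiller is not installed yet)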

3. Create permissions (run on master)

Create helm-rbac.yaml with the following content:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system
```

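Apply the configuration:

kubectl apply -f helm-rbac.yaml

2. Install Tiller (run on master)

1. Initialize

helm init --service-account=tiller --upgrade -i registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.16.3 --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

-i (short for --tiller-image) specifies the Tiller image; without it the default image is blocked by the firewall.

Wait until the tiller Pod deployed on the node is ready.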

2. Test (optional)

```shell
helm install stable/nginx-ingress --name nginx-ingress
helm ls
helm delete nginx-ingress
```

3. Usage (optional)

```shell
# Create an example chart
helm create helm-chart
# Check the chart syntax
helm lint ./helm-chart
# Deploy the default chart to k8s
helm install --name example1 ./helm-chart --set service.type=NodePort
# Check whether the deployment succeeded
kubectl get pod
```

3. Install OpenEBS (run on master)

https://v2-1.docs.kubesphere.io/docs/zh-CN/appendix/install-openebs/

Check whether the master node has a taint:

kubectl describe node k8s-node1 | grep Taint

Remove the taint:

kubectl taint nodes k8s-node1 node-role.kubernetes.io/master:NoSchedule-

Create the OpenEBS namespace; the OpenEBS resources will be created in it:

kubectl create ns openebs

Install OpenEBS. Two methods are listed below; either one works.

A. If Helm is installed in the cluster, install OpenEBS with Helm:

helm install --namespace openebs --name openebs stable/openebs --version 1.5.0

B. Alternatively, install it with kubectl:

kubectl apply -f https://openebs.github.io/charts/openebs-operator-1.5.0.yaml

Of these two officially documented methods, the first one failed for me with "hint: running helm repo update may help", which I could not resolve.

The second applies a downloaded YAML file, but the official address no longer serves it. So we pull the matching image versions from Docker Hub and then apply our own YAML file:

```shell
docker pull openebs/m-apiserver:1.5.0
docker pull openebs/openebs-k8s-provisioner:1.5.0
docker pull openebs/snapshot-controller:1.5.0
docker pull openebs/snapshot-provisioner:1.5.0
docker pull openebs/node-disk-manager-amd64:v0.4.5
docker pull openebs/node-disk-operator-amd64:v0.4.5
docker pull openebs/admission-server:1.5.0
docker pull openebs/provisioner-localpv:1.5.0
```

openebs-operator-1.5.0.yaml

# This manifest deploys the OpenEBS control plane components, with associated CRs & RBAC rules

# NOTE: On GKE, deploy the openebs-operator.yaml in admin context

# Create the OpenEBS namespace

apiVersion: v1

kind: Namespace

metadata:

name: openebs

---

# Create Maya Service Account

apiVersion: v1

kind: ServiceAccount

metadata:

name: openebs-maya-operator

namespace: openebs

---

# Define Role that allows operations on K8s pods/deployments
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: openebs-maya-operator
rules:
- apiGroups: ["*"]
  resources: ["nodes", "nodes/proxy"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["namespaces", "services", "pods", "pods/exec", "deployments", "deployments/finalizers", "replicationcontrollers", "replicasets", "events", "endpoints", "configmaps", "secrets", "jobs", "cronjobs"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["statefulsets", "daemonsets"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["resourcequotas", "limitranges"]
  verbs: ["list", "watch"]
- apiGroups: ["*"]
  resources: ["ingresses", "horizontalpodautoscalers", "verticalpodautoscalers", "poddisruptionbudgets", "certificatesigningrequests"]
  verbs: ["list", "watch"]
- apiGroups: ["*"]
  resources: ["storageclasses", "persistentvolumeclaims", "persistentvolumes"]
  verbs: ["*"]
- apiGroups: ["volumesnapshot.external-storage.k8s.io"]
  resources: ["volumesnapshots", "volumesnapshotdatas"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
- apiGroups: ["apiextensions.k8s.io"]
  resources: ["customresourcedefinitions"]
  verbs: ["get", "list", "create", "update", "delete", "patch"]
- apiGroups: ["*"]
  resources: ["disks", "blockdevices", "blockdeviceclaims"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorpoolclusters", "storagepoolclaims", "storagepoolclaims/finalizers", "cstorpoolclusters/finalizers", "storagepools"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["castemplates", "runtasks"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorpools", "cstorpools/finalizers", "cstorvolumereplicas", "cstorvolumes", "cstorvolumeclaims"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorpoolinstances", "cstorpoolinstances/finalizers"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorbackups", "cstorrestores", "cstorcompletedbackups"]
  verbs: ["*"]
- apiGroups: ["coordination.k8s.io"]
  resources: ["leases"]
  verbs: ["get", "watch", "list", "delete", "update", "create"]
- apiGroups: ["admissionregistration.k8s.io"]
  resources: ["validatingwebhookconfigurations", "mutatingwebhookconfigurations"]
  verbs: ["get", "create", "list", "delete", "update", "patch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
- apiGroups: ["*"]
  resources: ["upgradetasks"]
  verbs: ["*"]

---

# Bind the Service Account with the Role Privileges.
# TODO: Check if default account also needs to be there
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: openebs-maya-operator
subjects:
- kind: ServiceAccount
  name: openebs-maya-operator
  namespace: openebs
roleRef:
  kind: ClusterRole
  name: openebs-maya-operator
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: maya-apiserver
  namespace: openebs
  labels:
    name: maya-apiserver
    openebs.io/component-name: maya-apiserver
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: maya-apiserver
      openebs.io/component-name: maya-apiserver
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  template:
    metadata:
      labels:
        name: maya-apiserver
        openebs.io/component-name: maya-apiserver
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: maya-apiserver
        imagePullPolicy: IfNotPresent
        image: openebs/m-apiserver:1.5.0
        ports:
        - containerPort: 5656
        env:
        # OPENEBS_IO_KUBE_CONFIG enables maya api service to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for maya api server version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        # OPENEBS_IO_K8S_MASTER enables maya api service to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for maya api server version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://172.28.128.3:8080"
        # OPENEBS_NAMESPACE provides the namespace of this deployment as an
        # environment variable
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_SERVICE_ACCOUNT provides the service account of this pod as
        # environment variable
        - name: OPENEBS_SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        # OPENEBS_MAYA_POD_NAME provides the name of this pod as
        # environment variable
        - name: OPENEBS_MAYA_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # If OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG is false then OpenEBS default
        # storageclass and storagepool will not be created.
        - name: OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
          value: "true"
        # OPENEBS_IO_INSTALL_DEFAULT_CSTOR_SPARSE_POOL decides whether default cstor sparse pool should be
        # configured as a part of openebs installation.
        # If "true" a default cstor sparse pool will be configured, if "false" it will not be configured.
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        - name: OPENEBS_IO_INSTALL_DEFAULT_CSTOR_SPARSE_POOL
          value: "false"
        # OPENEBS_IO_CSTOR_TARGET_DIR can be used to specify the hostpath
        # to be used for saving the shared content between the side cars
        # of cstor volume pod.
        # The default path used is /var/openebs/sparse
        #- name: OPENEBS_IO_CSTOR_TARGET_DIR
        #  value: "/var/openebs/sparse"
        # OPENEBS_IO_CSTOR_POOL_SPARSE_DIR can be used to specify the hostpath
        # to be used for saving the shared content between the side cars
        # of cstor pool pod. This ENV is also used to indicate the location
        # of the sparse devices.
        # The default path used is /var/openebs/sparse
        #- name: OPENEBS_IO_CSTOR_POOL_SPARSE_DIR
        #  value: "/var/openebs/sparse"
        # OPENEBS_IO_JIVA_POOL_DIR can be used to specify the hostpath
        # to be used for default Jiva StoragePool loaded by OpenEBS
        # The default path used is /var/openebs
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        #- name: OPENEBS_IO_JIVA_POOL_DIR
        #  value: "/var/openebs"
        # OPENEBS_IO_LOCALPV_HOSTPATH_DIR can be used to specify the hostpath
        # to be used for default openebs-hostpath storageclass loaded by OpenEBS
        # The default path used is /var/openebs/local
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        #- name: OPENEBS_IO_LOCALPV_HOSTPATH_DIR
        #  value: "/var/openebs/local"
        - name: OPENEBS_IO_JIVA_CONTROLLER_IMAGE
          value: "openebs/jiva:1.5.0"
        - name: OPENEBS_IO_JIVA_REPLICA_IMAGE
          value: "openebs/jiva:1.5.0"
        - name: OPENEBS_IO_JIVA_REPLICA_COUNT
          value: "3"
        - name: OPENEBS_IO_CSTOR_TARGET_IMAGE
          value: "openebs/cstor-istgt:1.5.0"
        - name: OPENEBS_IO_CSTOR_POOL_IMAGE
          value: "openebs/cstor-pool:1.5.0"
        - name: OPENEBS_IO_CSTOR_POOL_MGMT_IMAGE
          value: "openebs/cstor-pool-mgmt:1.5.0"
        - name: OPENEBS_IO_CSTOR_VOLUME_MGMT_IMAGE
          value: "openebs/cstor-volume-mgmt:1.5.0"
        - name: OPENEBS_IO_VOLUME_MONITOR_IMAGE
          value: "openebs/m-exporter:1.5.0"
        - name: OPENEBS_IO_CSTOR_POOL_EXPORTER_IMAGE
          value: "openebs/m-exporter:1.5.0"
        - name: OPENEBS_IO_HELPER_IMAGE
          value: "openebs/linux-utils:1.5.0"
        # OPENEBS_IO_ENABLE_ANALYTICS if set to true sends anonymous usage
        # events to Google Analytics
        - name: OPENEBS_IO_ENABLE_ANALYTICS
          value: "true"
        - name: OPENEBS_IO_INSTALLER_TYPE
          value: "openebs-operator"
        # OPENEBS_IO_ANALYTICS_PING_INTERVAL can be used to specify the duration (in hours)
        # for periodic ping events sent to Google Analytics.
        # Default is 24h.
        # Minimum is 1h. You can convert this to weekly by setting 168h
        #- name: OPENEBS_IO_ANALYTICS_PING_INTERVAL
        #  value: "24h"
        livenessProbe:
          exec:
            command:
            - /usr/local/bin/mayactl
            - version
          initialDelaySeconds: 30
          periodSeconds: 60
        readinessProbe:
          exec:
            command:
            - /usr/local/bin/mayactl
            - version
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: v1
kind: Service
metadata:
  name: maya-apiserver-service
  namespace: openebs
  labels:
    openebs.io/component-name: maya-apiserver-svc
spec:
  ports:
  - name: api
    port: 5656
    protocol: TCP
    targetPort: 5656
  selector:
    name: maya-apiserver
  sessionAffinity: None

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-provisioner
  namespace: openebs
  labels:
    name: openebs-provisioner
    openebs.io/component-name: openebs-provisioner
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-provisioner
      openebs.io/component-name: openebs-provisioner
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  template:
    metadata:
      labels:
        name: openebs-provisioner
        openebs.io/component-name: openebs-provisioner
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: openebs-provisioner
        imagePullPolicy: IfNotPresent
        image: openebs/openebs-k8s-provisioner:1.5.0
        env:
        # OPENEBS_IO_K8S_MASTER enables openebs provisioner to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://10.128.0.12:8080"
        # OPENEBS_IO_KUBE_CONFIG enables openebs provisioner to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that provisioner should forward the volume create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*openebs"
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-snapshot-operator
  namespace: openebs
  labels:
    name: openebs-snapshot-operator
    openebs.io/component-name: openebs-snapshot-operator
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-snapshot-operator
      openebs.io/component-name: openebs-snapshot-operator
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-snapshot-operator
        openebs.io/component-name: openebs-snapshot-operator
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: snapshot-controller
        image: openebs/snapshot-controller:1.5.0
        imagePullPolicy: IfNotPresent
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*controller"
          initialDelaySeconds: 30
          periodSeconds: 60
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that snapshot controller should forward the snapshot create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
      - name: snapshot-provisioner
        image: openebs/snapshot-provisioner:1.5.0
        imagePullPolicy: IfNotPresent
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that snapshot provisioner should forward the clone create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*provisioner"
          initialDelaySeconds: 30
          periodSeconds: 60

---

# This is the node-disk-manager related config.
# It can be used to customize the disks probes and filters
apiVersion: v1
kind: ConfigMap
metadata:
  name: openebs-ndm-config
  namespace: openebs
  labels:
    openebs.io/component-name: ndm-config
data:
  # udev-probe is default or primary probe which should be enabled to run ndm
  # filterconfigs contains configs of filters - in their form of include
  # and exclude comma separated strings
  node-disk-manager.config: |
    probeconfigs:
      - key: udev-probe
        name: udev probe
        state: true
      - key: seachest-probe
        name: seachest probe
        state: false
      - key: smart-probe
        name: smart probe
        state: true
    filterconfigs:
      - key: os-disk-exclude-filter
        name: os disk exclude filter
        state: true
        exclude: "/,/etc/hosts,/boot"
      - key: vendor-filter
        name: vendor filter
        state: true
        include: ""
        exclude: "CLOUDBYT,OpenEBS"
      - key: path-filter
        name: path filter
        state: true
        include: ""
        exclude: "loop,/dev/fd0,/dev/sr0,/dev/ram,/dev/dm-,/dev/md"

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: openebs-ndm
  namespace: openebs
  labels:
    name: openebs-ndm
    openebs.io/component-name: ndm
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-ndm
      openebs.io/component-name: ndm
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        name: openebs-ndm
        openebs.io/component-name: ndm
        openebs.io/version: 1.5.0
    spec:
      # By default the node-disk-manager will be run on all kubernetes nodes
      # If you would like to limit this to only some nodes, say the nodes
      # that have storage attached, you could label those node and use
      # nodeSelector.
      #
      # e.g. label the storage nodes with - "openebs.io/nodegroup"="storage-node"
      # kubectl label node "openebs.io/nodegroup"="storage-node"
      #nodeSelector:
      #  "openebs.io/nodegroup": "storage-node"
      serviceAccountName: openebs-maya-operator
      hostNetwork: true
      containers:
      - name: node-disk-manager
        image: openebs/node-disk-manager-amd64:v0.4.5
        imagePullPolicy: Always
        securityContext:
          privileged: true
        volumeMounts:
        - name: config
          mountPath: /host/node-disk-manager.config
          subPath: node-disk-manager.config
          readOnly: true
        - name: udev
          mountPath: /run/udev
        - name: procmount
          mountPath: /host/proc
          readOnly: true
        - name: sparsepath
          mountPath: /var/openebs/sparse
        env:
        # namespace in which NDM is installed will be passed to NDM Daemonset
        # as environment variable
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # pass hostname as env variable using downward API to the NDM container
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        # specify the directory where the sparse files need to be created.
        # if not specified, then sparse files will not be created.
        - name: SPARSE_FILE_DIR
          value: "/var/openebs/sparse"
        # Size(bytes) of the sparse file to be created.
        - name: SPARSE_FILE_SIZE
          value: "10737418240"
        # Specify the number of sparse files to be created
        - name: SPARSE_FILE_COUNT
          value: "0"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*ndm"
          initialDelaySeconds: 30
          periodSeconds: 60
      volumes:
      - name: config
        configMap:
          name: openebs-ndm-config
      - name: udev
        hostPath:
          path: /run/udev
          type: Directory
      # mount /proc (to access mount file of process 1 of host) inside container
      # to read mount-point of disks and partitions
      - name: procmount
        hostPath:
          path: /proc
          type: Directory
      - name: sparsepath
        hostPath:
          path: /var/openebs/sparse

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-ndm-operator
  namespace: openebs
  labels:
    name: openebs-ndm-operator
    openebs.io/component-name: ndm-operator
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-ndm-operator
      openebs.io/component-name: ndm-operator
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-ndm-operator
        openebs.io/component-name: ndm-operator
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: node-disk-operator
        image: openebs/node-disk-operator-amd64:v0.4.5
        imagePullPolicy: Always
        readinessProbe:
          exec:
            command:
            - stat
            - /tmp/operator-sdk-ready
          initialDelaySeconds: 4
          periodSeconds: 10
          failureThreshold: 1
        env:
        - name: WATCH_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # the service account of the ndm-operator pod
        - name: SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        - name: OPERATOR_NAME
          value: "node-disk-operator"
        - name: CLEANUP_JOB_IMAGE
          value: "openebs/linux-utils:1.5.0"

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-admission-server
  namespace: openebs
  labels:
    app: admission-webhook
    openebs.io/component-name: admission-webhook
    openebs.io/version: 1.5.0
spec:
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  selector:
    matchLabels:
      app: admission-webhook
  template:
    metadata:
      labels:
        app: admission-webhook
        openebs.io/component-name: admission-webhook
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: admission-webhook
        image: openebs/admission-server:1.5.0
        imagePullPolicy: IfNotPresent
        args:
        - -alsologtostderr
        - -v=2
        - 2>&1
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: ADMISSION_WEBHOOK_NAME
          value: "openebs-admission-server"

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-localpv-provisioner
  namespace: openebs
  labels:
    name: openebs-localpv-provisioner
    openebs.io/component-name: openebs-localpv-provisioner
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-localpv-provisioner
      openebs.io/component-name: openebs-localpv-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-localpv-provisioner
        openebs.io/component-name: openebs-localpv-provisioner
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: openebs-provisioner-hostpath
        imagePullPolicy: Always
        image: openebs/provisioner-localpv:1.5.0
        env:
        # OPENEBS_IO_K8S_MASTER enables openebs provisioner to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://10.128.0.12:8080"
        # OPENEBS_IO_KUBE_CONFIG enables openebs provisioner to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_SERVICE_ACCOUNT provides the service account of this pod as
        # environment variable
        - name: OPENEBS_SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        - name: OPENEBS_IO_ENABLE_ANALYTICS
          value: "true"
        - name: OPENEBS_IO_INSTALLER_TYPE
          value: "openebs-operator"
        - name: OPENEBS_IO_HELPER_IMAGE
          value: "openebs/linux-utils:1.5.0"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*localpv"
          initialDelaySeconds: 30
          periodSeconds: 60

---

Apply the manifest:

kubectl apply -f openebs-operator-1.5.0.yaml

Wait a moment, then check the result:

kubectl get sc --all-namespaces

Set openebs-hostpath as the default StorageClass:

kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
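The -p argument is just a small JSON document that sets one annotation. As an illustration, this hypothetical Python helper builds the same body and the matching kubectl argument list; passing is_default=False produces the patch that clears the flag on another StorageClass, so that only one class ends up marked as default.

```python
import json

DEFAULT_CLASS_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_class_patch(is_default=True):
    """JSON body for `kubectl patch storageclass NAME -p BODY`."""
    # Annotation values are strings, so use "true"/"false" rather than JSON booleans.
    value = "true" if is_default else "false"
    return json.dumps({"metadata": {"annotations": {DEFAULT_CLASS_ANNOTATION: value}}})


def patch_command(storage_class, is_default=True):
    """Argument list equivalent to the kubectl patch command above."""
    return ["kubectl", "patch", "storageclass", storage_class,
            "-p", default_class_patch(is_default)]


if __name__ == "__main__":
    print(" ".join(patch_command("openebs-hostpath")))
```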

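At this point OpenEBS LocalPV has been created as the default storage type. Because the master node's taint was removed manually at the beginning of this document, you could add it back after installing OpenEBS so that business workloads are not scheduled onto the master and do not compete for its resources. In my own testing, however, adding it back here prevented the ks-apigateway and API services from starting during the later KubeSphere installation, so skip the command below for now and revisit it later if needed.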

kubectl taint nodes k8s-node1 node-role.kubernetes.io/master=:NoSchedule

3、Minimal installation of KubeSphere

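If your cluster has more than 1 CPU core and more than 2 GB of available memory, you can start a minimal KubeSphere installation with the following command (note the raw.githubusercontent.com URL; a github.com/.../blob/... URL returns an HTML page that kubectl cannot apply):

kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v2.1.1/kubesphere-minimal.yaml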

Follow the installation log and wait patiently until the installation succeeds:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

4、Complete installation

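If the cluster has more than 8 available CPU cores and more than 16 GB of available memory, you can install the complete KubeSphere with the following command.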
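Whether a cluster meets these thresholds can be checked against the Allocatable values printed by kubectl describe node. The sketch below is an illustrative Python helper, not part of KubeSphere: it parses simple Kubernetes quantities such as 500m, 3880Mi or 16Gi and applies the two thresholds quoted above. It assumes the "G" in the text means GiB and takes cluster-wide totals, so summing across nodes is left to the caller.

```python
import re

# Multipliers for the quantity suffixes handled here (binary, decimal, and milli).
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "m": 1e-3, "": 1,
}
_QUANTITY = re.compile(r"^([0-9]+(?:\.[0-9]+)?)(Ki|Mi|Gi|Ti|k|M|G|T|m)?$")


def parse_quantity(text):
    """Parse a simple quantity such as '4', '500m', '3880Mi' or '16Gi' into a number."""
    match = _QUANTITY.match(text.strip())
    if match is None:
        raise ValueError(f"unsupported quantity: {text!r}")
    number, suffix = match.groups()
    return float(number) * _SUFFIXES[suffix or ""]


def install_mode(total_cpu, total_memory):
    """Return 'complete', 'minimal' or None using the thresholds quoted in the text."""
    cores = parse_quantity(total_cpu)
    mem_gib = parse_quantity(total_memory) / 2**30  # assumption: "G" in the text means GiB
    if cores > 8 and mem_gib > 16:
        return "complete"
    if cores > 1 and mem_gib > 2:
        return "minimal"
    return None


if __name__ == "__main__":
    print(install_mode("2", "3880Mi"))
```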

1、Install with one command

kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v2.1.1/kubesphere-complete-setup.yaml

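Alternatively, take the file from our course materials, upload it to the VM, and adjust parts of its configuration by referring to https://github.com/kubesphere/ks-installer/tree/master.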

2、Check the installation progress

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

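Because my machines currently have limited memory, I use the minimal installation here. The official URL above may no longer be valid, so here is a copy of kubesphere-minimal.yaml that I found: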

---

apiVersion: v1
kind: Namespace
metadata:
  name: kubesphere-system

---

apiVersion: v1
data:
  ks-config.yaml: |
    ---
    persistence:
      storageClass: ""
    etcd:
      monitoring: False
      endpointIps: 192.168.0.7,192.168.0.8,192.168.0.9
      port: 2379
      tlsEnable: True
    common:
      mysqlVolumeSize: 20Gi
      minioVolumeSize: 20Gi
      etcdVolumeSize: 20Gi
      openldapVolumeSize: 2Gi
      redisVolumSize: 2Gi
    metrics_server:
      enabled: False
    console:
      enableMultiLogin: False  # enable/disable multi login
      port: 30880
    monitoring:
      prometheusReplicas: 1
      prometheusMemoryRequest: 400Mi
      prometheusVolumeSize: 20Gi
      grafana:
        enabled: False
    logging:
      enabled: False
      elasticsearchMasterReplicas: 1
      elasticsearchDataReplicas: 1
      logsidecarReplicas: 2
      elasticsearchMasterVolumeSize: 4Gi
      elasticsearchDataVolumeSize: 20Gi
      logMaxAge: 7
      elkPrefix: logstash
      containersLogMountedPath: ""
      kibana:
        enabled: False
    openpitrix:
      enabled: False
    devops:
      enabled: True
      jenkinsMemoryLim: 2Gi
      jenkinsMemoryReq: 1000Mi
      jenkinsVolumeSize: 8Gi
      jenkinsJavaOpts_Xms: 512m
      jenkinsJavaOpts_Xmx: 512m
      jenkinsJavaOpts_MaxRAM: 2g
      sonarqube:
        enabled: True
        postgresqlVolumeSize: 8Gi
    servicemesh:
      enabled: False
    notification:
      enabled: True
    alerting:
      enabled: True
kind: ConfigMap
metadata:
  name: ks-installer
  namespace: kubesphere-system

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: ks-installer
  namespace: kubesphere-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  creationTimestamp: null
  name: ks-installer
rules:
- apiGroups:
  - ""
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apps
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - extensions
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - batch
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiextensions.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - tenant.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - certificates.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - devops.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.coreos.com
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - logging.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - jaegertracing.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: ks-installer
subjects:
- kind: ServiceAccount
  name: ks-installer
  namespace: kubesphere-system
roleRef:
  kind: ClusterRole
  name: ks-installer
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    app: ks-install
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ks-install
  template:
    metadata:
      labels:
        app: ks-install
    spec:
      serviceAccountName: ks-installer
      containers:
      - name: installer
        image: kubesphere/ks-installer:v2.1.1
        imagePullPolicy: "Always"

Copy it to the machine and apply it:

kubectl apply -f kubesphere-minimal.yaml

The installation starts successfully.

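3、Troubleshooting: restart the installer

If a component such as ks-apigateway fails to start, delete its Pod so that it is recreated. The Pod name suffix below comes from the author's cluster; look up the actual name with kubectl get pods -n kubesphere-system.

kubectl delete pod ks-apigateway-78bcdc8ffc-fcfqb -n kubesphere-system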

4、Deploying metrics-server

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        # image: k8s.gcr.io/metrics-server-amd64:v0.3.6
        image: registry.aliyuncs.com/google_containers/metrics-server-amd64:v0.3.6
        args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
        ports:
        - name: main-port
          containerPort: 4443
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
      nodeSelector:
        beta.kubernetes.io/os: linux

3、Role management

Example

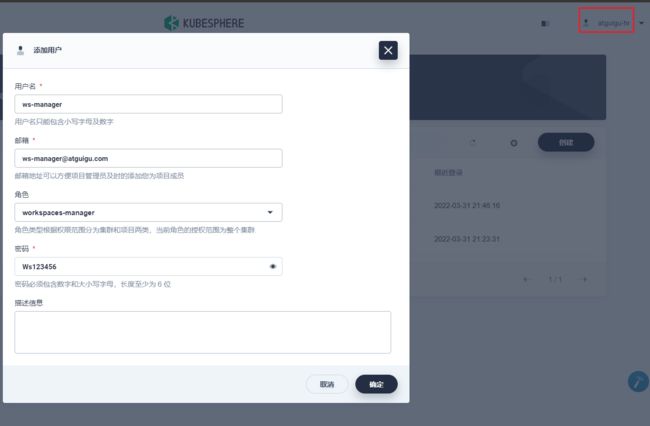

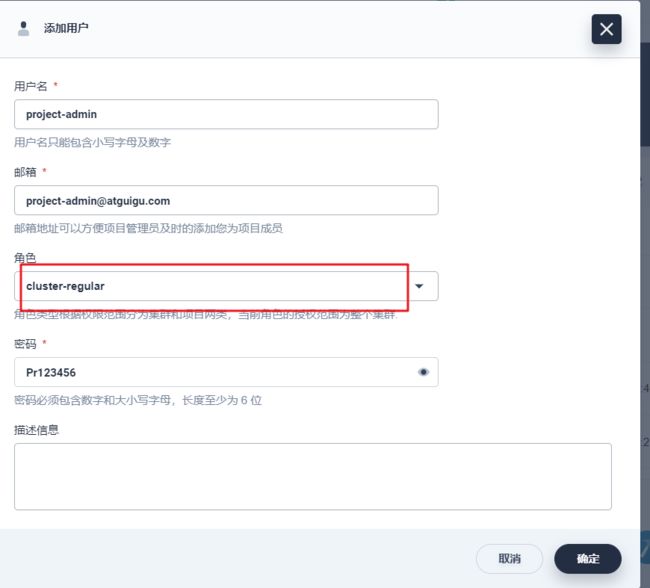

1、创建一个角色(负责用户管理的)

2、创建一个账号

3、使用创建的hr的账号分配一个集群工作空间的管理员

4、创建的hr的账号分配一个集群的普通用户

5、创建一个项目的管理用户

6、创建一个项目的普通用户

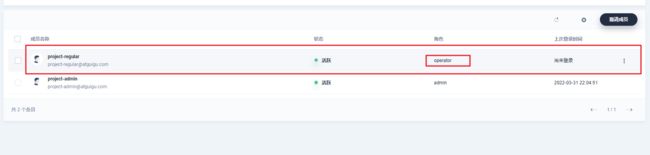

7、登录ws-manager用户(管理工作空间的)

创建一个项目空间

分配该项目空间的管理员

8、登录ws-admin(项目的管理员)

邀请两个成员,一个是项目的管理员,一个只能观看的普通用户

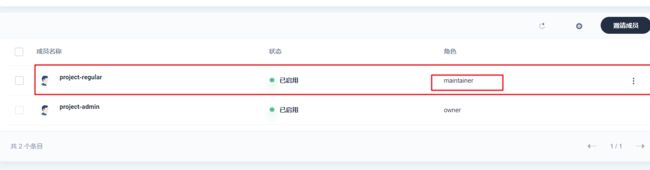

9、登录project-admin

创建一个资源项目,如谷粒商城后台项目、前端项目…

邀请一个开发人员

创建一个devops项目

邀请一个流水线维护人员

4、Create secrets

For details, see https://v2-1.docs.kubesphere.io/docs/zh-CN/quick-start/wordpress-deployment/

5、Create credentials

https://v2-1.docs.kubesphere.io/docs/zh-CN/devops/credential/#%E5%88%9B%E5%BB%BA%E5%87%AD%E8%AF%81

6、And so on...

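三、Docker in depth

1、Dockerfile

The most common way to create an image in Docker is with a Dockerfile. A Dockerfile is the description file of a Docker image; you can think of it as the step A, B, C, D... checklist of a rocket launch. It contains a sequence of instructions, each of which builds one layer, so the content of each instruction describes how that layer should be built.

1、Example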

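#Based on the centos image
FROM centos

#Maintainer information
MAINTAINER My CentOS <534096094@qq.com>

#Install the httpd package
RUN yum -y update
RUN yum -y install httpd

#Expose port 80
EXPOSE 80

#Copy the site's home page into the web root inside the image
ADD index.html /var/www/html/index.html

#Copy the script into the image and change its permissions
ADD run.sh /run.sh
RUN chmod 775 /run.sh

#Script executed when the container starts
CMD ["/run.sh"]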

Official documentation: https://docs.docker.com/engine/reference/builder/#from

A more complex example

#Install nginx on centos
FROM centos

#Author's name and email
MAINTAINER xxx xxx@xxx.com

#Test the network
RUN ping -c 1 www.baidu.com

#Install the packages nginx needs to build
RUN yum -y install gcc make pcre-devel zlib-devel tar zlib

#Copy the nginx package to /usr/src/ (a local tar archive is extracted automatically; a URL would be downloaded)
ADD nginx-1.15.8.tar.gz /usr/src/

#Switch to /usr/src/nginx-1.15.8, then compile and install nginx
RUN cd /usr/src/nginx-1.15.8 \
    && mkdir /usr/local/nginx \
    && ./configure --prefix=/usr/local/nginx && make && make install \
    && ln -s /usr/local/nginx/sbin/nginx /usr/local/sbin/ \
    && nginx

#Remove the nginx source directory
RUN rm -rf /usr/src/nginx-1.15.8

#Expose port 80
EXPOSE 80

#Start nginx in the foreground
CMD ["nginx", "-g", "daemon off;"]

2、Common instructions

| Type | Instructions |
|---|---|
| Base image | FROM |
| Maintainer | MAINTAINER |
| Image build instructions | RUN, COPY, ADD, EXPOSE, WORKDIR, ONBUILD, USER, VOLUME, etc. |
| Executed when the container starts | CMD, ENTRYPOINT |
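To make the "one instruction, one step" idea concrete, here is a rough Python sketch (a hypothetical helper, not Docker's parser) that splits a Dockerfile into instructions, joins backslash continuations, and labels each instruction with a category from the table. It ignores parser directives, heredocs and custom escape characters. It also reflects a refinement from Docker's best-practices guide: only RUN, COPY and ADD add filesystem layers, while the other instructions only record image metadata.

```python
# Instructions that add filesystem layers; the others only change image metadata.
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}

# Categories from the table above; anything not listed counts as an image build instruction.
CATEGORIES = {
    "FROM": "base image",
    "MAINTAINER": "maintainer",
    "CMD": "container start",
    "ENTRYPOINT": "container start",
}


def parse_dockerfile(text):
    """Return (INSTRUCTION, arguments) pairs, joining lines that end with a backslash."""
    steps = []
    pending = ""
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments are skipped, even inside a continuation
        if line.endswith("\\"):
            pending += line[:-1].rstrip() + " "
            continue
        parts = (pending + line).split(None, 1)
        pending = ""
        steps.append((parts[0].upper(), parts[1] if len(parts) > 1 else ""))
    return steps


def category(keyword):
    """Map an instruction keyword to its row in the table."""
    return CATEGORIES.get(keyword.upper(), "image build")


def layer_instructions(steps):
    """Keywords of the steps that add a filesystem layer, in order."""
    return [keyword for keyword, _ in steps if keyword in LAYER_INSTRUCTIONS]
```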

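2、Image operations

1、Create the project's Dockerfile

2、Upload the project to the server.

3、In the project directory, build the image into the local repository:

- (1) docker build -t nginx:GA-1.0 -f ./Dockerfile . (don't forget the trailing dot, which makes the current directory the build context)
- (2) docker images lists the local images.
- (3) docker exec -it CONTAINER_ID /bin/bash enters the container so you can modify it.
- (4) docker commit -a "leifengyang" -m "nginxxx" CONTAINER_ID mynginx:GA-2.0 saves the modified container as a new image. Syntax: docker commit [OPTIONS] CONTAINER [REPOSITORY[:TAG]]
  - OPTIONS:
    - -a: author of the committed image;
    - -c: apply Dockerfile instructions to the created image;
    - -m: commit message;
    - -p: pause the container during the commit.
- (5) docker login: log in to a Docker registry; if no registry address is given, the official Docker Hub is used. Usage: docker login -u USERNAME -p PASSWORD
- (6) docker logout: log out of a Docker registry; if no registry address is given, the official Docker Hub is used.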

4、Push the image to Docker Hub

(1) Tag the image

docker tag local-image:tagname username/new-repo:tagname

(2) Push the image

docker push username/new-repo:tagname
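Docker Hub and the Alibaba Cloud registry below use the same naming scheme, registry/namespace/repository:tag, except that Docker Hub omits the registry part. The hypothetical Python helper below only assembles the docker tag and docker push argument lists for either case; the tag v1 in the usage is a made-up value.

```python
def image_ref(repository, tag="latest", registry=None, namespace=None):
    """Compose [registry/][namespace/]repository:tag."""
    parts = [part for part in (registry, namespace, repository) if part]
    return "/".join(parts) + ":" + tag


def tag_and_push(local_image, repository, tag, registry=None, namespace=None):
    """Return the argument lists for `docker tag` and `docker push`."""
    target = image_ref(repository, tag, registry, namespace)
    return [
        ["docker", "tag", local_image, target],
        ["docker", "push", target],
    ]


if __name__ == "__main__":
    # Docker Hub: username/new-repo:tag
    for argv in tag_and_push("mynginx:GA-2.0", "new-repo", "v1", namespace="username"):
        print(" ".join(argv))
    # Alibaba Cloud: registry/namespace/repository:tag
    for argv in tag_and_push("mynginx:GA-2.0", "gulimall", "v1",
                             registry="registry.cn-hangzhou.aliyuncs.com",
                             namespace="chenfl_docker"):
        print(" ".join(argv))
```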

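5、Save and load images

(1) You can save an image as a tar file, copy it to any Docker host with a USB drive or similar device, and load it there again.

(2) Save

docker save spring-boot-docker -o /home/spring-boot-docker.tar

(3) Load

docker load -i /home/spring-boot-docker.tar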

6、Alibaba Cloud registry operations

(1) Log in to the Alibaba Cloud registry; the password is the one you set when activating the container registry service

docker login --username=chenfl**** registry.cn-hangzhou.aliyuncs.com

(2) Pull an image

docker pull registry.cn-hangzhou.aliyuncs.com/chenfl_docker/gulimall:[image-tag]

(3) Push an image

$ docker login --username=chenfl0126 registry.cn-hangzhou.aliyuncs.com

$ docker tag [ImageId] registry.cn-hangzhou.aliyuncs.com/chenfl_docker/gulimall:[image-tag]

$ docker push registry.cn-hangzhou.aliyuncs.com/chenfl_docker/gulimall:[image-tag]

四、K8S details

1、kubectl

1、kubectl documentation

https://kubernetes.io/zh/docs/reference/kubectl/overview/

2、Resource types

https://kubernetes.io/zh/docs/reference/kubectl/overview/#%E8%B5%84%E6%BA%90%E7%B1%BB%E5%9E%8B

3、Formatting output

https://kubernetes.io/zh/docs/reference/kubectl/overview/#%E6%A0%BC%E5%BC%8F%E5%8C%96%E8%BE%93%E5%87%BA

4、Common operations

https://kubernetes.io/zh/docs/reference/kubectl/overview/#%E7%A4%BA%E4%BE%8B-%E5%B8%B8%E7%94%A8%E6%93%8D%E4%BD%9C

5、Command reference

https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands

2、YAML syntax

1、YAML template

2、YAML field reference

See the official documentation.

3、Getting started

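1、What are Pods and Controllers

https://kubernetes.io/zh/docs/concepts/workloads/pods/#pods-and-controllers

Pods and controllers

A controller can create and manage multiple Pods for you, handling replication and rollout and providing self-healing across the cluster. For example, if a node fails, the controller can automatically replace the Pod by scheduling an identical replacement on a different node. Some examples of controllers that contain one or more Pods:

- Deployment
- StatefulSet
- DaemonSet

A controller usually creates the Pods it is responsible for from a Pod template that you provide.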
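The self-healing described above comes from a control loop: the controller repeatedly compares the desired replica count with the Pods it actually observes and creates or deletes Pods to close the gap. The Python function below is a deliberately simplified, hypothetical sketch of one pass of such a loop (the pod-N naming is made up); real controllers also watch the API server, track Pod readiness and apply their own rules when choosing which Pods to delete.

```python
def reconcile(desired_replicas, running_pods, next_id):
    """One simplified pass of a ReplicaSet-style control loop.

    desired_replicas: replica count from the controller spec.
    running_pods: names of the Pods currently matching the controller's selector.
    next_id: counter used to name new Pods (hypothetical naming scheme).
    Returns (pods_to_create, pods_to_delete).
    """
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few Pods, e.g. after a node failure: create replacements.
        return [f"pod-{next_id + i}" for i in range(diff)], []
    if diff < 0:
        # Too many Pods, e.g. after scaling down: delete the surplus.
        # Which ones to delete is arbitrary here (the last names in sorted order).
        return [], sorted(running_pods)[diff:]
    return [], []
```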

2、What are Deployments and Services

3、Why Services matter

1、Deploy nginx

kubectl create deployment nginx --image=nginx

2、Expose nginx

kubectl expose deployment nginx --port=80 --type=NodePort

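A Service provides a unified entry point for accessing an application;

a Service manages a group of Pods;

it keeps Pods from being lost track of (service discovery) and defines the access policy for a group of Pods.

Here the Service is exposed as a NodePort, so the Pod can be reached through that port on every node; however, if the node a client is using goes down, that client loses access.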

4、labels and selectors
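Services and Deployments find their Pods through labels: kubectl create deployment nginx labels the Pods app=nginx, and kubectl expose reuses the Deployment's selector. The sketch below shows the equality-based matchLabels rule in Python: every key/value pair in the selector must be present on the Pod, while extra Pod labels are allowed. The Pod names and labels in the usage are made up.

```python
def matches(selector, labels):
    """matchLabels semantics: every selector key/value must be present on the Pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector, pods):
    """Return the names of the Pods (name -> labels) that the selector picks."""
    return [name for name, labels in pods.items() if matches(selector, labels)]


if __name__ == "__main__":
    pods = {
        "nginx-abc12": {"app": "nginx", "pod-template-hash": "abc"},
        "tomcat6-def34": {"app": "tomcat6"},
    }
    print(select({"app": "nginx"}, pods))
```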

5、Ingress

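Ingress discovers Pods through Services and associates with them, enabling access by domain name.

The Ingress Controller load-balances across Pods.

It supports layer-4 (TCP/UDP) and layer-7 (HTTP) load balancing.

Steps:

1)、Deploy the Ingress Controller

2)、Create the Ingress rule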

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web
spec:
  rules:
  - host: tomcat6.atguigu.com
    http:
      paths:
      - backend:
          serviceName: tomcat6
          servicePort: 80

If you also deploy tomcat8, observe the effect:

kubectl create deployment tomcat8 --image=tomcat:8.5.51-jdk8

kubectl expose deployment tomcat8 --port=88 --target-port=8080 --type=NodePort

Delete a given resource

kubectl delete xxx

Whichever node the domain name is mapped to, tomcat6/8 can be reached, because the ingress-controller routing tables on all nodes are synchronized.
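The routing decision depends only on the request's Host header, not on which node received the request, which is why any node works. A hypothetical Python sketch of that lookup: the tomcat6 entry mirrors the Ingress rule above, while the tomcat8.atguigu.com entry is an assumed extra rule (the manifest above does not define one) pointing at the port used in the expose command.

```python
# Host -> (Service name, Service port), as an Ingress controller might hold it.
# tomcat6 mirrors the rule above; tomcat8.atguigu.com is a hypothetical extra rule.
RULES = {
    "tomcat6.atguigu.com": ("tomcat6", 80),
    "tomcat8.atguigu.com": ("tomcat8", 88),
}


def route(host_header, rules=RULES):
    """Return the backend for a request's Host header, or None if no rule matches."""
    host = host_header.split(":", 1)[0].strip().lower()  # drop any :port suffix
    return rules.get(host)
```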

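6、Network model

The Kubernetes network model consists of four parts, from the inside out:

- 1、The network of the containers inside a Pod
- 2、The network the Pods are on
- 3、The network for communication between Pods and Services
- 4、The network for communication between the outside world and Services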

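4、Project deployment

Deployment workflow:

- Build the project image (package the project as a Docker image; you need to be comfortable writing Dockerfiles)
- Manage the Pods with a controller (just write the k8s YAML)
- Expose the application
- Monitor logs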

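五、MySQL cluster

1、How clustering works

The following are commonly used as database high-availability solutions in enterprises:

- MySQL-MMM (Master-Master Replication Manager for MySQL) is an open-source project written as Perl scripts and published on Google Code. MMM extends MySQL Replication and is mainly used to monitor master-master replication and perform failover. It works by mapping the real IPs (RIPs) of the database nodes to a set of virtual IPs (VIPs). The mysql-mmm monitor provides several VIPs, one writable VIP and multiple readable VIPs; under the monitor's management these VIPs are bound to available MySQL servers, and when one server goes down the monitor migrates its VIP to another. For the monitor to do this, authorized users must be added in MySQL: an mmm_monitor user and an mmm_agent user, plus an mmm_tools user if you want to use MMM's backup tools.

- MHA (Master High Availability) is currently a relatively mature MySQL high-availability solution. Developed by Yoshinori Matsunobu of Japan's DeNA (later at Facebook), it is a solid tool for failover and master promotion in MySQL HA environments. During a MySQL failure, MHA can complete the database failover automatically within 0 to 30 seconds (too slow by 2019 standards), and while doing so it preserves data consistency as far as possible, achieving high availability in the true sense.

- InnoDB Cluster supports automatic failover, strong consistency, read/write splitting, highly available read replicas, load balancing of read requests and horizontal scaling, which makes it a fairly complete solution. However, it is complex to deploy, and removing the single point of failure at the router requires additional components; consider it if no better option is available. InnoDB Cluster consists mainly of MySQL Shell, MySQL Router and a cluster of MySQL servers, which together provide a complete high-availability solution for MySQL. MySQL Shell gives administrators an interface for conveniently configuring and managing the cluster; MySQL Router initializes itself automatically from the deployed cluster and is the instance clients connect to. If a node goes down, the cluster updates its configuration automatically. The cluster supports single-primary and multi-primary modes. In single-primary mode, if the primary goes down a secondary takes over automatically, and MySQL Router detects the change and connects clients to the new node.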

2、Simulating a MySQL master-slave replication cluster with Docker

1、Download the MySQL image (docker pull mysql:5.7)

2、Create and start the master instance

docker run -p 3307:3306 --name mysql-master \

-v /mydata/mysql/master/log:/var/log/mysql \

-v /mydata/mysql/master/data:/var/lib/mysql \

-v /mydata/mysql/master/conf:/etc/mysql \

-e MYSQL_ROOT_PASSWORD=root \

-d mysql:5.7

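Parameter notes

-p 3307:3306: map the container's port 3306 to port 3307 on the host

-v /mydata/mysql/master/conf:/etc/mysql: mount the configuration directory on the host

-v /mydata/mysql/master/log:/var/log/mysql: mount the log directory on the host

-v /mydata/mysql/master/data:/var/lib/mysql/: mount the data directory on the host

-e MYSQL_ROOT_PASSWORD=root: initialize the root user's password

Edit the master's basic configuration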

vim /mydata/mysql/master/conf/my.cnf

[client]

default-character-set=utf8

[mysql]

default-character-set=utf8

[mysqld]

init_connect='SET collation_connection = utf8_unicode_ci'

init_connect='SET NAMES utf8'

character-set-server=utf8

collation-server=utf8_unicode_ci

skip-character-set-client-handshake

skip-name-resolve

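Note: be sure to add skip-name-resolve; otherwise connecting to MySQL is extremely slow, because the server performs a reverse DNS lookup for every client.

Append the master's replication settings after the configuration above: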

server_id=1

log-bin=mysql-bin

read-only=0

binlog-do-db=gulimall_ums

binlog-do-db=gulimall_pms

binlog-do-db=gulimall_oms

binlog-do-db=gulimall_sms

binlog-do-db=gulimall_wms

binlog-do-db=gulimall_admin

replicate-ignore-db=mysql

replicate-ignore-db=sys

replicate-ignore-db=information_schema

replicate-ignore-db=performance_schema

Restart the master (docker restart mysql-master).

3、Create and start the slave instance

docker run -p 3317:3306 --name mysql-slaver-01 \
-v /mydata/mysql/slaver/log:/var/log/mysql \
-v /mydata/mysql/slaver/data:/var/lib/mysql \
-v /mydata/mysql/slaver/conf:/etc/mysql \
-e MYSQL_ROOT_PASSWORD=root \
-d mysql:5.7

Edit the basic configuration of the slave:

vim /mydata/mysql/slaver/conf/my.cnf

[client]
default-character-set=utf8

[mysql]
default-character-set=utf8

[mysqld]
# One statement sets both the character set and the collation
# (a second init_connect line would simply override the first)
init_connect='SET NAMES utf8 COLLATE utf8_unicode_ci'
character-set-server=utf8
collation-server=utf8_unicode_ci
skip-character-set-client-handshake
skip-name-resolve

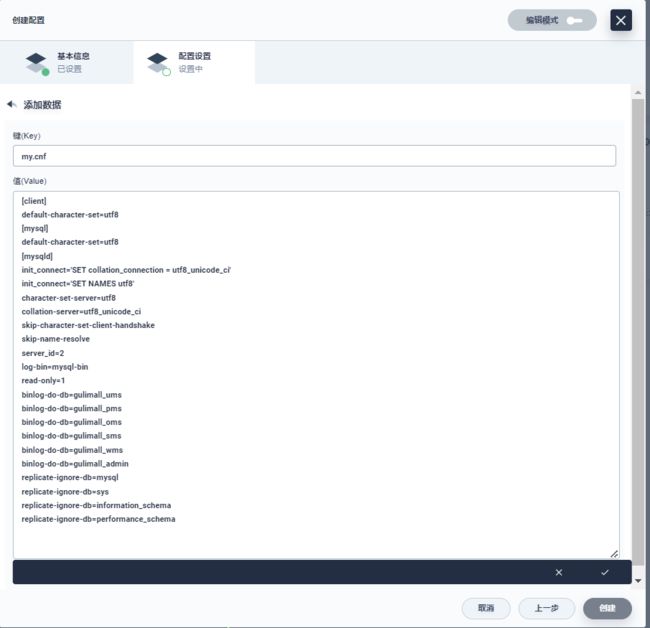

Then append the slave's replication settings after the lines above:

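# Must differ from the master's server_id
server_id=2
log-bin=mysql-bin
# Reject writes from users without the SUPER privilege
read-only=1
# Same binary-log filters as the master
binlog-do-db=gulimall_ums
binlog-do-db=gulimall_pms
binlog-do-db=gulimall_oms
binlog-do-db=gulimall_sms
binlog-do-db=gulimall_wms
binlog-do-db=gulimall_admin
# Do not apply replicated changes to the system schemas
replicate-ignore-db=mysql
replicate-ignore-db=sys
replicate-ignore-db=information_schema
replicate-ignore-db=performance_schema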

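Restart the slave:

docker restart mysql-slaver-01

4. Grant a user on the master for replicating its data

1. Enter the master container

docker exec -it mysql-master /bin/bash

2. Log in to MySQL (mysql -uroot -p), or connect with a client tool

1) Allow root to log in remotely (unrelated to replication; it just makes remote connections to MySQL convenient)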

grant all privileges on *.* to 'root'@'%' identified by 'root' with grant option;

flush privileges;

2) Create the user used for replication

GRANT REPLICATION SLAVE ON *.* TO 'backup'@'%' IDENTIFIED BY '123456';

3. Check the master status

show master status;
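The File and Position values shown here are what the slave needs later. Instead of copying them by hand, they can be read non-interactively. A sketch assuming the mysql client's batch output (`-N` skips column names, `-B` prints tab-separated rows); parse_master_status is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: read the output of
#   mysql -N -B -e 'SHOW MASTER STATUS'
# on stdin (tab-separated, no header row) and print "FILE POSITION".
# Empty input (e.g. binary logging disabled) produces no output.
parse_master_status() {
  awk -F '\t' 'NR == 1 { print $1, $2 }'
}
```

Usage: `docker exec mysql-master mysql -uroot -proot -N -B -e 'SHOW MASTER STATUS' | parse_master_status` prints the current binlog file name and position separated by a space.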

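5. Configure the slave to replicate data from the master

1. Enter the slave container

docker exec -it mysql-slaver-01 /bin/bash

2. Log in to MySQL (mysql -uroot -p)

1) Allow root to log in remotely (unrelated to replication; it just makes remote connections to MySQL convenient)

grant all privileges on *.* to 'root'@'%' identified by 'root' with grant option;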

flush privileges;

2) Configure the connection to the master, telling MySQL which node to replicate from

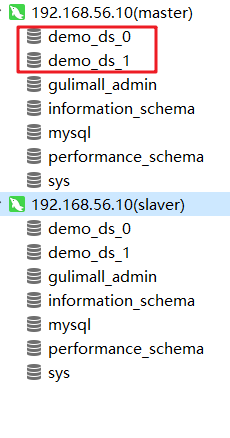

change master to master_host='192.168.56.10',master_user='backup',master_password='123456',master_log_file='mysql-bin.000001',master_log_pos=0,master_port=3307;

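master_host: the address of the host running the master container

master_port: the master's port (3307, the host port mapped in its docker run command)

master_user / master_password: the replication account created on the master

master_log_file: the File value from show master status on the master

master_log_pos: where to start reading in that file, normally the Position value from show master status; with 0, as above, the slave reads the file from its beginning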
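The change master to statement only combines values from earlier steps: the master's host and mapped port, the backup account, and File/Position from show master status. A minimal sketch that assembles it; change_master_sql is a hypothetical name, and because the values are inserted unescaped it assumes none of them contains a single quote:

```shell
#!/bin/sh
# Hypothetical helper: assemble the CHANGE MASTER TO statement for the slave.
# Usage: change_master_sql HOST PORT USER PASSWORD LOG_FILE LOG_POS
# Values are inserted verbatim, so they must not contain single quotes.
change_master_sql() {
  printf "CHANGE MASTER TO master_host='%s', master_port=%s, master_user='%s', master_password='%s', master_log_file='%s', master_log_pos=%s;\n" \
    "$1" "$2" "$3" "$4" "$5" "$6"
}
```

Usage: `docker exec mysql-slaver-01 mysql -uroot -proot -e "$(change_master_sql 192.168.56.10 3307 backup 123456 mysql-bin.000001 0)"` runs the same statement as above without an interactive session.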

3) Start replication on the slave

start slave;

4) Check the slave status

show slave status\G

Replication is working when both Slave_IO_Running and Slave_SQL_Running show Yes.
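show slave status reports replication health in two fields: Slave_IO_Running (the thread that fetches the master's binlog) and Slave_SQL_Running (the thread that applies it); replication is healthy only when both are Yes. A sketch of a check suitable for scripts or monitoring; slave_is_healthy is a hypothetical name:

```shell
#!/bin/sh
# Hypothetical helper: read `SHOW SLAVE STATUS\G` output on stdin and exit 0
# only if both replication threads report "Yes".
slave_is_healthy() {
  awk -F': ' '
    $1 ~ /^ *Slave_IO_Running$/  { io = $2 }
    $1 ~ /^ *Slave_SQL_Running$/ { sql = $2 }
    END { exit !(io == "Yes" && sql == "Yes") }'
}
```

Usage: `docker exec mysql-slaver-01 mysql -uroot -proot -e 'SHOW SLAVE STATUS\G' | slave_is_healthy && echo "replication OK"`. Anchoring the patterns to the whole field name keeps lines such as Slave_SQL_Running_State from being mistaken for the status fields.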

Master-slave replication is now configured.

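Summary:

1) The master and the slave each declare in their own configuration files which databases to replicate, which to ignore, and so on. Their server_id values must differ.

2) The master grants an account (user name and password) that is allowed to replicate its data.

3) The slave uses that account to connect to the master and replicate its data.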
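The requirement that server_id values differ is easy to break when the slave's configuration is copied from the master. A sketch of a pre-flight check over the two my.cnf files; server_ids_unique is a hypothetical name, and the check simply takes the last server_id (or server-id) line in each file, ignoring which [section] it belongs to:

```shell
#!/bin/sh
# Hypothetical helper: exit 0 only if every given option file sets a
# server_id (or server-id) and no two files use the same value.
# The last assignment in a file wins; [section] headers are ignored.
server_ids_unique() {
  seen=' '
  for f in "$@"; do
    id=$(awk -F= '$1 ~ /^[ \t]*server[-_]id[ \t]*$/ { v = $2 }
                  END { gsub(/[ \t]/, "", v); print v }' "$f")
    [ -n "$id" ] || return 1   # file does not set a server_id
    case "$seen" in
      *" $id "*) return 1 ;;   # value already used by an earlier file
    esac
    seen="$seen$id "
  done
  return 0
}
```

Usage: `server_ids_unique /mydata/mysql/master/conf/my.cnf /mydata/mysql/slaver/conf/my.cnf && echo "server_id values are distinct"`, run before restarting the containers.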

3. MyCat or ShardingSphere