[Cloud Native: Kubernetes] Pod Controllers

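Table of contents

- I. Introduction to Pod controllers
  - 1.1 Relationship between Pods and controllers
  - 1.2 Stateful versus stateless
  - 1.3 Common controller types
- II. Deployment (stateless)
- III. StatefulSet (stateful)
  - 3.1 Regular Services and headless Services
    - 3.1.1 Service types
    - 3.1.2 Headless Services
  - 3.2 StatefulSet
- IV. DaemonSet
- V. Job
- VI. CronJob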

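Introduction: a Pod controller, also called a workload, is the intermediate layer that manages Pods and keeps them in the desired state. When a Pod fails, a restart is attempted first; if restarting under the restart policy does not recover it, the controller creates a new Pod.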

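I. Introduction to Pod controllers

1.1 Relationship between Pods and controllers

Controllers are objects that manage and run containers on the cluster. A controller is associated with its Pods through a label selector.

Controllers carry out operational tasks for the Pods they manage, such as scaling and upgrades.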

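1.2 Stateful versus stateless

Workloads managed by Pod controllers are either stateful or stateless.

Stateful:

1. Instances differ from one another: each has its own identity and its own metadata, for example etcd or ZooKeeper.
2. Instances are not interchangeable, and the application depends on external storage.

Stateless:

1. A Deployment treats all of its Pods as identical.
2. Startup order does not matter.
3. It does not matter which node a Pod runs on.
4. The application can be scaled out and in freely.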

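1.3 Common controller types

| Controller | Description |
|---|---|
| ReplicaSet | Creates the specified number of Pod replicas on the user's behalf, keeps the replica count at the desired value, and supports scaling the count up or down |
| Deployment | Works on top of ReplicaSet and manages stateless applications; usually the first choice of controller. Supports rolling updates and rollbacks |
| DaemonSet | Ensures that every node in the cluster runs exactly one copy of a given Pod; typically used for system-level background tasks, such as the log collectors of an ELK stack. The managed service is stateless and runs as a daemon |
| StatefulSet | Manages stateful applications |
| Job | Runs to completion and then exits; no restart or re-creation is needed |
| CronJob | Runs tasks on a schedule; does not need to run continuously in the background |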

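II. Deployment (stateless)

- Deploys stateless applications
- Manages Pods and ReplicaSets
- Provides rollout, replica configuration, rolling upgrade, and rollback
- Provides declarative updates, for example changing only the image
- Typical use case: web services

Example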

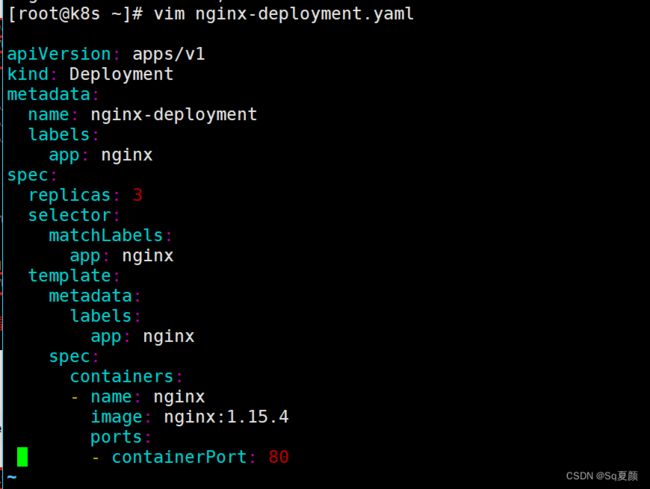

Create the manifest with `vim nginx-deployment.yaml`:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.15.4
            ports:
            - containerPort: 80

Apply the manifest, then list the Pods, the Deployment, and its ReplicaSet:

    kubectl apply -f nginx-deployment.yaml
    kubectl get pods,deploy,rs

To change the live object (for example the image), edit it in place:

    kubectl edit deployment/nginx-deployment
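The rolling upgrade behaviour mentioned in the feature list is controlled by `spec.strategy`. The manifest below is a minimal sketch rather than part of the original example: it reuses the nginx Deployment, and the `maxSurge` and `maxUnavailable` values and the newer image tag are illustrative assumptions.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  strategy:
    type: RollingUpdate        # the default strategy for Deployments
    rollingUpdate:
      maxSurge: 1              # assumed value: at most 1 extra Pod during the update
      maxUnavailable: 0        # assumed value: never drop below the desired replica count
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.16.1    # assumed newer tag; changing it triggers a rollout
        ports:
        - containerPort: 80
```

After re-applying the file, `kubectl rollout status deployment/nginx-deployment` follows the update, and `kubectl rollout undo deployment/nginx-deployment` returns to the previous revision.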

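III. StatefulSet (stateful)

- Deploys stateful applications
- Gives each Pod an independent lifecycle and preserves Pod startup order and uniqueness
- Stable, unique network identifiers and persistent storage (for example, an etcd configuration file records node addresses, so the member stops working if those addresses change)
- Ordered, graceful deployment and scaling, deletion and termination (for example, in a MySQL primary/replica setup the primary starts first, then the replicas)
- Ordered rolling updates
- Typical use case: databases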

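3.1 Regular Services and headless Services

- Service: an access policy for a group of Pods. It provides a cluster IP for communication inside the cluster, plus load balancing and service discovery.
- Headless Service: needs no cluster IP; its DNS name resolves directly to the IPs of the individual Pods.

3.1.1 Service types

- ClusterIP: the default type; reachable only from inside the cluster
- NodePort: exposes the Service on a port of every node's IP, taken from the node port range (30000-32767 by default)
- Headless: a ClusterIP Service with `clusterIP: None`
- HostPort (for example with ingress controllers, KubeSphere): strictly a container port setting rather than a Service type; it binds a port on the node where the Pod runs
- LoadBalancer: exposes the Service through an external load balancer (for example an F5 hardware load balancer)

Note: there are three main ways to expose an application in Kubernetes: Ingress, a load balancer outside the cluster (SLB/ALB, Nginx, HAProxy, Kong, Traefik, and so on), and Service.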

Create the manifest with `vim nginx-service.yaml`:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.20
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-svc
      labels:
        app: nginx
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 80
      selector:
        app: nginx

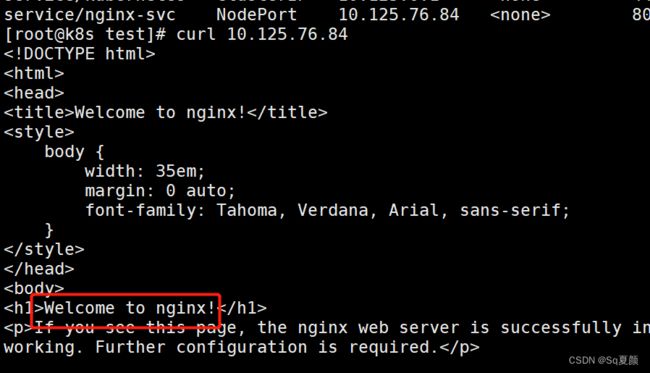

Apply the manifest and check the Service:

    kubectl apply -f nginx-service.yaml
    kubectl get svc
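When no `nodePort` is given, as in the Service above, Kubernetes picks one from the node port range. If a fixed port is preferred, it can be set explicitly. The following is a sketch, assuming port 30080 is free on the nodes and lies within the cluster's configured range (30000-32767 by default); the Service name `nginx-svc-fixed` is hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc-fixed      # hypothetical name, not from the original example
  labels:
    app: nginx
spec:
  type: NodePort
  ports:
  - port: 80                 # Service port inside the cluster
    targetPort: 80           # container port on the Pods
    nodePort: 30080          # assumed free port, opened on every node
  selector:
    app: nginx
```

The application would then be reachable at `http://<node-ip>:30080` from outside the cluster, provided the network allows traffic to that port.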

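3.1.2 Headless Services

Pod IP addresses are dynamic, so a headless Service is commonly used to reach Pods through DNS names, which keeps their addresses as stable as possible.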

Create the manifest with `vim headless.yaml`:

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
      - port: 80
        name: web
      clusterIP: None
      selector:
        app: nginx

Apply the manifest and check the Service:

    kubectl apply -f headless.yaml
    kubectl get svc

Next, define a Pod for testing with `vim dns-test.yaml`:

    apiVersion: v1
    kind: Pod
    metadata:
      name: dns-test
    spec:
      containers:
      - name: busybox
        image: busybox:1.28.4
        args:
        - /bin/sh
        - -c
        - sleep 36000
      restartPolicy: Never

Create the Pod, then open a shell inside it to run DNS lookups:

    kubectl create -f dns-test.yaml
    kubectl get svc
    # enter the Pod to resolve DNS names
    kubectl exec -it dns-test -- sh

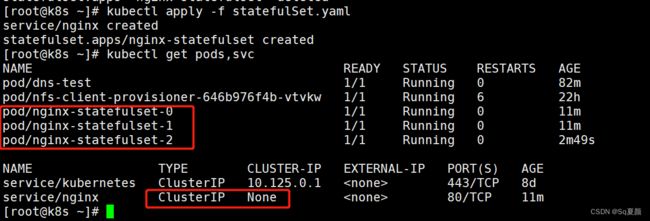

3.2 StatefulSet

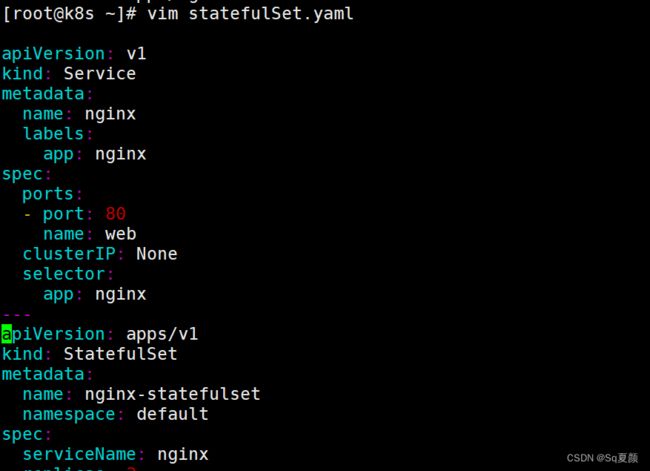

Create the file with `vim statefulSet.yaml`:

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
      - port: 80
        name: web
      clusterIP: None
      selector:
        app: nginx
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nginx-statefulset
      namespace: default
    spec:
      serviceName: nginx
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80

Create the resources and follow the Pod status:

    kubectl apply -f statefulSet.yaml
    # follow the Pod status in real time
    watch -n 1 kubectl get pod
    kubectl get pods,svc

Watching from a second terminal shows that the Pods are created in order, one at a time.

Resolve each Pod's unique domain name to its IP:

    kubectl apply -f dns-test.yaml
    kubectl exec -it dns-test -- sh
    # inside the Pod:
    nslookup nginx-statefulset-0.nginx
    nslookup nginx-statefulset-1.nginx
    nslookup nginx-statefulset-2.nginx

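Summary

The difference between a StatefulSet and a Deployment: StatefulSet Pods have an identity.

The three elements of that identity:

- Domain name: nginx-statefulset-0.nginx
- Hostname: nginx-statefulset-0
- Storage (PVC)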
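The StatefulSet example above covers the domain name and hostname but not the third element, storage. One way to give each Pod its own PersistentVolumeClaim is `volumeClaimTemplates`. The following is a minimal sketch, assuming the headless Service `nginx` from above exists and the cluster has a default StorageClass that can provision volumes; the volume name `www`, the mount path, the image tag, and the 1Gi size are illustrative choices. It is meant to replace the earlier StatefulSet rather than update it, since `volumeClaimTemplates` cannot be added to an existing StatefulSet.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nginx-statefulset
  namespace: default
spec:
  serviceName: nginx              # headless Service defined earlier
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.20         # assumed tag
        ports:
        - containerPort: 80
        volumeMounts:
        - name: www               # must match the claim template name below
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www                   # illustrative name
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi            # assumed size
```

Each replica gets a claim named after the template and the Pod (`www-nginx-statefulset-0`, and so on), and a rescheduled Pod is reattached to the same claim.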

IV. DaemonSet

- Runs one Pod on every node
- A node that joins the cluster later automatically gets a Pod as well
- Typical use case: agents

Example

Create the manifest with `vim daemonSet.yaml`:

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.15.4
            ports:
            - containerPort: 80

Apply the manifest and list the Pods:

    kubectl apply -f daemonSet.yaml
    kubectl get pods
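On many clusters (kubeadm-based ones, for instance) control-plane nodes carry a `NoSchedule` taint, so a DaemonSet like the one above would typically land only on worker nodes. If the agent should also run on control-plane nodes, a toleration can be added. The following is a sketch under that assumption; the name and label `nginx-daemonset` are hypothetical, and the older `master` taint key is included only for clusters that still use it.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-daemonset            # hypothetical name
  labels:
    app: nginx-daemonset
spec:
  selector:
    matchLabels:
      app: nginx-daemonset
  template:
    metadata:
      labels:
        app: nginx-daemonset
    spec:
      tolerations:
      # allow scheduling onto tainted control-plane nodes
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      # older clusters use the "master" key for the same taint
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: nginx
        image: nginx:1.15.4
        ports:
        - containerPort: 80
```

`kubectl get pods -o wide` shows which node each Pod was placed on.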

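V. Job

- Batch tasks come in two kinds: ordinary tasks (Job) and scheduled tasks (CronJob)
- A Job runs once, to completion
- Typical use cases: offline data processing, video decoding, and similar workloads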

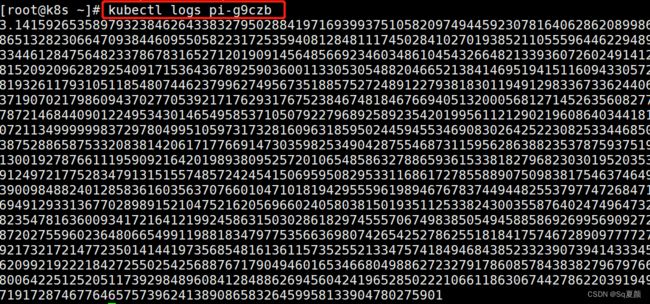

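Example

The retry limit (`backoffLimit`) defaults to 6; here it is set to 4. With `restartPolicy: Never`, a failed Pod is not restarted in place; the Job controller creates a new Pod instead, so the number of retries needs a limit.

Create the manifest with `vim job.yaml`:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: pi
    spec:
      template:
        spec:
          containers:
          - name: pi
            image: perl:5.34.0
            command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
          restartPolicy: Never
      backoffLimit: 4

Submit the Job, then read the output from its Pod (the `g9czb` suffix is generated and will differ on each run):

    kubectl apply -f job.yaml
    kubectl get pods
    kubectl logs pi-g9czb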
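The `pi` Job runs a single Pod once. For batch work such as the offline processing mentioned above, a Job can also run several Pods until a target number of completions is reached. The following is a minimal sketch; the Job name `batch-demo`, the busybox command, and the numbers are assumptions for illustration.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: batch-demo                 # hypothetical name
spec:
  completions: 6                   # the Job is done after 6 Pods succeed
  parallelism: 2                   # at most 2 Pods run at the same time
  backoffLimit: 4                  # give up after 4 failed retries
  template:
    spec:
      containers:
      - name: worker
        image: busybox:1.28.4
        # placeholder workload standing in for real processing
        command: ["sh", "-c", "echo processing one item; sleep 5"]
      restartPolicy: Never
```

`kubectl get job batch-demo` reports progress in its COMPLETIONS column while the Pods run.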

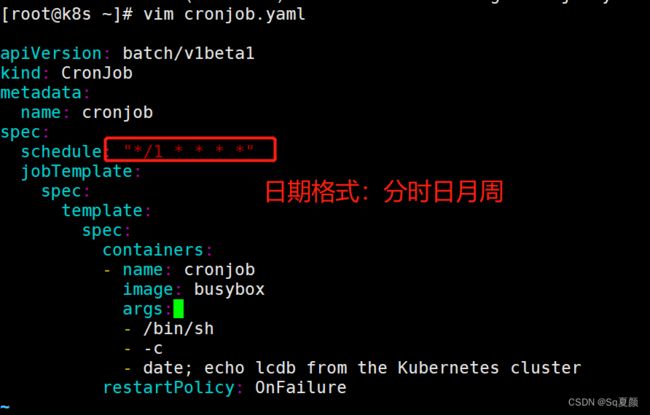

VI. CronJob

- Periodic tasks, like crontab on Linux
- Typical use cases: notifications, backups

Example

Create the manifest with `vim cronjob.yaml`:

    # batch/v1 since Kubernetes 1.21; batch/v1beta1 was removed in 1.25
    apiVersion: batch/v1
    kind: CronJob
    metadata:
      name: cronjob
    spec:
      schedule: "*/1 * * * *"
      jobTemplate:
        spec:
          template:
            spec:
              containers:
              - name: cronjob
                image: busybox
                args:
                - /bin/sh
                - -c
                - date; echo lcdb from the Kubernetes cluster
              restartPolicy: OnFailure

Apply the manifest:

    kubectl apply -f cronjob.yaml
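Because the schedule above fires every minute, runs can overlap if one takes longer than the interval, and finished Jobs accumulate over time. The fields below are one way to bound that. This is a sketch, assuming a cluster that serves `batch/v1` CronJobs (Kubernetes 1.21 or later); the name `cronjob-bounded`, the echoed message, and the chosen limits are illustrative.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob-bounded            # hypothetical name
spec:
  schedule: "*/1 * * * *"
  concurrencyPolicy: Forbid        # skip a run while the previous one is still active
  startingDeadlineSeconds: 30      # assumed: a run that cannot start within 30s is skipped
  successfulJobsHistoryLimit: 3    # keep the last 3 successful Jobs
  failedJobsHistoryLimit: 1        # keep the last failed Job
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: cronjob
            image: busybox
            args:
            - /bin/sh
            - -c
            - date; echo hello from the Kubernetes cluster
          restartPolicy: OnFailure
```

`kubectl get cronjob,jobs` lists the schedule together with the Jobs it has spawned.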