Cache management with eventual data consistency using Spring Boot, canal, Redis, Spring Cache, and RocketMQ

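Conclusion first: in the end I gave in. This approach only supports single-table queries. Endpoints that query across joined tables cannot be guaranteed eventually consistent unless you add a scheduled job that validates the cache.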

Please credit the source when reposting.

Preface

1. Why use Redis

2. What Spring Cache is

3. Basic Linux operations

4. How to use Docker

None of these are covered here, because such basics are not the focus of this post.

I. Installing canal on Docker

1. Start with the official image

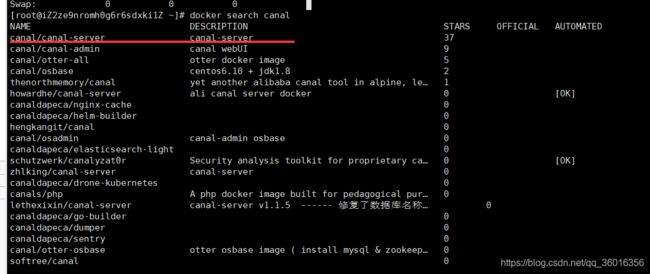

docker search canal

Pull the image shown in the screenshot: docker pull canal/canal-server

2. Next, take the brute-force route and start a throwaway container

docker run -itd --name fuck canal/canal-server

The only purpose of this container is to copy the configuration files out of it.

Pick a directory on the host to copy them into. Mine is

/home/fd/wycplus/canal

Then copy the files:

docker cp fuck:/home/admin/canal-server/conf /home/fd/wycplus/canal/

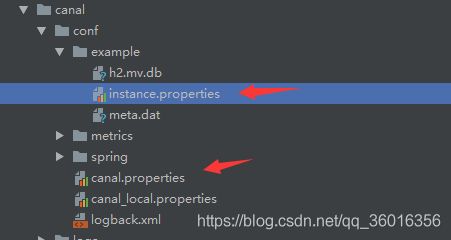

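3. canal has two important configuration files (screenshots below):

canal.properties (the system-level root configuration)

instance.properties (instance-level configuration, one per instance)

4. First, what the settings in these files mean (all screenshots)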

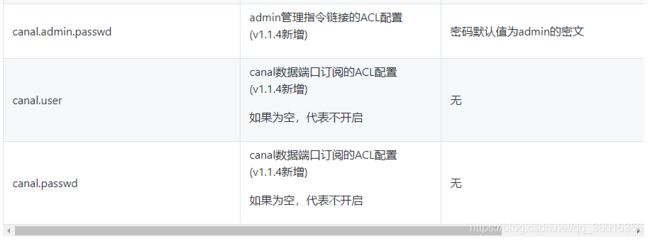

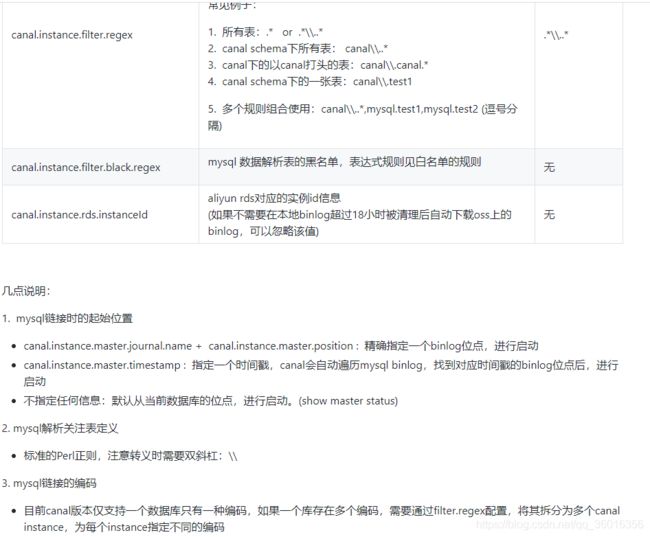

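The official parameter reference follows (it is long, so keep scrolling if you are not interested).

It covers canal.properties first and then instance.properties. You can also read it directly on GitHub.

The address is: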

https://github.com/alibaba/canal/wiki/AdminGuide

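About instance.properties:

a. Once canal.destinations is defined in canal.properties, a directory with the same name must be created for each destination under the directory that canal.conf.dir points to.

For example:

canal.destinations = example1,example2

In this case you need to create two directories, example1 and example2, each containing its own instance.properties.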
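The directory rule above can be sketched in plain Java. This is only an illustration: the class `CanalInstanceLayout` and the method `instanceConfigPaths` are hypothetical names, and the code merely derives the expected paths without touching the file system or canal itself.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

public class CanalInstanceLayout {

    /**
     * Returns the instance.properties path expected for each destination
     * listed in a canal.destinations value (comma-separated).
     */
    public static List<Path> instanceConfigPaths(String confDir, String destinations) {
        List<Path> paths = new ArrayList<>();
        for (String name : destinations.split(",")) {
            String trimmed = name.trim();
            if (!trimmed.isEmpty()) {
                // <confDir>/<destination>/instance.properties
                paths.add(Paths.get(confDir, trimmed, "instance.properties"));
            }
        }
        return paths;
    }

    public static void main(String[] args) {
        // The conf directory is the host path used earlier in this post.
        for (Path p : instanceConfigPaths("/home/fd/wycplus/canal/conf", "example1,example2")) {
            System.out.println(p);
        }
    }
}
```

Running `main` lists one path per destination, which is a quick way to check that every instance directory you expect actually has its own configuration file.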

If you skipped the configuration screenshots above, start here.

1. Edit canal.properties

Change the following:

canal.ip = 8.141.48.104

(MQ is not configured yet.)

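2. Edit instance.properties in the example directory

Change the following:

canal.instance.mysql.slaveId=12

# IP and port of your MySQL master

canal.instance.master.address=8.141.48.104:3339

One thing I almost forgot: binlog must be enabled on your master database (canal expects the ROW format).

For how to enable it, see my earlier article on master-slave replication. I won't repeat it here.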

canal.instance.dbUsername=canal

canal.instance.dbPassword=canal

canal.instance.connectionCharset = UTF-8

canal.instance.filter.regex=.*\\..*

canal.instance.filter.black.regex=mysql\\.slave_.*

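These are the database user name and password. Of course, the user has to be created on the master database first:

mysql -uroot -proot

# Create the account (user: canal, password: canal)

CREATE USER canal IDENTIFIED BY 'canal';

# Grant privileges

GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';

# Reload the privilege tables so the grants take effect

FLUSH PRIVILEGES;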

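**If my explanation of the configuration is unclear, just read the articles linked below. These settings only need minor tweaks and are not the focus of this post.**

Write docker-compose.yml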

version: "3.2"
services:
  canal:
    image: canal/canal-server
    container_name: wyc_canal_01
    volumes:
      - "/home/fd/wycplus/canal/conf:/home/admin/canal-server/conf"
      - "/home/fd/wycplus/canal/logs:/home/admin/canal-server/logs"
    ports: # 11110 admin, 11111 canal, 11112 metrics, 9100 exporter
      - "11110:11110"
      - "11111:11111"
      - "11112:11112"
      - "9100:9100"
    environment:
      - canal.instance.mysql.slaveId=12
      - canal.auto.scan=false
      - canal.destinations=example
      - canal.instance.master.address=8.141.48.104:3339
      - canal.instance.dbUsername=canal
      - canal.instance.dbPassword=canal
      # - canal.mq.topic=test
      - canal.instance.filter.regex=.*\\..*

Then run docker-compose up -d to create the canal container.

If anything above is unclear, these two articles may also help.

Installing canal with docker-compose:

https://blog.csdn.net/feichen2016/article/details/100299806?utm_medium=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-2.control&depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-2.control

Official documentation:

https://github.com/alibaba/canal/wiki/Docker-QuickStart

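Now for the important part.

If you are not familiar with consistency between a cache and MySQL, read this article first.

A primer on data consistency between Redis and MySQL: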

https://zhuanlan.zhihu.com/p/91770135

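II. What I want to do

1. Use Spring Cache to add and evict cache entries at the endpoint level (note that cached entries are never updated in place)

2. Use canal to clear stale data from Redis

3. Customize Spring Cache to support a TTL

III. Adding and evicting endpoint-level cache entries with Spring Cache

1. First, add the dependencies to the pom file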

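<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
</dependency>
<dependency>
    <groupId>com.alibaba.otter</groupId>
    <artifactId>canal.client</artifactId>
    <version>1.1.0</version>
</dependency>
<dependency>
    <groupId>com.fasterxml.jackson.datatype</groupId>
    <artifactId>jackson-datatype-jsr310</artifactId>
</dependency>
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-core</artifactId>
    <version>2.10.1</version>
</dependency>
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-databind</artifactId>
    <version>2.10.1</version>
</dependency>
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-annotations</artifactId>
    <version>2.10.1</version>
</dependency>
<dependency>
    <groupId>org.apache.commons</groupId>
    <artifactId>commons-pool2</artifactId>
</dependency>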

2. Add the Redis settings to application.yml

spring:
  redis:
    host: 8.141.48.104
    port: 6379
    ssl: false
    database: 0
    password:
    timeout: 2000
    lettuce:
      pool:
        # Maximum number of idle connections
        maxIdle: 8
        # Maximum number of active connections
        maxActive: 20
  cache:
    type: redis

3. Configure redisTemplate and redisCacheManager

@Configuration
public class BaseRedisConfig {

    @Bean
    public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory redisConnectionFactory) {
        RedisSerializer<Object> serializer = redisSerializer();
        RedisTemplate<String, Object> redisTemplate = new RedisTemplate<>();
        redisTemplate.setConnectionFactory(redisConnectionFactory);
        redisTemplate.setKeySerializer(new StringRedisSerializer());
        redisTemplate.setValueSerializer(serializer);
        redisTemplate.setHashKeySerializer(new StringRedisSerializer());
        redisTemplate.setHashValueSerializer(serializer);
        redisTemplate.afterPropertiesSet();
        return redisTemplate;
    }

    @Bean
    public RedisSerializer<Object> redisSerializer() {
        // Create the JSON serializer
        Jackson2JsonRedisSerializer<Object> serializer = new Jackson2JsonRedisSerializer<>(Object.class);
        ObjectMapper objectMapper = new ObjectMapper();
        // The next two lines make the Java 8 date/time API serializable
        objectMapper.disable(SerializationFeature.WRITE_DATES_AS_TIMESTAMPS);
        objectMapper.registerModule(new JavaTimeModule());
        // Make fields of every visibility eligible for serialization and deserialization
        objectMapper.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);
        // Embed type information for non-final classes.
        // This is required; otherwise JSON is read back as a Map instead of the original type.
        objectMapper.activateDefaultTyping(LaissezFaireSubTypeValidator.instance, ObjectMapper.DefaultTyping.NON_FINAL);
        serializer.setObjectMapper(objectMapper);
        return serializer;
    }

    @Bean
    public RedisCacheManager redisCacheManager(RedisConnectionFactory redisConnectionFactory) {
        RedisCacheWriter redisCacheWriter = RedisCacheWriter.nonLockingRedisCacheWriter(redisConnectionFactory);
        // Default TTL for Redis cache entries: 30 minutes
        RedisCacheConfiguration redisCacheConfiguration = RedisCacheConfiguration.defaultCacheConfig()
                .serializeValuesWith(RedisSerializationContext.SerializationPair.fromSerializer(redisSerializer()))
                .entryTtl(Duration.ofMinutes(30));
        return new EnableTTLRedisCacheManager(redisCacheWriter, redisCacheConfiguration);
    }

    @Bean
    public RedisService redisService() {
        return new RedisServiceImpl();
    }
}

4. Write a custom RedisCache subclass

public class CustomizedRedisCache extends RedisCache {

    private final String name;
    private final RedisCacheWriter cacheWriter;
    private final ConversionService conversionService;

    /**
     * Create new {@link RedisCache}.
     *
     * @param name must not be {@literal null}.
     * @param cacheWriter must not be {@literal null}.
     * @param cacheConfig must not be {@literal null}.
     */
    protected CustomizedRedisCache(String name, RedisCacheWriter cacheWriter, RedisCacheConfiguration cacheConfig) {
        super(name, cacheWriter, cacheConfig);
        this.name = name;
        this.cacheWriter = cacheWriter;
        this.conversionService = cacheConfig.getConversionService();
    }

    @Override
    public void evict(Object key) {
        if (key instanceof String) {
            String keyString = key.toString();
            // "abc*": evict every key that starts with "abc"
            if (keyString.endsWith("*")) {
                evictLikePrefix(keyString);
                return;
            }
            // "*abc": evict every key that ends with "abc"
            if (keyString.startsWith("*")) {
                evictLikeSuffix(keyString);
                return;
            }
        }
        super.evict(key);
    }

    /**
     * Prefix match: the key ends with a wildcard, for example "abc*".
     *
     * @param key key pattern with a trailing "*"
     */
    public void evictLikePrefix(String key) {
        byte[] pattern = this.conversionService.convert(this.createCacheKey(key), byte[].class);
        this.cacheWriter.clean(this.name, pattern);
    }

    /**
     * Suffix match: the key starts with a wildcard, for example "*abc".
     *
     * @param key key pattern with a leading "*"
     */
    public void evictLikeSuffix(String key) {
        byte[] pattern = this.conversionService.convert(this.createCacheKey(key), byte[].class);
        this.cacheWriter.clean(this.name, pattern);
    }
}
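To make the wildcard behaviour easier to picture, here is a minimal in-memory sketch of the same idea. It is not the Spring Data Redis implementation: `WildcardEvictSketch` is a hypothetical class, a `TreeMap` stands in for Redis, and the only thing borrowed from the real setup is the `cacheName::key` layout that Spring's default cache key prefix produces.

```java
import java.util.Map;
import java.util.TreeMap;

public class WildcardEvictSketch {

    // Stand-in for Redis: full key ("cacheName::key") -> cached value.
    private final Map<String, Object> store = new TreeMap<>();

    public void put(String cacheName, String key, Object value) {
        store.put(cacheName + "::" + key, value);
    }

    public int size() {
        return store.size();
    }

    /** Evicts by exact key, by "prefix*", or by "*suffix"; returns how many entries were removed. */
    public int evict(String cacheName, String key) {
        String namespace = cacheName + "::";
        int before = store.size();
        if (key.endsWith("*")) {
            // Prefix match: everything in this cache whose key starts with the given head.
            String head = namespace + key.substring(0, key.length() - 1);
            store.keySet().removeIf(k -> k.startsWith(head));
        } else if (key.startsWith("*")) {
            // Suffix match: everything in this cache whose key ends with the given tail.
            String tail = key.substring(1);
            store.keySet().removeIf(k -> k.startsWith(namespace)
                    && k.substring(namespace.length()).endsWith(tail));
        } else {
            store.remove(namespace + key);
        }
        return before - store.size();
    }

    public static void main(String[] args) {
        WildcardEvictSketch cache = new WildcardEvictSketch();
        cache.put("item", "findList1", "a");
        cache.put("item", "findList2", "b");
        cache.put("item", "getById1", "c");
        System.out.println("evicted: " + cache.evict("item", "findList*"));
        System.out.println("remaining: " + cache.size());
    }
}
```

Both wildcard forms stay inside one cache name, so evicting `findList*` in `item` never touches a cache called `other`.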

5. Write a custom RedisCacheManager subclass

public class EnableTTLRedisCacheManager extends RedisCacheManager {

    public EnableTTLRedisCacheManager(RedisCacheWriter cacheWriter, RedisCacheConfiguration defaultCacheConfiguration) {
        super(cacheWriter, defaultCacheConfiguration);
    }

    @Override
    protected RedisCache createRedisCache(String name, RedisCacheConfiguration cacheConfig) {
        // A cache name of the form "name#seconds" carries its own TTL
        String[] split = name.split("#");
        String cacheName = split[0];
        if (split.length > 1) {
            long ttl = Long.parseLong(split[1]);
            cacheConfig = cacheConfig.entryTtl(Duration.ofSeconds(ttl));
        }
        return super.createRedisCache(cacheName, cacheConfig);
    }
}
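The `name#seconds` convention used by the cache manager can be isolated into a small parser, which makes it easy to test without Redis. The sketch below is an assumption-laden variant rather than the code above: `TtlCacheName` is a hypothetical class, and unlike the `split("#")` version it rejects a malformed or non-positive TTL instead of failing later.

```java
import java.time.Duration;
import java.util.Optional;

public class TtlCacheName {

    private final String name;
    private final Duration ttl; // null when the cache name carries no "#seconds" suffix

    private TtlCacheName(String name, Duration ttl) {
        this.name = name;
        this.ttl = ttl;
    }

    /** Parses "cacheName" or "cacheName#seconds". */
    public static TtlCacheName parse(String raw) {
        int idx = raw.indexOf('#');
        if (idx < 0) {
            return new TtlCacheName(raw, null);
        }
        String name = raw.substring(0, idx);
        long seconds;
        try {
            seconds = Long.parseLong(raw.substring(idx + 1).trim());
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("Invalid TTL in cache name: " + raw, e);
        }
        if (seconds <= 0) {
            throw new IllegalArgumentException("TTL must be positive in cache name: " + raw);
        }
        return new TtlCacheName(name, Duration.ofSeconds(seconds));
    }

    public String name() {
        return name;
    }

    public Optional<Duration> ttl() {
        return Optional.ofNullable(ttl);
    }

    public static void main(String[] args) {
        TtlCacheName parsed = TtlCacheName.parse("vehicle_checking_item#300");
        System.out.println(parsed.name() + " -> " + parsed.ttl());
    }
}
```

When `ttl()` is empty, the caller would fall back to the manager's default configuration, which is 30 minutes in this post.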

6. Configure a custom key generator

@Configuration
public class RedisKeyGenerateConfig {

    @Bean("fdKeyGenerator")
    public KeyGenerator getFdKeyGenerator() {
        return new KeyGenerator() {
            @Override
            public Object generate(Object target, Method method, Object... params) {
                // Key = method name followed by the comma-separated parameter values
                StringBuilder key = new StringBuilder(method.getName());
                for (int i = 0; i < params.length; i++) {
                    if (i > 0) {
                        key.append(',');
                    }
                    // String.valueOf tolerates null parameters
                    key.append(String.valueOf(params[i]));
                }
                System.out.println("key: " + key);
                return key.toString();
            }
        };
    }
}
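The key format is easier to reason about outside Spring. The following sketch mirrors the generator in plain Java so the resulting keys can be inspected directly. `MethodKeySketch` is a hypothetical helper, and rendering nested arrays with `Arrays.deepToString` is my own assumption, not something the generator above does.

```java
import java.util.Arrays;
import java.util.stream.Collectors;

public class MethodKeySketch {

    /** Builds "methodName" + comma-separated parameter values, tolerating nulls. */
    public static String generate(String methodName, Object... params) {
        String joined = Arrays.stream(params)
                .map(MethodKeySketch::render)
                .collect(Collectors.joining(","));
        return methodName + joined;
    }

    private static String render(Object param) {
        if (param instanceof Object[]) {
            // Arrays have no useful toString(), so print their contents instead.
            return Arrays.deepToString((Object[]) param);
        }
        return String.valueOf(param);
    }

    public static void main(String[] args) {
        System.out.println(generate("findCheckItemList", "page=1", 20));
    }
}
```

Keys are only stable if every parameter has a meaningful `toString()`. A request VO that inherits `Object.toString()` yields a different key for each instance, so such classes should override it.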

7. Write the canal client listener

@Service
public class SimpleCanalClientExampleService implements CommandLineRunner {

    @Autowired
    private RedisTemplate<String, Object> redisTemplate;

    @Autowired
    RedisService redisService;

    // canal runs on the same host as Redis in this setup
    @Value("${spring.redis.host}")
    private String ip;

    private int port = 11111;

    // Table-name prefix that is stripped to obtain the cache name
    private final String prefix = "wyc_";

    public void execute() {
        CanalConnector connector = CanalConnectors.newSingleConnector(
                new InetSocketAddress(ip, port), "example", "canal", "canal");
        int batchSize = 2;
        int emptyCount = 0;
        try {
            connector.connect();
            connector.subscribe(".*\\..*");
            connector.rollback();
            int totalEmptyCount = 12000;
            while (emptyCount < totalEmptyCount) {
                Message message = connector.getWithoutAck(batchSize); // fetch up to batchSize entries
                long batchId = message.getId();
                int size = message.getEntries().size();
                if (batchId == -1 || size == 0) {
                    emptyCount++;
                    System.out.println("empty count : " + emptyCount);
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        // Restore the interrupt flag and stop polling
                        Thread.currentThread().interrupt();
                        return;
                    }
                } else {
                    emptyCount = 0;
                    System.out.printf("message[batchId=%s,size=%s] \n", batchId, size);
                    printEntry(message.getEntries());
                }
                connector.ack(batchId); // acknowledge the batch
                // connector.rollback(batchId); // on failure, roll the batch back
            }
            System.out.println("empty too many times, exit");
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            connector.disconnect();
        }
    }

    private void printEntry(List<Entry> entrys) {
        for (Entry entry : entrys) {
            if (entry.getEntryType() == EntryType.TRANSACTIONBEGIN || entry.getEntryType() == EntryType.TRANSACTIONEND) {
                continue;
            }
            RowChange rowChange;
            try {
                rowChange = RowChange.parseFrom(entry.getStoreValue());
            } catch (Exception e) {
                throw new RuntimeException("ERROR ## parser of eromanga-event has an error , data:" + entry.toString(), e);
            }
            EventType eventType = rowChange.getEventType();
            System.out.println(String.format("================> binlog[%s:%s] , name[%s,%s] , eventType : %s",
                    entry.getHeader().getLogfileName(), entry.getHeader().getLogfileOffset(),
                    entry.getHeader().getSchemaName(), entry.getHeader().getTableName(),
                    eventType));
            String dbName = entry.getHeader().getSchemaName();
            if ("wyc_test".equals(dbName)) {
                String tableName = entry.getHeader().getTableName();
                if (eventType == EventType.DELETE) {
                    deleteRedis(tableName);
                } else if (eventType == EventType.INSERT) {
                    insertRedis(tableName);
                } else {
                    updateRedis(tableName);
                }
            }
            // Row-level handling, kept for reference:
            // for (RowData rowData : rowChange.getRowDatasList()) {
            //     if (eventType == EventType.DELETE) {
            //         printColumn(rowData.getBeforeColumnsList());
            //         deleteRedis(rowData.getBeforeColumnsList());
            //     } else if (eventType == EventType.INSERT) {
            //         printColumn(rowData.getAfterColumnsList());
            //         insertRedis(rowData.getAfterColumnsList());
            //     } else {
            //         System.out.println("-------> before");
            //         printColumn(rowData.getBeforeColumnsList());
            //         System.out.println("-------> after");
            //         printColumn(rowData.getAfterColumnsList());
            //         updateRedis(rowData.getAfterColumnsList());
            //     }
            // }
        }
    }

    private void printColumn(List<Column> columns) {
        for (Column column : columns) {
            System.out.println(column.getName() + " : " + column.getValue() + " update=" + column.getUpdated());
        }
    }

    // Insert, delete, and update all do the same thing: drop every key cached for the table.
    private void insertRedis(String tableName) {
        evictTableCache(tableName, "insert");
    }

    private void deleteRedis(String tableName) {
        evictTableCache(tableName, "deleteRedis");
    }

    private void updateRedis(String tableName) {
        evictTableCache(tableName, "updateRedis");
    }

    private void evictTableCache(String tableName, String operation) {
        // Strip the table prefix so the remainder matches the cache name
        String cacheName = tableName.startsWith(prefix) ? tableName.substring(prefix.length()) : tableName;
        Set<String> keys = redisTemplate.keys(cacheName + "*");
        if (keys == null) {
            return;
        }
        for (String key : keys) {
            Boolean success = redisTemplate.delete(key);
            System.out.println(operation + " result: " + success);
        }
    }

    @Override
    public void run(String... args) throws Exception {
        // Poll on a dedicated thread so application startup is not blocked
        Thread worker = new Thread(this::execute, "canal-client");
        worker.setDaemon(true);
        worker.start();
    }
}
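Stripped of canal and Redis, the listener boils down to one rule: any row change on a table drops every cached key whose name starts with that table's cache name. A rough in-memory sketch of that rule follows. `TableEvictionSketch` and its methods are hypothetical names, a `ConcurrentHashMap` stands in for Redis, and the schema name `wyc_test` and prefix `wyc_` are taken from the listener.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class TableEvictionSketch {

    private static final String SCHEMA = "wyc_test";
    private static final String TABLE_PREFIX = "wyc_";

    // Stand-in for Redis: key -> cached value.
    private final Map<String, Object> redis = new ConcurrentHashMap<>();

    public void cache(String key, Object value) {
        redis.put(key, value);
    }

    public boolean contains(String key) {
        return redis.containsKey(key);
    }

    /** Handles a change event for schema.table; returns the number of evicted keys. */
    public int onTableChanged(String schema, String table) {
        if (!SCHEMA.equals(schema)) {
            return 0; // changes in other schemas are ignored
        }
        String cacheName = table.startsWith(TABLE_PREFIX)
                ? table.substring(TABLE_PREFIX.length())
                : table;
        int before = redis.size();
        // Equivalent in spirit to KEYS cacheName* followed by DEL on each match.
        redis.keySet().removeIf(key -> key.startsWith(cacheName));
        return before - redis.size();
    }

    public static void main(String[] args) {
        TableEvictionSketch sketch = new TableEvictionSketch();
        sketch.cache("vehicle_checking_item::findCheckItemList1", "page-1");
        sketch.cache("driver::findById7", "driver-7");
        System.out.println("evicted: " + sketch.onTableChanged("wyc_test", "wyc_vehicle_checking_item"));
        System.out.println("driver still cached: " + sketch.contains("driver::findById7"));
    }
}
```

One consequence of plain prefix matching is worth keeping in mind: a table named `wyc_vehicle` would also evict the `vehicle_checking_item` entries, because `vehicle` is a prefix of that cache name. It also explains the limitation stated at the top of this post, since a cached join result is only evicted when the one table its cache is named after changes.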

Finally, test it with one of my services

@Service
@Transactional(readOnly = true)
@CacheConfig(cacheNames = "vehicle_checking_item")
public class VehicleCheckingItemService extends CrudService {

    private static final String CACHE_NAME_VEHICLE_CHECKING_ITEM = "vehicle_checking_item";

    // Cache the result in Redis with a 300-second TTL; the key comes from fdKeyGenerator
    @Cacheable(value = (CACHE_NAME_VEHICLE_CHECKING_ITEM + "#300"), keyGenerator = "fdKeyGenerator")
    public CommonResult<Page<VehicleCheckingItem>> findCheckItemList(VehicleCheckingItemFindCheckItemListReqVO vo) {
        VehicleCheckingItem vehicleCheckingItem = new VehicleCheckingItem();
        BeanUtils.copyProperties(vo, vehicleCheckingItem);
        List<VehicleCheckingItem> list = dao.testFindList(vo);
        Page<VehicleCheckingItem> page = vo.getPage();
        page.setList(list);
        return new CommonResult<>(page);
    }

    // Evict every entry under the cache name
    @Transactional(readOnly = false)
    @CacheEvict(beforeInvocation = true, allEntries = true)
    public CommonResult updateTest(String newTopic, String oldTopic) {
        dao.updateTest(oldTopic, newTopic);
        return new CommonResult();
    }
}

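I'll write up what all of the above does in more detail later.

IV. Addendum: Spring Cache got too frustrating and its expiry setting is not intuitive enough, so I wrote my own aspect instead

Annotations for adding to and removing from the cache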

1. Annotation for adding to the cache

@Documented
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
public @interface CusCacheable {

    // Cache name; used as the name of the Redis hash
    String cacheName() default "";

    // Key; used as the field within the hash
    String key() default "";

    // Expiry in seconds; defaults to 5 minutes
    int expire() default 5 * 60;

    // Bean name of the key generator
    String keyGenerator() default "";
}

2. Annotation for removing from the cache

@Documented
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
public @interface CusCacheDel {

    // Cache name; used as the name of the Redis hash
    String cacheName();

    // Key; used as the field within the hash
    String key() default "";
}

3. Implement the caching aspect

/**
 * @ClassName CusCacheAspect
 * @Description Aspect that implements the CusCacheable and CusCacheDel annotations
 * @Author fd
 * @Date 2020/11/6 14:48
 * @Version 1.0
 */
@Aspect
@Component
public class CusCacheAspect {

    // A setting in the yml file selects the cache type: local or redis
    @Value("${spring.cache.type}")
    private String cacheType = "";

    @Pointcut("@annotation(com.jeesite.modules.redis.service.CusCacheable)")
    public void putPointCut() {
    }

    @Pointcut("@annotation(com.jeesite.modules.redis.service.CusCacheDel)")
    public void delPointCut() {
    }

    @Around(value = "putPointCut()")
    public Object executeAround(ProceedingJoinPoint joinPoint) throws Throwable {
        Method method = ((MethodSignature) joinPoint.getSignature()).getMethod();
        CusCacheable cusCacheable = method.getAnnotation(CusCacheable.class);
        String keyGeneratorBeanName = cusCacheable.keyGenerator();
        boolean useGen = StringUtils.isNotBlank(keyGeneratorBeanName);
        Object target = joinPoint.getTarget();
        Object[] args = joinPoint.getArgs();
        String cacheName = cusCacheable.cacheName();
        int expire = cusCacheable.expire();
        String key = cusCacheable.key();
        // Use the key generator when one is configured
        if (useGen) {
            KeyGenerator keyGenerator = SpringUtils.getBean(keyGeneratorBeanName);
            key = (String) keyGenerator.generate(target, method, args);
        }
        boolean isCloud = "redis".equals(cacheType);
        RedisService redisService = SpringUtils.getBean("redisServiceImpl");
        Object cacheResult = isCloud ? redisService.hGet(cacheName, key) : CacheUtils.get(cacheName, key);
        if (cacheResult != null) {
            return cacheResult;
        }
        // Cache miss: run the target method and let its exceptions reach the caller
        // instead of swallowing them and returning null
        Object proceedResult = joinPoint.proceed(args);
        if (proceedResult != null) {
            if (!isCloud) {
                CacheUtils.put(cacheName, key, proceedResult);
            } else {
                redisService.hSet(cacheName, key, proceedResult);
                // The expiry applies to the whole hash, not to a single field
                redisService.expire(cacheName, expire);
            }
        }
        return proceedResult;
    }

    @Before(value = "delPointCut()")
    public void executeBefore(JoinPoint joinPoint) {
        Method method = ((MethodSignature) joinPoint.getSignature()).getMethod();
        CusCacheDel cusCacheDel = method.getAnnotation(CusCacheDel.class);
        String cacheName = cusCacheDel.cacheName();
        boolean isCloud = "redis".equals(cacheType);
        if (isCloud) {
            RedisService redisService = SpringUtils.getBean("redisServiceImpl");
            boolean successDel = redisService.del(cacheName);
            System.out.println(successDel);
        } else {
            CacheUtils.removeCache(cacheName);
        }
    }
}
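The read path of the aspect is the classic cache-aside pattern: look in the cache, call the real method on a miss, store the result with an expiry. Here is a minimal sketch of that flow in plain Java so it can be exercised without Spring or Redis. Everything in it is an assumption for illustration: `CacheAsideSketch` is a hypothetical class, the clock is injected to make expiry testable, and the expiry is tracked per entry, whereas the aspect sets it on the whole Redis hash.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

public class CacheAsideSketch {

    private static final class Entry {
        final Object value;
        final long expiresAtMillis;

        Entry(Object value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> store = new ConcurrentHashMap<>();
    private final LongSupplier clock; // current time in milliseconds

    public CacheAsideSketch(LongSupplier clock) {
        this.clock = clock;
    }

    /** Returns the cached value, or loads it, caches it for expireSeconds, and returns it. */
    @SuppressWarnings("unchecked")
    public <T> T getOrLoad(String cacheName, String key, int expireSeconds, Supplier<T> loader) {
        String fullKey = cacheName + "::" + key;
        Entry hit = store.get(fullKey);
        if (hit != null && hit.expiresAtMillis > clock.getAsLong()) {
            return (T) hit.value;
        }
        // Exceptions from the loader propagate; nothing is cached in that case.
        T loaded = loader.get();
        if (loaded != null) {
            store.put(fullKey, new Entry(loaded, clock.getAsLong() + expireSeconds * 1000L));
        }
        return loaded;
    }

    /** Drops every entry under the cache name, like the delete annotation does. */
    public void evict(String cacheName) {
        String namespace = cacheName + "::";
        store.keySet().removeIf(k -> k.startsWith(namespace));
    }

    public static void main(String[] args) {
        CacheAsideSketch cache = new CacheAsideSketch(System::currentTimeMillis);
        String first = cache.getOrLoad("vehicle_checking_item", "findCheckItemList1", 300, () -> "loaded from DB");
        String second = cache.getOrLoad("vehicle_checking_item", "findCheckItemList1", 300, () -> "not called");
        System.out.println(first + " / " + second);
    }
}
```

The design point that matters most is the loader's failure path: a database error should surface to the caller rather than turn into a silent `null` response.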