Table of Contents

- model/region_proposal_network.py
  - def _enumerate_shifted_anchor_torch
  - class RegionProposalNetwork(nn.Module)
- model/faster_rcnn_vgg16.py
  - class VGG16RoIHead(nn.Module)
- model/faster_rcnn.py

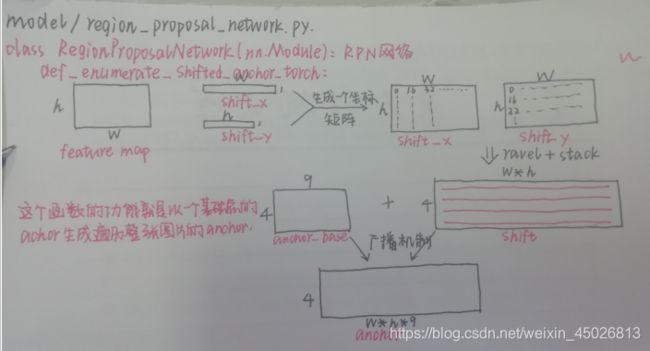

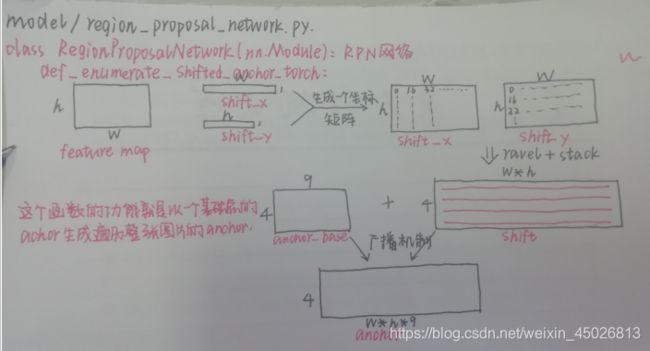

model/region_proposal_network.py

def _enumerate_shifted_anchor_torch:

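    import torch as t


    def _enumerate_shifted_anchor_torch(anchor_base, feat_stride, height, width):
        # Enumerate all shifted anchors: add the A base anchors, shape (1, A, 4),
        # to the K grid-cell shifts, shape (K, 1, 4), to get (K, A, 4) anchors,
        # then flatten them to (K * A, 4).
        anchor_base = t.as_tensor(anchor_base, dtype=t.float32)
        shift_y = t.arange(0, height * feat_stride, feat_stride)
        shift_x = t.arange(0, width * feat_stride, feat_stride)
        # indexing='ij' yields (height, width) grids, the same layout as
        # np.meshgrid(shift_x, shift_y).
        shift_y, shift_x = t.meshgrid(shift_y, shift_x, indexing='ij')
        shift = t.stack((shift_y.reshape(-1), shift_x.reshape(-1),
                         shift_y.reshape(-1), shift_x.reshape(-1)), dim=1)

        A = anchor_base.shape[0]
        K = shift.shape[0]
        anchor = anchor_base.reshape((1, A, 4)) + shift.reshape((K, 1, 4))
        anchor = anchor.reshape((K * A, 4)).float()
        return anchor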
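
To make the broadcasting above concrete, here is a minimal pure-Python sketch of the same enumeration. The function name `enumerate_shifted_anchors` and the two base anchors are made up for illustration; they are not the values the repository generates.

```python
def enumerate_shifted_anchors(anchor_base, feat_stride, height, width):
    """Return K * A anchors as [y_min, x_min, y_max, x_max] lists.

    K = height * width grid cells, A = len(anchor_base).
    """
    anchors = []
    for row in range(height):              # grid cells in row-major order
        for col in range(width):
            shift_y = row * feat_stride
            shift_x = col * feat_stride
            for y_min, x_min, y_max, x_max in anchor_base:
                anchors.append([y_min + shift_y, x_min + shift_x,
                                y_max + shift_y, x_max + shift_x])
    return anchors


# Hypothetical base anchors, chosen only to keep the numbers readable.
base = [[-8.0, -8.0, 8.0, 8.0], [-4.0, -16.0, 4.0, 16.0]]
anchors = enumerate_shifted_anchors(base, feat_stride=16, height=2, width=3)
print(len(anchors))   # 2 * 3 cells * 2 base anchors = 12
print(anchors[2])     # first base anchor at cell (row 0, col 1): [-8.0, 8.0, 8.0, 24.0]
```

The anchors of one cell are stored next to each other, so anchor `a` of cell `k` sits at index `k * A + a`.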

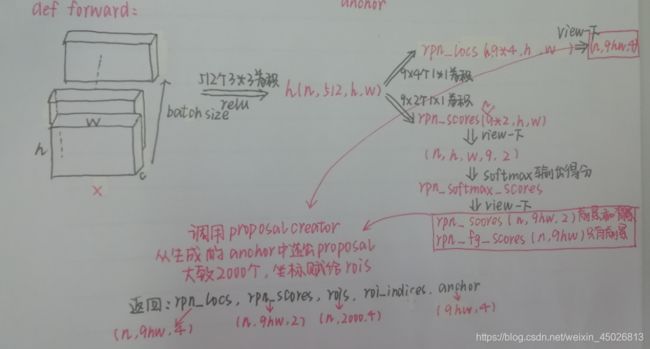

class RegionProposalNetwork(nn.Module):

    class RegionProposalNetwork(nn.Module):
        """Region Proposal Network introduced in Faster R-CNN.

        This is the Region Proposal Network introduced in Faster R-CNN [#]_.
        It takes features extracted from images and proposes
        class-agnostic bounding boxes around "objects".

        .. [#] Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun. \
        Faster R-CNN: Towards Real-Time Object Detection with \
        Region Proposal Networks. NIPS 2015.

        Args:
            in_channels (int): The channel size of the input.
            mid_channels (int): The channel size of the intermediate tensor.
            ratios (list of floats): The ratios of width to height of
                the anchors.
            anchor_scales (list of numbers): The scales of the anchors.
                Each anchor area is the product of the square of an element in
                :obj:`anchor_scales` and the original area of the reference
                window.
            feat_stride (int): Stride size after extracting features from an
                image.
            initialW (callable): Listed in the original docstring but not
                accepted by this ``__init__``; the layers below are initialized
                with ``normal_init(layer, 0, 0.01)`` instead.
            proposal_creator_params (dict): Keyword parameters for
                :class:`model.utils.creator_tools.ProposalCreator`.

        .. seealso::
            :class:`~model.utils.creator_tools.ProposalCreator`

        """

        def __init__(
                self, in_channels=512, mid_channels=512, ratios=[0.5, 1, 2],
                anchor_scales=[8, 16, 32], feat_stride=16,
                proposal_creator_params=dict(),
        ):
            super(RegionProposalNetwork, self).__init__()
            self.anchor_base = generate_anchor_base(
                anchor_scales=anchor_scales, ratios=ratios)
            self.feat_stride = feat_stride
            self.proposal_layer = ProposalCreator(self, **proposal_creator_params)
            n_anchor = self.anchor_base.shape[0]
            self.conv1 = nn.Conv2d(in_channels, mid_channels, 3, 1, 1)
            # Two scores (background, foreground) and four offsets per anchor.
            self.score = nn.Conv2d(mid_channels, n_anchor * 2, 1, 1, 0)
            self.loc = nn.Conv2d(mid_channels, n_anchor * 4, 1, 1, 0)
            normal_init(self.conv1, 0, 0.01)
            normal_init(self.score, 0, 0.01)
            normal_init(self.loc, 0, 0.01)
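
The channel counts of the two 1x1 heads follow directly from the number of base anchors. The sketch below works through that arithmetic. It assumes `generate_anchor_base` (not shown here) returns one anchor per (ratio, scale) pair; the helper name `rpn_head_sizes` and the 37 x 50 feature map are hypothetical.

```python
def rpn_head_sizes(ratios, anchor_scales, feat_h, feat_w):
    """Channel counts of the RPN heads and the total number of anchors."""
    # Assumption: one base anchor per (ratio, scale) combination.
    n_anchor = len(ratios) * len(anchor_scales)
    return {
        "n_anchor": n_anchor,
        "score_channels": n_anchor * 2,   # background / foreground per anchor
        "loc_channels": n_anchor * 4,     # four box offsets per anchor
        "total_anchors": feat_h * feat_w * n_anchor,
    }


sizes = rpn_head_sizes([0.5, 1, 2], [8, 16, 32], feat_h=37, feat_w=50)
print(sizes)
```

With the default arguments this gives 9 anchors per location, so the score head has 18 output channels and the loc head has 36.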

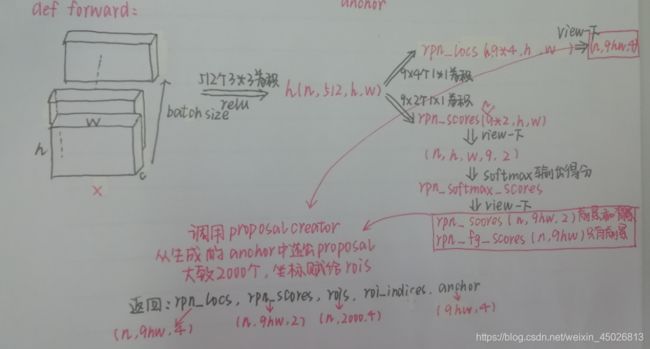

        def forward(self, x, img_size, scale=1.):
            """Forward Region Proposal Network.

            Here are notations.

            * :math:`N` is batch size.
            * :math:`C` is the channel size of the input.
            * :math:`H` and :math:`W` are height and width of the input feature.
            * :math:`A` is number of anchors assigned to each pixel.

            Args:
                x (~torch.autograd.Variable): The features extracted from images.
                    Its shape is :math:`(N, C, H, W)`.
                img_size (tuple of ints): A tuple :obj:`height, width`,
                    which contains image size after scaling.
                scale (float): The amount of scaling done to the input images after
                    reading them from files.

            Returns:
                (~torch.autograd.Variable, ~torch.autograd.Variable, array, array, array):

                This is a tuple of the following five values.

                * **rpn_locs**: Predicted bounding box offsets and scales for \
                    anchors. Its shape is :math:`(N, H W A, 4)`.
                * **rpn_scores**: Predicted foreground scores for \
                    anchors. Its shape is :math:`(N, H W A, 2)`.
                * **rois**: A bounding box array containing coordinates of \
                    proposal boxes. This is a concatenation of bounding box \
                    arrays from multiple images in the batch. \
                    Its shape is :math:`(R', 4)`. Given :math:`R_i` predicted \
                    bounding boxes from the :math:`i` th image, \
                    :math:`R' = \\sum _{i=1} ^ N R_i`.
                * **roi_indices**: An array containing indices of images to \
                    which RoIs correspond. Its shape is :math:`(R',)`.
                * **anchor**: Coordinates of enumerated shifted anchors. \
                    Its shape is :math:`(H W A, 4)`.

            """
            n, _, hh, ww = x.shape
            anchor = _enumerate_shifted_anchor(
                np.array(self.anchor_base),
                self.feat_stride, hh, ww)
            n_anchor = anchor.shape[0] // (hh * ww)
            h = F.relu(self.conv1(x))

            # (N, A * 4, H, W) -> (N, H, W, A * 4) -> (N, H * W * A, 4)
            rpn_locs = self.loc(h)
            rpn_locs = rpn_locs.permute(0, 2, 3, 1).contiguous().view(n, -1, 4)

            rpn_scores = self.score(h)
            rpn_scores = rpn_scores.permute(0, 2, 3, 1).contiguous()
            # Softmax over the (background, foreground) pair of each anchor.
            rpn_softmax_scores = F.softmax(rpn_scores.view(n, hh, ww, n_anchor, 2), dim=4)
            rpn_fg_scores = rpn_softmax_scores[:, :, :, :, 1].contiguous()
            rpn_fg_scores = rpn_fg_scores.view(n, -1)
            rpn_scores = rpn_scores.view(n, -1, 2)

            # Generate proposals image by image and remember which image
            # each RoI came from.
            rois = list()
            roi_indices = list()
            for i in range(n):
                roi = self.proposal_layer(
                    rpn_locs[i].cpu().data.numpy(),
                    rpn_fg_scores[i].cpu().data.numpy(),
                    anchor, img_size,
                    scale=scale)
                batch_index = i * np.ones((len(roi),), dtype=np.int32)
                rois.append(roi)
                roi_indices.append(batch_index)

            rois = np.concatenate(rois, axis=0)
            roi_indices = np.concatenate(roi_indices, axis=0)
            return rpn_locs, rpn_scores, rois, roi_indices, anchor
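
The `permute(0, 2, 3, 1)` followed by `view(n, -1, 4)` is what lines the predicted offsets up with the anchor order. The sketch below reproduces that index arithmetic for a single sample in pure Python, so no PyTorch is needed. Both helper names are hypothetical, and the "tensor" is a nested list indexed as `feature[channel][y][x]`.

```python
def loc_row_and_col(channel, y, x, width, n_anchor):
    """Map element (channel, y, x) of the (A * 4, H, W) conv output to its
    (row, col) position in the (H * W * A, 4) result."""
    anchor_idx, coord_idx = divmod(channel, 4)
    row = (y * width + x) * n_anchor + anchor_idx
    return row, coord_idx


def permute_and_view(feature):
    """Pure-Python stand-in for permute(1, 2, 0) plus view(-1, 4) on one sample."""
    n_ch, height, width = len(feature), len(feature[0]), len(feature[0][0])
    flat = [feature[c][y][x]
            for y in range(height) for x in range(width) for c in range(n_ch)]
    return [flat[i:i + 4] for i in range(0, len(flat), 4)]


n_anchor, height, width = 2, 2, 3
# Each element records its own (channel, y, x) so the mapping can be checked.
feature = [[[(c, y, x) for x in range(width)] for y in range(height)]
           for c in range(n_anchor * 4)]
rows = permute_and_view(feature)
row, col = loc_row_and_col(channel=5, y=1, x=2, width=width, n_anchor=n_anchor)
print(row, col)         # 11 1
print(rows[row][col])   # (5, 1, 2)
```

The row index `(y * W + x) * A + a` has the same form as the anchor index produced by the shifted-anchor enumeration, which is why row `i` of `rpn_locs` can be applied to anchor `i`.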

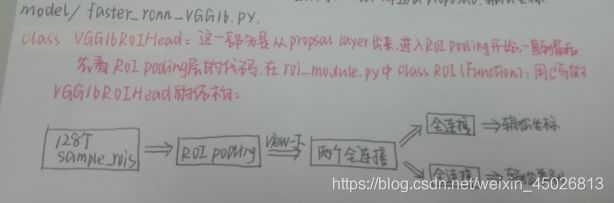

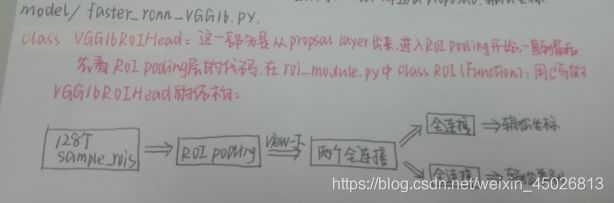
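
In `forward`, the foreground score passed to the proposal layer is index 1 of a softmax over each anchor's two logits. A minimal sketch of that computation for a single anchor, using only the standard library (the function name is hypothetical):

```python
import math


def foreground_probability(bg_logit, fg_logit):
    """Two-way softmax, returning the probability of the foreground class."""
    m = max(bg_logit, fg_logit)           # subtract the max for numerical stability
    e_bg = math.exp(bg_logit - m)
    e_fg = math.exp(fg_logit - m)
    return e_fg / (e_bg + e_fg)


print(foreground_probability(0.0, 0.0))   # 0.5
print(foreground_probability(-1.0, 2.0))
```

For two classes this is the same as a sigmoid applied to `fg_logit - bg_logit`, so only the difference between the two logits matters.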

model/faster_rcnn_vgg16.py

class VGG16RoIHead(nn.Module):

    class VGG16RoIHead(nn.Module):
        """Faster R-CNN Head for VGG-16 based implementation.

        This class is used as a head for Faster R-CNN.
        It outputs class-wise localizations and classification scores based on
        feature maps in the given RoIs.

        Args:
            n_class (int): The number of classes, possibly including the background.
            roi_size (int): Height and width of the feature maps after RoI pooling.
            spatial_scale (float): Scale by which RoI coordinates are multiplied
                to map them onto the feature map.
            classifier (nn.Module): Two-layer linear classifier ported from VGG-16.

        """

        def __init__(self, n_class, roi_size, spatial_scale,
                     classifier):
            super(VGG16RoIHead, self).__init__()

            self.classifier = classifier
            self.cls_loc = nn.Linear(4096, n_class * 4)
            self.score = nn.Linear(4096, n_class)

            normal_init(self.cls_loc, 0, 0.001)
            normal_init(self.score, 0, 0.01)

            self.n_class = n_class
            self.roi_size = roi_size
            self.spatial_scale = spatial_scale
            self.roi = RoIPooling2D(self.roi_size, self.roi_size, self.spatial_scale)

        def forward(self, x, rois, roi_indices):
            """Forward the chain.

            We assume that there are :math:`N` images in the batch.

            Args:
                x (Variable): 4D image variable.
                rois (Tensor): A bounding box array containing coordinates of
                    proposal boxes. This is a concatenation of bounding box
                    arrays from multiple images in the batch.
                    Its shape is :math:`(R', 4)`. Given :math:`R_i` proposed
                    RoIs from the :math:`i` th image,
                    :math:`R' = \\sum _{i=1} ^ N R_i`.
                roi_indices (Tensor): An array containing indices of images to
                    which bounding boxes correspond. Its shape is :math:`(R',)`.

            """
            # The inputs may be ndarrays, so convert both to float tensors.
            roi_indices = at.totensor(roi_indices).float()
            rois = at.totensor(rois).float()
            indices_and_rois = t.cat([roi_indices[:, None], rois], dim=1)
            # Reorder columns from (index, y_min, x_min, y_max, x_max)
            # to (index, x_min, y_min, x_max, y_max).
            xy_indices_and_rois = indices_and_rois[:, [0, 2, 1, 4, 3]]
            indices_and_rois = xy_indices_and_rois.contiguous()

            pool = self.roi(x, indices_and_rois)
            pool = pool.view(pool.size(0), -1)
            fc7 = self.classifier(pool)
            roi_cls_locs = self.cls_loc(fc7)
            roi_scores = self.score(fc7)
            return roi_cls_locs, roi_scores
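
The fancy index `[0, 2, 1, 4, 3]` swaps each y/x pair while leaving the image index in place. A small pure-Python sketch of the same reordering, assuming the incoming rows are laid out as (image index, y_min, x_min, y_max, x_max), which matches the anchor layout built earlier; the helper name `to_xy_order` is hypothetical.

```python
def to_xy_order(indices_and_rois):
    """Reorder (idx, y_min, x_min, y_max, x_max) rows to (idx, x_min, y_min, x_max, y_max)."""
    order = [0, 2, 1, 4, 3]
    return [[row[i] for i in order] for row in indices_and_rois]


rows = [[0, 10.0, 20.0, 110.0, 220.0],
        [1, 5.0, 6.0, 7.0, 8.0]]
print(to_xy_order(rows))
# [[0, 20.0, 10.0, 220.0, 110.0], [1, 6.0, 5.0, 8.0, 7.0]]
```

Applying the same permutation twice returns the original order, so it also converts back from xy to yx.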

    class RoI(Function):
        # Legacy-style autograd Function that keeps its state on the instance;
        # recent PyTorch releases expect static forward/backward methods instead.
        def __init__(self, outh, outw, spatial_scale):
            self.forward_fn = load_kernel('roi_forward', kernel_forward)
            self.backward_fn = load_kernel('roi_backward', kernel_backward)
            self.outh, self.outw, self.spatial_scale = outh, outw, spatial_scale

        def forward(self, x, rois):
            # The kernels receive raw data pointers, so inputs must be contiguous.
            x = x.contiguous()
            rois = rois.contiguous()
            self.in_size = B, C, H, W = x.size()
            self.N = N = rois.size(0)
            output = t.zeros(N, C, self.outh, self.outw).cuda()
            # Index of the max element per output cell, reused in backward.
            self.argmax_data = t.zeros(N, C, self.outh, self.outw).int().cuda()
            self.rois = rois
            args = [x.data_ptr(), rois.data_ptr(),
                    output.data_ptr(),
                    self.argmax_data.data_ptr(),
                    self.spatial_scale, C, H, W,
                    self.outh, self.outw,
                    output.numel()]
            stream = Stream(ptr=torch.cuda.current_stream().cuda_stream)
            self.forward_fn(args=args,
                            block=(CUDA_NUM_THREADS, 1, 1),
                            grid=(GET_BLOCKS(output.numel()), 1, 1),
                            stream=stream)
            return output

        def backward(self, grad_output):
            grad_output = grad_output.contiguous()
            B, C, H, W = self.in_size
            grad_input = t.zeros(self.in_size).cuda()
            stream = Stream(ptr=torch.cuda.current_stream().cuda_stream)
            args = [grad_output.data_ptr(),
                    self.argmax_data.data_ptr(),
                    self.rois.data_ptr(),
                    grad_input.data_ptr(),
                    self.N, self.spatial_scale, C, H, W, self.outh, self.outw,
                    grad_input.numel()]
            self.backward_fn(args=args,
                             block=(CUDA_NUM_THREADS, 1, 1),
                             grid=(GET_BLOCKS(grad_input.numel()), 1, 1),
                             stream=stream
                             )
            # No gradient flows to the RoI coordinates.
            return grad_input, None
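
Both kernel launches use a 1-D grid sized so that there is at least one thread per element. `CUDA_NUM_THREADS` and `GET_BLOCKS` are not defined in this excerpt; the sketch below assumes the usual ceiling-division helper and a block size of 1024, both of which should be checked against the actual module.

```python
CUDA_NUM_THREADS = 1024  # assumed value; not defined in this excerpt


def get_blocks(n, threads=CUDA_NUM_THREADS):
    """Smallest number of blocks such that blocks * threads >= n."""
    return (n + threads - 1) // threads


# Hypothetical sizes: 128 RoIs, 512 channels, 7 x 7 pooled output.
numel = 128 * 512 * 7 * 7
print(numel, get_blocks(numel))   # 3211264 3136
print(get_blocks(1025))           # 2
```

Because the grid can contain more threads than elements, the element count passed as the last kernel argument lets surplus threads exit early.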

    class RoIPooling2D(t.nn.Module):

        def __init__(self, outh, outw, spatial_scale):
            super(RoIPooling2D, self).__init__()
            self.RoI = RoI(outh, outw, spatial_scale)

        def forward(self, x, rois):
            return self.RoI(x, rois)
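
The CUDA source behind `roi_forward` is not shown here. As a rough guide to what RoI max pooling computes, the following pure-Python sketch pools one RoI on a single-channel feature map. It follows the commonly used scheme (project the RoI with `spatial_scale`, split it into `out_h` x `out_w` bins, take the max of each bin) and is an assumption about the kernel, not a transcription of it.

```python
import math


def roi_max_pool(feature, roi, spatial_scale, out_h, out_w):
    """Max-pool one RoI on a single-channel feature map.

    feature: H x W nested list; roi: (x_min, y_min, x_max, y_max) in image
    coordinates. Returns an out_h x out_w nested list.
    """
    height, width = len(feature), len(feature[0])
    # Project the RoI onto the feature map, rounding half up.
    x0, y0, x1, y1 = (int(math.floor(v * spatial_scale + 0.5)) for v in roi)
    roi_h = max(y1 - y0 + 1, 1)
    roi_w = max(x1 - x0 + 1, 1)
    bin_h = roi_h / out_h
    bin_w = roi_w / out_w

    def clip(v, hi):
        return min(max(v, 0), hi)

    out = [[0.0] * out_w for _ in range(out_h)]
    for ph in range(out_h):
        h_start = clip(math.floor(ph * bin_h) + y0, height)
        h_end = clip(math.ceil((ph + 1) * bin_h) + y0, height)
        for pw in range(out_w):
            w_start = clip(math.floor(pw * bin_w) + x0, width)
            w_end = clip(math.ceil((pw + 1) * bin_w) + x0, width)
            cells = [feature[h][w]
                     for h in range(h_start, h_end)
                     for w in range(w_start, w_end)]
            out[ph][pw] = max(cells) if cells else 0.0   # empty bins stay 0
    return out


feature = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
pooled = roi_max_pool(feature, roi=(0, 0, 48, 48), spatial_scale=1 / 16,
                      out_h=2, out_w=2)
print(pooled)   # [[5.0, 7.0], [13.0, 15.0]]
```

A real implementation would also record the position of each maximum, which is the role `argmax_data` plays in the `RoI` class, so that the backward pass can route gradients to those positions.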

model/faster_rcnn.py