Hyperledger Fabric 2.1 Multi-Host Setup Notes: Raspberry Pi + Virtual Machines

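I'm new to Hyperledger Fabric. These are notes on how I configured the environment. I hit a lot of pitfalls along the way, so treat this as a record of what I did rather than a tutorial.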

Reference tutorial: "Hyperledger Fabric多机搭建 - Hyperledger Fabric" (Hyperledger Fabric multi-host setup)

System environment: two 64-bit Ubuntu virtual machines (VM 1 and VM 2) and a Raspberry Pi running 64-bit Raspberry Pi OS (raspios).

The plan is three organizations with one node each, assigned as follows:

| Name | IP | Hostname | Organization | Host machine |
|---|---|---|---|---|
| orderer | 192.168.50.120 | orderer.example.com | orderer | VM 1 |
| org1peer0 | 192.168.50.232 | peer0.org1.example.com | org1 | VM 2 |
| org2peer0 | 192.168.50.46 | peer0.org2.example.com | org2 | Raspberry Pi |

Preparation

1. Configure hosts:

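On each host, append the IP-to-hostname mappings to the end of /etc/hosts. All hosts must be on the same subnet, so update the mappings whenever you switch Wi-Fi networks. The Raspberry Pi can be slow to pick up a new address, so refresh a few times to make sure its IP is correct. Confirm that every host can ping the others.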

192.168.50.120 orderer.example.com

192.168.50.232 peer0.org1.example.com

192.168.50.46 peer0.org2.example.com

2. SSH setup

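The multi-host setup uses the scp command to copy files and directories between Linux machines.

scp stands for "secure copy". It copies files to and from remote Linux hosts over an SSH login. Install SSH on all three hosts in advance.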
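Once the hosts entries and SSH are in place, a small script can check the mappings and wrap the scp copies that come later. This is only a sketch and is not part of the reference tutorial. The function names `check_hosts`, `ping_hosts` and `push_dir`, the `FABRIC_HOSTS` list and the `DRY_RUN` switch are all hypothetical. The IP/hostname pairs are the ones from the table above.

```shell
#!/usr/bin/env bash
# Preparation helpers (a sketch; all names and the DRY_RUN switch are hypothetical).

# Expected "IP hostname" pairs, taken from the table above. Edit for your network.
FABRIC_HOSTS="192.168.50.120 orderer.example.com
192.168.50.232 peer0.org1.example.com
192.168.50.46 peer0.org2.example.com"

# check_hosts [FILE]: print every expected mapping missing from FILE (default /etc/hosts).
# Returns 0 when all mappings are present, 1 otherwise.
check_hosts() {
  local file="${1:-/etc/hosts}" status=0 ip name
  while read -r ip name; do
    # A line matches when its first field is the IP and a later field is the hostname.
    if ! awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit (found ? 0 : 1) }' "$file"; then
      echo "missing: $ip $name"
      status=1
    fi
  done <<EOF
$FABRIC_HOSTS
EOF
  return "$status"
}

# ping_hosts: ping each expected hostname once (Linux iputils ping; -W is seconds).
ping_hosts() {
  local ip name
  while read -r ip name; do
    if ping -c 1 -W 2 "$name" >/dev/null 2>&1; then
      echo "ok: $name"
    else
      echo "unreachable: $name"
    fi
  done <<EOF
$FABRIC_HOSTS
EOF
}

# push_dir SRC DEST_DIR USER HOST...: scp SRC recursively into DEST_DIR on each HOST.
# With DRY_RUN=1 the scp commands are printed instead of executed.
push_dir() {
  local src="$1" dest="$2" user="$3" host
  shift 3
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp -r $src $user@$host:$dest"
    else
      scp -r "$src" "$user@$host:$dest" || return 1
    fi
  done
}
```

For example, run `check_hosts && ping_hosts` on each host. Then, from the host that generated crypto-config, run `push_dir ./crypto-config [project path] [user] [IP of each other host]`.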

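Generating Fabric certificates

1. Write the certificate configuration

First, create a project directory on each of the three hosts. The paths can differ, as long as you remember them. Copy the template file crypto-config.yaml from fabric-samples into the project directory and change it to the following: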

OrdererOrgs:
  - Name: Orderer
    Domain: example.com
    EnableNodeOUs: true
    Specs:
      - Hostname: orderer
PeerOrgs:
  - Name: org1
    Domain: org1.example.com
    EnableNodeOUs: true
    Template:
      Count: 1
    Users:
      Count: 1
  - Name: org2
    Domain: org2.example.com
    EnableNodeOUs: true
    Template:
      Count: 1
    Users:
      Count: 1

2. Generate the certificate files

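In a terminal, go to the project directory and generate the certificates with:

cryptogen generate --config=crypto-config.yaml

A crypto-config folder now appears in the directory. It holds all the certificate files for the three nodes above. Copy it to the other two hosts with scp:

scp -r ./crypto-config [user]@[IP of the second host]:[absolute path of that host's project directory]

scp -r ./crypto-config [user]@[IP of the third host]:[absolute path of that host's project directory]

Generating channel artifacts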

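1. Write the genesis block configuration

On VM 1, copy configtx.yaml from the official test-network sample into the project directory and change it to the following: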

---
Organizations:
  - &OrdererOrg
    Name: OrdererOrg
    ID: OrdererMSP
    MSPDir: ./crypto-config/ordererOrganizations/example.com/msp
    Policies:
      Readers:
        Type: Signature
        Rule: "OR('OrdererMSP.member')"
      Writers:
        Type: Signature
        Rule: "OR('OrdererMSP.member')"
      Admins:
        Type: Signature
        Rule: "OR('OrdererMSP.admin')"
    OrdererEndpoints:
      - orderer.example.com:7050
  - &Org1
    Name: Org1MSP
    ID: Org1MSP
    MSPDir: ./crypto-config/peerOrganizations/org1.example.com/msp
    Policies:
      Readers:
        Type: Signature
        Rule: "OR('Org1MSP.admin', 'Org1MSP.peer', 'Org1MSP.client')"
      Writers:
        Type: Signature
        Rule: "OR('Org1MSP.admin', 'Org1MSP.client')"
      Admins:
        Type: Signature
        Rule: "OR('Org1MSP.admin')"
      Endorsement:
        Type: Signature
        Rule: "OR('Org1MSP.peer')"
    AnchorPeers:
      - Host: peer0.org1.example.com
        Port: 7051
  - &Org2
    Name: Org2MSP
    ID: Org2MSP
    MSPDir: ./crypto-config/peerOrganizations/org2.example.com/msp
    Policies:
      Readers:
        Type: Signature
        Rule: "OR('Org2MSP.admin', 'Org2MSP.peer', 'Org2MSP.client')"
      Writers:
        Type: Signature
        Rule: "OR('Org2MSP.admin', 'Org2MSP.client')"
      Admins:
        Type: Signature
        Rule: "OR('Org2MSP.admin')"
      Endorsement:
        Type: Signature
        Rule: "OR('Org2MSP.peer')"
    AnchorPeers:
      - Host: peer0.org2.example.com
        # peer0.org2 listens on 7051 on the Raspberry Pi (see its docker-compose file)
        Port: 7051

Capabilities:
  Channel: &ChannelCapabilities
    V2_0: true
  Orderer: &OrdererCapabilities
    V2_0: true
  Application: &ApplicationCapabilities
    V2_0: true
Application: &ApplicationDefaults
  Organizations:
  Policies:
    Readers:
      Type: ImplicitMeta
      Rule: "ANY Readers"
    Writers:
      Type: ImplicitMeta
      Rule: "ANY Writers"
    Admins:
      Type: ImplicitMeta
      Rule: "MAJORITY Admins"
    LifecycleEndorsement:
      Type: ImplicitMeta
      Rule: "MAJORITY Endorsement"
    Endorsement:
      Type: ImplicitMeta
      Rule: "MAJORITY Endorsement"
  Capabilities:
    <<: *ApplicationCapabilities
Orderer: &OrdererDefaults
  OrdererType: solo
  Addresses:
    - orderer.example.com:7050
  # Only used when OrdererType is etcdraft; ignored with solo
  EtcdRaft:
    Consenters:
      - Host: orderer.example.com
        Port: 7050
        ClientTLSCert: ./crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/tls/server.crt
        ServerTLSCert: ./crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/tls/server.crt
  BatchTimeout: 2s
  BatchSize:
    MaxMessageCount: 10
    AbsoluteMaxBytes: 99 MB
    PreferredMaxBytes: 512 KB
  Organizations:
  Policies:
    Readers:
      Type: ImplicitMeta
      Rule: "ANY Readers"
    Writers:
      Type: ImplicitMeta
      Rule: "ANY Writers"
    Admins:
      Type: ImplicitMeta
      Rule: "MAJORITY Admins"
    BlockValidation:
      Type: ImplicitMeta
      Rule: "ANY Writers"
Channel: &ChannelDefaults
  Policies:
    Readers:
      Type: ImplicitMeta
      Rule: "ANY Readers"
    Writers:
      Type: ImplicitMeta
      Rule: "ANY Writers"
    Admins:
      Type: ImplicitMeta
      Rule: "MAJORITY Admins"
  Capabilities:
    <<: *ChannelCapabilities
Profiles:
  TwoOrgsOrdererGenesis:
    <<: *ChannelDefaults
    Orderer:
      <<: *OrdererDefaults
      Organizations:
        - *OrdererOrg
      Capabilities:
        <<: *OrdererCapabilities
    Consortiums:
      SampleConsortium:
        Organizations:
          - *Org1
          - *Org2
  TwoOrgsChannel:
    Consortium: SampleConsortium
    <<: *ChannelDefaults
    Application:
      <<: *ApplicationDefaults
      Organizations:
        - *Org1
        - *Org2
      Capabilities:
        <<: *ApplicationCapabilities

2. Generate the genesis block and channel files

Generate the genesis block with:

configtxgen -profile TwoOrgsOrdererGenesis -channelID fabric-channel -outputBlock ./channel-artifacts/genesis.block

Generate the channel creation transaction:

configtxgen -profile TwoOrgsChannel -outputCreateChannelTx ./channel-artifacts/channel.tx -channelID mychannel

Define the anchor peers for org1 and org2:

configtxgen -profile TwoOrgsChannel -outputAnchorPeersUpdate ./channel-artifacts/Org1MSPanchors.tx -channelID mychannel -asOrg Org1MSP

configtxgen -profile TwoOrgsChannel -outputAnchorPeersUpdate ./channel-artifacts/Org2MSPanchors.tx -channelID mychannel -asOrg Org2MSP

Copy the generated files to the other two hosts:

scp -r ./channel-artifacts [user]@[VM 2 IP]:[absolute project path]

scp -r ./channel-artifacts [user]@[Raspberry Pi IP]:[absolute project path]

Writing the docker-compose files

1. orderer

On VM 1, create a docker-compose.yaml file in the project directory with the following content:

version: '2'
services:
  orderer.example.com:
    container_name: orderer.example.com
    image: hyperledger/fabric-orderer:latest
    environment:
      - FABRIC_LOGGING_SPEC=INFO
      - ORDERER_GENERAL_LISTENADDRESS=0.0.0.0
      - ORDERER_GENERAL_LISTENPORT=7050
      - ORDERER_GENERAL_GENESISMETHOD=file
      - ORDERER_GENERAL_GENESISFILE=/var/hyperledger/orderer/orderer.genesis.block
      - ORDERER_GENERAL_LOCALMSPID=OrdererMSP
      - ORDERER_GENERAL_LOCALMSPDIR=/var/hyperledger/orderer/msp
      - ORDERER_GENERAL_TLS_ENABLED=true
      - ORDERER_GENERAL_TLS_PRIVATEKEY=/var/hyperledger/orderer/tls/server.key
      - ORDERER_GENERAL_TLS_CERTIFICATE=/var/hyperledger/orderer/tls/server.crt
      - ORDERER_GENERAL_TLS_ROOTCAS=[/var/hyperledger/orderer/tls/ca.crt]
      - ORDERER_KAFKA_TOPIC_REPLICATIONFACTOR=1
      - ORDERER_KAFKA_VERBOSE=true
      - ORDERER_GENERAL_CLUSTER_CLIENTCERTIFICATE=/var/hyperledger/orderer/tls/server.crt
      - ORDERER_GENERAL_CLUSTER_CLIENTPRIVATEKEY=/var/hyperledger/orderer/tls/server.key
      - ORDERER_GENERAL_CLUSTER_ROOTCAS=[/var/hyperledger/orderer/tls/ca.crt]
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric
    command: orderer
    volumes:
      - ./channel-artifacts/genesis.block:/var/hyperledger/orderer/orderer.genesis.block
      - ./crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/msp:/var/hyperledger/orderer/msp
      - ./crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/tls/:/var/hyperledger/orderer/tls
    ports:
      - 7050:7050
    extra_hosts:
      - "orderer.example.com:192.168.50.120"
      - "peer0.org1.example.com:192.168.50.232"
      - "peer0.org2.example.com:192.168.50.46"

Change extra_hosts to match the IP mappings of your own environment.
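The same three extra_hosts entries are repeated in every service of all three compose files. Generating them from one list keeps them in sync when an IP changes. The sketch below is a minimal version of that idea. The function `extra_hosts_yaml` and its indentation argument are hypothetical. The `"hostname:IP"` entry format is the one docker-compose uses for extra_hosts.

```shell
#!/usr/bin/env bash
# extra_hosts_yaml INDENT "IP hostname"...: print a docker-compose extra_hosts block.
# INDENT is the number of spaces placed before the "extra_hosts:" key.
extra_hosts_yaml() {
  local indent pad="" i=0 entry ip name
  indent="$1"; shift
  # Build the indentation string without relying on printf's '*' width.
  while [ "$i" -lt "$indent" ]; do pad="$pad "; i=$((i + 1)); done
  echo "${pad}extra_hosts:"
  for entry in "$@"; do
    ip=${entry%% *}      # text before the first space
    name=${entry#* }     # text after the first space
    echo "${pad}  - \"${name}:${ip}\""
  done
}
```

For a service defined at the usual 4-space level, `extra_hosts_yaml 4 "192.168.50.120 orderer.example.com" "192.168.50.232 peer0.org1.example.com" "192.168.50.46 peer0.org2.example.com"` prints a block you can paste under each service.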

2. org1

Create the file the same way on VM 2, with the following content:

version: '2'
services:
  couchdb0.org1.example.com:
    container_name: couchdb0.org1.example.com
    image: couchdb:3.1
    environment:
      - COUCHDB_USER=admin
      - COUCHDB_PASSWORD=adminpw
    ports:
      - 5984:5984
  peer0.org1.example.com:
    container_name: peer0.org1.example.com
    image: hyperledger/fabric-peer:latest
    environment:
      - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
      - CORE_PEER_ID=peer0.org1.example.com
      - CORE_PEER_ADDRESS=peer0.org1.example.com:7051
      - CORE_PEER_LISTENADDRESS=0.0.0.0:7051
      - CORE_PEER_CHAINCODEADDRESS=peer0.org1.example.com:7052
      - CORE_PEER_CHAINCODELISTENADDRESS=0.0.0.0:7052
      - CORE_PEER_GOSSIP_BOOTSTRAP=peer0.org1.example.com:7051
      - CORE_PEER_GOSSIP_EXTERNALENDPOINT=peer0.org1.example.com:7051
      - CORE_PEER_LOCALMSPID=Org1MSP
      - FABRIC_LOGGING_SPEC=INFO
      - CORE_PEER_TLS_ENABLED=true
      - CORE_PEER_GOSSIP_USELEADERELECTION=true
      - CORE_PEER_GOSSIP_ORGLEADER=false
      - CORE_PEER_PROFILE_ENABLED=true
      - CORE_PEER_TLS_CERT_FILE=/etc/hyperledger/fabric/tls/server.crt
      - CORE_PEER_TLS_KEY_FILE=/etc/hyperledger/fabric/tls/server.key
      - CORE_PEER_TLS_ROOTCERT_FILE=/etc/hyperledger/fabric/tls/ca.crt
      - CORE_CHAINCODE_EXECUTETIMEOUT=300s
      - CORE_LEDGER_STATE_STATEDATABASE=CouchDB
      - CORE_LEDGER_STATE_COUCHDBCONFIG_COUCHDBADDRESS=couchdb0.org1.example.com:5984
      - CORE_LEDGER_STATE_COUCHDBCONFIG_USERNAME=admin
      - CORE_LEDGER_STATE_COUCHDBCONFIG_PASSWORD=adminpw
    depends_on:
      - couchdb0.org1.example.com
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
    command: peer node start
    volumes:
      - /var/run/:/host/var/run/
      - ./crypto-config/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/msp:/etc/hyperledger/fabric/msp
      - ./crypto-config/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls:/etc/hyperledger/fabric/tls
    ports:
      - 7051:7051
      - 7052:7052
      - 7053:7053
    extra_hosts:
      - "orderer.example.com:192.168.50.120"
      - "peer0.org1.example.com:192.168.50.232"
      - "peer0.org2.example.com:192.168.50.46"
  cli:
    container_name: cli
    image: hyperledger/fabric-tools:latest
    tty: true
    stdin_open: true
    environment:
      - GOPATH=/opt/gopath
      - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
      - FABRIC_LOGGING_SPEC=INFO
      - CORE_PEER_ID=cli
      - CORE_PEER_ADDRESS=peer0.org1.example.com:7051
      - CORE_PEER_LOCALMSPID=Org1MSP
      - CORE_PEER_TLS_ENABLED=true
      - CORE_PEER_TLS_CERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/server.crt
      - CORE_PEER_TLS_KEY_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/server.key
      - CORE_PEER_TLS_ROOTCERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/ca.crt
      - CORE_PEER_MSPCONFIGPATH=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/users/Admin@org1.example.com/msp
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
    command: /bin/bash
    volumes:
      - /var/run/:/host/var/run/
      - ./chaincode/go/:/opt/gopath/src/github.com/hyperledger/fabric-cluster/chaincode/go
      - ./crypto-config:/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/
      - ./channel-artifacts:/opt/gopath/src/github.com/hyperledger/fabric/peer/channel-artifacts
    extra_hosts:
      - "orderer.example.com:192.168.50.120"
      - "peer0.org1.example.com:192.168.50.232"
      - "peer0.org2.example.com:192.168.50.46"

3. org2

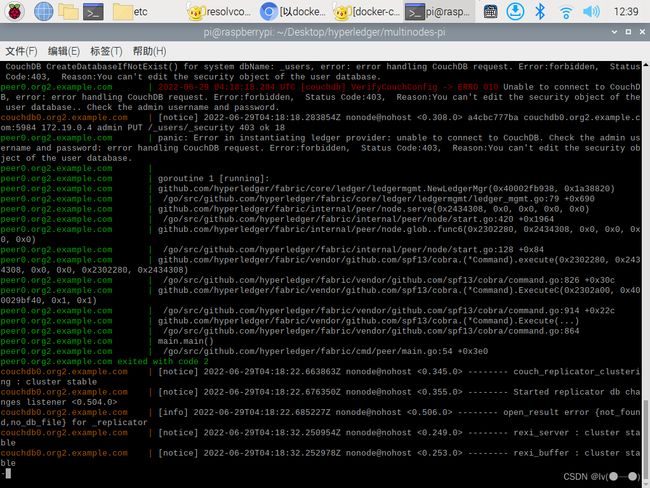

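The org2 node runs on the Raspberry Pi, and raspios is arm64. The Docker images used for peer, cli and couchdb in the org1 configuration do not support arm64, so at runtime the server window shows errors like the one in the screenshot above.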
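You can catch this kind of mismatch by comparing the host's architecture with the architecture an image was built for. The sketch below assumes the image has already been pulled. `uname -m` and `docker image inspect --format '{{.Architecture}}'` are standard commands. The helper names `docker_arch` and `image_runs_here` are hypothetical, and the mapping table covers only the common cases.

```shell
#!/usr/bin/env bash
# docker_arch MACHINE: translate `uname -m` output into Docker's architecture name.
docker_arch() {
  case "$1" in
    x86_64|amd64) echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    armv7l) echo arm ;;
    *) echo "$1" ;;   # unknown names are passed through unchanged
  esac
}

# image_runs_here IMAGE: succeed if the pulled IMAGE targets this host's architecture.
image_runs_here() {
  local want have
  want=$(docker_arch "$(uname -m)")
  have=$(docker image inspect --format '{{.Architecture}}' "$1") || return 1
  if [ "$have" = "$want" ]; then
    echo "$1: $have (ok)"
  else
    echo "$1: built for $have, but this host is $want"
    return 1
  fi
}
```

On the Raspberry Pi, for instance, `image_runs_here hyperledger/fabric-peer:latest` should report any image that was built only for amd64.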

So images that support arm64 have to be pulled instead. My docker-compose file is:

version: '2'
services:
  couchdb0.org2.example.com:
    container_name: couchdb0.org2.example.com
    image: busan15/fabric-couchdb
    environment:
      - COUCHDB_USER=admin
      - COUCHDB_PASSWORD=adminpw
    ports:
      - 5984:5984
  peer0.org2.example.com:
    container_name: peer0.org2.example.com
    image: busan15/fabric-peer
    environment:
      - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
      - CORE_PEER_ID=peer0.org2.example.com
      - CORE_PEER_ADDRESS=peer0.org2.example.com:7051
      - CORE_PEER_LISTENADDRESS=0.0.0.0:7051
      - CORE_PEER_CHAINCODEADDRESS=peer0.org2.example.com:7052
      - CORE_PEER_CHAINCODELISTENADDRESS=0.0.0.0:7052
      - CORE_PEER_GOSSIP_BOOTSTRAP=peer0.org2.example.com:7051
      - CORE_PEER_GOSSIP_EXTERNALENDPOINT=peer0.org2.example.com:7051
      - CORE_PEER_LOCALMSPID=Org2MSP
      - FABRIC_LOGGING_SPEC=INFO
      - CORE_PEER_TLS_ENABLED=true
      - CORE_PEER_GOSSIP_USELEADERELECTION=true
      - CORE_PEER_GOSSIP_ORGLEADER=false
      - CORE_PEER_PROFILE_ENABLED=true
      - CORE_PEER_TLS_CERT_FILE=/etc/hyperledger/fabric/tls/server.crt
      - CORE_PEER_TLS_KEY_FILE=/etc/hyperledger/fabric/tls/server.key
      - CORE_PEER_TLS_ROOTCERT_FILE=/etc/hyperledger/fabric/tls/ca.crt
      - CORE_CHAINCODE_EXECUTETIMEOUT=300s
      - CORE_LEDGER_STATE_STATEDATABASE=CouchDB
      - CORE_LEDGER_STATE_COUCHDBCONFIG_COUCHDBADDRESS=couchdb0.org2.example.com:5984
      - CORE_LEDGER_STATE_COUCHDBCONFIG_USERNAME=admin
      - CORE_LEDGER_STATE_COUCHDBCONFIG_PASSWORD=adminpw
    depends_on:
      - couchdb0.org2.example.com
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
    command: peer node start
    volumes:
      - /var/run/:/host/var/run/
      - ./crypto-config/peerOrganizations/org2.example.com/peers/peer0.org2.example.com/msp:/etc/hyperledger/fabric/msp
      - ./crypto-config/peerOrganizations/org2.example.com/peers/peer0.org2.example.com/tls:/etc/hyperledger/fabric/tls
    ports:
      - 7051:7051
      - 7052:7052
      - 7053:7053
    extra_hosts:
      - "orderer.example.com:192.168.50.120"
      - "peer0.org1.example.com:192.168.50.232"
      - "peer0.org2.example.com:192.168.50.46"
  cli:
    container_name: cli
    image: busan15/fabric-tools
    tty: true
    stdin_open: true
    environment:
      - GOPATH=/opt/gopath
      - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
      - FABRIC_LOGGING_SPEC=INFO
      - CORE_PEER_ID=cli
      - CORE_PEER_ADDRESS=peer0.org2.example.com:7051
      - CORE_PEER_LOCALMSPID=Org2MSP
      - CORE_PEER_TLS_ENABLED=true
      - CORE_PEER_TLS_CERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org2.example.com/peers/peer0.org2.example.com/tls/server.crt
      - CORE_PEER_TLS_KEY_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org2.example.com/peers/peer0.org2.example.com/tls/server.key
      - CORE_PEER_TLS_ROOTCERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org2.example.com/peers/peer0.org2.example.com/tls/ca.crt
      - CORE_PEER_MSPCONFIGPATH=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org2.example.com/users/Admin@org2.example.com/msp
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
    command: /bin/bash
    volumes:
      - /var/run/:/host/var/run/
      - ./chaincode/go/:/opt/gopath/src/github.com/hyperledger/fabric-cluster/chaincode/go
      - ./crypto-config:/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/
      - ./channel-artifacts:/opt/gopath/src/github.com/hyperledger/fabric/peer/channel-artifacts
    extra_hosts:
      - "orderer.example.com:192.168.50.120"
      - "peer0.org1.example.com:192.168.50.232"
      - "peer0.org2.example.com:192.168.50.46"

Once all of the above is done, run docker-compose up in the project directory on each of the three hosts to start the services.
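Starting the services in the background and then checking that each expected container is running makes it easier to spot one that exits right after start. In the sketch below, `docker-compose up -d` (detached mode) and `docker inspect -f '{{.State.Status}}'` are standard commands. The `run` wrapper, the `start_and_check` function and the `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# run CMD...: execute CMD, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# start_and_check NAME...: start this host's compose services in the background,
# then print each named container's state (e.g. "running" or "exited").
start_and_check() {
  local name
  run docker-compose up -d || return 1
  for name in "$@"; do
    printf '%s: ' "$name"
    # docker inspect fails for an unknown container name.
    run docker inspect -f '{{.State.Status}}' "$name" 2>/dev/null || echo "not found"
  done
}
```

On VM 2, for example, run `start_and_check couchdb0.org1.example.com peer0.org1.example.com cli`. On VM 1, run `start_and_check orderer.example.com`.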

Channel operations

1. Create the channel

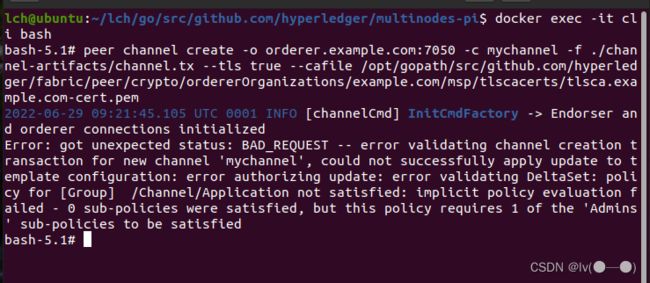

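On VM 2, enter the container with docker exec -it cli bash and create the channel with:

peer channel create -o orderer.example.com:7050 -c mychannel -f ./channel-artifacts/channel.tx --tls true --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/example.com/msp/tlscacerts/tlsca.example.com-cert.pem

This step may fail with an error (see the screenshot above).

The cause may be the IP mappings. Check whether any IP has changed, then regenerate the certificates and channel files.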

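Copy the channel block (mychannel.block) from the cli container to the VM 2 host:

docker cp cli:/opt/gopath/src/github.com/hyperledger/fabric/peer/mychannel.block ./

Copy it to the Raspberry Pi:

scp mychannel.block [user]@[Raspberry Pi IP]:[absolute project path]

On the Raspberry Pi, copy it into the cli container:

docker cp mychannel.block cli:/opt/gopath/src/github.com/hyperledger/fabric/peer/

2. Join the channel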

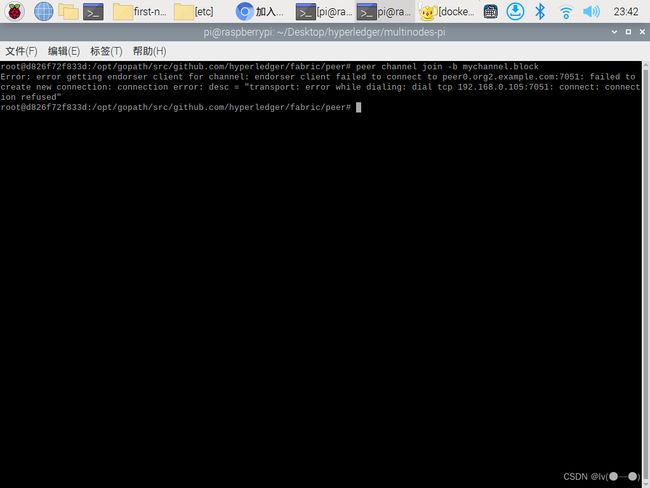

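On VM 2 and on the Raspberry Pi, join peer0 to the channel with:

peer channel join -b mychannel.block

When this failed for me, the cause was the CouchDB image, which probably did not support arm64. Switching to a different image in docker-compose fixed it.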

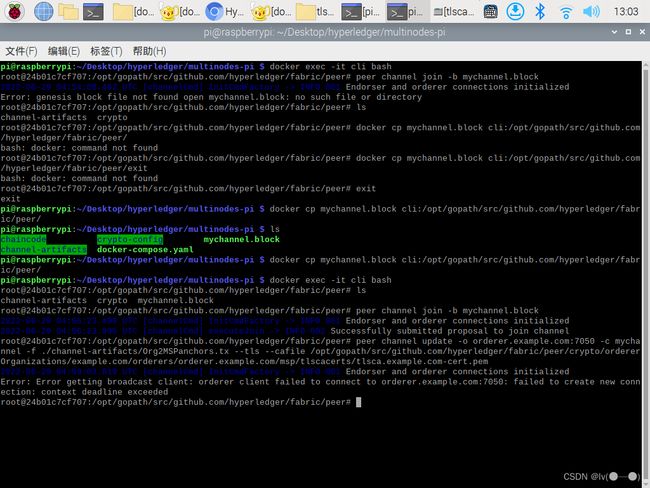

Update the anchor peers for org1 and org2. Run the Org1 update from the cli on VM 2 and the Org2 update from the cli on the Raspberry Pi, because each update must be signed by that organization's own admin:

peer channel update -o orderer.example.com:7050 -c mychannel -f ./channel-artifacts/Org1MSPanchors.tx --tls --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/example.com/orderers/orderer.example.com/msp/tlscacerts/tlsca.example.com-cert.pem

peer channel update -o orderer.example.com:7050 -c mychannel -f ./channel-artifacts/Org2MSPanchors.tx --tls --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/example.com/orderers/orderer.example.com/msp/tlscacerts/tlsca.example.com-cert.pem

This step may also fail with an error.

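This took a long time to track down. The VMs' network connection mode was wrong: it should be bridged. After changing it, update the IP mappings again, confirm that all three hosts can ping each other's hostnames, and redo the configuration from the beginning.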
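The root cause here was that the VMs were not on the same LAN as the Raspberry Pi. After switching to bridged mode, a quick sanity check is that all three addresses share one subnet. The sketch below assumes a /24 network (netmask 255.255.255.0), which matches the 192.168.50.x addresses in the table. `same_subnet24` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# same_subnet24 IP...: succeed if all IPv4 addresses share their first three octets
# (i.e. sit in one /24 network); otherwise print the first outlier and fail.
same_subnet24() {
  local first="" ip prefix
  for ip in "$@"; do
    prefix=${ip%.*}   # "192.168.50.46" -> "192.168.50"
    if [ -z "$first" ]; then
      first=$prefix
    elif [ "$prefix" != "$first" ]; then
      echo "$ip is not in $first.0/24"
      return 1
    fi
  done
  return 0
}
```

On each Linux host, `hostname -I` lists its current addresses. Pass the three addresses in, for example `same_subnet24 192.168.50.120 192.168.50.232 192.168.50.46`.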

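Installing and invoking chaincode

I first used the official sample chaincode sacc but never got it running. I then tried fabcar, which worked. The sacc steps are still recorded here.

Copy the sacc chaincode into the chaincode/go/ folder and enter the container: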

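docker exec -it cli bash

Go to the chaincode directory:

cd /opt/gopath/src/github.com/hyperledger/fabric-cluster/chaincode/go/sacc

Set a Go module proxy and vendor the dependencies (otherwise the downloads may time out):

go env -w GOPROXY=https://goproxy.cn,direct

go mod vendor

Go back to the peer directory:

cd /opt/gopath/src/github.com/hyperledger/fabric/peer

Package the chaincode with the lifecycle command:

peer lifecycle chaincode package sacc.tar.gz --path [absolute path of the chaincode directory] --label sacc_1

Pass an absolute path to --path; it is less error-prone.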

退出容器,将打包好的链码复制到虚拟机2主机上:

docker cp cli:/opt/gopath/src/github.com/hyperledger/fabric/peer/sacc.tar.gz [项目目录绝对路径]scp复制到树莓派主机上:

scp sacc.tar.gz [email protected]:[项目目录绝对路径]树莓派上将链码复制到容器中

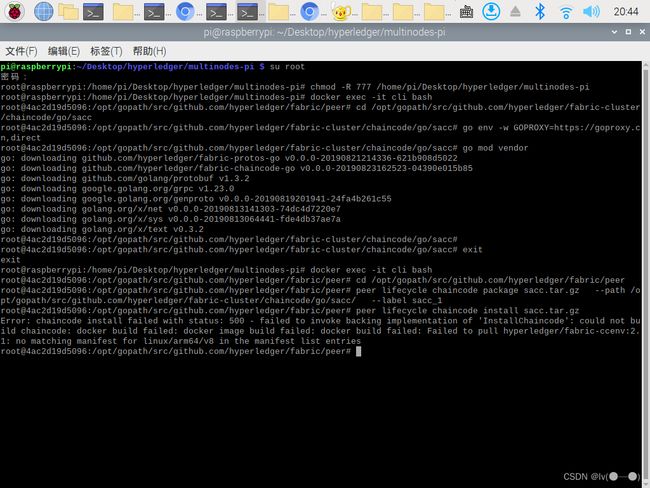

docker cp [链码所在的本地绝对路径] cli:/opt/gopath/src/github.com/hyperledger/fabric/peer两台主机分别安装链码:

peer lifecycle chaincode install sacc.tar.gz又报错:

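I suspect the problem is in the sacc chaincode itself. I pulled an arm64 build of the ccenv image, but have not yet worked out how to make chaincode install use it. So for now I continued with the fabcar chaincode.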
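For reference, in Fabric 2.x the image a peer uses to build Go chaincode comes from `chaincode.builder` in the peer's core.yaml. You can override it with the `CORE_CHAINCODE_BUILDER` environment variable on the peer container, not on cli, because the peer performs the build during install. The following is an untested sketch that writes a separate compose override file setting that variable for peer0.org2. The function `write_builder_override`, the override file name and the image name you pass in are assumptions.

```shell
#!/usr/bin/env bash
# write_builder_override FILE BUILDER_IMAGE: write a docker-compose override file that
# points peer0.org2's chaincode builder at BUILDER_IMAGE (e.g. an arm64 ccenv build).
write_builder_override() {
  local file="$1" image="$2"
  cat > "$file" <<EOF
version: '2'
services:
  peer0.org2.example.com:
    environment:
      # Overrides chaincode.builder from core.yaml (image used to build Go chaincode)
      - CORE_CHAINCODE_BUILDER=$image
EOF
}
```

On the Raspberry Pi, you could then run `write_builder_override docker-compose.ccenv.yaml [arm64 ccenv image]` followed by `docker-compose -f docker-compose.yaml -f docker-compose.ccenv.yaml up -d`. Compose merges the environment lists of the two files. The image that runs the built Go chaincode is a separate core.yaml setting (`chaincode.golang.runtime`), which may also need an arm64 build.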

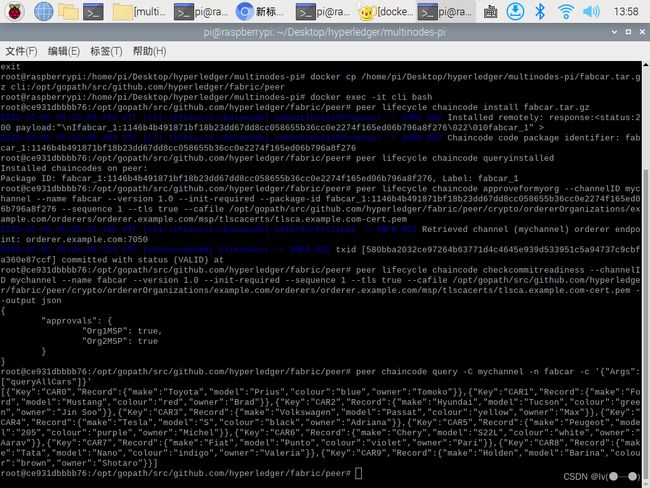

Install fabcar:

peer lifecycle chaincode install fabcar.tar.gz

Query the installed chaincode (on both hosts):

peer lifecycle chaincode queryinstalled

Approve the chaincode (on both hosts), passing the fabcar package ID printed by queryinstalled:

peer lifecycle chaincode approveformyorg --channelID mychannel --name fabcar --version 1.0 --init-required --package-id [fabcar package ID from queryinstalled] --sequence 1 --tls true --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/example.com/orderers/orderer.example.com/msp/tlscacerts/tlsca.example.com-cert.pem

Check commit readiness (on both hosts):

peer lifecycle chaincode checkcommitreadiness --channelID mychannel --name fabcar --version 1.0 --init-required --sequence 1 --tls true --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/example.com/orderers/orderer.example.com/msp/tlscacerts/tlsca.example.com-cert.pem --output json

If both organizations show true, you're good to go.
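Two small helpers can make the approve and readiness steps less error-prone. The first extracts a package ID from `peer lifecycle chaincode queryinstalled` output, which prints lines of the form `Package ID: <label>:<hash>, Label: <label>`. The second checks that no organization shows `false` in the `checkcommitreadiness --output json` result. Both function names are hypothetical. The JSON check is a plain text match, not a JSON parser.

```shell
#!/usr/bin/env bash
# package_id_for LABEL: read `peer lifecycle chaincode queryinstalled` output on stdin
# and print the package ID whose label matches LABEL.
package_id_for() {
  sed -n "s/^Package ID: \(.*\), Label: $1\$/\1/p"
}

# all_approved: read `checkcommitreadiness --output json` output on stdin and succeed
# only if it contains at least one "true" and no "false".
all_approved() {
  local json
  json=$(cat)
  case "$json" in
    *false*) return 1 ;;
    *true*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, assuming fabcar was packaged with `--label fabcar_1`, run `PKG=$(peer lifecycle chaincode queryinstalled | package_id_for fabcar_1)` inside cli, then pass `--package-id "$PKG"` to approveformyorg. You can pipe the readiness command's JSON into `all_approved` before committing.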

Commit the chaincode (on one host only):

peer lifecycle chaincode commit -o orderer.example.com:7050 --channelID mychannel --name fabcar --version 1.0 --sequence 1 --init-required --tls true --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/example.com/orderers/orderer.example.com/msp/tlscacerts/tlsca.example.com-cert.pem --peerAddresses peer0.org1.example.com:7051 --tlsRootCertFiles /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/ca.crt --peerAddresses peer0.org2.example.com:7051 --tlsRootCertFiles /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org2.example.com/peers/peer0.org2.example.com/tls/ca.crt

Initialize the fabcar chaincode:

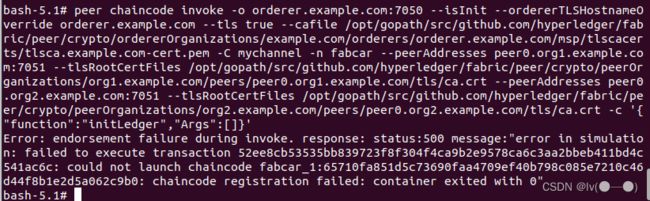

peer chaincode invoke -o orderer.example.com:7050 --isInit --ordererTLSHostnameOverride orderer.example.com --tls true --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/example.com/orderers/orderer.example.com/msp/tlscacerts/tlsca.example.com-cert.pem -C mychannel -n fabcar --peerAddresses peer0.org1.example.com:7051 --tlsRootCertFiles /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/ca.crt --peerAddresses peer0.org2.example.com:7051 --tlsRootCertFiles /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org2.example.com/peers/peer0.org2.example.com/tls/ca.crt -c '{"function":"initLedger","Args":[]}'

At one point this step failed (see the screenshot above).

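The server window showed that the fabcar chaincode container exited on its own as soon as the chaincode was committed. Recreating the channel and reconfiguring led to the same point, where the container exited again. I spent a whole evening without finding the cause. In the end I switched to a different Wi-Fi network, updated the IPs of all three hosts and configured everything from scratch, and the problem never came back. I still don't know what caused it.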
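When a chaincode container disappears like this, its logs are the first place to look. With Fabric 2.x's default Docker-based build, chaincode container names typically begin with `dev-` followed by the peer name, for example `dev-peer0.org1.example.com-fabcar_1-...`. The sketch below assumes that naming. It filters `docker ps -a` output down to exited chaincode containers and prints the last log lines of each. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# exited_chaincode: read `docker ps -a --format '{{.Names}} {{.Status}}'` on stdin and
# print the names of chaincode containers (names starting with "dev-") that have exited.
exited_chaincode() {
  awk '$1 ~ /^dev-/ && $2 == "Exited" { print $1 }'
}

# show_exited_chaincode_logs [LINES]: print the last LINES (default 50) log lines
# of every exited chaincode container on this host.
show_exited_chaincode_logs() {
  local lines="${1:-50}" name
  docker ps -a --format '{{.Names}} {{.Status}}' | exited_chaincode |
    while read -r name; do
      echo "== $name"
      docker logs --tail "$lines" "$name" </dev/null
    done
}
```

The peer's own log (`docker logs peer0.org1.example.com`) usually records the chaincode launch as well, so comparing the two can narrow down whether the container failed to start or failed to connect back to the peer.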

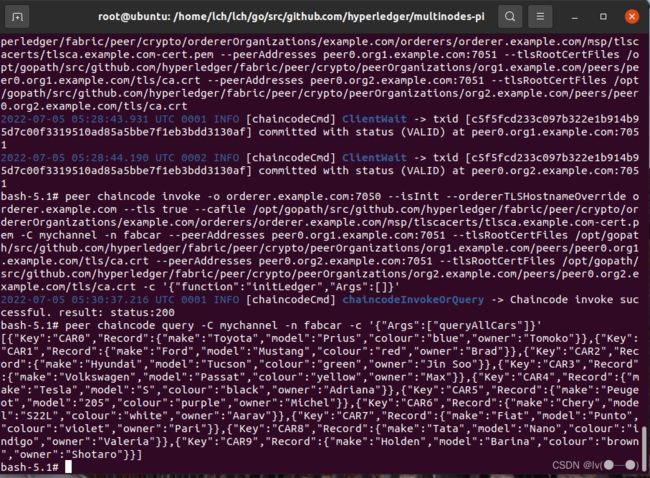

Invoke "queryAllCars":

peer chaincode query -C mychannel -n fabcar -c '{"Args":["queryAllCars"]}'

Result on org2:

Debugging succeeded!