Integrating Spring Boot + Docker + ELK for Log Collection and Display

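Contents

- 1. ELK overview

- 2. Logstash (a brief introduction, since Logstash needs the most configuration)

- 2.1 inputs

- 2.2 filters

- 2.3 outputs

- 3. Setting up the Spring Boot + ELK environment

- 3.1 Preparing the ELK environment

- 3.1.1 Creating directories and configuration files

- 3.1.2 Starting ELK with docker-compose

- 3.2 Building the Spring Boot projects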

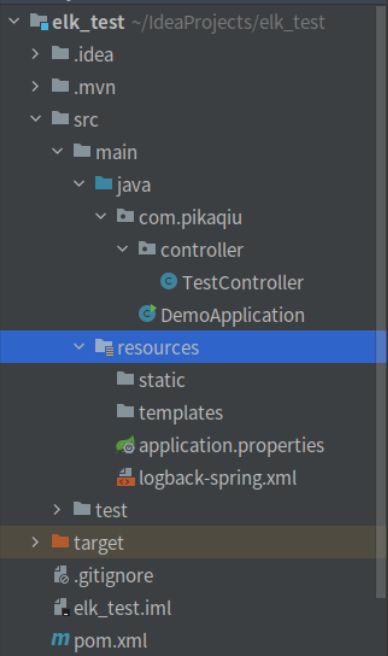

- 3.2.1 Microservice 1 (elk_test)

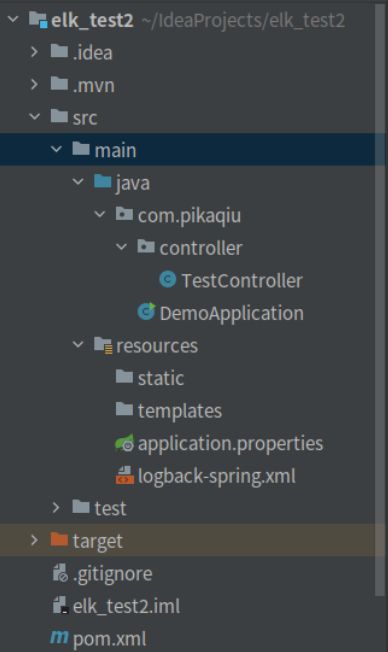

- 3.2.2 Microservice 2 (elk_test2)

- 3.3 Kibana configuration

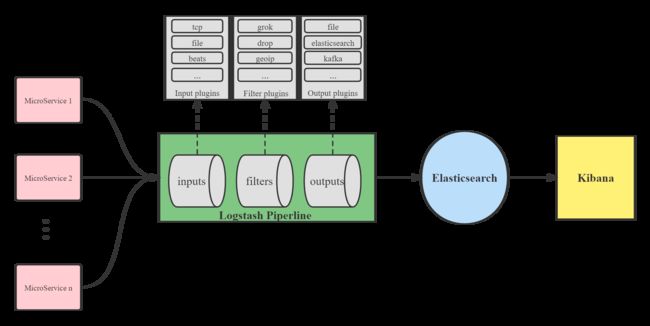

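1. ELK overview

ELK is a stack of three components from Elastic that work together to collect, store, and display logs.

- Elasticsearch: a distributed store and search engine for logs, with native clustering support. It provides near-real-time search and analytics for all types of data. Whether the data is structured or unstructured text, numeric, or geospatial, Elasticsearch stores and indexes it in a way that supports fast search.

- Logstash: collects, processes, and forwards log data. It can ingest data from many sources, such as files on local disk, network services (it listens on a port and accepts incoming logs), and message queues, then transform and filter the data and send it to the store of your choice (Elasticsearch, for example). Logstash ingests, transforms, and ships data dynamically regardless of format or complexity: Grok derives structure from unstructured data, IP addresses can be decoded into geographic coordinates, and sensitive fields can be anonymized or excluded.

- Kibana: an analytics and visualization platform for Elasticsearch, used to search, view, and interact with the data stored in Elasticsearch indices. With Kibana you can analyze and present data through a variety of charts. It gives Logstash and Elasticsearch a friendly web interface for aggregating, analyzing, and searching important log data, and makes large volumes of data easier to understand. It is simple to operate: the browser-based UI lets you quickly build dashboards that show Elasticsearch query results in real time.

In short: Logstash collects, processes, and forwards data; Elasticsearch stores and searches it; Kibana displays it.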

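2. Logstash (a brief introduction, since Logstash needs the most configuration)

The Logstash event processing pipeline has three stages: inputs → filters → outputs. Inputs generate events (collect), filters modify them (process), and outputs ship them elsewhere (forward).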
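To make the three stages concrete, here is a toy model in Java. It is only a sketch of the idea and not Logstash's actual API: `ToyPipeline`, `input`, `filter`, and `output` are hypothetical names invented for this illustration. A filter returns an empty `Optional` to drop an event, and every surviving event is handed to every output.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Consumer;
import java.util.function.Function;

/** Toy model of an inputs -> filters -> outputs pipeline (hypothetical, not Logstash's API). */
final class ToyPipeline {
    private final List<Function<Map<String, Object>, Optional<Map<String, Object>>>> filters = new ArrayList<>();
    private final List<Consumer<Map<String, Object>>> outputs = new ArrayList<>();

    /** Input stage: wrap a raw message in an event and tag it with its source type. */
    static Map<String, Object> input(String type, String level, String message) {
        Map<String, Object> event = new LinkedHashMap<>();
        event.put("type", type);
        event.put("level", level);
        event.put("message", message);
        return event;
    }

    /** Filter stage: may modify the event, or return Optional.empty() to drop it. */
    ToyPipeline filter(Function<Map<String, Object>, Optional<Map<String, Object>>> f) {
        filters.add(f);
        return this;
    }

    /** Output stage: each registered output receives every event that survives the filters. */
    ToyPipeline output(Consumer<Map<String, Object>> o) {
        outputs.add(o);
        return this;
    }

    /** Runs one event through all filters in order, then through all outputs. */
    void process(Map<String, Object> event) {
        Optional<Map<String, Object>> current = Optional.of(event);
        for (Function<Map<String, Object>, Optional<Map<String, Object>>> f : filters) {
            current = current.flatMap(f);
        }
        current.ifPresent(e -> outputs.forEach(o -> o.accept(e)));
    }

    public static void main(String[] args) {
        ToyPipeline pipeline = new ToyPipeline()
                // Drop debug events, keep everything else unchanged
                .filter(e -> Optional.of(e).filter(x -> !"DEBUG".equals(x.get("level"))))
                .output(e -> System.out.println("to store: " + e));
        pipeline.process(input("elk1", "INFO", "service started"));
        pipeline.process(input("elk1", "DEBUG", "noisy detail"));
    }
}
```

In the real Logstash configuration used later in this article, the same roles are played by the `tcp` inputs, the (empty) `filter` block, and the `elasticsearch` outputs.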

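2.1 inputs

Input plugins ingest data. The data can come from log files, TCP or UDP listeners, one of several protocol-specific plugins (such as syslog or IRC), or even a queuing system (such as Redis, AMQP, or Kafka). This stage tags incoming events with metadata about their source.

Some commonly used inputs:

- file: reads from a file on the file system

- redis: reads from a Redis server

- beats: processes events sent by Beats

- tcp: reads events from a TCP socket

2.2 filters

Filters are the intermediate processing stage in the Logstash pipeline. Filters can be combined with conditionals so that an action is applied only to events that meet certain criteria.

Some useful filters:

- grok: parses and structures arbitrary text. Grok is currently the best way in Logstash to parse unstructured log data into something structured and queryable.

- mutate: performs general transformations on event fields. You can rename, remove, replace, and modify fields in an event.

- drop: drops an event completely, for example debug events.

- geoip: adds information about the geographic location of an IP address

2.3 outputs

Outputs are the final stage of the Logstash pipeline. They load processed events into other systems, such as Elasticsearch or another document database, or a queuing system such as Redis, AMQP, or Kafka. An output can also be configured to talk to an API. An event can pass through multiple outputs, and once all outputs have processed it, the event has finished its execution.

Some commonly used outputs:

- elasticsearch: sends event data to Elasticsearch.

- file: writes event data to a file on disk.

- kafka: writes events to a Kafka topic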

3. Setting up the Spring Boot + ELK environment

The environment used in this article:

Linux:

pikaqiu@pikaqiu-virtual-machine:~$ uname -a
Linux pikaqiu-virtual-machine 5.11.0-27-generic #29~20.04.1-Ubuntu SMP Wed Aug 11 15:58:17 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

Docker:

pikaqiu@pikaqiu-virtual-machine:~$ docker version
Client: Docker Engine - Community
 Version:           20.10.0
 API version:       1.41
 Go version:        go1.13.15
 Git commit:        7287ab3
 Built:             Tue Dec 8 18:59:53 2020
 OS/Arch:           linux/amd64
 Context:           default
 Experimental:      true

Server: Docker Engine - Community
 Engine:
  Version:          20.10.0
  API version:      1.41 (minimum version 1.12)
  Go version:       go1.13.15
  Git commit:       eeddea2
  Built:            Tue Dec 8 18:57:44 2020
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.4.3
  GitCommit:        269548fa27e0089a8b8278fc4fc781d7f65a939b
 runc:
  Version:          1.0.0-rc92
  GitCommit:        ff819c7e9184c13b7c2607fe6c30ae19403a7aff
 docker-init:
  Version:          0.19.0
  GitCommit:        de40ad0

docker-compose:

pikaqiu@pikaqiu-virtual-machine:~$ docker-compose version
docker-compose version 1.24.1, build 4667896b
docker-py version: 3.7.3
CPython version: 3.6.8
OpenSSL version: OpenSSL 1.1.0j 20 Nov 2018

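3.1 Preparing the ELK environment

The ELK environment is set up with docker-compose.

3.1.1 Creating directories and configuration files

1) Create the Elasticsearch data and plugin directories

mkdir -p /home/pikaqiu/elk/elasticsearch/data
mkdir -p /home/pikaqiu/elk/elasticsearch/plugins
# Open up permissions on the Elasticsearch data directory so the container can read and write it
chmod 777 /home/pikaqiu/elk/elasticsearch/data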

2) Create the Kibana directory and configure kibana.yml

mkdir -p /home/pikaqiu/elk/kibana/config
touch /home/pikaqiu/elk/kibana/config/kibana.yml

Edit kibana.yml with the following content:

#
# ** THIS IS AN AUTO-GENERATED FILE **
#
# Default Kibana configuration for docker target
server.host: "0.0.0.0"
server.shutdownTimeout: "5s"
# Use the IP address of your own host here
elasticsearch.hosts: [ "http://192.168.88.158:9200" ]
monitoring.ui.container.elasticsearch.enabled: true
# Switch the UI to Chinese; remove this line to keep the default English interface
i18n.locale: "zh-CN"

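3) Create the Logstash directory and configure logstash.conf

mkdir -p /home/pikaqiu/elk/logstash/conf.d
touch /home/pikaqiu/elk/logstash/conf.d/logstash.conf

Edit logstash.conf with the following content:

Here Logstash uses the tcp input plugin; change it to file, beats, or another input as needed.

Because two microservices will be started, the pipeline needs two inputs. I use two tcp inputs on different ports and set up the outputs to match.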

input {
  # Two microservices were created, so define two inputs and route each service's logs to its own index
  tcp {
    mode => "server"    # server listens for client connections; client connects to a server
    host => "0.0.0.0"   # in server mode, the address to listen on; in client mode, the address to connect to
    type => "elk1"      # type distinguishes the input sources
    port => 4560        # in server mode, the port to listen on; in client mode, the port to connect to
    codec => json       # codec used to decode incoming data
  }
  tcp {
    mode => "server"
    host => "0.0.0.0"
    type => "elk2"
    port => 4660
    codec => json
  }
}

filter {
  # Configure as needed
}

output {
  if [type] == "elk1" {
    elasticsearch {
      action => "index"                 # index each event as a document
      hosts => "es:9200"                # Elasticsearch address and port; the es hostname comes from the links entry in docker-compose.yml
      index => "elk1-%{+YYYY.MM.dd}"    # index the event is written to; matches the index pattern in Kibana
    }
  }
  if [type] == "elk2" {
    elasticsearch {
      action => "index"
      hosts => "es:9200"
      index => "elk2-%{+YYYY.MM.dd}"
    }
  }
}
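The `index => "elk1-%{+YYYY.MM.dd}"` setting produces one index per day, and the date is taken from the event's `@timestamp`, which Logstash keeps in UTC. The sketch below mimics that naming in plain Java to show which index an event would land in. `DailyIndexName` and `indexFor` are hypothetical helper names, not part of Logstash. Note that `java.time` spells the calendar year `yyyy`, whereas the Logstash pattern uses the Joda-style `YYYY`.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

/** Mimics the daily index naming of "<type>-%{+YYYY.MM.dd}" (illustrative helper, not Logstash code). */
final class DailyIndexName {
    // The date part is formatted in UTC, independent of the JVM's default time zone
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    /** Returns the index name for an event of the given type and timestamp. */
    static String indexFor(String type, Instant timestamp) {
        return type + "-" + DAY.format(timestamp);
    }

    public static void main(String[] args) {
        // Index that an elk1 event logged right now would be written to
        System.out.println(indexFor("elk1", Instant.now()));
    }
}
```

One consequence worth knowing: an event logged at 00:30 on 28 April 2022 in Asia/Shanghai is 16:30 UTC on 27 April, so under this naming it belongs to `elk1-2022.04.27`.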

4) Create and configure docker-compose.yml

touch /home/pikaqiu/elk/docker-compose.yml

version: '3.7'
services:
  elasticsearch:
    # Image to start the container from (repository, tag, or image ID); Compose pulls it automatically if it is not present locally
    image: elasticsearch:7.17.1
    container_name: elasticsearch
    privileged: true
    user: root
    environment:
      # Set the cluster name to elasticsearch
      - cluster.name=elasticsearch
      # Start in single-node mode
      - discovery.type=single-node
      # JVM heap size
      - ES_JAVA_OPTS=-Xms512m -Xmx512m
    volumes:
      # Mount the plugin directory
      - /home/pikaqiu/elk/elasticsearch/plugins:/usr/share/elasticsearch/plugins
      # Mount the data directory
      - /home/pikaqiu/elk/elasticsearch/data:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
      - 9300:9300
  logstash:
    image: logstash:7.17.1
    container_name: logstash
    ports:
      - 4560:4560
      - 4660:4660
    privileged: true
    environment:
      - TZ=Asia/Shanghai
    volumes:
      # Mount the Logstash pipeline configuration file
      - /home/pikaqiu/elk/logstash/conf.d/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
    depends_on:
      - elasticsearch
    links:
      # Makes the elasticsearch service reachable under the hostname es
      - elasticsearch:es
  kibana:
    image: kibana:7.17.1
    container_name: kibana
    ports:
      - 5601:5601
    privileged: true
    links:
      # Makes the elasticsearch service reachable under the hostname es
      - elasticsearch:es
    depends_on:
      # Start kibana after elasticsearch has been started
      - elasticsearch
    environment:
      # Elasticsearch address; the Kibana image reads the upper-case, underscore form of the setting name
      - ELASTICSEARCH_HOSTS=http://elasticsearch:9200
    volumes:
      - /home/pikaqiu/elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml

3.1.2 Starting ELK with docker-compose

The complete directory layout:

pikaqiu@pikaqiu-virtual-machine:~/elk$ ll
-rw-rw-r-- 1 pikaqiu pikaqiu 1525 4月 27 15:16 docker-compose.yml
drwxrwxr-x 4 pikaqiu pikaqiu 4096 4月 22 14:33 elasticsearch/
drwxrwxr-x 2 pikaqiu pikaqiu 4096 4月 24 20:21 images/
drwxrwxr-x 3 pikaqiu pikaqiu 4096 4月 22 14:33 kibana/
drwxrwxr-x 3 pikaqiu pikaqiu 4096 4月 22 14:39 logstash/

Create and start the ELK containers:

cd /home/pikaqiu/elk
docker-compose up -d

If startup fails, stop and remove the containers before starting again. The command to stop and remove them:

docker-compose down
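After `docker-compose up -d` returns, the services may still need some time before they accept connections. As a rough check, the following sketch tries to open a TCP connection to each port published in the compose file (9200, 4560, 4660, 5601). `PortProbe` is a hypothetical helper written for this article, and the default host is the example IP used above. An open port only shows that something is listening, not that the service has finished initializing.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Minimal TCP reachability check (illustrative helper, not part of any ELK tooling). */
final class PortProbe {
    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host unreachable
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: the Docker host address from this article; pass your own as the first argument
        String host = args.length > 0 ? args[0] : "192.168.88.158";
        int[] ports = {9200, 4560, 4660, 5601};
        for (int port : ports) {
            System.out.println(host + ":" + port + " open=" + isOpen(host, port, 2000));
        }
    }
}
```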

3.2 Building the Spring Boot projects

Two microservices are prepared here to simulate a multi-service setup.

3.2.1 Microservice 1 (elk_test)

- pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.6.7</version>
        <relativePath/>
    </parent>
    <groupId>com.pikaqiu</groupId>
    <artifactId>elk_test</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>demo</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>1.8</java.version>
    </properties>
    <dependencies>
        <!-- Logback appender and encoder that ship JSON log events to Logstash -->
        <dependency>
            <groupId>net.logstash.logback</groupId>
            <artifactId>logstash-logback-encoder</artifactId>
            <version>5.3</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>

- logback-spring.xml

<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <include resource="org/springframework/boot/logging/logback/console-appender.xml"/>
    <property name="APP_NAME" value="springboot-logback-elk1-test"/>
    <contextName>${APP_NAME}</contextName>
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>192.168.88.158:4560</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
    </appender>
    <root level="info">
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>

- application.properties

server.port=8080

- TestController.java

package com.pikaqiu.controller;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class TestController {

    // Log4j 2 API calls are routed to Logback by the log4j-to-slf4j bridge in spring-boot-starter-logging
    private final Logger logger = LogManager.getLogger(this.getClass());

    @RequestMapping("/index1")
    public void testElk() {
        // The root level in logback-spring.xml is info, so the debug line is not shipped to Logstash
        logger.debug("======================= elk1 test ================");
        logger.info("======================= elk1 test ================");
        logger.warn("======================= elk1 test ================");
        logger.error("======================= elk1 test ================");
    }
}

3.2.2 Microservice 2 (elk_test2)

- pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.6.7</version>
        <relativePath/>
    </parent>
    <groupId>com.pikaqiu</groupId>
    <artifactId>elk_test2</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>demo</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>1.8</java.version>
    </properties>
    <dependencies>
        <!-- Logback appender and encoder that ship JSON log events to Logstash -->
        <dependency>
            <groupId>net.logstash.logback</groupId>
            <artifactId>logstash-logback-encoder</artifactId>
            <version>5.3</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>

- logback-spring.xml

<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <include resource="org/springframework/boot/logging/logback/console-appender.xml"/>
    <property name="APP_NAME" value="springboot-logback-elk2-test"/>
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>192.168.88.158:4660</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
    </appender>
    <root level="info">
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>

- application.properties

server.port=8081

- TestController.java

package com.pikaqiu.controller;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class TestController {

    // Log4j 2 API calls are routed to Logback by the log4j-to-slf4j bridge in spring-boot-starter-logging
    private final Logger logger = LogManager.getLogger(this.getClass());

    @RequestMapping("/index2")
    public void testElk() {
        // The root level in logback-spring.xml is info, so the debug line is not shipped to Logstash
        logger.debug("======================= elk2 test ================");
        logger.info("======================= elk2 test ================");
        logger.warn("======================= elk2 test ================");
        logger.error("======================= elk2 test ================");
    }
}

Note: the two microservices differ in the Logstash port set in logback-spring.xml (4560 for microservice 1, 4660 for microservice 2) and in server.port in application.properties (8080 and 8081).
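To check the port-to-index routing without starting either service, one option is to push a test event at a Logstash TCP input by hand. The sketch below is a simplified stand-in for what the Logback appender does: it writes a single JSON object followed by a newline, on the assumption that the tcp input accepts newline-delimited JSON. `RawLogSender`, the two field names, and the minimal escaping are hypothetical and meant for illustration only.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Sends one hand-built JSON log line over TCP (illustrative helper, not the Logback appender). */
final class RawLogSender {
    /** Builds a newline-terminated JSON event with two string fields. */
    static String jsonLine(String level, String message) {
        return "{\"level\":\"" + escape(level) + "\",\"message\":\"" + escape(message) + "\"}\n";
    }

    // Minimal escaping for this demo: only backslashes and double quotes are handled
    private static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    /** Opens a connection, writes the line as UTF-8, and closes the connection. */
    static void send(String host, int port, String line) throws IOException {
        try (Socket socket = new Socket(host, port);
             OutputStream out = socket.getOutputStream()) {
            out.write(line.getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumption: the Docker host address from this article; 4660 is the input tagged elk2
        send("192.168.88.158", 4660, jsonLine("INFO", "manual test event"));
    }
}
```

If the stack is running as configured above, an event sent to port 4660 should be tagged with type elk2 by the input and end up in that day's elk2-* index, while port 4560 should feed elk1-*.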

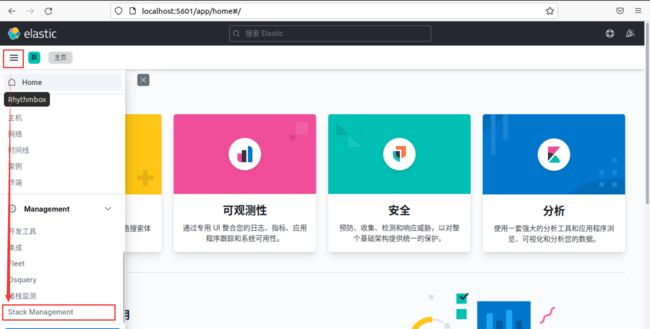

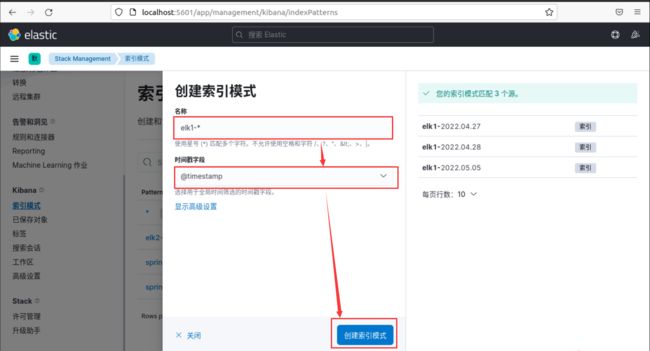

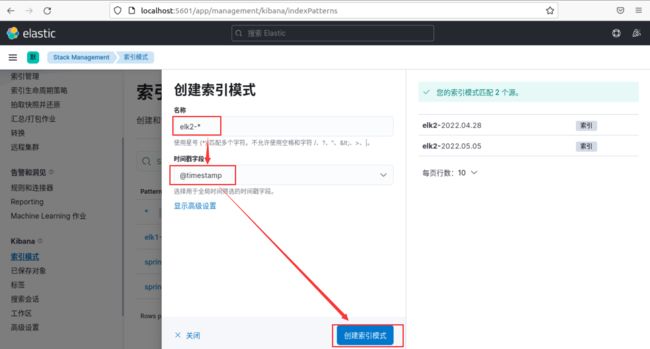

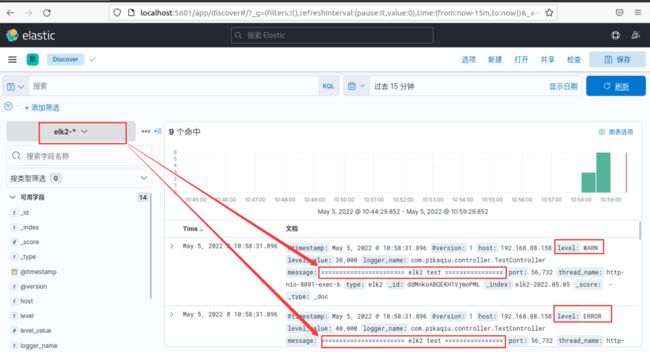

3.3 Kibana configuration

- Open http://192.168.88.158:5601/app/home to reach the Kibana web UI. Click the settings entry on the left to open the Management page

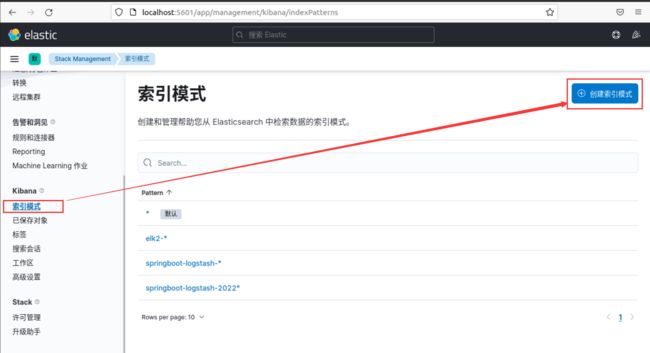

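- Select Index Patterns and click Create index pattern

- Fill in the name (elk1-*, then repeat the steps for elk2-*) and the timestamp field, and create the index pattern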

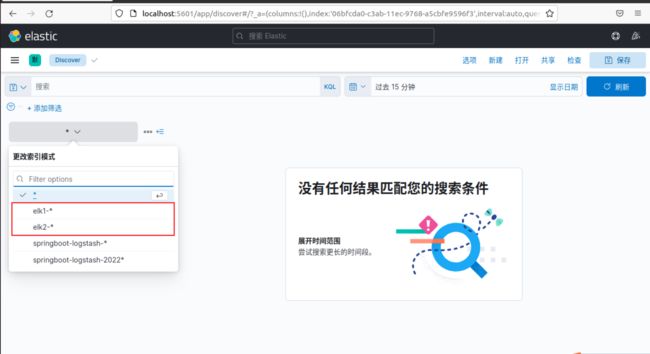

- Open Discover; the two index patterns just created are listed there

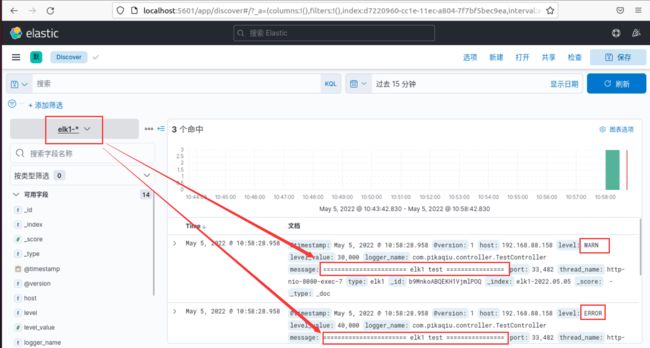

- Select the elk1-* index pattern to see the logs produced by microservice 1

- Select the elk2-* index pattern to see the logs produced by microservice 2
