基于Kubernets+Prometheus+ELK企业级CICD平台建设方案

一、云原生概述

1、云原生简介

云原生 = 微服务 + DevOps + 持续交付 + 容器化。

为什么选择云原生:

云原生核心优势:

解耦软件开发,提高灵活性和可维护性

- 基于容器镜像的软件分层,清晰的依赖管理;

- 玻璃程序、配置和微服务,让开发者聚焦业务发展;

- 通过拆分应用程序为微服务和明确的依赖描述;

多云支持,避免厂商锁定

- 厂商基于标准接口提供服务,互操作性强;

- 开源为主,丰富的标准软件生态,用户多样选择;

- 支持在混合云、私有云或混合云部署;

避免侵入式定制

- 基于k8s松耦合平台架构,易扩展;

- k8s已经是被公认 platform for platform;

提高工作效率和资源利用率

- 通过中心编排过程动态管理和调度应用微服务;

| 传统 | 云原生 | |

|---|---|---|

| 重点关注 | 使用寿命和稳定性 | 上市速度 |

| 开发方法 | 瀑布式半敏捷型开发 | 敏捷开发、DevOps |

| 团队 | 相互独立的开发、运维、质量保证和安全团队 | 协作式 DevOps 团队 |

| 交付周期 | 长 | 短且持续 |

| 应用架构 | 紧密耦合 单体式 |

松散耦合 基于服务 基于应用编程接口(API)的通信 |

| 基础架构 | 以服务器为中心 适用于企业内部 依赖于基础架构 纵向扩展 针对峰值容量预先进行置备 |

以容器为中适用于企业内部和云环境 可跨基础架构进行移植 横向扩展 按需提供容量 |

2、DevOps简介

顾名思义,DevOps就是开发(Development)与运维(Operations)的结合体,其目的就是打通开发与运维之间的壁垒,促进开发、运营和质量保障(QA)等部门之间的沟通协作,以便对产品进行小规模、快速迭代式地开发和部署,快速响应客户的需求变化。它强调的是开发运维一体化,加强团队间的沟通和快速反馈,达到快速交付产品和提高交付质量的目的。

DevOps并不是一种新的工具集,而是一种思想,一种文化,用以改变传统开发运维模式的一组最佳实践。一般做法是通过一些CI/CD(持续集成、持续部署)自动化的工具和流程来实现DevOps的思想,以流水线(pipeline)的形式改变传统开发人员和测试人员发布软件的方式。随着Docker和Kubernetes(以下简称k8s)等技术的普及,容器云平台基础设施越来越完善,加速了开发和运维角色的融合,使云原生的DevOps实践成为以后的趋势。下面我们基于混合容器云平台详细讲解下云平台下DevOps的落地方案。

云原生DevOps特点:

DevOps是PaaS平台里很关键的功能模块,包含以下重要能力:支持代码克隆、编译代码、运行脚本、构建发布镜像、部署yaml文件以及部署Helm应用等环节;支持丰富的流水线设置,比如资源限额、流水线运行条数、推送代码以及推送镜像触发流水线运行等,提供了用户在不同环境下的端到端高效流水线能力;提供开箱即用的镜像仓库中心;提供流水线缓存功能,可以自由配置整个流水线或每个步骤的运行缓存,在代码克隆、编译代码、构建镜像等步骤时均可利用缓存大大缩短运行时间,提升执行效率。具体功能清单如下:

- 缓存加速:自研容器化流水线的缓存技术,通过代码编译和镜像构建的缓存复用,平均加速流水线3~5倍;

- 细粒度缓存配置:任一阶段、步骤可以控制是否开启缓存及缓存路径;

- 支持临时配置:用户无需提交即可运行临时配置,避免频繁提交配置文件污染代码仓库;

- 开箱即用的镜像仓库;

- 提供完整的日志功能;

- 可视化编辑界面,灵活配置流水线;

- 支持多种代码仓库授权:GitHub、GitLab、Bitbucket等;

- 多种流水线触发方式:代码仓库触发,镜像推送触发等;

- 网络优化,加快镜像或依赖包的下载速度;

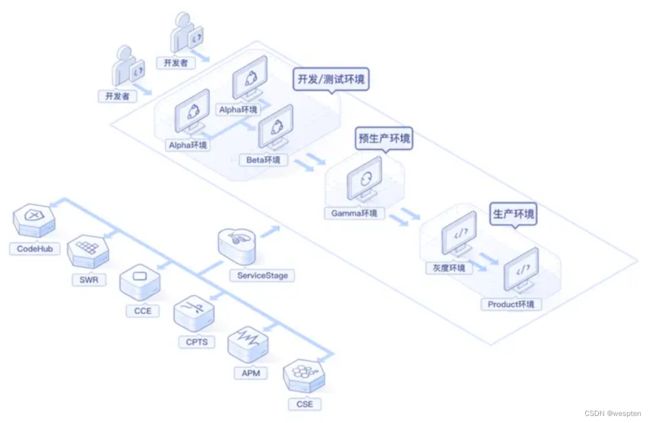

云原生DevOps实践:

- 容器基础平台

- 微服务应用发布平台

- Istio微服务治理

- APM 应用性能检测

3、容器云平台

云原生应用开发所构建和运行的应用,旨在充分利用基于四大原则的云计算模型:

-

基于服务的架构:基于服务的架构(如微服务)提倡构建松散耦合的模块化服务。采用基于服务的松散耦合设计,可帮助企业提高应用创建速度,降低复杂性。

-

基于API 的通信:即通过轻量级 API 来进行服务之间的相互调用。通过API驱动的方式,企业可以通过所提供的API 在内部和外部创建新的业务功能,极大提升了业务的灵活性。此外,采用基于API 的设计,在调用服务时可避免因直接链接、共享内存模型或直接读取数据存储而带来的风险。

-

基于容器的基础架构:云原生应用依靠容器来构建跨技术环境的通用运行模型,并在不同的环境和基础架构(包括公有云、私有云和混合云)间实现真正的应用可移植性。此外,容器平台有助于实现云原生应用的弹性扩展。

-

基于DevOps流程:采用云原生方案时,企业会使用敏捷的方法、依据持续交付和DevOps 原则来开发应用。这些方法和原则要求开发、质量保证、安全、IT运维团队以及交付过程中所涉及的其他团队以协作方式构建和交付应用。

微服务发布流程:

由管理平台实现两种方式发布管理:

- 手动上传资源包,以Deployment 方式部署容器应用;

- 实现自动化CI/CD流程;

1)手动上传资源包流程

- 用户上传本地XXX.zip 资源包

- 资源包包含:可执行文件、可编译Dockerfile文件

- 平台自动完成镜像编译,并上传到harbor仓库。

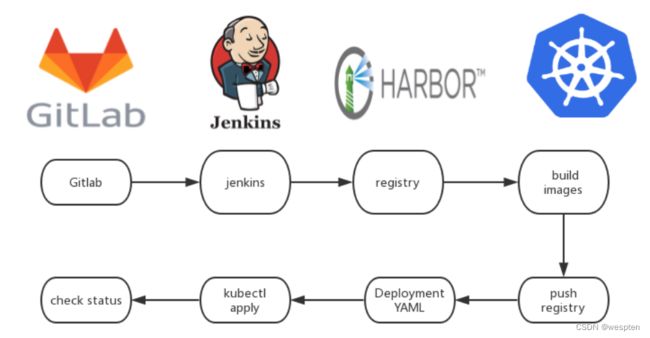

2)实现自动化CI/CD流程

- pull代码

- 测试registry,并登陆registry.

- 编写应用 Dockerfile

- 构建打包 Docker 镜像

- 推送 Docker 镜像到仓库

- 更改 Deployment YAML 文件中参数

- 利用 kubectl 工具部署应用

- 检查应用状态

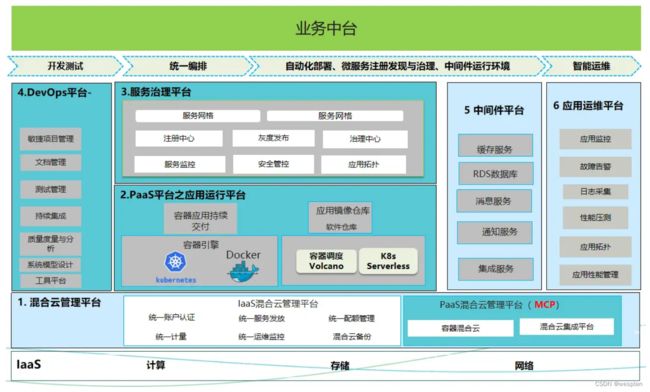

微服务平台架构设计:

基础服务平台设计:

- Jenkins【持续集成】

- Prometheus【监控系统】

- ELK 【日志分析系统】

- Jumpserver【堡垒机】

- Nexus 【制品仓库】

- SWR 【容器镜像仓库】

- LDAP 【统一身份认证】

- DNS 【私有DNS】

- APM 【应用性能分析】

- Ansible 【基础服务管理】

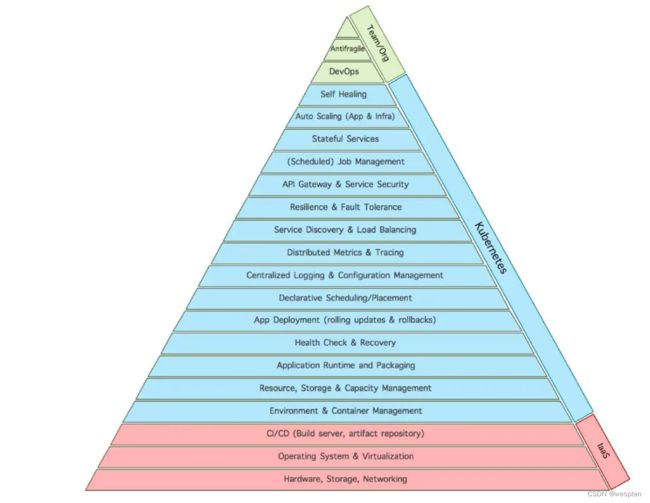

二、Kubernetes架构与术语

kubernetes在云原生中的地位:

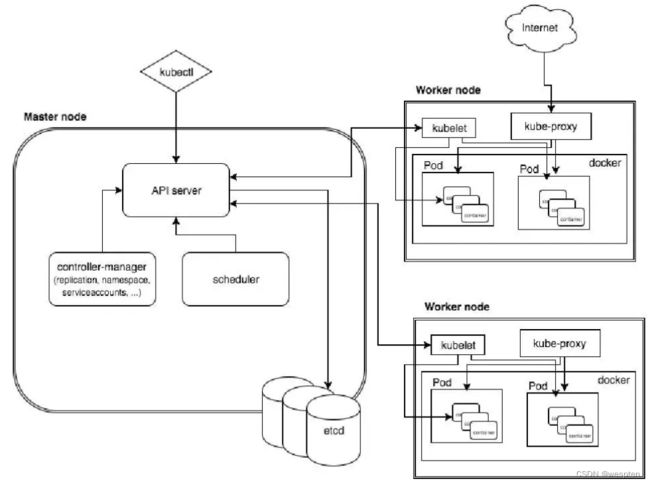

kubernetes架构图:

1. master

-

- Kubernets API server,提供

- Kubernets Controlle Manager (kube-controller-manager),kubernets 里面所有资源对象的自动化控制中心,可以理解为资源对象的大总管。

- Kubernets Scheduler (kube-scheduler),负责资源调度(pod调度)的进程,相当去公交公司的‘调度室’

2. node

每个Node 节点上都运行着以下一组关键进程:

- kubelet:负责pod对应的容器创建、启停等任务,同时与master 节点密切协作,实现集群管理的基本功能。

- kube-proxy:实现kubernetes Service 的通信与负载均衡机制的重要组件。

- Docker Engine: Docker 引擎,负责本机的容器创建和管理工作。

3. pod

Pod是kubernetes中可以创建的最小部署单元,V1 core版本的Pod的配置模板见Pod Template。

创建一个tomcat实例:

apiVersion: vl

kind: Pod

metadata:

name: myweb

labels:

name: myweb

spec:

containers:

name: myweb

image: kubeguide/tomcat-app:vl

ports:

containerPort: 8080

env:

name: MYSQL_SERVICE_HOST

value: 'mysql'

name: MYSQL_SERVICE_PORT

value: '3306'4. label(标签)

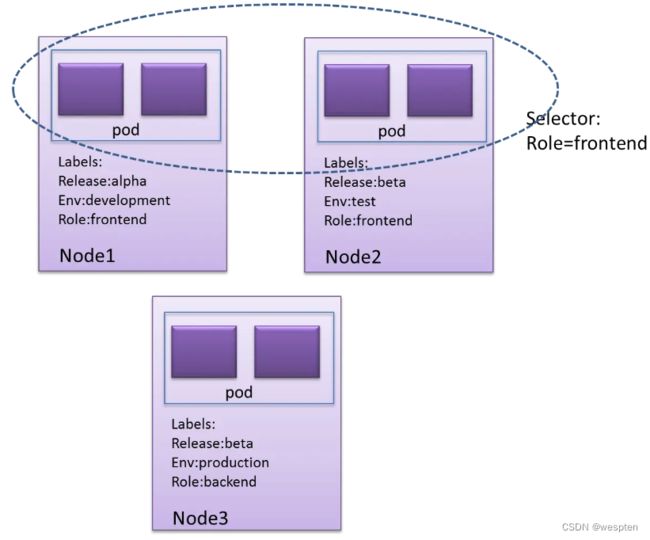

一个label是一个key=value的简直对,其中key与value由用户自己指定。Label可以附加到各种资源对象上,列入Node、pod、Server、RC 等。

Label 示例如下:

版本标签:release:stable,release:canary

环境标签:environment:dev,environment:qa,environment:production

架构标签: tier:frontend,tier:backend,tier:cache

分区标签:partition:customerA,partition:customerB

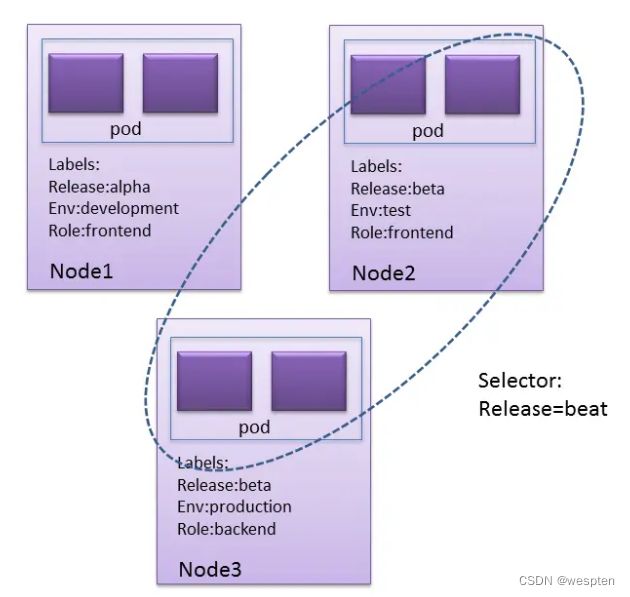

质量管控标签:track:daily,track:weeklyLabel selector:

Label不是唯一的,很多object可能有相同的label。

通过label selector,客户端/用户可以指定一个object集合,通过label selector对object的集合进行操作。

- equality-based :可以使用=、==、!=操作符,可以使用逗号分隔多个表达式

- et-based :可以使用in、notin、!操作符,另外还可以没有操作符,直接写出某个label的key,表示过滤有某个key的object而不管该key的value是何值,!表示没有该label的object

示例:

$ kubectl get pods -l 'environment=production,tier=frontend'

$ kubectl get pods -l 'environment in (production),tier in (frontend)'

$ kubectl get pods -l 'environment in (production, qa)'

$ kubectl get pods -l 'environment,environment notin (frontend)'Label Selector 的作用范围1:

Label Selector 的作用范围2:

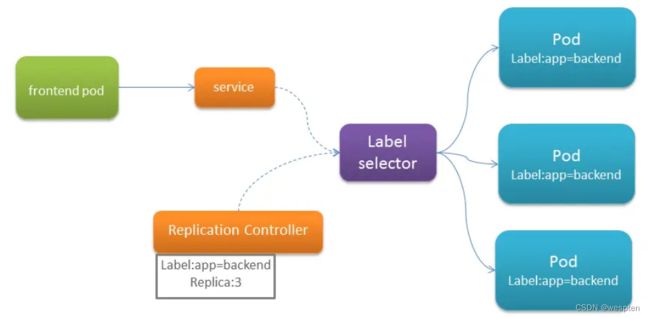

5. Replication Contoller

定义了一个期望的场景,即声明某种Pod的副本数量在任意时刻都符合某个预期值,所以RC的定义包含如下几个场景:

- Pod 期待的副本数

- 用于筛选Pod的Label Selector

- 当pod的副本数量小于预期的时候,用于创建新的Pod的pod模板(Template)

一个完整的RC示例:

apiVersion: extensions/v1beta1

kind: ReplicaSet

metadata:

name: frontend

# these labels can be applied automatically

# from the labels in the pod template if not set

# labels:

# app: guestbook

# tier: frontend

spec:

# this replicas value is default

# modify it according to your case

replicas: 3

# selector can be applied automatically

# from the labels in the pod template if not set,

# but we are specifying the selector here to

# demonstrate its usage.

selector:

matchLabels:

tier: frontend

matchExpressions:

- {key: tier, operator: In, values: [frontend]}

template:

metadata:

labels:

app: guestbook

tier: frontend

spec:

containers:

- name: php-redis

image: gcr.io/google_samples/gb-frontend:v3

resources:

requests:

cpu: 100m

memory: 100Mi

env:

- name: GET_HOSTS_FROM

value: dns

# If your cluster config does not include a dns service, then to

# instead access environment variables to find service host

# info, comment out the 'value: dns' line above, and uncomment the

# line below.

# value: env

ports:

- containerPort: 806. Deployment

Deployment 为 Pod 和 ReplicaSet 提供了一个声明式定义(declarative)方法,用来替代以前的ReplicationController 来方便的管理应用。

典型的应用场景包括:

- 使用Deployment来创建ReplicaSet。ReplicaSet在后台创建pod。检查启动状态,看它是成功还是失败。

- 然后,通过更新Deployment的PodTemplateSpec字段来声明Pod的新状态。这会创建一个新的ReplicaSet,Deployment会按照控制的速率将pod从旧的ReplicaSet移动到新的ReplicaSet中。

- 如果当前状态不稳定,回滚到之前的Deployment revision。每次回滚都会更新Deployment的revision。

- 扩容Deployment以满足更高的负载。

- 暂停Deployment来应用PodTemplateSpec的多个修复,然后恢复上线。

- 根据Deployment 的状态判断上线是否hang住了。

- 清除旧的不必要的 ReplicaSet。

一个简单的nginx 应用可以定义为:

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: nginx-deployment

spec:

replicas: 3

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.7.9

ports:

- containerPort: 807. Horizontal Pod Autoscaler

应用的资源使用率通常都有高峰和低谷的时候,如何削峰填谷,提高集群的整体资源利用率,让service中的Pod个数自动调整呢?

这就有赖于Horizontal Pod Autoscaling了,顾名思义,使Pod水平自动缩放。这个Object(跟Pod、Deployment一样都是API resource)也是最能体现kubernetes之于传统运维价值的地方,不再需要手动扩容了,终于实现自动化了,还可以自定义指标,没准未来还可以通过人工智能自动进化呢!

Metrics支持:

在不同版本的API中,HPA autoscale时可以根据以下指标来判断:

- autoscaling/v1

- CPU

- autoscaling/v2alpha1

- 内存

- 自定义

- kubernetes1.6起支持自定义metrics,但是必须在kube-controller-manager中配置如下两项

- --horizontal-pod-autoscaler-use-rest-clients=true

- --api-server指向kube-aggregator,也可以使用heapster来实现,通过在启动heapster的时候指定--api-server=true。查看kubernetes metrics

- kubernetes1.6起支持自定义metrics,但是必须在kube-controller-manager中配置如下两项

- 多种metrics组合

- HPA会根据每个metric的值计算出scale的值,并将最大的那个指作为扩容的最终结果

一个简单的HPA示例:

apiVersion: autoscaling/vl

kind: HorizontalPodAutoscaler

metadata:

name: php-apache

namespace: default

spec:

maxReplicas: 5

minReplicas: 2

scaleTargetRef:

kind: Deployment

name: php-apache

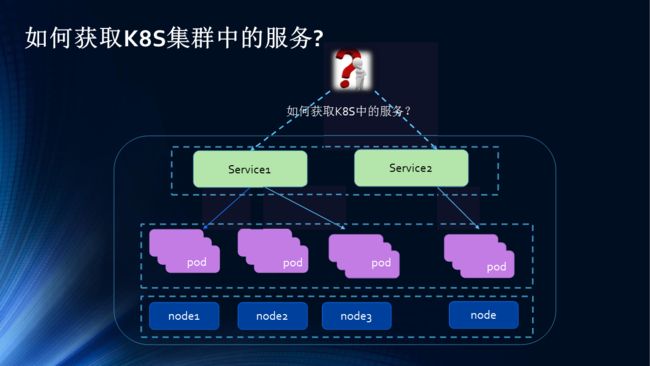

targetCPUUtilizationPercentage: 908. Service

Kubernetes Service 定义了这样一种抽象:一个 Pod 的逻辑分组,一种可以访问它们的策略 —— 通常称为微服务。 这一组 Pod 能够被 Service 访问到,通常是通过 Label Selector(查看下面了解,为什么可能需要没有 selector 的 Service)实现的。

Pod、RC 与Service的关系:

Endpoint=Pod IP + Container Port。

一个service示例:

kind: Service

apiVersion: v1

metadata:

name: my-service

spec:

selector:

app: MyApp

ports:

- protocol: TCP

port: 80

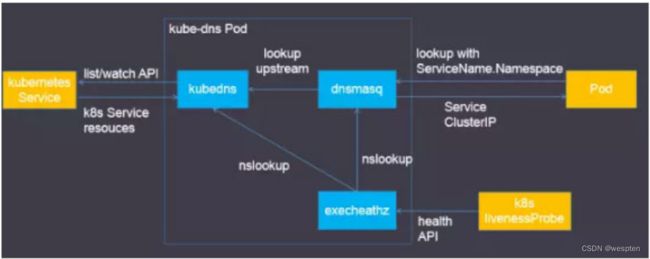

targetPort: 93761)kubernetes的服务发现机制

Kubernetes支持2种基本的服务发现模式 —— 环境变量和DNS。

- 环境变量

当 Pod 运行在 Node 上,kubelet 会为每个活跃的 Service 添加一组环境变量。 它同时支持 Docker links 兼容 变量(查看 makeLinkVariables)、简单的 {SVCNAME}_SERVICE_HOST 和 {SVCNAME}_SERVICE_PORT 变量,这里 Service 的名称需大写,横线被转换成下划线。

举个例子,一个名称为 "redis-master" 的 Service 暴露了 TCP 端口 6379,同时给它分配了 Cluster IP 地址 10.0.0.11,这个 Service 生成了如下环境变量:

REDIS_MASTER_SERVICE_HOST=10.0.0.11

REDIS_MASTER_SERVICE_PORT=6379

REDIS_MASTER_PORT=tcp://10.0.0.11:6379

REDIS_MASTER_PORT_6379_TCP=tcp://10.0.0.11:6379

REDIS_MASTER_PORT_6379_TCP_PROTO=tcp

REDIS_MASTER_PORT_6379_TCP_PORT=6379

REDIS_MASTER_PORT_6379_TCP_ADDR=10.0.0.11-

DNS

kubernets 通过Add-On增值包的方式引入了DNS 系统,把服务名称作为DNS域名,这样一来,程序就可以直接使用服务名来建立通信连接。目前kubernetes上的大部分应用都已经采用了DNS这一种发现机制,在后面的章节中我们会讲述如何部署与使用这套DNS系统。

2)外部系统访问service的问题

为了更加深刻的理解和掌握Kubernetes,我们需要弄明白kubernetes里面的“三种IP”这个关键问题,这三种IP 分别如下:

- Node IP: Node(物理主机)的IP地址

- Pod IP:Pod的IP地址

- Cluster IP: Service 的IP地址

3)服务类型

对一些应用(如 Frontend)的某些部分,可能希望通过外部(Kubernetes 集群外部)IP 地址暴露 Service。

Kubernetes ServiceTypes 允许指定一个需要的类型的 Service,默认是 ClusterIP 类型。

Type 的取值以及行为如下:

- ClusterIP:通过集群的内部 IP 暴露服务,选择该值,服务只能够在集群内部可以访问,这也是默认的 ServiceType

- NodePort:通过每个 Node 上的 IP 和静态端口(NodePort)暴露服务。NodePort 服务会路由到 ClusterIP 服务,这个 ClusterIP 服务会自动创建。通过请求 :,可以从集群的外部访问一个 NodePort 服务

- LoadBalancer:使用云提供商的负载均衡器,可以向外部暴露服务。外部的负载均衡器可以路由到 NodePort 服务和 ClusterIP 服务。

- ExternalName:通过返回 CNAME 和它的值,可以将服务映射到 externalName 字段的内容(例如, foo.bar.example.com)。 没有任何类型代理被创建,这只有 Kubernetes 1.7 或更高版本的 kube-dns 才支持。

一个Node Port 的简单示例:

apiVersion: vl

kind: Service

metadata;

name: tomcat-service

spec:

type: NodePort

ports:

- port: 8080

nodePort: 31002

selector:

tier: frontend9. Volume 存储卷

我们知道默认情况下容器的数据都是非持久化的,在容器消亡以后数据也跟着丢失,所以Docker提供了Volume机制以便将数据持久化存储。类似的,Kubernetes提供了更强大的Volume机制和丰富的插件,解决了容器数据持久化和容器间共享数据的问题。

1)Volume

目前,Kubernetes支持以下Volume类型:

- emptyDir

- hostPath

- gcePersistentDisk

- awsElasticBlockStore

- nfs

- iscsi

- flocker

- glusterfs

- rbd

- cephfs

- gitRepo

- secret

- persistentVolumeClaim

- downwardAPI

- azureFileVolume

- vsphereVolume

- flexvolume 注意,这些volume并非全部都是持久化的,比如emptyDir、secret、gitRepo等,这些volume会随着Pod的消亡而消失。

2)PersistentVolume

对于持久化的Volume,PersistentVolume (PV)和PersistentVolumeClaim (PVC)提供了更方便的管理卷的方法:PV提供网络存储资源,而PVC请求存储资源。这样,设置持久化的工作流包括配置底层文件系统或者云数据卷、创建持久性数据卷、最后创建claim来将pod跟数据卷关联起来。PV和PVC可以将pod和数据卷解耦,pod不需要知道确切的文件系统或者支持它的持久化引擎。

3)PV

PersistentVolume(PV)是集群之中的一块网络存储。跟 Node 一样,也是集群的资源。PV 跟 Volume (卷) 类似,不过会有独立于 Pod 的生命周期。比如一个本地的PV可以定义为

kind: PersistentVolume

apiVersion: v1

metadata:

name: grafana-pv-volume

labels:

type: local

spec:

storageClassName: grafana-pv-volume

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Recycle

hostPath:

path: "/data/volume/grafana"pv的访问模式有三种:

- 第一种,ReadWriteOnce:是最基本的方式,可读可写,但只支持被单个Pod挂载。

- 第二种,ReadOnlyMany:可以以只读的方式被多个Pod挂载。

- 第三种,ReadWriteMany:这种存储可以以读写的方式被多个Pod共享。不是每一种存储都支持这三种方式,像共享方式,目前支持的还比较少,比较常用的是NFS。在PVC绑定PV时通常根据两个条件来绑定,一个是存储的大小,另一个就是访问模式

4)StorageClass

上面通过手动的方式创建了一个NFS Volume,这在管理很多Volume的时候不太方便。Kubernetes还提供了StorageClass来动态创建PV,不仅节省了管理员的时间,还可以封装不同类型的存储供PVC选用。

Ceph RBD的例子:

apiVersion: storage.k8s.io/v1beta1

kind: StorageClass

metadata:

name: fast

provisioner: kubernetes.io/rbd

parameters:

monitors: 10.16.153.105:6789

adminId: kube

adminSecretName: ceph-secret

adminSecretNamespace: kube-system

pool: kube

userId: kube

userSecretName: ceph-secret-user5)PVC

PV是存储资源,而PersistentVolumeClaim (PVC) 是对PV的请求。PVC跟Pod类似:Pod消费Node的源,而PVC消费PV资源;Pod能够请求CPU和内存资源,而PVC请求特定大小和访问模式的数据卷。

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: grafana-pvc-volume

namespace: "monitoring"

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 5Gi

storageClassName: grafana-pv-volumePVC可以直接挂载到Pod中:

kind: Pod

apiVersion: v1

metadata:

name: mypod

spec:

containers:

- name: myfrontend

image: dockerfile/nginx

volumeMounts:

- mountPath: "/var/www/html"

name: mypd

volumes:

- name: mypd

persistentVolumeClaim:

claimName: grafana-pvc-volume6)其他volume 说明

① NFS

NFS 是Network File System的缩写,即网络文件系统。Kubernetes中通过简单地配置就可以挂载NFS到Pod中,而NFS中的数据是可以永久保存的,同时NFS支持同时写操作。

volumes:

- name: nfs

nfs:

# FIXME: use the right hostname

server: 10.254.234.223

path: "/data"② emptyDir

如果Pod配置了emptyDir类型Volume, Pod 被分配到Node上时候,会创建emptyDir,只要Pod运行在Node上,emptyDir都会存在(容器挂掉不会导致emptyDir丢失数据),但是如果Pod从Node上被删除(Pod被删除,或者Pod发生迁移),emptyDir也会被删除,并且永久丢失。

apiVersion: v1

kind: Pod

metadata:

name: test-pd

spec:

containers:

- image: gcr.io/google_containers/test-webserver

name: test-container

volumeMounts:

- mountPath: /test-pd

name: test-volume

volumes:

- name: test-volume

emptyDir: {}10. namespace

在一个Kubernetes集群中可以使用namespace创建多个“虚拟集群”,这些namespace之间可以完全隔离,也可以通过某种方式,让一个namespace中的service可以访问到其他的namespace中的服务,我们在CentOS中部署kubernetes1.6集群的时候就用到了好几个跨越namespace的服务,比如Traefik ingress和kube-systemnamespace下的service就可以为整个集群提供服务,这些都需要通过RBAC定义集群级别的角色来实现。

哪些情况下适合使用多个namesapce?

因为namespace可以提供独立的命名空间,因此可以实现部分的环境隔离。当你的项目和人员众多的时候可以考虑根据项目属性,例如生产、测试、开发划分不同的namespace。

Namespace使用,获取集群中有哪些namespace:

kubectl get ns集群中默认会有default和kube-system这两个namespace。

在执行kubectl命令时可以使用-n指定操作的namespace。

用户的普通应用默认是在default下,与集群管理相关的为整个集群提供服务的应用一般部署在kube-system的namespace下,例如我们在安装kubernetes集群时部署的kubedns、heapseter、EFK等都是在这个namespace下面。

另外,并不是所有的资源对象都会对应namespace,node和persistentVolume就不属于任何namespace。

三、Jenkins CI/CD

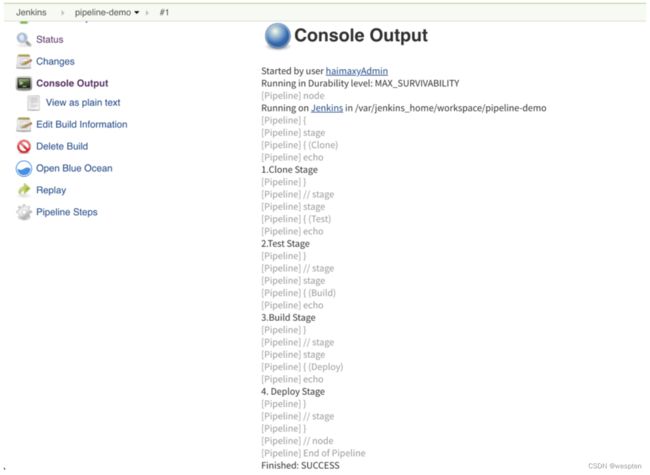

1、Jenkins CI/CD 流程

- pull代码

- 测试registry,并登陆registry.

- 编写应用 Dockerfile

- dockerfile 对应用代码无侵入,可以实时修改。

- 构建打包 Docker 镜像

- 推送 Docker 镜像到仓库

- 持续发布至容器集群

- 支持自动化自定义渲染多种K8S 资源类型:deployment、statefulset、pvc、configmap

- 持续发布支持常见集成工具:helm、kustomize、argocd

- 应用发布支持多环境部署:区分namespace、集群、权限审核

- 支持微服务应用一件发布

- Deployment 部署模板设计

- 镜像ID:使用8位Commit ID

- 启动探针:存活探针、启动探针、业务探针

- 应用cpu mem资源限制:limit、request

- 检查应用状态

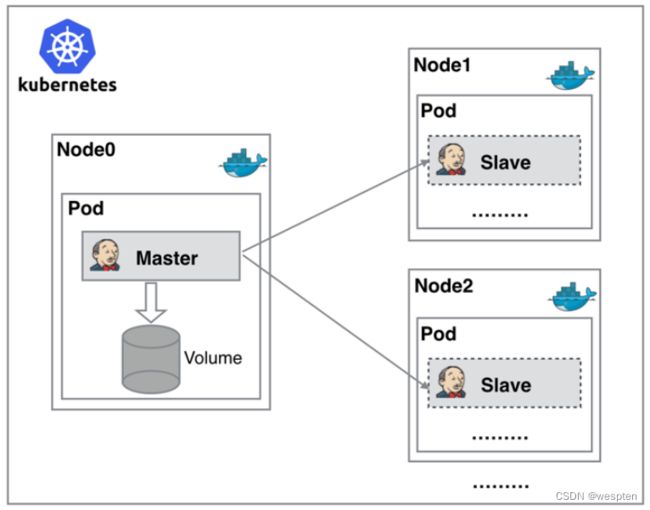

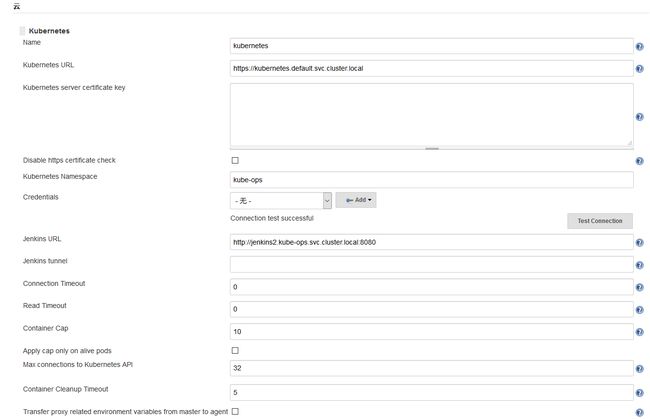

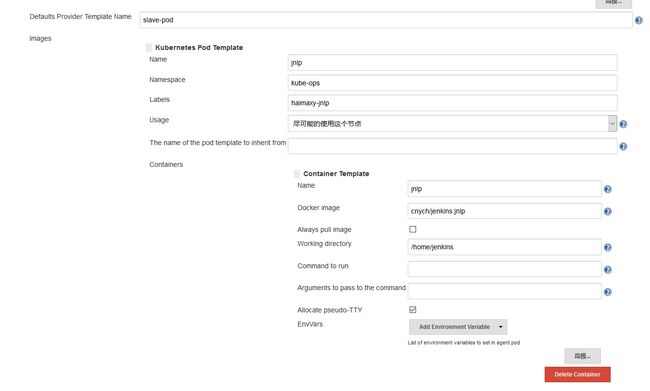

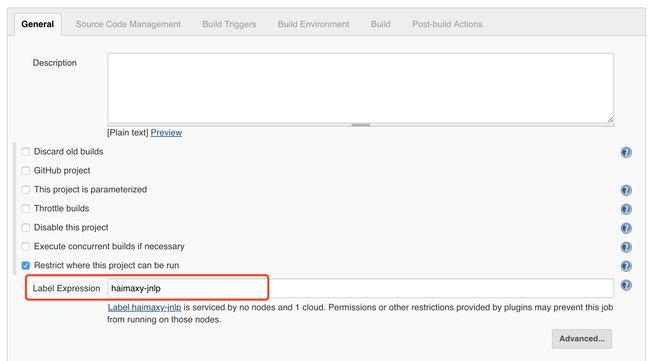

2、Jenkins in Kubernetes

传统的 Jenkins Slave 一主多从方式会存在一些痛点?

- 主 Master 发生单点故障时,整个流程都不可用了

- 每个 Slave 的配置环境不一样,来完成不同语言的编译打包等操作,但是这些差异化的配置导致管理起来非常不方便,维护起来也是比较费劲

- 资源分配不均衡,有的 Slave 要运行的 job 出现排队等待,而有的 Slave 处于空闲状态

- 资源有浪费,每台 Slave 可能是物理机或者虚拟机,当 Slave 处于空闲状态时,也不会完全释放掉资源。

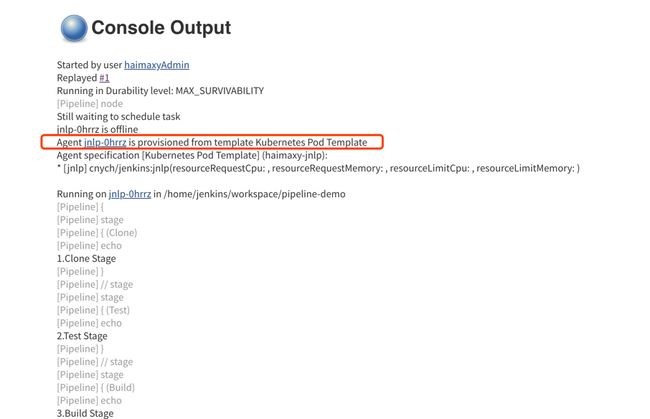

正因为上面的这些种种痛点,我们渴望一种更高效更可靠的方式来完成这个 CI/CD 流程,而 Docker 虚拟化容器技术能很好的解决这个痛点,又特别是在 Kubernetes 集群环境下面能够更好来解决上面的问题,下图是基于 Kubernetes 搭建 Jenkins 集群的简单示意图:

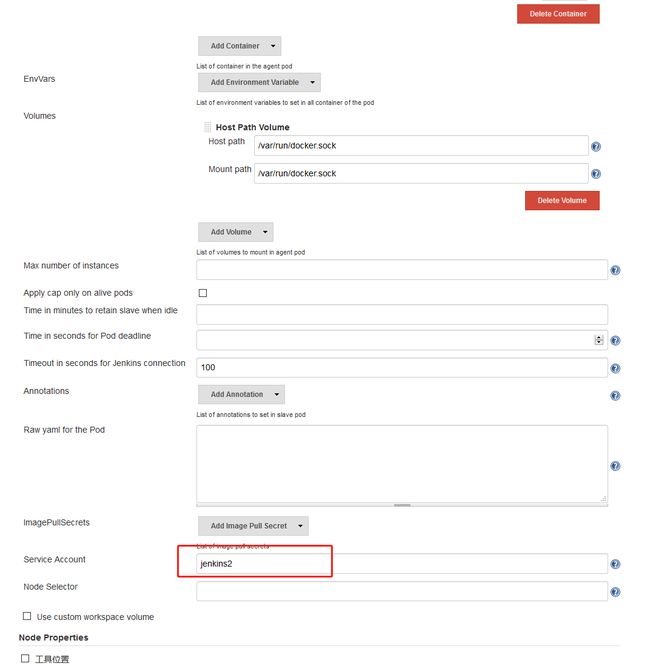

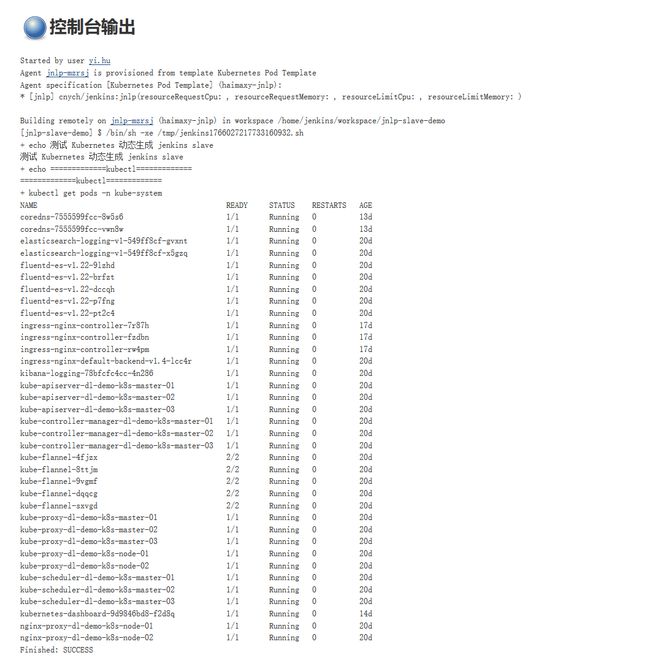

从图上可以看到 Jenkins Master 和 Jenkins Slave 以 Pod 形式运行在 Kubernetes 集群的 Node 上,Master 运行在其中一个节点,并且将其配置数据存储到一个 Volume 上去,Slave 运行在各个节点上,并且它不是一直处于运行状态,它会按照需求动态的创建并自动删除。

这种方式的工作流程大致为:当 Jenkins Master 接受到 Build 请求时,会根据配置的 Label 动态创建一个运行在 Pod 中的 Jenkins Slave 并注册到 Master 上,当运行完 Job 后,这个 Slave 会被注销并且这个 Pod 也会自动删除,恢复到最初状态。

那么我们使用这种方式带来了哪些好处呢?

- 服务高可用,当 Jenkins Master 出现故障时,Kubernetes 会自动创建一个新的 Jenkins Master 容器,并且将 Volume 分配给新创建的容器,保证数据不丢失,从而达到集群服务高可用。

- 动态伸缩,合理使用资源,每次运行 Job 时,会自动创建一个 Jenkins Slave,Job 完成后,Slave 自动注销并删除容器,资源自动释放,而且 Kubernetes 会根据每个资源的使用情况,动态分配 Slave 到空闲的节点上创建,降低出现因某节点资源利用率高,还排队等待在该节点的情况。

- 扩展性好,当 Kubernetes 集群的资源严重不足而导致 Job 排队等待时,可以很容易的添加一个 Kubernetes Node 到集群中,从而实现扩展。

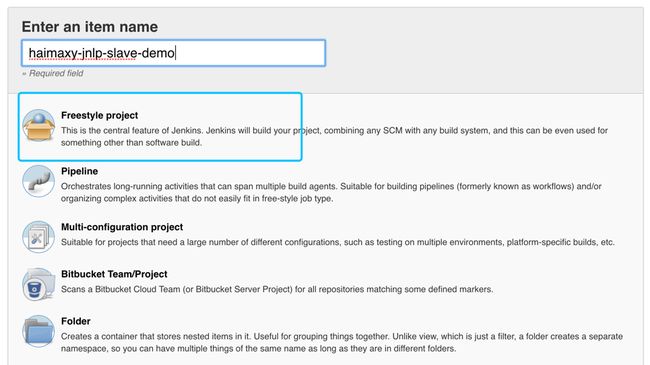

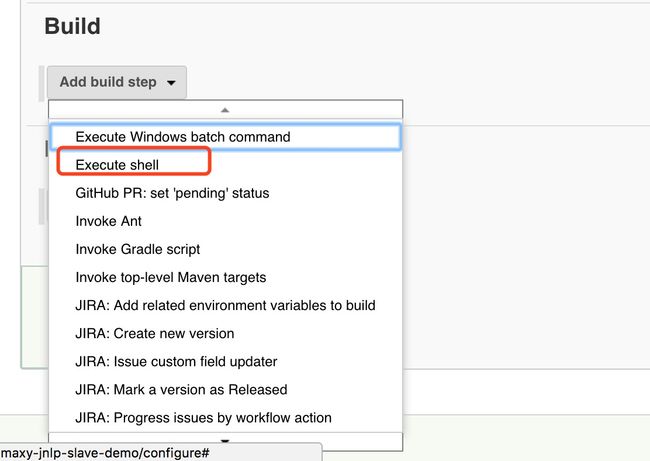

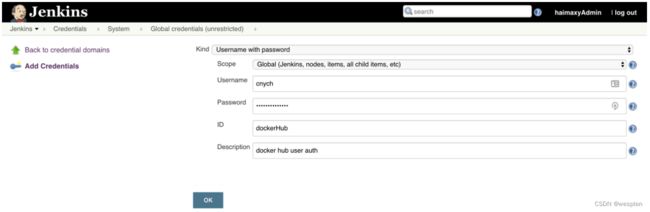

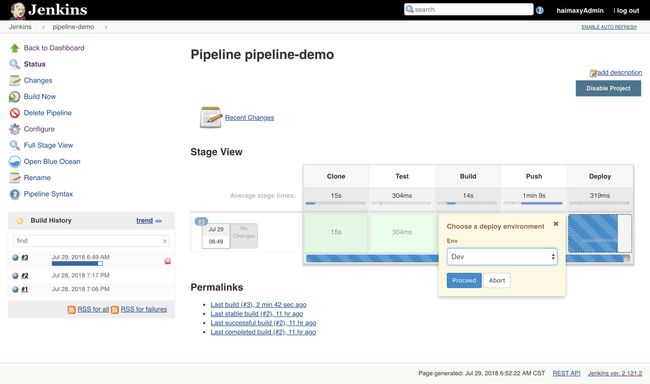

3、在Jenkins中部署Kubernetes应用

如何部署一个云原生的Kubernetes应用?

说明:

- 用户向Gitlab提交代码,代码中必须包含Dockerfile;

- 将代码提交到远程仓库;

- 用户在发布应用时需要填写git仓库地址和分支、服务类型、服务名称、资源数量、实例个数,确定后触发Jenkins自动构建;

- Jenkins的CI流水线自动编译代码并打包成docker镜像推送到Harbor镜像仓库;

- Jenkins的CI流水线中包括了自定义脚本,根据我们已准备好的kubernetes的YAML模板,将其中的变量替换成用户输入的选项;

- 生成应用的kubernetes YAML配置文件;

- 更新Ingress的配置,根据新部署的应用的名称,在ingress的配置文件中增加一条路由信息;

- 更新PowerDNS,向其中插入一条DNS记录,IP地址是边缘节点的IP地址。关于边缘节点,请查看边缘节点配置;

- Jenkins调用kubernetes的API,部署应用;

kubernetes应用构建和发布流程流程概括如下:

- pull代码

- 测试registry,并登陆registry.

- 编写应用 Dockerfile

- 构建打包 Docker 镜像

- 推送 Docker 镜像到仓库

- 更改 Deployment YAML 文件中参数

- 利用 kubectl 工具部署应用

- 检查应用状态

第一步,Pull 代码及全局环境变量申明

#!/bin/bash

# Filename: k8s-deploy_v0.2.sh

# Description: jenkins CI/CD 持续发布脚本

# Author: yi.hu

# Email: [email protected]

# Revision: 1.0

# Date: 2018-08-10

# Note: prd

# zookeeper基础服务,依照环境实际地址配置

init() {

local lowerEnv="$(echo ${AppEnv} | tr '[:upper:]' 'lower')"

case "${lowerEnv}" in

dev)

CFG_ADDR="10.34.11.186:4181"

DR_CFG_ZOOKEEPER_ENV_URL="10.34.11.186:4181"

;;

demo)

CFG_ADDR="10.34.11.186:4181"

DR_CFG_ZOOKEEPER_ENV_URL="10.34.11.186:4181"

;;

*)

echo "Not support AppEnv: ${AppEnv}"

exit 1

;;

esac

}

# 函数执行

init

# 初始化变量

AppId=$(echo ${AppOrg}_${AppEnv}_${AppName} |sed 's/[^a-zA-Z0-9_-]//g' | tr "[:lower:]" "[:upper:]")

CFG_LABEL=${CfgLabelBaseNode}/${AppId}

CFG_ADDR=${CFG_ADDR}

VERSION=$(echo "${GitBranch}" | sed 's@release/@@')第二步,登录harbor 仓库

docker_login () {

docker login ${DOCKER_REGISTRY} -u${User} -p${PassWord}

}第三步,编译代码,制作应用镜像,上传镜像到harbor仓库。

build() {

if [ "x${ACTION}" == "xDEPLOY" ] || [ "x${ACTION}" == "xPRE_DEPLOY" ]; then

echo "Test harbor registry: ${DOCKER_REGISTRY}"

curl --connect-timeout 30 -I ${DOCKER_REGISTRY}/api/projects 2>/dev/null | grep 'HTTP/1.1 200 OK' > /dev/null

echo "Check image EXIST or NOT: ${ToImage}"

ImageCheck_Harbor=$(echo ${ToImage} | sed 's/\([^/]\+\)\([^:]\+\):/\1\/api\/repositories\2\/tags\//')

Responed_Code=$(curl -u${User}:${PassWord} -so /dev/null -w '%{response_code}' ${ImageCheck_Harbor} || true)

echo ${Responed_Code}

if [ "${NoCache}" == "true" ] || [ "x${ResponedCode}" != "x200" ] ; then

if [ "x${ActionAfterBuild}" != "x" ]; then

eval ${ActionAfterBuild}

fi

echo "生成Dockerfile文件"

echo "FROM ${FromImage}" > Dockerfile

cat >> Dockerfile <<-EOF

${Dockerfile}

EOF

echo "同步上层镜像: ${FromImage}"

docker pull ${FromImage} # 同步上层镜像

echo "构建镜像,并Push到仓库: ${ToImage}"

docker build --no-cache=${NoCache} -t ${ToImage} . && docker push ${ToImage} || exit 1 # 开始构建镜像,成功后Push到仓库

echo "删除镜像: ${ToImage}"

docker rmi ${ToImage} || echo # 删除镜像

fi

fi

}第四步,发布、预发布、停止、重启

deploy() {

if [ "x${ACTION}" == "xSTOP" ]; then

# 停止当前实例

kubectl delete -f ${AppName}-deploy.yaml

elif [ "x${ACTION}" == "xRESTART" ]; then

kubectl delete pod -n ${NameSpace} -l app=${AppName}

elif [ "x${ACTION}" == "xDEPLOY" ]; then

kubectl apply -f ${AppName}-deploy.yaml

fi

}第五步,查看pod 是否正常启动,如果失败则返回1,进而会详细显示报错信息。

check_status() {

RETRY_COUNT=5

echo "检查 pod 运行状态"

while (( $RETRY_COUNT )); do

POD_STATUS=$(kubectl get pod -n ${NameSpace} -l app=${AppName} )

AVAILABLE_COUNT=$(kubectl get deploy -n ${NameSpace} -l app=${AppName} | awk '{print $(NF-1)}' | grep -v 'AVAILABLE')

if [ "X${AVAILABLE_COUNT}" != "X${Replicas}" ]; then

echo "[$(date '+%F %T')] Show pod Status , wait 30s and retry #$RETRY_COUNT "

echo "${POD_STATUS}"

let RETRY_COUNT-- || true

sleep 30

elif [ "X${AVAILABLE_COUNT}" == "X${Replicas}" ]; then

echo "Deploy Running successed"

break

else

echo "[$(date '+%F %T')] NOT expected pod status: "

echo "${POD_STATUS}"

return 1

fi

done

if [ "X${RETRY_COUNT}" == "X0" ]; then

echo "[$(date '+%F %T')] show describe pod status: "

echo -e "`kubectl describe pod -n ${NameSpace} -l app=${AppName}`"

fi

}

#主流程函数执行

docker_login

build第六步, 更改 YAML 文件中参数

cat > ${WORKSPACE}/${AppName}-deploy.yaml <<- EOF

#####################################################

#

# ${ACTION} Deployment

#

#####################################################

apiVersion: apps/v1beta2 # for versions before 1.8.0 use apps/v1beta1

kind: Deployment

metadata:

name: ${AppName}

namespace: ${NameSpace}

labels:

app: ${AppName}

version: ${VERSION}

AppEnv: ${AppEnv}

spec:

replicas: ${Replicas}

selector:

matchLabels:

app: ${AppName}

template:

metadata:

labels:

app: ${AppName}

spec:

containers:

- name: ${AppName}

image: ${ToImage}

ports:

- containerPort: ${ContainerPort}

livenessProbe:

httpGet:

path: ${HealthCheckURL}

port: ${ContainerPort}

initialDelaySeconds: 90

timeoutSeconds: 5

periodSeconds: 5

readinessProbe:

httpGet:

path: ${HealthCheckURL}

port: ${ContainerPort}

initialDelaySeconds: 5

timeoutSeconds: 5

periodSeconds: 5

# configmap env

env:

- name: CFG_LABEL

value: ${CFG_LABEL}

- name: HTTP_SERVER

value: ${HTTP_SERVER}

- name: CFG_ADDR

value: ${CFG_ADDR}

- name: DR_CFG_ZOOKEEPER_ENV_URL

value: ${DR_CFG_ZOOKEEPER_ENV_URL}

- name: ENTRYPOINT

valueFrom:

configMapKeyRef:

name: ${ConfigMap}

key: ENTRYPOINT

- name: HTTP_TAR_FILES

valueFrom:

configMapKeyRef:

name: ${ConfigMap}

key: HTTP_TAR_FILES

- name: WITH_SGHUB_APM_AGENT

valueFrom:

configMapKeyRef:

name: ${ConfigMap}

key: WITH_SGHUB_APM_AGENT

- name: WITH_TINGYUN

valueFrom:

configMapKeyRef:

name: ${ConfigMap}

key: WITH_TINGYUN

- name: CFG_FILES

valueFrom:

configMapKeyRef:

name: ${ConfigMap}

key: CFG_FILES

# configMap volume

volumeMounts:

- name: applogs

mountPath: /volume_logs/

volumes:

- name: applogs

hostPath:

path: /opt/app_logs/${AppName}

imagePullSecrets:

- name: ${ImagePullSecrets}

---

apiVersion: v1

kind: Service

metadata:

name: ${AppName}

namespace: ${NameSpace}

labels:

app: ${AppName}

spec:

ports:

- port: ${ContainerPort}

targetPort: ${ContainerPort}

selector:

app: ${AppName}

EOF第七步,创建configmap 环境变量

kubectl delete configmap ${ConfigMap} -n ${NameSpace}

kubectl create configmap ${ConfigMap} ${ConfigMapData} -n ${NameSpace}

# 执行部署

deploy

# 打印配置

cat ${WORKSPACE}/${AppName}-deploy.yaml

# 执行启动状态检查

check_status4、应用资源分配规则

给一个namespace 设置全局资源限制规则:

cat ftc_demo_limitRange.yaml

apiVersion: v1

kind: LimitRange

metadata:

name: ftc-demo-limit

namespace: ftc-demo

spec:

limits:

- max : # 在一个pod 中最高使用资源

cpu: "2"

memory: "6Gi"

min: # 在一个pod中最低使用资源

cpu: "100m"

memory: "2Gi"

type: Pod

- default: # 启动一个Container 默认资源规则

cpu: "1"

memory: "4Gi"

defaultRequest:

cpu: "200m"

memory: "2Gi"

max: #匹配用户手动指定Limits 规则

cpu: "1"

memory: "4Gi"

min:

cpu: "100m"

memory: "256Mi"

type: Container限制规则说明:

- CPU 资源可以超限使用,内存不能超限使用。

- Container 资源限制总量必须 <= Pod 资源限制总和

- 在一个pod 中最高使用2 核6G 内存

- 在一个pod中最低使用100m核 2G 内存

- 默认启动一个Container服务 强分配cpu 200m,内存2G。Container 最高使用 1 cpu 4G 内存。

- 如果用户私有指定limits 规则。最高使用1 CPU 4G内存,最低使用 cpu 100m 内存 256Mi

默认创建一个pod 效果如下:

kubectl describe pod -l app=ftc-saas-service -n ftc-demo

Controlled By: ReplicaSet/ftc-saas-service-7f998899c5

Containers:

ftc-saas-service:

Container ID: docker://9f3f1a56e36e1955e6606971e43ee138adab1e2429acc4279d353dcae40e3052

Image: dl-harbor.dianrong.com/ftc/ftc-saas-service:6058a7fbc6126332a3dbb682337c0ac68abc555e

Image ID: docker-pullable://dl-harbor.dianrong.com/ftc/ftc-saas-service@sha256:a96fa52b691880e8b21fc32fff98d82156b11780c53218d22a973a4ae3d61dd7

Port: 8080/TCP

Host Port: 0/TCP

State: Running

Started: Tue, 21 Aug 2018 19:11:29 +0800

Ready: True

Restart Count: 0

Limits:

cpu: 1

memory: 4Gi

Requests:

cpu: 200m

memory: 2Gi生产案列:

apiVersion: v1

kind: LimitRange

metadata:

name: jccfc-prod-limit

namespace: jccfc-prod

spec:

limits:

- max: # pod 中所有容器,Limit值和的上限,也是整个pod资源的最大Limit

cpu: "4000m"

memory: "8192Mi"

min: # pod 中所有容器,request的资源总和不能小于min中的值

cpu: "1000m"

memory: "2048Mi"

type: Pod

- default: # 默认的资源限制

cpu: "4000m"

memory: "4096Mi"

defaultRequest: # 默认的资源需求

cpu: "2000m"

memory: "4096Mi"

maxLimitRequestRatio:

cpu: 2

memory: 2

type: Container四、监控kubernetes集群服务

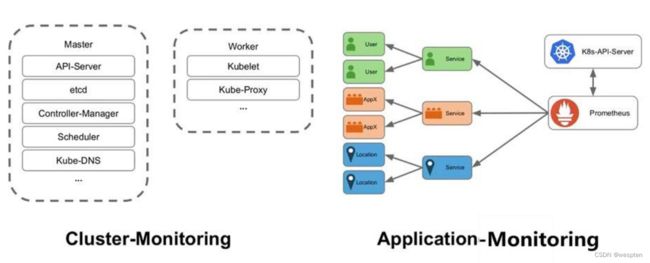

1、监控系统

- 基础监控:是针运行服务的基础设施的监控,比如容器、虚拟机、物理机等,监控的指标主要有内存的使用率

- 运行时监控:运行时监控主要有 GC 的监控包括 GC 次数、GC 耗时,线程数量的监控等等

- 通用监控:通用监控主要包括对流量和耗时的监控,通过流量的变化趋势可以清晰的了解到服务的流量高峰以及流量的增长情况

- 错误监控:错误监控: 错误监控是服务健康状态的直观体现,主要包括请求返回的错误码,如 HTTP 的错误码 5xx、4xx,熔断、限流等等

为什么选择Prometheus?

Prometheus架构:

- Heapster 采集数据单一

- Influxdb 缺少开源精神

2、prometheus-opeartor

prometheus-operator集群:

- 支持自动化方式管理Prometheus和alertmanager

- 支持原生配置管理

1)Operator:固化到软件中的运维技能

SRE 是用开发软件的方式来进行应用运维的人。他们是工程师、开发者,通晓如何进行软件开发,尤其是特定应用域的开发。他们做出的东西,就是包含这一应用的运维领域技能的软件。

我们的团队正在 Kubernetes 社区进行一个概念的设计和实现,这一概念就是:在 Kubernetes 基础之上,可靠的创建、配置和管理复杂应用的方法。

我们把这种软件称为 Operator。一个 Operator 指的是一个面向特定应用的控制器,这一控制器对 Kubernetes API 进行了扩展,使用 Kubernetes 用户的行为方式,创建、配置和管理复杂的有状态应用的实例。他构建在基础的 Kubernetes 资源和控制器概念的基础上,但是包含了具体应用领域的运维知识,实现了日常任务的自动化。

2)kubernetes operator

在 Kubernetes 的支持下,管理和伸缩 Web 应用、移动应用后端以及 API 服务都变得比较简单了。其原因是这些应用一般都是无状态的,所以 Deployment 这样的基础 Kubernetes API 对象就可以在无需附加操作的情况下,对应用进行伸缩和故障恢复了。

而对于数据库、缓存或者监控系统等有状态应用的管理,就是个挑战了。这些系统需要应用领域的知识,来正确的进行伸缩和升级,当数据丢失或不可用的时候,要进行有效的重新配置。我们希望这些应用相关的运维技能可以编码到软件之中,从而借助 Kubernetes 的能力,正确的运行和管理复杂应用。

Operator 这种软件,使用 TPR(第三方资源,现在已经升级为 CRD) 机制对 Kubernetes API 进行扩展,将特定应用的知识融入其中,让用户可以创建、配置和管理应用。和 Kubernetes 的内置资源一样,Operator 操作的不是一个单实例应用,而是集群范围内的多实例。

3)Operator如何构建

Operator 基于两个 Kubernetes 的核心概念:资源和控制器。例如内置的 ReplicaSet 资源让用户能够设置指定数量的 Pod 来运行,Kubernetes 内置的控制器会通过创建或移除 Pod 的方式,来确保 ReplicaSet 资源的状态合乎期望。Kubernetes 中有很多基础的控制器和资源用这种方式进行工作,包括 Service,Deployment 以及 DaemonSet。

用户将一个 Pod 的 RS 扩展到三个。

一段时间之后,Kubernetes 的控制器按照用户意愿创建新的 Pod。

Operator 在基础的 Kubernetes 资源和控制器之上,加入了相关的知识和配置,让 Operator 能够执行特定软件的常用任务。例如当手动对 etcd 集群进行伸缩的时候,用户必须执行几个步骤:为新的 etcd 示例创建 DNS 名称,加载新的 etcd 示例,使用 etcd 管理工具(etcdctl member add)来告知现有集群加入新成员。etcd Operator 的用户就只需要简单的把 etcd 的集群规模字段加一而已。

用户使用 kubectl 触发了一次备份。

复杂的管理任务还有很多,包括应用升级、配置备份、原生 Kubernetes API 的服务发现,应用的 TLS 认证配置以及灾难恢复等。

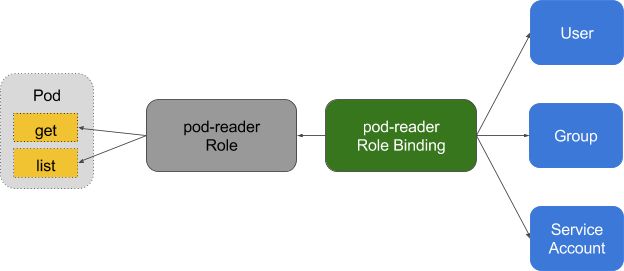

3、prometheus-operator RBAC 权限管理

创建集群角色用户prometheus-operator:

cat prometheus-operator-service-account.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: prometheus-operator

namespace: monitoring

创建prometheus-operator 集群角色:

cat prometheus-operator-cluster-role.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: prometheus-operator

rules:

- apiGroups:

- extensions

resources:

- thirdpartyresources

verbs:

- "*"

- apiGroups:

- apiextensions.k8s.io

resources:

- customresourcedefinitions

verbs:

- "*"

- apiGroups:

- monitoring.coreos.com

resources:

- alertmanagers

- prometheuses

- servicemonitors

verbs:

- "*"

- apiGroups:

- apps

resources:

- statefulsets

verbs: ["*"]

- apiGroups: [""]

resources:

- configmaps

- secrets

verbs: ["*"]

- apiGroups: [""]

resources:

- pods

verbs: ["list", "delete"]

- apiGroups: [""]

resources:

- services

- endpoints

verbs: ["get", "create", "update"]

- apiGroups: [""]

resources:

- nodes

verbs: ["list", "watch"]

- apiGroups: [""]

resources:

- namespaces

verbs: ["list"]

绑定集群角色:

cat prometheus-operator-cluster-role-binding.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: prometheus-operator

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus-operator

subjects:

- kind: ServiceAccount

name: prometheus-operator

namespace: monitoring

部署prometheus-operator:

# cat prometheus-operator.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

labels:

k8s-app: prometheus-operator

name: prometheus-operator

namespace: monitoring

spec:

replicas: 1

template:

metadata:

labels:

k8s-app: prometheus-operator

spec:

containers:

- args:

- --kubelet-service=kube-system/kubelet

- --config-reloader-image=quay.io/coreos/configmap-reload:v0.0.1

image: quay.io/coreos/prometheus-operator:v0.15.0

name: prometheus-operator

ports:

- containerPort: 8080

name: http

resources:

limits:

cpu: 200m

memory: 100Mi

requests:

cpu: 100m

memory: 50Mi

serviceAccountName: prometheus-operator

部署prometheus-opeartor service:

cat prometheus-operator-service.yaml

apiVersion: v1

kind: Service

metadata:

name: prometheus-operator

namespace: monitoring

labels:

k8s-app: prometheus-operator

spec:

type: ClusterIP

ports:

- name: http

port: 8080

targetPort: http

protocol: TCP

selector:

k8s-app: prometheus-operator

部署 prometheus-operator-service-monitor:

cat prometheus-k8s-service-monitor-prometheus-operator.yaml

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: prometheus-operator

namespace: monitoring

labels:

k8s-app: prometheus-operator

spec:

endpoints:

- port: http

selector:

matchLabels:

k8s-app: prometheus-operator4、K8s部署prometheus

创建monitoring namespaces:

apiVersion: v1

kind: Namespace

metadata:

name: monitoring

1)Prometheus RBAC 权限管理

创建prometheus-k8s 角色账号:

apiVersion: v1

kind: ServiceAccount

metadata:

name: prometheus-k8s

namespace: monitoring

在kube-system与monitoring namespaces空间,创建 prometheus-k8s 角色用户权限:

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: Role

metadata:

name: prometheus-k8s

namespace: monitoring

rules:

- apiGroups: [""]

resources:

- nodes

- services

- endpoints

- pods

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources:

- configmaps

verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: Role

metadata:

name: prometheus-k8s

namespace: kube-system

rules:

- apiGroups: [""]

resources:

- services

- endpoints

- pods

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: Role

metadata:

name: prometheus-k8s

namespace: default

rules:

- apiGroups: [""]

resources:

- services

- endpoints

- pods

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: prometheus-k8s

rules:

- nonResourceURLs: ["/metrics"]

verbs: ["get"]

绑定用户Role:

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: RoleBinding

metadata:

name: prometheus-k8s

namespace: default

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: prometheus-k8s

subjects:

- kind: ServiceAccount

name: prometheus-k8s

namespace: monitoring

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: RoleBinding

metadata:

name: prometheus-k8s

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: prometheus-k8s

subjects:

- kind: ServiceAccount

name: prometheus-k8s

namespace: monitoring

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: RoleBinding

metadata:

name: prometheus-k8s

namespace: monitoring

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: prometheus-k8s

subjects:

- kind: ServiceAccount

name: prometheus-k8s

namespace: monitoring

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: prometheus-k8s

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus-k8s

subjects:

- kind: ServiceAccount

name: prometheus-k8s

namespace: monitoring

2)使用statefulset方式部署prometheus

kind: Secret

apiVersion: v1

data:

key: QVFCZU54dFlkMVNvRUJBQUlMTUVXMldSS29mdWhlamNKaC8yRXc9PQ==

metadata:

name: ceph-secret

namespace: monitoring

type: kubernetes.io/rbd

---

kind: StorageClass

apiVersion: storage.k8s.io/v1

metadata:

name: prometheus-ceph-fast

namespace: monitoring

provisioner: ceph.com/rbd

parameters:

monitors: 10.18.19.91:6789

adminId: admin

adminSecretName: ceph-secret

adminSecretNamespace: monitoring

userSecretName: ceph-secret

pool: prometheus-dev

userId: admin

---

apiVersion: monitoring.coreos.com/v1

kind: Prometheus

metadata:

name: k8s

namespace: monitoring

labels:

prometheus: k8s

spec:

replicas: 2

version: v2.0.0

serviceAccountName: prometheus-k8s

serviceMonitorSelector:

matchExpressions:

- {key: k8s-app, operator: Exists}

ruleSelector:

matchLabels:

role: prometheus-rulefiles

prometheus: k8s

resources:

requests:

memory: 4G

storage:

volumeClaimTemplate:

metadata:

name: prometheus-data

annotations:

volume.beta.kubernetes.io/storage-class: prometheus-ceph-fast

spec:

accessModes: [ "ReadWriteOnce" ]

resources:

requests:

storage: 50Gi

alerting:

alertmanagers:

- namespace: monitoring

name: alertmanager-main

port: web

3)使用ceph RBD 作为prometheus 持久化存储。(此处有坑)

本次环境使用容器化方式部署api-server 。因api-server 默认镜像没有ceph 驱动,会导致pod在挂载存储步奏启动失败。

使用provisioner方式给apis-server提供ceph 驱动:

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: rbd-provisioner

namespace: monitoring

spec:

replicas: 1

strategy:

type: Recreate

template:

metadata:

labels:

app: rbd-provisioner

spec:

containers:

- name: rbd-provisioner

image: "quay.io/external_storage/rbd-provisioner:latest"

env:

- name: PROVISIONER_NAME

value: ceph.com/rbd

args: ["-master=http://10.18.19.143:8080", "-id=rbd-provisioner"]

4)创建prometheus svc

apiVersion: v1

kind: Service

metadata:

labels:

prometheus: k8s

name: prometheus-k8s

namespace: monitoring

spec:

type: NodePort

ports:

- name: web

nodePort: 30900

port: 9090

protocol: TCP

targetPort: web

selector:

prometheus: k8s

5)使用configmap创建prometheus报警规则文件

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-k8s-rules

namespace: monitoring

labels:

role: prometheus-rulefiles

prometheus: k8s

data:

alertmanager.rules.yaml: |+

groups:

- name: alertmanager.rules

rules:

- alert: AlertmanagerConfigInconsistent

expr: count_values("config_hash", alertmanager_config_hash) BY (service) / ON(service)

GROUP_LEFT() label_replace(prometheus_operator_alertmanager_spec_replicas, "service",

"alertmanager-$1", "alertmanager", "(.*)") != 1

for: 5m

labels:

severity: critical

annotations:

description: The configuration of the instances of the Alertmanager cluster

`{{$labels.service}}` are out of sync.

- alert: AlertmanagerDownOrMissing

expr: label_replace(prometheus_operator_alertmanager_spec_replicas, "job", "alertmanager-$1",

"alertmanager", "(.*)") / ON(job) GROUP_RIGHT() sum(up) BY (job) != 1

for: 5m

labels:

severity: warning

annotations:

description: An unexpected number of Alertmanagers are scraped or Alertmanagers

disappeared from discovery.

- alert: AlertmanagerFailedReload

expr: alertmanager_config_last_reload_successful == 0

for: 10m

labels:

severity: warning

annotations:

description: Reloading Alertmanager's configuration has failed for {{ $labels.namespace

}}/{{ $labels.pod}}.

etcd3.rules.yaml: |+

groups:

- name: ./etcd3.rules

rules:

- alert: InsufficientMembers

expr: count(up{job="etcd"} == 0) > (count(up{job="etcd"}) / 2 - 1)

for: 3m

labels:

severity: critical

annotations:

description: If one more etcd member goes down the cluster will be unavailable

summary: etcd cluster insufficient members

- alert: NoLeader

expr: etcd_server_has_leader{job="etcd"} == 0

for: 1m

labels:

severity: critical

annotations:

description: etcd member {{ $labels.instance }} has no leader

summary: etcd member has no leader

- alert: HighNumberOfLeaderChanges

expr: increase(etcd_server_leader_changes_seen_total{job="etcd"}[1h]) > 3

labels:

severity: warning

annotations:

description: etcd instance {{ $labels.instance }} has seen {{ $value }} leader

changes within the last hour

summary: a high number of leader changes within the etcd cluster are happening

- alert: HighNumberOfFailedGRPCRequests

expr: sum(rate(etcd_grpc_requests_failed_total{job="etcd"}[5m])) BY (grpc_method)

/ sum(rate(etcd_grpc_total{job="etcd"}[5m])) BY (grpc_method) > 0.01

for: 10m

labels:

severity: warning

annotations:

description: '{{ $value }}% of requests for {{ $labels.grpc_method }} failed

on etcd instance {{ $labels.instance }}'

summary: a high number of gRPC requests are failing

- alert: HighNumberOfFailedGRPCRequests

expr: sum(rate(etcd_grpc_requests_failed_total{job="etcd"}[5m])) BY (grpc_method)

/ sum(rate(etcd_grpc_total{job="etcd"}[5m])) BY (grpc_method) > 0.05

for: 5m

labels:

severity: critical

annotations:

description: '{{ $value }}% of requests for {{ $labels.grpc_method }} failed

on etcd instance {{ $labels.instance }}'

summary: a high number of gRPC requests are failing

- alert: GRPCRequestsSlow

expr: histogram_quantile(0.99, rate(etcd_grpc_unary_requests_duration_seconds_bucket[5m]))

> 0.15

for: 10m

labels:

severity: critical

annotations:

description: on etcd instance {{ $labels.instance }} gRPC requests to {{ $labels.grpc_method

}} are slow

summary: slow gRPC requests

- alert: HighNumberOfFailedHTTPRequests

expr: sum(rate(etcd_http_failed_total{job="etcd"}[5m])) BY (method) / sum(rate(etcd_http_received_total{job="etcd"}[5m]))

BY (method) > 0.01

for: 10m

labels:

severity: warning

annotations:

description: '{{ $value }}% of requests for {{ $labels.method }} failed on etcd

instance {{ $labels.instance }}'

summary: a high number of HTTP requests are failing

- alert: HighNumberOfFailedHTTPRequests

expr: sum(rate(etcd_http_failed_total{job="etcd"}[5m])) BY (method) / sum(rate(etcd_http_received_total{job="etcd"}[5m]))

BY (method) > 0.05

for: 5m

labels:

severity: critical

annotations:

description: '{{ $value }}% of requests for {{ $labels.method }} failed on etcd

instance {{ $labels.instance }}'

summary: a high number of HTTP requests are failing

- alert: HTTPRequestsSlow

expr: histogram_quantile(0.99, rate(etcd_http_successful_duration_seconds_bucket[5m]))

> 0.15

for: 10m

labels:

severity: warning

annotations:

description: on etcd instance {{ $labels.instance }} HTTP requests to {{ $labels.method

}} are slow

summary: slow HTTP requests

- alert: EtcdMemberCommunicationSlow

expr: histogram_quantile(0.99, rate(etcd_network_member_round_trip_time_seconds_bucket[5m]))

> 0.15

for: 10m

labels:

severity: warning

annotations:

description: etcd instance {{ $labels.instance }} member communication with

{{ $labels.To }} is slow

summary: etcd member communication is slow

- alert: HighNumberOfFailedProposals

expr: increase(etcd_server_proposals_failed_total{job="etcd"}[1h]) > 5

labels:

severity: warning

annotations:

description: etcd instance {{ $labels.instance }} has seen {{ $value }} proposal

failures within the last hour

summary: a high number of proposals within the etcd cluster are failing

- alert: HighFsyncDurations

expr: histogram_quantile(0.99, rate(etcd_disk_wal_fsync_duration_seconds_bucket[5m]))

> 0.5

for: 10m

labels:

severity: warning

annotations:

description: etcd instance {{ $labels.instance }} fync durations are high

summary: high fsync durations

- alert: HighCommitDurations

expr: histogram_quantile(0.99, rate(etcd_disk_backend_commit_duration_seconds_bucket[5m]))

> 0.25

for: 10m

labels:

severity: warning

annotations:

description: etcd instance {{ $labels.instance }} commit durations are high

summary: high commit durations

general.rules.yaml: |+

groups:

- name: general.rules

rules:

- alert: TargetDown

expr: 100 * (count(up == 0) BY (job) / count(up) BY (job)) > 10

for: 10m

labels:

severity: warning

annotations:

description: '{{ $value }}% of {{ $labels.job }} targets are down.'

summary: Targets are down

- alert: DeadMansSwitch

expr: vector(1)

labels:

severity: none

annotations:

description: This is a DeadMansSwitch meant to ensure that the entire Alerting

pipeline is functional.

summary: Alerting DeadMansSwitch

- record: fd_utilization

expr: process_open_fds / process_max_fds

- alert: FdExhaustionClose

expr: predict_linear(fd_utilization[1h], 3600 * 4) > 1

for: 10m

labels:

severity: warning

annotations:

description: '{{ $labels.job }}: {{ $labels.namespace }}/{{ $labels.pod }} instance

will exhaust in file/socket descriptors within the next 4 hours'

summary: file descriptors soon exhausted

- alert: FdExhaustionClose

expr: predict_linear(fd_utilization[10m], 3600) > 1

for: 10m

labels:

severity: critical

annotations:

description: '{{ $labels.job }}: {{ $labels.namespace }}/{{ $labels.pod }} instance

will exhaust in file/socket descriptors within the next hour'

summary: file descriptors soon exhausted

kube-controller-manager.rules.yaml: |+

groups:

- name: kube-controller-manager.rules

rules:

- alert: K8SControllerManagerDown

expr: absent(up{job="kube-controller-manager"} == 1)

for: 5m

labels:

severity: critical

annotations:

description: There is no running K8S controller manager. Deployments and replication

controllers are not making progress.

runbook: https://coreos.com/tectonic/docs/latest/troubleshooting/controller-recovery.html#recovering-a-controller-manager

summary: Controller manager is down

kube-scheduler.rules.yaml: |+

groups:

- name: kube-scheduler.rules

rules:

- record: cluster:scheduler_e2e_scheduling_latency_seconds:quantile

expr: histogram_quantile(0.99, sum(scheduler_e2e_scheduling_latency_microseconds_bucket)

BY (le, cluster)) / 1e+06

labels:

quantile: "0.99"

- record: cluster:scheduler_e2e_scheduling_latency_seconds:quantile

expr: histogram_quantile(0.9, sum(scheduler_e2e_scheduling_latency_microseconds_bucket)

BY (le, cluster)) / 1e+06

labels:

quantile: "0.9"

- record: cluster:scheduler_e2e_scheduling_latency_seconds:quantile

expr: histogram_quantile(0.5, sum(scheduler_e2e_scheduling_latency_microseconds_bucket)

BY (le, cluster)) / 1e+06

labels:

quantile: "0.5"

- record: cluster:scheduler_scheduling_algorithm_latency_seconds:quantile

expr: histogram_quantile(0.99, sum(scheduler_scheduling_algorithm_latency_microseconds_bucket)

BY (le, cluster)) / 1e+06

labels:

quantile: "0.99"

- record: cluster:scheduler_scheduling_algorithm_latency_seconds:quantile

expr: histogram_quantile(0.9, sum(scheduler_scheduling_algorithm_latency_microseconds_bucket)

BY (le, cluster)) / 1e+06

labels:

quantile: "0.9"

- record: cluster:scheduler_scheduling_algorithm_latency_seconds:quantile

expr: histogram_quantile(0.5, sum(scheduler_scheduling_algorithm_latency_microseconds_bucket)

BY (le, cluster)) / 1e+06

labels:

quantile: "0.5"

- record: cluster:scheduler_binding_latency_seconds:quantile

expr: histogram_quantile(0.99, sum(scheduler_binding_latency_microseconds_bucket)

BY (le, cluster)) / 1e+06

labels:

quantile: "0.99"

- record: cluster:scheduler_binding_latency_seconds:quantile

expr: histogram_quantile(0.9, sum(scheduler_binding_latency_microseconds_bucket)

BY (le, cluster)) / 1e+06

labels:

quantile: "0.9"

- record: cluster:scheduler_binding_latency_seconds:quantile

expr: histogram_quantile(0.5, sum(scheduler_binding_latency_microseconds_bucket)

BY (le, cluster)) / 1e+06

labels:

quantile: "0.5"

- alert: K8SSchedulerDown

expr: absent(up{job="kube-scheduler"} == 1)

for: 5m

labels:

severity: critical

annotations:

description: There is no running K8S scheduler. New pods are not being assigned

to nodes.

runbook: https://coreos.com/tectonic/docs/latest/troubleshooting/controller-recovery.html#recovering-a-scheduler

summary: Scheduler is down

kube-state-metrics.rules.yaml: |+

groups:

- name: kube-state-metrics.rules

rules:

- alert: DeploymentGenerationMismatch

expr: kube_deployment_status_observed_generation != kube_deployment_metadata_generation

for: 15m

labels:

severity: warning

annotations:

description: Observed deployment generation does not match expected one for

deployment {{$labels.namespaces}}{{$labels.deployment}}

- alert: DeploymentReplicasNotUpdated

expr: ((kube_deployment_status_replicas_updated != kube_deployment_spec_replicas)

or (kube_deployment_status_replicas_available != kube_deployment_spec_replicas))

unless (kube_deployment_spec_paused == 1)

for: 15m

labels:

severity: warning

annotations:

description: Replicas are not updated and available for deployment {{$labels.namespaces}}/{{$labels.deployment}}

- alert: DaemonSetRolloutStuck

expr: kube_daemonset_status_current_number_ready / kube_daemonset_status_desired_number_scheduled

* 100 < 100

for: 15m

labels:

severity: warning

annotations:

description: Only {{$value}}% of desired pods scheduled and ready for daemon

set {{$labels.namespaces}}/{{$labels.daemonset}}

- alert: K8SDaemonSetsNotScheduled

expr: kube_daemonset_status_desired_number_scheduled - kube_daemonset_status_current_number_scheduled

> 0

for: 10m

labels:

severity: warning

annotations:

description: A number of daemonsets are not scheduled.

summary: Daemonsets are not scheduled correctly

- alert: DaemonSetsMissScheduled

expr: kube_daemonset_status_number_misscheduled > 0

for: 10m

labels:

severity: warning

annotations:

description: A number of daemonsets are running where they are not supposed

to run.

summary: Daemonsets are not scheduled correctly

- alert: PodFrequentlyRestarting

expr: increase(kube_pod_container_status_restarts[1h]) > 5

for: 10m

labels:

severity: warning

annotations:

description: Pod {{$labels.namespaces}}/{{$labels.pod}} is was restarted {{$value}}

times within the last hour

kubelet.rules.yaml: |+

groups:

- name: kubelet.rules

rules:

- alert: K8SNodeNotReady

expr: kube_node_status_condition{condition="Ready",status="true"} == 0

for: 1h

labels:

severity: warning

annotations:

description: The Kubelet on {{ $labels.node }} has not checked in with the API,

or has set itself to NotReady, for more than an hour

summary: Node status is NotReady

- alert: K8SManyNodesNotReady

expr: count(kube_node_status_condition{condition="Ready",status="true"} == 0)

> 1 and (count(kube_node_status_condition{condition="Ready",status="true"} ==

0) / count(kube_node_status_condition{condition="Ready",status="true"})) > 0.2

for: 1m

labels:

severity: critical

annotations:

description: '{{ $value }}% of Kubernetes nodes are not ready'

- alert: K8SKubeletDown

expr: count(up{job="kubelet"} == 0) / count(up{job="kubelet"}) * 100 > 3

for: 1h

labels:

severity: warning

annotations:

description: Prometheus failed to scrape {{ $value }}% of kubelets.

- alert: K8SKubeletDown

expr: (absent(up{job="kubelet"} == 1) or count(up{job="kubelet"} == 0) / count(up{job="kubelet"}))

* 100 > 1

for: 1h

labels:

severity: critical

annotations:

description: Prometheus failed to scrape {{ $value }}% of kubelets, or all Kubelets

have disappeared from service discovery.

summary: Many Kubelets cannot be scraped

- alert: K8SKubeletTooManyPods

expr: kubelet_running_pod_count > 100

for: 10m

labels:

severity: warning

annotations:

description: Kubelet {{$labels.instance}} is running {{$value}} pods, close

to the limit of 110

summary: Kubelet is close to pod limit

kubernetes.rules.yaml: |+

groups:

- name: kubernetes.rules

rules:

- record: pod_name:container_memory_usage_bytes:sum

expr: sum(container_memory_usage_bytes{container_name!="POD",pod_name!=""}) BY

(pod_name)

- record: pod_name:container_spec_cpu_shares:sum

expr: sum(container_spec_cpu_shares{container_name!="POD",pod_name!=""}) BY (pod_name)

- record: pod_name:container_cpu_usage:sum

expr: sum(rate(container_cpu_usage_seconds_total{container_name!="POD",pod_name!=""}[5m]))

BY (pod_name)

- record: pod_name:container_fs_usage_bytes:sum

expr: sum(container_fs_usage_bytes{container_name!="POD",pod_name!=""}) BY (pod_name)

- record: namespace:container_memory_usage_bytes:sum

expr: sum(container_memory_usage_bytes{container_name!=""}) BY (namespace)

- record: namespace:container_spec_cpu_shares:sum

expr: sum(container_spec_cpu_shares{container_name!=""}) BY (namespace)

- record: namespace:container_cpu_usage:sum

expr: sum(rate(container_cpu_usage_seconds_total{container_name!="POD"}[5m]))

BY (namespace)

- record: cluster:memory_usage:ratio

expr: sum(container_memory_usage_bytes{container_name!="POD",pod_name!=""}) BY

(cluster) / sum(machine_memory_bytes) BY (cluster)

- record: cluster:container_spec_cpu_shares:ratio

expr: sum(container_spec_cpu_shares{container_name!="POD",pod_name!=""}) / 1000

/ sum(machine_cpu_cores)

- record: cluster:container_cpu_usage:ratio

expr: rate(container_cpu_usage_seconds_total{container_name!="POD",pod_name!=""}[5m])

/ sum(machine_cpu_cores)

- record: apiserver_latency_seconds:quantile

expr: histogram_quantile(0.99, rate(apiserver_request_latencies_bucket[5m])) /

1e+06

labels:

quantile: "0.99"

- record: apiserver_latency:quantile_seconds

expr: histogram_quantile(0.9, rate(apiserver_request_latencies_bucket[5m])) /

1e+06

labels:

quantile: "0.9"

- record: apiserver_latency_seconds:quantile

expr: histogram_quantile(0.5, rate(apiserver_request_latencies_bucket[5m])) /

1e+06

labels:

quantile: "0.5"

- alert: APIServerLatencyHigh

expr: apiserver_latency_seconds:quantile{quantile="0.99",subresource!="log",verb!~"^(?:WATCH|WATCHLIST|PROXY|CONNECT)$"}

> 1

for: 10m

labels:

severity: warning

annotations:

description: the API server has a 99th percentile latency of {{ $value }} seconds

for {{$labels.verb}} {{$labels.resource}}

- alert: APIServerLatencyHigh

expr: apiserver_latency_seconds:quantile{quantile="0.99",subresource!="log",verb!~"^(?:WATCH|WATCHLIST|PROXY|CONNECT)$"}

> 4

for: 10m

labels:

severity: critical

annotations:

description: the API server has a 99th percentile latency of {{ $value }} seconds

for {{$labels.verb}} {{$labels.resource}}

- alert: APIServerErrorsHigh

expr: rate(apiserver_request_count{code=~"^(?:5..)$"}[5m]) / rate(apiserver_request_count[5m])

* 100 > 2

for: 10m

labels:

severity: warning

annotations:

description: API server returns errors for {{ $value }}% of requests

- alert: APIServerErrorsHigh

expr: rate(apiserver_request_count{code=~"^(?:5..)$"}[5m]) / rate(apiserver_request_count[5m])

* 100 > 5

for: 10m

labels:

severity: critical

annotations:

description: API server returns errors for {{ $value }}% of requests

- alert: K8SApiserverDown

expr: absent(up{job="apiserver"} == 1)

for: 20m

labels:

severity: critical

annotations:

description: No API servers are reachable or all have disappeared from service

discovery

node.rules.yaml: |+

groups:

- name: node.rules

rules:

- record: instance:node_cpu:rate:sum

expr: sum(rate(node_cpu{mode!="idle",mode!="iowait",mode!~"^(?:guest.*)$"}[3m]))

BY (instance)

- record: instance:node_filesystem_usage:sum

expr: sum((node_filesystem_size{mountpoint="/"} - node_filesystem_free{mountpoint="/"}))

BY (instance)

- record: instance:node_network_receive_bytes:rate:sum

expr: sum(rate(node_network_receive_bytes[3m])) BY (instance)

- record: instance:node_network_transmit_bytes:rate:sum

expr: sum(rate(node_network_transmit_bytes[3m])) BY (instance)

- record: instance:node_cpu:ratio

expr: sum(rate(node_cpu{mode!="idle"}[5m])) WITHOUT (cpu, mode) / ON(instance)

GROUP_LEFT() count(sum(node_cpu) BY (instance, cpu)) BY (instance)

- record: cluster:node_cpu:sum_rate5m

expr: sum(rate(node_cpu{mode!="idle"}[5m]))

- record: cluster:node_cpu:ratio

expr: cluster:node_cpu:rate5m / count(sum(node_cpu) BY (instance, cpu))

- alert: NodeExporterDown

expr: absent(up{job="node-exporter"} == 1)

for: 10m

labels:

severity: warning

annotations:

description: Prometheus could not scrape a node-exporter for more than 10m,

or node-exporters have disappeared from discovery

- alert: NodeDiskRunningFull

expr: predict_linear(node_filesystem_free[6h], 3600 * 24) < 0

for: 30m

labels:

severity: warning

annotations:

description: device {{$labels.device}} on node {{$labels.instance}} is running

full within the next 24 hours (mounted at {{$labels.mountpoint}})

- alert: NodeDiskRunningFull

expr: predict_linear(node_filesystem_free[30m], 3600 * 2) < 0

for: 10m

labels:

severity: critical

annotations:

description: device {{$labels.device}} on node {{$labels.instance}} is running

full within the next 2 hours (mounted at {{$labels.mountpoint}})

- alert: NodeCPUUsage

expr: (100 - (avg by (instance) (irate(node_cpu{job="node-exporter",mode="idle"}[5m])) * 100)) > 5

for: 10m

labels:

severity: critical

annotations:

# description: {{$labels.instance}} CPU usage is above 75% (current value is {{ $value }})

prometheus.rules.yaml: |+

groups:

- name: prometheus.rules

rules:

- alert: PrometheusConfigReloadFailed

expr: prometheus_config_last_reload_successful == 0

for: 10m

labels:

severity: warning

annotations:

description: Reloading Prometheus' configuration has failed for {{$labels.namespace}}/{{$labels.pod}}

- alert: PrometheusNotificationQueueRunningFull

expr: predict_linear(prometheus_notifications_queue_length[5m], 60 * 30) > prometheus_notifications_queue_capacity

for: 10m

labels:

severity: warning

annotations:

description: Prometheus' alert notification queue is running full for {{$labels.namespace}}/{{

$labels.pod}}

- alert: PrometheusErrorSendingAlerts

expr: rate(prometheus_notifications_errors_total[5m]) / rate(prometheus_notifications_sent_total[5m])

> 0.01

for: 10m

labels:

severity: warning

annotations:

description: Errors while sending alerts from Prometheus {{$labels.namespace}}/{{

$labels.pod}} to Alertmanager {{$labels.Alertmanager}}

- alert: PrometheusErrorSendingAlerts

expr: rate(prometheus_notifications_errors_total[5m]) / rate(prometheus_notifications_sent_total[5m])

> 0.03

for: 10m

labels:

severity: critical

annotations:

description: Errors while sending alerts from Prometheus {{$labels.namespace}}/{{

$labels.pod}} to Alertmanager {{$labels.Alertmanager}}

- alert: PrometheusNotConnectedToAlertmanagers

expr: prometheus_notifications_alertmanagers_discovered < 1

for: 10m

labels:

severity: warning

annotations:

description: Prometheus {{ $labels.namespace }}/{{ $labels.pod}} is not connected

to any Alertmanagers

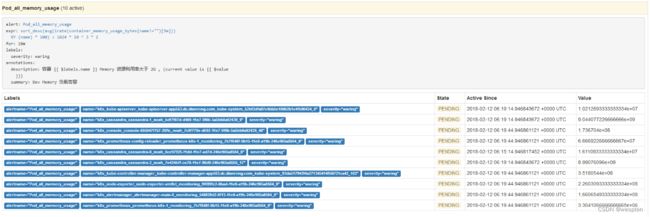

noah_pod.rules.yaml: |+

groups:

- name: noah_pod.rules

rules:

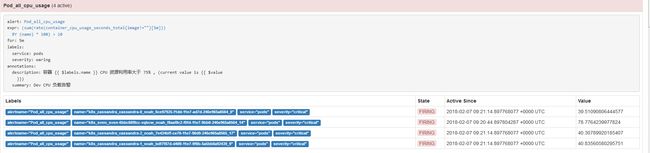

- alert: Pod_all_cpu_usage

expr: (sum by(name)(rate(container_cpu_usage_seconds_total{image!=""}[5m]))*100) > 10

for: 5m

labels:

severity: critical

service: pods

annotations:

description: 容器 {{ $labels.name }} CPU 资源利用率大于 75% , (current value is {{ $value }})

summary: Dev CPU 负载告警

- alert: Pod_all_memory_usage

expr: sort_desc(avg by(name)(irate(container_memory_usage_bytes{name!=""}[5m]))*100) > 1024*10^3*2

for: 10m

labels:

severity: critical

annotations:

description: 容器 {{ $labels.name }} Memory 资源利用率大于 2G , (current value is {{ $value }})

summary: Dev Memory 负载告警

- alert: Pod_all_network_receive_usage

expr: sum by (name)(irate(container_network_receive_bytes_total{container_name="POD"}[1m])) > 1024*1024*50

for: 10m

labels:

severity: critical

annotations:

description: 容器 {{ $labels.name }} network_receive 资源利用率大于 50M , (current value is {{ $value }})

summary: network_receive 负载告警5、部署kube-state-metrics

kube-state-metricsgithub 项目地址

查看kube-state配置文件:

# tree kube-state-metrics/

kube-state-metrics/

├── kube-state-metrics-cluster-role-binding.yaml

├── kube-state-metrics-cluster-role.yaml

├── kube-state-metrics-deployment.yaml

├── kube-state-metrics-role-binding.yaml

├── kube-state-metrics-role.yaml

├── kube-state-metrics-service-account.yaml

├── kube-state-metrics-service.yaml

└── prometheus-k8s-service-monitor-kube-state-metrics.yaml

0 directories, 8 files

执行创建 kube-state-metrics:

kubectl apply -f kube-state-metrics/

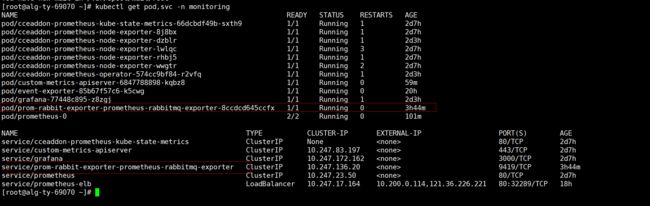

检查服务启动状态:

[root@app553 ~]# kubectl get pod,svc -n monitoring

NAME READY STATUS RESTARTS AGE

po/kube-state-metrics-678d94f978-nv4g5 2/2 Running 0 52m

po/prometheus-k8s-0 2/2 Running 0 4d

po/prometheus-k8s-1 2/2 Running 0 4d

po/prometheus-operator-68589bfbfd-pf4tk 1/1 Running 0 4d

po/rbd-provisioner-698ddf4568-w7s29 1/1 Running 0 4d

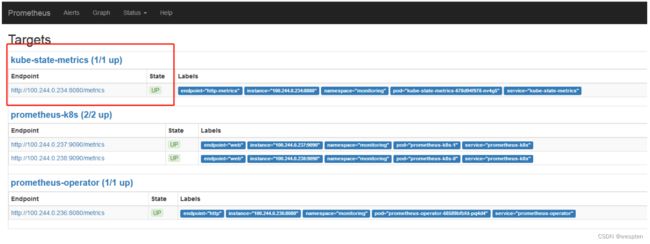

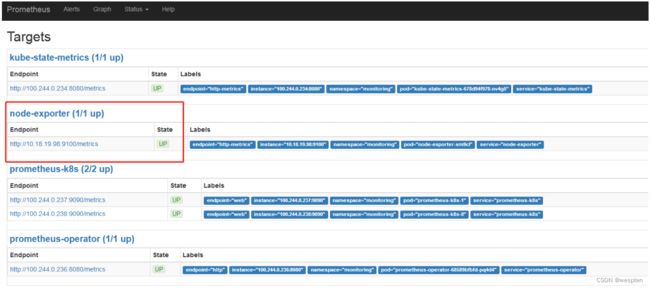

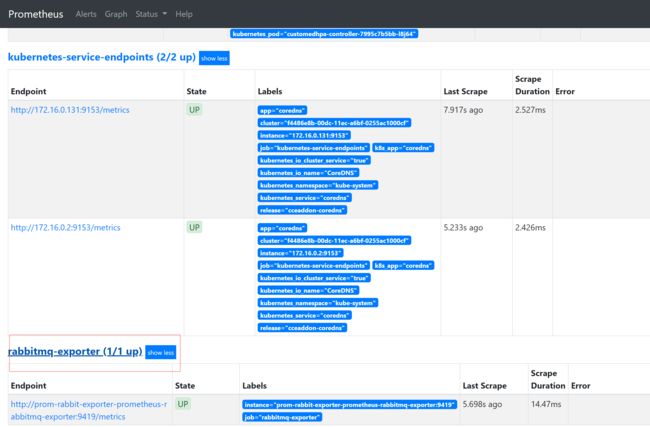

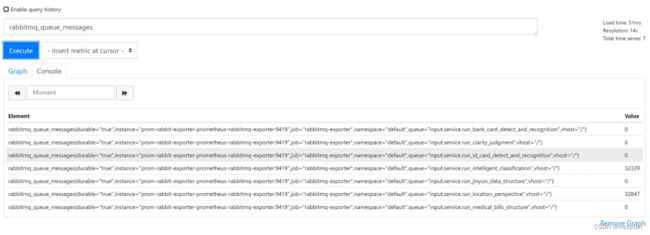

检查kube-state-metrics在prometheus 启动状态:

6、node-export

node-export 主要主要是监控kubernetes 集群node 物理主机:cpu、memory、network、disk 等基础监控资源。

使用daemonset 方式 自动为每个node部署监控agent:

# tree node-exporter/

node-exporter/

├── node-exporter-daemonset.yaml

├── node-exporter-service.yaml

└── prometheus-k8s-service-monitor-node-exporter.yaml

创建node-export 服务:

kubectl apply -f node-exporter/

查看 pod 是状态:

# kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

kube-state-metrics-678d94f978-nv4g5 2/2 Running 0 19h

node-exporter-xm9cl 1/1 Running 0 1m

prometheus-k8s-0 2/2 Running 0 18h

prometheus-k8s-1 2/2 Running 0 18h

prometheus-operator-68589bfbfd-pq4d4 1/1 Running 0 18h

rbd-provisioner-698ddf4568-d57l2 1/1 Running 0 18h

查看prometheus target状态:

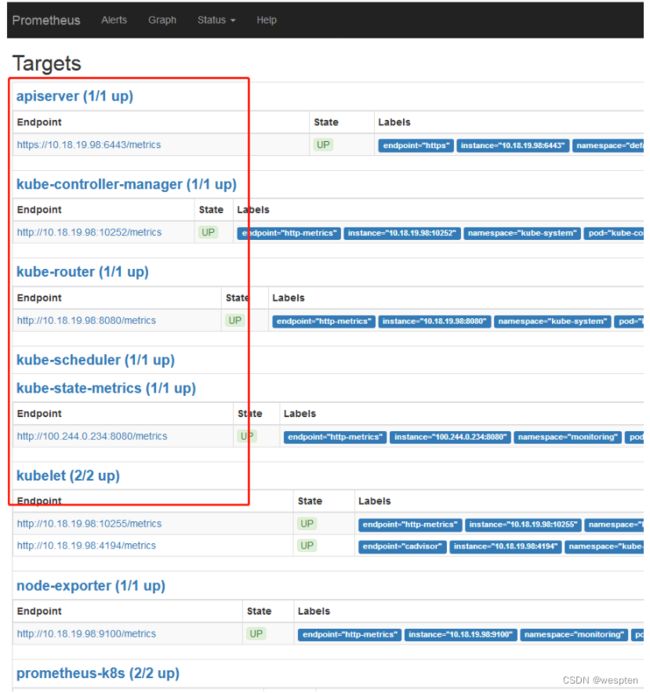

7、K8s核心组件target监控

修改api-server、kube-controller-manager、kube-scheduler、kubelets、kube-router配置文件监听地址,添加如下选项,使其能访问 metrics。

api-server 添加选项:

--insecure-port=8080

--insecure-bind-address=0.0.0.0

kube-controller-manager:

--bind-address=0.0.0.0

--secure-port=10257

kube-scheduler:

kubelet 添加endpoint 配置prometheus taget 访问权限。

--address=0.0.0.0

--authentication-token-webhook=true

--authorization-mode=Webhook

kube-router配置metrics:

参考地址

annotations:

prometheus.io/scrape: "true"

prometheus.io/port: "10258"

添加启动选项:

---master=10.18.19.98:8080

--metrics-port=10258

检查k8s 核心组件监听地址:

netstat -tlunp | grep kube

tcp 0 0 0.0.0.0:50051 0.0.0.0:* LISTEN 86466/kube-router

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 108134/kubelet

tcp 0 0 0.0.0.0:10250 0.0.0.0:* LISTEN 108134/kubelet

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 175680/kube-apiserv

tcp 0 0 0.0.0.0:10252 0.0.0.0:* LISTEN 168570/kube-control

tcp 0 0 0.0.0.0:10255 0.0.0.0:* LISTEN 108134/kubelet

tcp 0 0 0.0.0.0:8080 0.0.0.0:* LISTEN 175680/kube-apiserv

tcp 0 0 0.0.0.0:10258 0.0.0.0:* LISTEN 86466/kube-router

tcp 0 0 0.0.0.0:179 0.0.0.0:* LISTEN 86466/kube-router

tcp 0 0 0.0.0.0:20244 0.0.0.0:* LISTEN 86466/kube-router

tcp 0 0 0.0.0.0:4194 0.0.0.0:* LISTEN 108134/kubelet

访问各个组件 metrics:

curl http://10.18.19.98:8080/metrics #kube-apiser

curl http://10.18.19.98:10251/metrics #kube-schedul

curl http://10.18.19.98:10252/metrics #kube-controlle

curl http://10.18.19.98:10255/metrics #kubelet

curl http://10.18.19.98:10258/metrics #kube-router

创建k8s 组件servermonitor服务:

tree exporter-kube-apiserver/ exporter-kube-controller-manager/ exporter-kube-scheduler/ exporter-kube-router/ exporter-kubelets/

exporter-kube-apiserver/

└── prometheus-k8s-service-monitor-apiserver.yaml

exporter-kube-controller-manager/

├── kube-controller-manager-svc.yaml

└── prometheus-k8s-service-monitor-kube-controller-manager.yaml

exporter-kube-scheduler/

├── kube-scheduler-svc.yaml

└── prometheus-k8s-service-monitor-kube-scheduler.yaml

exporter-kube-router/

├── kube-router-svc.yaml

└── prometheus-k8s-service-monitor-kube-scheduler.yaml

exporter-kubelets/

└── prometheus-k8s-service-monitor-kubelet.yaml

kubectl apply -f exporter-kube-apiserver/

注意 api-server 服务 namespace 在default使用默认svc kubernetes。其余组件服务在kube-system 空间 ,需要单独创建svc。

prometheus target检查组件状态:

查看api-server servicemonitor 资源:

kubectl get servicemonitors -n monitoring

NAME AGE

kube-apiserver 18m

kube-controller-manager 18m

kube-router 18m

kube-scheduler 18m

kube-state-metrics 1d

kubelet 18m

node-exporter 7h

prometheus 1d

prometheus-operator 1d8、Prometheus基本查询语法

prometheus从根本上存储的所有数据都是时间序列: 具有时间戳的数据流只属于单个度量指标和该度量指标下的多个标签维度。除了存储时间序列数据外,Prometheus也可以利用查询表达式存储5分钟的返回结果中的时间序列数据。

Prometheus查询:

Prometheus提供一个函数式的表达式语言,可以使用户实时地查找和聚合时间序列数据。表达式计算结果可以在图表中展示,也可以在Prometheus表达式浏览器中以表格形式展示,或者作为数据源, 以HTTP API的方式提供给外部系统使用。

表达式语言数据类型:

在Prometheus的表达式语言中,任何表达式或者子表达式都可以归为四种类型:

- 即时向量(instant vector) 包含每个时间序列的单个样本的一组时间序列,共享相同的时间戳。

- 范围向量(Range vector) 包含每个时间序列随时间变化的数据点的一组时间序列。

- 标量(Scalar) 一个简单的数字浮点值

- 字符串(String) 一个简单的字符串值(目前未被使用)

examples:

查询K8S集群内所有apiserver健康状态

(sum(up{job="apiserver"} == 1) / count(up{job="apiserver"})) * 100

查询pod 聚合一分钟之内的cpu 负载

sum by (container_name)(rate(container_cpu_usage_seconds_total{image!="",container_name!="POD",pod_name="acw62egvxd95l7t3q5uxee"}[1m]))

时间序列选择器:

- 即时向量选择器

即时向量选择器允许选择一组时间序列,或者某个给定的时间戳的样本数据。下面这个例子选择了具有container_cpu_usage_seconds_total的时间序列:

container_cpu_usage_seconds_total

你可以通过附加一组标签,并用{}括起来,来进一步筛选这些时间序列。下面这个例子只选择有container_cpu_usage_seconds_total名称的、有prometheus工作标签的、有pod_name组标签的时间序列:

container_cpu_usage_seconds_total{image!="",container_name!="POD",pod_name="acw62egvxd95l7t3q5uxee"}

另外,也可以也可以将标签值反向匹配,或者对正则表达式匹配标签值。下面列举匹配操作符:

=:选择正好相等的字符串标签

!=:选择不相等的字符串标签

=~:选择匹配正则表达式的标签(或子标签)

!=:选择不匹配正则表达式的标签(或子标签)

例如,选择staging、testing、development环境下的,GET之外的HTTP方法的http_requests_total的时间序列:

http_requests_total{environment=~"staging|testing|development",method!="GET"}

- 范围向量选择器

范围向量表达式正如即时向量表达式一样运行,前者返回从当前时刻的时间序列回来。语法是,在一个向量表达式之后添加[]来表示时间范围,持续时间用数字表示,后接下面单元之一:

时间长度有一个数值决定,后面可以跟下面的单位:

s- secondsm- minutesh- hoursd- daysw- weeksy- years

在下面这个例子中,我们选择此刻开始1分钟内的所有记录,metric名称为container_cpu_usage_seconds_total、作业标签为pod_name的时间序列的所有值:

irate(container_cpu_usage_seconds_total{image!="",container_name!="POD",pod_name="acw62egvxd95l7t3q5uxee"}[1m])

- 偏移修饰符(offset modifier)

偏移修饰符允许更改查询中单个即时向量和范围向量的时间偏移量,例如,以下表达式返回相对于当前查询时间5分钟前的container_cpu_usage_seconds_total值:

container_cpu_usage_seconds_total offset 5m

如下是范围向量的相同样本。这返回container_cpu_usage_seconds_total在一天前5分钟内的速率:

(rate(container_cpu_usage_seconds_total{pod_name="acw62egvxd95l7t3q5uxee"} [5m] offset 1d))

- 操作符

Prometheus支持多种二元和聚合的操作符请查看这里 - 函数

Prometheus支持多种函数,来对数据进行操作请查看这里

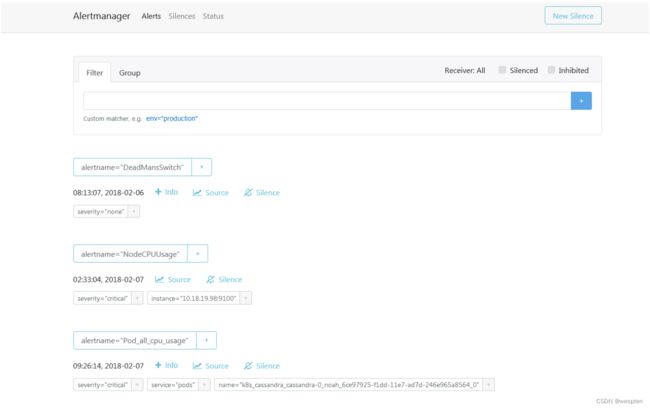

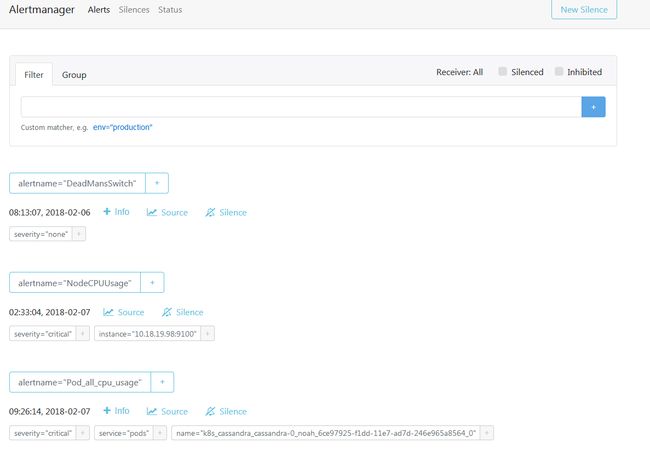

9、Prometheus alerts模块部署

Pormetheus的警告由独立的两部分组成。Prometheus服务中的警告规则发送警告到Alertmanager。然后这个Alertmanager管理这些警告。包括silencing, inhibition, aggregation,以及通过一些方法发送通知,例如:email,PagerDuty和HipChat。

建立警告和通知的主要步骤:

- 创建和配置Alertmanager

- 启动Prometheus服务时,通过

-alertmanager.url标志配置Alermanager地址,以便Prometheus服务能和Alertmanager建立连接。 - 在Prometheus服务中创建警告规则

创建和配置Alertmanager:

kubectl apply -f alertmanager/

文件说明:

# tree alertmanager/

alertmanager/

├── alertmanager.conf # 配置文件

├── alertmanager.conf.base64 # 配置文件转化为base64格式

├── alertmanager-config.sh # base64 转换脚本

├── alertmanager-config.yaml # 以Secret 方式加载alertmanager 配置

├── alertmanager-service.yaml # 创建alert svc

├── alertmanager-templates-default.conf # 邮件告警通知模板

├── alertmanager-templates-slack.conf

├── alertmanager.yaml # 在K8S 中创建Alertmanager资源类型

├── default.base64

└── prometheus-k8s-service-monitor-alertmanager.yaml

查看alertmanager 管理平台:

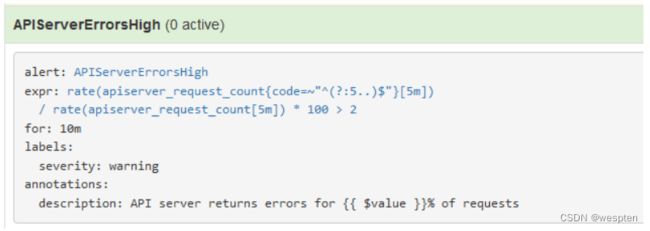

http://10.18.19.98:30903/#/status10、alertmanager报警规则

prometheus的配置文件名为prometheus.yml,alertmanager的配置文件名为alertmanager.yml

报警:指prometheus将监测到的异常事件发送给alertmanager,而不是指发送邮件通知

通知:指alertmanager发送异常事件的通知(邮件、webhook等)

1. 报警规则

在prometheus.yml中指定匹配报警规则的间隔

# How frequently to evaluate rules.

[ evaluation_interval: | default = 1m ]

在prometheus.yml中指定规则文件(可使用通配符,如rules/*.rules)

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

- "/etc/prometheus/alert.rules"

并基于以下模板:

ALERT

IF

[ FOR ]

[ LABELS 其中:

Alert name是警报标识符。它不需要是唯一的。

Expression是为了触发警报而被评估的条件。它通常使用现有指标作为/metrics端点返回的指标。

Duration是规则必须有效的时间段。例如,5s表示5秒。

Label set是将在消息模板中使用的一组标签。

在prometheus-k8s-statefulset.yaml 文件创建ruleSelector,标记报警规则角色。在prometheus-k8s-rules.yaml 报警规则文件中引用

ruleSelector:

matchLabels:

role: prometheus-rulefiles

prometheus: k8s

在prometheus-k8s-rules.yaml 使用configmap 方式引用prometheus-rulefiles

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-k8s-rules

namespace: monitoring

labels:

role: prometheus-rulefiles

prometheus: k8s

data:

pod.rules.yaml: |+

groups:

- name: noah_pod.rules

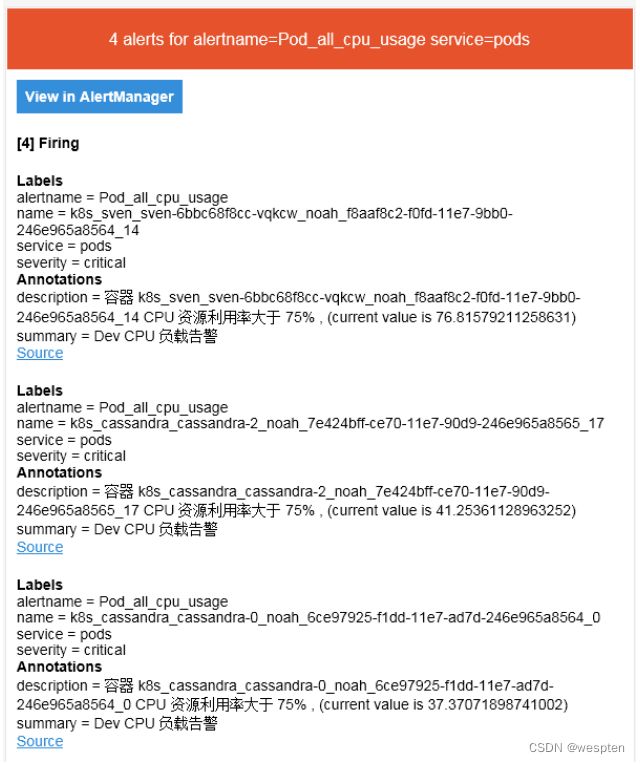

rules:

- alert: Pod_all_cpu_usage

expr: (sum by(name)(rate(container_cpu_usage_seconds_total{image!=""}[5m]))*100) > 10

for: 5m

labels:

severity: critical

service: pods

annotations:

description: 容器 {{ $labels.name }} CPU 资源利用率大于 75% , (current value is {{ $value }})

summary: Dev CPU 负载告警

- alert: Pod_all_memory_usage

expr: sort_desc(avg by(name)(irate(container_memory_usage_bytes{name!=""}[5m]))*100) > 1024*10^3*2

for: 10m

labels:

severity: critical

annotations:

description: 容器 {{ $labels.name }} Memory 资源利用率大于 2G , (current value is {{ $value }})

summary: Dev Memory 负载告警

- alert: Pod_all_network_receive_usage

expr: sum by (name)(irate(container_network_receive_bytes_total{container_name="POD"}[1m])) > 1024*1024*50

for: 10m

labels:

severity: critical

annotations:

description: 容器 {{ $labels.name }} network_receive 资源利用率大于 50M , (current value is {{ $value }})

summary: network_receive 负载告警

配置文件设置好后,prometheus-opeartor自动重新读取配置。

如果二次修改comfigmap 内容只需要apply:

kubectl apply -f prometheus-k8s-rules.yaml

将邮件通知与rules对比一下(还需要配置alertmanager.yml才能收到邮件):

2. 通知规则

设置alertmanager.yml的的route与receivers:

global:

# ResolveTimeout is the time after which an alert is declared resolved

# if it has not been updated.

resolve_timeout: 5m

# The smarthost and SMTP sender used for mail notifications.

smtp_smarthost: 'xxxxx'

smtp_from: 'xxxxxxx'

smtp_auth_username: 'xxxxx'

smtp_auth_password: 'xxxxxx'

# The API URL to use for Slack notifications.

slack_api_url: 'https://hooks.slack.com/services/some/api/token'

# # The directory from which notification templates are read.

templates:

- '*.tmpl'

# The root route on which each incoming alert enters.

route:

# The labels by which incoming alerts are grouped together. For example,

# multiple alerts coming in for cluster=A and alertname=LatencyHigh would

# be batched into a single group.

group_by: ['alertname', 'cluster', 'service']

# When a new group of alerts is created by an incoming alert, wait at

# least 'group_wait' to send the initial notification.

# This way ensures that you get multiple alerts for the same group that start

# firing shortly after another are batched together on the first

# notification.

group_wait: 30s

# When the first notification was sent, wait 'group_interval' to send a batch

# of new alerts that started firing for that group.

group_interval: 5m

# If an alert has successfully been sent, wait 'repeat_interval' to

# resend them.

#repeat_interval: 1m

repeat_interval: 15m

# A default receiver

# If an alert isn't caught by a route, send it to default.

receiver: default

# All the above attributes are inherited by all child routes and can

# overwritten on each.

# The child route trees.

routes:

- match:

severity: critical

receiver: email_alert

receivers:

- name: 'default'

email_configs:

- to : '[email protected]'

send_resolved: true

- name: 'email_alert'

email_configs:

- to : '[email protected]'

send_resolved: true

名词解释:

- Route

route属性用来设置报警的分发策略,它是一个树状结构,按照深度优先从左向右的顺序进行匹配

// Match does a depth-first left-to-right search through the route tree

// and returns the matching routing nodes.

func (r *Route) Match(lset model.LabelSet) []*Route {

- Alert

Alert是alertmanager接收到的报警,类型如下:

// Alert is a generic representation of an alert in the Prometheus eco-system.

type Alert struct {

// Label value pairs for purpose of aggregation, matching, and disposition

// dispatching. This must minimally include an "alertname" label.

Labels LabelSet `json:"labels"`

// Extra key/value information which does not define alert identity.

Annotations LabelSet `json:"annotations"`

// The known time range for this alert. Both ends are optional.

StartsAt time.Time `json:"startsAt,omitempty"`

EndsAt time.Time `json:"endsAt,omitempty"`

GeneratorURL string `json:"generatorURL"`

}

具有相同Lables的Alert(key和value都相同)才会被认为是同一种。在prometheus rules文件配置的一条规则可能会产生多种报警。

-

Group

alertmanager会根据group_by配置将Alert分组。如下规则,当go_goroutines等于4时会收到三条报警,alertmanager会将这三条报警分成两组向receivers发出通知:

ALERT test1

IF go_goroutines > 1

LABELS {label1="l1", label2="l2", status="test"}

ALERT test2

IF go_goroutines > 2

LABELS {label1="l2", label2="l2", status="test"}

ALERT test3

IF go_goroutines > 3

LABELS {label1="l2", label2="l1", status="test"}

主要处理流程:

-

接收到Alert,根据labels判断属于哪些Route(可存在多个Route,一个Route有多个Group,一个Group有多个Alert)

-

将Alert分配到Group中,没有则新建Group

-

新的Group等待group_wait指定的时间(等待时可能收到同一Group的Alert),根据resolve_timeout判断Alert是否解决,然后发送通知

-

已有的Group等待group_interval指定的时间,判断Alert是否解决,当上次发送通知到现在的间隔大于repeat_interval或者Group有更新时会发送通知

3)Alertmanager

Alertmanager是警报的缓冲区,它具有以下特征:

可以通过特定端点(不是特定于Prometheus)接收警报。

可以将警报重定向到接收者,如hipchat、邮件或其他人。

足够智能,可以确定已经发送了类似的通知。所以,如果出现问题,你不会被成千上万的电子邮件淹没。

Alertmanager客户端(在这种情况下是Prometheus)首先发送POST消息,并将所有要处理的警报发送到/ api / v1 / alerts。例如:

[

{

"labels": {

"alertname": "low_connected_users",

"severity": "warning"

},

"annotations": {

"description": "Instance play-app:9000 under lower load",

"summary": "play-app:9000 of job playframework-app is under lower load"

}

}]

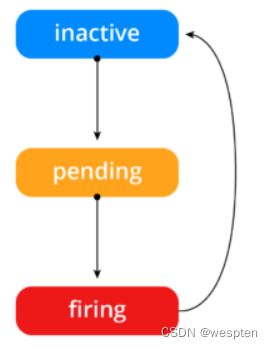

alert工作流程:

一旦这些警报存储在Alertmanager,它们可能处于以下任何状态:

-

Inactive:这里什么都没有发生。

-

Pending:客户端告诉我们这个警报必须被触发。然而,警报可以被分组、压抑/抑制或者静默/静音。一旦所有的验证都通过了,我们就转到Firing。

-

Firing:警报发送到Notification Pipeline,它将联系警报的所有接收者。然后客户端告诉我们警报解除,所以转换到状Inactive状态。

Prometheus有一个专门的端点,允许我们列出所有的警报,并遵循状态转换。Prometheus所示的每个状态以及导致过渡的条件如下所示:

规则不符合:

警报没有激活。

规则符合:

警报现在处于活动状态。 执行一些验证是为了避免淹没接收器的消息。

警报发送到接收者:

4)Inhibition

抑制是指当警报发出后,停止重复发送由此警报引发其他错误的警报的机制。

例如,当警报被触发,通知整个集群不可达,可以配置Alertmanager忽略由该警报触发而产生的所有其他警报,这可以防止通知数百或数千与此问题不相关的其他警报。

抑制机制可以通过Alertmanager的配置文件来配置。

Inhibition允许在其他警报处于触发状态时,抑制一些警报的通知。例如,如果同一警报(基于警报名称)已经非常紧急,那么我们可以配置一个抑制来使任何警告级别的通知静音。 alertmanager.yml文件的相关部分如下所示:

inhibit_rules:- source_match:

severity: 'critical'

target_match:

severity: 'warning'

equal: ['low_connected_users']

配置抑制规则,是存在另一组匹配器匹配的情况下,静音其他被引发警报的规则。这两个警报,必须有一组相同的标签。

# Matchers that have to be fulfilled in the alerts to be muted.

target_match:

[ : , ... ]

target_match_re:

[ : , ... ]

# Matchers for which one or more alerts have to exist for the

# inhibition to take effect.

source_match:

[ : , ... ]

source_match_re:

[ : , ... ]

# Labels that must have an equal value in the source and target

# alert for the inhibition to take effect.

[ equal: '[' , ... ']' ]

5)Silences

Silences是快速地使警报暂时静音的一种方法。 我们直接通过Alertmanager管理控制台中的专用页面来配置它们。在尝试解决严重的生产问题时,这对避免收到垃圾邮件很有用。

参考:

alertmanager 参考资料

抑制规则 inhibit_rule参考资料

五、云原生设计方案实践

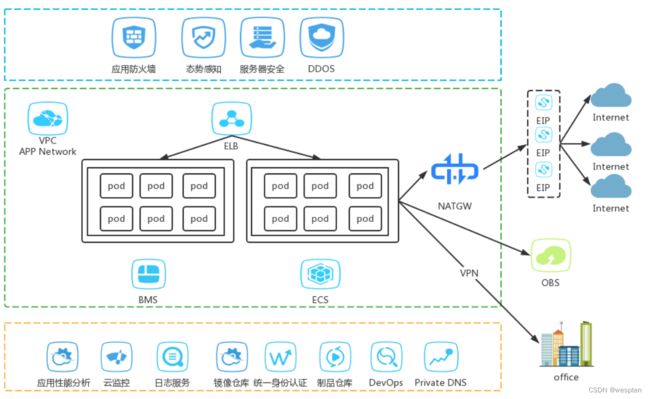

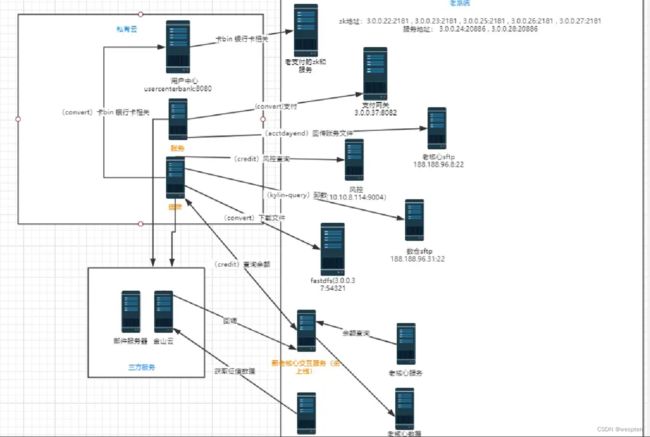

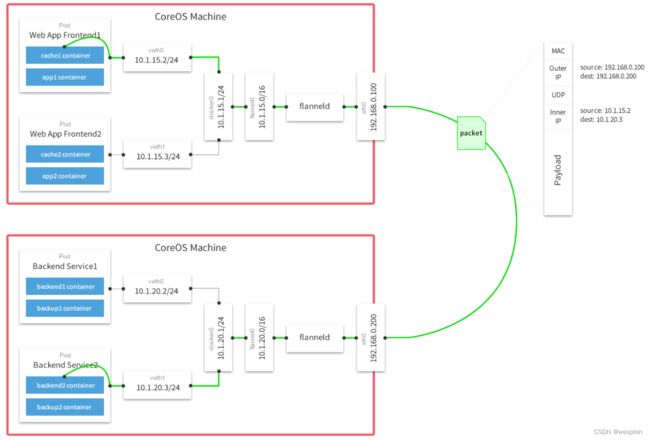

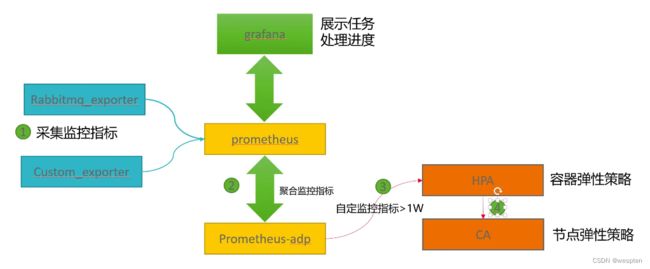

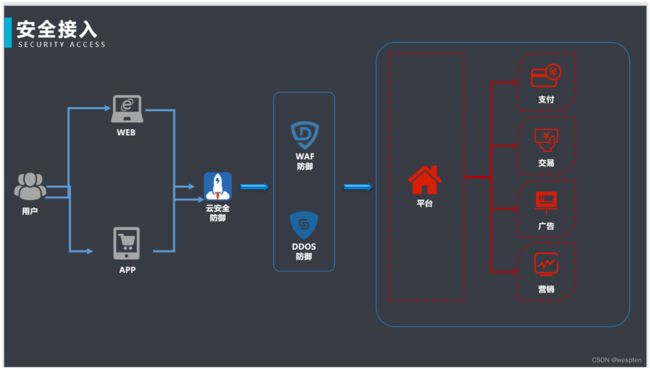

1、方案设计

1. 应用架构案例