ClickHouse Study Notes: Optimization

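Table creation optimization

Data types

Types for time fields

When creating a table, use a numeric or date/time type for any field that can be represented that way, not a string. Hive tables are often all-String, but ClickHouse tables should not be. Although ClickHouse stores DateTime internally as an integer Unix timestamp, storing the raw integer yourself is still not recommended: a DateTime column needs no conversion function, so it is both faster to process and easier to read. The following table shows the pattern to avoid: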

create table t_type2(

id UInt32,

sku_id String,

total_amount Decimal(16,2) ,

create_time Int32

) engine =ReplacingMergeTree(create_time)

partition by toYYYYMMDD(toDate(create_time))

primary key (id)

order by (id, sku_id);

In the example above, create_time is an Int32, so it has to be converted with toDate() before it can be passed to toYYYYMMDD().
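For contrast, here is a minimal sketch of the same table with create_time declared as DateTime, so the partition expression needs no conversion. The table name t_type3 is an assumption made up for this illustration.

```sql
-- Hypothetical variant of t_type2: create_time is a DateTime column,
-- so toYYYYMMDD() can be applied to it directly.
CREATE TABLE t_type3
(
    id UInt32,
    sku_id String,
    total_amount Decimal(16, 2),
    create_time DateTime
)
ENGINE = ReplacingMergeTree(create_time)
PARTITION BY toYYYYMMDD(create_time)
PRIMARY KEY (id)
ORDER BY (id, sku_id);
```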

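Storing null values

The official documentation notes that Nullable almost always hurts performance: storing a Nullable column requires an extra file for the NULL flags, and a Nullable column cannot be indexed. It is therefore better to represent a missing value with the column default or with a value that has no business meaning (such as -1).

The following example creates a table with a Nullable column: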

CREATE TABLE t_null(x Int8, y Nullable(Int8)) ENGINE TinyLog;

INSERT INTO t_null VALUES (1, NULL), (2, 3);

SELECT x + y FROM t_null;

Look at the file that stores the NULL flags of the Nullable column:

[root@scentos clickhouse-server]# cd /var/lib/clickhouse/data/default/t_null/ # default is the database name, t_null is the table name

[root@scentos t_null]# ll

total 16

-rw-r----- 1 clickhouse clickhouse 95 Dec 11 19:08 sizes.json

-rw-r----- 1 clickhouse clickhouse 28 Dec 11 19:08 x.bin

-rw-r----- 1 clickhouse clickhouse 28 Dec 11 19:08 y.bin

-rw-r----- 1 clickhouse clickhouse 28 Dec 11 19:08 y.null.bin # file holding the NULL flags of the Nullable column y

See the official documentation on Nullable for details.
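As a rough sketch of the alternative, the table below stores a sentinel default instead of NULL. The table name t_not_null and the choice of -1 as the "missing" marker are assumptions for illustration; pick a sentinel that cannot occur in real data.

```sql
-- Hypothetical table: y is a plain Int8 with -1 standing in for "no value",
-- so no separate NULL-flag file is written for it.
CREATE TABLE t_not_null (x Int8, y Int8 DEFAULT -1) ENGINE = TinyLog;

-- The first row omits y and therefore gets the default -1.
INSERT INTO t_not_null (x) VALUES (1);
INSERT INTO t_not_null VALUES (2, 3);

-- Treat the sentinel as missing at query time.
SELECT x, if(y = -1, NULL, x + y) AS x_plus_y FROM t_not_null;
```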

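Partitions and indexes

Choose the partition granularity according to the business. Partitioning by day is the usual choice, and tuple() (no partitioning) can also be specified. For a single table of roughly 100 million rows, 10 to 30 partitions is a good target.

An index must be specified. In ClickHouse the index columns are the sorting columns, declared with ORDER BY. Columns that frequently appear as filters in query conditions are good candidates, and the key can be a single dimension or a combination. Put higher-level (coarser) columns first and the most frequently queried columns first. Columns with very high cardinality, such as userid in a user table, are poor index columns. Ideally the data left after filtering stays within a few million rows.

Taking the official example: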

……

PARTITION BY toYYYYMM(EventDate)

ORDER BY (CounterID, EventDate, intHash32(UserID))

……

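Table parameters

index_granularity controls the index granularity. The default is 8192, and changing it is not recommended. If the table does not have to keep its full history, specify a TTL so that expired data does not have to be removed by hand. The TTL can be changed at any time with ALTER TABLE; see the related note on table engines.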
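A minimal sketch of declaring a TTL and changing it later. The table name t_ttl_demo and the 30-day and 90-day retention periods are assumptions chosen only for the example.

```sql
-- Hypothetical table whose rows expire 30 days after create_time.
CREATE TABLE t_ttl_demo
(
    id UInt32,
    create_time DateTime
)
ENGINE = MergeTree
PARTITION BY toYYYYMMDD(create_time)
ORDER BY id
TTL create_time + INTERVAL 30 DAY;

-- The retention period can be changed afterwards without recreating the table.
ALTER TABLE t_ttl_demo MODIFY TTL create_time + INTERVAL 90 DAY;
```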

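Write and delete optimization

Avoid single-row or small-batch deletes and inserts as far as possible. They produce many small data parts and put heavy pressure on the background merge tasks.

Do not write to too many partitions at once, and do not write too fast. If data arrives faster than merges can keep up, the insert fails. A common guideline is 2 to 3 insert operations per second with 20,000 to 50,000 rows each, although the right numbers depend on the server.

Writing too fast produces errors like these: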

1. Code: 252, e.displayText() = DB::Exception: Too many parts(304). Merges are processing significantly slower than inserts

2. Code: 241, e.displayText() = DB::Exception: Memory limit (for query) exceeded:would use 9.37 GiB (attempt to allocate chunk of 301989888 bytes), maximum: 9.31 GiB

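How to handle them:

- Too many parts: use the write-ahead log (WAL) to improve write performance; in_memory_parts_enable_wal is already true by default.

- Memory limit: memory is exhausted. If the server has memory to spare, raise the quota, usually through max_memory_usage.

- If the server is short of memory, spill the excess to disk, at the cost of slower execution. This is usually done with the max_bytes_before_external_group_by and max_bytes_before_external_sort settings.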
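The settings above can be tried per session before changing users.xml. The byte values below are assumptions picked for illustration, not recommendations; a common rule of thumb is to set the external GROUP BY threshold to about half of max_memory_usage.

```sql
-- Illustrative values only: about 20 GB per query, spilling to disk at about 10 GB.
SET max_memory_usage = 20000000000;
SET max_bytes_before_external_group_by = 10000000000;
SET max_bytes_before_external_sort = 10000000000;
```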

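Common configuration

Settings live in config.xml and users.xml, and most of them are in users.xml. See the official documentation:

config.xml settings

users.xml settings

CPU resources

Memory resources

Storage

Early ClickHouse versions do not support multiple data directories (newer releases can spread data over several disks through storage policies). To improve I/O performance you can mount a logical volume group that binds several physical disks together. In data-heavy query scenarios, SSDs are 2 to 3 times faster than ordinary mechanical disks.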

Syntax optimization

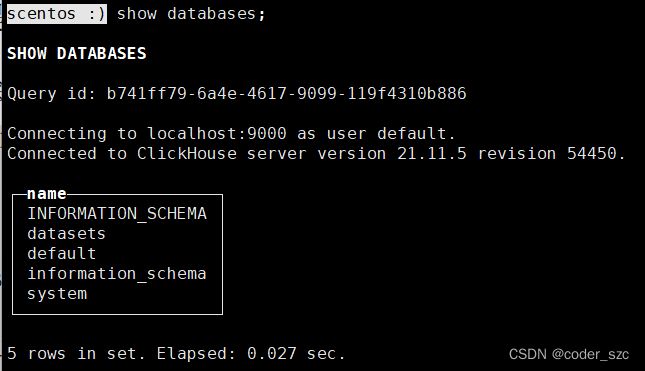

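ClickHouse's SQL optimization rules are rule based (RBO, Rule Based Optimization). Before going through the rules, we need to prepare the test tables.

First extract the data archives into the ClickHouse data path: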

[root@scentos clickHouse_data]# tar -xvf hits_v1.tar -C /var/lib/clickhouse/

[root@scentos clickHouse_data]# tar -xvf visits_v1.tar -C /var/lib/clickhouse/

Then change the owner of the dataset directories:

[root@scentos clickHouse_data]# chown -R clickhouse:clickhouse /var/lib/clickhouse/data/datasets/

[root@scentos clickHouse_data]# chown -R clickhouse:clickhouse /var/lib/clickhouse/metadata/datasets/

Restart the ClickHouse server and check the tables:

[root@scentos clickHouse_data]# systemctl restart clickhouse-server

scentos :) use datasets;

USE datasets

Query id: 6a4e442e-f6df-41ac-a529-4b8546097e01

Ok.

0 rows in set.

Elapsed: 0.001 sec.

scentos :) show tables;

SHOW TABLES

Query id: c99f28d7-e952-47ea-8080-38836e1f6e9c

┌─name──────┐

│ hits_v1 │

│ visits_v1 │

└───────────┘

2 rows in set.

Elapsed: 0.002 sec.

scentos :) select count(*) from hits_v1;

SELECT count(*)

FROM hits_v1

Query id: 9cc1e5c5-8102-4782-aeee-80d890a40c08

┌─count()─┐

│ 8873898 │

└─────────┘

1 rows in set.

Elapsed: 0.002 sec.

The data import is now complete.

count optimization

When count() or count(*) is called without a WHERE condition, ClickHouse reads total_rows from system.tables directly, for example:

scentos :) explain select count() from hits_v1;

EXPLAIN

SELECT count()

FROM hits_v1

Query id: 5b1f854b-4289-4204-b748-2cd4282a0095

┌─explain──────────────────────────────────────────────┐

│ Expression ((Projection + Before ORDER BY)) │

│ MergingAggregated │

│ ReadFromPreparedSource (Optimized trivial count) │

└──────────────────────────────────────────────────────┘

3 rows in set. Elapsed: 0.006 sec.

The Optimized trivial count step is the count() optimization.

If count() is given a specific column, the optimization does not apply:

scentos :) explain select count(CounterID) from hits_v1;

EXPLAIN

SELECT count(CounterID)

FROM hits_v1

Query id: ec045da4-33a3-4c5d-b3b7-d9e560a6b16b

┌─explain───────────────────────────────────────────────────────────────────────┐

│ Expression ((Projection + Before ORDER BY)) │

│ Aggregating │

│ Expression (Before GROUP BY) │

│ SettingQuotaAndLimits (Set limits and quota after reading from storage) │

│ ReadFromMergeTree │

└───────────────────────────────────────────────────────────────────────────────┘

5 rows in set. Elapsed: 0.038 sec.

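Eliminating duplicate fields in subqueries

The subquery in the statement below selects the same field (UserID) twice; the duplicate is removed: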

EXPLAIN SYNTAX SELECT

a.UserID,

b.VisitID,

a.URL,

b.UserID

FROM

hits_v1 AS a

LEFT JOIN (

SELECT

UserID,

UserID as HaHa,

VisitID

FROM visits_v1) AS b

USING (UserID)

limit 3;

The optimized statement returned is:

┌─explain───────────────┐

│ SELECT │

│ UserID, │

│ VisitID, │

│ URL, │

│ b.UserID │

│ FROM hits_v1 AS a │

│ ALL LEFT JOIN │

│ ( │

│ SELECT │

│ UserID, │

│ VisitID │

│ FROM visits_v1 │

│ ) AS b USING (UserID) │

│ LIMIT 3 │

└───────────────────────┘

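Predicate pushdown

When GROUP BY has a HAVING clause but no WITH CUBE, WITH ROLLUP or WITH TOTALS, the HAVING filter is pushed down into WHERE so that rows are filtered earlier. In the example below, HAVING UserID becomes WHERE UserID and is applied before GROUP BY: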

scentos :) EXPLAIN SYNTAX SELECT UserID FROM hits_v1 GROUP BY UserID HAVING UserID = '8585742290196126178';

EXPLAIN SYNTAX

SELECT UserID

FROM hits_v1

GROUP BY UserID

HAVING UserID = '8585742290196126178'

Query id: 7566eef5-8026-4c70-b7c3-57372879ae71

┌─explain──────────────────────────────┐

│ SELECT UserID │

│ FROM hits_v1 │

│ WHERE UserID = '8585742290196126178' │

│ GROUP BY UserID │

└──────────────────────────────────────┘

4 rows in set. Elapsed: 0.002 sec.

scentos :)

Subqueries support predicate pushdown as well. In the example below, the WHERE condition is added to the subquery:

scentos :) EXPLAIN SYNTAX

:-] SELECT *

:-] FROM

:-] (

:-] SELECT UserID

:-] FROM visits_v1

:-] )

:-] WHERE UserID = '8585742290196126178';

EXPLAIN SYNTAX

SELECT *

FROM

(

SELECT UserID

FROM visits_v1

)

WHERE UserID = '8585742290196126178'

Query id: 04c3ee06-f5ea-4b5e-9e3b-c9919f31cb5a

┌─explain──────────────────────────────────┐

│ SELECT UserID │

│ FROM │

│ ( │

│ SELECT UserID │

│ FROM visits_v1 │

│ WHERE UserID = '8585742290196126178' │

│ ) │

│ WHERE UserID = '8585742290196126178' │

└──────────────────────────────────────────┘

8 rows in set. Elapsed: 0.003 sec.

Here is a more complex example:

scentos :) EXPLAIN SYNTAX

:-] SELECT * FROM (

:-] SELECT

:-] *

:-] FROM

:-] (

:-] SELECT

:-] UserID

:-] FROM visits_v1)

:-] UNION ALL

:-] SELECT

:-] *

:-] FROM

:-] (

:-] SELECT

:-] UserID

:-] FROM visits_v1)

:-] )

:-] WHERE UserID = '8585742290196126178';

EXPLAIN SYNTAX

SELECT *

FROM

(

SELECT *

FROM

(

SELECT UserID

FROM visits_v1

)

UNION ALL

SELECT *

FROM

(

SELECT UserID

FROM visits_v1

)

)

WHERE UserID = '8585742290196126178'

Query id: 2bc733ea-cd02-4e13-8f47-1a5f73acceba

┌─explain──────────────────────────────────────┐

│ SELECT UserID │

│ FROM │

│ ( │

│ SELECT UserID │

│ FROM │

│ ( │

│ SELECT UserID │

│ FROM visits_v1 │

│ WHERE UserID = '8585742290196126178' │

│ ) │

│ WHERE UserID = '8585742290196126178' │

│ UNION ALL │

│ SELECT UserID │

│ FROM │

│ ( │

│ SELECT UserID │

│ FROM visits_v1 │

│ WHERE UserID = '8585742290196126178' │

│ ) │

│ WHERE UserID = '8585742290196126178' │

│ ) │

│ WHERE UserID = '8585742290196126178' │

└──────────────────────────────────────────────┘

22 rows in set. Elapsed: 0.006 sec.

Moving arithmetic out of aggregates

Arithmetic inside an aggregate function is moved outside it, for example:

scentos :) EXPLAIN SYNTAX

:-] SELECT sum(UserID * 2)

:-] FROM visits_v1;

EXPLAIN SYNTAX

SELECT sum(UserID * 2)

FROM visits_v1

Query id: cd319404-bdf9-4634-b8c5-c40b48cca2dd

┌─explain────────────────┐

│ SELECT sum(UserID) * 2 │

│ FROM visits_v1 │

└────────────────────────┘

2 rows in set. Elapsed: 0.013 sec.

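Aggregate function elimination

If min, max, any or a similar aggregate function is applied to an aggregation key (a GROUP BY key), the function is eliminated, for example: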

scentos :) EXPLAIN SYNTAX

:-] SELECT

:-] sum(UserID * 2),

:-] max(VisitID),

:-] max(UserID)

:-] FROM visits_v1

:-] GROUP BY UserID;

EXPLAIN SYNTAX

SELECT

sum(UserID * 2),

max(VisitID),

max(UserID)

FROM visits_v1

GROUP BY UserID

Query id: 06c4cc13-a0a3-4665-bb6e-e321f11d87a6

┌─explain──────────────┐

│ SELECT │

│ sum(UserID) * 2, │

│ max(VisitID), │

│ UserID │

│ FROM visits_v1 │

│ GROUP BY UserID │

└──────────────────────┘

6 rows in set. Elapsed: 0.002 sec.

Removing duplicate ORDER BY keys

Duplicate ORDER BY keys are removed, for example:

scentos :) EXPLAIN SYNTAX

SELECT *

FROM visits_v1

ORDER BY

UserID ASC,

UserID ASC,

VisitID ASC,

VisitID ASC;

EXPLAIN SYNTAX

SELECT *

FROM visits_v1

ORDER BY

UserID ASC,

UserID ASC,

VisitID ASC,

VisitID ASC

Query id: 1d2ca7c8-2e21-44e7-912b-083c3284b07b

┌─explain───────────────────────────────────┐

│ SELECT │

│ CounterID, │

│ StartDate, │

│ Sign, │

│ IsNew, │

│ VisitID, │

│ UserID, │

│ .......... │

│ FROM visits_v1 │

│ ORDER BY │

│ UserID ASC, │

│ VisitID ASC │

└───────────────────────────────────────────┘

186 rows in set. Elapsed: 0.004 sec.

Removing duplicate LIMIT BY keys

In the statement below, the repeated VisitID is removed:

scentos :) EXPLAIN SYNTAX

:-] SELECT *

:-] FROM visits_v1

:-] LIMIT 3 BY

:-] VisitID,

:-] VisitID

:-] LIMIT 10;

EXPLAIN SYNTAX

SELECT *

FROM visits_v1

LIMIT 3 BY

VisitID,

VisitID

LIMIT 10

Query id: 98ba0545-6ab4-4a61-b7a0-db8e93ee3b4a

┌─explain───────────────────────────────────┐

│ SELECT │

│ CounterID, │

│ StartDate, │

│ Sign, │

│ IsNew, │

│ VisitID, │

│ UserID, │

│ StartTime, │

│ Duration, │

│ UTCStartTime, │

│ PageViews, │

│ ........ │

│ FROM visits_v1 │

│ LIMIT 3 BY VisitID │

│ LIMIT 10 │

└───────────────────────────────────────────┘

185 rows in set. Elapsed: 0.003 sec.

Removing duplicate USING keys

In the statement below, the repeated join key UserID is removed:

scentos :) EXPLAIN SYNTAX

:-] SELECT

:-] a.UserID,

:-] a.UserID,

:-] b.VisitID,

:-] a.URL,

:-] b.UserID

:-] FROM hits_v1 AS a

:-] LEFT JOIN visits_v1 AS b USING (UserID, UserID);

EXPLAIN SYNTAX

SELECT

a.UserID,

a.UserID,

b.VisitID,

a.URL,

b.UserID

FROM hits_v1 AS a

LEFT JOIN visits_v1 AS b USING (UserID, UserID)

Query id: 8901a535-e976-4460-86d7-2a7dc431d927

┌─explain─────────────────────────────────────┐

│ SELECT │

│ UserID, │

│ UserID, │

│ VisitID, │

│ URL, │

│ b.UserID │

│ FROM hits_v1 AS a │

│ ALL LEFT JOIN visits_v1 AS b USING (UserID) │

└─────────────────────────────────────────────┘

8 rows in set. Elapsed: 0.005 sec.

Scalar replacement

If a subquery returns a single row, references to it are replaced with the scalar result, as with total_disk_usage in the statement below:

scentos :) EXPLAIN SYNTAX

:-] WITH

:-] (

:-] SELECT sum(bytes)

:-] FROM system.parts

:-] WHERE active

:-] ) AS total_disk_usage

:-] SELECT

:-] (sum(bytes) / total_disk_usage) * 100 AS table_disk_usage,

:-] table

:-] FROM system.parts

:-] GROUP BY table

:-] ORDER BY table_disk_usage DESC

:-] LIMIT 10;

EXPLAIN SYNTAX

WITH (

SELECT sum(bytes)

FROM system.parts

WHERE active

) AS total_disk_usage

SELECT

(sum(bytes) / total_disk_usage) * 100 AS table_disk_usage,

table

FROM system.parts

GROUP BY table

ORDER BY table_disk_usage DESC

LIMIT 10

Query id: 7a776482-04fd-4df2-9913-5796792072cd

┌─explain─────────────────────────────────────────────────────────────────────────┐

│ WITH identity(_CAST(0, 'Nullable(UInt64)')) AS total_disk_usage │

│ SELECT │

│ (sum(bytes_on_disk AS bytes) / total_disk_usage) * 100 AS table_disk_usage, │

│ table │

│ FROM system.parts │

│ GROUP BY table │

│ ORDER BY table_disk_usage DESC │

│ LIMIT 10 │

└─────────────────────────────────────────────────────────────────────────────────┘

8 rows in set. Elapsed: 0.002 sec.

Ternary operator optimization

If optimize_if_chain_to_multiif is enabled, chained ternary operators are replaced with the multiIf function, for example:

scentos :) EXPLAIN SYNTAX

:-] SELECT number = 1 ? 'hello' : (number = 2 ? 'world' : 'szc')

:-] FROM numbers(10)

:-] settings optimize_if_chain_to_multiif = 1;

EXPLAIN SYNTAX

SELECT if(number = 1, 'hello', if(number = 2, 'world', 'szc'))

FROM numbers(10)

SETTINGS optimize_if_chain_to_multiif = 1

Query id: 7f0af9fa-f80b-4cb3-a62c-fbc600a218d3

┌─explain─────────────────────────────────────────────────────────┐

│ SELECT multiIf(number = 1, 'hello', number = 2, 'world', 'szc') │

│ FROM numbers(10) │

│ SETTINGS optimize_if_chain_to_multiif = 1 │

└─────────────────────────────────────────────────────────────────┘

3 rows in set. Elapsed: 0.001 sec.

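Query optimization

Single-table queries

PREWHERE instead of WHERE

PREWHERE filters data just like WHERE. The difference is that PREWHERE is only supported on MergeTree-family tables: it first reads only the columns used in the condition and filters on them, then reads the remaining SELECT columns for the rows that survive, and assembles the complete result.

When a query selects clearly more columns than it filters on, PREWHERE can improve performance significantly, because it changes how data is read during the filter phase and reduces I/O. In such cases PREWHERE processes less data than WHERE and runs faster.

First, turn off the automatic WHERE-to-PREWHERE conversion: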

set optimize_move_to_prewhere=0;

Then run the query once with WHERE and once with PREWHERE:

scentos :) select WatchID,

:-] JavaEnable,

:-] Title,

:-] GoodEvent,

:-] EventTime,

:-] EventDate,

:-] CounterID,

:-] ClientIP,

:-] ClientIP6,

:-] RegionID,

:-] UserID,

:-] CounterClass,

:-] OS,

:-] UserAgent,

:-] URL,

:-] Referer,

:-] URLDomain,

:-] RefererDomain,

:-] Refresh,

:-] IsRobot,

:-] RefererCategories,

:-] URLCategories,

:-] URLRegions,

:-] RefererRegions,

:-] ResolutionWidth,

:-] ResolutionHeight,

:-] ResolutionDepth,

:-] FlashMajor,

:-] FlashMinor,

:-] FlashMinor2

:-] from datasets.hits_v1 where UserID='3198390223272470366';

SELECT

WatchID,

JavaEnable,

Title,

GoodEvent,

EventTime,

EventDate,

CounterID,

ClientIP,

ClientIP6,

RegionID,

UserID,

CounterClass,

OS,

UserAgent,

URL,

Referer,

URLDomain,

RefererDomain,

Refresh,

IsRobot,

RefererCategories,

URLCategories,

URLRegions,

RefererRegions,

ResolutionWidth,

ResolutionHeight,

ResolutionDepth,

FlashMajor,

FlashMinor,

FlashMinor2

FROM datasets.hits_v1

WHERE UserID = '3198390223272470366'

Query id: 53cd4c0a-14f6-4584-80bd-5ba0d20b4250

.......

152 rows in set. Elapsed: 1.648 sec. Processed 8.87 million rows, 3.86 GB (5.38 million rows/s., 2.34 GB/s.)

scentos :) select WatchID,

JavaEnable,

Title,

GoodEvent,

EventTime,

EventDate,

CounterID,

ClientIP,

ClientIP6,

RegionID,

UserID,

CounterClass,

OS,

UserAgent,

URL,

Referer,

URLDomain,

RefererDomain,

Refresh,

IsRobot,

RefererCategories,

URLCategories,

URLRegions,

RefererRegions,

ResolutionWidth,

ResolutionHeight,

ResolutionDepth,

FlashMajor,

FlashMinor,

FlashMinor2

from datasets.hits_v1 prewhere UserID='3198390223272470366';

SELECT

WatchID,

JavaEnable,

Title,

GoodEvent,

EventTime,

EventDate,

CounterID,

ClientIP,

ClientIP6,

RegionID,

UserID,

CounterClass,

OS,

UserAgent,

URL,

Referer,

URLDomain,

RefererDomain,

Refresh,

IsRobot,

RefererCategories,

URLCategories,

URLRegions,

RefererRegions,

ResolutionWidth,

ResolutionHeight,

ResolutionDepth,

FlashMajor,

FlashMinor,

FlashMinor2

FROM datasets.hits_v1

PREWHERE UserID = '3198390223272470366'

Query id: a739c72e-393f-4f8d-9c27-a2600aaa9099

.......

152 rows in set. Elapsed: 0.085 sec. Processed 8.87 million rows, 110.00 MB (104.62 million rows/s., 1.30 GB/s.)

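Row throughput is 104.62 versus 5.38 million rows/s, and the data read drops from 3.86 GB to 110.00 MB, so the gain from PREWHERE is obvious. By default, then, we would not turn off the automatic conversion of WHERE to PREWHERE. In some cases, however, the query is not converted automatically even with the optimization enabled, and PREWHERE has to be written by hand:

- the condition uses a constant expression;

- the condition uses a column whose default is of the alias type;

- the query contains arrayJoin, globalIn, globalNotIn or indexHint;

- the SELECT columns are the same as the WHERE filter columns;

- the WHERE condition uses a primary key column.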
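One way to check whether a particular query was converted is to look at the rewritten statement with EXPLAIN SYNTAX. This is only a sketch: the column list is shortened for readability, and whether PREWHERE appears in the output depends on the server version and on the cases listed above.

```sql
-- Re-enable the automatic conversion, then inspect the rewritten query.
SET optimize_move_to_prewhere = 1;

EXPLAIN SYNTAX
SELECT WatchID, Title, URL
FROM datasets.hits_v1
WHERE UserID = '3198390223272470366';
```

If the output still shows WHERE, write PREWHERE explicitly as in the query above.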

Data sampling

Sampling can greatly speed up data analysis:

scentos :) SELECT Title,count(*) AS PageViews

:-] FROM hits_v1

:-] SAMPLE 0.1

:-] WHERE CounterID =57

:-] GROUP BY Title

:-] ORDER BY PageViews DESC LIMIT 1000;

SELECT

Title,

count(*) AS PageViews

FROM hits_v1

SAMPLE 1 / 10

WHERE CounterID = 57

GROUP BY Title

ORDER BY PageViews DESC

LIMIT 1000

Query id: cce550d8-9aa0-4f57-96bc-34b63278cd06

┌─Title────────────────────────────────────────────────────────────────┬─PageViews─┐

│ │ 77 │

│ Фильмы онлайн на сегодня │ 6 │

│ Сбербанка «Работа, мебель обувь бензор.НЕТ « Новости, аксессионально │ 6 │

└──────────────────────────────────────────────────────────────────────┴───────────┘

3 rows in set. Elapsed: 0.046 sec. Processed 8.19 thousand rows, 1.16 MB (177.52 thousand rows/s., 25.24 MB/s.)

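SAMPLE 0.1 samples 10% of the data; an approximate number of rows can be given instead. The SAMPLE modifier only works on MergeTree-family tables, and the sampling expression must be declared with SAMPLE BY when the table is created.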
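As a sketch of the row-count form, the query below samples roughly one million rows and scales the counts back up with the _sample_factor virtual column. The sample size and the LIMIT are assumptions for illustration, and the result is an estimate, not an exact count.

```sql
-- SAMPLE n reads at least about n rows; sum(_sample_factor) approximates
-- the count that a full scan would return.
SELECT
    Title,
    sum(_sample_factor) AS EstimatedPageViews
FROM datasets.hits_v1
SAMPLE 1000000
WHERE CounterID = 57
GROUP BY Title
ORDER BY EstimatedPageViews DESC
LIMIT 10;
```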

Column pruning and partition pruning

Column pruning: avoid SELECT * on large tables. The fewer columns a query reads, the better it performs:

select WatchID,

JavaEnable,

Title,

GoodEvent,

EventTime,

EventDate,

CounterID,

ClientIP,

ClientIP6,

RegionID,

UserID

from datasets.hits_v1;

Partition pruning: read only the partitions you need by filtering on the partition key in WHERE:

select WatchID,

JavaEnable,

Title,

GoodEvent,

EventTime,

EventDate,

CounterID,

ClientIP,

ClientIP6,

RegionID,

UserID

from datasets.hits_v1

where EventDate='2014-03-23';

ORDER BY combined with WHERE and LIMIT

On datasets of tens of millions of rows or more, ORDER BY queries should be combined with WHERE and LIMIT:

SELECT UserID,Age

FROM hits_v1

WHERE CounterID=57

ORDER BY Age DESC LIMIT 1000;

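Avoid building virtual columns

Unless it is really necessary, do not build virtual (computed) columns on the result set, because they are expensive. Consider computing them in the front end, or storing them as real columns in the table: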

SELECT Income,Age FROM datasets.hits_v1;

Once Income and Age are returned, the consumer does the remaining computation.
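To make the contrast concrete, here is a small sketch. The first query computes a ratio for every row at query time. The second part stores the same ratio as a real column at insert time. The table user_income_demo and the column IncomeRate are hypothetical names invented for this example.

```sql
-- Pattern to avoid: the ratio is recomputed for every row on every query.
SELECT Income, Age, Income / Age AS IncomeRate FROM datasets.hits_v1;

-- Alternative: a hypothetical table that materializes the ratio on insert.
CREATE TABLE user_income_demo
(
    UserID UInt64,
    Income UInt8,
    Age UInt8,
    IncomeRate Float64 MATERIALIZED Income / Age
)
ENGINE = MergeTree
ORDER BY UserID;

INSERT INTO user_income_demo (UserID, Income, Age) VALUES (1, 4, 20), (2, 3, 30);

-- MATERIALIZED columns are not returned by SELECT *, so name them explicitly.
SELECT UserID, Income, Age, IncomeRate FROM user_income_demo;
```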

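uniqCombined instead of distinct

uniqCombined can be more than ten times faster than distinct. It is implemented with a HyperLogLog-like algorithm, so if an error of about 2% is acceptable it can be used directly for distinct counts, whereas count(distinct ...) uses uniqExact for exact deduplication. On datasets of tens of millions of rows, prefer uniqCombined to distinct: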

SELECT uniqCombined(rand()) from datasets.hits_v1;
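A quick way to judge whether the approximation is acceptable for your data is to run both functions side by side on a real column. This sketch uses UserID from the test dataset; the aliases are arbitrary.

```sql
-- Compare the exact and approximate distinct counts on the same column.
SELECT
    uniqExact(UserID) AS exact_users,
    uniqCombined(UserID) AS approx_users
FROM datasets.hits_v1;
```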

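Use materialized views

See the Materialized views section later in this article.

Query circuit breaking

To keep a few slow queries from bringing the server down, you can set a timeout for individual queries and also configure periodic circuit breaking: if a user's slow queries exceed a threshold within one period, that user cannot run further queries for the rest of the period.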
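One possible way to set this up, sketched under assumptions: a per-query timeout with max_execution_time, plus a quota that caps query count and total execution time per interval. The quota name slow_query_guard, the limits, and the target user default are made up for illustration, and CREATE QUOTA assumes SQL-driven access management is enabled for the account running it.

```sql
-- Abort any single query in this session after 10 seconds (illustrative value).
SET max_execution_time = 10;

-- Hypothetical quota: at most 100 queries and 600 seconds of execution time
-- per hour for the user "default"; further queries fail until the interval resets.
CREATE QUOTA slow_query_guard
FOR INTERVAL 1 hour MAX queries = 100, execution_time = 600
TO default;
```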

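Disable swap

Moving data between physical memory and swap slows queries down, so disable swap when resources allow.

Configure join_use_nulls

Set join_use_nulls for every account. By default (join_use_nulls = 0), when a row in the left table has no match in the right table, the right-hand columns return the default value of their data type, not NULL as in standard SQL. Setting join_use_nulls = 1 returns NULL instead.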
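The effect is easy to see on a tiny self-contained query. In this sketch the left side has the numbers 0 to 2 and the right side only 0 and 1, so the row with l = 2 has no match; the aliases l and r are arbitrary.

```sql
-- With join_use_nulls = 1 the unmatched row has r = NULL.
-- With the default (0) it has r = 0, the default value of UInt64.
SELECT a.number AS l, b.number AS r
FROM numbers(3) AS a
LEFT JOIN (SELECT number FROM numbers(2)) AS b ON a.number = b.number
ORDER BY l
SETTINGS join_use_nulls = 1;
```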

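Sort before batch writes

When writing in batches, limit the number of partitions each batch touches, and preferably sort the data before importing it. Unsorted data, or data spread over too many partitions, prevents ClickHouse from merging newly imported data in time, which hurts query performance.

Watch the CPU

Queries start to fluctuate at around 50% CPU utilization, and at 70% query timeouts become widespread, so keep a close eye on the server's CPU usage.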

Multi-table joins

First create a subset table of visits_v1:

CREATE TABLE visits_v2

ENGINE = CollapsingMergeTree(Sign)

PARTITION BY toYYYYMM(StartDate)

ORDER BY (CounterID, StartDate, intHash32(UserID), VisitID)

SAMPLE BY intHash32(UserID)

SETTINGS index_granularity = 8192

as select * from visits_v1 limit 10000;

Then create a table to hold the join results, so that the console is not flooded with output:

CREATE TABLE hits_v2

ENGINE = MergeTree()

PARTITION BY toYYYYMM(EventDate)

ORDER BY (CounterID, EventDate, intHash32(UserID))

SAMPLE BY intHash32(UserID)

SETTINGS index_granularity = 8192

as select * from hits_v1 where 1=0;

Use IN instead of JOIN

In a multi-table query, if the output comes from only one of the tables, consider IN instead of JOIN:

scentos :) insert into hits_v2 select a.* from hits_v1 a where a. CounterID in (select CounterID from visits_v1);

INSERT INTO hits_v2 SELECT a.*

FROM hits_v1 AS a

WHERE a.CounterID IN (

SELECT CounterID

FROM visits_v1

)

Query id: 2c9bbd2f-225e-4b07-81de-1d1154178be9

Ok.

0 rows in set. Elapsed: 1.941 sec. Processed 5.41 million rows, 5.14 GB (2.79 million rows/s., 2.65 GB/s.)

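Joining a large table with a small one

In a multi-table join, keep the small table on the right, because the right table is loaded into memory and compared against the left table. Whether the join is a LEFT JOIN, RIGHT JOIN or INNER JOIN, ClickHouse takes each row of the right table and looks for matching records in the left table, so the right table must be the small one: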

insert into table hits_v2

select a.* from hits_v1 a left join visits_v2 b on a.CounterID = b.CounterID;

In the example above, hits_v1 is the large table and visits_v2 is the small one, so the small table is on the right.

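Mind predicate pushdown

In older versions, ClickHouse does not push predicates down on its own in join queries, so each subquery has to do its filtering up front. Whether pushdown happens makes a large difference to performance. Newer versions no longer have this problem, but be aware of the version differences.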

scentos :) Explain syntax

:-] select a.* from hits_v1 a left join visits_v2 b on a. CounterID=b.CounterID

:-] having a.EventDate = '2014-03-17';

EXPLAIN SYNTAX

SELECT a.*

FROM hits_v1 AS a

LEFT JOIN visits_v2 AS b ON a.CounterID = b.CounterID

HAVING a.EventDate = '2014-03-17'

Query id: d4aeff23-530a-45cb-bdf2-3c7c3a768a22

┌─explain─────────────────────────────────────────────────┐

│ SELECT │

│ WatchID, │

│ JavaEnable, │

│ Title, │

│ GoodEvent, │

│ EventTime, │

│ .......... │

│ FROM hits_v1 AS a │

│ ALL LEFT JOIN visits_v2 AS b ON CounterID = b.CounterID │

│ PREWHERE EventDate = '2014-03-17' │

└─────────────────────────────────────────────────────────┘

137 rows in set. Elapsed: 0.005 sec.

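In the example above, the HAVING clause is pushed into the main query and becomes a PREWHERE clause. The next example behaves the same way, except that it becomes a WHERE clause: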

scentos :) Explain syntax

:-] select a.* from hits_v1 a left join visits_v2 b on a. CounterID=b.CounterID

:-] having b.StartDate = '2014-03-17';

EXPLAIN SYNTAX

SELECT a.*

FROM hits_v1 AS a

LEFT JOIN visits_v2 AS b ON a.CounterID = b.CounterID

HAVING b.StartDate = '2014-03-17'

Query id: 20382e92-4dd0-4711-a37e-19da94fead58

┌─explain─────────────────────────────────────────────────┐

│ SELECT │

│ WatchID, │

│ JavaEnable, │

│ Title, │

│ GoodEvent, │

│ EventTime, │

│ .......... │

│ FROM hits_v1 AS a │

│ ALL LEFT JOIN visits_v2 AS b ON CounterID = b.CounterID │

│ WHERE StartDate = '2014-03-17' │

└─────────────────────────────────────────────────────────┘

137 rows in set. Elapsed: 0.004 sec.
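On versions that do not push the predicate down automatically, the filter can be written into the subquery by hand. A minimal sketch using the same tables and date as above:

```sql
-- Filter the left side before the join, without relying on the optimizer.
SELECT a.*
FROM
(
    SELECT *
    FROM hits_v1
    WHERE EventDate = '2014-03-17'
) AS a
LEFT JOIN visits_v2 AS b ON a.CounterID = b.CounterID;
```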

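Use GLOBAL with distributed tables

An IN or JOIN between two distributed tables must use the GLOBAL keyword. With GLOBAL, the right table is queried only once, on the node that received the request, and the result is then sent to the other nodes. Without GLOBAL, every node issues its own query against the right table, and because the right table is itself distributed, it ends up being queried N^2 times (N being the number of shards). This query amplification seriously hurts performance.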
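A sketch of the GLOBAL form follows. The names hits_all and visits_all are hypothetical Distributed tables assumed to sit on top of local hits and visits tables; they are not part of the test dataset used in these notes.

```sql
-- The subquery runs once on the initiating node; its result is shipped
-- to every shard as a temporary table.
SELECT uniq(UserID)
FROM hits_all
WHERE CounterID = 57
  AND UserID GLOBAL IN
  (
      SELECT UserID
      FROM visits_all
      WHERE CounterID = 57
  );
```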

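Use dictionary tables

Put the business data that needs to be joined for analysis into dictionary tables and join against those. A dictionary table should not be too large, because it stays resident in memory.

Filter early

Adding logical filters reduces the amount of data scanned, which speeds up execution and lowers memory consumption.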

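Materialized views

A ClickHouse materialized view persists the result of a query and so speeds up queries. A materialized view is a table that behaves as if precomputation were running all the time, and it is created with a special engine. The result set can be almost anything: a plain copy of part of the base table, the result of a multi-table join or a subset of it, or aggregates over the raw data. A materialized view acts as a snapshot in the sense that it does not follow updates or deletions of existing rows in the base table.

Overview

Differences between materialized views and ordinary views

An ordinary view stores no data, only the query statement. Querying it still reads from the underlying tables, so an ordinary view is effectively a subquery. A materialized view stores the query result in memory or on disk according to its engine and reorganizes the data, so it can be thought of as a new table.

Pros and cons

Pros: queries are fast. Once the rules of the materialized view are written, querying it is much faster than querying the raw data, because the precomputation has already been done.

Cons: a materialized view is by nature a streaming, accumulative technique, so analyses that need deduplication over historical data are awkward to do with it. It is harder to use and fits a limited range of scenarios. In addition, if many materialized views are attached to one table, writes to that table consume a lot of extra resources.

Basic syntax

The syntax is a CREATE statement. ClickHouse creates a hidden target table to store the view's data. After TO you can name an ordinary table to hold the data; otherwise the default name is .inner.<materialized view name>: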

CREATE [MATERIALIZED] VIEW [IF NOT EXISTS] [db.]table_name [TO[db.]name] [ENGINE = engine] [POPULATE] AS SELECT ...

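Restrictions when creating a materialized view:

- The engine of the materialized view must be specified (unless TO is used).

- POPULATE cannot be used together with TO [db.]name.

- The SELECT statement may contain DISTINCT, GROUP BY, ORDER BY, LIMIT and similar clauses.

- ALTER operations on a materialized view are restricted and inconvenient.

- If the materialized view is defined with TO [db.]name, the view can be detached from the target table (DETACH) and attached again (ATTACH).

How the data in a materialized view is updated:

- Once the view is created, writes to the source table also update the materialized view.

- The POPULATE keyword determines the update strategy:

  - With POPULATE, data that already exists in the source table is loaded while the view is being created.

  - Without it, the view is empty after creation and only receives data written to the source table afterwards.

  - ClickHouse officially discourages POPULATE, because data written to the source table while the view is being created is not inserted into the view.

- Materialized views do not support synchronized deletes. If data is removed from the source table, it remains in the materialized view.

- A materialized view is a special kind of table and shows up in SHOW TABLES.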

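Example

For a fixed data model, the statistics can be built as a materialized view. This avoids repeating the computation at query time and keeps the data updated in real time.

Preparing the test table and data

Create the table: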

CREATE TABLE hits_test

(

EventDate Date,

CounterID UInt32,

UserID UInt64,

URL String,

Income UInt8

)

ENGINE = MergeTree()

PARTITION BY toYYYYMM(EventDate)

ORDER BY (CounterID, EventDate, intHash32(UserID))

SAMPLE BY intHash32(UserID)

SETTINGS index_granularity = 8192

Load the data:

INSERT INTO hits_test

SELECT

EventDate,

CounterID,

UserID,

URL,

Income

FROM hits_v1

limit 10000;

Creating the materialized view

CREATE MATERIALIZED VIEW hits_mv

ENGINE=SummingMergeTree

PARTITION BY toYYYYMM(EventDate) ORDER BY (EventDate, intHash32(UserID))

AS SELECT

UserID,

EventDate,

count(URL) as ClickCount,

sum(Income) AS IncomeSum

FROM hits_test

WHERE EventDate >= '2014-03-20'

GROUP BY UserID,EventDate;

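WHERE EventDate >= '2014-03-20' sets the starting point for updates. Data before that date can be loaded separately with INSERT INTO ... SELECT.

The following form can also be used, where table_A is a MergeTree table:

CREATE MATERIALIZED VIEW mv_name TO table_A

AS SELECT ... FROM table_B;

Adding the POPULATE keyword for a full load is not recommended.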
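A sketch of the TO form applied to the same hits_test table. The names hits_mv_target and hits_mv_to are hypothetical and chosen only to avoid clashing with the hits_mv view created above. The target column types assume that count() and sum() over UInt8 both return UInt64.

```sql
-- Explicit target table, created first with its own engine.
CREATE TABLE hits_mv_target
(
    UserID UInt64,
    EventDate Date,
    ClickCount UInt64,
    IncomeSum UInt64
)
ENGINE = SummingMergeTree
PARTITION BY toYYYYMM(EventDate)
ORDER BY (EventDate, intHash32(UserID));

-- The view only defines the transformation; rows land in hits_mv_target.
CREATE MATERIALIZED VIEW hits_mv_to TO hits_mv_target
AS SELECT
    UserID,
    EventDate,
    count(URL) AS ClickCount,
    sum(Income) AS IncomeSum
FROM hits_test
WHERE EventDate >= '2014-03-20'
GROUP BY UserID, EventDate;
```

With this form no .inner. table is created, and the target table can be queried or altered like any other table.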

Because we did not specify TO [db.]name, a table named .inner.hits_mv is created to store the view's data:

scentos :) show tables;

SHOW TABLES

Query id: a3acf91a-1308-46f6-a609-364d16adcc34

┌─name───────────┐

│ .inner.hits_mv │

│ hits_mv │

│ hits_test │

│ hits_v1 │

│ hits_v2 │

│ visits_v1 │

│ visits_v2 │

└────────────────┘

We can simply query the hits_mv materialized view directly.

Loading incremental data

First query the materialized view:

scentos :) select * from hits_mv;

SELECT *

FROM hits_mv

Query id: a1915362-5a0a-4921-9a55-c50df710e43d

Ok.

0 rows in set. Elapsed: 0.001 sec.

Then insert the incremental data:

INSERT INTO hits_test

SELECT

EventDate,

CounterID,

UserID,

URL,

Income

FROM hits_v1

WHERE EventDate >= '2014-03-23'

limit 10;

Query the materialized view again:

scentos :) select * from hits_mv;

SELECT *

FROM hits_mv

Query id: 46661160-76d1-436f-9a8f-21161998a21e

┌──────────────UserID─┬──EventDate─┬─ClickCount─┬─IncomeSum─┐

│ 8585742290196126178 │ 2014-03-23 │ 8 │ 16 │

│ 1095363898647626948 │ 2014-03-23 │ 2 │ 0 │

└─────────────────────┴────────────┴────────────┴───────────┘

2 rows in set. Elapsed: 0.002 sec.

Loading historical data

For example, load the data for March 20, 2014:

INSERT INTO hits_mv

SELECT

UserID,

EventDate,

count(URL) as ClickCount,

sum(Income) AS IncomeSum

FROM hits_test

WHERE EventDate = '2014-03-20'

GROUP BY UserID,EventDate;

Then query the materialized view:

scentos :) select * from hits_mv;

SELECT *

FROM hits_mv

Query id: 03700a27-929a-4b4a-b51f-b22d6e73f9b9

┌───────────────UserID─┬──EventDate─┬─ClickCount─┬─IncomeSum─┐

│ 8682581061680449960 │ 2014-03-20 │ 36 │ 0 │

│ 1685423974857227293 │ 2014-03-20 │ 87 │ 261 │

│ 9912771070916119619 │ 2014-03-20 │ 1 │ 3 │

│ 10163473165296684099 │ 2014-03-20 │ 90 │ 0 │

│ ......................................................... │

│ 35119926053556948 │ 2014-03-20 │ 2 │ 4 │

│ 1913746513358768143 │ 2014-03-20 │ 2 │ 4 │

└──────────────────────┴────────────┴────────────┴───────────┘

┌──────────────UserID─┬──EventDate─┬─ClickCount─┬─IncomeSum─┐

│ 8585742290196126178 │ 2014-03-23 │ 8 │ 16 │

│ 1095363898647626948 │ 2014-03-23 │ 2 │ 0 │

└─────────────────────┴────────────┴────────────┴───────────┘

341 rows in set. Elapsed: 0.008 sec.

As the output shows, the historical data has been loaded into the materialized view.
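One caveat when reading from a view backed by SummingMergeTree: rows with the same sorting key are only summed when parts merge in the background, so a plain SELECT * may return several partial rows per key. A safer query, sketched here with an arbitrary LIMIT, aggregates again at read time:

```sql
-- Re-aggregate at query time so the result does not depend on merge progress.
SELECT
    UserID,
    EventDate,
    sum(ClickCount) AS ClickCount,
    sum(IncomeSum) AS IncomeSum
FROM hits_mv
GROUP BY UserID, EventDate
ORDER BY ClickCount DESC
LIMIT 10;
```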