Automatic Face Blurring in Video with Deep Learning

Preface

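1. Short videos are now part of everyday life, but filming can easily infringe on other people's portrait rights, so many videos blur out unrelated people in post-production. This post implements automatic face blurring on top of yolov5-based face detection.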

2. Development environment: Windows 10, RTX 3080 GPU, CUDA 10.2, cuDNN 7.1, OpenCV 4.5, NCNN; the IDE is Visual Studio 2019.

I. Face Detection

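1. The first and most important step is to determine whether the current frame contains a face, which is a face detection problem. Many open-source face detection algorithms and models are available, and OpenCV ships with its own face detector, but to keep the frame rate up this post uses the lighter and faster yolov5.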
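Detectors in the yolov5 family typically emit several overlapping candidate boxes for the same face, and these are usually pruned with non-maximum suppression (NMS) before the boxes are used. The following is a minimal sketch of that step, not the code from this project: the `FaceBox` struct, its field names, and the `iou` and `nms` helpers are hypothetical and only illustrate the idea.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical detection record; field names are illustrative only.
struct FaceBox {
    float x1, y1, x2, y2;  // box corners in pixels, with x1 < x2 and y1 < y2
    float score;           // detector confidence
};

// Intersection-over-union of two axis-aligned boxes.
inline float iou(const FaceBox& a, const FaceBox& b) {
    const float iw = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
    const float ih = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
    const float inter = iw * ih;
    const float uni = (a.x2 - a.x1) * (a.y2 - a.y1) +
                      (b.x2 - b.x1) * (b.y2 - b.y1) - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: visit boxes from highest to lowest score and keep a box only
// if it does not overlap an already kept box by more than iouThreshold.
inline std::vector<FaceBox> nms(std::vector<FaceBox> boxes, float iouThreshold) {
    assert(iouThreshold >= 0.0f && iouThreshold <= 1.0f);
    std::sort(boxes.begin(), boxes.end(),
              [](const FaceBox& a, const FaceBox& b) { return a.score > b.score; });
    std::vector<FaceBox> kept;
    for (const FaceBox& candidate : boxes) {
        bool suppressed = false;
        for (const FaceBox& k : kept) {
            if (iou(candidate, k) > iouThreshold) {
                suppressed = true;
                break;
            }
        }
        if (!suppressed) kept.push_back(candidate);
    }
    return kept;
}
```

Calling `nms(candidates, 0.45f)` on the raw candidates of one frame would leave roughly one box per face. The threshold value here is only an example and would need tuning for a real model.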

2. Implementation code

#ifndef YOLOFACE_H

#define YOLOFACE_H

#include "yoloface.h"

3. Face detection result

II. Face Blurring

Code:
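As a minimal sketch of what mosaic-style blurring involves, the function below pixelates a rectangular region of an 8-bit grayscale image held in a plain row-major buffer. It is not the project's implementation: the `Rect` struct, the `pixelate` function, and the buffer layout are assumptions made so that the example stays self-contained and does not depend on OpenCV.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical rectangle type: (x, y) is the top-left corner, in pixels.
struct Rect {
    int x, y, w, h;
};

// Pixelate `face` inside an 8-bit grayscale image stored row-major in
// `pixels`. The region is split into block x block tiles and every tile
// is overwritten with its integer mean value.
inline void pixelate(std::vector<std::uint8_t>& pixels, int width, int height,
                     Rect face, int block) {
    assert(block > 0);
    assert(width >= 0 && height >= 0);
    assert(pixels.size() == static_cast<std::size_t>(width) * height);

    // Clamp the face rectangle to the image so a box that extends past
    // the frame border never indexes outside the buffer.
    const int x0 = std::max(0, face.x);
    const int y0 = std::max(0, face.y);
    const int x1 = std::min(width, face.x + face.w);
    const int y1 = std::min(height, face.y + face.h);

    for (int by = y0; by < y1; by += block) {
        for (int bx = x0; bx < x1; bx += block) {
            const int ex = std::min(bx + block, x1);
            const int ey = std::min(by + block, y1);

            // Average the tile.
            unsigned long sum = 0;
            for (int y = by; y < ey; ++y)
                for (int x = bx; x < ex; ++x)
                    sum += pixels[static_cast<std::size_t>(y) * width + x];
            const unsigned long count =
                static_cast<unsigned long>(ex - bx) * (ey - by);
            const std::uint8_t mean = static_cast<std::uint8_t>(sum / count);

            // Write the mean back to every pixel of the tile.
            for (int y = by; y < ey; ++y)
                for (int x = bx; x < ex; ++x)
                    pixels[static_cast<std::size_t>(y) * width + x] = mean;
        }
    }
}
```

With OpenCV, a comparable effect is commonly obtained by shrinking the face region with `cv::resize` and enlarging it again with nearest-neighbour interpolation, or by applying `cv::GaussianBlur` to the region. A larger `block` hides more detail at the cost of a coarser mosaic.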

Blurring result:

III. Source Code

1. Source code: https://mp.csdn.net/mp_download/manage/download/UpDetailed

2. Configuring the source

1. Directory layout

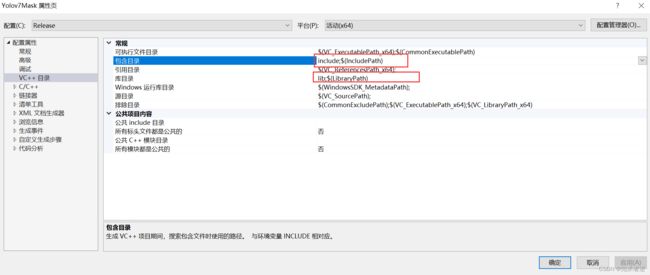

2. Configure the include and lib paths.

3. Add the library names, that is, the name of every file with a .lib extension in the source directory.

4. IDE configuration