[K8S] Setting Up a K8S Cluster

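Table of Contents

- [K8S] Setting Up a K8S Cluster
  - Environment
  - Disable the firewall
  - Disable SELinux
  - Disable swap
  - Add host-to-IP mappings
  - Set the hostnames
  - Pass bridged IPv4 traffic to iptables chains
  - Synchronize time
  - Install Docker
    - Install dependencies
    - Configure the Aliyun mirror
    - Install docker-ce (Community Edition) and enable it at boot
  - Configure the K8S repository
  - Install the kubelet, kubeadm, and kubectl components
  - Set up the master node
  - Create the kubeconfig directory and set permissions (required)
  - Copy and record the join command (run it on both worker nodes)
  - Deploy flannel: upload kube-flannel.yaml, download it, or import an image package
  - Label the worker nodes with the "node" role
  - Test the K8S cluster: create a pod and verify that it runs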


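Environment

| IP | Hostname |
|---|---|
| 192.168.116.137 | master |
| 192.168.116.138 | node01 |
| 192.168.116.139 | node02 |

Unless a step says otherwise, run the preparation steps (from disabling the firewall through installing kubelet, kubeadm, and kubectl) on all three machines.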

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

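Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config

This edit only takes effect after a reboot. To stop enforcement right away for the current boot (SELinux switches to permissive mode), also run:

setenforce 0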
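The sed above replaces the first "enforcing" on every line of the file, including the explanatory comments, and does nothing if the file says SELINUX=permissive. A minimal sketch of a more targeted edit, assuming the standard CentOS 7 layout of /etc/selinux/config; the helper name selinux_disable_config is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Only the active "SELINUX=" line changes; comments and SELINUXTYPE= stay as they are.
selinux_disable_config() {
  local conf="${1:-/etc/selinux/config}"
  # "^SELINUX=" cannot match "SELINUXTYPE=" because "=" must follow "SELINUX" directly
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage on a real node (needs root):
#   selinux_disable_config   # persistent, applies after the next reboot
#   setenforce 0             # permissive mode for the current boot
```

Running it twice is harmless, since the pattern only ever matches the single active SELINUX= line.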

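Disable swap (by default, kubelet refuses to start while swap is enabled)

Temporarily:

swapoff -a

Permanently:

For the change to survive a reboot, after disabling swap also comment out the swap entry in /etc/fstab:

sed -ri 's/.*swap.*/#&/' /etc/fstab   # permanently disable the swap partition; in sed, & stands for the whole text matched by the pattern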
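The sed command above comments out every line that contains the word swap, so an entry that is already commented gets a second #, and each re-run adds another. A minimal sketch that only touches active entries whose filesystem type (the third field) is swap, assuming a standard whitespace-separated /etc/fstab; comment_out_swap is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}" tmp
  tmp="$(mktemp)"
  # Skip lines that are already comments; field 3 of an fstab entry is the filesystem type
  awk '$0 !~ /^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp"
  # Write back through the existing file so its owner and permissions are kept
  cat "$tmp" > "$fstab"
  rm -f "$tmp"
}

# Usage on a real node (needs root):
#   swapoff -a          # the running system
#   comment_out_swap    # persistent, defaults to /etc/fstab
```

Because commented lines are skipped, the function can be re-run safely.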

Add host-to-IP mappings

vim /etc/hosts   # append the following lines

192.168.116.137 master

192.168.116.138 node01

192.168.116.139 node02
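Editing /etc/hosts by hand on every node is easy to get wrong when the setup is repeated. A sketch that appends the three entries only if they are missing, so it can be re-run safely; add_host_entry and add_cluster_hosts are hypothetical names, and only the first hostname on each line is compared:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP name" to a hosts file unless that pair is already there.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 if some line has this IP in field 1 and this name in field 2
  if awk -v ip="$ip" -v name="$name" '$1 == ip && $2 == name { found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Hypothetical helper: the three cluster entries from this article
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  add_host_entry "$file" 192.168.116.137 master
  add_host_entry "$file" 192.168.116.138 node01
  add_host_entry "$file" 192.168.116.139 node02
}

# Usage on each node (needs root):
#   add_cluster_hosts
```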

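Set the hostnames (run each command on the matching machine)

hostnamectl set-hostname master   # on 192.168.116.137

hostnamectl set-hostname node01   # on 192.168.116.138

hostnamectl set-hostname node02   # on 192.168.116.139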

Pass bridged IPv4 traffic to iptables chains

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
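Two details are worth folding into this step. The net.bridge.* keys only exist while the br_netfilter kernel module is loaded, and kubeadm's preflight checks also require net.ipv4.ip_forward = 1 (the error described later in this article). A sketch that writes all three settings, assuming the same file path as above; write_k8s_sysctl is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the sysctl settings kubeadm expects into one file.
write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Usage on a real node (needs root):
#   modprobe br_netfilter                              # net.bridge.* keys exist only with this module
#   echo br_netfilter > /etc/modules-load.d/k8s.conf   # load the module again at boot
#   write_k8s_sysctl && sysctl --system
```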

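Synchronize time (clock skew between nodes can make certificates appear not yet valid or expired)

yum install -y ntpdate

ntpdate ntp1.aliyun.com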

Install Docker

Install dependencies

yum install -y yum-utils device-mapper-persistent-data lvm2

Configure the Aliyun mirror

cd /etc/yum.repos.d/

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install docker-ce (Community Edition) and enable it at boot

yum install -y docker-ce

systemctl start docker.service

systemctl enable docker.service

Configure the K8S repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all && yum makecache

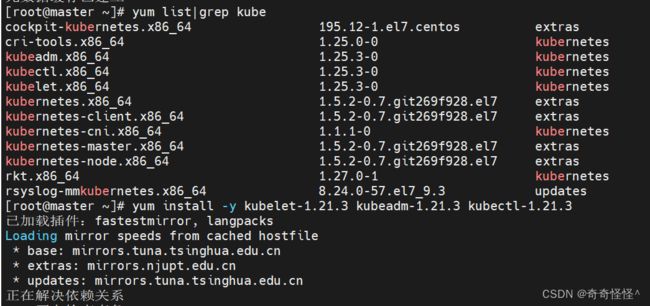

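Install the kubelet, kubeadm, and kubectl components

yum list|grep kube   # list the available versions

yum install -y kubelet-1.21.3 kubeadm-1.21.3 kubectl-1.21.3

systemctl enable kubelet

systemctl start kubelet

Until kubeadm init (on the master) or kubeadm join (on the nodes) has run, kubelet has no configuration and keeps restarting; this is expected.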
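kubelet, kubeadm, and kubectl should stay at the same version as --kubernetes-version in kubeadm init (v1.21.3 here). A sketch that derives all three package names from one variable and checks the installed kubeadm afterwards; K8S_VERSION, k8s_packages, and kubeadm_version_ok are hypothetical names, while kubeadm version -o short is the real command that prints a version such as v1.21.3:

```shell
#!/usr/bin/env bash
# Hypothetical variable: the single source of truth for the version
K8S_VERSION="${K8S_VERSION:-1.21.3}"

# Hypothetical helper: print the three package names pinned to one version
k8s_packages() {
  local v="${1:-$K8S_VERSION}" pkg
  for pkg in kubelet kubeadm kubectl; do
    printf '%s-%s\n' "$pkg" "$v"
  done
}

# Hypothetical helper: succeed only if the installed kubeadm reports the expected version
kubeadm_version_ok() {
  local want="v${1:-$K8S_VERSION}"
  [ "$(kubeadm version -o short)" = "$want" ]
}

# Usage on a real node (needs root):
#   yum install -y $(k8s_packages)   # unquoted on purpose: one argument per package
#   kubeadm_version_ok || echo "kubeadm version mismatch" >&2
```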

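Set up the master node (run on master only)

kubeadm init --apiserver-advertise-address=192.168.116.137 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version v1.21.3 --service-cidr=10.125.0.0/16 --pod-network-cidr=10.150.0.0/16

What the flags mean:

- --apiserver-advertise-address: the IP address the API server advertises to the cluster; change it to your own master's IP
- --image-repository: download images from the Aliyun mirror; a domestic (China) mirror is recommended
- --kubernetes-version: the K8S version to install
- --service-cidr: the cluster-internal Service network
- --pod-network-cidr: the Pod network

Keep the command on one line, or split it with a trailing \ that has nothing after it: a comment written after a trailing \ breaks the line continuation, so the commented multi-line version fails.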
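If you want to keep a note next to each flag, one option is to collect the flags in a bash array, where comments between elements are legal. A sketch assuming bash 4 or later (for mapfile); kubeadm_init_args and the MASTER_IP, SERVICE_CIDR, and POD_CIDR variables are hypothetical, and the values default to the ones used in this article:

```shell
#!/usr/bin/env bash
# Hypothetical variables; override them in the environment if your addresses differ
MASTER_IP="${MASTER_IP:-192.168.116.137}"
SERVICE_CIDR="${SERVICE_CIDR:-10.125.0.0/16}"
POD_CIDR="${POD_CIDR:-10.150.0.0/16}"

# Hypothetical helper: print one kubeadm init argument per line
kubeadm_init_args() {
  local -a args
  # Comments are allowed inside a multi-line array literal, unlike after a trailing "\"
  args=(
    --apiserver-advertise-address="$MASTER_IP"                             # master IP
    --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers # Aliyun mirror
    --kubernetes-version=v1.21.3                                           # must match kubeadm
    --service-cidr="$SERVICE_CIDR"                                         # Service network
    --pod-network-cidr="$POD_CIDR"                                         # Pod network (flannel)
  )
  printf '%s\n' "${args[@]}"
}

# Usage on the master (needs root):
#   mapfile -t init_args < <(kubeadm_init_args)
#   kubeadm init "${init_args[@]}"
```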

Create the kubeconfig directory and set permissions (required)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

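Tip (optional): export all tagged local images into one archive for later reuse (docker save writes a plain tar file):

docker save -o k8s-images.tar $(docker images --filter dangling=false --format '{{.Repository}}:{{.Tag}}')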
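A sketch of the same export as two small functions, plus the matching import on another machine, assuming Docker is the container runtime as in this article. image_refs and export_images are hypothetical names; since docker save writes an uncompressed tar, the archive is named .tar rather than .tar.gz:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "repo:tag" lines on stdin and drop untagged images,
# which docker save cannot address by name
image_refs() {
  grep -v '<none>'
}

# Hypothetical helper: save every tagged local image into one tar archive
export_images() {
  local out="${1:-k8s-images.tar}"
  local -a refs
  mapfile -t refs < <(docker images --format '{{.Repository}}:{{.Tag}}' | image_refs)
  [ "${#refs[@]}" -gt 0 ] || { echo "no tagged images found" >&2; return 1; }
  docker save -o "$out" "${refs[@]}"
}

# Usage: on the source host run   export_images k8s-images.tar
#        copy the file, then run  docker load -i k8s-images.tar
```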

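Copy and record the join command (run it on both worker nodes)

kubeadm init prints this command at the end of its output; the token and hash are specific to each cluster:

kubeadm join 192.168.116.137:6443 --token 0xkk5o.fep3dm6u9i8lew1x --discovery-token-ca-cert-hash sha256:3a565993d61b8b745c0a5d6c2589584f4399bdf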

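Errors encountered

Error 1: /proc/sys/net/ipv4/ip_forward was 0, meaning IP forwarding was disabled.

Fix: run sysctl -w net.ipv4.ip_forward=1 (this does not survive a reboot; to make it permanent, add net.ipv4.ip_forward = 1 to /etc/sysctl.d/k8s.conf and run sysctl --system).

Error 2: the token on the master had expired (bootstrap tokens are valid for 24 hours by default).

Get a valid token:

kubeadm token list | awk -F" " '{print $1}' |tail -n 1   # show the newest existing token, or

kubeadm token create   # create a new token

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'   # compute the hash of the CA public key

Combine them into a new join command that replaces the old one: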

kubeadm join 192.168.116.137:6443 --token hkuo49.f6awsiqsaji8hxko --discovery-token-ca-cert-hash sha256:3a565993d61b8b745c0a5d6c2589584f4399bdf0a4737186a0b1771da5e9fa3d

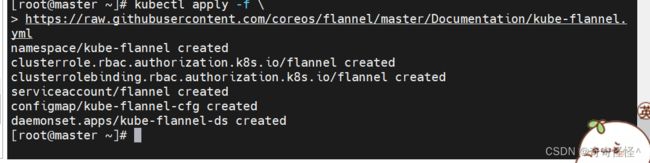

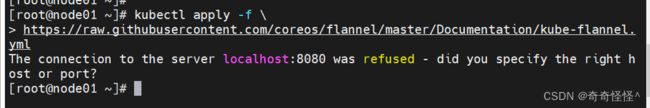
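The token and hash steps above can be wrapped so the full join command is printed in one go. A sketch assuming the default kubeadm CA path and an RSA CA key (kubeadm's default); ca_cert_hash and join_command are hypothetical helpers. kubeadm can also do this itself with kubeadm token create --print-join-command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: SHA-256 of the CA's DER-encoded public key,
# the value expected after "sha256:" in --discovery-token-ca-cert-hash
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # strip the "(stdin)= " style prefix, keep the hex digest
}

# Hypothetical helper: assemble the join command; bootstrap tokens look like abcdef.0123456789abcdef
join_command() {
  local master="$1" token="$2" hash="$3"
  local re='^[a-z0-9]{6}\.[a-z0-9]{16}$'
  if ! [[ $token =~ $re ]]; then
    echo "invalid token: $token" >&2
    return 1
  fi
  printf 'kubeadm join %s:6443 --token %s --discovery-token-ca-cert-hash sha256:%s\n' "$master" "$token" "$hash"
}

# Usage on the master (needs root):
#   join_command 192.168.116.137 "$(kubeadm token create)" "$(ca_cert_hash)"
```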

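Deploy flannel: upload kube-flannel.yaml, download it, or import an image package

Upload the file directly, then apply it:

kubectl apply -f kube-flannel.yaml

Or download it from an online source:

wget http://120.78.77.38/file/kube-flannel.yaml

vim kube-flannel.yaml   # make sure "Network" in net-conf.json matches --pod-network-cidr (10.150.0.0/16), then apply it with kubectl apply -f kube-flannel.yaml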
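This cluster was initialized with --pod-network-cidr=10.150.0.0/16, while the upstream flannel manifest ships with "Network": "10.244.0.0/16" in net-conf.json, so the two must be aligned before applying. A sketch of that edit with GNU sed, assuming the manifest keeps the usual "Network": "x.x.x.x/nn" line; set_flannel_network is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the CIDR in flannel's net-conf.json "Network" field.
# "#" is used as the sed delimiter because the CIDR contains "/".
set_flannel_network() {
  local manifest="$1" cidr="${2:-10.150.0.0/16}"
  sed -i -E "s#(\"Network\": *\")[0-9./]+(\")#\1${cidr}\2#" "$manifest"
}

# Usage on the master:
#   set_flannel_network kube-flannel.yaml 10.150.0.0/16 && kubectl apply -f kube-flannel.yaml
```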

Label the worker nodes with the "node" role

kubectl label node node01 node-role.kubernetes.io/node=node

kubectl label node node02 node-role.kubernetes.io/node=node

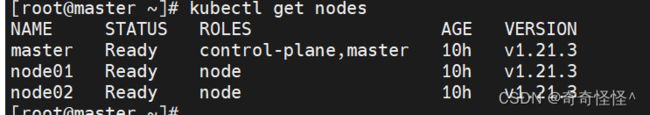

kubectl get nodes

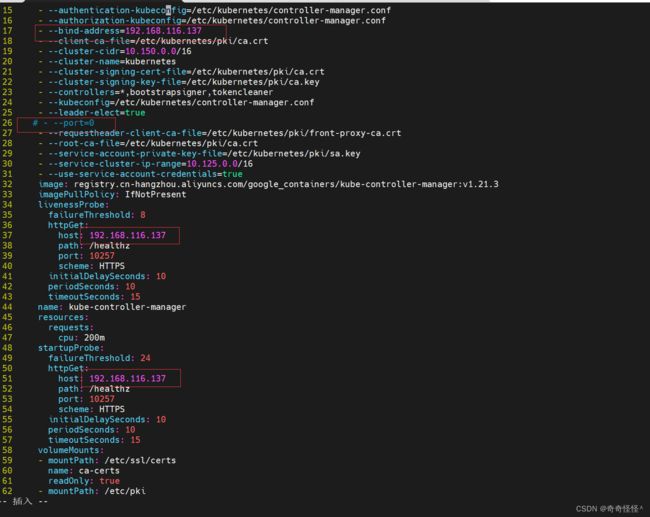

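kubectl get cs reports the cluster as unhealthy. In v1.21, kube-scheduler and kube-controller-manager are started with --port=0, which turns off the insecure health ports that kubectl get cs checks (the command itself has been deprecated since v1.19). Edit the following two files:

vim /etc/kubernetes/manifests/kube-scheduler.yaml

Make the following changes:

Change --bind-address=127.0.0.1 to --bind-address=192.168.116.137   # the IP of the K8S control-plane node (master)

Change host under the httpGet: fields from 127.0.0.1 to 192.168.116.137 (two places)

#- --port=0   # search for port=0 and comment out this line

vim /etc/kubernetes/manifests/kube-controller-manager.yaml

Make the following changes:

Change --bind-address=127.0.0.1 to --bind-address=192.168.116.137   # the IP of the K8S control-plane node (master)

Change host under the httpGet: fields from 127.0.0.1 to 192.168.116.137 (two places)

#- --port=0   # search for port=0 and comment out this line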
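The port=0 edit can be scripted; the bind-address and httpGet host changes are still done by hand as described above. A sketch using GNU sed, assuming the v1.21 manifest layout with the argument on its own "- --port=0" line; comment_out_port_zero is a hypothetical helper. Backups are best kept outside /etc/kubernetes/manifests, since kubelet may treat extra files in that directory as additional static Pod definitions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn "    - --port=0" into "    #- --port=0" in a static Pod manifest.
# Indentation is kept; a line that is already commented does not match and stays unchanged.
comment_out_port_zero() {
  local manifest="$1"
  sed -i -E 's/^([[:space:]]*)(- --port=0)[[:space:]]*$/\1#\2/' "$manifest"
}

# Usage on the master (needs root):
#   for f in kube-scheduler kube-controller-manager; do
#     cp /etc/kubernetes/manifests/$f.yaml /root/$f.yaml.bak   # backup outside the manifests dir
#     comment_out_port_zero /etc/kubernetes/manifests/$f.yaml
#   done
```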

systemctl restart kubelet

#查询master是否正常

kubectl get cs

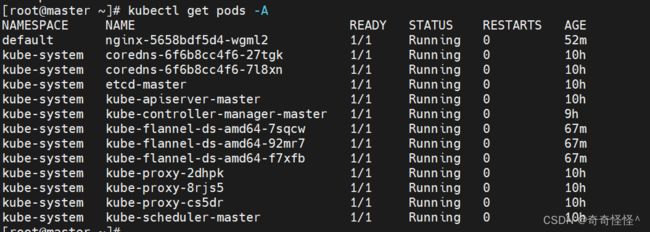

#查询所有pod是否正常运行

kubectl get pods -A

#查询node节点是否ready

kubectl get nodes

Test the K8S cluster: create a pod and verify that it runs

Deploy a service:

kubectl create deployment nginx --image=nginx:1.14

Expose the port:

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl get pods

kubectl get svc   # svc is short for service

Delete the deployment (together with its pod) and the service:

kubectl delete deploy/nginx

kubectl delete svc/nginx

![screenshot](http://img.e-com-net.com/image/info8/3bbd8da016c64be2a3fd07fd6371cda0.jpg)

![screenshot](http://img.e-com-net.com/image/info8/1efa1e4d06fd4e0c9b691f8dfc583c2e.jpg)

![screenshot](http://img.e-com-net.com/image/info8/ceb21ebf7506428abc46e4f80be1cbe4.jpg)

![screenshot](http://img.e-com-net.com/image/info8/82b7ae000fc24f9795cf20e59a857b02.jpg)

![screenshot](http://img.e-com-net.com/image/info8/09e23ad42319425cad4b8d95cc9f5c5e.jpg)

![screenshot](http://img.e-com-net.com/image/info8/24b5f6a60eb44e4288d0805fc510edb2.jpg)

![screenshot](http://img.e-com-net.com/image/info8/c8700bd792dc42218f999ed5bfb23907.jpg)

![screenshot](http://img.e-com-net.com/image/info8/a50640cbd723476ba92bd911262ab775.jpg)

![screenshot](http://img.e-com-net.com/image/info8/1b1dd3d0a4a940219487caddffe2f616.jpg)
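Before running the delete commands, you can confirm that nginx actually answers on its NodePort. A sketch assuming curl is installed and that node01's address from the environment table is reachable; check_nginx_nodeport is a hypothetical helper, and the jsonpath expression reads the port Kubernetes allocated (from 30000-32767 by default):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the nginx NodePort URL with its HTTP status,
# and succeed only if the status is 200
check_nginx_nodeport() {
  local node_ip="${1:-192.168.116.138}" port code
  # Port allocated to the Service created by "kubectl expose ... --type=NodePort"
  port="$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')"
  [ -n "$port" ] || { echo "service nginx has no nodePort" >&2; return 1; }
  # -o /dev/null drops the body; -w prints only the status code
  code="$(curl -s -o /dev/null -w '%{http_code}' "http://${node_ip}:${port}/")"
  echo "http://${node_ip}:${port}/ -> HTTP ${code}"
  [ "$code" = 200 ]
}

# Usage on the master:
#   check_nginx_nodeport 192.168.116.138
```

Any node's IP should work, because a NodePort Service listens on the same port on every node.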