Building a k8s cluster with kubeadm on CentOS 7 (crio+calico) (k8s v1.21.0)

Table of contents

- Building a k8s cluster with kubeadm on CentOS 7 (crio+calico) (k8s v1.21.0)
  - Environment
  - Notes and caveats
    - 1. Version compatibility
    - 2. Image issues
  - Installation steps
    - Requirements
    - Prepare the environment
    - Initial configuration (run on every machine)
    - Install CRI-O from source (run on every machine)
      - Install dependencies
      - Install CRI-O (install Go first)
      - Install conmon
      - Setup CNI networking
      - CRI-O configuration
      - (Optional) Validate registries in registries.conf
      - Starting CRI-O
      - Install crictl
    - Deploy Kubernetes with kubeadm
      - Add the Kubernetes package repository
      - Install kubeadm, kubelet and kubectl
      - Deploy the Kubernetes master [master node]
      - Join Kubernetes nodes [worker nodes]
      - Deploy the CNI network plugin
      - Test the Kubernetes cluster
  - Troubleshooting summary
    - 0. kubeadm init configuration file
    - 1. Problem: building CRI-O reports "go: command not found"
    - 2. Error: building CRI-O reports: cannot find package "." in:
    - 3. Error: cc: command not found
    - 3. Error: kubelet fails to start: Flag --cgroup-driver has been deprecated
    - 4. Error: kubeadm init cannot pull images
      - Solution
    - 5. Error: Error initializing source docker://k8s.gcr.io/pause:3.5:
    - 6. Error: "Error getting node" err="node \"node\" not found"
    - 7. Error: "Error getting node" err="node \"cnio-master\" not found"
    - 8. Error: [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist
      - Solution
    - 9. Error: invalid configuration for GroupVersionKind
    - 10. Error: kubectl get cs reports errors
    - 11. Error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.
    - 12. Error: Unable to connect to the server: x509: certificate signed by unknown authority
    - 13. Error: [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist
    - 14. Error: after installing the Calico network plugin, coredns stays in ContainerCreating
    - 15. Other places where images are configured, noted here for future reference
    - 16. Error: coredns stays in ContainerCreating: error adding seccomp rule for syscall socket: requested action matches default action of filter\""
  - Background notes
    - seccomp
    - make -j
  - References

- 参考文献


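Environment

OS: CentOS 7

cri-o: 1.21

go: 1.17

kubelet-1.21.2 kubeadm-1.21.2 kubectl-1.21.2

kubernetes: 1.21.0

runc: 1.0.2

All files needed for this experiment have been uploaded; download them yourself if you need them.

To be honest, I'm a bit confused about whether this really ends up using Calico networking!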

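Notes and caveats

1. Version compatibility

Choose the CRI-O version by consulting the official Kubernetes and CRI-O documentation on container runtimes.

CRI-O releases track Kubernetes minor versions (see the compatibility matrix in the CRI-O README). Since we are installing k8s 1.21, we pick cri-o 1.21.x.

Building CRI-O 1.21 requires Go 1.16 or later.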
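Since the CRI-O branch has to match the Kubernetes minor version, the mapping can be written down as a tiny helper. This is a minimal sketch, assuming CRI-O release branches are named release-<major>.<minor> (like the release-1.21 branch cloned later in this article); the function name crio_branch_for_k8s is hypothetical.

```shell
# Hypothetical helper: map a Kubernetes version (e.g. v1.21.2) to the
# CRI-O release branch with the same minor version (e.g. release-1.21).
crio_branch_for_k8s() {
  local v="${1#v}"                  # drop an optional leading "v"
  case $v in
    [0-9]*.[0-9]*) ;;               # looks like <major>.<minor>[...]
    *) echo "unrecognised Kubernetes version: $1" >&2; return 1 ;;
  esac
  local major="${v%%.*}"            # text before the first dot
  local rest="${v#*.}"              # text after the first dot
  local minor="${rest%%.*}"         # up to the next dot, if any
  echo "release-${major}.${minor}"
}
```

For example, crio_branch_for_k8s v1.21.0 prints release-1.21, which is the branch passed to git clone -b when CRI-O is built below.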

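2. Image issues

kubeadm's imageRepository setting controls where cluster initialization pulls the required k8s images from. The default is k8s.gcr.io, and most people change it to registry.aliyuncs.com/google_containers.

- With docker as the container runtime, you can pull the images from Aliyun and then simply docker tag them with the k8s.gcr.io prefix.

- With containerd as the container runtime, you can likewise change the image prefix with ctr.

- CRI-O cannot do this, so all images must share the same prefix. But the Aliyun mirror is incomplete: some images can only be pulled from elsewhere, which leaves you with two prefixes, and kubeadm init then runs into problems. The solution is to set up your own image registry, push all the required images to it, and use your registry address as the single prefix.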
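The single-prefix rule above can be made concrete with a small helper that shows which name each image must have in your own registry. A minimal sketch, assuming the private repository stores only the last path segment of each image (this matches the docker.io/ningan123/... names kubeadm lists later in this article, e.g. coredns/coredns becomes coredns); the function names are hypothetical.

```shell
# Hypothetical helper: move an upstream image reference under one private
# repository prefix, keeping only the last path segment ("<name>:<tag>").
# e.g. k8s.gcr.io/coredns/coredns:v1.8.0 -> docker.io/ningan123/coredns:v1.8.0
retarget_image() {
  local image="$1" repo="${2%/}"    # tolerate a trailing "/" on the repo
  echo "${repo}/${image##*/}"
}

# Rewrite every image read from stdin (one reference per line, blanks skipped).
retarget_all() {
  local repo="$1" img
  while read -r img; do
    if [ -n "$img" ]; then
      retarget_image "$img" "$repo"
    fi
  done
}
```

For example, kubeadm config images list --kubernetes-version v1.21.0 | retarget_all docker.io/ningan123 should print the same names that kubeadm config images list --config kubeadm-config.yaml shows once imageRepository points at your own registry.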

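Installation steps

Requirements

Before you start, the machines for the Kubernetes cluster must meet the following conditions:

- One or more machines running CentOS 7.x x86_64

- Hardware: 2 GB RAM or more, 2 or more CPUs, 30 GB disk or more (note: the master needs at least two cores)

- Internet access for pulling images; if the servers cannot reach the internet, download the images in advance and import them on each node

- Swap disabled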

Prepare the environment

| Role | IP |
|---|---|
| master | 192.168.56.153 |
| node1 | 192.168.56.154 |
| node2 | 192.168.56.155 |

Initial configuration (run on every machine)

# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux
# Permanently
sed -i 's/enforcing/disabled/' /etc/selinux/config
# Temporarily (current boot only)
setenforce 0

# Disable swap
# Temporarily
swapoff -a
# Permanently
sed -ri 's/.*swap.*/#&/' /etc/fstab
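The two sed commands above are not idempotent: s/enforcing/disabled/ also rewrites comment lines in /etc/selinux/config that merely mention "enforcing", and s/.*swap.*/#&/ adds another "#" every time it is re-run (and hits any line containing "swap"). A minimal sketch of narrower edits, assuming GNU sed and the stock CentOS 7 layouts of both files; the function names are hypothetical and take the file path so they can be tried on copies first.

```shell
# Change only the SELINUX= setting; comment lines that mention
# "enforcing" are left alone.
disable_selinux_in() {
  sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' "$1"
}

# Comment out uncommented lines that have "swap" as a whitespace-separated
# field; lines already starting with "#" are skipped, so a second run
# does not produce "##".
comment_out_swap_in() {
  sed -i -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$1"
}
```

On a real node this would be disable_selinux_in /etc/selinux/config and comment_out_swap_in /etc/fstab.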

# Set the hostname according to the plan [on the master node]
hostnamectl set-hostname crio-master
# Set the hostname according to the plan [on node1]
hostnamectl set-hostname crio-node1
# Set the hostname according to the plan [on node2]
hostnamectl set-hostname crio-node2

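# Add hosts entries on the master
cat >> /etc/hosts << EOF
192.168.56.153 crio-master
192.168.56.154 crio-node1
192.168.56.155 crio-node2
EOF

# A second set of entries with different IPs (apparently from another setup); add only the block that matches your own machines
cat >> /etc/hosts << EOF
192.168.56.142 crio-master
192.168.56.154 crio-node1
192.168.56.55 crio-node2
EOF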
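Running cat >> more than once (or running both blocks) leaves duplicate or conflicting lines in /etc/hosts. A minimal sketch of an append that skips names already present, assuming a standard "<ip> <name> ..." hosts layout; the function name add_host_entry is hypothetical. It only checks the hostname, so if a name is already mapped to a different IP it is skipped and you have to fix that by hand.

```shell
# Hypothetical helper: append "<ip> <hostname>" to a hosts file unless an
# uncommented line already maps that hostname. Dots in the hostname act as
# regex wildcards, which is fine for names like crio-master.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    echo "skip: $name already present in $file" >&2
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage on each machine: add_host_entry /etc/hosts 192.168.56.153 crio-master, and likewise for crio-node1 and crio-node2.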

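# Time synchronization
yum install ntpdate -y
ntpdate time.windows.com

# Kernel parameters
cat > /etc/sysctl.d/99-kubernetes-cri.conf <<EOF
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
vm.swappiness=0
EOF
modprobe overlay
modprobe br_netfilter
# modprobe only lasts until the next reboot; listing the modules here makes systemd load them at boot
cat > /etc/modules-load.d/k8s.conf <<EOF
overlay
br_netfilter
EOF
# Apply
sysctl --system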
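Errors 8 and 13 below both come down to these settings not being in effect, so it can help to double-check the file before running kubeadm. A minimal sketch; the function name check_sysctl_conf is hypothetical, and it only inspects the file, not the live kernel values (sysctl net.bridge.bridge-nf-call-iptables shows those, and only works once br_netfilter is loaded).

```shell
# Hypothetical helper: check that a sysctl drop-in sets the three settings
# written above to 1. Prints one line per problem; non-zero return if any.
check_sysctl_conf() {
  local file="$1" key got rc=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # Split on "=", strip blanks, keep the last value seen for this key.
    got=$(awk -F= -v k="$key" '
      { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
      $1 == k { v = $2 }
      END { print v }' "$file")
    if [ "$got" != "1" ]; then
      echo "$key is '${got:-unset}', expected 1"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: check_sysctl_conf /etc/sysctl.d/99-kubernetes-cri.conf prints nothing and returns 0 when the file is complete.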

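# Install ipvsadm and load the ipvs module at boot
yum install -y ipvsadm
# Quote 'EOF' so that $? is written into the file literally instead of being expanded by the current shell
cat > /etc/sysconfig/modules/ipvs.modules << 'EOF'
/sbin/modinfo -F filename ip_vs > /dev/null 2>&1
if [ $? -eq 0 ];then
/sbin/modprobe ip_vs
fi
EOF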

Install CRI-O from source (run on every machine)

Install dependencies

yum install -y \

containers-common \

device-mapper-devel \

git \

glib2-devel \

glibc-devel \

glibc-static \

go \

gpgme-devel \

libassuan-devel \

libgpg-error-devel \

libseccomp-devel \

libselinux-devel \

pkgconfig \

make \

runc

[root@crio-master ~]# runc -v

runc version spec: 1.0.1-dev

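Install CRI-O (install Go first)

Go must be installed first, and it has to be fairly recent; otherwise the build fails and make errors out.

CRI-O 1.21 needs Go 1.16 or later (see error 2 below); version 1.17 is used here.

# Install Go
wget https://dl.google.com/go/go1.17.linux-amd64.tar.gz
tar -xzf go1.17.linux-amd64.tar.gz -C /usr/local
ln -sf /usr/local/go/bin/* /usr/bin/ # create symlinks (ln -s [source] [target]); -f replaces an older /usr/bin/go, e.g. one installed by yum above
go version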

[root@crio-master ~]# go version

go version go1.17 linux/amd64
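Error 2 below was caused by an older Go (1.15.14), so it can help to check the version before running make. A minimal sketch, assuming GNU sort (for sort -V) and the usual "go version goX.Y linux/amd64" output; the function names are hypothetical.

```shell
# True if version $1 >= version $2, using GNU sort's version ordering.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Takes the output of "go version", e.g. "go version go1.17 linux/amd64",
# and succeeds if it is at least Go 1.16 (the minimum for CRI-O 1.21 here).
go_is_new_enough() {
  local v
  v=$(printf '%s\n' "$1" | sed -n -E 's/.*go([0-9]+(\.[0-9]+)+).*/\1/p')
  [ -n "$v" ] && version_ge "$v" "1.16"
}
```

Usage: go_is_new_enough "$(go version)" || echo "Go is too old to build CRI-O 1.21".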

# Install CRI-O
git clone -b release-1.21 https://github.com/cri-o/cri-o.git
cd cri-o
make # or make -j8 to build in parallel: lets make run up to 8 build commands at once, making better use of the CPUs
sudo make install

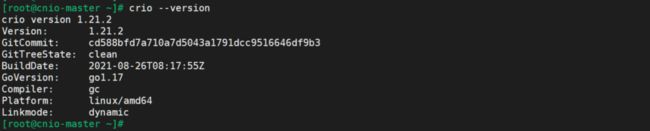

[root@crio-master ~]# crio -v

crio version 1.21.2

Version: 1.21.2

GitCommit: unknown

GitTreeState: unknown

BuildDate: 2021-09-03T07:28:21Z

GoVersion: go1.17

Compiler: gc

Platform: linux/amd64

Linkmode: dynamic

CRI-O's configuration file defaults to /etc/crio/crio.conf; you can generate a default configuration file with crio config --default > /etc/crio/crio.conf.

crio config --default > /etc/crio/crio.conf

Install conmon

git clone https://github.com/containers/conmon

cd conmon

make

sudo make install

Setup CNI networking

https://github.com/cri-o/cri-o/blob/master/contrib/cni/README.md

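# If the download fails, download the file locally and upload it
# Use the raw file URL: the github.com/.../blob/... page URL returns HTML, not the config file
# The directory may not exist yet, so create it first
mkdir -p /etc/cni/net.d
wget https://raw.githubusercontent.com/cri-o/cri-o/release-1.21/contrib/cni/10-crio-bridge.conf
cp 10-crio-bridge.conf /etc/cni/net.d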

git clone https://github.com/containernetworking/plugins

cd plugins

# git checkout v0.8.7

./build_linux.sh

sudo mkdir -p /opt/cni/bin

sudo cp bin/* /opt/cni/bin/

CRI-O configuration

If this is a first-time installation, generate and install the configuration files:

[root@crio-node2 cri-o]# make install.config

install -Z -d /usr/local/share/containers/oci/hooks.d

install -Z -d /etc/crio/crio.conf.d

install -Z -D -m 644 crio.conf /etc/crio/crio.conf

install -Z -D -m 644 crio-umount.conf /usr/local/share/oci-umount/oci-umount.d/crio-umount.conf

install -Z -D -m 644 crictl.yaml /etc

[root@crio-node2 cri-o]#

(Optional) Validate registries in registries.conf

Edit /etc/containers/registries.conf and verify that the registries option has valid values in it. For example:

[registries.search]

registries = ['registry.access.redhat.com', 'registry.fedoraproject.org', 'quay.io', 'docker.io']

[registries.insecure]

registries = []

[registries.block]

registries = []

Starting CRI-O

make install.systemd

[root@crio-master cri-o]# make install.systemd

install -Z -D -m 644 contrib/systemd/crio.service /usr/local/lib/systemd/system/crio.service

ln -sf crio.service /usr/local/lib/systemd/system/cri-o.service

install -Z -D -m 644 contrib/systemd/crio-shutdown.service /usr/local/lib/systemd/system/crio-shutdown.service

install -Z -D -m 644 contrib/systemd/crio-wipe.service /usr/local/lib/systemd/system/crio-wipe.service

[root@crio-master cri-o]#

sudo systemctl daemon-reload

sudo systemctl enable crio

sudo systemctl start crio

systemctl status crio

crio --version

Install crictl

Official installation instructions: https://github.com/kubernetes-sigs/cri-tools/

VERSION="v1.21.0"

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/$VERSION/crictl-$VERSION-linux-amd64.tar.gz

sudo tar zxvf crictl-$VERSION-linux-amd64.tar.gz -C /usr/local/bin

rm -f crictl-$VERSION-linux-amd64.tar.gz

crictl --runtime-endpoint unix:///var/run/crio/crio.sock version

Deploy Kubernetes with kubeadm

Add the Kubernetes package repository

Next, configure a yum repository for the k8s packages:

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

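Install kubeadm, kubelet and kubectl

You need to install the following packages on every machine:

- kubeadm: the command used to bootstrap the cluster.
- kubelet: runs on every node in the cluster and starts pods and containers.
- kubectl: the command-line tool for talking to the cluster.

kubeadm will not install or manage kubelet or kubectl for you, so you need to make sure their versions match the control plane that kubeadm installs. Otherwise you risk version skew, which can lead to unexpected errors and problems.

Because versions change frequently, we pin a specific version here: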

# Install kubelet, kubeadm and kubectl, pinning the version
# yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0
#yum install -y kubelet kubeadm kubectl
#yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0
yum install -y kubelet-1.21.2 kubeadm-1.21.2 kubectl-1.21.2

#### This step is important: point kubelet at the CRI-O socket
cat > /etc/sysconfig/kubelet <<EOF
KUBELET_EXTRA_ARGS=--container-runtime=remote --container-runtime-endpoint='unix:///var/run/crio/crio.sock'
EOF

# Enable at boot and start now
systemctl daemon-reload && systemctl enable kubelet --now
#systemctl status kubelet shows "activating" at this point: kubelet keeps restarting until kubeadm init/join has written its configuration
# View the logs with journalctl -xe

[root@crio-node2 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.2", GitCommit:"092fbfbf53427de67cac1e9fa54aaa09a28371d7", GitTreeState:"clean", BuildDate:"2021-06-16T12:57:56Z", GoVersion:"go1.16.5", Compiler:"gc", Platform:"linux/amd64"}

[root@crio-node2 ~]#

[root@crio-node2 ~]#

[root@crio-node2 ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.2", GitCommit:"092fbfbf53427de67cac1e9fa54aaa09a28371d7", GitTreeState:"clean", BuildDate:"2021-06-16T12:59:11Z", GoVersion:"go1.16.5", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@crio-node2 ~]#

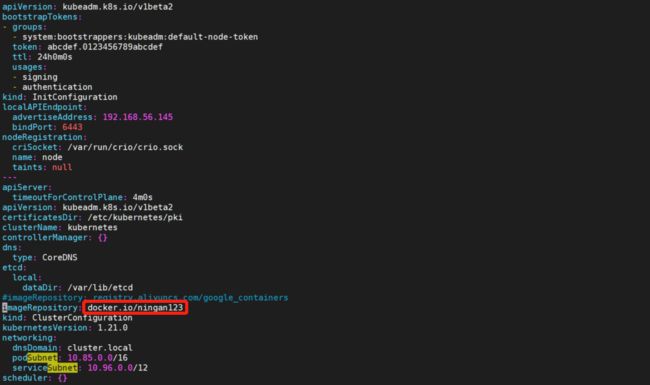

Deploy the Kubernetes master [master node]

Generate the kubeadm configuration file:

[root@crio-master ~]# kubeadm config print init-defaults > kubeadm-config.yaml

W0903 15:50:51.208437 16483 kubelet.go:210] cannot automatically set CgroupDriver when starting the Kubelet: cannot execute 'docker info -f {{.CgroupDriver}}': executable file not found in $PATH

Despite this warning, kubeadm-config.yaml is still generated.

# cat kubeadm-config.yaml   # as initially generated

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: node
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: 1.21.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}

# Modified version

apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.153
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/crio/crio.sock
  taints:
  - effect: PreferNoSchedule
    key: node-role.kubernetes.io/master
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.0
imageRepository: docker.io/ningan123
networking:
  podSubnet: 10.85.0.0/16

Note that podSubnet here must match the subnet in /etc/cni/net.d/10-crio-bridge.conf.
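To catch a mismatch before running kubeadm init, the two values can be compared directly. A minimal sketch, assuming the bridge config stores its IPv4 range as a "subnet": "a.b.c.d/n" entry (10.85.0.0/16 in 10-crio-bridge.conf, matching the podSubnet above) and that kubeadm-config.yaml has a single podSubnet line; the function names are hypothetical, and this is plain text matching, not a real JSON or YAML parser.

```shell
# First IPv4 "subnet" value in a CNI bridge config.
bridge_subnet() {
  grep -oE '"subnet"[[:space:]]*:[[:space:]]*"[0-9.]+/[0-9]+"' "$1" \
    | head -n 1 | grep -oE '[0-9.]+/[0-9]+'
}

# podSubnet value from a kubeadm config file.
pod_subnet() {
  sed -n -E 's/^[[:space:]]*podSubnet:[[:space:]]*([^[:space:]]+).*/\1/p' "$1" | head -n 1
}

# $1: kubeadm config, $2: CNI bridge config. Non-zero return on mismatch.
check_pod_subnet() {
  local a b
  a=$(pod_subnet "$1")
  b=$(bridge_subnet "$2")
  if [ -n "$a" ] && [ "$a" = "$b" ]; then
    echo "ok: $a"
  else
    echo "mismatch: podSubnet=${a:-unset} bridge=${b:-unset}"
    return 1
  fi
}
```

Usage: check_pod_subnet kubeadm-config.yaml /etc/cni/net.d/10-crio-bridge.conf. Note that the "second deployment" config in the troubleshooting section uses podSubnet 10.244.0.0/16, which this check would report as a mismatch.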

controlPlaneEndpoint must be added when you set up high availability; ideally point it at a virtual IP.

Use kubeadm config images list to see the images kubeadm needs.

criSocket must be set to CRI-O's socket file.

Change imageRepository to your own image registry. If you don't, go ahead and try it; you will hit an error later and it will all make sense!

As explained in the image-issues section above, CRI-O cannot retag images the way docker tag or ctr can, so every image must come from the same prefix. The Aliyun mirror is incomplete (for example, it has no coredns:v1.8.0), so some images have to come from elsewhere, which leaves two prefixes and breaks kubeadm init. The fix is to run your own image registry, push all the required images to it, and use its address as the single prefix.

Before initializing the cluster, you can run kubeadm config images pull --config kubeadm-config.yaml on each node to pre-pull the container images k8s needs.

[root@crio-master ~]# kubeadm config images list

I0903 15:57:47.465274 16788 version.go:254] remote version is much newer: v1.22.1; falling back to: stable-1.21

k8s.gcr.io/kube-apiserver:v1.21.4

k8s.gcr.io/kube-controller-manager:v1.21.4

k8s.gcr.io/kube-scheduler:v1.21.4

k8s.gcr.io/kube-proxy:v1.21.4

k8s.gcr.io/pause:3.4.1

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns/coredns:v1.8.0

As you can see, images are pulled from k8s.gcr.io by default.

[root@crio-master ~]# kubeadm config images list --config kubeadm-config.yaml

docker.io/ningan123/kube-apiserver:v1.21.0

docker.io/ningan123/kube-controller-manager:v1.21.0

docker.io/ningan123/kube-scheduler:v1.21.0

docker.io/ningan123/kube-proxy:v1.21.0

docker.io/ningan123/pause:3.4.1

docker.io/ningan123/etcd:3.4.13-0

docker.io/ningan123/coredns:v1.8.0

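Because the image repository in the configuration file is docker.io/ningan123, the images listed here are in my own registry.

You can first push the images listed here to your own registry; for how, see my error "kubeadm init cannot pull images" below.

Edit the configuration in /etc/crio/crio.conf. (Remember to change it on every node; otherwise you get problems you can puzzle over forever. I really spent ages debugging this until one day the solution suddenly came to me. Happy!)

CRI-O's configuration file defaults to /etc/crio/crio.conf; you can generate a default configuration file with crio config --default > /etc/crio/crio.conf.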

crio config --default > /etc/crio/crio.conf

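# From the original blog post (both keys are in the [crio.image] section)
insecure_registries = ["registry.prod.bbdops.com"]
pause_image = "registry.prod.bbdops.com/common/pause:3.2"

# My configuration: changed to my own image registry
insecure_registries = ["docker.io"]
pause_image = "docker.io/ningan123/pause:3.5"

# Remember to restart after editing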

[root@crio-master ~]# systemctl daemon-reload

[root@crio-master ~]# systemctl restart crio

[root@crio-master ~]# systemctl status crio
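Because this edit has to be repeated on every node, it may be easier to keep it in a small drop-in file than to hand-edit crio.conf each time. The sketch below is an alternative to the in-place edit, assuming CRI-O reads overrides from /etc/crio/crio.conf.d/ (the directory make install.config creates above) and that both keys belong to the [crio.image] table; the file name 01-image.conf and the function name are hypothetical. Also make sure the pause tag you reference (3.5 here) actually exists in your registry; the push list in error 4 below only includes pause:3.4.1.

```shell
# Hypothetical helper: write a CRI-O drop-in overriding the pause image
# and the insecure registry list.
#   $1 drop-in directory (normally /etc/crio/crio.conf.d)
#   $2 pause image, $3 registry to list under insecure_registries
write_crio_image_dropin() {
  local dir="$1" pause="$2" registry="$3"
  mkdir -p "$dir" || return 1
  cat > "$dir/01-image.conf" <<EOF
[crio.image]
pause_image = "${pause}"
insecure_registries = ["${registry}"]
EOF
}
```

Usage on each node: write_crio_image_dropin /etc/crio/crio.conf.d docker.io/ningan123/pause:3.5 docker.io, then systemctl restart crio.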

Deploy:

[root@crio-master ~]# kubeadm init --config kubeadm-config.yaml

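Because the images have to be pulled, this takes a while; you can pull them in advance.

The command for joining nodes to the cluster (printed by kubeadm init):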

kubeadm join 192.168.56.142:6443 --token fvfg1j.ri0yl3hi80d3bv6o \

--discovery-token-ca-cert-hash sha256:b7d05f887a406c28da9eebb5b519207d4839373295454dd93b225da015d6b6fe

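Using the kubectl tool [on the master node]

Run the following commands to configure the context for the kubectl client. The file **/etc/kubernetes/admin.conf** carries full cluster-administrator privileges.

# If you ran init before, delete the old directory first: rm -rf $HOME/.kube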

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

When that is done, use the following commands to look at the running nodes:

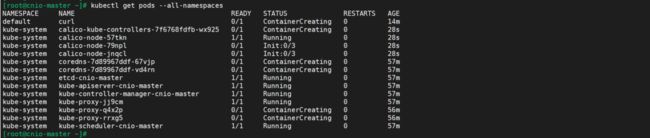

kubectl get nodes

kubectl get pods --all-namespaces -o wide

kubectl describe pod coredns-7d89967ddf-4qjvl -n kube-system

kubectl describe pod coredns-7d89967ddf-6md2p -n kube-system

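I don't know why the nodes are already Ready; is it because a network plugin was set up earlier?? (Most likely yes: the bridge CNI config 10-crio-bridge.conf copied into /etc/cni/net.d above already gives CRI-O a working pod network.)

If coredns stays in the ContainerCreating state, check the status of that coredns pod:

kubectl describe pod <pod-name> -n kube-system

If it shows the error: error adding seccomp rule for syscall socket: requested action matches default action of filter""

follow the solution below: upgrading runc fixes it.

Check the cluster status; if it is abnormal, see the error solutions below:

kubectl get cs

Four namespaces are created by default.

All k8s system components are placed in the kube-system namespace.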

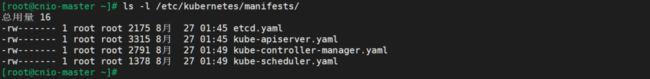

Note: etcd, kube-apiserver, kube-controller-manager and kube-scheduler are deployed as static pods. Their manifests live in **/etc/kubernetes/manifests** on the host, and kubelet automatically loads this directory and starts the pods.

ls -l /etc/kubernetes/manifests/

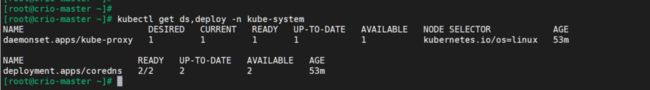

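coredns is deployed as a Deployment, while kube-proxy runs as a DaemonSet.

Join Kubernetes nodes [worker nodes]

Next, go to the node1 and node2 servers and run the following to add them to the cluster.

Run the kubeadm join command printed by kubeadm init.

Note: this command is generated when the master finishes initializing and is different for everyone!!! Copy the one generated for you.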

kubeadm join 192.168.56.142:6443 --token fvfg1j.ri0yl3hi80d3bv6o \

--discovery-token-ca-cert-hash sha256:b7d05f887a406c28da9eebb5b519207d4839373295454dd93b225da015d6b6fe

If it reports that something already exists ("XXX exists"), run kubeadm reset first:

kubeadm reset && kubeadm join 192.168.56.142:6443 --token fvfg1j.ri0yl3hi80d3bv6o \

--discovery-token-ca-cert-hash sha256:b7d05f887a406c28da9eebb5b519207d4839373295454dd93b225da015d6b6fe

The token is valid for 24 hours by default; after it expires it can no longer be used, and you need to create a new one:

kubeadm token create --print-join-command
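If you create a token with plain kubeadm token create (without --print-join-command), you also need the value for --discovery-token-ca-cert-hash. The kubeadm join documentation computes it from the cluster CA with an openssl pipeline; the sketch below only wraps that pipeline in a function (the function name is hypothetical, and it assumes an RSA CA key, which is what kubeadm generates by default).

```shell
# Hypothetical wrapper around the openssl pipeline from the kubeadm docs:
# SHA-256 of the CA certificate's DER-encoded public key.
# $1: CA certificate (defaults to kubeadm's location on the master).
ca_cert_hash() {
  openssl x509 -pubkey -in "${1:-/etc/kubernetes/pki/ca.crt}" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Usage on the master: echo "--discovery-token-ca-cert-hash sha256:$(ca_cert_hash)".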

Once both nodes have joined, go to the master node and check the result with:

kubectl get node

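Deploy the CNI network plugin

The nodes are already Ready without deploying one. A mystery!

Probably because CNI was already configured above (the CRI-O bridge config in /etc/cni/net.d).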

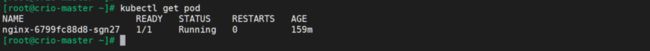

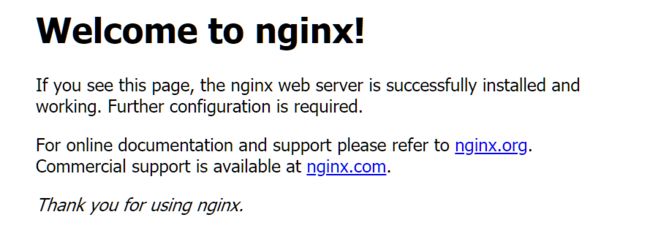

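Test the Kubernetes cluster

As we all know, K8S is a container technology: it can pull images over the network and start them as containers.

Create a pod in the Kubernetes cluster to verify that it runs correctly:

# Create an nginx deployment [pulls the nginx image from the internet]
kubectl create deployment nginx --image=nginx
# Check the status
kubectl get pod -o wide

Once it shows Running, the pod is up.

Next, expose the port so the outside world can reach it:

# Expose the port
kubectl expose deployment nginx --port=80 --type=NodePort
# Check the external port
kubectl get pod,svc

You can see that port 80 has been exposed on NodePort 30529. The NodePort is assigned randomly, so use the one shown in your own output in the URL below.

Open the following address in a browser on the host machine:

http://192.168.56.154:30318/

nginx has started successfully.

At this point we have built a single-master k8s cluster.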
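The NodePort can also be picked out of the kubectl output in a script, for example to build the URL above. A minimal sketch, assuming kubectl's default column layout (NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE); the function name nodeport_for is hypothetical. If you prefer not to parse text, kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}' returns the same number directly.

```shell
# Hypothetical helper: read "kubectl get svc" or "kubectl get pod,svc"
# output on stdin and print the NodePort of service $1.
nodeport_for() {
  awk -v svc="$1" '
    $1 == svc || $1 == "service/" svc {
      n = split($5, parts, "[:/]")   # "80:30529/TCP" -> 80, 30529, TCP
      if (n >= 3) print parts[2]
    }'
}
```

Usage: curl "http://192.168.56.154:$(kubectl get pod,svc | nodeport_for nginx)/".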

Troubleshooting summary

0. kubeadm init configuration file

# Used for the second deployment

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.153
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/crio/crio.sock
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: docker.io/ningan123
kind: ClusterConfiguration
kubernetesVersion: 1.21.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

1. Problem: building CRI-O reports "go: command not found"

Install the Go language environment.


2. Error: building CRI-O reports: cannot find package "." in:

/root/cri-o/vendor/archive/tar

[root@cnio-master cri-o]# make

go build -trimpath -ldflags '-s -w -X github.com/cri-o/cri-o/internal/pkg/criocli.DefaultsPath="" -X github.com/cri-o/cri-o/internal/version.buildDate='2021-08-26T07:31:09Z' -X github.com/cri-o/cri-o/internal/version.gitCommit=87d9f169dad21a9049f25e47c3c8f78635bea340 -X github.com/cri-o/cri-o/internal/version.gitTreeState=clean ' -tags "containers_image_ostree_stub exclude_graphdriver_btrfs btrfs_noversion containers_image_openpgp seccomp selinux " -o bin/crio github.com/cri-o/cri-o/cmd/crio

vendor/github.com/containers/storage/pkg/archive/archive.go:4:2: cannot find package "." in:

/root/cri-o/vendor/archive/tar

vendor/github.com/containers/storage/pkg/archive/archive.go:5:2: cannot find package "." in:

/root/cri-o/vendor/bufio

vendor/github.com/containers/storage/layers.go:4:2: cannot find package "." in:

/root/cri-o/vendor/bytes

vendor/github.com/containers/storage/pkg/archive/archive.go:7:2: cannot find package "." in:

/root/cri-o/vendor/compress/bzip2

vendor/golang.org/x/crypto/openpgp/packet/compressed.go:9:2: cannot find package "." in:

/root/cri-o/vendor/compress/flate

vendor/golang.org/x/net/http2/transport.go:12:2: cannot find package "." in:

/root/cri-o/vendor/compress/gzip

vendor/golang.org/x/crypto/openpgp/packet/compressed.go:10:2: cannot find package "." in:

/root/cri-o/vendor/compress/zlib

vendor/github.com/vbauerster/mpb/v7/progress.go:5:2: cannot find package "." in:

/root/cri-o/vendor/container/heap

vendor/github.com/containers/storage/drivers/copy/copy_linux.go:14:2: cannot find package "." in:

/root/cri-o/vendor/container/list

cmd/crio/main.go:4:2: cannot find package "." in:

/root/cri-o/vendor/context

vendor/github.com/opencontainers/go-digest/algorithm.go:19:2: cannot find package "." in:

/root/cri-o/vendor/crypto

Solution:

Go was too old: it was 1.15.14, and 1.16 or later is required.

References:

An issue on the official CRI-O GitHub repository

Installing, configuring, upgrading and uninstalling Go on Linux (Go语言中文网 / Golang中文社区, studygolang.com)


[root@cnio-master cri-o]# make -j8

go build -ldflags '-s -w -X github.com/cri-o/cri-o/internal/pkg/criocli.DefaultsPath="" -X github.com/cri-o/cri-o/internal/version.buildDate='2021-08-26T08:17:55Z' -X github.com/cri-o/cri-o/internal/version.gitCommit=cd588bfd7a710a7d5043a1791dcc9516646df9b3 -X github.com/cri-o/cri-o/internal/version.gitTreeState=clean ' -tags "containers_image_ostree_stub exclude_graphdriver_btrfs btrfs_noversion seccomp selinux" -o bin/crio github.com/cri-o/cri-o/cmd/crio

go build -ldflags '-s -w -X github.com/cri-o/cri-o/internal/pkg/criocli.DefaultsPath="" -X github.com/cri-o/cri-o/internal/version.buildDate='2021-08-26T08:17:55Z' -X github.com/cri-o/cri-o/internal/version.gitCommit=cd588bfd7a710a7d5043a1791dcc9516646df9b3 -X github.com/cri-o/cri-o/internal/version.gitTreeState=clean ' -tags "containers_image_ostree_stub exclude_graphdriver_btrfs btrfs_noversion seccomp selinux" -o bin/crio-status github.com/cri-o/cri-o/cmd/crio-status

make -C pinns

make[1]: Entering directory '/root/cri-o/pinns'
make[1]: Nothing to be done for 'all'.
make[1]: Leaving directory '/root/cri-o/pinns'

(/root/cri-o/build/bin/go-md2man -in docs/crio-status.8.md -out docs/crio-status.8.tmp && touch docs/crio-status.8.tmp && mv docs/crio-status.8.tmp docs/crio-status.8) || \

(/root/cri-o/build/bin/go-md2man -in docs/crio-status.8.md -out docs/crio-status.8.tmp && touch docs/crio-status.8.tmp && mv docs/crio-status.8.tmp docs/crio-status.8)

(/root/cri-o/build/bin/go-md2man -in docs/crio.conf.5.md -out docs/crio.conf.5.tmp && touch docs/crio.conf.5.tmp && mv docs/crio.conf.5.tmp docs/crio.conf.5) || \

(/root/cri-o/build/bin/go-md2man -in docs/crio.conf.5.md -out docs/crio.conf.5.tmp && touch docs/crio.conf.5.tmp && mv docs/crio.conf.5.tmp docs/crio.conf.5)

(/root/cri-o/build/bin/go-md2man -in docs/crio.conf.d.5.md -out docs/crio.conf.d.5.tmp && touch docs/crio.conf.d.5.tmp && mv docs/crio.conf.d.5.tmp docs/crio.conf.d.5) || \

(/root/cri-o/build/bin/go-md2man -in docs/crio.conf.d.5.md -out docs/crio.conf.d.5.tmp && touch docs/crio.conf.d.5.tmp && mv docs/crio.conf.d.5.tmp docs/crio.conf.d.5)

(/root/cri-o/build/bin/go-md2man -in docs/crio.8.md -out docs/crio.8.tmp && touch docs/crio.8.tmp && mv docs/crio.8.tmp docs/crio.8) || \

(/root/cri-o/build/bin/go-md2man -in docs/crio.8.md -out docs/crio.8.tmp && touch docs/crio.8.tmp && mv docs/crio.8.tmp docs/crio.8)

./bin/crio -d "" --config="" config > crio.conf

INFO[2021-08-26 16:20:36.694730863+08:00] Starting CRI-O, version: 1.21.2, git: cd588bfd7a710a7d5043a1791dcc9516646df9b3(clean)

INFO Using default capabilities: CAP_CHOWN, CAP_DAC_OVERRIDE, CAP_FSETID, CAP_FOWNER, CAP_SETGID, CAP_SETUID, CAP_SETPCAP, CAP_NET_BIND_SERVICE, CAP_KILL

[root@cnio-master cri-o]#

It compiles. Great~

I really spent ages debugging this.

3. Error: cc: command not found

[root@crio-node2 cri-o]# make -j8

mkdir -p "/root/cri-o/_output/src/github.com/cri-o"

make -C pinns

make[1]: Entering directory '/root/cri-o/pinns'
cc -std=c99 -Os -Wall -Werror -Wextra -static -O3 -o src/sysctl.o -c src/sysctl.c
make[1]: cc: Command not found
make[1]: *** [src/sysctl.o] Error 127
make[1]: Leaving directory '/root/cri-o/pinns'
ln -s "/root/cri-o" "/root/cri-o/_output/src/github.com/cri-o/cri-o"
make: *** [bin/pinns] Error 2
make: *** Waiting for unfinished jobs....

touch "/root/cri-o/_output/.gopathok"

# Installing gcc fixes it

yum install -y gcc

3. Error: kubelet fails to start: Flag --cgroup-driver has been deprecated

Aug 26 17:46:41 cnio-master kubelet[29519]: Flag --cgroup-driver has been deprecated, This parameter should be set via the config file specified by the Kubelet's --config flag. See https://kubernetes.io/docs/task
Aug 26 17:46:41 cnio-master kubelet[29519]: Flag --runtime-request-timeout has been deprecated, This parameter should be set via the config file specified by the Kubelet's --config flag. See https://kubernetes.io
Aug 26 17:46:41 cnio-master kubelet[29519]: E0826 17:46:41.446034 29519 server.go:204] "Failed to load kubelet config file" err="failed to load Kubelet config file /var/lib/kubelet/config.yaml, error failed to
Aug 26 17:46:41 cnio-master systemd[1]: kubelet.service: main process exited, code=exited, status=1/FAILURE

Some of the flags are deprecated. (The two "deprecated" lines are only warnings, though. The line that actually stops kubelet is "Failed to load kubelet config file /var/lib/kubelet/config.yaml"; that file is written by kubeadm init/join, so kubelet exiting and restarting before then is expected.)

# Change the configuration and restart

cat > /etc/sysconfig/kubelet <<EOF

KUBELET_EXTRA_ARGS=--container-runtime=remote --container-runtime-endpoint='unix:///var/run/crio/crio.sock'

EOF

# Enable at boot and start now

systemctl daemon-reload && systemctl enable kubelet --now

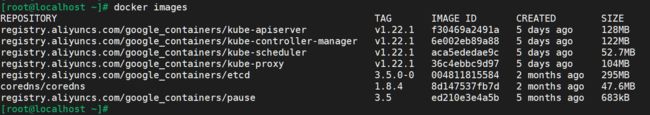

4. Error: kubeadm init cannot pull images

[ERROR ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/coredns:v1.8.0:

# Error message

[ERROR ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/coredns:v1.8.0: output: time="2021-08-26T19:09:02+08:00" level=fatal msg="pulling image: rpc error: code = Unknowror reading manifest v1.8.0 in registry.aliyuncs.com/google_containers/coredns: manifest unknown: manifest unknown"

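The Aliyun mirror does not have:

coredns:v1.8.0 (needed by 1.21)

coredns:v1.8.4 (needed by 1.22)

So switch to your own registry address and push all the required images to your own server.

How do you figure out which images to push?

Run the following command; the errors it reports tell you which images you need to push: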

kubeadm init --config kubeadm-config.yaml --upload-certs

After switching to your own registry:

kubeadm config images list

kubeadm config images list --config kubeadm-config.yaml

Solution

I created my own repository on Docker Hub, named ningan123.

# On another host that has docker installed; call it AAA

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.21.0

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.21.0

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.21.0

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.21.0

docker pull registry.aliyuncs.com/google_containers/pause:3.4.1

docker pull registry.aliyuncs.com/google_containers/etcd:3.4.13-0

#docker pull registry.aliyuncs.com/google_containers/coredns:v1.8.0   not available in this mirror
#docker pull networkman/coredns:v1.8.0
#docker pull docker.io/coredns/coredns:v1.8.0   there is no v1.8.0 tag, only 1.8.0
docker pull docker.io/coredns/coredns:1.8.0

# On AAA

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.21.0 ningan123/kube-apiserver:v1.21.0

docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.21.0 ningan123/kube-controller-manager:v1.21.0

docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.21.0 ningan123/kube-scheduler:v1.21.0

docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.21.0 ningan123/kube-proxy:v1.21.0

docker tag registry.aliyuncs.com/google_containers/pause:3.4.1 ningan123/pause:3.4.1

docker tag registry.aliyuncs.com/google_containers/etcd:3.4.13-0 ningan123/etcd:3.4.13-0

docker tag docker.io/coredns/coredns:1.8.0 ningan123/coredns:v1.8.0

# On AAA

docker push ningan123/kube-apiserver:v1.21.0

docker push ningan123/kube-controller-manager:v1.21.0

docker push ningan123/kube-scheduler:v1.21.0

docker push ningan123/kube-proxy:v1.21.0

docker push ningan123/pause:3.4.1

docker push ningan123/etcd:3.4.13-0

docker push ningan123/coredns:v1.8.0

# Pull the images on the master (it seems to work without a username and password too)
# DOCKERHUB_PASSWORD is a placeholder for your own Docker Hub password; --creds goes before the image name
crictl pull --creds "ningan123:${DOCKERHUB_PASSWORD}" docker.io/ningan123/kube-apiserver:v1.21.0
crictl pull --creds "ningan123:${DOCKERHUB_PASSWORD}" docker.io/ningan123/kube-controller-manager:v1.21.0
crictl pull --creds "ningan123:${DOCKERHUB_PASSWORD}" docker.io/ningan123/kube-scheduler:v1.21.0
crictl pull --creds "ningan123:${DOCKERHUB_PASSWORD}" docker.io/ningan123/kube-proxy:v1.21.0
crictl pull --creds "ningan123:${DOCKERHUB_PASSWORD}" docker.io/ningan123/pause:3.4.1
crictl pull --creds "ningan123:${DOCKERHUB_PASSWORD}" docker.io/ningan123/etcd:3.4.13-0
crictl pull --creds "ningan123:${DOCKERHUB_PASSWORD}" docker.io/ningan123/coredns:v1.8.0
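Writing the pull, tag and push commands by hand for every image is easy to get out of sync. A minimal sketch that generates all three commands from one list and only prints them, so you can review the output before running it on host AAA; the function name and the IMAGE_MAP variable are hypothetical, and the list simply mirrors the images used in this article.

```shell
# Hypothetical helper: print (not run) the pull/tag/push commands that copy
# each "<source image> <target name:tag>" pair into repository $1.
mirror_cmds() {
  local repo="$1" src dst
  while read -r src dst; do
    case $src in ''|'#'*) continue ;; esac   # skip blank and comment lines
    printf 'docker pull %s\n' "$src"
    printf 'docker tag %s %s/%s\n' "$src" "$repo" "$dst"
    printf 'docker push %s/%s\n' "$repo" "$dst"
  done
}

# Images used in this article (coredns is 1.8.0 upstream, v1.8.0 in the target).
IMAGE_MAP='registry.aliyuncs.com/google_containers/kube-apiserver:v1.21.0 kube-apiserver:v1.21.0
registry.aliyuncs.com/google_containers/kube-controller-manager:v1.21.0 kube-controller-manager:v1.21.0
registry.aliyuncs.com/google_containers/kube-scheduler:v1.21.0 kube-scheduler:v1.21.0
registry.aliyuncs.com/google_containers/kube-proxy:v1.21.0 kube-proxy:v1.21.0
registry.aliyuncs.com/google_containers/pause:3.4.1 pause:3.4.1
registry.aliyuncs.com/google_containers/etcd:3.4.13-0 etcd:3.4.13-0
docker.io/coredns/coredns:1.8.0 coredns:v1.8.0'
```

Usage: printf '%s\n' "$IMAGE_MAP" | mirror_cmds ningan123 prints 21 commands; append | sh to actually run them on AAA.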

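5. Error: Error initializing source docker://k8s.gcr.io/pause:3.5:

Error initializing source docker://k8s.gcr.io/pause:3.5: error pinging docker registry k8s.gcr.io: Get "https://k8s.gcr.io/v2/": dial tcp 64.233.188.82:443: connect: connection refused"

CRI-O pulls the pause (sandbox) image itself, using the pause_image setting in its own configuration, so kubeadm's imageRepository does not change where it comes from. Edit the configuration in /etc/crio/crio.conf. (Remember to change it on every node; otherwise you get problems you can puzzle over forever. I really spent ages on this until one day the solution suddenly came to me. Happy!)

# From the original blog post
insecure_registries = ["registry.prod.bbdops.com"]
pause_image = "registry.prod.bbdops.com/common/pause:3.2"

# My configuration: changed to my own image registry
insecure_registries = ["docker.io"]
pause_image = "docker.io/ningan123/pause:3.5"

# Remember to restart after editing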

[root@crio-master ~]# systemctl daemon-reload

[root@crio-master ~]# systemctl restart crio

[root@crio-master ~]# systemctl status crio

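6. Error: "Error getting node" err="node \"node\" not found"

Comment out the name: node line under nodeRegistration in kubeadm-config.yaml; without it, the node name defaults to the machine's hostname.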
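The edit can be scripted with sed. A minimal sketch, assuming GNU sed (-i and -E); the function name is hypothetical. It only touches a line consisting exactly of name: node, so keys such as clusterName are left alone.

```shell
# Hypothetical helper: comment out a literal "name: node" line in a kubeadm
# config, keeping its indentation.
comment_out_node_name() {
  sed -i -E 's/^([[:space:]]*)name: node[[:space:]]*$/\1# name: node/' "$1"
}
```

Usage: comment_out_node_name kubeadm-config.yaml, then run kubeadm init --config kubeadm-config.yaml again.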

7. Error: "Error getting node" err="node \"cnio-master\" not found"

Add the hostname-to-IP mapping to /etc/hosts.

8. Error: [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

[preflight] If you know what you are doing, you can make a check non-fatal with --ignore-preflight-errors=...

Solution

[root@cnio-master ~]# cat > /etc/sysctl.d/99-kubernetes-cri.conf <<EOF

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> net.bridge.bridge-nf-call-ip6tables = 1

> vm.swappiness=0

> EOF

# vm.swappiness had not been added before

[root@cnio-master ~]# modprobe br_netfilter

[root@cnio-master ~]#

[root@cnio-master ~]#

[root@cnio-master ~]# sysctl -p /etc/sysctl.d/99-kubernetes-cri.conf

9. Error: invalid configuration for GroupVersionKind

[root@cnio-master ~]# kubeadm reset && kubeadm init --config kubeadm-config.yaml --upload-certs

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

[reset] Removing info for node "cnio-master" from the ConfigMap "kubeadm-config" in the "kube-system" Namespace

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/etcd /var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

invalid configuration for GroupVersionKind /, Kind=: kind and apiVersion is mandatory information that must be specified

To see the stack trace of this error execute with --v=5 or higher

[root@cnio-master ~]#

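Cause: my configuration file had been edited incorrectly.

# 1 The problematic configuration file; it makes only small changes to the originally generated file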

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.145
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/crio/crio.sock
  #name: node
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
#imageRepository: registry.aliyuncs.com/google_containers
imageRepository: docker.io/ningan123
kind: ClusterConfiguration
kubernetesVersion: 1.21.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.85.0.0/16
  serviceSubnet: 10.96.0.0/16
scheduler: {}

# 2 This file is the one above with the bootstrapTokens section removed; it completes successfully

apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.145
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/crio/crio.sock
  name: node
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: docker.io/ningan123
kind: ClusterConfiguration
kubernetesVersion: 1.21.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.85.0.0/16
  serviceSubnet: 10.96.0.0/16
scheduler: {}

# Someone else's format; this one also completes successfully

apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.145
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/crio/crio.sock
  taints:
  - effect: PreferNoSchedule
    key: node-role.kubernetes.io/master
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.0
imageRepository: docker.io/ningan123
networking:
  podSubnet: 10.85.0.0/16

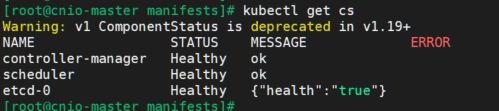

10. Error: kubectl get cs reports errors

[root@cnio-master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

etcd-0 Healthy {"health":"true"}

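controller-manager and scheduler are reported as Unhealthy. In 1.21, kubeadm starts both with --port=0. That flag turns off the insecure HTTP ports 10251/10252, which are exactly what the deprecated ComponentStatus check probes, so the components themselves are usually fine. To make the check pass, edit the static Pod manifests kube-controller-manager.yaml and kube-scheduler.yaml under /etc/kubernetes/manifests/. Delete the line - --port=0 from the command options in both files, then restart kubelet. Checking again, everything is healthy.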
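The manual edit can be scripted. This is a minimal sketch, assuming the kubeadm default manifest layout; the function name is hypothetical. The backups deliberately go to a separate directory. kubelet treats files in /etc/kubernetes/manifests as Pod manifests, so a copy left there may be started as an extra static Pod.

```shell
# Hypothetical helper: delete the "- --port=0" argument from the scheduler and
# controller-manager static Pod manifests, keeping copies in a separate directory.
enable_insecure_health_ports() {
  local dir="${1:-/etc/kubernetes/manifests}"
  # Backups live OUTSIDE the manifests directory: kubelet may try to run any file there.
  local backup="${2:-/etc/kubernetes/manifests-backup}"
  local f
  mkdir -p "$backup" || return 1
  for f in kube-scheduler.yaml kube-controller-manager.yaml; do
    [ -f "$dir/$f" ] || { echo "missing: $dir/$f" >&2; return 1; }
    cp "$dir/$f" "$backup/$f" || return 1
    # Only a list item that is exactly "--port=0" is removed; other flags stay.
    sed -i '/^[[:space:]]*- --port=0[[:space:]]*$/d' "$dir/$f" || return 1
  done
}

# Usage:
#   enable_insecure_health_ports && systemctl restart kubelet
#   kubectl get cs
```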

11. Error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

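Method 1: as with the previous error, check the cluster status; some cluster components are probably not running properly. Port 10248 is kubelet's local health endpoint, so this error means kubelet itself is not running or keeps crashing. systemctl status kubelet and journalctl -u kubelet show why.

Method 2: reboot. After the reboot, error 8 shows up, because modules loaded by hand with modprobe do not survive a reboot. Fix it with the commands below. (I spent a whole morning on this, and in the end a reboot was what solved it. What can I say?)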

modprobe overlay

modprobe br_netfilter

# apply: reload sysctl settings from all system configuration files

sysctl --system

Then run kubeadm join again, and it works.
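modprobe and sysctl only change the running kernel, which is why the problem comes back after a reboot. To make the settings permanent, write them to modules-load.d and sysctl.d, as the upstream Kubernetes container-runtime prerequisites suggest. Below is a sketch. The function name is hypothetical. The optional root argument exists only so the files can be previewed in a scratch directory.

```shell
# Hypothetical helper: persist the modules and sysctls from the upstream
# container-runtime prerequisites so they are applied again at every boot.
persist_k8s_net_settings() {
  local root="${1:-/}"
  root="${root%/}"    # "/" becomes "", so paths below start with /etc
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d" || return 1
  # Loaded at boot by systemd-modules-load
  printf '%s\n' overlay br_netfilter > "$root/etc/modules-load.d/k8s.conf"
  # Applied at boot by systemd-sysctl, and right away by "sysctl --system"
  printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables  = 1' \
    'net.bridge.bridge-nf-call-ip6tables = 1' \
    'net.ipv4.ip_forward                 = 1' > "$root/etc/sysctl.d/k8s.conf"
}

# Usage (as root):
#   persist_k8s_net_settings && modprobe overlay && modprobe br_netfilter && sysctl --system
```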

12. Error: Unable to connect to the server: x509: certificate signed by unknown authority

[root@cnio-master ~]# kubectl get node

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")
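No fix was recorded for this one. A common cause is a ~/.kube/config left over from a previous cluster, assuming the cluster was re-created with kubeadm reset and kubeadm init. The new init generates a new CA, but the old kubeconfig still trusts the old one. The sketch below compares the CA embedded in the kubeconfig with /etc/kubernetes/pki/ca.crt (the function name is hypothetical). It assumes the kubeconfig embeds the CA as certificate-authority-data, as kubeadm's admin.conf does.

```shell
# Hypothetical helper: succeed if the CA embedded in a kubeconfig is byte-for-byte
# the cluster CA on disk; non-zero on mismatch, 2 if no embedded CA is found.
kubeconfig_ca_matches() {
  local kubeconfig="${1:-$HOME/.kube/config}"
  local ca="${2:-/etc/kubernetes/pki/ca.crt}"
  local data
  # First certificate-authority-data entry; assumes a single-cluster kubeconfig.
  data=$(awk '/certificate-authority-data:/ {print $2; exit}' "$kubeconfig") || return 2
  [ -n "$data" ] || { echo "no certificate-authority-data in $kubeconfig" >&2; return 2; }
  printf '%s' "$data" | base64 -d | cmp -s - "$ca"
}

# Usage: replace a stale kubeconfig with the current admin.conf
# (the same copy command kubeadm init prints at the end):
#   kubeconfig_ca_matches || cp -i /etc/kubernetes/admin.conf "$HOME/.kube/config"
```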

13. Error: [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

Error output:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

Fix (both commands take effect immediately, but neither persists across a reboot):

modprobe br_netfilter

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

————————————————

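Copyright notice: this is an original article by CSDN blogger 「永远在路上啊」, licensed under CC 4.0 BY-SA. When reposting, include a link to the original article and this notice.

Original article: https://blog.csdn.net/qq_34988341/article/details/109501589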
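The same condition can be checked on each machine before running kubeadm init or join. This sketch approximates the kubeadm preflight check: the file must exist and contain 1. The function name is hypothetical, and the optional argument replaces /proc only for testing.

```shell
# Hypothetical helper: roughly the kubeadm preflight check for this error.
# The file only exists once br_netfilter is loaded, and it must contain 1.
check_bridge_nf() {
  local f="${1:-/proc}/sys/net/bridge/bridge-nf-call-iptables"
  if [ ! -e "$f" ]; then
    echo "missing $f: run 'modprobe br_netfilter'" >&2
    return 1
  fi
  if [ "$(cat "$f")" != 1 ]; then
    echo "$f is not 1: run 'sysctl -w net.bridge.bridge-nf-call-iptables=1'" >&2
    return 1
  fi
}

# Usage, before kubeadm init/join on each machine:
#   check_bridge_nf && echo "bridge-nf-call-iptables OK"
```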

14. Error: after installing the Calico network plugin, coredns stays in ContainerCreating

# find out why

kubectl describe pod kube-proxy-rrxg5 -n kube-system

# failure message:

Warning FailedCreatePodSandBox <invalid> (x56 over <invalid>) kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = error creating pod sandbox with name "k8s_kube-proxy-rrxg5_kube-system_17893532-5a8f-45ba-9b3b-7ad08264f68a_0": Error initializing source docker://k8s.gcr.io/pause:3.5: error pinging docker registry k8s.gcr.io: Get "https://k8s.gcr.io/v2/": dial tcp 64.233.188.82:443: connect: connection refused

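k8s.gcr.io/pause:3.5 again.

I spent a very long time on this bug and tried every fix I could think of; none of them worked.

I had already given up and spent a few days on other things. Then one morning, on my way to work, it suddenly clicked: I thought I knew where the problem was.

The cause: I had changed the image download address in crio.conf only on the master, not on the worker nodes. kube-proxy runs one Pod per node (it is a DaemonSet). So of the three kube-proxy Pods, the one on the master was fine and the ones on the other nodes failed.

Fix: make the same change on the worker nodes.

If a node has no crio.conf, generate one with:

crio config --default > /etc/crio/crio.conf

Change these settings in /etc/crio/crio.conf:

# from the original blog post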

insecure_registries = ["registry.prod.bbdops.com"]

pause_image = "registry.prod.bbdops.com/common/pause:3.2"

# my settings: point these at your own image registry

insecure_registries = ["docker.io"]

pause_image = "docker.io/ningan123/pause:3.5"

# restart CRI-O after editing

[root@crio-master ~]# systemctl daemon-reload

[root@crio-master ~]# systemctl restart crio

[root@crio-master ~]# systemctl status crio
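Because the fix has to be applied on every node, a small helper makes it harder to miss one. This is a minimal sketch, assuming crio.conf already contains an uncommented pause_image line, as a file generated with crio config --default does. The function name is hypothetical. The host names in the usage comment come from the shell prompts in this article.

```shell
# Hypothetical helper: set pause_image in an existing crio.conf. The key must already
# be present and uncommented, as in a file generated by "crio config --default".
set_crio_pause_image() {
  local conf="$1" image="$2"
  if ! grep -q '^[[:space:]]*pause_image[[:space:]]*=' "$conf"; then
    echo "pause_image not found in $conf" >&2
    return 1
  fi
  # Rewrite only the pause_image line (pause_image_auth_file does not match),
  # keeping its indentation.
  sed -i "s|^\([[:space:]]*\)pause_image[[:space:]]*=.*|\1pause_image = \"$image\"|" "$conf"
}

# Usage, on EVERY node (crio-master, crio-node1, crio-node2), not just the master:
#   set_crio_pause_image /etc/crio/crio.conf docker.io/ningan123/pause:3.5
#   systemctl restart crio
```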

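15. Other places where image registries can be configured, recorded here for future reference

We configure mirror registries for docker.io and k8s.gcr.io. Note: the k8s.gcr.io mirror matters. --image-repository registry.aliyuncs.com/google_containers tells kubeadm init to pull images from the Alibaba Cloud registry. Even so, I found that deploying Pods still used the k8s.gcr.io/pause image, so a mirror is configured for it here to avoid the resulting errors.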

cat > /etc/containers/registries.conf <registry.aliyuncs.com/google_containers
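The following is a hedged sketch, not the original file contents. A k8s.gcr.io mirror in the v2 containers-registries.conf format could look like the stanza below. With it, k8s.gcr.io/pause:3.5 is tried as registry.aliyuncs.com/google_containers/pause:3.5 before k8s.gcr.io itself. The function name is hypothetical. It assumes the file is in v2 format and has no existing k8s.gcr.io entry, because the old v1 [registries.search] syntax cannot be mixed with v2 tables.

```shell
# Hypothetical helper: append a k8s.gcr.io mirror to a v2-format registries.conf.
# Mirrors are tried in order before the registry's own location.
add_k8s_gcr_mirror() {
  local conf="${1:-/etc/containers/registries.conf}"
  local mirror="${2:-registry.aliyuncs.com/google_containers}"
  # v1 tables such as [registries.search] cannot be mixed with v2 [[registry]] tables.
  if grep -q '^\[registries\.' "$conf" 2>/dev/null; then
    echo "$conf uses the v1 format; convert it before adding [[registry]] tables" >&2
    return 1
  fi
  cat >> "$conf" <<EOF

[[registry]]
prefix = "k8s.gcr.io"
location = "k8s.gcr.io"

[[registry.mirror]]
location = "${mirror}"
EOF
}

# Usage (as root), then restart CRI-O so it rereads the file:
#   add_k8s_gcr_mirror && systemctl restart crio
```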

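Note: as mentioned above, the pause image is still fetched from k8s.gcr.io even when kubeadm init is given a registry with --image-repository, and this causes failures. Configuring a mirror for k8s.gcr.io in the container runtime solves this. The alternative is to adjust the kubelet configuration to specify the pause image. Be aware, though, that in Kubernetes 1.21 --pod-infra-container-image only takes effect with the Docker runtime. With CRI-O, the pause image comes from pause_image in crio.conf (see error 14).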

# /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS=--pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.5

16. Error: coredns stays in ContainerCreating: error adding seccomp rule for syscall socket: requested action matches default action of filter

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 29s default-scheduler Successfully assigned default/nginx-6799fc88d8-vrrz8 to crio-node2

Warning FailedCreatePodSandBox 26s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = container create failed: time="2021-09-03T10:40:10+08:00" level=error msg="container_linux.go:349: starting container process caused \"error adding seccomp rule for syscall socket: requested action matches default action of filter\""

container_linux.go:349: starting container process caused "error adding seccomp rule for syscall socket: requested action matches default action of filter"

Warning FailedCreatePodSandBox 12s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = container create failed: time="2021-09-03T10:40:24+08:00" level=error msg="container_linux.go:349: starting container process caused \"error adding seccomp rule for syscall socket: requested action matches default action of filter\""

container_linux.go:349: starting container process caused "error adding seccomp rule for syscall socket: requested action matches default action of filter"

Reports online said the runc version was too old, so I upgraded runc.

# before

[root@crio-master ~]# runc -v

runc version spec: 1.0.1-dev

# after

[root@crio-master runc]# runc -v

runc version 1.0.2

commit: v1.0.2-0-g52b36a2

spec: 1.0.2-dev

go: go1.17

libseccomp: 2.3.1

git clone https://github.com/opencontainers/runc -b v1.0.2

cd runc

make

make install

[root@crio-node1 runc-1.0.2]# make install

fatal: Not a git repository (or any of the parent directories): .git

fatal: Not a git repository (or any of the parent directories): .git

install -D -m0755 runc /usr/local/sbin/runc

# after installing, runc -v still reports the old version

[root@crio-node2 ~]# runc -v

runc version spec: 1.0.1-dev

# locate runc: there is another copy elsewhere

[root@crio-node2 ~]# whereis runc

runc: /usr/bin/runc /usr/local/sbin/runc /usr/share/man/man8/runc.8.gz

# back up the old binary first

[root@crio-master ~]# mv /usr/bin/runc /usr/bin/runc_backup

# fix: the new runc was installed in one place but read from another, so put a copy where it is read from

[root@crio-node1 runc]# cp /usr/local/sbin/runc /usr/bin/runc

# problem solved!

[root@crio-node2 ~]# runc -v

runc version 1.0.2

spec: 1.0.2-dev

go: go1.17

libseccomp: 2.3.1
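A small check shows which runc is actually picked up and whether it is new enough. This sketch uses hypothetical function names. 1.0.2 is simply the version that fixed the problem here, not a documented minimum. As far as I know, CRI-O runs the binary set in runtime_path under [crio.runtime.runtimes.runc] in crio.conf, and looks up runc on its PATH when that is empty, so check that setting too.

```shell
# Hypothetical helpers for checking which runc is in use.

# Print the release from "runc -v" output on stdin; prints nothing for old builds
# that only report "runc version spec: ..." (like the 1.0.1-dev one above).
runc_release() {
  sed -n 's/^runc version \([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# Succeed if version $1 >= version $2 (GNU sort -V ordering).
version_ge() {
  [ "$(printf '%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Report the release of one runc binary and fail if it is older than the minimum.
check_runc() {
  local bin="${1:-runc}" min="${2:-1.0.2}" v
  v=$("$bin" -v 2>/dev/null | runc_release)
  if [ -z "$v" ] || ! version_ge "$v" "$min"; then
    echo "$bin: version ${v:-unknown} is older than $min" >&2
    return 1
  fi
  echo "$bin: $v"
}

# Usage:
#   type -a runc     # every runc on PATH, in lookup order
#   hash -r          # make the current bash forget a cached runc path
#   for r in /usr/bin/runc /usr/local/sbin/runc; do check_runc "$r"; done
```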

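Background knowledge

seccomp

What is seccomp

seccomp (secure computing mode) is a security mechanism supported by the Linux kernel since version 2.6.23. On Linux, a large number of system calls are exposed directly to user-space programs. Not all of them are needed, and unsafe code that abuses system calls threatens the security of the system. With seccomp, we restrict which system calls a program may use. This reduces the system's attack surface and puts the program into a "safe" state.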

————————————————

Original article: https://blog.csdn.net/mashimiao/article/details/73607485
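To see seccomp in action: the kernel reports each process's mode in the Seccomp: line of /proc/<pid>/status (0 = disabled, 1 = strict, 2 = filter). Filter mode, available since Linux 3.5, is what runc uses to apply container profiles. The error 16 message was produced while runc was building such a filter. Below is a sketch. The function name is hypothetical, and the second argument replaces /proc only for testing.

```shell
# Hypothetical helper: print a process's seccomp mode from /proc/<pid>/status.
seccomp_mode() {
  local pid="${1:-self}" proc="${2:-/proc}"
  # "Seccomp_filters:" on newer kernels does not match /^Seccomp:/
  case "$(awk '/^Seccomp:/ {print $2}' "$proc/$pid/status" 2>/dev/null)" in
    0) echo disabled ;;
    1) echo strict ;;
    2) echo filter ;;
    *) echo unknown; return 1 ;;
  esac
}

# Usage, e.g. for the oldest coredns process on a node:
#   seccomp_mode "$(pgrep -o coredns)"
```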

make -j

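Since I/O is not the bottleneck, the CPU becomes an important factor in compile speed.

Passing a number to make -j compiles the project in parallel. For example, on a dual-core machine you can comfortably use make -j4. This lets make run up to 4 compile commands at once and makes better use of the CPU.

Testing again with a kernel build:

make: 40 min 16 s

make -j4: 23 min 16 s

make -j8: 22 min 59 s

So on a multi-core CPU, a reasonable amount of parallel compilation noticeably speeds up the build. Don't run too many jobs in parallel, though; about twice the number of CPU cores is generally a good choice.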
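The rule of thumb above can be written as a tiny shell helper (hypothetical name). It uses nproc from GNU coreutils, which reports the number of available processing units. The helper is handy when building cri-o, conmon or runc from source, as in this article.

```shell
# Hypothetical helper: about twice as many make jobs as CPU cores (the rule of thumb above).
make_jobs() {
  local cores="${1:-$(nproc)}"
  echo $(( cores * 2 ))
}

# Usage, e.g. in the runc source directory:
#   make -j"$(make_jobs)"
```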

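References

- CRI-O official installation guide: https://github.com/cri-o/cri-o/blob/master/install.md

- Building a highly available Kubernetes cluster with CRI-O and kubeadm (crio 1.19, k8s 1.18.4): https://xujiyou.work/%E4%BA%91%E5%8E%9F%E7%94%9F/CRI-O/%E4%BD%BF%E7%94%A8CRI-O%E5%92%8CKubeadm%E6%90%AD%E5%BB%BA%E9%AB%98%E5%8F%AF%E7%94%A8%20Kubernetes%20%E9%9B%86%E7%BE%A4.html

- Setting up a k8s + CRI-O test environment: https://hanamichi.wiki/posts/k8s-ciro/

- Deploying Kubernetes 1.22 with kubeadm (k8s 1.22): https://blog.frognew.com/2021/08/kubeadm-install-kubernetes-1.22.html#24-%E9%83%A8%E7%BD%B2pod-network%E7%BB%84%E4%BB%B6calico

- Building a single-node k8s test cluster with kubeadm: https://segmentfault.com/a/1190000022907793

- Solving image problems when deploying a Kubernetes cluster with kubeadm: https://www.cnblogs.com/nb-blog/p/10636733.html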
