Deploying BBAVectors with TensorRT: From Getting Started to the Grave

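This post goes from image annotation all the way to deploying BBAVectors with TensorRT, walking you step by step toward becoming a competent "algorithm porter". BBAVectors is a neural network for oriented (rotated) bounding-box detection that uses an anchor-free design. Address: see here. I won't cover the theory, since Chinese-language write-ups of this network are easy to find on Baidu. Straight to the hands-on part. This post is meant for readers with some deep-learning experience, meaning you can comfortably install and do basic work with torch, OpenCV, CUDA, cuDNN, and TensorRT.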

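Contents

I. Model training and ONNX conversion

1. Data annotation

2. Label conversion

3. Model training

3.1 Build and install

3.2 Image splitting

3.3 Splitting into training and validation sets

3.4 Model training

4. Generating the ONNX model

II. TensorRT model conversion

1. Build and install

2. Generating the engine file

3. TensorRT inference

III. References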

Hardware and software: GTX 1080 Ti, CUDA 10.2, cuDNN 8.1, TensorRT 8.0, torch 1.8.1

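I. Model training and ONNX conversion

I modified the original author's code so that the decoder is included in the ONNX graph, so this post uses my modified version directly. Code: here

1. Data annotation

The annotation tool is roLabelImg (for how to annotate, see here). I scraped tomato images from Baidu; the task is to detect tomatoes and their orientation. The annotation looks like the figure.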

2. Label conversion

Convert the annotated XML files to the DOTA format used by DOTA_devkit, as shown below.

Each line of the txt file has the format: x1, y1, x2, y2, x3, y3, x4, y4, category, difficulty

275.0 463.0 411.0 587.0 312.0 600.0 222.0 532.0 tomato 0

341.0 376.0 487.0 487.0 434.0 556.0 287.0 444.0 tomato 0

428.0 6.0 519.0 66.0 492.0 108.0 405.0 50.0 tomato 0

The code is as follows:

# -*- coding: utf-8 -*-
# Purpose: convert rotated boxes (cx, cy, w, h, angle) to four-point
# coordinates: x1 y1 x2 y2 x3 y3 x4 y4 class difficulty
import math
import os
import xml.etree.ElementTree as ET

label = ['tomato']


def rotatePoint(xc, yc, xp, yp, theta):
    """Rotate point (xp, yp) around (xc, yc) by theta radians."""
    xoff = xp - xc
    yoff = yp - yc
    cosTheta = math.cos(theta)
    sinTheta = math.sin(theta)
    pResx = cosTheta * xoff + sinTheta * yoff
    pResy = -sinTheta * xoff + cosTheta * yoff
    return int(xc + pResx), int(yc + pResy)


def edit_xml(xml_file):
    """
    Read one annotation XML file and write the matching DOTA label file.
    :param xml_file: path to the XML file
    """
    print(xml_file)
    tree = ET.parse(xml_file)
    # anns/<name>.xml -> labelTxt/<name>.txt
    stem = os.path.splitext(os.path.basename(xml_file))[0]
    with open(os.path.join('labelTxt', stem + '.txt'), 'w') as f:
        for obj in tree.findall('object'):
            box_type = obj.find('type').text
            if box_type == 'bndbox':
                # Axis-aligned box: list the corners clockwise so that they
                # form a valid quadrilateral.
                bnd = obj.find('bndbox')
                xmin = float(bnd.find('xmin').text)
                ymin = float(bnd.find('ymin').text)
                xmax = float(bnd.find('xmax').text)
                ymax = float(bnd.find('ymax').text)
                x0, y0 = xmin, ymin
                x1, y1 = xmax, ymin
                x2, y2 = xmax, ymax
                x3, y3 = xmin, ymax
            elif box_type == 'robndbox':
                # Rotated box: rotate the four corners around the center.
                bnd = obj.find('robndbox')
                cx = float(bnd.find('cx').text)
                cy = float(bnd.find('cy').text)
                w = float(bnd.find('w').text)
                h = float(bnd.find('h').text)
                angle = float(bnd.find('angle').text)
                x0, y0 = rotatePoint(cx, cy, cx - w / 2, cy - h / 2, -angle)
                x1, y1 = rotatePoint(cx, cy, cx + w / 2, cy - h / 2, -angle)
                x2, y2 = rotatePoint(cx, cy, cx + w / 2, cy + h / 2, -angle)
                x3, y3 = rotatePoint(cx, cy, cx - w / 2, cy + h / 2, -angle)
            else:
                continue  # unknown annotation type
            # The <name> tag stores the class index into `label`.
            classes = int(obj.find('name').text)
            fields = [str(v) for v in (x0, y0, x1, y1, x2, y2, x3, y3)]
            fields += [label[classes], '0']
            f.write(" ".join(fields) + "\n")


if __name__ == '__main__':
    os.makedirs('labelTxt', exist_ok=True)
    for path in os.listdir('anns/'):
        if path.endswith('.xml'):
            edit_xml('anns/' + path)

After conversion, create the images and labelTxt folders and copy the images and labels into them. The final layout is:

data_dir/images/*.jpg

data_dir/labelTxt/*.txt
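Before moving on, it can help to sanity-check a few converted label lines. The following is a minimal sketch, not part of the original code: the helper names parse_dota_line and polygon_area are made up for illustration, and it only assumes the 10-field line format described above.

```python
def parse_dota_line(line):
    """Split one DOTA label line into (points, category, difficulty)."""
    parts = line.split()
    coords = [float(v) for v in parts[:8]]
    points = list(zip(coords[0::2], coords[1::2]))  # [(x1, y1), ..., (x4, y4)]
    return points, parts[8], int(parts[9])


def polygon_area(points):
    """Shoelace formula; a near-zero area hints at a degenerate box."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


points, category, difficulty = parse_dota_line(
    "428.0 6.0 519.0 66.0 492.0 108.0 405.0 50.0 tomato 0")
print(category, difficulty, polygon_area(points))
```

A box whose area comes out close to zero, or whose category is not in your label list, is worth inspecting in the annotation tool before training.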

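3. Model training

3.1 Build and install

This assumes you have already downloaded my modified BBAVectors code. All you need to do is cd into the DOTA_devkit folder and run the following commands to build the required polyiou module.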

sudo apt-get install swig

swig -c++ -python polyiou.i

python setup.py build_ext --inplace

3.2 Image splitting

BBAVectors expects input images of a fixed size, so use ImgSplit_multi_process.py from DOTA_devkit to cut the images into 512x512 tiles. The resulting layout is:

data_dir/split/images/*.jpg

data_dir/split/labelTxt/*.txt

The splitting itself is done by the ImgSplit_multi_process.py script mentioned above.
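To make the idea of tile splitting concrete, here is a small sketch of how overlapping 512x512 windows can be laid over an image. It is not the DOTA_devkit implementation: the function names and the gap=100 overlap are assumptions chosen for illustration only.

```python
def tile_origins(length, subsize=512, gap=100):
    """Start offsets of subsize-wide windows covering [0, length).

    Neighbouring windows overlap by `gap` pixels (hypothetical default);
    the last window is pushed flush with the image edge.
    """
    if length <= subsize:
        return [0]
    stride = subsize - gap
    origins = list(range(0, length - subsize, stride))
    origins.append(length - subsize)
    return origins


def tile_boxes(width, height, subsize=512, gap=100):
    """All (left, top, right, bottom) windows for a width x height image."""
    return [(x, y, x + subsize, y + subsize)
            for y in tile_origins(height, subsize, gap)
            for x in tile_origins(width, subsize, gap)]


print(tile_origins(1000))         # window start offsets along one axis
print(len(tile_boxes(1000, 600)))  # number of tiles for a 1000x600 image
```

The overlap exists so that an object cut by one tile border is likely to appear whole in a neighbouring tile.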

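3.3 Splitting into training and validation sets

This step mainly generates train.txt and val.txt. The code is as follows:

# -*- coding: utf-8 -*-
import os
import random

# Split the OBB data into training and validation lists.
# Both paths are relative; run the script from data_dir.
annfilepath = r'split/labelTxt/'
saveBasePath = r'split/'
train_percent = 0.95

total_file = os.listdir(annfilepath)
num = len(total_file)
indices = range(num)
tr = int(num * train_percent)
train = set(random.sample(indices, tr))  # indices that go into train.txt

ftrain = open(os.path.join(saveBasePath, 'train.txt'), 'w')
fval = open(os.path.join(saveBasePath, 'val.txt'), 'w')
for i in indices:
    # splitext keeps names that contain extra dots intact
    name = os.path.splitext(total_file[i])[0] + '\n'
    if i in train:
        ftrain.write(name)
    else:
        fval.write(name)
ftrain.close()
fval.close()

print("train size", tr)
print("valid size", num - tr)

The final layout is shown below. My test.txt is simply a copy of val.txt: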

data_dir/split/images/*.jpg

data_dir/split/labelTxt/*.txt

data_dir/split/train.txt

data_dir/split/val.txt

data_dir/split/test.txt
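A quick consistency check on this layout can save a failed training run later. The sketch below is an illustration rather than part of the original code; check_split is a hypothetical helper, and it assumes images use the .jpg extension and labels the .txt extension as in the layout above.

```python
import os


def check_split(root):
    """Return (list_file, name) pairs whose image or label file is missing."""
    missing = []
    for list_name in ('train.txt', 'val.txt', 'test.txt'):
        list_path = os.path.join(root, list_name)
        if not os.path.exists(list_path):
            continue  # a missing list file is simply skipped
        with open(list_path) as f:
            names = [line.strip() for line in f if line.strip()]
        for name in names:
            has_img = os.path.exists(os.path.join(root, 'images', name + '.jpg'))
            has_lbl = os.path.exists(os.path.join(root, 'labelTxt', name + '.txt'))
            if not (has_img and has_lbl):
                missing.append((list_name, name))
    return missing


if __name__ == '__main__':
    for list_name, name in check_split('data_dir/split'):
        print('missing files for', name, 'listed in', list_name)
```

An empty result means every name in the list files has both an image and a label.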

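3.4 Model training

To train on a different dataset, change the following:

1. datasets/dataset_dota.py: change self.category to your own classes, and give self.color_pans a matching number of entries.

2. main.py: set the data_dir path; change the dota entry in num_classes = {'dota': 1, 'hrsc': 1} to your number of classes; adjust the other training parameters as needed.

After making these changes, run main.py.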

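4. Generating the ONNX model

After the training above, the weights_dota folder contains model_best.pth. Now run export_onnx.py, which generates model_best.onnx. Opening this ONNX model in Netron shows that the input is 1x3x512x512 and the output is 1x500x12. The output holds 500 boxes with 12 values each: the first 2 are the center point, the next 8 are the four boundary vectors of the box (top, right, bottom, left, as x/y pairs), and the last 2 are the confidence score and the class. You need to know what the output contains before you can write the post-processing.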
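The layout of one output row can be illustrated with a short decoding sketch. It mirrors the arithmetic of the C++ post-processing used for TensorRT inference later in this post, but it is only an illustration: decode_row is a made-up name, and down_ratio=4 is an assumption (the constant's value is not shown in this post).

```python
def decode_row(row, img_w, img_h, input_w=512, input_h=512, down_ratio=4):
    """Turn one 12-value output row into four corner points, score, class.

    row = [cx, cy, tt_x, tt_y, rr_x, rr_y, bb_x, bb_y, ll_x, ll_y, score, cls]
    down_ratio=4 is an assumed feature-map stride.
    """
    cx, cy = row[0], row[1]
    tt, rr, bb, ll = row[2:4], row[4:6], row[6:8], row[8:10]

    def corner(u, v):
        # Each corner is the sum of two boundary points minus the center.
        return (u[0] + v[0] - cx, u[1] + v[1] - cy)

    corners = [corner(tt, rr), corner(bb, rr), corner(bb, ll), corner(tt, ll)]
    # Map from feature-map coordinates back to the original image size.
    sx = down_ratio / input_w * img_w
    sy = down_ratio / input_h * img_h
    points = [(x * sx, y * sy) for x, y in corners]  # tr, br, bl, tl
    return points, row[10], int(row[11])


row = [10, 10, 10, 8, 13, 10, 10, 12, 7, 10, 0.9, 0]
print(decode_row(row, img_w=1024, img_h=512))
```

The corner order (top-right, bottom-right, bottom-left, top-left) matches the order in which the C++ code fills its polygon array.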

For reference, I also timed model inference at this stage, before any TensorRT conversion: it averages 43 ms.
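How such an average is measured matters as much as the number. The sketch below shows one common pattern, with a few warm-up calls before the timed runs; mean_latency_ms is a hypothetical helper, and the lambda stands in for a real forward pass.

```python
import time


def mean_latency_ms(fn, warmup=3, runs=10):
    """Average wall-clock time of fn() in milliseconds after warm-up calls."""
    for _ in range(warmup):
        fn()  # warm-up runs are not timed
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) * 1000.0 / runs


# Placeholder workload; replace it with the model's forward pass.
print(mean_latency_ms(lambda: sum(range(100000))))
```

When timing a PyTorch model on the GPU, call torch.cuda.synchronize() inside fn after the forward pass, otherwise only the asynchronous kernel launch is measured.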

II. TensorRT model conversion

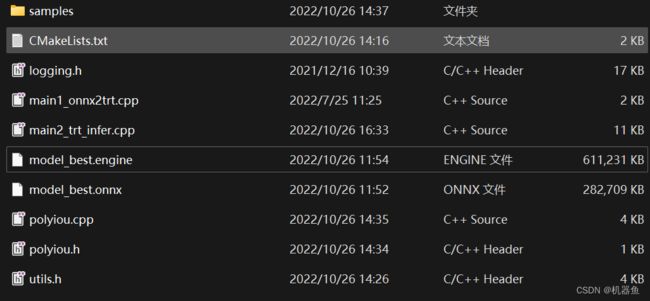

For convenience, I have packaged the code so that you only need to download it; the link is in the comment section. The package includes

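1. Build and install

This assumes you have already installed CUDA 10.2, cuDNN 8.2, OpenCV 4.5, TensorRT 8.0, CMake 3.15, and the other required components. Build as follows:

cd <the directory where you extracted the download>

mkdir build

cd build

cmake ..

make

2. Generating the engine file

Generating the engine uses TensorRT's NvOnnxParser to parse the ONNX model produced above. The code of main1_onnx2trt.cpp is as follows: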

#include <fstream>
#include <iostream>
#include "logging.h"
#include "NvOnnxParser.h"
#include "NvInfer.h"

using namespace nvinfer1;
using namespace nvonnxparser;

static Logger gLogger;

int main(int argc, char** argv) {
    if (argc < 3) {
        std::cerr << "usage: onnx2trt <model.onnx> <model.engine>" << std::endl;
        return -1;
    }

    // 1. Parse the ONNX model
    IBuilder* builder = createInferBuilder(gLogger);
    const auto explicitBatch = 1U << static_cast<uint32_t>(NetworkDefinitionCreationFlag::kEXPLICIT_BATCH);
    INetworkDefinition* network = builder->createNetworkV2(explicitBatch);
    nvonnxparser::IParser* parser = nvonnxparser::createParser(*network, gLogger);
    const char* onnx_filename = argv[1];
    if (!parser->parseFromFile(onnx_filename, static_cast<int>(Logger::Severity::kWARNING))) {
        // Print every parser error and stop instead of reporting success
        for (int i = 0; i < parser->getNbErrors(); ++i) {
            std::cout << parser->getError(i)->desc() << std::endl;
        }
        return -1;
    }
    std::cout << "successfully load the onnx model" << std::endl;

    // 2. Build the engine
    unsigned int maxBatchSize = 1;
    builder->setMaxBatchSize(maxBatchSize);
    IBuilderConfig* config = builder->createBuilderConfig();
    config->setMaxWorkspaceSize(128 * (1 << 20));  // 128 MiB
    config->setFlag(BuilderFlag::kFP16);
    ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);
    if (!engine) {
        std::cerr << "failed to build the engine" << std::endl;
        return -1;
    }

    // 3. Serialize the model
    IHostMemory* gieModelStream = engine->serialize();
    std::ofstream p(argv[2], std::ios::binary);
    if (!p) {
        std::cerr << "could not open plan output file" << std::endl;
        return -1;
    }
    p.write(reinterpret_cast<const char*>(gieModelStream->data()), gieModelStream->size());
    gieModelStream->destroy();
    std::cout << "successfully generate the trt engine model" << std::endl;
    return 0;
}

After running make in step 1, the build folder contains the onnx2trt executable (the equivalent of an .exe on Windows). Run it in a terminal:

./onnx2trt <path to>/model_best.onnx model_best.engine

If nothing goes wrong, after running for a while it generates model_best.engine in the build folder (it is best to install the same software versions as mine).
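One detail in the converter is easy to misread: the workspace limit is written as 128 * (1 << 20) bytes. A quick check of that arithmetic, as a plain Python illustration:

```python
MIB = 1 << 20  # bytes in one mebibyte

workspace_bytes = 128 * (1 << 20)  # the value passed to setMaxWorkspaceSize
print(workspace_bytes)         # size in bytes
print(workspace_bytes // MIB)  # size in MiB

# For comparison, a 16 MiB workspace would be written as 16 * (1 << 20).
print(16 * MIB)
```

So the builder is allowed 128 MiB of scratch memory, not 16 MB. If the build reports that some layers have no implementation because of workspace limits, raising this value is a reasonable first thing to try.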

3. TensorRT inference

This part mainly uses OpenCV and TensorRT's nvinfer library. Part of the code is shown below:

Mat src = imread(argv[2]);
if (src.empty()) { std::cout << "image load failed" << std::endl; return 1; }
int img_width = src.cols;
int img_height = src.rows;

// Preprocess: resize, BGR -> RGB, scale to [-0.5, 0.5], HWC -> CHW
static float data[3 * INPUT_H * INPUT_W];
Mat pr_img;
cv::resize(src, pr_img, Size(512, 512), 0, 0, cv::INTER_LINEAR);
int i = 0;  // index into the [1, 3, INPUT_H, INPUT_W] buffer
for (int row = 0; row < INPUT_H; ++row) {
    // pr_img.step = width x 3: each row holds `width` 3-channel pixels
    uchar* uc_pixel = pr_img.data + row * pr_img.step;
    for (int col = 0; col < INPUT_W; ++col) {
        data[i] = (float)uc_pixel[2] / 255.0 - 0.5;
        data[i + INPUT_H * INPUT_W] = (float)uc_pixel[1] / 255.0 - 0.5;
        data[i + 2 * INPUT_H * INPUT_W] = (float)uc_pixel[0] / 255.0 - 0.5;
        uc_pixel += 3;
        ++i;
    }
}

// trtModelStream and size hold the engine file contents, read earlier (not shown)
IRuntime* runtime = createInferRuntime(gLogger);
assert(runtime != nullptr);
bool didInitPlugins = initLibNvInferPlugins(nullptr, "");
ICudaEngine* engine = runtime->deserializeCudaEngine(trtModelStream, size, nullptr);
assert(engine != nullptr);
IExecutionContext* context = engine->createExecutionContext();
assert(context != nullptr);
delete[] trtModelStream;

// Run inference
static float prob[OUTPUT_SIZE];
for (int i = 0; i < 10; i++) {  // time 10 inference runs
    auto start = std::chrono::system_clock::now();
    doInference(*context, data, prob, 1);
    auto end = std::chrono::system_clock::now();
    std::cout << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;
}

// Parse the output: 500 boxes with 12 values each. The first 2 are the center
// point, the next 8 are the box boundary vectors, the last 2 are confidence and class.
std::map<float, std::vector<Detection>> m;
for (int position = 0; position < Num_box; position++) {
    // CLASSES is used as the row stride here, so it must equal the 12 values per box
    float* row = prob + position * CLASSES;
    // The following mirrors the original Python decoder
    float cen_pt_0 = row[0];
    float cen_pt_1 = row[1];
    float tt_2 = row[2];
    float tt_3 = row[3];
    float rr_4 = row[4];
    float rr_5 = row[5];
    float bb_6 = row[6];
    float bb_7 = row[7];
    float ll_8 = row[8];
    float ll_9 = row[9];
    float tl_0 = tt_2 + ll_8 - cen_pt_0;
    float tl_1 = tt_3 + ll_9 - cen_pt_1;
    float bl_0 = bb_6 + ll_8 - cen_pt_0;
    float bl_1 = bb_7 + ll_9 - cen_pt_1;
    float tr_0 = tt_2 + rr_4 - cen_pt_0;
    float tr_1 = tt_3 + rr_5 - cen_pt_1;
    float br_0 = bb_6 + rr_4 - cen_pt_0;
    float br_1 = bb_7 + rr_5 - cen_pt_1;
    // Scale from feature-map coordinates back to the original image
    float pts_tr_0 = tr_0 * down_ratio / INPUT_W * img_width;
    float pts_br_0 = br_0 * down_ratio / INPUT_W * img_width;
    float pts_bl_0 = bl_0 * down_ratio / INPUT_W * img_width;
    float pts_tl_0 = tl_0 * down_ratio / INPUT_W * img_width;
    float pts_tr_1 = tr_1 * down_ratio / INPUT_H * img_height;
    float pts_br_1 = br_1 * down_ratio / INPUT_H * img_height;
    float pts_bl_1 = bl_1 * down_ratio / INPUT_H * img_height;
    float pts_tl_1 = tl_1 * down_ratio / INPUT_H * img_height;
    auto score = row[10];
    auto cls = row[11];
    if (score < CONF_THRESHOLD)  // confidence filter
        continue;
    Detection box;
    box.conf = score;
    box.class_id = cls;
    float ploybox[8] = { pts_tr_0, pts_tr_1, pts_br_0, pts_br_1, pts_bl_0, pts_bl_1, pts_tl_0, pts_tl_1 };
    for (int k = 0; k < 8; k++) {
        box.bbox[k] = ploybox[k];
    }
    if (m.count(box.class_id) == 0) {
        m.emplace(box.class_id, std::vector<Detection>());
    }
    m[box.class_id].push_back(box);
}

std::vector<Detection> res;  // final results
for (auto it = m.begin(); it != m.end(); it++) {  // run NMS separately within each class
    auto& dets = it->second;
    std::sort(dets.begin(), dets.end(), cmp);  // sort by confidence, highest first
    for (size_t a = 0; a < dets.size(); ++a) {
        auto& item = dets[a];
        res.push_back(item);
        for (size_t b = a + 1; b < dets.size(); ++b) {
            if (iou_poly(item.bbox, dets[b].bbox) > NMS_THRESHOLD) {  // NMS filter
                dets.erase(dets.begin() + b);
                --b;
            }
        }
    }
}

After running make in step 1, the build folder contains the trt_infer executable (the equivalent of an .exe on Windows). Run it in a terminal:

./trt_infer model_best.engine ../samples/test.jpg

If nothing goes wrong, the detection result is displayed, and you can see that inference with TensorRT takes about 27 ms.
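The per-class NMS step relies on an iou_poly function whose body is not shown in the excerpt. As a rough Python illustration of the same idea, the sketch below computes the IoU of two convex quadrilaterals with Sutherland-Hodgman clipping and then applies greedy suppression. It is not the packaged C++ implementation, and it assumes convex boxes, which holds for rotated rectangles.

```python
def signed_area(poly):
    """Shoelace formula; positive for counter-clockwise vertex order."""
    return 0.5 * sum(x1 * y2 - x2 * y1
                     for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]))


def clip(subject, clipper):
    """Sutherland-Hodgman: clip `subject` by a convex `clipper` with positive area."""
    out = subject
    for (ax, ay), (bx, by) in zip(clipper, clipper[1:] + clipper[:1]):
        if not out:
            break
        inp, out = out, []

        def side(p):
            # >= 0 when p lies on the inner side of edge a -> b
            return (bx - ax) * (p[1] - ay) - (by - ay) * (p[0] - ax)

        for p, q in zip(inp, inp[1:] + inp[:1]):
            sp, sq = side(p), side(q)
            if sp >= 0:
                out.append(p)
            if (sp > 0 and sq < 0) or (sp < 0 and sq > 0):
                t = sp / (sp - sq)  # where edge p -> q crosses the clip line
                out.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return out


def iou_poly(a, b):
    """IoU of two convex polygons given as lists of (x, y) points."""
    a = a if signed_area(a) > 0 else a[::-1]
    b = b if signed_area(b) > 0 else b[::-1]
    inter_poly = clip(a, b)
    inter = abs(signed_area(inter_poly)) if len(inter_poly) >= 3 else 0.0
    union = abs(signed_area(a)) + abs(signed_area(b)) - inter
    return inter / union if union > 0 else 0.0


def nms(dets, thresh):
    """Greedy NMS over (score, polygon) pairs, highest score first."""
    keep = []
    for score, poly in sorted(dets, key=lambda d: d[0], reverse=True):
        if all(iou_poly(poly, kept) <= thresh for _, kept in keep):
            keep.append((score, poly))
    return keep


a = [(0, 0), (2, 0), (2, 2), (0, 2)]
b = [(1, 0), (3, 0), (3, 2), (1, 2)]
print(iou_poly(a, b))                        # overlap of two shifted squares
print(len(nms([(0.9, a), (0.8, b)], 0.3)))   # boxes kept at threshold 0.3
```

Keeping a box only if it does not overlap any already-kept box gives the same result as the erase-based loop in the C++ code, without modifying the list while iterating over it.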

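III. References

BBAVectors rotated object detection algorithm: installation, deployment, and usage notes (HNU_刘yuan's blog, CSDN)

BBAVectors: an anchor-free rotated object detection method (Zhihu)

40. Rotated object detection with BBAVectors-Oriented-Object-Detection, deployed with mnn and ncnn (天昼AI实验室's blog, CSDN)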