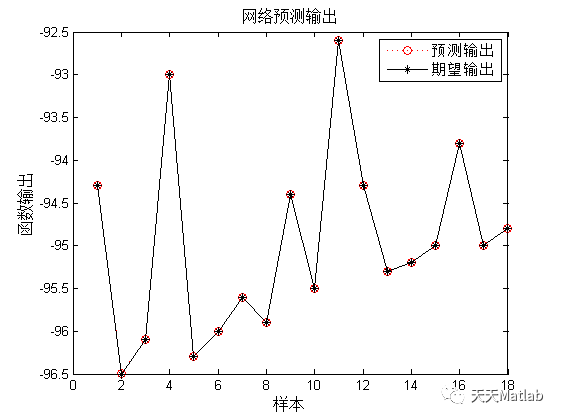

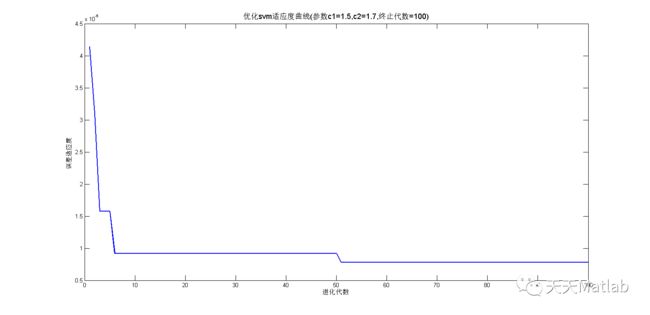

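[LSSVM Prediction] Data Regression Prediction with a Least Squares Support Vector Machine (LSSVM) Optimized by the Aquila Optimizer, with MATLAB Code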

1 Introduction

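Short-term traffic flow prediction is a prerequisite for, and a key part of, intelligent traffic control and management, traffic state identification, and real-time traffic guidance. It is also a practical requirement of intelligent traffic management. So far, however, research results in this area have been unsatisfactory. Traditional prediction methods built on precise mathematical models suffer from high computational complexity, long run times, a need for large amounts of historical data, and limited prediction accuracy. Improving short-term traffic flow prediction with newer artificial-intelligence methods therefore has practical value. Building on a comparative analysis of existing short-term traffic flow prediction models and a study of traffic flow characteristics, this work builds a short-term traffic flow prediction model from a least squares support vector machine (LSSVM) whose parameters are optimized by the Aquila Optimizer (AO), and obtains good results.

The support vector machine (SVM) is a machine learning algorithm grounded in statistical learning theory. It follows the structural risk minimization principle and offers strong predictive ability, a globally optimal solution, and fast convergence. Compared with neural network learning algorithms, which are based on empirical risk minimization, it has a sounder theoretical foundation and better generalization. SVM models are relatively simple, fast to compute, and fairly accurate, which makes them well suited to traffic flow prediction. With the least squares formulation in particular, training reduces to solving a system of linear equations while accuracy is preserved. The method first uses the global search capability of the Aquila Optimizer to find the LSSVM penalty (regularization) factor and kernel width, which addresses the difficulty of quickly and accurately locating the optimal LSSVM parameters.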
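In the least squares formulation, training an LS-SVM regressor amounts to solving one (N+1) x (N+1) linear system for the bias b and the dual weights alpha. The script below is a minimal sketch of that step in base MATLAB, not LS-SVMlab's own trainlssvm. It assumes an RBF kernel of the form exp(-||x - z||^2 / sig2) (LS-SVMlab's internal scaling of sig2 may differ), toy sine data, and fixed values gam = 100 and sig2 = 0.5.

```matlab
% LS-SVM regression as one linear system.
% Assumptions: RBF kernel exp(-||x-z||^2/sig2), toy data, fixed gam/sig2.
X = linspace(0, 2*pi, 40)';            % N x 1 training inputs (toy data)
y = sin(X) + 0.05*cos(7*X);            % N x 1 targets
gam  = 100;                            % regularization (penalty) factor
sig2 = 0.5;                            % kernel width
N = numel(y);

% Squared Euclidean distances between the rows of A and the rows of B
sqd = @(A, B) bsxfun(@plus, sum(A.^2, 2), sum(B.^2, 2)') - 2*(A*B');

Omega = exp(-sqd(X, X) / sig2);        % N x N kernel matrix

% KKT system:  [0   1'             ] [b    ]   [0]
%              [1   Omega + I/gam  ] [alpha] = [y]
A = [0, ones(1, N); ones(N, 1), Omega + eye(N)/gam];
sol = A \ [0; y];
b = sol(1);
alphaLS = sol(2:end);

% Prediction: f(x) = sum_k alpha_k * K(x, x_k) + b
predict = @(Xt) exp(-sqd(Xt, X) / sig2) * alphaLS + b;
yfit  = predict(X);                    % fitted values on the training inputs
ytest = predict([1; 2; 3]);            % predictions at new inputs
```

Because the optimality conditions give e_k = alpha_k / gam, the fitted training values satisfy yfit = y - alphaLS/gam, which makes a convenient sanity check. gam and sig2 are exactly the two values the optimizer is meant to tune.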

2 Partial Code

```matlab
function model = initlssvm(X, Y, type, gam, sig2, kernel_type, preprocess)
% Initiate the object oriented structure representing the LS-SVM model
%
%   model = initlssvm(X, Y, type, gam, sig2)
%   model = initlssvm(X, Y, type, gam, sig2, kernel_type)
%
% Full syntax
%
%   >> model = initlssvm(X, Y, type, gam, sig2, kernel, preprocess)
%
% Outputs
%   model         : Object oriented representation of the LS-SVM model
% Inputs
%   X             : N x d matrix with the inputs of the training data
%   Y             : N x 1 vector with the outputs of the training data
%   type          : 'function estimation' ('f') or 'classifier' ('c')
%   gam           : Regularization parameter (must be > 0)
%   sig2          : Kernel parameter; a value <= 0 falls back to x_dim
%   kernel(*)     : Kernel type (by default 'RBF_kernel')
%   preprocess(*) : 'preprocess'(*) or 'original'
%
% see also:
%   trainlssvm, simlssvm, changelssvm, codelssvm, prelssvm

% Copyright (c) 2011, KULeuven-ESAT-SCD, License & help @ http://www.esat.kuleuven.be/sista/lssvmlab

% check enough arguments
if nargin < 5
    error('Not enough arguments to initialize model..');
elseif ~isnumeric(sig2)
    error(['Kernel parameter ''sig2'' needs to be a (array of) reals' ...
           ' or the empty matrix..']);
end

%
% CHECK TYPE: 'f'unction estimation, 'c'lassification, 't'imeserie or 'N'ARX
%
if ~any(type(1) == 'fctN')
    error('type has to be ''function (estimation)'', ''classification'', ''timeserie'' or ''NARX''');
end
model.type = type;

%
% check datapoints
%
model.x_dim = size(X, 2);
model.y_dim = size(Y, 2);
if type(1) ~= 't' && size(X, 1) ~= size(Y, 1) && size(X, 2) ~= 0
    error('number of datapoints not equal to number of targetpoints...');
end
model.nb_data = size(X, 1);

%
% initializing kernel type (default 'RBF_kernel')
%
if nargin < 6
    model.kernel_type = 'RBF_kernel';
else
    model.kernel_type = kernel_type;
end

%
% using preprocessing {'preprocess','original'}
%
if nargin < 7
    model.preprocess = 'preprocess';
else
    model.preprocess = preprocess;
end
if model.preprocess(1) == 'p'
    model.prestatus = 'changed';
else
    model.prestatus = 'ok';
end

%
% initiate datapoint selector
%
model.xtrain = X;
model.ytrain = Y;
model.selector = 1:model.nb_data;

%
% regularisation term and kernel parameters
%
if gam <= 0, error('gam must be larger than 0'); end
model.gam = gam;
if sig2 <= 0
    model.kernel_pars = model.x_dim;
else
    model.kernel_pars = sig2;
end

%
% dynamic models
%
model.x_delays = 0;
model.y_delays = 0;
model.steps = 1;

% for classification: one is interested in the latent variables or
% in the class labels
model.latent = 'no';

% coding type used for classification
model.code = 'original';
model.codetype = 'none';

% preprocessing step
model = prelssvm(model);

% status of the model: 'changed' or 'trained'
model.status = 'changed';

% settings for weight function
model.weights = [];
```
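initlssvm only stores the gam and sig2 values it receives (rejecting gam <= 0 and falling back to x_dim when sig2 <= 0). In the AO-LSSVM approach from the introduction, those two values come from the Aquila Optimizer instead of being set by hand. The script below is a compact, hypothetical sketch of that outer loop, not the author's code. It searches [log10(gam), log10(sig2)] within assumed bounds, uses the mean squared error on a hold-out half of toy sine data as fitness, and solves each LS-SVM directly as a linear system with an assumed RBF kernel exp(-||x - z||^2 / sig2). It applies the four Aquila update phases (expanded and narrowed exploration during the first two thirds of the iterations, expanded and narrowed exploitation afterwards) with greedy replacement. The spiral constants, population size, iteration count, and bounds are illustrative choices.

```matlab
% Hypothetical AO sketch tuning LS-SVM gam and sig2 (all settings assumed).
X  = linspace(0, 2*pi, 60)';              % toy inputs
y  = sin(X) + 0.1*cos(9*X);               % toy targets
tr = 1:2:60;  va = 2:2:60;                % odd points train, even points validate
nt = numel(tr);

% Squared Euclidean distances between the rows of A and the rows of B
sqd = @(A, B) bsxfun(@plus, sum(A.^2, 2), sum(B.^2, 2)') - 2*(A*B');
% LS-SVM dual solution [b; alpha] for given gam and sig2
solveLS = @(g, s2) [0, ones(1, nt); ones(nt, 1), ...
    exp(-sqd(X(tr), X(tr))/s2) + eye(nt)/g] \ [0; y(tr)];
% Validation MSE of the model given by solution vector s and width s2
valMSE = @(s, s2) mean((exp(-sqd(X(va), X(tr))/s2)*s(2:end) + s(1) - y(va)).^2);
% Fitness of p = [log10(gam), log10(sig2)]
fitness = @(p) valMSE(solveLS(10^p(1), 10^p(2)), 10^p(2));

lb = [-2, -2];  ub = [3, 1];              % bounds on [log10(gam), log10(sig2)]
nPop = 10;  T = 30;  D = 2;
alphaAO = 0.1;  deltaAO = 0.1;            % exploitation adjustment parameters
betaL = 1.5;                              % Levy exponent
sigmaL = (gamma(1+betaL)*sin(pi*betaL/2) / ...
         (gamma((1+betaL)/2)*betaL*2^((betaL-1)/2)))^(1/betaL);
levy = @(d) 0.01 * randn(1, d) * sigmaL ./ abs(randn(1, d)).^(1/betaL);

% Spiral shape for narrowed exploration (illustrative constants)
Dv = 1:D;
r = 10 + 0.00565*Dv;
theta = -0.005*Dv + 3*pi/2;
xs = r .* sin(theta);  ys = r .* cos(theta);

% Random initial population inside the bounds
P = bsxfun(@plus, lb, bsxfun(@times, rand(nPop, D), ub - lb));
F = zeros(nPop, 1);
for i = 1:nPop
    F(i) = fitness(P(i, :));
end
[bestF, k] = min(F);
bestP = P(k, :);
initialBestF = bestF;

for t = 1:T
    XM = mean(P, 1);                      % population mean
    QF = t^((2*rand - 1) / (1 - T)^2);    % quality function
    G1 = 2*rand - 1;                      % random motion factor
    G2 = 2*(1 - t/T);                     % flight slope, decreasing to 0
    for i = 1:nPop
        if t <= (2/3)*T
            if rand < 0.5                 % expanded exploration
                Xn = bestP*(1 - t/T) + (XM - bestP*rand);
            else                          % narrowed exploration
                Xn = bestP .* levy(D) + P(randi(nPop), :) + (ys - xs)*rand;
            end
        else
            if rand < 0.5                 % expanded exploitation
                Xn = (bestP - XM)*alphaAO - rand + ((ub - lb)*rand + lb)*deltaAO;
            else                          % narrowed exploitation
                Xn = QF*bestP - G1*P(i, :)*rand - G2*levy(D) + rand*G1;
            end
        end
        Xn = min(max(Xn, lb), ub);        % keep the candidate inside the bounds
        fn = fitness(Xn);
        if fn < F(i)                      % greedy replacement
            P(i, :) = Xn;  F(i) = fn;
            if fn < bestF
                bestF = fn;  bestP = Xn;
            end
        end
    end
end
gamBest  = 10^bestP(1);
sig2Best = 10^bestP(2);
```

gamBest and sig2Best would then be passed to initlssvm (or another LS-SVM trainer) to fit the final model. Searching in log space is a common choice because both parameters can span several orders of magnitude.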

```matlab
function [out1,out2] = mapminmax(in1,in2,in3,in4)
%MAPMINMAX Map matrix row minimum and maximum values to [-1 1].
%
%  Syntax
%
%    [y,ps] = mapminmax(x,ymin,ymax)
%    [y,ps] = mapminmax(x,fp)
%    y = mapminmax('apply',x,ps)
%    x = mapminmax('reverse',y,ps)
%    dx_dy = mapminmax('dx',x,y,ps)
%    dx_dy = mapminmax('dx',x,[],ps)
%    name = mapminmax('name');
%    fp = mapminmax('pdefaults');
%    names = mapminmax('pnames');
%    mapminmax('pcheck', fp);
%
%  Description
%
%    MAPMINMAX processes matrices by normalizing the minimum and maximum values
%    of each row to [YMIN, YMAX].
%
%    MAPMINMAX(X,YMIN,YMAX) takes X and optional parameters,
%      X    - NxQ matrix or a 1xTS row cell array of NxQ matrices.
%      YMIN - Minimum value for each row of Y. (Default is -1)
%      YMAX - Maximum value for each row of Y. (Default is +1)
%    and returns,
%      Y    - Each MxQ matrix (where M == N) (optional).
%      PS   - Process settings, to allow consistent processing of values.
%
%    MAPMINMAX(X,FP) takes parameters as struct: FP.ymin, FP.ymax.
%    MAPMINMAX('apply',X,PS) returns Y, given X and settings PS.
%    MAPMINMAX('reverse',Y,PS) returns X, given Y and settings PS.
%    MAPMINMAX('dx',X,Y,PS) returns MxNxQ derivative of Y w/respect to X.
%    MAPMINMAX('dx',X,[],PS) returns the derivative, less efficiently.
%    MAPMINMAX('name') returns the name of this process method.
%    MAPMINMAX('pdefaults') returns default process parameter structure.
%    MAPMINMAX('pdesc') returns the process parameter descriptions.
%    MAPMINMAX('pcheck',fp) throws an error if any parameter is illegal.
%
%  Examples
%
%    Here is how to format a matrix so that the minimum and maximum
%    values of each row are mapped to default interval [-1,+1].
%
%      x1 = [1 2 4; 1 1 1; 3 2 2; 0 0 0]
%      [y1,ps] = mapminmax(x1)
%
%    Next, we apply the same processing settings to new values.
%
%      x2 = [5 2 3; 1 1 1; 6 7 3; 0 0 0]
%      y2 = mapminmax('apply',x2,ps)
%
%    Here we reverse the processing of y1 to get x1 again.
%
%      x1_again = mapminmax('reverse',y1,ps)
%
%  Algorithm
%
%    It is assumed that X has only finite real values, and that
%    the elements of each row are not all equal.
%
%      y = (ymax-ymin)*(x-xmin)/(xmax-xmin) + ymin;
%
%  See also FIXUNKNOWNS, MAPSTD, PROCESSPCA, REMOVECONSTANTROWS

% Copyright 1992-2007 The MathWorks, Inc.
% $Revision: 1.1.6.11 $

% Process function boiler plate script.
% NOTE: boiler_process is not part of this listing, so this file
% does not run on its own.
boiler_process

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% Name
function n = name
n = 'Map Minimum and Maximum';

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% Parameter Defaults
function fp = param_defaults(values)
if length(values)>=1, fp.ymin = values{1}; else fp.ymin = -1; end
if length(values)>=2, fp.ymax = values{2}; else fp.ymax = fp.ymin + 2; end

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% Parameter Names
function names = param_names()
names = {'Minimum value for each row of Y.', 'Maximum value for each row of Y.'};

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% Parameter Check
function err = param_check(fp)
mn = fp.ymin;
mx = fp.ymax;
if ~isa(mn,'double') || any(size(mn)~=[1 1]) || ~isreal(mn) || ~isfinite(mn)
    err = 'ymin must be a real scalar value.';
elseif ~isa(mx,'double') || any(size(mx)~=[1 1]) || ~isreal(mx) || ~isfinite(mx) || (mx <= mn)
    err = 'ymax must be a real scalar value greater than ymin.';
else
    err = '';
end

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% New Process
function [y,ps] = new_process(x,fp)
% Replace NaN with the first non-NaN value in the same row (0 if none)
rows = size(x,1);
for i=1:rows
    finiteInd = find(full(~isnan(x(i,:))),1);
    if isempty(finiteInd)
        xfinite = 0;
    else
        xfinite = x(i,finiteInd);    % index within row i, not the first column
    end
    nanInd = isnan(x(i,:));
    x(i,nanInd) = xfinite;
end
ps.name = 'mapminmax';
ps.xrows = size(x,1);
ps.xmax = max(x,[],2);
ps.xmin = min(x,[],2);
ps.xrange = ps.xmax-ps.xmin;
ps.yrows = ps.xrows;
ps.ymax = fp.ymax;
ps.ymin = fp.ymin;
ps.yrange = ps.ymax-ps.ymin;
if any(ps.xmax == ps.xmin)
    warning('NNET:Processing','Use REMOVECONSTANTROWS to remove rows with constant values.');
end
y = apply_process(x,ps);

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% Apply Process
function y = apply_process(x,ps)
Q = size(x,2);
oneQ = ones(1,Q);
rangex = ps.xmax-ps.xmin;
rangex(rangex==0) = 1;   % Avoid divisions by zero
rangey = ps.ymax-ps.ymin;
y = rangey * (x-ps.xmin(:,oneQ))./rangex(:,oneQ) + ps.ymin;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% Reverse Process
function x = reverse_process(y,ps)
Q = size(y,2);
oneQ = ones(1,Q);
rangex = ps.xmax-ps.xmin;
rangey = ps.ymax-ps.ymin;
x = rangex(:,oneQ) .* (y-ps.ymin)*(1/rangey) + ps.xmin(:,oneQ);

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% Derivative of Y w/respect to X
function dy_dx = derivative(x,y,ps)
Q = size(x,2);
rangex = ps.xmax-ps.xmin;
rangey = ps.ymax-ps.ymin;
d = diag(rangey ./ rangex);
dy_dx = d(:,:,ones(1,Q));

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% Derivative of X w/respect to Y
function dx_dy = reverse_derivative(x,y,ps)
Q = size(x,2);
rangex = ps.xmax-ps.xmin;
rangey = ps.ymax-ps.ymin;
d = diag(rangex ./ rangey);
dx_dy = d(:,:,ones(1,Q));

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
function p = simulink_params(ps)
p = ...
  { ...
  'xmin',mat2str(ps.xmin);
  'xmax',mat2str(ps.xmax);
  'ymin',mat2str(ps.ymin);
  'ymax',mat2str(ps.ymax);
  };

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
function p = simulink_reverse_params(ps)
p = ...
  { ...
  'xmin',mat2str(ps.xmin);
  'xmax',mat2str(ps.xmax);
  'ymin',mat2str(ps.ymin);
  'ymax',mat2str(ps.ymax);
  };
```
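The mapminmax listing relies on the boiler_process script, which is not included, so it cannot be run as shown. The formula from its Algorithm section, y = (ymax-ymin)*(x-xmin)/(xmax-xmin) + ymin, and its inverse can be sketched in base MATLAB as follows. This is an illustrative re-implementation, not MathWorks' code; it reuses the help text's example matrices and the same divide-by-zero guard as apply_process.

```matlab
% Row-wise min-max scaling to [ymin, ymax] and its inverse (base MATLAB only)
x1 = [1 2 4; 1 1 1; 3 2 2; 0 0 0];    % example matrix from the help text
ymin = -1;  ymax = 1;

xmin = min(x1, [], 2);                 % per-row minimum of the fitting data
xmax = max(x1, [], 2);                 % per-row maximum of the fitting data
rangex = xmax - xmin;
rangex(rangex == 0) = 1;               % same divide-by-zero guard as apply_process

% y = (ymax-ymin)*(x-xmin)/range + ymin, row by row
fwd = @(x) (ymax - ymin) * bsxfun(@rdivide, bsxfun(@minus, x, xmin), rangex) + ymin;
% x = (y-ymin)*range/(ymax-ymin) + xmin, the inverse mapping
rev = @(yy) bsxfun(@plus, bsxfun(@times, (yy - ymin) / (ymax - ymin), rangex), xmin);

y1 = fwd(x1);                          % constant rows map to ymin
x2 = [5 2 3; 1 1 1; 6 7 3; 0 0 0];
y2 = fwd(x2);                          % reuse the settings fitted on x1
x1_again = rev(y1);                    % recovers x1 up to rounding
```

y2 contains values outside [-1, 1] (for example 5/3 in its first row): new data are scaled with the minima and maxima of the fitting data, so points beyond that range extrapolate linearly.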

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

```matlab
function [model,Yt] = prelssvm(model,Xt,Yt)
% Preprocessing of the LS-SVM
%
% These functions should only be called by trainlssvm or by
% simlssvm. At first the preprocessing assigns a label to each in-
% and output component (c for continuous, a for categorical or b
% for binary variables). According to this label each dimension is rescaled:
%
%     * continuous: zero mean and unit variance
%     * categorical: no preprocessing
%     * binary: labels -1 and +1
%
% Full syntax (only using the object oriented interface):
%
% >> model       = prelssvm(model)
% >> Xp          = prelssvm(model, Xt)
% >> [empty, Yp] = prelssvm(model, [], Yt)
% >> [Xp, Yp]    = prelssvm(model, Xt, Yt)
%
% Outputs
%   model : Preprocessed object oriented representation of the LS-SVM model
%   Xp    : Nt x d matrix with the preprocessed inputs of the test data
%   Yp    : Nt x d matrix with the preprocessed outputs of the test data
% Inputs
%   model : Object oriented representation of the LS-SVM model
%   Xt    : Nt x d matrix with the inputs of the test data to preprocess
%   Yt    : Nt x d matrix with the outputs of the test data to preprocess
%
% See also:
%   postlssvm, trainlssvm

% Copyright (c) 2011, KULeuven-ESAT-SCD, License & help @ http://www.esat.kuleuven.be/sista/lssvmlab

if model.preprocess(1) ~= 'p'   % no preprocessing
    if nargin >= 2, model = Xt; end
    return
end

%
% what to do (preprocess(1) is 'p' from here on)
%
if ~isfield(model,'prestatus') || isempty(model.prestatus) || model.prestatus(1) == 'c'
    model.prestatus = 'unschemed';
end

if nargin == 1   % only model rescaling
    %
    % if UNSCHEMED, redefine a rescaling
    %
    if model.prestatus(1) == 'u'   % 'unschemed'
        ffx = [];
        for i = 1:model.x_dim
            if isfield(model,'pre_xscheme') && numel(model.pre_xscheme) >= i
                ffx = [ffx model.pre_xscheme(i)];                  % keep existing label
            else
                ffx = [ffx signal_type(model.xtrain(:,i),inf)];    % infer label
            end
        end
        model.pre_xscheme = ffx;
        ff = [];
        for i = 1:model.y_dim
            if isfield(model,'pre_yscheme') && numel(model.pre_yscheme) >= i
                ff = [ff model.pre_yscheme(i)];
            else
                ff = [ff signal_type(model.ytrain(:,i),model.type)];
            end
        end
        model.pre_yscheme = ff;
        model.prestatus = 'schemed';
    end
    %
    % execute rescaling as defined if not yet CODED
    %
    if model.prestatus(1) == 's'   % 'schemed'
        model = premodel(model);
        model.prestatus = 'ok';
    end
else
    %
    % rescaling of the inputs to simulate
    %
    if model.prestatus(1) == 'o'   % 'ok'
        if nargin < 3, Yt = []; end
        [model,Yt] = premodel(model,Xt,Yt);
    else
        warning('model rescaling inconsistent..redo ''model=prelssvm(model);''..');
    end
end

function [type,ss] = signal_type(signal,type)
%
% determine the type of the signal,
% binary classifier ('b'), categorical classifier ('a'), or continuous
% signal ('c')
%
ss = sort(signal);
dif = sum(ss(2:end) ~= ss(1:end-1)) + 1;                 % number of distinct values
if dif == 2                                              % binary
    type = 'b';
elseif dif < sqrt(length(signal)) || type(1) == 'c'      % categorical
    type = 'a';
else                                                     % continuous
    type = 'c';
end

%
% effective rescaling
%
function [model,Yt] = premodel(model,Xt,Yt)
if nargin == 1
    % rescale the training data and store the scaling statistics
    for i = 1:model.x_dim
        if model.pre_xscheme(i) == 'c'          % CONTINUOUS VARIABLE
            model.pre_xmean(i) = mean(model.xtrain(:,i));
            model.pre_xstd(i)  = std(model.xtrain(:,i));
            model.xtrain(:,i)  = pre_zmuv(model.xtrain(:,i),model.pre_xmean(i),model.pre_xstd(i));
        elseif model.pre_xscheme(i) == 'a'      % CATEGORICAL VARIABLE
            model.pre_xmean(i) = 0;
            model.pre_xstd(i)  = 0;
            model.xtrain(:,i)  = pre_cat(model.xtrain(:,i),model.pre_xmean(i),model.pre_xstd(i));
        elseif model.pre_xscheme(i) == 'b'      % BINARY VARIABLE (min/max kept in mean/std fields)
            model.pre_xmean(i) = min(model.xtrain(:,i));
            model.pre_xstd(i)  = max(model.xtrain(:,i));
            model.xtrain(:,i)  = pre_bin(model.xtrain(:,i),model.pre_xmean(i),model.pre_xstd(i));
        end
    end
    for i = 1:model.y_dim
        if model.pre_yscheme(i) == 'c'          % CONTINUOUS VARIABLE
            model.pre_ymean(i) = mean(model.ytrain(:,i),1);
            model.pre_ystd(i)  = std(model.ytrain(:,i),1);   % normalized by N
            model.ytrain(:,i)  = pre_zmuv(model.ytrain(:,i),model.pre_ymean(i),model.pre_ystd(i));
        elseif model.pre_yscheme(i) == 'a'      % CATEGORICAL VARIABLE
            model.pre_ymean(i) = 0;
            model.pre_ystd(i)  = 0;
            model.ytrain(:,i)  = pre_cat(model.ytrain(:,i),model.pre_ymean(i),model.pre_ystd(i));
        elseif model.pre_yscheme(i) == 'b'      % BINARY VARIABLE
            model.pre_ymean(i) = min(model.ytrain(:,i));
            model.pre_ystd(i)  = max(model.ytrain(:,i));
            model.ytrain(:,i)  = pre_bin(model.ytrain(:,i),model.pre_ymean(i),model.pre_ystd(i));
        end
    end
else   % nargin > 1: rescale test data Xt (and Yt) with the stored statistics
    if ~isempty(Xt)
        if size(Xt,2) ~= model.x_dim
            warning('dimensions of Xt not compatible with dimensions of support vectors...');
        end
        for i = 1:model.x_dim
            if model.pre_xscheme(i) == 'c'          % CONTINUOUS VARIABLE
                Xt(:,i) = pre_zmuv(Xt(:,i),model.pre_xmean(i),model.pre_xstd(i));
            elseif model.pre_xscheme(i) == 'a'      % CATEGORICAL VARIABLE
                Xt(:,i) = pre_cat(Xt(:,i),model.pre_xmean(i),model.pre_xstd(i));
            elseif model.pre_xscheme(i) == 'b'      % BINARY VARIABLE
                Xt(:,i) = pre_bin(Xt(:,i),model.pre_xmean(i),model.pre_xstd(i));
            end
        end
    end
    if nargin > 2 && ~isempty(Yt)
        if size(Yt,2) ~= model.y_dim
            warning('dimensions of Yt not compatible with dimensions of training output...');
        end
        for i = 1:model.y_dim
            if model.pre_yscheme(i) == 'c'          % CONTINUOUS VARIABLE
                Yt(:,i) = pre_zmuv(Yt(:,i),model.pre_ymean(i),model.pre_ystd(i));
            elseif model.pre_yscheme(i) == 'a'      % CATEGORICAL VARIABLE
                Yt(:,i) = pre_cat(Yt(:,i),model.pre_ymean(i),model.pre_ystd(i));
            elseif model.pre_yscheme(i) == 'b'      % BINARY VARIABLE
                Yt(:,i) = pre_bin(Yt(:,i),model.pre_ymean(i),model.pre_ystd(i));
            end
        end
    end
    % assign output: the first output carries the rescaled Xt
    model = Xt;
end

function X = pre_zmuv(X,mu,sd)
%
% preprocessing a continuous signal ('c'): rescaling to zero mean and unit
% variance; sd is the standard deviation
%
X = (X-mu)./sd;

function X = pre_cat(X,mu,range)
%
% preprocessing a categorical signal ('a'): values are left unchanged
%

function X = pre_bin(X,lo,hi)
%
% preprocessing a binary signal ('b'): the lower label maps to -1 and the
% other label to +1 (skipped if X contains NaN)
%
if ~any(isnan(X))   % --> OneVsOne encoding
    n = (X == lo);
    p = ~n;
    X = -1.*n + p;
end
```
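premodel standardizes continuous columns with the training mean and standard deviation, maps binary columns to -1/+1, and then reuses the stored statistics when test data are rescaled. The script below is a small, self-contained illustration of those rules on hypothetical data (one continuous and one binary input column). It is not a call into LS-SVMlab, and the reverse step at the end only shows how a standardized prediction would be mapped back to the original scale.

```matlab
% Hypothetical toy data: column 1 continuous, column 2 binary {0,1}
Xtr = [1.0 0; 2.0 1; 3.0 0; 4.0 1; 5.0 1];
Xte = [2.5 1; 6.0 0];

% Number of distinct values per column (the quantity signal_type compares)
nDistinct = arrayfun(@(j) numel(unique(Xtr(:, j))), 1:size(Xtr, 2));

% Continuous column: z-score with TRAINING mean/std, reused on test data
mu = mean(Xtr(:, 1));
sd = std(Xtr(:, 1));                   % N-1 normalization, as for inputs in premodel
Ztr = (Xtr(:, 1) - mu) ./ sd;
Zte = (Xte(:, 1) - mu) ./ sd;

% Binary column: lower training label -> -1, the other label -> +1
lo  = min(Xtr(:, 2));
Btr = -1 .* (Xtr(:, 2) == lo) + (Xtr(:, 2) ~= lo);
Bte = -1 .* (Xte(:, 2) == lo) + (Xte(:, 2) ~= lo);

% Undo the z-score on a (hypothetical) standardized prediction
Ypred_std = [0.5; -1.0];
Ypred = Ypred_std .* sd + mu;
```

Reusing the training statistics for the test set keeps both sets on the same scale; recomputing the mean and standard deviation on test data would leak information and shift the inputs the model sees.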

3 Simulation Results

4 References

[1] Liu Lin. Research and Application of Short-Term Traffic Flow Prediction Based on LSSVM [D]. Southwest Jiaotong University, 2011.


Part of the theory is quoted from online literature; in case of infringement, please contact the blogger for removal.