Conditional Random Fields (CRF): From Formulas to Code

Preface

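I won't reproduce the basic theoretical derivations here; plenty of excellent write-ups already exist online. What I noticed is that articles tend to fall into two camps: those that explain the theory very well but never connect it to real code, and those that focus on practice, mostly on how to use a library, mentioning the formulas only in passing on the assumption that the reader already knows them by heart. So I'd like to build a bridge and walk through CRF with the formulas and the code side by side. If you are not yet comfortable with the theory, see the reference links at the end of this article, which explain it in great detail. The code used here comes from the official PyTorch tutorial: https://pytorch.org/tutorials/beginner/nlp/advanced_tutorial.html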

CRF Implementation Details

- Generative and discriminative models

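Put simply, a generative model uses the dataset to build a model of the joint probability distribution $P(X,Y)$; examples are Bayesian models and HMM. A discriminative model uses the correspondence between X and Y in the dataset to build a hyperplane (a decision boundary) for classification; its whole purpose is to classify, and examples are SVM and neural networks. Remember, and remember well: HMM is a generative model, whereas CRF is a discriminative model! For a more detailed explanation, see section 2.2 of https://zhuanlan.zhihu.com/p/33397147.

- Probability computation on an undirected graph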

Given an undirected graph, usually a Markov network, $P(Y)$ can be factorized into a product of potential functions, one for each maximal clique of the graph. These potentials are non-negative scores rather than normalized probabilities, which is why a normalization factor is needed. For example, take a graph that decomposes into the two maximal cliques $(x_1, x_3, x_4)$ and $(x_2, x_3, x_4)$; then:

$$P(Y)=\frac{1}{Z(x)}\prod_{c=1}^{2}\psi_c(c)=\frac{1}{Z(x)}\psi_1(x_1, x_3, x_4)\cdot\psi_2(x_2, x_3, x_4)$$

where $Z(x)=\sum_{Y}\prod_{c}\psi_c(c)$ is the normalization factor; compare it with the denominator of the softmax formula.
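To make the factorization concrete, here is a minimal sketch that evaluates it by brute force for four binary variables. The two potential functions are made-up examples (any non-negative function would do); they are assumptions for illustration and do not come from the tutorial.

```python
import itertools

# Hypothetical potentials over binary variables; chosen only to be positive.
def psi_1(x1, x3, x4):
    return 1.0 + x1 + 2.0 * x3 * x4

def psi_2(x2, x3, x4):
    return 2.0 - x2 + x3 + x4

def unnormalized(y):
    """Product of the clique potentials for one joint assignment."""
    x1, x2, x3, x4 = y
    return psi_1(x1, x3, x4) * psi_2(x2, x3, x4)

# Z sums the product of potentials over every joint assignment.
assignments = list(itertools.product([0, 1], repeat=4))
Z = sum(unnormalized(y) for y in assignments)

def prob(y):
    return unnormalized(y) / Z

# After dividing by Z the scores form a proper distribution.
total = sum(prob(y) for y in assignments)
```

Enumerating all assignments is only feasible for tiny graphs, which is why the forward algorithm is needed later for sequences.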

- Definition of $\psi_c(c)$

$\psi_c(c)$ is the potential function over the random variables in a maximal clique $C$, usually chosen to be an exponential function:

$$\psi_c(Y_c)=e^{-E(Y_c)}=e^{\sum_{k}\lambda_k f_k(c,y,x)}$$

Never mind for now what $\lambda_k f_k(c,y,x)$ actually is; substitute this expression into the formula for $P(Y)$ above:

$$P(Y)=\frac{1}{Z(x)}\prod_{c}e^{\sum_{k}\lambda_k f_k(c,y,x)}=\frac{1}{Z(x)}e^{\sum_c\sum_{k}\lambda_k f_k(c,y,x)}$$
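The step from a product of exponential potentials to a single exponential of a sum can be checked numerically. The weights and feature values below are hypothetical numbers chosen only for illustration.

```python
import math

# Hypothetical weights lambda_k and feature values f_k(c, y, x) for three cliques.
weights = [0.5, -1.2, 2.0]
features_per_clique = [
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 1.0],
    [1.0, 1.0, 0.0],
]

def clique_score(features):
    """sum_k lambda_k * f_k for one clique."""
    return sum(w * f for w, f in zip(weights, features))

# Left-hand side: multiply the exponential potentials of all cliques.
product_of_potentials = math.prod(
    math.exp(clique_score(f)) for f in features_per_clique
)
# Right-hand side: exponentiate the sum of all clique scores once.
exp_of_total_score = math.exp(sum(clique_score(f) for f in features_per_clique))
```

Both quantities agree up to floating-point rounding, so the model only ever needs the summed score.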

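This is already the formula for CRF. How to actually compute it comes later; first let's see where it comes from. First, why does it look like this? Because the whole point is to determine the sequence $Y$ given the sequence $X$, that is, we are after $P(Y|X)$: a discriminative model! Second, why did the product sign disappear in the final formula? Because $\psi_c(c)$ is defined as an exponential function, so the product of potentials turns into a sum in the exponent!

- How to maximize $P(Y)$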

Let's keep ignoring how that term is computed and get the overall flow straight before diving into the details.

As taught in probability class, we can use maximum likelihood estimation to fit the parameters of the distribution, so our goal is to maximize $\log P(Y)$:

$$\log P(Y)=\log\frac{1}{Z(x)}e^{\sum_c\sum_{k}\lambda_k f_k(c,y,x)}=\sum_c\sum_{k}\lambda_k f_k(c,y,x)-\log Z(x)$$

Maximizing $\log P(Y)$ is equivalent to minimizing $-\log P(Y)$, so the objective becomes minimizing $-\log P(Y)$:

$$-\log P(Y)=\log Z(x)-\sum_c\sum_{k}\lambda_k f_k(c,y,x)$$

In the code, look at the last line of the neg_log_likelihood() function:

    def neg_log_likelihood(self, sentence, tags):
        feats = self._get_lstm_features(sentence)
        forward_score = self._forward_alg(feats)
        gold_score = self._score_sentence(feats, tags)
        return forward_score - gold_score

forward_score is our $\log Z(x)$, and gold_score is the remaining term $\sum_c\sum_{k}\lambda_k f_k(c,y,x)$, evaluated on the gold tag sequence.
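As a sanity check on this loss, the sketch below computes $\log Z(x)$ by enumerating every tag path of a tiny sentence. The scores are hypothetical, START/STOP tags are left out for brevity, and transitions is indexed as [previous][current]; all of these are simplifying assumptions, not the tutorial's setup.

```python
import itertools
import math

# Hypothetical scores for a 3-word sentence and 2 tags.
emissions = [[1.0, 0.2], [0.3, 0.9], [0.5, 0.4]]  # emissions[t][tag]
transitions = [[0.6, -0.4], [-0.1, 0.8]]          # transitions[prev][cur]

def path_score(tags):
    """Unnormalized log-score of one tag path (the gold_score term)."""
    score = emissions[0][tags[0]]
    for t in range(1, len(tags)):
        score += transitions[tags[t - 1]][tags[t]] + emissions[t][tags[t]]
    return score

# log Z(x): log of the summed exponentiated scores of all paths.
all_paths = list(itertools.product(range(2), repeat=3))
log_z = math.log(sum(math.exp(path_score(p)) for p in all_paths))

def neg_log_likelihood(tags):
    return log_z - path_score(tags)

gold = (0, 1, 1)
nll = neg_log_likelihood(gold)
# exp(-NLL) is P(Y | X), so it must sum to 1 over all paths.
total_prob = sum(math.exp(-neg_log_likelihood(p)) for p in all_paths)
```

The loss is always positive because the gold path is only one of the paths counted in $Z(x)$.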

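- Working out the details

So far we have treated $\sum_c\sum_{k}\lambda_k f_k(c,y,x)$ as a black box and refused to worry about it, but the time has come to face it (in the end Zhuang Zhou dreamed of the butterfly; you are a blessing and also a trial).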

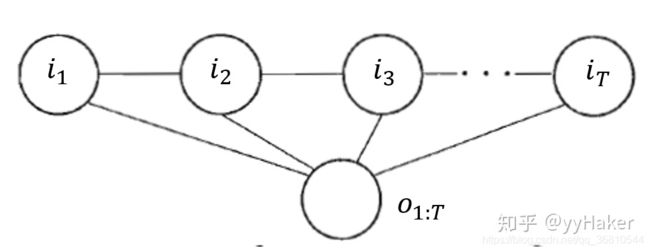

First, let's look at what a CRF looks like (time to shamelessly borrow some figures).

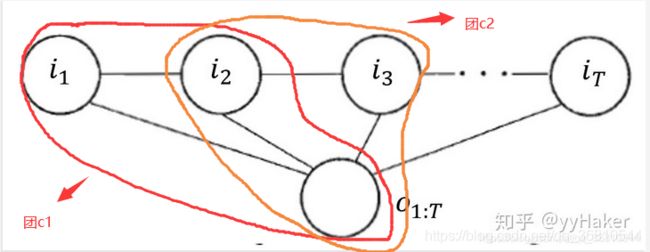

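Remember the probability computation on an undirected graph from above? We decompose the graph into maximal cliques and multiply their potentials. OK, so let's decompose the graph above into maximal cliques. How? The model was designed to capture the influence of $i_{k-1}$ on $i_k$ and the influence of $X$ on the label sequence, so we decompose the graph into cliques of the form $(i_{k-1}, i_k, X)$, where $i_k$ is the state (label) at position $k$. For example, in BIO tagging the states take values in {B, I, O, START, STOP}, so each $i_k$ can take one of 5 state values.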

Focus on a single clique $C_i$ and treat $\sum_c\sum_{k}\lambda_k f_k(c,y,x)$ as a scoring function: the unnormalized score of seeing $(i_{k-1}, i_k)$ given the sequence $X$. This score depends on two things: the score of transitioning from $i_{k-1}$ to $i_k$ given $X$ (a linear-chain CRF assumes each label is influenced only by its neighbors), and the score of $i_k$ occurring given $X$. Both are unnormalized scores rather than true probabilities. Define:

$g(i_{k-1},i_k;X)$ as the score of transitioning from $i_{k-1}$ to $i_k$ given the sequence $X$;

$h(i_k;X)$ as the score of $i_k$ occurring given the sequence $X$.

We use an LSTM or a CNN to model the mapping from $X$ to $i_k$, so the LSTM output stands in for $h(i_k;X)$. Since a deep model already captures the relationship between each $i_k$ and $X$ fairly well, we assume the transition score from $i_{k-1}$ to $i_k$ does not depend on $X$, so $g(i_{k-1},i_k;X)=g(i_{k-1},i_k)$; this transition score is the parameter we need to learn. With one clique per position $k$, the sum over cliques becomes a sum over positions, and $\sum_c\sum_{k}\lambda_k f_k(c,y,x)$ becomes $\sum_{k}\big(h(i_k;X)+g(i_{k-1},i_k)\big)$.
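The path score $\sum_k\big(h(i_k;X)+g(i_{k-1},i_k)\big)$ can be sketched in plain Python as follows. This is a simplified stand-in for the tutorial's _score_sentence(), not a copy of it: the numbers are hypothetical, and transitions[to][from] is assumed to follow the tutorial's indexing convention.

```python
TAGS = {"B": 0, "I": 1, "O": 2, "START": 3, "STOP": 4}

def score_sentence(emissions, transitions, tags):
    """Sum h(i_k; X) + g(i_{k-1}, i_k) along one tag path, with START/STOP."""
    score = 0.0
    prev = TAGS["START"]
    for t, cur in enumerate(tags):
        # transitions[cur][prev]: score of moving from prev to cur.
        score += transitions[cur][prev] + emissions[t][cur]
        prev = cur
    # Final transition from the last real tag into STOP.
    score += transitions[TAGS["STOP"]][prev]
    return score

# Hypothetical numbers: every transition scores 0.5,
# and the emissions favor B, I, O at steps 0, 1, 2.
transitions = [[0.5] * 5 for _ in range(5)]
emissions = [
    [2.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 3.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
]
gold = [TAGS["B"], TAGS["I"], TAGS["O"]]
gold_score = score_sentence(emissions, transitions, gold)
```

Here the path uses four transitions (START to B, B to I, I to O, O to STOP) and three emissions, so the score is 4 × 0.5 + (2 + 3 + 1).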

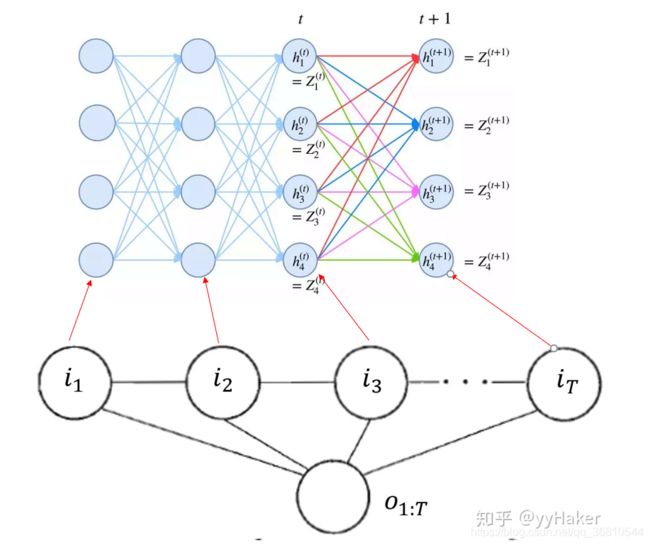

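Here is one more borrowed figure to illustrate the computation.

First, note how the two figures above relate: in the figures each $i_k$ can take 4 states, whereas in the code there are 5 states.

Mapping the figure onto the formulas with 4 states, every step $t$ requires the scores $h_{t+1}(y_k|X)$ of each state $y_k$ at the next step.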

$Z_i^{t}$ denotes the sum of exponentiated scores over all paths that end in state (label) $y_i$ at time $t$, i.e. the forward variable of the forward algorithm. Let's see where $Z_i^{t+1}$ comes from: $Z_1^{t+1}$ is (the sum over all red edges leaving time $t$ in the figure) $\times$ (the value of state 1 at time $t+1$):

$$Z_1^{t+1}=Z_1^{t}\cdot G_{11}\cdot H_{t+1}(1|X)+Z_2^{t}\cdot G_{21}\cdot H_{t+1}(1|X)+Z_3^{t}\cdot G_{31}\cdot H_{t+1}(1|X)+Z_4^{t}\cdot G_{41}\cdot H_{t+1}(1|X)$$

$$Z_2^{t+1}=Z_1^{t}\cdot G_{12}\cdot H_{t+1}(2|X)+Z_2^{t}\cdot G_{22}\cdot H_{t+1}(2|X)+Z_3^{t}\cdot G_{32}\cdot H_{t+1}(2|X)+Z_4^{t}\cdot G_{42}\cdot H_{t+1}(2|X)$$

$$\vdots$$

$$Z_i^{t+1}=Z_1^{t}\cdot G_{1i}\cdot H_{t+1}(i|X)+Z_2^{t}\cdot G_{2i}\cdot H_{t+1}(i|X)+Z_3^{t}\cdot G_{3i}\cdot H_{t+1}(i|X)+Z_4^{t}\cdot G_{4i}\cdot H_{t+1}(i|X)=\sum_{j=1}^{4}Z_j^{t}\cdot G_{ji}\cdot H_{t+1}(i|X)$$

where $G$ is the matrix obtained by exponentiating each element of $g(y_i,y_j)$:

$$G_{ij}=e^{g(y_i,y_j)}$$

Likewise, $H_{t+1}(y_{k}|X)=e^{h_{t+1}(y_{k}|X)}$.
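A small numerical sketch of this recursion, written directly with $G$ and $H$ rather than in log space: each new forward value sums $Z_j^{t}\cdot G_{ji}$ over the previous states $j$ and multiplies by $H_{t+1}(i|X)$. The scores g and h are hypothetical, three states are used, and START/STOP are omitted; these are assumptions made to keep the example short.

```python
import itertools
import math

# Hypothetical scores: g[j][i] is the transition score from state j to state i,
# h[t][i] is the score of state i at step t.
g = [[0.6, -0.4, 0.1], [-0.1, 0.8, 0.0], [0.3, 0.2, -0.5]]
h = [[1.0, 0.2, -0.3], [0.3, 0.9, 0.1], [0.5, 0.4, 0.0]]
n_states = 3

G = [[math.exp(v) for v in row] for row in g]
H = [[math.exp(v) for v in row] for row in h]

# Initialization: at the first step only the emission term exists.
Z = H[0][:]
for t in range(1, len(H)):
    # Z_i^{t+1} = sum_j Z_j^t * G_ji * H_{t+1}(i | X)
    Z = [
        sum(Z[j] * G[j][i] for j in range(n_states)) * H[t][i]
        for i in range(n_states)
    ]
partition = sum(Z)

def path_weight(path):
    """Exponentiated score of one complete path."""
    w = H[0][path[0]]
    for t in range(1, len(path)):
        w *= G[path[t - 1]][path[t]] * H[t][path[t]]
    return w

# Brute force over all 3^3 paths for comparison.
brute_force = sum(
    path_weight(p) for p in itertools.product(range(n_states), repeat=len(H))
)
```

The recursion touches each (previous state, next state) pair once per step, while the brute-force sum grows exponentially with the sentence length.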

In the code, this corresponds to:

    def _forward_alg(self, feats):
        # Do the forward algorithm to compute the partition function
        init_alphas = torch.full((1, self.tagset_size), -10000.)
        # START_TAG has all of the score.
        init_alphas[0][self.tag_to_ix[START_TAG]] = 0.

        # Wrap in a variable so that we will get automatic backprop
        forward_var = init_alphas

        # Iterate through the sentence
        for feat in feats:
            alphas_t = []  # The forward tensors at this timestep
            for next_tag in range(self.tagset_size):
                # Score from the state (emission) feature function
                emit_score = feat[next_tag].view(1, -1).expand(1, self.tagset_size)
                # Score from the transition feature function
                trans_score = self.transitions[next_tag].view(1, -1)
                # Score of reaching next_tag from each state of the previous word,
                # so next_tag_var is an array of size tag_size
                next_tag_var = forward_var + trans_score + emit_score
                # The forward variable for this tag is log-sum-exp of all the
                # scores.
                alphas_t.append(log_sum_exp(next_tag_var).view(1))
            forward_var = torch.cat(alphas_t).view(1, -1)
        terminal_var = forward_var + self.transitions[self.tag_to_ix[STOP_TAG]]
        alpha = log_sum_exp(terminal_var)
        return alpha

A few notes:

- In the code above, all computation happens in $\log$ space, so every $\times$ in the formulas becomes $+$;

- The example has 5 states in total, [B, I, O, START, STOP], so self.transitions is a (5, 5) matrix;

- feats is the output of the BiLSTM. In the tutorial code it holds one sentence at a time, with shape (number of words, 5), so the loop "for feat in feats" walks over the time steps, i.e. the values of t in the figure above. For a sentence with 11 words, $t\in\{1,2,\dots,11\}$;

- emit_score is the output of $H()$ in our formulas, in log space. The code expands it each time so that the same value is added to every predecessor's term, matching the factor $H_{t+1}(i|X)$ that appears in every term of $Z_i^{t+1}=Z_1^{t}\cdot G_{1i}\cdot H_{t+1}(i|X)+Z_2^{t}\cdot G_{2i}\cdot H_{t+1}(i|X)+Z_3^{t}\cdot G_{3i}\cdot H_{t+1}(i|X)+Z_4^{t}\cdot G_{4i}\cdot H_{t+1}(i|X)$;

- trans_score is our state transition function $G()$, in log space. The code takes one row at a time, trans_score = self.transitions[next_tag].view(1, -1); entry j of that row is the score of moving from tag j to next_tag, i.e. $G_{1i},G_{2i},G_{3i},G_{4i}$ in the formula;

- next_tag_var = forward_var + trans_score + emit_score holds, in log space, the individual terms $Z_j^{t}\cdot G_{ji}\cdot H_{t+1}(i|X)$; applying log_sum_exp() to it gives $\log Z_i^{t+1}$, our forward variable;

- Once the $Z_i^{t}$ of the last step are known, the code adds the transition into STOP_TAG and applies log_sum_exp() once more to obtain the $\log Z(x)$ we need;
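The notes above can be checked end to end with a pure-Python sketch that mirrors the structure of _forward_alg() (without torch) and compares it with brute-force enumeration. The tag set (two real tags plus START and STOP), the scores, and the helper names are hypothetical; transitions[to][from] is assumed to follow the tutorial's convention, with -10000 standing in for forbidden moves.

```python
import itertools
import math

START, STOP = 2, 3  # tags 0 and 1 are the real labels
N = 4
NEG = -10000.0      # stands in for "impossible"

# transitions[to][from]; the column for STOP and the row for START are forbidden.
transitions = [
    [0.2, -0.3, 0.1, NEG],
    [0.4, 0.5, -0.2, NEG],
    [NEG, NEG, NEG, NEG],
    [0.3, -0.1, NEG, NEG],
]
# feats[t][tag]: hypothetical emission scores for a 3-word sentence.
feats = [[1.0, 0.2, 0.0, 0.0], [0.3, 0.9, 0.0, 0.0], [0.5, 0.4, 0.0, 0.0]]

def log_sum_exp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def forward_log_z(feats):
    forward_var = [NEG] * N
    forward_var[START] = 0.0  # START_TAG has all of the score
    for feat in feats:
        forward_var = [
            # forward_var + trans_score + emit_score, then log-sum-exp
            log_sum_exp([forward_var[prev] + transitions[nxt][prev] + feat[nxt]
                         for prev in range(N)])
            for nxt in range(N)
        ]
    return log_sum_exp([forward_var[prev] + transitions[STOP][prev]
                        for prev in range(N)])

def brute_force_log_z(feats):
    total = 0.0
    for path in itertools.product([0, 1], repeat=len(feats)):
        score, prev = 0.0, START
        for feat, cur in zip(feats, path):
            score += transitions[cur][prev] + feat[cur]
            prev = cur
        total += math.exp(score + transitions[STOP][prev])
    return math.log(total)

log_z_forward = forward_log_z(feats)
log_z_brute = brute_force_log_z(feats)
```

Paths that pass through START or STOP in the middle of the sentence pick up a -10000 penalty, so their contribution vanishes and the two results agree.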

- How to compute $\log\sum_{i=1}^{k}e^{a_i}$

$$\log\sum_{i=1}^{k}e^{a_i}=A+\log\sum_{i=1}^{k}e^{a_i-A},\quad\text{where } A=\max(a_1, a_2, \dots, a_k)$$

Subtracting the maximum before exponentiating keeps every exponent at or below 0, which avoids overflow. So log_sum_exp() looks like this:

    def log_sum_exp(vec):
        max_score = vec[0, argmax(vec)]
        max_score_broadcast = max_score.view(1, -1).expand(1, vec.size()[1])
        return max_score + torch.log(torch.sum(torch.exp(vec - max_score_broadcast)))
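To see why the maximum is subtracted, the sketch below compares a naive version with the stable one using only the standard library. The function names and input values are made up for illustration; the stable version uses the identity $\log\sum_i e^{a_i}=A+\log\sum_i e^{a_i-A}$ with $A$ the largest input.

```python
import math

def naive_log_sum_exp(values):
    # Exponentiates the raw inputs, so large inputs overflow.
    return math.log(sum(math.exp(v) for v in values))

def stable_log_sum_exp(values):
    a = max(values)
    # Every exponent is <= 0 after subtracting the maximum.
    return a + math.log(sum(math.exp(v - a) for v in values))

small = [0.1, 0.2, 0.3]
large = [1000.0, 1000.0]

# math.exp raises OverflowError for inputs this large.
try:
    naive_log_sum_exp(large)
    naive_overflowed = False
except OverflowError:
    naive_overflowed = True

stable_large = stable_log_sum_exp(large)
```

For small inputs both versions agree; for the large inputs only the stable one returns a value, namely 1000 plus the log of 2.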

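- Viterbi decoding

The official code implements a very standard Viterbi algorithm. Just remember the recursion, in which the per-step score $\sum_c\sum_{k}\lambda_k f_k(c,y,x)$ is the transition score plus the emission score:

$$\delta_{t+1}(i)=\max_{1\leqslant j\leqslant k}\big(\delta_t(j)+g(y_j,y_i)+h_{t+1}(y_i|X)\big)$$

For the theory, see Li Hang's book Statistical Learning Methods (统计学习方法); I won't reproduce it here.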

    def _viterbi_decode(self, feats):
        backpointers = []

        # Initialize the viterbi variables in log space
        init_vvars = torch.full((1, self.tagset_size), -10000.)
        init_vvars[0][self.tag_to_ix[START_TAG]] = 0

        # forward_var at step i holds the viterbi variables for step i-1
        forward_var = init_vvars
        for feat in feats:
            bptrs_t = []  # holds the backpointers for this step
            viterbivars_t = []  # holds the viterbi variables for this step

            for next_tag in range(self.tagset_size):
                # next_tag_var[i] holds the viterbi variable for tag i at the
                # previous step, plus the score of transitioning
                # from tag i to next_tag.
                # We don't include the emission scores here because the max
                # does not depend on them (we add them in below)
                next_tag_var = forward_var + self.transitions[next_tag]
                best_tag_id = argmax(next_tag_var)
                bptrs_t.append(best_tag_id)
                viterbivars_t.append(next_tag_var[0][best_tag_id].view(1))
            # Now add in the emission scores, and assign forward_var to the set
            # of viterbi variables we just computed
            forward_var = (torch.cat(viterbivars_t) + feat).view(1, -1)
            backpointers.append(bptrs_t)

        # Transition to STOP_TAG
        terminal_var = forward_var + self.transitions[self.tag_to_ix[STOP_TAG]]
        best_tag_id = argmax(terminal_var)
        path_score = terminal_var[0][best_tag_id]

        # Follow the back pointers to decode the best path.
        best_path = [best_tag_id]
        for bptrs_t in reversed(backpointers):
            best_tag_id = bptrs_t[best_tag_id]
            best_path.append(best_tag_id)
        # Pop off the start tag (we dont want to return that to the caller)
        start = best_path.pop()
        assert start == self.tag_to_ix[START_TAG]  # Sanity check
        best_path.reverse()
        return path_score, best_path
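For readers who want to trace the algorithm without torch, here is a minimal pure-Python sketch of the same idea: keep the best score per state, remember the best predecessor, then follow the backpointers. The scores are hypothetical, g[j][i] is indexed as [from][to], and START/STOP are omitted; these simplifications are assumptions and differ from the tutorial's code.

```python
import itertools
import math

# Hypothetical scores: g[j][i] = transition from j to i, h[t][i] = emission.
g = [[0.6, -0.4, 0.1], [-0.1, 0.8, 0.0], [0.3, 0.2, -0.5]]
h = [[1.0, 0.2, -0.3], [0.3, 0.9, 0.1], [0.5, 0.4, 0.0]]

def viterbi(g, h):
    n = len(g)
    delta = h[0][:]  # best score of any path ending in each state
    backpointers = []
    for t in range(1, len(h)):
        new_delta, bptrs = [], []
        for i in range(n):
            # The emission does not depend on j, so it is added after the max.
            best_j = max(range(n), key=lambda j: delta[j] + g[j][i])
            bptrs.append(best_j)
            new_delta.append(delta[best_j] + g[best_j][i] + h[t][i])
        delta = new_delta
        backpointers.append(bptrs)
    # Pick the best final state, then follow the backpointers.
    best_last = max(range(n), key=lambda i: delta[i])
    path = [best_last]
    for bptrs in reversed(backpointers):
        path.append(bptrs[path[-1]])
    path.reverse()
    return delta[best_last], path

def path_score(path):
    s = h[0][path[0]]
    for t in range(1, len(path)):
        s += g[path[t - 1]][path[t]] + h[t][path[t]]
    return s

best_score, best_path = viterbi(g, h)
# Brute-force check over all 3^3 paths.
brute_path = max(itertools.product(range(3), repeat=3), key=path_score)
```

The only difference from the forward algorithm is that the sum over predecessors is replaced by a max, with backpointers recording which predecessor won.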

References

[1] https://www.jiqizhixin.com/articles/2018-05-23-3

[2] https://zhuanlan.zhihu.com/p/71190655

[3] https://zhuanlan.zhihu.com/p/33397147

[4] https://pytorch.org/tutorials/beginner/nlp/advanced_tutorial.html

[5] https://blog.csdn.net/zycxnanwang/article/details/90385259