李宏毅机器学习笔记二

Contents

- 8 Keras使用

- 9-1 Tips for Training DNN

- 9-2 keras demo

- 11 Why Deep?

8 Keras使用

可以看出,batch_size 为1 和为10,参数更新速度是一样的(相同时间下,都用166s),这是由于vectorization带来的加速,并行运算。但随着batch_size 增大,速度会减慢。

mini-batch,参数更新有较大随机性,帮助跳出local minimum。如果不使用mini=batch,计算机可能会卡(内存不足),或则容易陷入local minimum。

上面的时间是下面的两倍,所以,当有GPU计算资源时,应该使用mini-batch,否则不会带来加速。

手写数字识别

import numpy as np

from keras.models import Sequential

from keras.layers import Dense, Activation

from keras.datasets import mnist

from keras.utils import np_utils

from keras.optimizers import SGD,Adam

import keras.callbacks

import time

a = time.time()

def load_data():

(x_train,y_train),(x_test,y_test)=mnist.load_data()

# print(x_train.shape,y_train.shape) #(60000, 28, 28) (60000,)

# print(x_test.shape, y_test.shape) # (10000, 28, 28) (10000,)

number = 10000

x_train = x_train[:number]

y_train = y_train[:number]

x_train = x_train.reshape(number,28*28)

# print(y_train.shape) # (10000,)

x_test = x_test.reshape(x_test.shape[0],28*28)

x_train = x_train.astype(np.float32)

x_test = x_test.astype(np.float32)

y_train = np_utils.to_categorical(y_train,10)

y_test = np_utils.to_categorical(y_test, 10)

# print(y_train.shape) # (10000, 10)

x_train /= 255

x_test /= 255

# print(x_train.shape,y_train.shape,x_test.shape,y_test.shape) # (10000, 784) (10000, 10) (10000, 784) (10000, 10)

return (x_train,y_train),(x_test,y_test)

(x_train,y_train),(x_test,y_test) = load_data()

model = Sequential()

# 全连接层

model.add(Dense(input_dim=28*28,units=500,activation='relu')) # 'relu' 'sigmoid'

model.add(Dense(units=500,activation='relu'))

model.add(Dense(units=10,activation='softmax'))

# model.compile(loss='categorical_crossentropy',optimizer='adam',metrics=['accuracy'])

# model.compile(loss='mse',optimizer='sgd',metrics=['accuracy'])

# model.compile(loss='categorical_crossentropy',optimizer=SGD(lr=0.01, momentum=0.9, nesterov=True),metrics=['accuracy'])

model.compile(loss='categorical_crossentropy',optimizer=Adam(),metrics=['accuracy'])

#

#可视化

log_filepath = 'E:\AAA\PYTHON\conda_test\keras_log'

tb_cb = keras.callbacks.TensorBoard(log_dir=log_filepath, write_images=1)

# 设置log的存储位置,将网络权值以图片格式保持在tensorboard中显示,设置每一个周期计算一次网络的权值,每层输出值的分布直方图

cbks = [tb_cb]

model.fit(x_train,y_train,batch_size=128,epochs=20, callbacks=cbks)

score = model.evaluate(x_test,y_test)

print('Total loss on Testing Set:',score[0])

print('Accuracy of Testing Set":',score[1])

# print(score)

# result = model.predict(x_test)

# 终端输入 tensorboard --logdir=E:\AAA\PYTHON\conda_test\keras_log

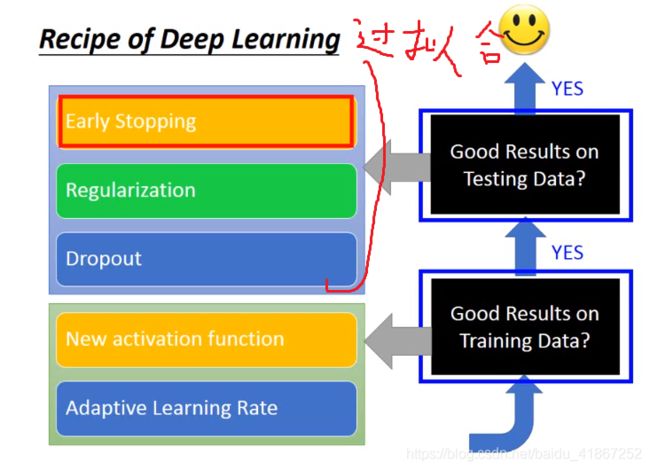

9-1 Tips for Training DNN

![]()

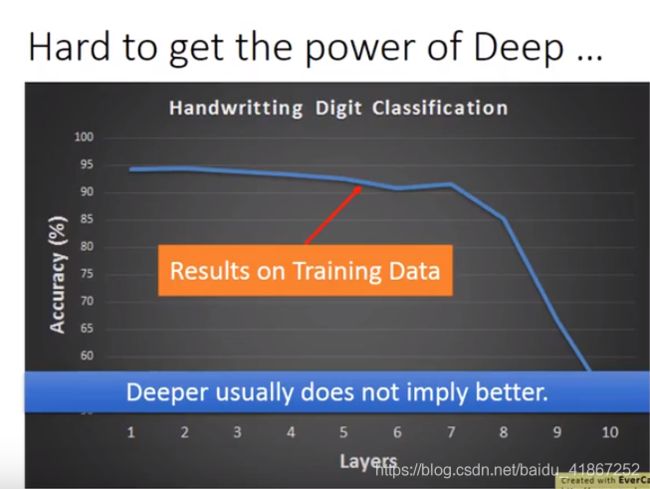

看到在testing data 上56层的误差比20层的大,并不能推出是过拟合导致的,还应该参考在training data上的表现。如图,可以看出并不是过拟合,因为56层在training data上的表现并不好,此时并不适合叫欠拟合,欠拟合是参数较少时出现。

上图是在 training data上的表现,层数较多,性能较差,此时并不是overfitting。

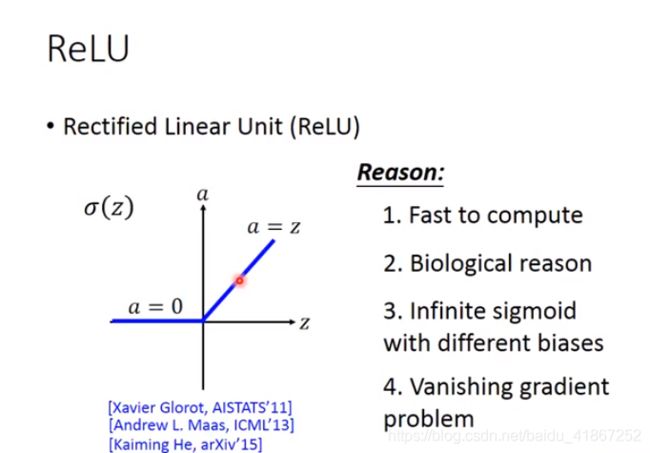

用sigmoid激活函数,容易发生 vanishing gradient。直观解释,给 w 一个增量,导致 C 有一个增量。随着层数增加,在sigmoid的作用下,w对C的影响慢慢减弱,导致梯度很小。

解决方法:

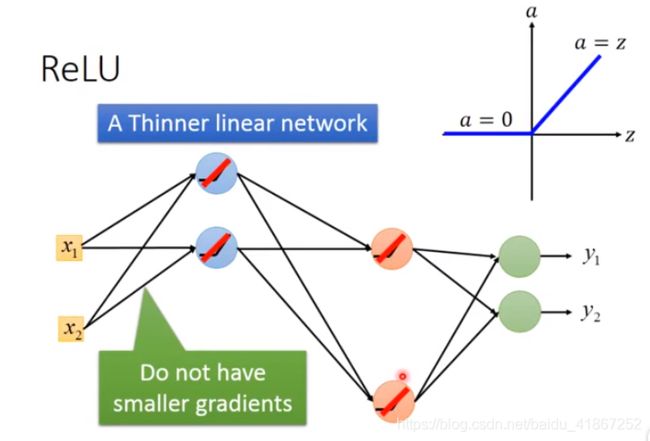

值为0的神经元,不起作用,可以删去不看。

小范围内是线性的,整体来看仍是非线性的

relu 的变形:

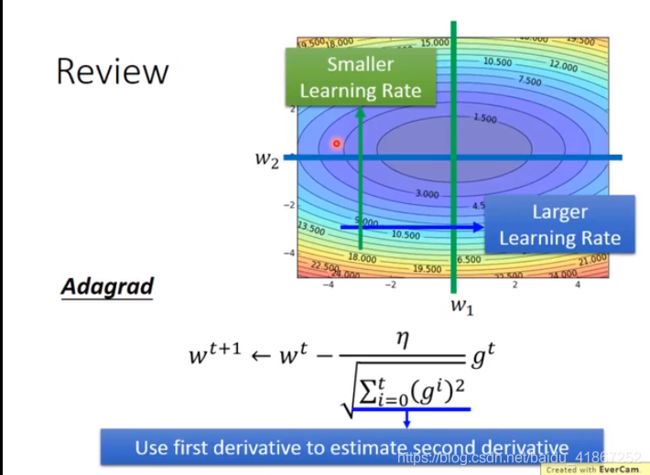

adagrad的进阶版

注:当 α \alpha α 较小时,表明比较当前的梯度所占权重较大

想象在真实世界中,小球在惯性的作用下不会陷入 local minimum ,plateau等区域。将这种特性加如梯度下降中。

v 3 = − λ 2 η ∇ L ( θ 0 ) − λ η ∇ L ( θ 1 ) − η ∇ L ( θ 2 ) v^3=-\lambda^2\eta\nabla L(\theta ^0)-\lambda\eta\nabla L(\theta ^1)-\eta\nabla L(\theta ^2) v3=−λ2η∇L(θ0)−λη∇L(θ1)−η∇L(θ2)

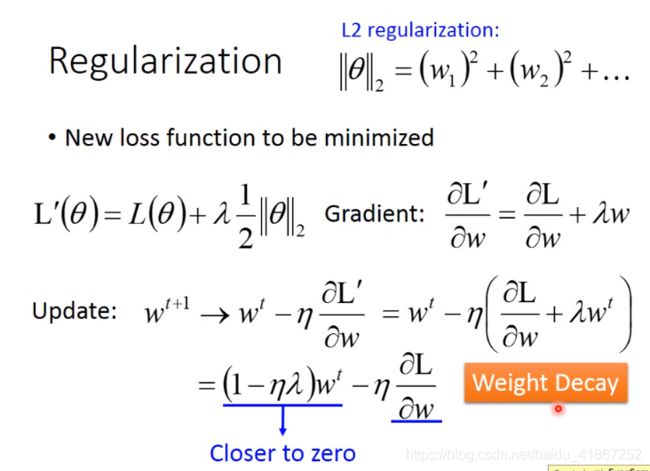

正则项可不加偏置,加上也可以。

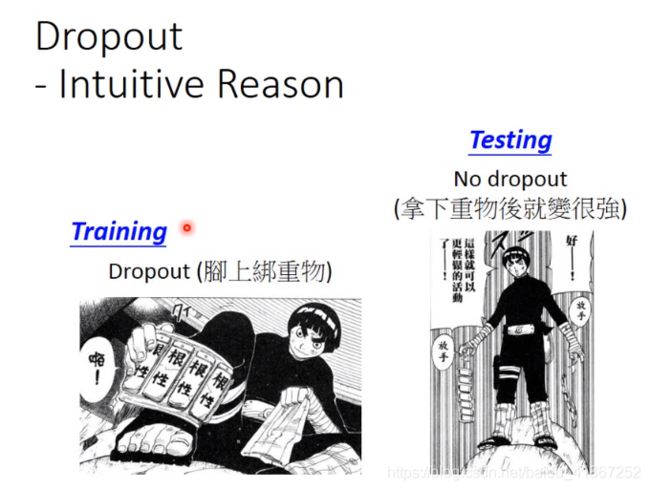

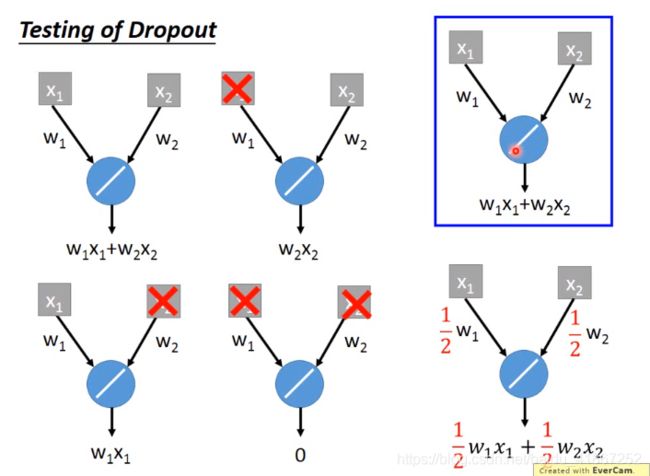

输出层不用dropout

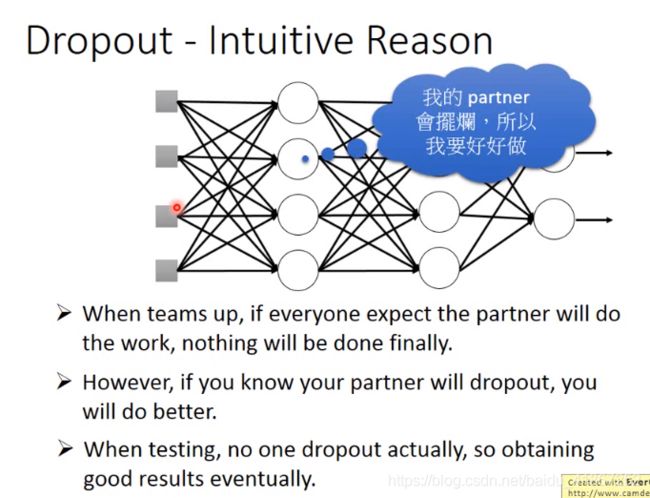

单个网络误差可能大,几个网络平均一下就能得到较好的结果。

ensemble 集成

![]()

举例说明:

上图激活函数换成非线性的,两者就不近似相等,但神奇的是dropout仍work。如果激活函数接近线性,dropout 的效果会更好。

9-2 keras demo

import numpy as np

from keras.models import Sequential

from keras.layers import Dense, Activation

from keras.datasets import mnist

from keras.utils import np_utils

from keras.optimizers import SGD, Adam

import keras.callbacks

import time

from keras.layers import Dropout

a = time.time()

def load_data():

(x_train, y_train), (x_test, y_test) = mnist.load_data()

# print(x_train.shape,y_train.shape) #(60000, 28, 28) (60000,)

# print(x_test.shape, y_test.shape) # (10000, 28, 28) (10000,)

number = 10000

x_train = x_train[:number]

y_train = y_train[:number]

x_train = x_train.reshape(number, 28 * 28)

# print(y_train.shape) # (10000,)

x_test = x_test.reshape(x_test.shape[0], 28 * 28)

x_train = x_train.astype(np.float32)

x_test = x_test.astype(np.float32)

y_train = np_utils.to_categorical(y_train, 10)

y_test = np_utils.to_categorical(y_test, 10)

# print(y_train.shape) # (10000, 10)

x_train /= 255

x_test /= 255

# 给 x_test 加一点噪声

x_test = np.random.normal(x_test)

# print(x_train.shape,y_train.shape,x_test.shape,y_test.shape) # (10000, 784) (10000, 10) (10000, 784) (10000, 10)

return (x_train, y_train), (x_test, y_test)

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

# 全连接层

model.add(Dense(input_dim=28 * 28, units=500, activation='relu')) # 'relu' 'sigmoid'

# dropout 加在隐藏层后

model.add(Dropout(0.7))

model.add(Dense(units=500, activation='relu'))

model.add(Dropout(0.7))

model.add(Dense(units=10, activation='softmax'))

# model.compile(loss='categorical_crossentropy',optimizer='adam',metrics=['accuracy'])

# model.compile(loss='mse',optimizer='sgd',metrics=['accuracy'])

# model.compile(loss='categorical_crossentropy',optimizer=SGD(lr=0.01, momentum=0.9, nesterov=True),metrics=['accuracy'])

model.compile(loss='categorical_crossentropy', optimizer=Adam(), metrics=['accuracy'])

#

# 可视化

log_filepath = 'E:\AAA\PYTHON\conda_test\keras_log'

tb_cb = keras.callbacks.TensorBoard(log_dir=log_filepath, write_images=1)

# 设置log的存储位置,将网络权值以图片格式保持在tensorboard中显示,设置每一个周期计算一次网络的权值,每层输出值的分布直方图

cbks = [tb_cb]

model.fit(x_train, y_train, batch_size=128, epochs=20, callbacks=cbks)

score = model.evaluate(x_test, y_test,batch_size=10000)

print('Total loss on Testing Set:', score[0])

print('Accuracy of Testing Set":', score[1])

# print(score)

# score = model.evaluate(x_train, y_train,batch_size=10000)

# print('Total loss on Training Set:', score[0])

# print('Accuracy of Training Set":', score[1])

# print(score)

# result = model.predict(x_test)

# 终端输入 tensorboard --logdir=E:\AAA\PYTHON\conda_test\keras_log

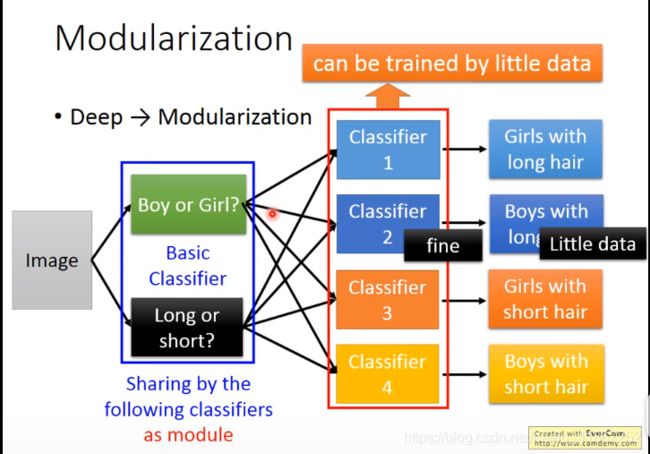

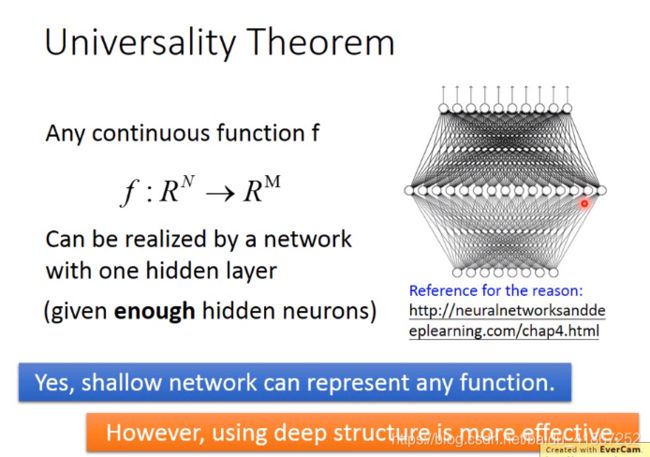

11 Why Deep?

长发男数据太少

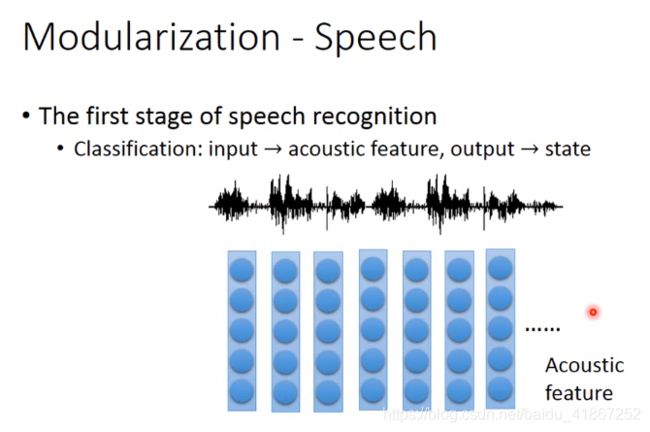

phoneme 音位

phone 音素

acoustic feature 声学特征

![]()

而GMM(高斯混合模型),每个state 都有一个高斯分布

manner of articulation 发音方式

analogy类比

DNN与逻辑电路做类比

多叠几层后再剪,比较容易;直接剪第三张难度太大。

仅一层网络,无法将上面两类分开。加一隐藏层,相当于做了特征转换。