Resnet的pytorch官方实现代码解读

Resnet的pytorch官方实现代码解读

目录

-

- Resnet的pytorch官方实现代码解读

- 前言

- 概述

-

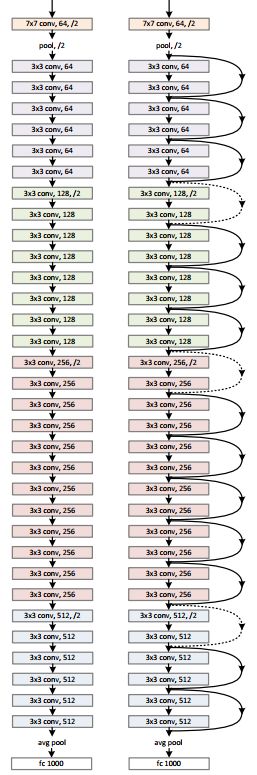

- 34层网络结构的“平原”网络与“残差”网络的结构图对比

- 不同结构的resnet的网络架构设计

- resnet代码细节分析

前言

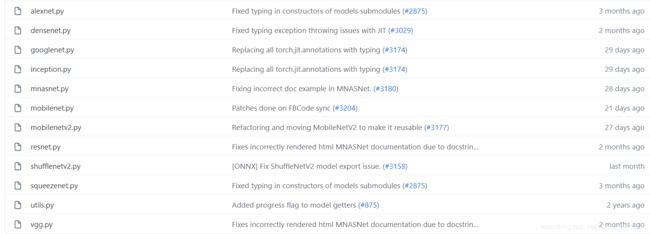

pytorch官方给出了现在的常见的经典网络的torch版本实现。仔细看看这些网络结构的实现,可以发现官方给出的代码比较精简,大部分致力于实现最朴素结构,没有用很多的技巧,在网络结构之外的分组卷积、膨胀卷积等等技巧已经略去(分组数目设置为1,膨胀系数设置为1),为理解网络结构略去了很多不必要的麻烦。

概述

34层网络结构的“平原”网络与“残差”网络的结构图对比

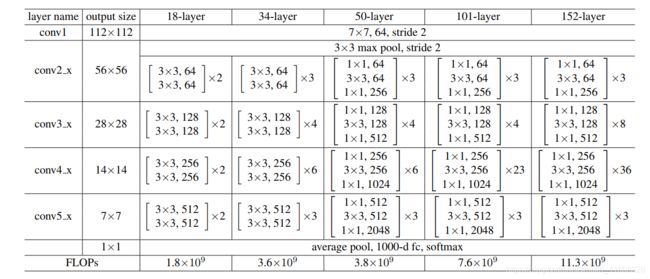

不同结构的resnet的网络架构设计

resnet有很多不一样的结构变种,大体上的框架没有变化,都是在原来的“直通”式结构的基础上加上跳跃连接,变化的是网络层数是参数量。

resnet代码细节分析

这个系列的博客致力于分析代码的细节,略过网络结构的优缺点理论分析,下面讲讲resnet是具体怎么操作的。

import torch

from torch import Tensor

import torch.nn as nn

from .utils import load_state_dict_from_url

from typing import Type, Any, Callable, Union, List, Optional

# 所有可用的网络模型的名称

__all__ = ['ResNet', 'resnet18', 'resnet34', 'resnet50', 'resnet101','resnet152', 'resnext50_32x4d', 'resnext101_32x8d','wide_resnet50_2', 'wide_resnet101_2']

# 预训练权重的下载地址,通过model_urls这个字典的key获取对应的键值value,也就是下载地址

model_urls = {'resnet18': 'https://download.pytorch.org/models/resnet18-5c106cde.pth',

'resnet34': 'https://download.pytorch.org/models/resnet34-333f7ec4.pth',

'resnet50': 'https://download.pytorch.org/models/resnet50-19c8e357.pth',

'resnet101': 'https://download.pytorch.org/models/resnet101-5d3b4d8f.pth',

'resnet152': 'https://download.pytorch.org/models/resnet152-b121ed2d.pth',

'resnext50_32x4d': 'https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth',

'resnext101_32x8d': 'https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth',

'wide_resnet50_2': 'https://download.pytorch.org/models/wide_resnet50_2-95faca4d.pth',

'wide_resnet101_2': 'https://download.pytorch.org/models/wide_resnet101_2-32ee1156.pth',}

# 将conv2d封装起来,其中第一个参数代表input_channels,第二个参数代表output_channels

# 对conv2d的封装其实作用不大,也可以不封装,直接输入输入维度、输出维度、卷积核大小、

# 步长、补零值、分组卷积数量、膨胀卷积数量、偏置的值

def conv3x3(in_planes:int,out_planes:int, stride:int = 1, groups:int = 1, dilation: int = 1)->nn.conv2d:

return nn.conv2d(in_planes,out_planes,kernel_size = 3,stride = stride, padding = dilation, groups = groups,bias = False,dilation = dilation)

def conv1x1(in_planes:int,out_planes:int, stride:int = 1)->nn.conv2d:

return nn.conv2d(in_planes,out_planes,kernel_size = 1,stride = stride, bias = False)

# 定义基础版的参数模块,由两个3x3的卷积叠加而成

class BasicBlock(nn.Module):

expansion: int = 1

def __init__(

self,

inplanes:int,

planes:int,

stride:int = 1,

downsample:Optional[nn.Module] = None,

# 分组卷积,这里设置groups = 1,也就是不分组卷积

groups:int = 1,

base_width:int = 64,

# 膨胀卷积的膨胀系数设为1,也就是不膨胀卷积

dilation:int = 1,

norm_layer:Optional[Callable[..,nn.Module]] = None

)->None:

# 超父类,习惯上在一个类的开头写上super(class_name, self).__init__()来进行初始化

super(BasicBlock,self).__init__()

# 加上batch_normlization层

if norm_layer is None:

norm_layer = nn.BatchNorm2d

# 异常判断

if groups != 1 or base_width != 64:

raise ValueError('BasicBlock only supports groups=1 and base_width=64')

if dilation > 1:

raise NotImplementError('Dilation > 1 not supported in BasicBlock')

# 最普通的conv、bn、relu流程

self.conv1 = conv3x3(inplanes,planes,stride)

self.bn1 = norm_layer(planes)

self.relu = nn.ReLU(inplace = True)

self.conv2 = conv3x3(planes,planes)

self.bn2 = norm_layer(planes)

self.downsample = downsample

self.stride = stride

def forward(self,x:Tensor)->Tensor:

indentity = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

# 如果出现下采样,要对输入的数据做上采样(乘以expansion系数),这样才能保证输入输出的维度一致,

# 这样子两个tensor才能够相加

if downsample is not None:

identity = self.downsample(x)

# 加上输入

out+=identity

out = self.relu(out)

return out

# 加强版的残差网络,与基础版本的不同之处在于从两个3x3卷积变成了

# 1x1、3x3、1x1卷积,前一个1x1卷积用来压缩维度,后一个1x1卷积用来恢复维度

# 卷积的时候都把bias设成False了,这是因为每次卷积之后都会做一个bn的操作,

# 经过bn操作,会生成新的数据分布,偏置项的作用将被消除掉,所以不用加偏置

class Bottleneck(nn.Module):

expansion: int = 4

def __init__(

self,

inplanes:int,

planes:int,

stride:int = 1,

downsample:Optional[nn.Module] = None,

groups:int = 1,

base_width:int = 64,

dilation:int = 1,

norm_layer:Optional[Callable[..,nn.Module]] = None

)->None:

super(BasicBlock,self).__init__()

if norm_layer is None:

norm_layer = nn.BatchNorm2d

width = int(planes*(base_width/64.))*groups

self.conv1 = conv1x1(inplanes,width)

self.bn1 = norm_layer(width)

self.conv2 = conv3x3(width,width,stride,groups,dilation)

self.bn2 = norm_layer(width)

self.conv3 = conv1x1(width,width,stride,groups,dilation)

self.bn3 = norm_layer(planes*self.expansion)

self.relu = nn.ReLU(inplace=True)

self.downsample = downsample

self.stride = stride

def forward(self,x:Tensor)->Tensor:

indentity = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out = self.relu(out)

out = self.conv3(out)

out = self.bn3(out)

# 如果出现下采样,要对输入的数据做上采样(乘以expansion系数),这样才能保证输入输出的维度一致,

# 这样子两个tensor才能够相加

if downsample is not None:

identity = self.downsample(x)

out+=identity

out = self.relu(out)

return out

class Resnet:

def __init__(

self,

block:Type[Union[[BasicBlock,Bottleneck]],

layers:List[int],

num_classes:int = 1000,

zero_init_residual:bool = False,

groups: int = 1,

width_per_group:int = 64,

replace_stride_with_dilation:Optional[List[bool]] = None,

norm_layer = Optional[Callable[..,nn.Module]] = None

)->None:

super(Resnet,self).__init__()

if norm_layer is None:

norm_layer = nn.BatchNorm2d

self._norm_layer = norm_layer

self.inplanes = 64

self.dilation = 1

if replace_stride_with_dilation is None:

replace_stride_with_dilation = [False,False,False]

if len(replace_stride_with_dilation)!=3:

raise ValueError("replace_stride_with_dilation should be None "

"or a 3-element tuple, got {}".format(replace_stride_with_dilation))

self.groups = groups

self.base_width = width_per_group

self.conv1 = nn.Conv2d(3,self.inplanes,kernel_size = 7,stride = 2, padding = 3,bias = False)

self.bn1 = norm_layer(self.inplanes)

self.relu = nn.ReLU(inplace = True)

self.maxpool = nn.MaxPool2d(kernel_size = 3,stride = 2,padding = 1)

self.layer1 = self._make_layer(block,64,layers[0])

self.layer2 = self._make_layer(block,128,layers[1],stride = 2, dilate = replace_stride_with_dilation[0])

self.layer3 = self._make_layer(block,256,layers[2],stride = 2, dilate = replace_stride_with_dilation[1])

self.layer4 = self._make_layer(block,512,layers[3],stride = 2, dilate = replace_stride_with_dilation[2])

self.avgpool = nn.AdaptiveAvgPool2d((1,1))

self.fc = nn.Linear(512*block.expansion,num_classes)

for m in self.modules():

if isinstance(m,nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode = 'fan_out',nonlinearity='relu')

elif isinstance(m,(nn.BatchNorm2d,nn.GroupNorm)):

nn.init.constant_(m.weight,1)

nn.init.constant_(m.bias,0)

if zero_init_residual:

for m in self.modules():

if isinstance(m,Bottleneck):

nn.init.constant_(m.bn3.weight,0)

elif isinstance(m,BasicBlock):

nn.init.constant_(m.bn2.weight,0)

def _make_layer(self,block:Type[Union[BasicBlock,Bottleneck]],planes:int, blocks:int,stride:int = 1,dilate:bool = False)->nn.Sequential:

norm_layer = self._norm_layer

downsample = None

previous_dilation = self.dilation

if dilate:

self.dilation*=stride

stride = 1

if stride != 1 or self.inplanes != planes*block.expansion:

# downsample就是一堆1x1的卷积核接bn操作,目的是为了让残差连接的tensor的通道数能够对应的上

downsample = nn.Sequential(

conv1x1(self.inplanes,planes*block.expansion,stride),

norm_layer(planes*block.expansion),

)

layers = []

layers.append(block(self.inplanes,planes,stride,downsample,self.groups,self.base_width,previous_dilation,norm_layer))

self.inplanes = planes*block.expansion

for _ in range(1,blocks):

layers.append(block(self.inplanes,planes,groups = self.groups,base_width = self.base_width, dilation = self.dilation ,norm_layer))

# 将网络结构打包成Sequential格式返回的好处在于不用每次都

# 在函数里面写conv、relu、maxpool的操作,直接打包成一个功能列表送进去

# *layers,单个*号表示这个位置接收任意多个非关键字参数,并且转化成列表

return nn.Sequential(*layers)

def _forward_impl(self,x:Tensor)->Tensor:

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = self.avgpool(x)

x = x.view(x.size(0),-1)

x = self.fc(x)

return x

def forward(self,x:Tensor)->Tensor:

return self._forward_impl(x)

# 输入basicblock或bottleneck以及layers参数,返回resnet网络结构

def _resnet(

arch:str,

block:Type[Union[BasicBlock,Bottleneck]],

layers:List[int],

pretrained:bool,

progress:bool,

**kwargs:Any

)->Resnet:

model = Resnet(block,layers,**kwargs)

if pretrained:

state_dict = load_state_dict_from_url(model_urls[arch],progress = progress)

model.load_state_dict_from_url(state_dict)

return model

# 预定义的resnet不同结构

def resnet18(pretrained:bool = False, progress: bool = True, **kwargs: Any)->ResNet:

return _resnet('resnet18',BasicBlock,[2,2,2,2],pretrained, progress, **kwargs)

def resnet34(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:

r"""ResNet-34 model from

`"Deep Residual Learning for Image Recognition" `_.

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

"""

return _resnet('resnet34', BasicBlock, [3, 4, 6, 3], pretrained, progress,

**kwargs)

def resnet50(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:

r"""ResNet-50 model from

`"Deep Residual Learning for Image Recognition" `_.

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

"""

return _resnet('resnet50', Bottleneck, [3, 4, 6, 3], pretrained, progress,

**kwargs)

def resnet101(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:

r"""ResNet-101 model from

`"Deep Residual Learning for Image Recognition" `_.

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

"""

return _resnet('resnet101', Bottleneck, [3, 4, 23, 3], pretrained, progress,

**kwargs)

def resnet152(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:

r"""ResNet-152 model from

`"Deep Residual Learning for Image Recognition" `_.

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

"""

return _resnet('resnet152', Bottleneck, [3, 8, 36, 3], pretrained, progress,

**kwargs)

def resnext50_32x4d(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:

r"""ResNeXt-50 32x4d model from

`"Aggregated Residual Transformation for Deep Neural Networks" `_.

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

"""

kwargs['groups'] = 32

kwargs['width_per_group'] = 4

return _resnet('resnext50_32x4d', Bottleneck, [3, 4, 6, 3],

pretrained, progress, **kwargs)

def resnext101_32x8d(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:

r"""ResNeXt-101 32x8d model from

`"Aggregated Residual Transformation for Deep Neural Networks" `_.

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

"""

kwargs['groups'] = 32

kwargs['width_per_group'] = 8

return _resnet('resnext101_32x8d', Bottleneck, [3, 4, 23, 3],

pretrained, progress, **kwargs)

def wide_resnet50_2(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:

r"""Wide ResNet-50-2 model from

`"Wide Residual Networks" `_.

The model is the same as ResNet except for the bottleneck number of channels

which is twice larger in every block. The number of channels in outer 1x1

convolutions is the same, e.g. last block in ResNet-50 has 2048-512-2048

channels, and in Wide ResNet-50-2 has 2048-1024-2048.

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

"""

kwargs['width_per_group'] = 64 * 2

return _resnet('wide_resnet50_2', Bottleneck, [3, 4, 6, 3],

pretrained, progress, **kwargs)

def wide_resnet101_2(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:

r"""Wide ResNet-101-2 model from

`"Wide Residual Networks" `_.

The model is the same as ResNet except for the bottleneck number of channels

which is twice larger in every block. The number of channels in outer 1x1

convolutions is the same, e.g. last block in ResNet-50 has 2048-512-2048

channels, and in Wide ResNet-50-2 has 2048-1024-2048.

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

"""

kwargs['width_per_group'] = 64 * 2

return _resnet('wide_resnet101_2', Bottleneck, [3, 4, 23, 3],

pretrained, progress, **kwargs)

resnet18每一层的输入输出的tensor的shape到底是多少呢,输入是3x224x224的tensor,将输出的tensor打印如下:

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 112, 112] 9,408

BatchNorm2d-2 [-1, 64, 112, 112] 128

ReLU-3 [-1, 64, 112, 112] 0

MaxPool2d-4 [-1, 64, 56, 56] 0

Conv2d-5 [-1, 64, 56, 56] 36,864

BatchNorm2d-6 [-1, 64, 56, 56] 128

ReLU-7 [-1, 64, 56, 56] 0

Conv2d-8 [-1, 64, 56, 56] 36,864

BatchNorm2d-9 [-1, 64, 56, 56] 128

ReLU-10 [-1, 64, 56, 56] 0

BasicBlock-11 [-1, 64, 56, 56] 0

Conv2d-12 [-1, 64, 56, 56] 36,864

BatchNorm2d-13 [-1, 64, 56, 56] 128

ReLU-14 [-1, 64, 56, 56] 0

Conv2d-15 [-1, 64, 56, 56] 36,864

BatchNorm2d-16 [-1, 64, 56, 56] 128

ReLU-17 [-1, 64, 56, 56] 0

BasicBlock-18 [-1, 64, 56, 56] 0

Conv2d-19 [-1, 128, 28, 28] 73,728

BatchNorm2d-20 [-1, 128, 28, 28] 256

ReLU-21 [-1, 128, 28, 28] 0

Conv2d-22 [-1, 128, 28, 28] 147,456

BatchNorm2d-23 [-1, 128, 28, 28] 256

Conv2d-24 [-1, 128, 28, 28] 8,192

BatchNorm2d-25 [-1, 128, 28, 28] 256

ReLU-26 [-1, 128, 28, 28] 0

BasicBlock-27 [-1, 128, 28, 28] 0

Conv2d-28 [-1, 128, 28, 28] 147,456

BatchNorm2d-29 [-1, 128, 28, 28] 256

ReLU-30 [-1, 128, 28, 28] 0

Conv2d-31 [-1, 128, 28, 28] 147,456

BatchNorm2d-32 [-1, 128, 28, 28] 256

ReLU-33 [-1, 128, 28, 28] 0

BasicBlock-34 [-1, 128, 28, 28] 0

Conv2d-35 [-1, 256, 14, 14] 294,912

BatchNorm2d-36 [-1, 256, 14, 14] 512

ReLU-37 [-1, 256, 14, 14] 0

Conv2d-38 [-1, 256, 14, 14] 589,824

BatchNorm2d-39 [-1, 256, 14, 14] 512

Conv2d-40 [-1, 256, 14, 14] 32,768

BatchNorm2d-41 [-1, 256, 14, 14] 512

ReLU-42 [-1, 256, 14, 14] 0

BasicBlock-43 [-1, 256, 14, 14] 0

Conv2d-44 [-1, 256, 14, 14] 589,824

BatchNorm2d-45 [-1, 256, 14, 14] 512

ReLU-46 [-1, 256, 14, 14] 0

Conv2d-47 [-1, 256, 14, 14] 589,824

BatchNorm2d-48 [-1, 256, 14, 14] 512

ReLU-49 [-1, 256, 14, 14] 0

BasicBlock-50 [-1, 256, 14, 14] 0

Conv2d-51 [-1, 512, 7, 7] 1,179,648

BatchNorm2d-52 [-1, 512, 7, 7] 1,024

ReLU-53 [-1, 512, 7, 7] 0

Conv2d-54 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-55 [-1, 512, 7, 7] 1,024

Conv2d-56 [-1, 512, 7, 7] 131,072

BatchNorm2d-57 [-1, 512, 7, 7] 1,024

ReLU-58 [-1, 512, 7, 7] 0

BasicBlock-59 [-1, 512, 7, 7] 0

Conv2d-60 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-61 [-1, 512, 7, 7] 1,024

ReLU-62 [-1, 512, 7, 7] 0

Conv2d-63 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-64 [-1, 512, 7, 7] 1,024

ReLU-65 [-1, 512, 7, 7] 0

BasicBlock-66 [-1, 512, 7, 7] 0

================================================================

Total params: 11,176,512

Trainable params: 11,176,512

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 62.78

Params size (MB): 42.64

Estimated Total Size (MB): 105.99

----------------------------------------------------------------

同样输入是3x224x224的tensor,如果是resnet50,那么就会用到bottleneck。那么什么是basicblock,什么是bottleneck呢?

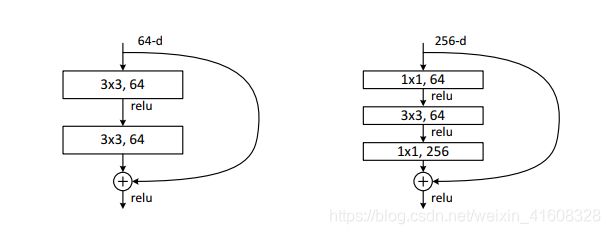

如上图所示,左图和右图分别是basicblock和bottleneck。从两者的结构图中可以发现,bottleneck相比起basicblock,在开头和结尾的地方多了1x1的卷积,1x1的卷积的作用在于变换通道数,通过控制1x1卷积核的数量,可以方便地调整通道数的大小。

resnet50输出的shape为:

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 112, 112] 9,408

BatchNorm2d-2 [-1, 64, 112, 112] 128

ReLU-3 [-1, 64, 112, 112] 0

MaxPool2d-4 [-1, 64, 56, 56] 0

Conv2d-5 [-1, 64, 56, 56] 4,096

BatchNorm2d-6 [-1, 64, 56, 56] 128

ReLU-7 [-1, 64, 56, 56] 0

Conv2d-8 [-1, 64, 56, 56] 36,864

BatchNorm2d-9 [-1, 64, 56, 56] 128

ReLU-10 [-1, 64, 56, 56] 0

Conv2d-11 [-1, 256, 56, 56] 16,384

BatchNorm2d-12 [-1, 256, 56, 56] 512

Conv2d-13 [-1, 256, 56, 56] 16,384

BatchNorm2d-14 [-1, 256, 56, 56] 512

ReLU-15 [-1, 256, 56, 56] 0

Bottleneck-16 [-1, 256, 56, 56] 0

Conv2d-17 [-1, 64, 56, 56] 16,384

BatchNorm2d-18 [-1, 64, 56, 56] 128

ReLU-19 [-1, 64, 56, 56] 0

Conv2d-20 [-1, 64, 56, 56] 36,864

BatchNorm2d-21 [-1, 64, 56, 56] 128

ReLU-22 [-1, 64, 56, 56] 0

Conv2d-23 [-1, 256, 56, 56] 16,384

BatchNorm2d-24 [-1, 256, 56, 56] 512

ReLU-25 [-1, 256, 56, 56] 0

Bottleneck-26 [-1, 256, 56, 56] 0

Conv2d-27 [-1, 64, 56, 56] 16,384

BatchNorm2d-28 [-1, 64, 56, 56] 128

ReLU-29 [-1, 64, 56, 56] 0

Conv2d-30 [-1, 64, 56, 56] 36,864

BatchNorm2d-31 [-1, 64, 56, 56] 128

ReLU-32 [-1, 64, 56, 56] 0

Conv2d-33 [-1, 256, 56, 56] 16,384

BatchNorm2d-34 [-1, 256, 56, 56] 512

ReLU-35 [-1, 256, 56, 56] 0

Bottleneck-36 [-1, 256, 56, 56] 0

Conv2d-37 [-1, 128, 56, 56] 32,768

BatchNorm2d-38 [-1, 128, 56, 56] 256

ReLU-39 [-1, 128, 56, 56] 0

Conv2d-40 [-1, 128, 28, 28] 147,456

BatchNorm2d-41 [-1, 128, 28, 28] 256

ReLU-42 [-1, 128, 28, 28] 0

Conv2d-43 [-1, 512, 28, 28] 65,536

BatchNorm2d-44 [-1, 512, 28, 28] 1,024

Conv2d-45 [-1, 512, 28, 28] 131,072

BatchNorm2d-46 [-1, 512, 28, 28] 1,024

ReLU-47 [-1, 512, 28, 28] 0

Bottleneck-48 [-1, 512, 28, 28] 0

Conv2d-49 [-1, 128, 28, 28] 65,536

BatchNorm2d-50 [-1, 128, 28, 28] 256

ReLU-51 [-1, 128, 28, 28] 0

Conv2d-52 [-1, 128, 28, 28] 147,456

BatchNorm2d-53 [-1, 128, 28, 28] 256

ReLU-54 [-1, 128, 28, 28] 0

Conv2d-55 [-1, 512, 28, 28] 65,536

BatchNorm2d-56 [-1, 512, 28, 28] 1,024

ReLU-57 [-1, 512, 28, 28] 0

Bottleneck-58 [-1, 512, 28, 28] 0

Conv2d-59 [-1, 128, 28, 28] 65,536

BatchNorm2d-60 [-1, 128, 28, 28] 256

ReLU-61 [-1, 128, 28, 28] 0

Conv2d-62 [-1, 128, 28, 28] 147,456

BatchNorm2d-63 [-1, 128, 28, 28] 256

ReLU-64 [-1, 128, 28, 28] 0

Conv2d-65 [-1, 512, 28, 28] 65,536

BatchNorm2d-66 [-1, 512, 28, 28] 1,024

ReLU-67 [-1, 512, 28, 28] 0

Bottleneck-68 [-1, 512, 28, 28] 0

Conv2d-69 [-1, 128, 28, 28] 65,536

BatchNorm2d-70 [-1, 128, 28, 28] 256

ReLU-71 [-1, 128, 28, 28] 0

Conv2d-72 [-1, 128, 28, 28] 147,456

BatchNorm2d-73 [-1, 128, 28, 28] 256

ReLU-74 [-1, 128, 28, 28] 0

Conv2d-75 [-1, 512, 28, 28] 65,536

BatchNorm2d-76 [-1, 512, 28, 28] 1,024

ReLU-77 [-1, 512, 28, 28] 0

Bottleneck-78 [-1, 512, 28, 28] 0

Conv2d-79 [-1, 256, 28, 28] 131,072

BatchNorm2d-80 [-1, 256, 28, 28] 512

ReLU-81 [-1, 256, 28, 28] 0

Conv2d-82 [-1, 256, 14, 14] 589,824

BatchNorm2d-83 [-1, 256, 14, 14] 512

ReLU-84 [-1, 256, 14, 14] 0

Conv2d-85 [-1, 1024, 14, 14] 262,144

BatchNorm2d-86 [-1, 1024, 14, 14] 2,048

Conv2d-87 [-1, 1024, 14, 14] 524,288

BatchNorm2d-88 [-1, 1024, 14, 14] 2,048

ReLU-89 [-1, 1024, 14, 14] 0

Bottleneck-90 [-1, 1024, 14, 14] 0

Conv2d-91 [-1, 256, 14, 14] 262,144

BatchNorm2d-92 [-1, 256, 14, 14] 512

ReLU-93 [-1, 256, 14, 14] 0

Conv2d-94 [-1, 256, 14, 14] 589,824

BatchNorm2d-95 [-1, 256, 14, 14] 512

ReLU-96 [-1, 256, 14, 14] 0

Conv2d-97 [-1, 1024, 14, 14] 262,144

BatchNorm2d-98 [-1, 1024, 14, 14] 2,048

ReLU-99 [-1, 1024, 14, 14] 0

Bottleneck-100 [-1, 1024, 14, 14] 0

Conv2d-101 [-1, 256, 14, 14] 262,144

BatchNorm2d-102 [-1, 256, 14, 14] 512

ReLU-103 [-1, 256, 14, 14] 0

Conv2d-104 [-1, 256, 14, 14] 589,824

BatchNorm2d-105 [-1, 256, 14, 14] 512

ReLU-106 [-1, 256, 14, 14] 0

Conv2d-107 [-1, 1024, 14, 14] 262,144

BatchNorm2d-108 [-1, 1024, 14, 14] 2,048

ReLU-109 [-1, 1024, 14, 14] 0

Bottleneck-110 [-1, 1024, 14, 14] 0

Conv2d-111 [-1, 256, 14, 14] 262,144

BatchNorm2d-112 [-1, 256, 14, 14] 512

ReLU-113 [-1, 256, 14, 14] 0

Conv2d-114 [-1, 256, 14, 14] 589,824

BatchNorm2d-115 [-1, 256, 14, 14] 512

ReLU-116 [-1, 256, 14, 14] 0

Conv2d-117 [-1, 1024, 14, 14] 262,144

BatchNorm2d-118 [-1, 1024, 14, 14] 2,048

ReLU-119 [-1, 1024, 14, 14] 0

Bottleneck-120 [-1, 1024, 14, 14] 0

Conv2d-121 [-1, 256, 14, 14] 262,144

BatchNorm2d-122 [-1, 256, 14, 14] 512

ReLU-123 [-1, 256, 14, 14] 0

Conv2d-124 [-1, 256, 14, 14] 589,824

BatchNorm2d-125 [-1, 256, 14, 14] 512

ReLU-126 [-1, 256, 14, 14] 0

Conv2d-127 [-1, 1024, 14, 14] 262,144

BatchNorm2d-128 [-1, 1024, 14, 14] 2,048

ReLU-129 [-1, 1024, 14, 14] 0

Bottleneck-130 [-1, 1024, 14, 14] 0

Conv2d-131 [-1, 256, 14, 14] 262,144

BatchNorm2d-132 [-1, 256, 14, 14] 512

ReLU-133 [-1, 256, 14, 14] 0

Conv2d-134 [-1, 256, 14, 14] 589,824

BatchNorm2d-135 [-1, 256, 14, 14] 512

ReLU-136 [-1, 256, 14, 14] 0

Conv2d-137 [-1, 1024, 14, 14] 262,144

BatchNorm2d-138 [-1, 1024, 14, 14] 2,048

ReLU-139 [-1, 1024, 14, 14] 0

Bottleneck-140 [-1, 1024, 14, 14] 0

Conv2d-141 [-1, 512, 14, 14] 524,288

BatchNorm2d-142 [-1, 512, 14, 14] 1,024

ReLU-143 [-1, 512, 14, 14] 0

Conv2d-144 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-145 [-1, 512, 7, 7] 1,024

ReLU-146 [-1, 512, 7, 7] 0

Conv2d-147 [-1, 2048, 7, 7] 1,048,576

BatchNorm2d-148 [-1, 2048, 7, 7] 4,096

Conv2d-149 [-1, 2048, 7, 7] 2,097,152

BatchNorm2d-150 [-1, 2048, 7, 7] 4,096

ReLU-151 [-1, 2048, 7, 7] 0

Bottleneck-152 [-1, 2048, 7, 7] 0

Conv2d-153 [-1, 512, 7, 7] 1,048,576

BatchNorm2d-154 [-1, 512, 7, 7] 1,024

ReLU-155 [-1, 512, 7, 7] 0

Conv2d-156 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-157 [-1, 512, 7, 7] 1,024

ReLU-158 [-1, 512, 7, 7] 0

Conv2d-159 [-1, 2048, 7, 7] 1,048,576

BatchNorm2d-160 [-1, 2048, 7, 7] 4,096

ReLU-161 [-1, 2048, 7, 7] 0

Bottleneck-162 [-1, 2048, 7, 7] 0

Conv2d-163 [-1, 512, 7, 7] 1,048,576

BatchNorm2d-164 [-1, 512, 7, 7] 1,024

ReLU-165 [-1, 512, 7, 7] 0

Conv2d-166 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-167 [-1, 512, 7, 7] 1,024

ReLU-168 [-1, 512, 7, 7] 0

Conv2d-169 [-1, 2048, 7, 7] 1,048,576

BatchNorm2d-170 [-1, 2048, 7, 7] 4,096

ReLU-171 [-1, 2048, 7, 7] 0

Bottleneck-172 [-1, 2048, 7, 7] 0

================================================================

Total params: 23,508,032

Trainable params: 23,508,032

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 286.54

Params size (MB): 89.68

Estimated Total Size (MB): 376.79

----------------------------------------------------------------