PyTorch Deep Learning: Feature Map Visualization (YOLO networks, R-CNN)

Preface

For a systematic introduction to deep learning, see the blog posts by weibuweibu123.

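The code below visualizes feature maps, using YOLOv4 as the main example. You only need to change which layer of the network you want to visualize, or which channels of that layer to display.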


This approach works well for both the YOLO family and the R-CNN family. Below we walk through it in detail using the YOLOv4 network.

1: Get the network's model structure (for example with `print(model)`):

Yolobody(

(backbone): CSPDarkNet(

(conv1): BasicConv(

(conv): Conv2d(3, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(mish): Mish()

)

(stages): ModuleList(

(0): Resblock_body(

(downsaple_conv): BasicConv(

(conv): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(mish): Mish()

)

(split_conv0): BasicConv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(mish): Mish()

)

(split_conv1): BasicConv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(mish): Mish()

)

(block_conv): Sequential(

(0): Resblock(

(block): Sequential(

(0): BasicConv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(mish): Mish()

)

(1): BasicConv(

(conv): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(mish): Mish()

)

)

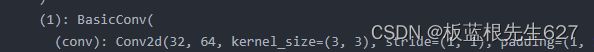

)2:定义钩子函数

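```python
# Define the hook function that captures the output of the named layer
activation = {}  # stores the captured outputs


def get_activation(name):
    # Forward hooks are called as hook(module, input, output) after the layer runs
    def hook(module, input, output):
        activation[name] = output.detach()
    return hook
```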
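To see what the hook does in isolation, here is a minimal sketch on a small stand-in network (the `nn.Sequential` model and the `'conv2'` key are illustrative only, not part of YOLOv4). `register_forward_hook` makes PyTorch call the hook right after the layer's forward pass, and it returns a handle whose `remove()` detaches the hook again.

```python
import torch
import torch.nn as nn

activation = {}  # maps a chosen name -> captured layer output


def get_activation(name):
    # PyTorch calls forward hooks as hook(module, input, output)
    def hook(module, input, output):
        activation[name] = output.detach()
    return hook


# Stand-in network (illustrative): the last conv is the layer we inspect
net = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 16, kernel_size=3, stride=2, padding=1),
)
net.eval()

handle = net[2].register_forward_hook(get_activation('conv2'))
with torch.no_grad():
    net(torch.randn(1, 3, 32, 32))
handle.remove()  # stop capturing once the feature map has been stored

feature = activation['conv2']
print(feature.shape)  # torch.Size([1, 16, 16, 16])
```

Removing the handle matters when you hook a large model repeatedly: otherwise every later forward pass keeps overwriting the dictionary entry.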

3: Visualize the feature map using the hook

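For example, suppose we want to visualize the feature map of the 3x3 convolution `Conv2d(32, 64, kernel_size=(3, 3), ...)` near the bottom of the model printout above. Following the names down the printout, its position in the model is backbone -> stages[0] -> block_conv[0] -> block[1] -> conv. Pay attention to the names inside the parentheses: they are the attribute names and indices you chain together in code.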
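If reading the nested printout by hand is error-prone, PyTorch can list the paths for you. The sketch below builds a hypothetical miniature network whose attribute names (`backbone`, `stages`, `block_conv`, `block`) only mimic the YOLOv4 printout; in this toy, `block[1]` is a plain `Conv2d`, whereas in the real model it is a `BasicConv`, so the real path ends in an extra `.conv`. `named_modules()` reports every layer under a dotted name, where numeric parts are `ModuleList`/`Sequential` indices, and `get_submodule` (assuming PyTorch 1.9 or later) resolves such a name directly.

```python
import torch.nn as nn


class Resblock(nn.Module):  # hypothetical stand-in, not the real YOLOv4 class
    def __init__(self, channels, hidden):
        super().__init__()
        self.block = nn.Sequential(
            nn.Conv2d(channels, hidden, 1, bias=False),
            nn.Conv2d(hidden, channels, 3, padding=1, bias=False),
        )


class Stage(nn.Module):
    def __init__(self):
        super().__init__()
        self.block_conv = nn.Sequential(Resblock(64, 32))


class Backbone(nn.Module):
    def __init__(self):
        super().__init__()
        self.stages = nn.ModuleList([Stage()])


class ToyNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.backbone = Backbone()


model = ToyNet()

# Print every conv layer together with its dotted name
for name, module in model.named_modules():
    if isinstance(module, nn.Conv2d):
        print(name, module)

# The dotted name maps one-to-one onto attribute/index access
by_path = model.backbone.stages[0].block_conv[0].block[1]
by_name = model.get_submodule("backbone.stages.0.block_conv.0.block.1")
print(by_path is by_name)  # True
```

On the real model, printing the dotted names of all `Conv2d` layers this way gives a compact list to pick the hook target from.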

4: Full code

import matplotlib.pyplot as plt

import torch

import torch.nn as nn

from torch.nn import functional as F

from torchvision import transforms

import numpy as np

from PIL import Image

from collections import OrderedDict

import cv2

from net.yolov4 import Yolobody

# 定义钩子函数,获取指定层名称的特征

activation = {} # 保存获取的输出

def get_activation(name):

def hook(model, input, output):

activation[name] = output.detach()

return hook

def main():

anchors_mask = [[6, 7, 8], [3, 4, 5], [0, 1, 2]]

#调用模型

model = Yolobody(anchors_mask,20,False)

# print(model)

model.eval()

# 根据刚刚指定的层数更改

model.backbone.stages[0].block_conv[0].block[1].conv.register_forward_hook(get_activation('conv'))

_,_,_ = model(input_img)

bn = activation['conv'] # 结果将保存在activation字典中

print(bn.shape)

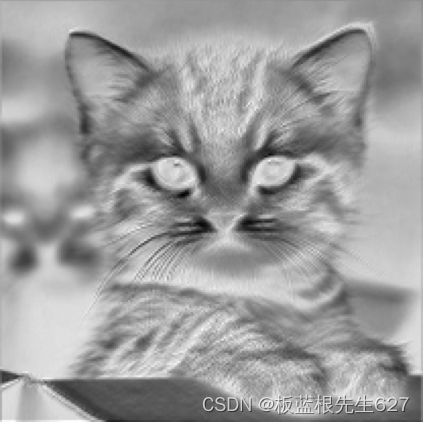

#显示特征图,通过更改下面的代码可以看到64维中任何几维

plt.figure(figsize=(12,12))

for i in range(64):

if i <63:

continue

else:

j=i

j = j-63

plt.subplot(1,2,j+1)

#显示每个通道的特征图因此用灰色显示

plt.imshow(bn[0,i,:,:], cmap='gray')

plt.axis('off')

plt.show()

return 0

if __name__=='__main__':

transform = transforms.Compose([

transforms.Resize([416,416]),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])

# 从测试集中读取一张图片,并显示出来

img_path = 'F:/tupian/0048.jpg'

img = Image.open(img_path)

#给图片增加一个N维度

input_img = transform(img).unsqueeze(0)

#[1,3,224,224]

print(input_img.shape)

# imgarray = np.array(img) / 255.0

# plt.figure(figsize=(8,8))

# plt.imshow(imgarray)

# plt.axis('off')

# plt.title("the first picture")

# plt.show()

# #将图片resize。精度变低

# image = img.resize([416,416])

# imgarray = np.array(image) / 255.0

# plt.figure(figsize=(8,8))

# plt.imshow(imgarray)

# plt.title("the second picture")

# plt.show()

main()

# 将图片处理成模型可以预测的形式
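The plotting loop in the full code shows one subplot per channel. To compare many channels at once, one option is to normalize each channel to [0, 1] on its own and tile them into a single grid image. The helper `tile_channels` below is a hypothetical sketch, not part of the original code; it takes a `[1, C, H, W]` tensor such as the one stored in `activation['conv']` plus a list of channel indices, and returns a 2-D NumPy array that can be passed to `plt.imshow(..., cmap='gray')`.

```python
import numpy as np
import torch


def tile_channels(feature, channels, ncols=8, pad=2):
    """Arrange the selected channels of a [1, C, H, W] tensor into one 2-D grid.

    `channels` must be a list of indices (hypothetical helper, for illustration).
    """
    maps = feature[0, channels].detach().cpu().float()  # [K, H, W]
    # Min-max normalize each channel separately so weak channels stay visible
    flat = maps.flatten(1)
    lo = flat.min(dim=1).values[:, None, None]
    hi = flat.max(dim=1).values[:, None, None]
    maps = (maps - lo) / (hi - lo).clamp(min=1e-8)

    k, h, w = maps.shape
    nrows = (k + ncols - 1) // ncols
    grid = np.zeros((nrows * (h + pad) - pad, ncols * (w + pad) - pad), dtype=np.float32)
    for idx in range(k):
        r, c = divmod(idx, ncols)
        top, left = r * (h + pad), c * (w + pad)
        grid[top:top + h, left:left + w] = maps[idx].numpy()
    return grid


# Random stand-in tensor; in the script above, use activation['conv'] instead
feature = torch.randn(1, 64, 52, 52)
grid = tile_channels(feature, list(range(16)), ncols=4)
print(grid.shape)  # (214, 214)
# plt.imshow(grid, cmap='gray'); plt.axis('off'); plt.show()
```

Per-channel normalization is a design choice: it makes every channel's structure visible, but it hides how strongly one channel activates relative to another.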