附代码 Vision Transformer(VIT)模型解读

AN IMAGE IS WORTH 16X16 WORDS: TRANSFORMERS FOR IMAGE RECOGNITION AT SCALE

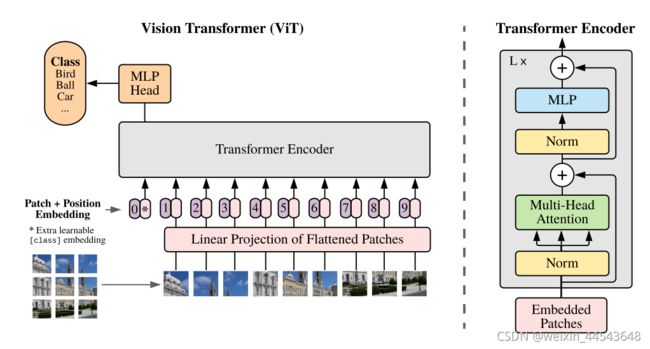

该论文主要介绍了如何仅仅使用Trnsformers来进行图像分类。

Transformers lack some of the inductive biases inherent to CNNs, such as translation equivariance and locality, and therefore do not generalize well when trained on insufficient amounts of data.

当训练集不足时,Transformer 相比于CNN缺少归纳偏置,因此很难在训练集数量不多时,表现出色。

Our Vision Transformer (ViT) attains excellent results when pre-trained at sufficient scale and transferred to tasks with fewer datapoints.

而该论文提出的VIT结构可以在大数据集预训练后,转移到小数据集依旧表现出色。

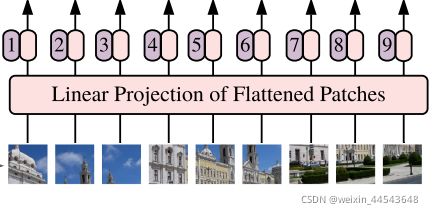

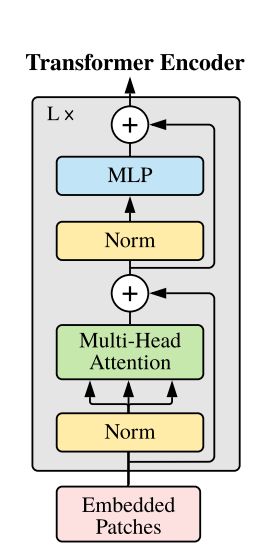

方法:

结论:

Note that this resolution adjustment and patch extraction are the only points at which an inductive bias about the 2D structure of the images is manually injected into the Vision Transformer.

其中分辨率调整和patch 提取是将图像二维架构的归纳偏差手动注入VIT中最重要的点

**

附上找到的代码:

**

import torch

from torch import nn

from einops import rearrange, repeat

from einops.layers.torch import Rearrange

# helpers

def pair(t):

return t if isinstance(t, tuple) else (t, t)

# classes

class PreNorm(nn.Module):

def __init__(self, dim, fn):

super().__init__()

self.norm = nn.LayerNorm(dim)

self.fn = fn

print(fn)

def forward(self, x, **kwargs):

return self.fn(self.norm(x), **kwargs)

class FeedForward(nn.Module):

def __init__(self, dim, hidden_dim, dropout = 0.):

super().__init__()

self.net = nn.Sequential(

nn.Linear(dim, hidden_dim),

nn.GELU(),

nn.Dropout(dropout),

nn.Linear(hidden_dim, dim),

nn.Dropout(dropout)

)

def forward(self, x):

return self.net(x)

class Attention(nn.Module):

def __init__(self, dim, heads = 8, dim_head = 64, dropout = 0.):

super().__init__()

inner_dim = dim_head * heads

project_out = not (heads == 1 and dim_head == dim)

self.heads = heads

self.scale = dim_head ** -0.5

self.attend = nn.Softmax(dim = -1)

self.to_qkv = nn.Linear(dim, inner_dim * 3, bias = False)

self.to_out = nn.Sequential(

nn.Linear(inner_dim, dim),

nn.Dropout(dropout)

) if project_out else nn.Identity()

def forward(self, x):

qkv = self.to_qkv(x).chunk(3, dim = -1)

q, k, v = map(lambda t: rearrange(t, 'b n (h d) -> b h n d', h = self.heads), qkv)

dots = torch.matmul(q, k.transpose(-1, -2)) * self.scale

attn = self.attend(dots)

out = torch.matmul(attn, v)

out = rearrange(out, 'b h n d -> b n (h d)')

return self.to_out(out)

class Transformer(nn.Module):

def __init__(self, dim, depth, heads, dim_head, mlp_dim, dropout = 0.):

super().__init__()

self.layers = nn.ModuleList([])

for _ in range(depth):

self.layers.append(nn.ModuleList([

PreNorm(dim, Attention(dim, heads = heads, dim_head = dim_head, dropout = dropout)),

PreNorm(dim, FeedForward(dim, mlp_dim, dropout = dropout))

]))

def forward(self, x):

for attn, ff in self.layers:

x = attn(x) + x

x = ff(x) + x

return x

class ViT(nn.Module):

def __init__(self, *, image_size, patch_size, num_classes, dim, depth, heads, mlp_dim, pool = 'cls', channels = 3, dim_head = 64, dropout = 0., emb_dropout = 0.):

super().__init__()

image_height, image_width = pair(image_size)

patch_height, patch_width = pair(patch_size)

assert image_height % patch_height == 0 and image_width % patch_width == 0, 'Image dimensions must be divisible by the patch size.'

num_patches = (image_height // patch_height) * (image_width // patch_width)

patch_dim = channels * patch_height * patch_width

assert pool in {'cls', 'mean'}, 'pool type must be either cls (cls token) or mean (mean pooling)'

self.to_patch_embedding = nn.Sequential(

Rearrange('b c (h p1) (w p2) -> b (h w) (p1 p2 c)', p1 = patch_height, p2 = patch_width),

nn.Linear(patch_dim, dim),

)

self.pos_embedding = nn.Parameter(torch.randn(1, num_patches + 1, dim))

self.cls_token = nn.Parameter(torch.randn(1, 1, dim))

self.dropout = nn.Dropout(emb_dropout)

self.transformer = Transformer(dim, depth, heads, dim_head, mlp_dim, dropout)

self.pool = pool

self.to_latent = nn.Identity()

self.mlp_head = nn.Sequential(

nn.LayerNorm(dim),

nn.Linear(dim, num_classes)

)

def forward(self, img):

x = self.to_patch_embedding(img)

b, n, _ = x.shape

cls_tokens = repeat(self.cls_token, '() n d -> b n d', b = b)

x = torch.cat((cls_tokens, x), dim=1)

x += self.pos_embedding[:, :(n + 1)]

x = self.dropout(x)

x = self.transformer(x)

x = x.mean(dim = 1) if self.pool == 'mean' else x[:, 0]

x = self.to_latent(x)

return self.mlp_head(x)

if __name__ == '__main__':

v = ViT(

image_size=256,

patch_size=32,

num_classes=1000,

dim=1024,

depth=6,

heads=16,

mlp_dim=2048,

dropout=0.1,

emb_dropout=0.1

)

img = torch.randn(1, 3, 256, 256)

preds = v(img) # (1, 1000)

代码转载自:

https://github.com/lucidrains/vit-pytorch