YOLOv5 from Training to Deployment: A Detailed Step-by-Step Guide to NCNN Deployment on Android

I. Basic Configuration

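- Differences between environments can make some steps of this tutorial behave differently and cause the deployment to fail, so the component versions used here are listed below.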

| Component | Version |
| --- | --- |
| anaconda | 4.8.3 |
| python | 3.8.13 |
| yolov5 | 6.0 |
| System | Ubuntu 22.04.1 x86_64 |
| Kernel | 5.10.102.1 |
| gcc | 11.2.0 |
| g++ | 11.2.0 |

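Lower versions of the system, kernel, gcc, and g++ may also work, but you will need to test them yourself. Setting up the environment inside Docker is recommended.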

- Initialize the system

```shell
sudo apt update
sudo apt install git gcc g++ vim curl wget cmake -y
```
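To compare your machine against the version table above, a small standard-library script can print the corresponding values. This is a sketch, and `tool_version` and `environment_report` are illustrative names. It uses `gcc -dumpfullversion -dumpversion`, the common idiom for getting the full gcc version string, and `platform.release()`, which reports the kernel release on Linux.

```python
import platform
import shutil
import subprocess


def tool_version(cmd):
    """Return the first output line of `cmd`, or None if the tool is missing or fails to run."""
    if shutil.which(cmd[0]) is None:
        return None
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        return None
    lines = (result.stdout or result.stderr).strip().splitlines()
    return lines[0] if lines else None


def environment_report():
    """Collect the versions from the table above so they can be compared side by side."""
    return {
        "python": platform.python_version(),
        "kernel": platform.release(),
        "gcc": tool_version(["gcc", "-dumpfullversion", "-dumpversion"]),
        "g++": tool_version(["g++", "-dumpfullversion", "-dumpversion"]),
        "cmake": tool_version(["cmake", "--version"]),
    }


if __name__ == "__main__":
    for name, version in environment_report().items():
        print(f"{name:8} {version or 'not found'}")
```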

1. Anaconda

- Download Anaconda (Miniconda can also be downloaded from here)

- After installation, initialize the conda configuration

```shell
conda config --set show_channel_urls yes  # generates a .condarc file in your home directory
```

- To speed up downloads, configure a conda mirror inside mainland China. Open the .condarc file in your home directory and edit its contents as follows:

```yaml
channels:
  - defaults
show_channel_urls: true
default_channels:
  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main
  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/r
  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/msys2
custom_channels:
  conda-forge: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  msys2: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  bioconda: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  menpo: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  pytorch: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  pytorch-lts: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
  simpleitk: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
```

- Create the YOLO base environment

```shell
conda create -n yolo python=3.8  # create a Python 3.8 environment named yolo
```

2. YOLOv5

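```shell
git clone https://github.com/ultralytics/yolov5.git  # download the yolov5 source code
cd yolov5
git checkout v6.0 -b v6.0  # switch to the v6.0 release
conda activate yolo  # activate the conda environment created above
```

- Open requirements.txt and change its contents to the following: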

```text
# pip install -r requirements.txt

# Base ----------------------------------------
matplotlib>=3.2.2
numpy>=1.18.5
opencv-python>=4.1.2
Pillow>=7.1.2
PyYAML>=5.3.1
requests>=2.23.0
scipy>=1.4.1
torch==1.7.1
torchvision==0.8.2  # 0.8.2 is the torchvision release built for torch 1.7.1 (0.8.1 requires torch 1.7.0)
tqdm>=4.41.0

# Logging -------------------------------------
tensorboard==2.4.1
# wandb

# Plotting ------------------------------------
pandas>=1.1.4
seaborn>=0.11.0

# Export --------------------------------------
# coremltools>=4.1  # CoreML export
# onnx>=1.9.0  # ONNX export
# onnx-simplifier>=0.3.6  # ONNX simplifier
# scikit-learn==0.19.2  # CoreML quantization
# tensorflow>=2.4.1  # TFLite export
# tensorflowjs>=3.9.0  # TF.js export

# Extras --------------------------------------
# albumentations>=1.0.3
# Cython  # for pycocotools https://github.com/cocodataset/cocoapi/issues/172
# pycocotools>=2.0  # COCO mAP
# roboflow
thop  # FLOPs computation
```

- Install the YOLO base environment

```shell
pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/
conda install onnx
pip install onnxsim
pip install onnx-simplifier
pip install onnxruntime
```

II. Training Samples

- Prepare the labelImg environment

```shell
conda create -n labelimg python=3.8
conda activate labelimg
conda install labelimg
```

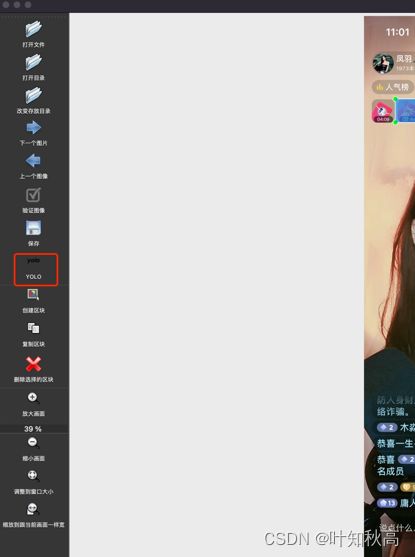

```shell
labelimg
```

The labelImg tool will then open.

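- Create the samples

- Create a metadata directory in your working directory, and create images and labels folders inside it.

- Put all images that contain the targets you want to detect into the images folder.

- Open labelImg, click Open Dir, and select the images folder.

- Click Change Save Dir and select the labels folder.

- Switch the annotation format to YOLO, as shown in the figure below.

Click View -> Auto Save mode to turn on automatic saving.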

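- Start annotating

labelImg shortcuts: W starts drawing a box, A goes to the previous image, and D goes to the next one. Annotate all the images. (A single image does not need to contain every class you want to detect.)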
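In YOLO mode, labelImg writes one .txt file per image with one line per box: `class_id x_center y_center width height`. The class id is the zero-based index into classes.txt, and all four coordinates are normalized by the image width and height. The sketch below converts in both directions, which is handy for spot-checking a few labels by hand. `to_yolo_line` and `from_yolo_line` are illustrative helpers, not labelImg or YOLOv5 functions.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space box (two corners) into one line of a YOLO label file."""
    x_c = (x_min + x_max) / 2 / img_w  # box center, normalized by image width
    y_c = (y_min + y_max) / 2 / img_h  # box center, normalized by image height
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def from_yolo_line(line, img_w, img_h):
    """Parse a YOLO label line back into (class_id, x_min, y_min, x_max, y_max) in pixels."""
    cls, x_c, y_c, w, h = line.split()
    x_c, w = float(x_c) * img_w, float(w) * img_w
    y_c, h = float(y_c) * img_h, float(h) * img_h
    return int(cls), x_c - w / 2, y_c - h / 2, x_c + w / 2, y_c + h / 2


# A 200x200 box with its top-left corner at (100, 50) in a 640x480 image:
print(to_yolo_line(0, 100, 50, 300, 250, 640, 480))
```

Because the class id is only an index, the order of `names` in the yaml file must match classes.txt exactly.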

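- Create the configuration file xxx.yaml

```yaml
# Training set: YOLOv5 reads every image in this folder for training. If possible, split the samples
# (or annotate them in batches) into separate training, validation, and test sets.
# These are relative paths, so YOLOv5 searches for the samples starting from the directory containing train.py.
train: ./metadata/images
# Validation set
val: ./metadata/images
# Test set
test: ./metadata/images
# Number of classes: however many classes were used during annotation; must equal the number of entries in names
nc: 3
# Class list: enumerate all classes. You can copy them from labels/classes.txt; keep the quotes
# and keep the same order as classes.txt
names: ["a", "b", "c"]
```

Here the training, validation, and test sets all point to the same folder, so validation is done on the training images and the results will look better than they really are. Alternatively, you can lay out the train, validation, and test samples with the directory structure below: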

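```text
├── images          // all image data
│   ├── test        // test-set images
│   │   └── xxx.jpg
│   ├── train       // training-set images
│   │   └── xxx.jpg
│   └── val         // validation-set images
│       └── xxx.jpg
└── labels          // all finished annotations
    ├── test        // test-set label files
    │   └── xxx.txt
    ├── train       // training-set label files
    │   └── xxx.txt
    └── val         // validation-set label files
        └── xxx.txt
```

Note: within each split, every image file under images must have a label file with the same name under the matching labels folder (for example images/train/xxx.jpg and labels/train/xxx.txt).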
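YOLOv5 finds each image's label by swapping the `images` directory for `labels` and the file extension for `.txt`. A name mismatch therefore quietly turns an annotated image into an unlabeled background image. The sketch below checks one split for that and also validates each label line: five fields, a class id in `0..nc-1`, and coordinates in [0, 1]. It assumes the layout above, and `IMAGE_EXTS` is an illustrative subset of the extensions YOLOv5 accepts.

```python
import os

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}  # assumption: adjust to the formats you use


def check_split(root, split, nc):
    """Compare <root>/images/<split> with <root>/labels/<split>; return a list of problems."""
    problems = []
    image_dir = os.path.join(root, "images", split)
    label_dir = os.path.join(root, "labels", split)
    for name in sorted(os.listdir(image_dir)):
        stem, ext = os.path.splitext(name)
        if ext.lower() not in IMAGE_EXTS:
            continue
        label_path = os.path.join(label_dir, stem + ".txt")
        if not os.path.isfile(label_path):
            # YOLOv5 treats such an image as background, which may not be what you intended
            problems.append(f"{split}/{name}: no label file")
            continue
        with open(label_path) as f:
            for lineno, line in enumerate(f, 1):
                fields = line.split()
                if not fields:
                    continue
                if len(fields) != 5:
                    problems.append(f"{label_path}:{lineno}: expected 5 fields, got {len(fields)}")
                    continue
                try:
                    cls = int(fields[0])
                    coords = [float(v) for v in fields[1:]]
                except ValueError:
                    problems.append(f"{label_path}:{lineno}: non-numeric value")
                    continue
                if not 0 <= cls < nc:
                    problems.append(f"{label_path}:{lineno}: class {cls} outside 0..{nc - 1}")
                if any(not 0.0 <= v <= 1.0 for v in coords):
                    problems.append(f"{label_path}:{lineno}: coordinates not normalized to [0, 1]")
    return problems
```

Run it once per split with the `nc` from your yaml, for example `check_split("./dataset", "train", 3)`. An empty list means nothing suspicious was found.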

Here is a Python script that splits the samples automatically:

```python
import os
import random
import argparse
import shutil

# Labels are looked up in the "labels" folder next to the source folder. For example, if
# --source_path is ./dataset/images, all finished labels are expected in ./dataset/labels.
LABELS_PATH = "./labels"

parser = argparse.ArgumentParser()
# Folder containing the unsplit images
parser.add_argument('--source_path', type=str, required=True, help='input images path')
# Where the split dataset is written; created if it does not exist
parser.add_argument('--save_path', default='./save_path', type=str, help='new train dataset path')
# Split ratio as train:val:test; the three integers must add up to 10
parser.add_argument("--dataset_split_rate", default="7:2:1", type=str,
                    help="dataset split rate, default: 7:2:1 as train:val:test")
opt = parser.parse_args()

source_path = opt.source_path
train_path = os.path.join(opt.save_path, "images/train")
val_path = os.path.join(opt.save_path, "images/val")
test_path = os.path.join(opt.save_path, "images/test")

if not os.path.exists(source_path):
    raise Exception(source_path + " does not exist")
for path in (train_path, val_path, test_path):
    os.makedirs(path, exist_ok=True)

# Parse and validate the split ratio
rates = opt.dataset_split_rate.split(":")
if len(rates) != 3 or not all(r.isdigit() for r in rates):
    raise Exception("dataset_split_rate must be three integers, e.g. 7:2:1")
train_dataset_rate, val_dataset_rate, test_dataset_rate = (int(r) for r in rates)
rate_sum = train_dataset_rate + val_dataset_rate + test_dataset_rate
if rate_sum != 10:
    raise Exception("dataset sum rate must be 10, current value: " + str(rate_sum))
train_dataset_rate *= 0.1
val_dataset_rate *= 0.1

# Map every source image to its label file: <source_path>/../labels/<name>.txt
all_dataset = {}
for image in os.listdir(source_path):
    image_path = os.path.abspath(os.path.join(source_path, image))
    fname = os.path.splitext(image)[0]
    all_dataset[image_path] = os.path.abspath(os.path.join(source_path, "..", LABELS_PATH, fname + ".txt"))

if len(all_dataset) < 3:
    raise Exception("at least 3 images are required")

image_orders = list(all_dataset.keys())
random.shuffle(image_orders)

# Fill the training set first, then the validation set; the remainder becomes the test set
train_dataset_max_index = int(train_dataset_rate * len(image_orders))
val_dataset_max_index = int(val_dataset_rate * len(image_orders))
train_dataset, val_dataset, test_dataset = {}, {}, {}
for k in image_orders:
    if len(train_dataset) < train_dataset_max_index:
        train_dataset[k] = all_dataset[k]
    elif len(val_dataset) < val_dataset_max_index:
        val_dataset[k] = all_dataset[k]
    else:
        test_dataset[k] = all_dataset[k]


def save_split(dataset, image_dir, split_name):
    """Copy the images into image_dir and their labels into <save_path>/labels/<split_name>."""
    label_dir = os.path.abspath(os.path.join(image_dir, "..", "..", LABELS_PATH, split_name))
    os.makedirs(label_dir, exist_ok=True)
    for image_file, label_file in dataset.items():
        shutil.copy(image_file, image_dir)
        # YOLOv5 treats an image without a label file as background; skip the label copy instead of crashing
        if os.path.exists(label_file):
            shutil.copy(label_file, label_dir)


save_split(train_dataset, train_path, "train")
save_split(val_dataset, val_path, "val")
save_split(test_dataset, test_path, "test")
```
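The split script fills the training set first, then the validation set, and puts everything left over into the test set, so the resulting sizes can be worked out in advance. The sketch below mirrors that arithmetic. `split_counts` is a hypothetical helper, not part of the script, and it uses integer arithmetic to avoid floating-point rounding.

```python
def split_counts(n_images, rate="7:2:1"):
    """Return the (train, val, test) sizes the split script should produce for n_images.

    train and val each get floor(ratio * n); test gets whatever is left over.
    """
    parts = [int(p) for p in rate.split(":")]
    if len(parts) != 3 or sum(parts) != 10:
        raise ValueError("rate must be three integers summing to 10, e.g. 7:2:1")
    train = n_images * parts[0] // 10
    val = n_images * parts[1] // 10
    return train, val, n_images - train - val


print(split_counts(103))  # 72 / 20 / 11
```

With very small datasets, a split can come out empty. For example, 4 images at 7:2:1 give 2 / 0 / 2, which leaves no validation images. In that case, collect more samples or adjust the ratio.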

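Run the split:

```shell
# --source_path: directory containing all sample images; --save_path: where the split images are written;
# --dataset_split_rate: split ratio (training set:validation set:test set)
python xxx.py --source_path ./metadata/images --save_path ./dataset --dataset_split_rate 7:2:1
```

The samples are now saved under dataset. Create an xxx.yaml configuration file in dataset: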

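```yaml
# Example, assuming the root directory is named dataset
# Training set
train: ./dataset/images/train
# Validation set
val: ./dataset/images/val
# Test set
test: ./dataset/images/test
# Number of classes: however many classes were used during annotation
nc: 3
# Class list: enumerate all classes. You can copy them from labels/classes.txt; keep the quotes
# and keep the same order as classes.txt
names: ["a", "b", "c"]
```

- Train the model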
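Before launching training, it can help to confirm that the active yolo environment really has the versions pinned in requirements.txt (for example `torch==1.7.1` and `tensorboard==2.4.1`), since the rest of the tutorial assumes them. This sketch uses only the standard library's `importlib.metadata` (Python 3.8+). It strips local build tags such as `+cu110` before comparing. The pins in `__main__` are examples, so extend them as you like.

```python
from importlib import metadata


def installed_version(package):
    """Installed version without a local build tag (e.g. '1.7.1+cu110' -> '1.7.1'), or None."""
    try:
        return metadata.version(package).split("+")[0]
    except metadata.PackageNotFoundError:
        return None


def check_pins(pins):
    """Return {package: installed_version} for every package whose version differs from its pin."""
    mismatches = {}
    for package, wanted in pins.items():
        found = installed_version(package)
        if found != wanted:
            mismatches[package] = found
    return mismatches


if __name__ == "__main__":
    print(check_pins({"torch": "1.7.1", "tensorboard": "2.4.1"}) or "all pins satisfied")
```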

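```shell
conda activate yolo
# Enter the yolov5 source directory
cd yolov5
# Manually download the base model, pinned to the v6.0 release
wget https://github.com/ultralytics/yolov5/releases/download/v6.0/yolov5s.pt
# Test the model. When it finishes, the console prints the inference results and "Results saved to runs/detect/xxx";
# open that folder and check that the inference results look correct
python detect.py --weights yolov5s.pt --img 640 --conf 0.25 --source data/images/
# Start training. --data must point to the yaml file created above (--nosave: only save the final checkpoint).
# When training finishes, the console shows where the weights were saved: Optimizer stripped from runs/train/exp/weights/xxx.pt
python train.py --img 640 --batch 50 --epochs 100 --data ../metadata/xxx.yaml --weights yolov5s.pt --nosave --cache
# If you see "requirements: tensorboard>=2.4.1 not found and is required by YOLOv5", run the following manually
pip install tensorboard==2.4.1
# Check that the trained model works. --weights: the trained model file; --img: same as the --img used for training;
# --conf: minimum confidence, boxes scoring below it are not drawn; --source: directory of images to run inference on
python detect.py --weights runs/train/exp/weights/xxx.pt --img 640 --conf 0.25 --source data/images/
```

- Export to a model usable by ncnn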

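```shell
# Export to ONNX; --weights must point to the model trained in the previous step.
# --train exports in model.train() mode, so the three detection heads are output without YOLOv5's box decoding
python export.py --weights ./runs/train/exp/weights/xxx.pt --img 640 640 --batch 1 --train --simplify --include onnx --opset 11
# Simplify the ONNX model
python -m onnxsim ./runs/train/exp/weights/xxx.onnx ./runs/train/exp/weights/yolov5s-sim.onnx
# Convert to ncnn: either use https://convertmodel.com/ (choose ONNX to ncnn), or, if you built ncnn yourself,
# run the following command from install/bin
./onnx2ncnn ./runs/train/exp/weights/yolov5s-sim.onnx ./runs/train/exp/weights/yolov5s.param ./runs/train/exp/weights/yolov5s.bin
```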
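YOLOv5 writes each training run to a new directory (exp, exp2, exp3, ...), so the xxx.pt path in the commands above changes from run to run. Here is a minimal sketch for locating the most recently written weights file. It assumes the default `runs/train/<run>/weights/*.pt` layout, and `latest_weights` is an illustrative name.

```python
import glob
import os


def latest_weights(train_root="runs/train"):
    """Return the most recently modified .pt file under <train_root>/*/weights/, or None."""
    candidates = glob.glob(os.path.join(train_root, "*", "weights", "*.pt"))
    return max(candidates, key=os.path.getmtime) if candidates else None


if __name__ == "__main__":
    print(latest_weights() or "no weights found under runs/train")
```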

- Modify the configuration

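Open the generated yolov5s.param file and change the Reshape parameters: replace every 0=6400, 0=1600, and 0=400 with 0=-1. A value of -1 lets ncnn infer that dimension, so inputs that are not exactly 640x640 can still be reshaped. See the figure below.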

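Record the second-to-last column of the three Permute lines, as shown in the figure below. For example, my configuration gave "output", "365", and "385". If the second-to-last column of any Permute line other than output is not purely numeric, every later step may behave unpredictably; retrain the model and check your YOLOv5 version.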
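The Reshape edit and the Permute lookup above can also be scripted. This is a minimal sketch that assumes the standard ncnn text param layout:

- Line 1 is the magic number 7767517.
- Line 2 holds the layer and blob counts.
- Each later line reads `type name input_count output_count inputs... outputs... key=value...`.

Rather than relying on the "second-to-last column", `permute_outputs` reads each Permute layer's declared output blobs. `process` rewrites the file in place, so keep a backup. It re-joins tokens with single spaces, which ncnn's whitespace-separated parser should accept.

```python
NCNN_PARAM_MAGIC = "7767517"  # first line of an ncnn text .param file


def patch_reshape(lines, sizes=("6400", "1600", "400")):
    """Replace 0=<size> with 0=-1 on Reshape layers (the manual edit described above)."""
    targets = {"0=" + s for s in sizes}
    patched = []
    for line in lines:
        tokens = line.split()
        if tokens and tokens[0] == "Reshape":
            line = " ".join("0=-1" if t in targets else t for t in tokens)
        patched.append(line)
    return patched


def permute_outputs(lines):
    """Return the output blob names of all Permute layers, in file order."""
    names = []
    for line in lines[2:]:  # skip the magic number and the layer/blob count line
        tokens = line.split()
        if len(tokens) >= 4 and tokens[0] == "Permute":
            n_in, n_out = int(tokens[2]), int(tokens[3])
            names.extend(tokens[4 + n_in:4 + n_in + n_out])
    return names


def process(path):
    """Patch the .param file in place and return the blob names to pass to ex.extract()."""
    with open(path) as f:
        lines = f.read().splitlines()
    if not lines or lines[0].strip() != NCNN_PARAM_MAGIC:
        raise ValueError(path + " does not look like an ncnn .param file")
    with open(path, "w") as f:
        f.write("\n".join(patch_reshape(lines)) + "\n")
    return permute_outputs(lines)
```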

III. Android Deployment

- Open Android Studio, create or open a project, and switch the project view mode to Project

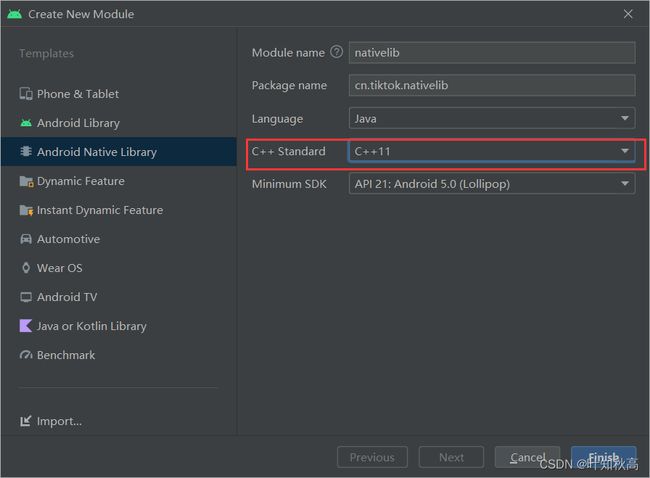

- Create a module, choose Android Native Library as the module type, and make sure to select C++11

- In the Android Studio toolbar, click Tools -> SDK Manager and check whether CMake 3.10.2 is installed; if it is not, install it

- Edit the CMakeLists.txt file at src -> main -> cpp in the module directory.

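```cmake
cmake_minimum_required(VERSION 3.4.1)

project(yolov5ncnn) # note this name; setting it to the module name is recommended

# Prebuilt ncnn package for the ABI currently being built
set(ncnn_DIR ${CMAKE_SOURCE_DIR}/ncnnvulkan/${ANDROID_ABI}/lib/cmake/ncnn)
find_package(ncnn REQUIRED)

# The target name yolov5ncnn matches the name above. yolov5ncnn_jni.cpp is the .cpp file created next to
# CMakeLists.txt when the module was created (you can also create it manually later)
add_library(yolov5ncnn SHARED yolov5ncnn_jni.cpp)

# Same target name as above; keep the two libraries below unchanged
target_link_libraries(yolov5ncnn
    ncnn
    jnigraphics
)
```

You have to build ncnnvulkan yourself or download a release package from here, choosing ncnn-xxx-android-vulkan.zip. If GitHub is not reachable, you can download it here instead (the author asks readers to use this link to support the work).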
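Because `ncnn_DIR` is built from `${ANDROID_ABI}`, `find_package(ncnn)` runs once per ABI. Every ABI listed in the module's `abiFilters` (set in build.gradle) therefore needs its own `ncnnvulkan/<abi>/lib/cmake/ncnn` directory containing ncnn's CMake package file. The quick pre-build check below assumes that file is named `ncnnConfig.cmake` or `ncnn-config.cmake`, the two names CMake's config mode looks for. The default ABI list copies the `abiFilters` used in this tutorial.

```python
import os

CONFIG_NAMES = ("ncnnConfig.cmake", "ncnn-config.cmake")


def missing_abis(ncnn_root, abis=("armeabi-v7a", "arm64-v8a", "x86", "x86_64")):
    """Return the ABIs whose <ncnn_root>/<abi>/lib/cmake/ncnn folder has no ncnn CMake config file."""
    missing = []
    for abi in abis:
        cmake_dir = os.path.join(ncnn_root, abi, "lib", "cmake", "ncnn")
        if not any(os.path.isfile(os.path.join(cmake_dir, n)) for n in CONFIG_NAMES):
            missing.append(abi)
    return missing


if __name__ == "__main__":
    print(missing_abis("src/main/cpp/ncnnvulkan") or "all ABIs present")
```

If an ABI shows up as missing, either add its folder or remove it from `abiFilters`.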

Put the ncnnvulkan dependency in the same directory as CMakeLists.txt, with the following structure:

```text
ncnnvulkan
├─arm64-v8a
│ ├─include
│ │ ├─glslang
│ │ │ ├─Include
│ │ │ ├─MachineIndependent
│ │ │ │ └─preprocessor
│ │ │ ├─Public
│ │ │ └─SPIRV
│ │ └─ncnn
│ └─lib
│   └─cmake
│     └─ncnn
├─armeabi-v7a
│ ├─include
│ │ ├─glslang
│ │ │ ├─Include
│ │ │ ├─MachineIndependent
│ │ │ │ └─preprocessor
│ │ │ ├─Public
│ │ │ └─SPIRV
│ │ └─ncnn
│ └─lib
│   └─cmake
│     └─ncnn
└─…  (further ABI folders with the same layout)
CMakeLists.txt
```

- Edit yolov5ncnn_jni.cpp, which sits next to CMakeLists.txt (the .cpp file named in CMakeLists.txt)

```cpp
// Tencent is pleased to support the open source community by making ncnn available.
//
// Copyright (C) 2020 THL A29 Limited, a Tencent company. All rights reserved.
//
// Licensed under the BSD 3-Clause License (the "License"); you may not use this file except
// in compliance with the License. You may obtain a copy of the License at
//
// https://opensource.org/licenses/BSD-3-Clause
//
// Unless required by applicable law or agreed to in writing, software distributed
// under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR
// CONDITIONS OF ANY KIND, either express or implied. See the License for the
// specific language governing permissions and limitations under the License.

#include <android/asset_manager_jni.h>
#include <android/bitmap.h>
#include <android/log.h>

#include <jni.h>

#include <algorithm>
#include <string>
#include <vector>

// ncnn
#include "layer.h"
#include "net.h"
#include "benchmark.h"

static ncnn::UnlockedPoolAllocator g_blob_pool_allocator;
static ncnn::PoolAllocator g_workspace_pool_allocator;

static ncnn::Net yolov5;

// Custom layer implementing YOLOv5's Focus (space-to-depth slicing). YOLOv5 v6.0 replaced
// Focus with a regular 6x6 stride-2 convolution, so a v6.0 model should not need it.
class YoloV5Focus : public ncnn::Layer
{
public:
    YoloV5Focus()
    {
        one_blob_only = true;
    }

    virtual int forward(const ncnn::Mat& bottom_blob, ncnn::Mat& top_blob, const ncnn::Option& opt) const
    {
        int w = bottom_blob.w;
        int h = bottom_blob.h;
        int channels = bottom_blob.c;

        int outw = w / 2;
        int outh = h / 2;
        int outc = channels * 4;

        top_blob.create(outw, outh, outc, 4u, 1, opt.blob_allocator);
        if (top_blob.empty())
            return -100;

        #pragma omp parallel for num_threads(opt.num_threads)
        for (int p = 0; p < outc; p++)
        {
            const float* ptr = bottom_blob.channel(p % channels).row((p / channels) % 2) + ((p / channels) / 2);
            float* outptr = top_blob.channel(p);

            for (int i = 0; i < outh; i++)
            {
                for (int j = 0; j < outw; j++)
                {
                    *outptr = *ptr;

                    outptr += 1;
                    ptr += 2;
                }

                ptr += w;
            }
        }

        return 0;
    }
};

DEFINE_LAYER_CREATOR(YoloV5Focus)

// A detected box: (x, y) is its top-left corner, (w, h) its size
struct Object
{
    float x;
    float y;
    float w;
    float h;
    int label;
    float prob;
};

static inline float intersection_area(const Object& a, const Object& b)
{
    if (a.x > b.x + b.w || a.x + a.w < b.x || a.y > b.y + b.h || a.y + a.h < b.y)
    {
        // no intersection
        return 0.f;
    }

    float inter_width = std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x);
    float inter_height = std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y);

    return inter_width * inter_height;
}

// ... the listing is cut off here, at "static void qsort_descent_inplace(std::vector";
// the rest of yolov5ncnn_jni.cpp is not reproduced
```

- Note lines 432, 451, and 470: replace their three parameters, in order, with the Permute values recorded in the "Modify the configuration" step of Part II. That is, change line 432 to **ex.extract("output", out);**, line 451 to **ex.extract("365", out);**, and line 470 to **ex.extract("385", out);**.

- Modify the class list on line 521 so that it matches labels/classes.txt in your sample set.

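- Modify line 351, `constructortorId = env->GetMethodID(objCls, "<init>", "(Lcom/tencent/yolov5ncnn/YoloV5Ncnn;)V");`: replace "com/tencent/yolov5ncnn/YoloV5Ncnn" with your own Java class, keeping the leading "(L" and the trailing ";)V". Alternatively, create this package and class under src/main/java in the module, following the same package structure.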

- Modify line 348, `jclass localObjCls = env->FindClass("com/tencent/yolov5ncnn/YoloV5Ncnn$Obj");`: replace "com/tencent/yolov5ncnn/YoloV5Ncnn$Obj" with your own Java class in the same way, but do not drop the trailing $Obj.
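The edits on lines 348 and 351 and the class list on line 521 must all agree with your Java package and classes.txt. The helper below derives those strings from a fully qualified Java class name such as `com.example.det.MyNcnn` (a hypothetical name). It assumes the list on line 521 is a C `const char*` array. The name `class_names` below is a placeholder, so keep whatever name your file uses.

```python
def jni_strings(java_class):
    """JNI strings for an outer class such as 'com.tencent.yolov5ncnn.YoloV5Ncnn'."""
    internal = java_class.replace(".", "/")  # JNI uses slashes instead of dots
    return {
        # argument to env->FindClass(...) for the inner class Obj
        "obj_class": internal + "$Obj",
        # constructor signature of a non-static inner class: takes the outer instance, returns void
        "ctor_sig": "(L" + internal + ";)V",
    }


def class_names_array(classes_txt):
    """Turn the contents of labels/classes.txt into a C string-array initializer."""
    names = [line.strip() for line in classes_txt.splitlines() if line.strip()]
    quoted = ", ".join('"' + n.replace("\\", "\\\\").replace('"', '\\"') + '"' for n in names)
    # "class_names" is a placeholder; use the variable name from your own file
    return "static const char* class_names[] = {" + quoted + "};"


if __name__ == "__main__":
    print(jni_strings("com.example.det.MyNcnn"))
    print(class_names_array("a\nb\nc\n"))
```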

- Create the Java class that the JNI code reflects on

```java
// Tencent is pleased to support the open source community by making ncnn available.
//
// Copyright (C) 2020 THL A29 Limited, a Tencent company. All rights reserved.
//
// Licensed under the BSD 3-Clause License (the "License"); you may not use this file except
// in compliance with the License. You may obtain a copy of the License at
//
// https://opensource.org/licenses/BSD-3-Clause
//
// Unless required by applicable law or agreed to in writing, software distributed
// under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR
// CONDITIONS OF ANY KIND, either express or implied. See the License for the
// specific language governing permissions and limitations under the License.

package com.tencent.yolov5ncnn;

import android.content.res.AssetManager;
import android.graphics.Bitmap;

public class YoloV5Ncnn
{
    public native boolean Init(AssetManager mgr);

    public class Obj {
        // x coordinate of the box's top-left corner
        public float x;
        // y coordinate of the box's top-left corner
        public float y;
        // box width
        public float w;
        // box height
        public float h;
        // label (class name)
        public String label;
        // confidence
        public float prob;

        @Override
        public String toString() {
            return "Obj{" +
                    "x=" + x +
                    ", y=" + y +
                    ", w=" + w +
                    ", h=" + h +
                    ", label='" + label + '\'' +
                    ", prob=" + prob +
                    '}';
        }
    }

    public native Obj[] Detect(Bitmap bitmap, boolean use_gpu);

    static {
        // Must match the library target name passed to add_library() in CMakeLists.txt
        System.loadLibrary("yolov5ncnn");
    }
}
```

- Modify the module's build.gradle

```groovy
plugins {
    id 'com.android.library'
}

android {
    namespace 'cn.tiktok.ncnn'
    // appcompat 1.4.x and material 1.5.x (see dependencies) require compileSdk 31 or higher
    compileSdk 31

    defaultConfig {
        minSdk 24
        archivesBaseName = "$applicationId"

        testInstrumentationRunner "androidx.test.runner.AndroidJUnitRunner"
        consumerProguardFiles "consumer-rules.pro"
        externalNativeBuild {
            cmake {
                cppFlags "-std=c++11"
            }
        }
        ndk {
            // Remove ABIs you don't need to shrink the package; each ABI kept here needs its own folder under ncnnvulkan
            abiFilters "armeabi-v7a", "arm64-v8a", "x86", "x86_64"
        }
    }

    buildTypes {
        release {
            minifyEnabled false
            proguardFiles getDefaultProguardFile('proguard-android-optimize.txt'), 'proguard-rules.pro'
        }
    }

    externalNativeBuild {
        cmake {
            // Path to the CMakeLists.txt edited above (src/main/cpp)
            path "src/main/cpp/CMakeLists.txt"
            version "3.10.2"
        }
    }

    compileOptions {
        sourceCompatibility JavaVersion.VERSION_1_8
        targetCompatibility JavaVersion.VERSION_1_8
    }
}

dependencies {
    implementation 'androidx.appcompat:appcompat:1.4.1'
    implementation 'com.google.android.material:material:1.5.0'
    testImplementation 'junit:junit:4.13.2'
    androidTestImplementation 'androidx.test.ext:junit:1.1.3'
    androidTestImplementation 'androidx.test.espresso:espresso-core:3.4.0'
}
```

- Deploy the model

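Create an assets folder under the module's src/main directory, then check its folder type. If it was created as an ordinary folder, as shown in the figure below,

right-click the folder and choose Mark Directory as -> Resources Root.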

The "Export to a model usable by ncnn" step of Part II produced two files, yolov5s.param and yolov5s.bin; copy both into the assets folder.
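Before syncing, it is worth confirming that both files actually landed in assets and that the .param file is the text format ncnn expects, whose first line is the magic number 7767517. Below is a minimal check. The default stem `yolov5s` matches the file names above and assumes your JNI `Init` loads those same names. `check_assets` is an illustrative helper.

```python
import os

NCNN_PARAM_MAGIC = "7767517"  # first line of every ncnn text .param file


def check_assets(assets_dir, stem="yolov5s"):
    """Return a list of problems with <assets_dir>/<stem>.param and <stem>.bin (empty list = OK)."""
    problems = []
    param = os.path.join(assets_dir, stem + ".param")
    binary = os.path.join(assets_dir, stem + ".bin")
    if not os.path.isfile(param):
        problems.append("missing " + param)
    else:
        with open(param) as f:
            if f.readline().strip() != NCNN_PARAM_MAGIC:
                problems.append(param + " does not start with the ncnn magic number")
    if not os.path.isfile(binary) or os.path.getsize(binary) == 0:
        problems.append("missing or empty " + binary)
    return problems


if __name__ == "__main__":
    print(check_assets("src/main/assets") or "assets look fine")
```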

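Then add this module to your app as a dependency and use it, replacing the module name with your own.

Finally, choose File -> Sync Project with Gradle Files from the Android Studio menu bar and wait for it to finish.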

IV. Testing

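```java
import android.app.Activity;
import android.graphics.Bitmap;
import android.os.Bundle;
import android.util.Log;

import com.tencent.yolov5ncnn.YoloV5Ncnn;

public class MainActivity extends Activity {
    private YoloV5Ncnn yolov5ncnn = new YoloV5Ncnn();

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);

        // Initialize (loads the model from assets)
        boolean ret_init = yolov5ncnn.Init(getAssets());
        if (!ret_init) {
            Log.e("MainActivity", "yolov5ncnn Init failed");
        }

        // Arguments: the image, and whether to use the GPU
        // (imageBitmap is a Bitmap you have loaded yourself; it is not defined in this snippet)
        YoloV5Ncnn.Obj[] objects = yolov5ncnn.Detect(imageBitmap, false);

        // Read the results using the field comments in YoloV5Ncnn.Obj
        ...
    }
}
```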