深度学习第三周,天气识别

第三周,天气识别

要求:

本地读取并加载数据,可选择本地图片进行识别

如何加速代码训练?

测试集accuracy到达91%

拔高:

如何解决过拟合问题?

测试集accuracy到达93%

参考文章:https://mtyjkh.blog.csdn.net/article/details/117186183

作者:K同学啊

本文为360天深度学习训练营中的学习记录博客

一、导入数据

import matplotlib.pyplot as plt

import numpy as np

from tensorflow import keras

from tensorflow.keras import layers,models

import os,PIL,pathlib

data_dir = 'D:\AIoT学习\深度学习训练营\第三周\weather_photos'

data_dir = pathlib.Path(data_dir)

该文章数据为训练内部数据集

2、查看数据

Tips:

path.glob(pattern):在此路径表示的目录中全局查找给定的相对pattern,生成所有匹配的文件(任何类型)

在转成列表后可以查看具体文件名

image_count = len(list(data_dir.glob('*/*.jpg')))

print('图片总数为:',image_count)

图片总数为: 1125

roses = list(data_dir.glob('sunrise/*.jpg'))

PIL.Image.open(str(roses[0])) #根据path直接打开图片

二、 数据预处理

1、加载数据

使用image_dataset_from_directory方法将磁盘中的数据加载到tf.data.Dataset中

- 作用

将文件夹中的数据加载到tf.data.Dataset中,且加载的同时会打乱数据。 - 返回一个tf.data.Dataset对象

参数

- directory: 数据所在目录。如果标签是inferred(默认),则它应该包含子目录,每个目录包含一个类的图像。否则,将忽略目录结构。

- labels: inferred(标签从目录结构生成),或者是整数标签的列表/元组,其大小与目录中找到的图像文件的数量相同。标签应根据图像文件路径的字母顺序排序(通过Python中的os.walk(directory)获得)。

- label_mode:

int:标签将被编码成整数(使用的损失函数应为:sparse_categorical_crossentropy loss)。

categorical:标签将被编码为分类向量(使用的损失函数应为:categorical_crossentropy loss)。

binary:意味着标签(只能有2个)被编码为值为0或1的float32标量(例如:binary_crossentropy)。

None:(无标签)。 - class_names: 仅当labels为inferred时有效。这是类名称的明确列表(必须与子目录的名称匹配)。用于控制类的顺序(否则使用字母数字顺序)。

- color_mode: grayscale、rgb、rgba之一。默认值:rgb。图像将被转换为1、3或者4通道。

- batch_size: 数据批次的大小。默认值:32

- image_size: 从磁盘读取数据后将其重新调整大小。默认:(256,256)。由于管道处理的图像批次必须具有相同的大小,因此该参数必须提供。

- shuffle: 是否打乱数据。默认值:True。如果设置为False,则按字母数字顺序对数据进行排序。

- seed: 用于shuffle和转换的可选随机种子。

- validation_split: 0和1之间的可选浮点数,可保留一部分数据用于验证。

- subset: training或validation之一。仅在设置validation_split时使用。

- interpolation: 字符串,当调整图像大小时使用的插值方法。默认为:bilinear。支持bilinear, nearest, bicubic, area, lanczos3, lanczos5, gaussian, mitchellcubic。

- follow_links: 是否访问符号链接指向的子目录。默认:False。

————————————————

原文链接:https://blog.csdn.net/qq_38251616/article/details/117018789

train_ds = keras.preprocessing.image_dataset_from_directory(

directory = data_dir,

validation_split = 0.2,

subset = 'training',

seed = 92,

batch_size = 32,

)

Found 1125 files belonging to 4 classes.

Using 900 files for training.

#设置验证集

val_ds = keras.preprocessing.image_dataset_from_directory(

directory = data_dir,

validation_split = 0.2,

subset = 'validation',

seed = 92,#随机种子与训练集相同

batch_size = 32)

Found 1125 files belonging to 4 classes.

Using 225 files for validation.

#查看数据集集标签,标签按字母顺序对应目录名

class_name = train_ds.class_names

print(class_name)

[‘cloudy’, ‘rain’, ‘shine’, ‘sunrise’]

2、可视化数据

通过tf.data.Dataset对象的take方法

take()

功能:用于返回一个新的Dataset对象,新的Dataset对象包含的数据是原Dataset对象的子集。

参数:

count:整型,用于指定前count条数据用于创建新的Dataset对象,如果count为-1或大于原Dataset对象的size,则用原Dataset对象的全部数据创建新的对象。

import tensorflow as tf

plt.figure(figsize=(20,10))

for images,labels in train_ds.take(1): #由于前面batch_size选的默认32,因此一批take中由32张图数据,这里我们只选取第一批作为展示

for i in range(20):

ax = plt.subplot(5,10,i+1)

plt.imshow(images[i].numpy().astype('uint8')) #这里调用的astype时tensorflow中与numpy相关的方法而不是numpy本身

plt.title(class_name[labels[i]])

plt.axis('off')

3、再次检查数据

for image_batch,labels_batch in train_ds:

print(image_batch.shape)

print(labels_batch.shape)

break

(32, 256, 256, 3)

(32,)

- Image_batch是形状的张量(32,180,180,3)。这是一批形状180x180x3的32张图片(最后一维指的是彩色通道RGB)。

- Label_batch是形状(32,)的张量,这些标签对应32张图片

4、配置数据

Datase对象的shuffle(buffer_size缓冲区大小)方法,随机打乱,=1时无打乱效果,数据集本身比较随机可以设置小一些

- 首先,Dataset会取所有数据的前buffer_size数据项,填充 buffer

- 然后,从buffer中随机选择一条数据输出

- 然后,从Dataset中顺序选择最新的一条数据填充到buffer中

- 然后在从Buffer中随机选择下一条数据输出。

- 当值为整个Dataset元素总数时,完全打乱。

tf.data.AUTOTUNE

- 可以通过该方法自动选择你需要的缓冲区尺寸

Dataset的cache(filename)方法,可以将数据缓存到内存中,加速运行

- 当卡按数据迭代完成,元素在特定位置实现缓存,后续迭代会利用缓存数据集

Dataset 的 prefetch()方法:

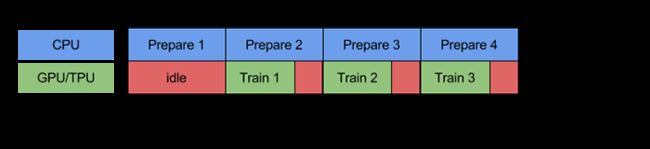

- prefetch(buffer_size)功能详细介绍:CPU 正在准备数据时,加速器处于空闲状态。相反,当加速器正在训练模型时,CPU 处于空闲状态。因此,训练所用的时间是 CPU 预处理时间和加速器训练时间的总和。prefetch()将训练步骤的预处理和模型执行过程重叠到一起。当加速器正在执行第 N 个训练步时,CPU 正在准备第 N+1 步的数据。这样做不仅可以最大限度地缩短训练的单步用时(而不是总用时),而且可以缩短提取和转换数据所需的时间。如果不使用prefetch(),CPU 和 GPU/TPU 在大部分时间都处于空闲状态

AUTOTUNE = tf.data.AUTOTUNE #-1完全打散

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

三、构建CNN网络

卷积神经网络(CNN)的输入是张量 (Tensor) 形式的 (image_height, image_width, color_channels),包含了图像高度、宽度及颜色信息。不需要输入batch size。color_channels 为 (R,G,B) 分别对应 RGB 的三个颜色通道(color channel)。我们需要在声明第一层时将形状赋值给参数input_shape

卷积运算一个重要的特点就是,通过卷积运算,可以使原信号特征增强,并且降低噪音。

model = models.Sequential([

layers.experimental.preprocessing.Rescaling(1./255,input_shape=(256,256,3)), #数据标准化

layers.Conv2D(16,(3,3),activation='relu',input_shape=(256,256,3)), #参数数量 3*3*3*16 + 16

layers.MaxPool2D((2,2)), #先通过最大值池化过滤掉不重要的信息,关注纹理

layers.Conv2D(32,(3,3),activation='relu'),

layers.AveragePooling2D((2,2)), #在深层则平均池化,保留低频的背景信号

layers.Conv2D(64,(3,3),activation='relu'),

layers.Flatten(), #维度等于60*60*64

layers.Dense(128,activation='relu'),

layers.Dropout(0.2), #230400*128+128

layers.Dense(len(class_name),activation='softmax')

])

model.summary()

_____________________________________Layer (type) Output Shape Param

Rescaling (None, 256, 256, 3) 0

Conv2D (None, 254, 254, 16) 448

MaxPooling (None, 127, 127, 16) 0

2D

Conv2D (None, 125, 125, 32) 4640

AveragePool2D (None, 62, 62, 32) 0

Conv2D (None, 60, 60, 64) 18496

Flatten (None, 230400) 0

Dense (None, 128) 29491328

Dropout None, 128) 0

Dense (None, 4) 516

=================================================================

Total params: 29,515,428

Trainable params: 29,515,428

Non-trainable params: 0

四、编译模型

# 设置优化其

opt = keras.optimizers.Adam(learning_rate=0.001)

model.compile(optimizer=opt,loss=keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

五、训练模型

epochs = 10

histroy = model.fit(train_ds,validation_data=val_ds,epochs=epochs)

六、模型评估

plt.figure(figsize=(12,4))

plt.subplot(121)

plt.plot(histroy.history['accuracy'],label = 'accuracy')

plt.plot(histroy.history['val_accuracy'],label='val_accuracy')

plt.legend(loc='lower right')

plt.xlabel('encho num')

plt.title('Train and val accuracy')

plt.subplot(122)

plt.plot(histroy.history['loss'],label = 'loss')

plt.plot(histroy.history['val_loss'],label = 'val_loss')

plt.legend(loc='lower right')

plt.xlabel('encho num')

plt.title('Train and val loss')

- 该模型测试集准确率与损失函数均明显低于训练集,有过拟合的可能

- 测试与训练集损失函数均未收敛,因此需要增加训练轮次

七、实验过程优化

方案一、

- 增加轮次到32轮,并将drop层超参设置为0.3,以减弱过拟合

model2 = models.Sequential([

layers.experimental.preprocessing.Rescaling(1./255,input_shape=(256,256,3)), #数据标准化

layers.Conv2D(16,(3,3),activation='relu',input_shape=(256,256,3)), ###此处16可以理解为卷积窗种类,可训练参数数量为3*3*16*3+16

#卷积层输出单个特征图大小256-3+1=254*254,共16个map

layers.MaxPool2D((2,2)), 息,关注纹理

layers.Conv2D(32,(3,3),activation='relu'),

layers.AveragePooling2D((2,2)), #在深层则平均池化,保留低频的背景信号

layers.Conv2D(64,(3,3),activation='relu'),

layers.Flatten(), #60*60*64

layers.Dense(128,activation='relu'), #Flatten输出维度*128+128

layers.Dropout(0.3),

layers.Dense(len(class_name),activation='softmax')

])

model2.summary()

opt = keras.optimizers.Adam(learning_rate=0.001)

model2.compile(optimizer=opt,loss=keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

epochs = 32

histroy = model2.fit(train_ds,validation_data=val_ds,epochs=epochs)

plt.figure(figsize=(12,4))

plt.subplot(121)

plt.plot(histroy.history['accuracy'],label = 'accuracy')

plt.plot(histroy.history['val_accuracy'],label='val_accuracy')

plt.legend(loc='lower right')

plt.xlabel('encho num')

plt.title('Train and val accuracy')

plt.subplot(122)

plt.plot(histroy.history['loss'],label = 'loss')

plt.plot(histroy.history['val_loss'],label = 'val_loss')

plt.legend(loc='lower right')

plt.xlabel('encho num')

plt.title('Train and val loss')

- 训练集准确率仍在97%以上,测试准确率没有明显改善,相对稳定后很少超过90%,甚至随轮次增加,损失函数增加,表明过拟合问题仍然存在,但波动程度随着轮次增加相对减弱

- 可以试着将第一个最大值池化层变为平均池化层,更多关注背景信息

方案二

- 更改池化层

model3 = models.Sequential([

layers.experimental.preprocessing.Rescaling(1./255,input_shape=(256,256,3)),

layers.Conv2D(16,(3,3),activation='relu',input_shape=(256,256,3)),

layers.AveragePooling2D((2,2)),

layers.Conv2D(32,(3,3),activation='relu'),

layers.AveragePooling2D((2,2)),

layers.Conv2D(64,(3,3),activation='relu'),

layers.Flatten(),

layers.Dense(128,activation='relu'), *128+128

layers.Dropout(0.3),

layers.Dense(len(class_name),activation='softmax')

])

model3.summary()

准确率与损失函数图像为

相对前一个方案测试集损失函数有些许降低,但稳定性下降,过拟合仍存在,考虑是否与图片结构有关,可能边缘也包含了不少的信息,将卷积层padding方案改为padding

方案三

- 更改padding方案

layers.Conv2D(16,(3,3),padding='same',activation='relu',input_shape=(256,256,3)), #将前两层padding方案改为padding,保留更多边缘信息

方案四

加入L2正则化

对第一个卷积层进行修改

layers.Conv2D(16,(3,3),padding='same',activation='relu',input_shape=(256,256,3),kernel_regularizer=keras.regularizers.l2(0.1)),

测试集和训练集准确率仍有明显差异,由此怀疑是否时模型本身的性能问题,选择增加卷积层

model6 = models.Sequential([

layers.experimental.preprocessing.Rescaling(1./255,input_shape=(256,256,3)), #数据标准化

layers.Conv2D(16,(3,3),padding='same',activation='relu',input_shape=(256,256,3),kernel_regularizer=keras.regularizers.l2(0.1)),

layers.AveragePooling2D((2,2)),

layers.Conv2D(32,(3,3),padding='same',activation='relu'),

layers.AveragePooling2D((2,2)),

layers.Conv2D(64,(3,3),activation='relu'),

layers.AveragePooling2D((2,2)),

layers.Conv2D(128,(3,3),activation='relu'), #添加新卷积层

layers.Flatten(),

layers.Dense(128,activation='relu'),

layers.Dropout(0.3),

layers.Dense(len(class_name),activation='softmax')

])

- 本次实验结果,在后8轮模型测试集准确率稳定在90%以上,后两轮均在93%以上,训练集在97%左右。

- 可以发现虽然训练集准确率达标,但是损失函数却保持在较高水平甚至超过前面的实验方案,表明可能存在少数极端的错误分类样本主导了损失函数的大小。

总结

- 针对不同的目标应选择不同的池化层,当更注重背景信息时,更倾向于average pool,更注重图片纹理时选择max pool

- 采用平均池化有时可以减弱过拟合现象

- 针对过拟合我们也可以通过在卷积层设置时加入L2正则化,或是增大drop层参数来改善

- 有时模型准确率高但损失函数反而偏大,可能是受到了少数极端错误分类样本的影响

- 若采取各种方法后模型仍不能达到要求,可以通过增加卷积层改善模型性能

- 对于训练集,在训练之前要进行shuffle操作,以确保模型的泛化能力

- 通过Dataset对象的cache()方法和prefetch()通过缓存到内存中加速模型运行