[CV Project: PyTorch Garbage Classification] 6. Hands-On Project

1. Main program code

# 1. Import libraries
## System libraries
import os
from os import walk

## torch-related packages
import torch
import torch.nn as nn
from torchvision import datasets

## Command-line arguments
from args import args

## Data preprocessing pipeline
from transform import preprocess

## Pre-trained model loading, training, evaluation, and label mapping
from model import train, evaluate, initital_model, class_id2name

## Utilities: logger, checkpoint saving, optimizer
from utils.logger import Logger
from utils.misc import save_checkpoint, get_optimizer

## Metrics for evaluating results (classification report, confusion matrix)
from sklearn import metrics

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Collect all arguments into a dict
state = {k: v for k, v in args._get_kwargs()}
print('state = ', state)

# 2. Explore the dataset
# Walk the directory tree to inspect how the data is laid out
base_path = 'data/garbage-classify-for-pytorch'
for (dirpath, dirnames, filenames) in os.walk(base_path):
    if len(filenames) > 0:
        print('*' * 60)
        print('Directory path:', dirpath)
        print('total examples = ', len(filenames))
        print('File name Example = ', filenames[:2])

# 3. Wrap the data in ImageFolder format
TRAIN = "{}/train".format(base_path)
VALID = "{}/val".format(base_path)
print('train data_path = ', TRAIN)
print('val data_path = ', VALID)

# ImageFolder expects one sub-directory per class:
# root/dog/xxx.png
# root/dog/xxy.png
# root/dog/xxz.png
# root/cat/123.png
# root/cat/nsdf3.png
# root/cat/asd932_.png
# root (string): path to the root directory
# transform: the preprocessing function applied to every image
train_data = datasets.ImageFolder(root=TRAIN, transform=preprocess)
val_data = datasets.ImageFolder(root=VALID, transform=preprocess)

# The train and val sets must contain the same classes;
# assert raises an exception when the expression is false.
assert train_data.class_to_idx.keys() == val_data.class_to_idx.keys()
print('imgs = ', train_data.imgs[:2])

# 4. Load the data in batches
batch_size = 10
num_workers = 2
train_loader = torch.utils.data.DataLoader(train_data, batch_size=batch_size, num_workers=num_workers, shuffle=True)
val_loader = torch.utils.data.DataLoader(val_data, batch_size=batch_size, num_workers=num_workers, shuffle=False)

# Peek at one batch
image, label = next(iter(train_loader))
print(label)
print(image.shape)

# Read the label file once, then list the class names in index order
id2name = class_id2name()
class_list = [id2name[i] for i in range(len(train_data.class_to_idx))]
print('class_list = ', class_list)

# Global variable holding the best validation accuracy so far
best_acc = 0

# 5. Define the training and validation routine
def run(model, train_loader, val_loader):
    '''
    Train and validate the model.
    :param model: the initialized model
    :param train_loader: training data loader
    :param val_loader: validation data loader
    :return: None
    '''
    # Best accuracy so far, shared with the module-level variable
    global best_acc

    ## For a C-class classification problem we use CrossEntropyLoss
    criterion = nn.CrossEntropyLoss()

    ## torch.optim is a library of optimization algorithms;
    ## the optimizer holds the current parameter state and updates
    ## the parameters from the computed gradients
    optimizer = get_optimizer(model, args)

    # Load a checkpoint so training can resume from a given epoch
    if args.resume:
        # --resume checkpoint/checkpoint.pth.tar
        print('Resuming from checkpoint...')
        assert os.path.isfile(args.resume), 'Error: no checkpoint file found!!'
        # map_location lets a GPU-saved checkpoint load on a CPU-only machine
        checkpoint = torch.load(args.resume, map_location=device)
        best_acc = checkpoint['best_acc']
        state['start_epoch'] = checkpoint['epoch']
        model.load_state_dict(checkpoint['state_dict'])
        optimizer.load_state_dict(checkpoint['optimizer'])

    # Evaluation only: confusion matrix, precision, recall, F1-score
    if args.evaluate:
        print('\nEvaluate only')
        test_loss, test_acc, predict_all, labels_all = evaluate(val_loader, model, criterion, test=True)
        print('Test Loss:%.8f,Test Acc:%.2f' % (test_loss, test_acc))

        # Classification report and confusion matrix
        report = metrics.classification_report(labels_all, predict_all, target_names=class_list, digits=4)
        confusion = metrics.confusion_matrix(labels_all, predict_all)
        print('\n report ', report)
        print('\n confusion', confusion)
        return

    # Training and validation
    ## Make sure the checkpoint directory exists before the log file is opened
    os.makedirs(args.checkpoint, exist_ok=True)
    logger = Logger(os.path.join(args.checkpoint, 'log.txt'), title=None)
    ## Set the column headers of the log
    logger.set_names(['Learning Rate', 'epoch', 'Train Loss', 'Valid Loss', 'Train Acc.', 'Valid Acc.'])

    for epoch in range(state['start_epoch'], state['epochs'] + 1):
        print('[{}/{}] Training'.format(epoch, args.epochs))
        # train
        train_loss, train_acc = train(train_loader, model, criterion, optimizer)
        # val
        test_loss, test_acc = evaluate(val_loader, model, criterion, test=None)

        # Log the key metrics for this epoch
        logger.append([state['lr'], int(epoch), train_loss, test_loss, train_acc, test_acc])
        print('train_loss:%f, val_loss:%f, train_acc:%f, val_acc:%f' % (
            train_loss, test_loss, train_acc, test_acc,))

        # Save the checkpoint; 'epoch' stores the next epoch to run on resume
        is_best = test_acc > best_acc
        best_acc = max(test_acc, best_acc)
        save_checkpoint({
            'epoch': epoch + 1,
            'state_dict': model.state_dict(),
            'train_acc': train_acc,
            'test_acc': test_acc,
            'best_acc': best_acc,
            'optimizer': optimizer.state_dict()
        }, is_best, checkpoint=args.checkpoint)

    print('Best acc:')
    print(best_acc)

# Entry point
if __name__ == '__main__':
    print("hello")

    # Initialize the model
    model_name = args.model_name
    num_classes = args.num_classes
    # feature_extract=True: transfer learning, so the pre-trained feature layers
    # are frozen and only the new classifier is trained
    model_ft = initital_model(model_name, num_classes, feature_extract=True)

    # Move the model to the target device (cuda/cpu)
    model_ft.to(device)

    # Print the total number of parameters, in millions
    print('Total params: %.2fM' % (sum(p.numel() for p in model_ft.parameters()) / 1000000.0))
    # print(model_ft)

    run(model_ft, train_loader, val_loader)
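The evaluate-only branch of `run` hands `labels_all` and `predict_all` to `sklearn.metrics`. As a rough illustration of what those numbers mean, here is a minimal pure-Python sketch of a confusion matrix and the per-class precision, recall and F1 derived from it. The helper names and the toy labels are made up for this example and are not part of the project; sklearn remains the tool actually used above.

```python
def confusion_matrix(labels, preds, num_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for y_true, y_pred in zip(labels, preds):
        matrix[y_true][y_pred] += 1
    return matrix


def precision_recall_f1(matrix, cls):
    """Per-class metrics read off the confusion matrix."""
    tp = matrix[cls][cls]
    predicted = sum(row[cls] for row in matrix)  # column sum: everything predicted as cls
    actual = sum(matrix[cls])                    # row sum: everything that truly is cls
    precision = tp / predicted if predicted else 0.0
    recall = tp / actual if actual else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Toy data (hypothetical): 3 classes, 6 validation samples
labels_all = [0, 0, 1, 1, 2, 2]
predict_all = [0, 1, 1, 1, 2, 0]

cm = confusion_matrix(labels_all, predict_all, num_classes=3)
for row in cm:
    print(row)
print(precision_recall_f1(cm, 1))
```

Reading the matrix row by row shows which true class gets mistaken for which prediction, which is usually more informative than the single accuracy figure logged during training.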

2. model code

# Import torch-related packages
import time
import torch
import torch.nn as nn

# Import the model definitions
import models

# Import utilities
from utils.eval import accuracy
from utils.misc import AverageMeter
import numpy as np

# Import the progress-bar library
from progress.bar import Bar

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

def train(train_loader, model, criterion, optimizer):
    '''
    Train the model for one epoch.
    :param train_loader: training data loader
    :param model: model to train
    :param criterion: loss function
    :param optimizer: optimizer
    :return: (average loss, average top-1 accuracy)
    '''
    # Running statistics
    data_time = AverageMeter()
    batch_time = AverageMeter()
    losses = AverageMeter()
    top1 = AverageMeter()
    end = time.time()

    #################
    # train the model
    #################
    model.train()

    # Iterate over the training batches
    ## Progress bar
    bar = Bar('Processing', max=len(train_loader))
    for batch_index, (inputs, targets) in enumerate(train_loader):
        data_time.update(time.time() - end)
        # move tensors to GPU if CUDA is available
        inputs, targets = inputs.to(device), targets.to(device)
        # clear the gradients before back-propagation
        optimizer.zero_grad()
        # forward pass
        outputs = model(inputs)
        # compute the loss
        loss = criterion(outputs, targets)
        # backward pass
        loss.backward()
        # perform a single optimization step (parameter update)
        optimizer.step()

        # compute accuracy and update the running statistics
        prec1, _ = accuracy(outputs.data, targets.data, topk=(1, 1))
        losses.update(loss.item(), inputs.size(0))
        top1.update(prec1.item(), inputs.size(0))
        batch_time.update(time.time() - end)
        end = time.time()

        # plot progress: pack the key metrics into the bar suffix
        bar.suffix = '({batch}/{size}) Data: {data:.3f}s | Batch: {bt:.3f}s | Total: {total:} | ETA: {eta:} | Loss: {loss:.4f} | top1: {top1: .4f}'.format(
            batch=batch_index + 1,
            size=len(train_loader),
            data=data_time.val,
            bt=batch_time.val,
            total=bar.elapsed_td,
            eta=bar.eta_td,
            loss=losses.avg,
            top1=top1.avg
        )
        bar.next()
    bar.finish()
    return (losses.avg, top1.avg)

def evaluate(val_loader, model, criterion, test=None):
    '''
    Evaluate the model.
    :param val_loader: validation data loader
    :param model: model to evaluate
    :param criterion: loss function
    :param test: if truthy, also return all predictions and labels
    :return: (loss, acc) or (loss, acc, predict_all, labels_all)
    '''
    batch_time = AverageMeter()
    data_time = AverageMeter()
    losses = AverageMeter()
    top1 = AverageMeter()
    predict_all = np.array([], dtype=int)
    labels_all = np.array([], dtype=int)

    #################
    # val the model
    #################
    model.eval()
    end = time.time()

    # Iterate over the validation batches
    ## Progress bar
    bar = Bar('Processing', max=len(val_loader))
    for batch_index, (inputs, targets) in enumerate(val_loader):
        data_time.update(time.time() - end)
        # move tensors to GPU if CUDA is available
        inputs, targets = inputs.to(device), targets.to(device)
        # forward pass and loss; no gradients are needed during evaluation
        with torch.no_grad():
            outputs = model(inputs)
            loss = criterion(outputs, targets)

        # compute accuracy and update the running statistics
        prec1, _ = accuracy(outputs.data, targets.data, topk=(1, 1))
        losses.update(loss.item(), inputs.size(0))
        top1.update(prec1.item(), inputs.size(0))
        batch_time.update(time.time() - end)
        end = time.time()

        # collect the data for the confusion matrix
        targets = targets.data.cpu().numpy()  # true labels
        predic = torch.max(outputs.data, 1)[1].cpu().numpy()  # predicted labels
        labels_all = np.append(labels_all, targets)
        predict_all = np.append(predict_all, predic)

        # plot progress: pack the key metrics into the bar suffix
        bar.suffix = '({batch}/{size}) Data: {data:.3f}s | Batch: {bt:.3f}s | Total: {total:} | ETA: {eta:} | Loss: {loss:.4f} | top1: {top1: .4f}'.format(
            batch=batch_index + 1,
            size=len(val_loader),
            data=data_time.val,
            bt=batch_time.val,
            total=bar.elapsed_td,
            eta=bar.eta_td,
            loss=losses.avg,
            top1=top1.avg
        )
        bar.next()
    bar.finish()

    if test:
        return (losses.avg, top1.avg, predict_all, labels_all)
    else:
        return (losses.avg, top1.avg)

def set_parameter_requires_grad(model, feature_extract):
    '''
    :param model: the model
    :param feature_extract: if True, freeze the feature-extraction layers
    :return: None
    '''
    if feature_extract:
        for param in model.parameters():
            # Frozen layers do not need gradient updates
            param.requires_grad = False

def initital_model(model_name, num_classes, feature_extract=True):
    """
    Initialize a model from the given pre-trained model.
    :param model_name: name of the model, e.g. resnext101_32x16d / resnext101_32x8d
    :param num_classes: number of image classes
    :param feature_extract: if True, freeze the feature-extraction layers and
        train only the fully connected classifier
    :return: the initialized model
    """
    model_ft = None
    if model_name == 'resnext101_32x16d':
        # Load the Facebook pre-trained ResNeXt-101 (1000 classes by default)
        model_ft = models.resnext101_32x16d_wsl()
        # Freeze the feature-extraction layers
        set_parameter_requires_grad(model_ft, feature_extract)
        # Number of input features of the original fc layer
        num_ftrs = model_ft.fc.in_features
        # Replace fc so that it outputs num_classes scores
        model_ft.fc = nn.Sequential(
            nn.Dropout(0.2),  # reduces overfitting
            nn.Linear(in_features=num_ftrs, out_features=num_classes)
        )
    elif model_name == 'resnext101_32x8d':
        # Load the Facebook pre-trained ResNeXt-101 (1000 classes by default)
        model_ft = models.resnext101_32x8d()
        # Freeze the feature-extraction layers
        set_parameter_requires_grad(model_ft, feature_extract)
        # Number of input features of the original fc layer
        num_ftrs = model_ft.fc.in_features
        # Replace fc so that it outputs num_classes scores
        model_ft.fc = nn.Sequential(
            nn.Dropout(0.2),  # reduces overfitting
            nn.Linear(in_features=num_ftrs, out_features=num_classes)
        )
    else:
        # Fail loudly instead of printing and calling exit()
        raise ValueError('Invalid model name: {}'.format(model_name))
    return model_ft

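def class_id2name():
    '''
    Build the label mapping from class id to class name.
    :return: dict mapping int id -> name
    '''
    clz_id2name = {}
    # Each line of the label file has the form "<id>:<name>"
    with open('data/garbage_label.txt', 'r', encoding='utf-8') as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            _id = line.split(":")[0]
            _name = line.split(":")[1]
            clz_id2name[int(_id)] = _name
    return clz_id2name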
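To make the label-file format concrete, the sketch below parses a few `id:name` lines from an in-memory string instead of `data/garbage_label.txt`, then builds the index-ordered `class_list` the way the main program does. The four class names are invented for illustration (the real file's contents are not shown in this post), and `parse_label_lines` is a hypothetical helper, not a function from the project.

```python
import io


def parse_label_lines(lines):
    """Turn lines of the form '<id>:<name>' into a dict {int id: name}."""
    id2name = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        _id, _name = line.split(":")[:2]
        id2name[int(_id)] = _name
    return id2name


# Hypothetical label file contents (assumption: 4 classes, ids 0..3)
sample = io.StringIO(
    "0:other waste\n"
    "1:kitchen waste\n"
    "2:recyclable\n"
    "3:hazardous waste\n"
)

id2name = parse_label_lines(sample)
class_list = [id2name[i] for i in range(len(id2name))]
print(class_list)
```

Note that this only lines up with `ImageFolder` if the class sub-directories are named so that their sorted order matches these ids, since `ImageFolder` assigns indices by sorted directory name.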

3. args code

# Import modules
import argparse  # command-line argument parsing

# Create the argument parser
parser = argparse.ArgumentParser(description='Pytorch garbage Training ')

# Define the arguments
# Model name
parser.add_argument('--model_name', default='resnext101_32x8d', type=str,
                    choices=['resnext101_32x8d', 'resnext101_32x16d'],
                    help='model_name selected in train')

# Learning rate
parser.add_argument('--lr', '--learning-rate', default=0.001, type=float,
                    metavar='LR', help='initial learning rate, e.g. 1e-2, 1e-4, 0.001')

# Evaluation mode: off by default; pass -e (no value) to enable it
parser.add_argument('-e', '--evaluate', dest='evaluate', action='store_true',
                    help='evaluate model on validation set')

# Checkpoint paths
parser.add_argument('--resume', default="", type=str, metavar='PATH', help='path to latest checkpoint')
parser.add_argument('-c', '--checkpoint', default="checkpoint", type=str, metavar='PATH',
                    help='path to save checkpoint')

# Number of training epochs
parser.add_argument('--epochs', default=2, type=int, metavar='N', help='number of total epochs to run')

# Number of image classes
parser.add_argument('--num_classes', default=4, type=int, metavar='N', help='number of classes')

# Epoch to start training from
parser.add_argument('--start_epoch', default=1, type=int, metavar='N', help='manual epoch number')

# Optimizer
parser.add_argument('--optimizer', default='adam', choices=['sgd', 'adam'], metavar='N',
                    help='optimizer(default adam)')

# Parse the arguments
args = parser.parse_args()

4. transform code

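import io
import torchvision.transforms as transforms
from PIL import Image

# Data preprocessing pipeline
preprocess = transforms.Compose([
    # 1. Resize: scale the image so that its shorter side is 256 pixels
    transforms.Resize(256),
    # 2. Crop: take a 224 x 224 crop from the center
    transforms.CenterCrop(224),
    # 3. Convert to a tensor and scale pixel values to [0, 1] (divide by 255)
    transforms.ToTensor(),
    # 4. Standardize: subtract the per-channel mean, then divide by the standard deviation
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])


def transform_image(img_bytes):
    """
    Raw image bytes -> preprocessed tensor with a batch dimension.
    :param img_bytes: encoded image bytes (e.g. the contents of a JPEG file)
    :return: tensor of shape (1, 3, 224, 224)
    """
    # Force 3 channels so grayscale or RGBA inputs do not break Normalize
    image = Image.open(io.BytesIO(img_bytes)).convert('RGB')
    image_tensor = preprocess(image)
    image_tensor = image_tensor.unsqueeze(0)
    return image_tensor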
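Steps 3 and 4 of the pipeline are simple per-channel arithmetic, which the following pure-Python sketch spells out for a single RGB pixel. It is only an illustration of the formula `(x / 255 - mean) / std` using the mean and std values from `preprocess`; `normalize_pixel` is a name made up for this example, and torchvision applies the same operation to whole tensors.

```python
# Per-channel statistics copied from the Normalize call in preprocess
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb):
    """Apply ToTensor-style scaling and Normalize-style standardization to one pixel."""
    scaled = [c / 255.0 for c in rgb]                       # step 3: [0, 255] -> [0, 1]
    return [(s - m) / d for s, m, d in zip(scaled, MEAN, STD)]  # step 4: (x - mean) / std


white = normalize_pixel((255, 255, 255))
black = normalize_pixel((0, 0, 0))
print([round(v, 3) for v in white])
print([round(v, 3) for v in black])
```

After standardization the values are no longer confined to [0, 1]: a pure white pixel ends up a bit above 2 in every channel and a pure black pixel a bit below -2 in the red channel, which is the input range the pre-trained ImageNet weights expect.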

5. misc code

'''
Helper functions for PyTorch:
AverageMeter, get_optimizer, save_checkpoint
'''
import torch
import os

__all__ = ['AverageMeter', 'get_optimizer', 'save_checkpoint']


def get_optimizer(model, args):
    # Build the optimizer selected by args.optimizer with learning rate args.lr
    if args.optimizer == 'sgd':
        return torch.optim.SGD(model.parameters(),
                               args.lr)
    elif args.optimizer == 'rmsprop':
        return torch.optim.RMSprop(model.parameters(),
                                   args.lr)
    elif args.optimizer == 'adam':
        return torch.optim.Adam(model.parameters(),
                                args.lr)
    else:
        raise NotImplementedError

class AverageMeter(object):
    """Computes and stores the average and current value
    Imported from https://github.com/pytorch/examples/blob/master/imagenet/main.py#L247-L262
    """

    def __init__(self):
        self.reset()

    def reset(self):
        self.val = 0
        self.avg = 0
        self.sum = 0
        self.count = 0

    def update(self, val, n=1):
        self.val = val
        self.sum += val * n
        self.count += n
        self.avg = self.sum / self.count

def save_checkpoint(state, is_best, checkpoint='checkpoint', filename='checkpoint.pth.tar'):
    # Create the checkpoint directory if it does not exist yet
    os.makedirs(checkpoint, exist_ok=True)

    # Save the full training state so training can be resumed
    filepath = os.path.join(checkpoint, filename)
    print('checkpoint filepath = ', filepath)
    torch.save(state, filepath)

    # Save the model weights whenever validation accuracy improves
    if is_best:
        model_name = 'garbage_resnext101_model_' + str(state['epoch']) + '_' + str(
            int(round(state['train_acc'] * 100, 0))) + '_' + str(
            int(round(state['test_acc'] * 100, 0))) + '.pth'
        print('Validation accuracy improved. Saving model ..,model_name = ', model_name)
        model_path = os.path.join(checkpoint, model_name)
        print('model_path = ', model_path)
        torch.save(state['state_dict'], model_path)
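The `AverageMeter` in this file keeps a sample-weighted mean, which matters because the last batch of an epoch is often smaller than `batch_size`. The sketch below repeats the class so that it runs on its own and compares the weighted average with a plain mean of the per-batch values; the loss values and batch sizes are invented for illustration.

```python
class AverageMeter:
    """Same logic as the AverageMeter above, repeated so this example is self-contained."""

    def __init__(self):
        self.reset()

    def reset(self):
        self.val = 0
        self.avg = 0
        self.sum = 0
        self.count = 0

    def update(self, val, n=1):
        self.val = val            # most recent value
        self.sum += val * n       # weight the value by the number of samples
        self.count += n
        self.avg = self.sum / self.count


losses = AverageMeter()
losses.update(0.5, n=10)  # full batch of 10 samples with mean loss 0.5
losses.update(1.0, n=5)   # smaller final batch of 5 samples with mean loss 1.0

naive_mean = (0.5 + 1.0) / 2
print('weighted avg:', losses.avg)
print('naive mean of batch values:', naive_mean)
```

The weighted average is (0.5 * 10 + 1.0 * 5) / 15, which is lower than the naive mean of 0.75 because the small batch contributes fewer samples. This is why `train` and `evaluate` pass `inputs.size(0)` as `n`.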

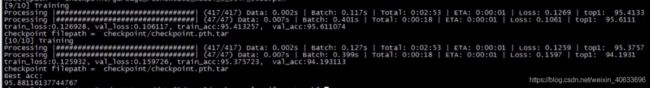

Model training results in a GPU environment: